Taking a look under the hood

After popping apart the cooler, we find that Nvidia took a “thermal pad all the things” approach, and this is understandable. Previous coolers used a vapor chamber on the GPU with a surrounding cooling plate for ancillary components, which let airflow reach less critical components and sometimes even included a separate cooler for the VRM and similar parts. The new, larger full-cover vapor chamber is a solid plate with no openings for air to pass through to other board components, so parts that may previously have been cooled by passive airflow now need thermal pads instead. The large rainbow-colored strip of wires with the connector is an all-in-one cable for LED and fan control. The backplate also has thermal pads, which turn the thin metal backplate into a functional heat spreader rather than a purely visual piece. It also carries a shiny black plastic sheet that helps prevent SMD components from shorting against the metal backplate.

Here you see the card undressed, and there is a ton of complexity to this board. The VRM is a 13+3 design. Ten of the GPU phases sit on the right-hand side of this photo, and the remaining 6 phases sit on the left: 3 are the rest of the GPU phases and 3 feed the GDDR6.

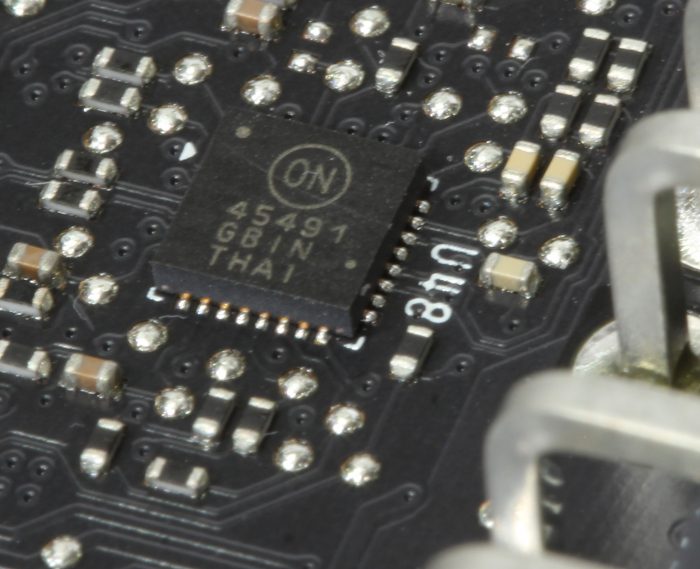

I found several ON Semiconductor NCP45491 chips. Each one can monitor bus current and voltage on 4 channels, and they can be paired up to monitor multiple different sources.
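Monitors in this class typically work by measuring the small voltage drop across a low-value shunt resistor in series with each rail, alongside the rail (bus) voltage itself. Current then follows from Ohm's law, and power is the product of the two. The sketch below illustrates that arithmetic only. The rail names, shunt value, and readings are hypothetical, and it does not model the NCP45491's actual register interface or the shunts used on this board.

```python
from dataclasses import dataclass


@dataclass
class ChannelReading:
    """One monitored rail. All values used below are hypothetical examples."""
    name: str
    bus_voltage_v: float         # voltage of the rail being monitored
    shunt_voltage_v: float       # drop measured across the series shunt resistor
    shunt_resistance_ohm: float  # assumed shunt value, not taken from this PCB

    @property
    def current_a(self) -> float:
        # Ohm's law across the shunt: I = V_shunt / R_shunt
        return self.shunt_voltage_v / self.shunt_resistance_ohm

    @property
    def power_w(self) -> float:
        # Power delivered on the rail: P = V_bus * I
        return self.bus_voltage_v * self.current_a


def total_power_w(channels: list[ChannelReading]) -> float:
    """Sum power across channels, e.g. from two monitors paired up."""
    return sum(ch.power_w for ch in channels)


# Hypothetical 12 V inputs, each with an assumed 5 milliohm shunt.
rails = [
    ChannelReading("8-pin #1", 12.0, 0.060, 0.005),
    ChannelReading("8-pin #2", 12.0, 0.050, 0.005),
    ChannelReading("PCIe slot", 12.0, 0.025, 0.005),
]

for ch in rails:
    print(f"{ch.name}: {ch.current_a:.1f} A, {ch.power_w:.1f} W")
print(f"Total: {total_power_w(rails):.1f} W")
```

Pairing monitors simply means more channels feed into the same sum, which is why `total_power_w` takes an arbitrary list.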

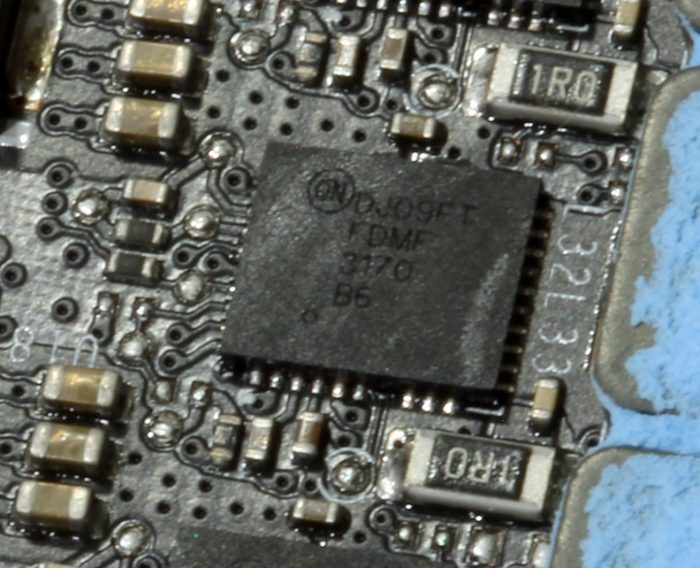

Here we see one of the power stages used to feed VGPU and VDDR. They are Fairchild Semiconductor FDMF3170 parts, rated at 70A per unit. With 13 of these stages on the GPU, this VRM can supply a ridiculous amount of current. Spreading the load across so many stages also means it can do so quite efficiently. Nvidia did mention that the VRM can actively switch off phases as needed, which reduces heat and power consumption in low-load scenarios. Given the observed power needs of the TU102 GPU, you could realistically run well on half of this VRM. If you decided to put an FE card under LN2, this power supply should be able to handle some extreme loads.
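The headroom claim comes down to simple multiplication: 13 stages at 70A each gives a theoretical 910A on the GPU rail before any derating. The sketch below shows that arithmetic alongside a toy phase-shedding rule. Nvidia has not described how its controller decides when to drop phases, so the `phases_needed` function and its 25A-per-phase target are hypothetical. They are chosen only to illustrate the idea of running fewer phases at light load.

```python
import math

STAGE_RATING_A = 70  # per-stage rating quoted for the FDMF3170
GPU_STAGES = 13      # GPU power stages counted on this board


def aggregate_capacity_a(stages: int, rating_a: float = STAGE_RATING_A) -> float:
    """Theoretical combined current if every stage ran at its rating.

    Ignores thermal limits, derating, and controller current limits.
    """
    return stages * rating_a


def phases_needed(load_a: float, target_per_phase_a: float,
                  max_phases: int = GPU_STAGES) -> int:
    """Hypothetical phase-shedding rule: enable just enough phases to keep
    each one at or below a target current, never fewer than one phase."""
    if load_a <= 0:
        return 1
    return min(max_phases, max(1, math.ceil(load_a / target_per_phase_a)))


print(aggregate_capacity_a(GPU_STAGES))       # all 13 stages
print(aggregate_capacity_a(GPU_STAGES // 2))  # roughly "half of this VRM"
for load in (20, 150, 300, 1000):
    print(load, "A ->", phases_needed(load, target_per_phase_a=25), "phases")
```

Real controllers also add hysteresis so phases do not toggle rapidly around a threshold. This toy rule leaves that out for clarity.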

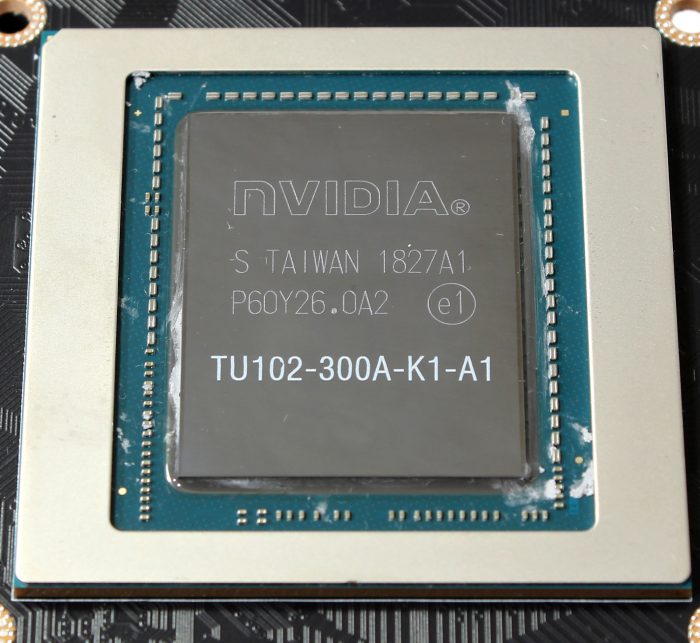

Here is where the magic happens: the Turing TU102 die. This is what makes it all happen, and inside this sizable die are the new SMs, RT cores, and Turing Tensor cores. According to Nvidia, this is the second-largest die ever made, behind only the GV100.

Here you see the Micron GDDR6 modules. Each is a 1GB IC rated at 7000MHz (14000MHz effective data rate). Because the card carries 11GB of memory, there are 11 of them on the board, with one empty pad. This does raise the question of whether we will see a 12GB card, such as a new TITAN based on this same PCB, to replace the TITAN Xp.
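The capacity and bandwidth figures follow from a little arithmetic. Each GDDR6 device presents a 32-bit interface, so the chip count sets both the memory size and the bus width. Peak bandwidth is the per-pin data rate times the bus width, divided by 8 bits per byte. The sketch below works this out for the 11 populated chips and for a hypothetical 12-chip card. It assumes the empty pad would be filled with the same 1GB, 14Gbps part.

```python
CHIP_CAPACITY_GB = 1       # 1GB per IC, as on this board
CHIP_BUS_WIDTH_BITS = 32   # each GDDR6 device has a 32-bit interface
DATA_RATE_GBPS = 14        # 7000MHz -> 14000MHz effective, i.e. 14 Gbps per pin


def memory_config(chips: int) -> tuple[int, int, float]:
    """Return (capacity in GB, bus width in bits, peak bandwidth in GB/s)."""
    capacity_gb = chips * CHIP_CAPACITY_GB
    bus_width_bits = chips * CHIP_BUS_WIDTH_BITS
    # Peak bandwidth = per-pin rate (Gbit/s) * number of pins / 8 bits per byte
    bandwidth_gb_s = DATA_RATE_GBPS * bus_width_bits / 8
    return capacity_gb, bus_width_bits, bandwidth_gb_s


for chips in (11, 12):  # 11 populated pads; 12 if the empty pad were used
    cap, width, bw = memory_config(chips)
    print(f"{chips} chips: {cap}GB, {width}-bit bus, {bw:.0f} GB/s")
```

For the 11-chip configuration this gives a 352-bit bus and 616 GB/s. Filling the twelfth pad would give a 384-bit bus and 672 GB/s under the same assumptions.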

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996

Two years later, a new-generation card should deliver around the performance the 2080 Ti does. However, its price is also expected to stay equal to or below that of the card it replaces. This is where the 2080 Ti fails big time, and it’s sad to see reviewers ignore that fact. All serious reviewers should ignore Nvidia marketing and compare the cards to similarly priced previous-gen cards. So 1080 to 2060, 1080 to 2070, and 1080 Ti to 2080.

I agree: if this were a direct comparison, rasterization to rasterization, that would be 100% true and this review would be much different. However, as in life, things are more complicated. It has nothing to do with Nvidia’s marketing and more to do with taking a long view of where the industry is headed. There is a lot at work here that will come around as this all matures and the new features really get explored. Thank you for the comment and for giving the review a read, as I always welcome constructive feedback. That being said, I would definitely revisit not just my review but others' as well once the new features arrive. They change the way in-game scenes can be rendered and open the door to next-level performance differences, along with far more immersion as real-time ray tracing capabilities start to show up.

I do agree that the new tech will change how rendering is done in a GPU generation or two, as it is the right way forward. But the 2080 and 2080 Ti cards simply do not currently deliver value for anyone but developers, who need to work with this for 1-3 years before it really hits the market.

A card should be reviewed from a ‘consumer’ perspective, the consumer here being the gamer, not a developer. Any existing consumer with a 1080 Ti looking to upgrade would consider spending around the same amount on a new card, so his upgrade here is the 2080. The 2080 does not currently provide the usual generational performance increase and is therefore close to worthless as an upgrade. If the consumer were willing to spend $1200, his existing card would most likely be a Titan X.

So from this perspective, the 2080 upgrade is Turing and its RT cores. Those are not supported in DX12 yet (with luck, support comes in October), and furthermore there are no games currently available that use them.

So all in all, you are buying something that might work. However, most indications point to RTX forcing you to go from 4K to 1080p on a 2080 Ti, and the 2080 will probably not do well at all. This leaves DLSS as the only real gain.

Now, if NVIDIA had launched this without the marketing bullshit of trying to sell a Titan as a Ti, I would have no issues. People would know what they are buying, and all reviews would be done correctly, comparing apples to apples instead of apples to oranges.

So the conclusion for now is that the 2080 probably only provides DLSS as an improvement over a Ti, at a higher price. The RTX cores most likely won't be able to render at a usable resolution for people doing 4K or 144Hz gaming.

Furthermore, I would expect the 2080 FE (a factory-overclocked card) to be compared to an equally overclocked 1080 Ti.

Any conclusion that skips these obvious issues is hard to take seriously.

Very well said. Reviewers are missing this very important point: Nvidia is ripping us off with these outrageous prices.

This is not true. As in any market, the tech will go for what the market will bear. If you are not happy with the prices, you are under no obligation to make a purchase, or you can wait to buy until a model that meets your expectations shows up. I have no skin in this game as far as what Nvidia sells its products for. Of course, as a consumer I would love to see it for 500 or even 99 bucks, but given the amount of research and time that goes into these products, that is simply not feasible.

The same goes for any tech product. Try telling Tesla their cars are overpriced. Many feel that way and choose not to buy them. But knowing what I know about them, just as I know about this tech, I would buy one today if I could.

If this were true, I would definitely agree. But we have already seen games that are in the process of adding these features, many of them games that already exist, and I am sure more will be added to this list soon. Again, I would agree if this were some far-off tech, but it’s simply not. From what I am seeing, this is tech we will see implemented within the next few quarters.