Nvidia GeForce GTX 780 Ti

Nvidia launched the 700 series and really took the market by storm, offering exceptional power savings, strong performance per watt and cool-running cards, which together make for a great gaming package. However, nothing stays safe for very long, and AMD answered by showing off its new R series of cards. Unfortunately for AMD, almost all of the models in the R series were existing chips under a new name. The exception was the flagship product: the R9-290X.

The R9-290X takes the TITAN head on, and its price point is a real killer for the gaming market, but this kind of value does not come without compromises. Those compromises come in the form of 92-95C load temperatures and fan noise that can be akin to a vacuum cleaner inside your chassis. Even so, the card caught a lot of performance enthusiasts' attention, since some of these issues can be relieved by fitting liquid cooling or simply by living with the heat in quiet mode.

Today Nvidia releases its answer to the 290X, and really the answer to what gamers have been seeking since the Kepler-based TITAN launched almost a full year ago. With TITAN, Nvidia finally took a GPU from its enterprise lineup and brought an insanely capable chip to the consumer gaming lineup. Now Nvidia has upped the ante by putting a full GK110 GPU on a gaming card that still sits well below the $1,000 price point where TITAN presently resides.

The GK110 Kepler Architecture

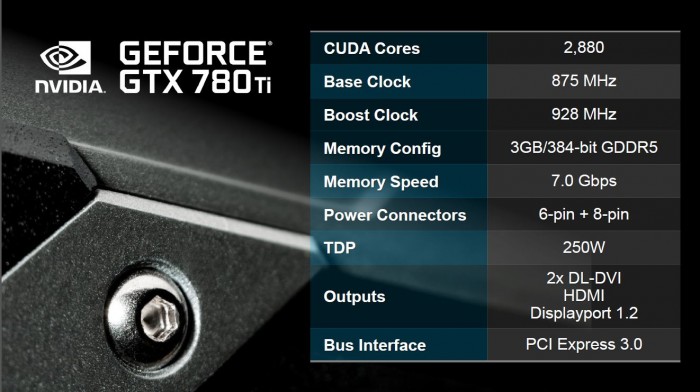

First, when we look at the 780 Ti, you need to know that this is a full-fledged GK110: a full 2880 CUDA cores, which puts it even higher spec'd than the beloved TITAN. Do understand, however, that double-precision compute performance has been "sacrificed" here, which in my opinion is really the only way to keep the TITAN relevant. If the 780 Ti also offered full compute performance, there would be little reason for the TITAN to continue. Keep in mind, though, that the TITAN has some special design features, such as its 6GB framebuffer, which can be put to use in GPU computation and can still make it an excellent cost saver for production usage or GPU compute work when compared to the $3K+ price point of Nvidia's comparable professional compute cards.

The GTX 780 Ti shows some beastly specs, including a 384-bit memory bus backed by 3GB of 7Gbps memory. That means a ton of memory bandwidth: should you find a way to push the GPU to its computational or graphical limits, the memory is ready to keep data streaming to the GPU.
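To put a rough number on that bandwidth, here is a minimal Python sketch of the usual peak-bandwidth arithmetic: bus width in bytes multiplied by the effective data rate. It assumes the memory clocks in the spec table below are effective transfer rates (MT/s), and the result is a theoretical peak, not a measured figure.

```python
# Peak memory bandwidth = (bus width in bytes) x (effective data rate).
# Assumption: the table's "MHz" memory clocks are effective rates (MT/s).

def peak_bandwidth_gbps(bus_width_bits: int, effective_mts: float) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_mts / 1000  # MB/s -> GB/s

# Bus width (bits) and effective memory clock (MT/s) from the spec table
cards = {
    "GTX 780 Ti": (384, 7000),
    "GTX 780": (384, 6008),
    "GTX 770": (256, 7010),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbps(bus, rate):.1f} GB/s")
```

For the 780 Ti this works out to 336 GB/s, versus roughly 288 GB/s for the GTX 780 on the same 384-bit bus.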

Note that the GTX 780 Ti carries the same 250W TDP as its brothers, so this card, while super powerful, should also keep power usage in check.

Here you can see some of the specs of the card compared to what is offered presently from the Kepler lineup.

| | GTX 690 | GTX TITAN | GTX 780 Ti | GTX 780 | GTX 770 | GTX 760 |
|---|---|---|---|---|---|---|
| Stream Processors | 1536 x 2 | 2688 | 2880 | 2304 | 1536 | 1152 |
| Texture Units | 128 x 2 | 224 | 240 | 192 | 128 | 96 |
| ROPs | 32 x 2 | 48 | 48 | 48 | 32 | 32 |
| Base Core Clock | 915MHz | 837MHz | 875MHz | 863MHz | 1046MHz | 980MHz |
| Boost Clock | 1019MHz | 876MHz | 928MHz | 900MHz | 1085MHz | 1033MHz |
| Memory Clock (effective) | 6008MHz | 6008MHz | 7000MHz | 6008MHz | 7010MHz | 6008MHz |
| Memory Interface | 256-bit x 2 | 384-bit | 384-bit | 384-bit | 256-bit | 256-bit |
| Memory Qty | 2GB x 2 | 6GB | 3GB | 3GB | 2GB or 4GB | 2GB or 4GB |
| TDP | 300W | 250W | 250W | 250W | 230W | 170W |
| Transistors | 3.5B x 2 | 7.1B | 7.1B | 7.1B | 3.54B | 3.54B |
| Manufacturing process | 28nm | 28nm | 28nm | 28nm | 28nm | 28nm |
| Price | $999 | $999 | $699 | $649 | $399 | $249 |

Upon review of the specs, you can see this card is a monster. From what I can tell, Nvidia pulled out all the stops to ensure this card is not just awesome but reaches iconic status as one of the most powerful single-GPU cards ever to grace a gaming PC.

Cooler

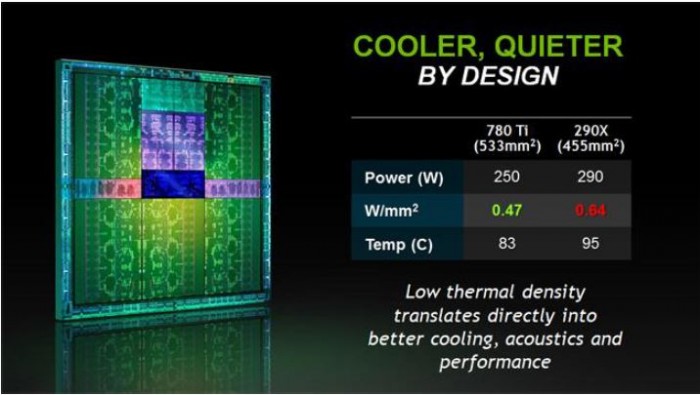

Nvidia came prepared to support its claims of dominance over the 290X, with one of the main points hitting a real sore spot for AMD: heat. Nvidia frames this as watts per square millimeter of die area. The 780 Ti GPU dissipates 0.47W per square mm, whereas the 290X theoretically dissipates 0.64W per square mm, which means more heat has to be pulled out of every part of the GPU die. Users therefore need a lot more dissipation capability from the cooler to keep the card at nice temperatures. I think this is why we have seen such high-powered cards from the Nvidia camp: Nvidia takes this kind of engineering into account when designing the product, so that gamers do not have to deal with huge thermal loads.
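Power density is simply power divided by die area. Plugging in the figures Nvidia quoted (taken as given, not independently measured here) gives the ratio between the two chips:

```latex
\rho = \frac{P}{A}, \qquad
\frac{\rho_{\text{290X}}}{\rho_{\text{780 Ti}}}
  = \frac{0.64\ \text{W/mm}^2}{0.47\ \text{W/mm}^2} \approx 1.36
```

In other words, by Nvidia's numbers the 290X's cooler has to pull roughly 36% more heat out of each square millimeter of silicon.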

Bigger, Better and Ready to PWN

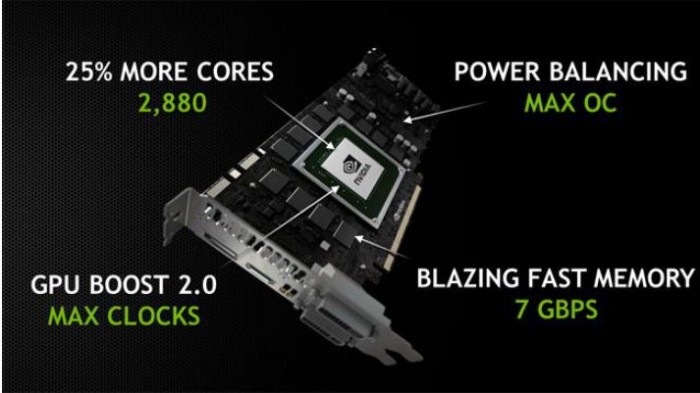

Here you can see the card's key features in one picture, which gives a good idea of exactly what this card is packing.

25% More Cores – Up to 2880 CUDA cores for maximum GPU power and serious gaming performance on tap, even in the most demanding gaming situations.

Power Balancing – The Nvidia team has spent some time tuning the card so it can balance where it pulls power from, giving better and smoother power delivery, which in turn should yield very stable overclocking and better performance from the 780 Ti.

7Gbps Memory – This is similar to what we saw on the GTX 770, with super-fast memory chips rated at 7GHz. If it behaves anything like the 770, we expect it to reach the high 7000MHz range, if not the 8000MHz range. On top of this, the memory runs through a 384-bit bus, which gives the 780 Ti massive memory bandwidth.
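The "25% more cores" figure is relative to the GTX 780. A quick Python check against the core counts in the spec table shows how the full GK110 compares with both the 780 and the TITAN:

```python
# CUDA core counts from the spec table
cores = {"GTX 780 Ti": 2880, "GTX TITAN": 2688, "GTX 780": 2304}

def percent_more(a: int, b: int) -> float:
    """How many percent more cores card a has than card b."""
    return (a / b - 1) * 100

print(f"vs GTX 780:   {percent_more(cores['GTX 780 Ti'], cores['GTX 780']):.1f}%")
print(f"vs GTX TITAN: {percent_more(cores['GTX 780 Ti'], cores['GTX TITAN']):.1f}%")
```

Against the TITAN the gap is closer to 7%, which fits the 780 Ti being a fully enabled GK110 rather than a larger chip.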

Existing and improved Kepler features

GPU Boost 2.0 – Thermals

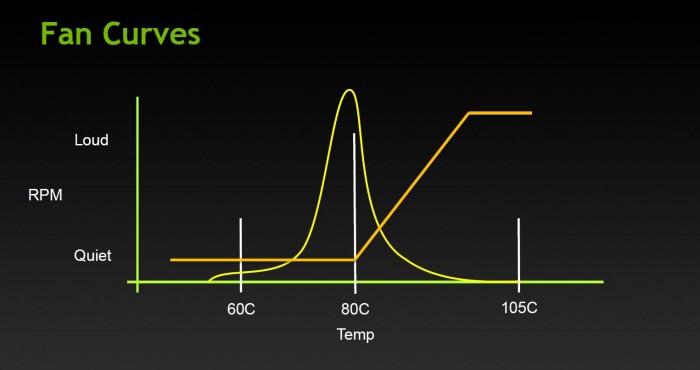

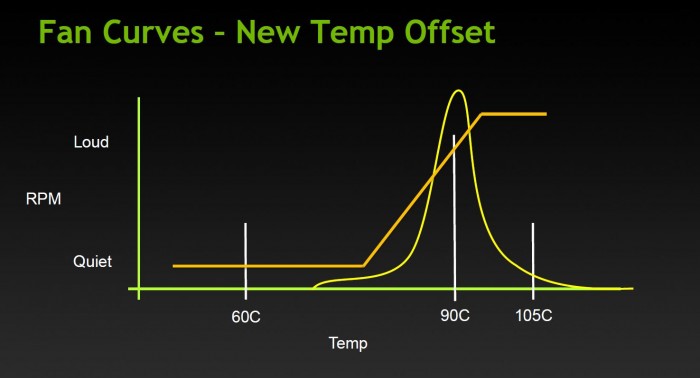

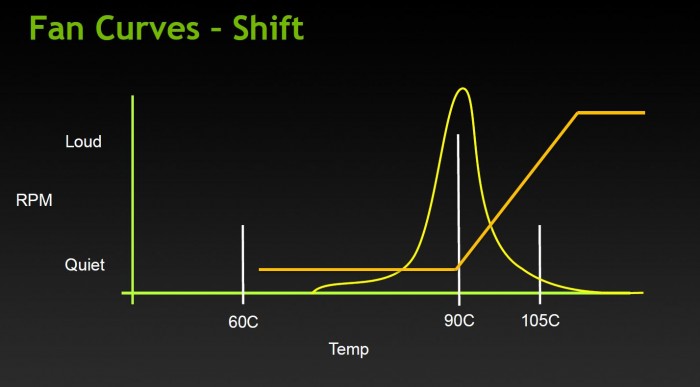

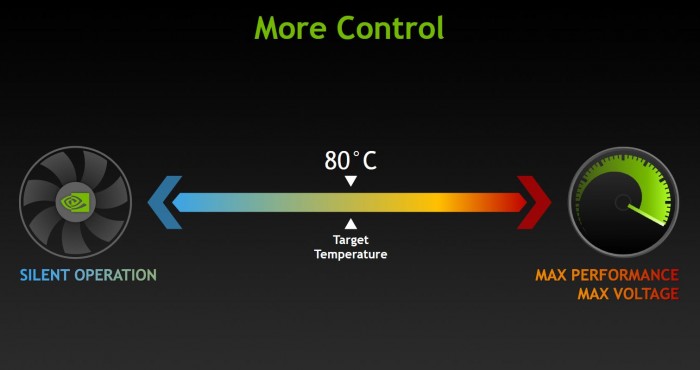

Just as we saw on the TITAN and then the 780, the 700 series cards carry GPU Boost 2.0, which offers even more flexibility in overclocking and more control over how your card runs. If you want the card to run no hotter than a certain temperature, you simply set that as the thermal target in the EVGA Precision utility and the card does the rest. If you can live with some more heat, however, there is potential for more performance by simply raising the frequency and thermal target a little.

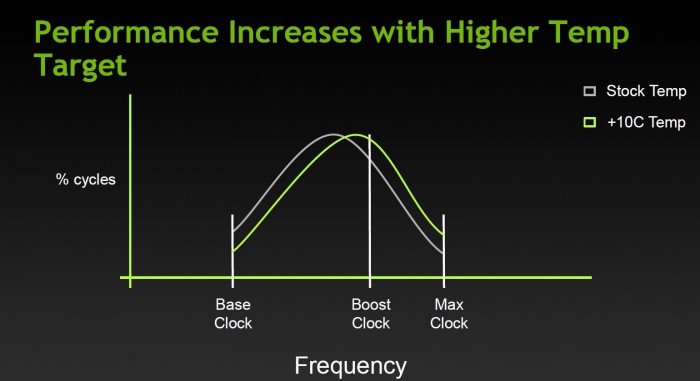

GPU boosting is controlled largely by GPU temperature: by default, the GPU will raise voltage and clocks until the thermal target of 80C is reached. What is nice is that the user can control the thermal target, so if you are fine with your card loading up to 90C, you can raise the target to allow for even more overclocking and voltage headroom.
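The idea of boosting until a thermal target is reached can be illustrated with a toy controller. This Python sketch is a hypothetical model, not Nvidia's actual GPU Boost 2.0 algorithm: the linear temperature model, the 13 MHz step size and the 1100 MHz ceiling are all assumptions chosen for illustration; only the 875 MHz base clock comes from the spec table.

```python
# Toy model of a temperature-targeted boost controller.
# NOT Nvidia's GPU Boost 2.0 algorithm; constants below are hypothetical
# except BASE_MHZ, which is the 780 Ti base clock from the spec table.

BASE_MHZ = 875   # GTX 780 Ti base clock
STEP_MHZ = 13    # hypothetical size of one boost step
MAX_MHZ = 1100   # hypothetical clock ceiling for this sketch

def steady_temp(clock_mhz: float, ambient_c: float = 25.0) -> float:
    """Hypothetical linear thermal model: higher clocks run hotter."""
    return ambient_c + 0.06 * clock_mhz

def settle_clock(thermal_target_c: float) -> int:
    """Step the clock up while the next step stays at or under the target.
    The toy never drops below the base clock."""
    clock = BASE_MHZ
    while (clock + STEP_MHZ <= MAX_MHZ
           and steady_temp(clock + STEP_MHZ) <= thermal_target_c):
        clock += STEP_MHZ
    return clock

for target in (80, 90):
    print(f"target {target}C -> {settle_clock(target)} MHz")
```

With these made-up constants, raising the target from 80C to 90C lets the toy settle at 1083 MHz instead of 914 MHz, mirroring the trade-off described above: accept more heat, get more clock.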

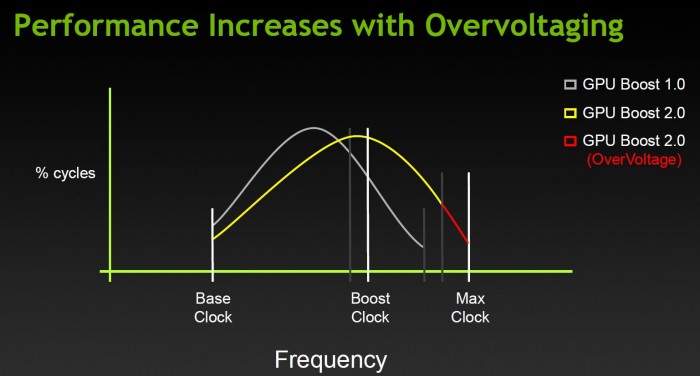

GPU Boost 2.0 – Voltage

Nvidia has employed GPU boost for some time and in simple terms it allows the GPU to overclock itself in situations where there is extra thermal and voltage headroom to spare. This was quite a good feature, but clocks could in some cases be rather erratic and varied greatly depending upon operating environment. Nvidia introduced the newest iteration of the GPU boost feature in TITAN, including a new way to control your card. In the new version, boost clock and voltage levels are directly tied to GPU temps, and therefore voltages can be pushed higher than before. Previously, some GTX 680 models did not have a lot of voltage control options. Nvidia corrected this oversight by opening up the GPU Boost 2.0 to enable higher overvoltage options. As you can see, you have the standard boost clock which can already go higher with the sliding scale of the thermal targets, and then you factor in the higher level over-voltage, leading to an amazing amount of headroom.

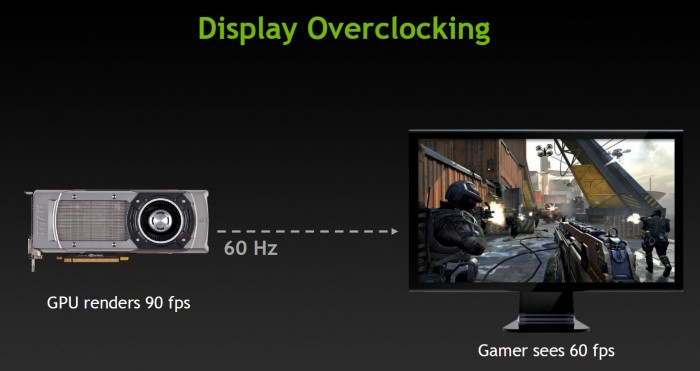

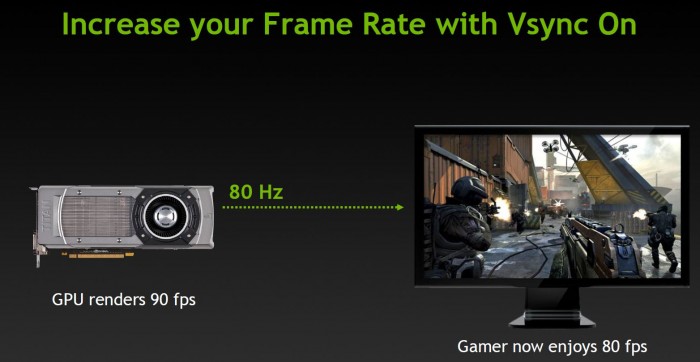

Nvidia Display Overclocking

Another cool feature from Nvidia is the ability to overclock the display, which means you can push your display to higher refresh rates for even smoother performance from a display that normally may only run at 60Hz. One word of caution: not all displays will support the overclock, and there will likely be a limit as to how far they can be pushed, so it will take a bit of trial and error to find where your display is happy.
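The benefit of a higher refresh rate is easiest to see as time per refresh. This small Python sketch converts refresh rates to frame times; the 75Hz and 80Hz values are examples only, since how far any given display can be pushed has to be found by trial and error.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time each refresh stays on screen, in milliseconds."""
    return 1000.0 / refresh_hz

# 60Hz is the typical stock rate; 75 and 80Hz are example overclocks
for hz in (60, 75, 80):
    print(f"{hz} Hz -> {frame_time_ms(hz):.2f} ms per refresh")
```

Going from 60Hz to 80Hz cuts the time between refreshes from about 16.7 ms to 12.5 ms.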

Video Encoding

Kepler features a dedicated H.264 video encoder called NVENC. Fermi's video encoding was handled by the GPU's array of CUDA cores. By having dedicated H.264 encoding circuitry, Kepler is able to reduce power consumption compared to Fermi. This is an important step for Nvidia, as Intel's Quick Sync has proven to be quite efficient at video encoding and AMD's Radeon HD 7000 cards also feature a new Video Codec Engine.

Nvidia lets software makers decide whether to implement support for the new NVENC engine. They can even choose to encode using NVENC and CUDA in parallel, which is very similar to what AMD has done with the Video Codec Engine's Hybrid mode. By combining the dedicated engine with the GPU's CUDA cores, encoding should be much faster than with CUDA alone, and possibly even faster than Quick Sync.

- Can encode full HD resolution (1080p) video up to 8x faster than real-time

- Support for H.264 Base, Main, and High Profile Level 4.1 (Blu-ray standard)

- Supports MVC (Multiview Video Coding) for stereoscopic video

- Up to 4096×4096 encoding
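The "8x faster than real-time" figure translates directly into wall-clock time. A minimal Python sketch, treating 8x as a best-case upper bound since the spec says "up to":

```python
def encode_minutes(clip_minutes: float, speedup: float = 8.0) -> float:
    """Best-case wall-clock encode time for a clip at a multiple of real time."""
    return clip_minutes / speedup

print(encode_minutes(60))  # 60-minute 1080p clip at the quoted 8x
```

A 60-minute 1080p recording would therefore take at least 7.5 minutes to encode under this assumption.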

According to NVIDIA, besides transcoding, NVENC will also be used in video editing, wireless display, and videoconferencing applications. NVIDIA has been working with software makers to provide support for NVENC. At launch, CyberLink MediaEspresso will support NVENC, and CyberLink PowerDirector and ArcSoft MediaConverter will add support later.

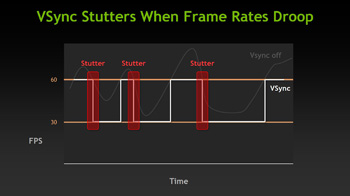

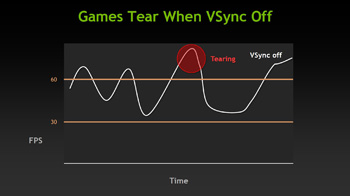

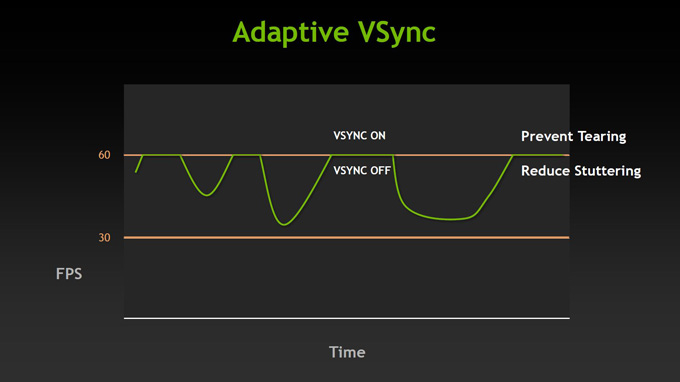

Adaptive VSync


Improved Software Experience

Nvidia supplied us with some really cool software and also showed us some cool stuff that we can now show off to all of you!

GeForce Experience

Here you can see the GeForce Experience program, which is very cool free software for GeForce users. Once installed, it scans your system hardware and all installed games, then optimizes your game settings for the best experience.

This may not seem like much, but think of it this way: When you go in and just crank up the settings, are you really running the game the best that your system can? Most likely not. The GeForce Experience program is a better alternative to guessing which settings will provide a balance of performance and eye-candy. This automatically sets up your system to run well, so you don’t have to.

Note that GeForce Experience has been in beta for some time, but it is now moving to a full release.

GeForce Experience – Shadowplay

Ever since Shadowplay was first previewed, media and community alike have been waiting for it to drop so we could all get some play time and see how this new app benefits gamers.

With the most recent GeForce Experience updates, the Shadowplay feature is now available. It can continuously keep the last 20 minutes of your gameplay, or record continuously for as long as you like, whether you want to capture the game-winning kill or a full match. It really is whatever you want it to be.
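Keeping "the last 20 minutes" is essentially a rolling (ring) buffer: new footage is appended and the oldest footage falls off the end. The Python sketch below shows that idea with `collections.deque(maxlen=...)`; it illustrates the concept only and is not how Shadowplay is actually implemented. The `RollingRecorder` class and the 10-second segment length are hypothetical.

```python
from collections import deque

SEGMENT_SECONDS = 10   # hypothetical length of each encoded chunk
WINDOW_MINUTES = 20    # rolling window quoted in the article

class RollingRecorder:
    """Hypothetical recorder that keeps only the most recent window of video."""

    def __init__(self, window_minutes: int = WINDOW_MINUTES,
                 segment_seconds: int = SEGMENT_SECONDS):
        max_segments = window_minutes * 60 // segment_seconds
        # A deque with maxlen discards the oldest item once it is full
        self.segments = deque(maxlen=max_segments)

    def add_segment(self, segment: bytes) -> None:
        self.segments.append(segment)

    def save_clip(self) -> list:
        """Snapshot the buffered segments, e.g. right after a big play."""
        return list(self.segments)

rec = RollingRecorder()
for i in range(200):  # 200 segments = 2000 s of play, more than 20 minutes
    rec.add_segment(f"segment-{i}".encode())
clip = rec.save_clip()
print(len(clip), clip[0], clip[-1])
```

Because old segments are dropped automatically, storage stays bounded no matter how long the session runs.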

The great part about this for me, as a long-time FRAPS user, is file size: anyone who has used FRAPS on an SSD knows that in longer recording sessions drive space disappears very quickly as the files grow huge. Shadowplay uses the dedicated hardware encoder (NVENC) built into Kepler-based GPUs to encode in real time on the GPU, which means the files you save with Shadowplay are smaller, so you can record longer without worrying about drive space.
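Recording size scales with bitrate multiplied by duration, which is why efficient encoding matters for drive space. In this Python sketch the 50 Mbps bitrate is an illustrative assumption, not a measured Shadowplay or FRAPS setting.

```python
def recording_size_gb(bitrate_mbps: float, minutes: float) -> float:
    """Approximate size in GB (decimal) of a constant-bitrate recording."""
    megabits = bitrate_mbps * minutes * 60
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes

# 50 Mbps is an illustrative bitrate, not a measured Shadowplay setting
print(f"{recording_size_gb(50, 20):.1f} GB for a 20-minute buffer")
```

Halving the bitrate halves the file, so a capture method that produces a much higher bitrate fills a drive proportionally faster.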

Nvidia has seen Shadowplay usage go completely insane since its beta introduction, with videos made using Shadowplay all over YouTube (over 20,000 videos and 200,000 users on YouTube alone) and popular social media communities. From what we can see, Shadowplay adoption is rapidly taking off, and the quality of the videos makes it obvious that it works very well.

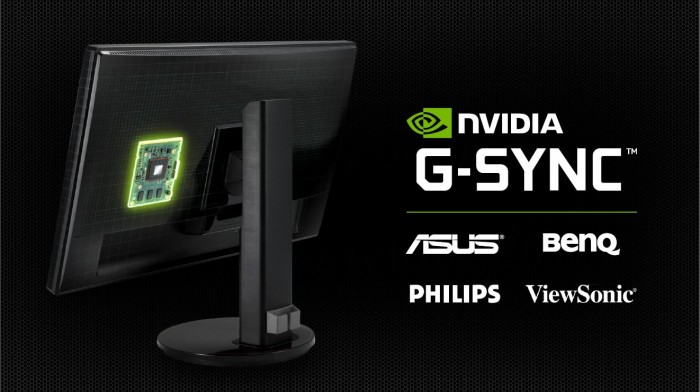

G-SYNC – Designed to ensure the best and smoothest gaming performance!

G-Sync is a new technology that was demoed during a recent media visit to Canada, and it honestly is very interesting.

The first thing to understand is that, as you can see in the image above, this is a hardware-based solution. A single MXM-style card integrated into the display synchronizes the data coming from the GPU with what is displayed on the screen. In essence, this removes some of the tearing and other issues you can see with standard solutions or V-Sync, where in some scenarios you can see tearing or even choppiness as the sync between GPU and display is lost.
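The difference between fixed-refresh V-Sync and a display that refreshes when the GPU is ready can be sketched with a simplified timing model. This Python example ignores scanout time and buffering, assumes a 60Hz fixed-refresh panel, and uses a hypothetical 144Hz upper limit for the variable-refresh panel; the frame completion times are invented for illustration, and it does not describe how the G-Sync module works internally.

```python
import math

REFRESH_HZ = 60
PERIOD_MS = 1000 / REFRESH_HZ

def vsync_display_time(ready_ms: float) -> float:
    """Fixed refresh with V-Sync: a finished frame waits for the next tick."""
    return math.ceil(ready_ms / PERIOD_MS) * PERIOD_MS

def variable_refresh_display_time(ready_ms: float, last_refresh_ms: float,
                                  min_interval_ms: float = 1000 / 144) -> float:
    """Variable refresh: show the frame as soon as it is ready, but no sooner
    than the panel's (hypothetical) fastest refresh interval allows."""
    return max(ready_ms, last_refresh_ms + min_interval_ms)

# Hypothetical frame completion times (ms) from a GPU at roughly 45-50 fps
ready_times = [21.0, 43.0, 64.0, 86.0]
last = 0.0
for t in ready_times:
    v = vsync_display_time(t)
    g = variable_refresh_display_time(t, last)
    last = g
    print(f"ready {t:5.1f} ms | V-Sync at {v:5.1f} ms | variable at {g:5.1f} ms")
```

Under V-Sync these frames appear 16.7, 16.7 and then 33.3 ms apart, an uneven cadence that is perceived as stutter, while the variable-refresh panel shows each frame as soon as it is ready.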

The G-Sync hardware in the display is required for this technology, and I figure it will add some cost to the display, but IF it works as intended this could literally be a game changer (pun intended).

I am hoping to get hands-on with a G-Sync monitor soon, to see exactly how it works compared to a standard display and whether it makes a difference we can see.

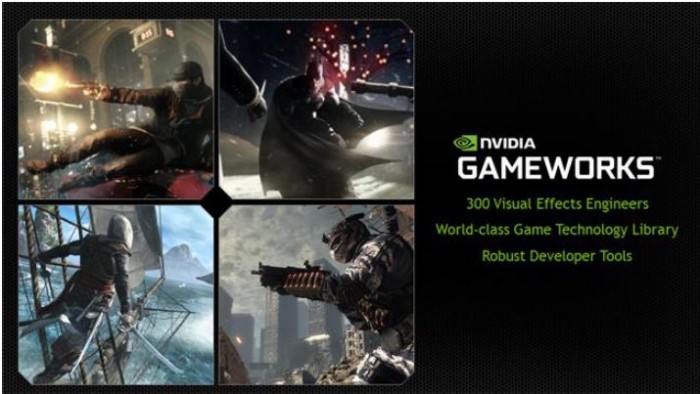

Nvidia Gameworks

As a gaming company Nvidia has made some great decisions, but in my opinion this has to be one of the best. Nvidia employs a ton of engineers and sends them to game studios around the world so that, as games are developed, they are optimized for maximum compatibility and excellent performance on Nvidia GPUs.

This is also why game-ready drivers that support and enhance performance are available as soon as a new game launches.

This kind of forward thinking and even expense by Nvidia is the kind of thing that shows that their target truly is to offer the best possible experience when you get an Nvidia based card.

Review Overview

Performance - 95%

Value - 90%

Quality - 95%

Features - 95%

Innovation - 90%

93%

The 780 Ti has proven to be an obscenely powerful performer with ability to spare, all while staying cool and quiet.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996

