The AMD HD6990 is AMD’s latest dual-GPU card. This beast is big, power-hungry, and quite expensive. It is also very, very fast. Read our review to find out how fast.

INTRODUCTION

For over a year, AMD has had the fastest dual-GPU graphics card in its arsenal: the HD5970. This dual-GPU beast is as yet unbeaten, and while Nvidia’s GTX580 is the fastest single-GPU card on the market, it has not toppled the HD5970 for the performance crown. Ever since AMD announced the HD6xxx-series, we have all been awaiting the successor to the HD5970, and now we’re presenting the AMD HD6990 in all its impressive glory.

We first heard about the HD6990, codename Antilles, back in October 2010 when the HD6850 and HD6870 were released. One of the slides mentioned that Antilles would be released sometime in Q4 2010. However, production seemed to stall through fall 2010, and not much was heard about the card; when we attended CES in January 2011, one of the vendors even told us that they did not know what AMD was doing and that they were considering building their own dual-GPU card. That is now unnecessary, as AMD has finally unveiled this dual-GPU beast. The HD6990 is big, power-hungry, expensive and fast. Join us as we put it through a variety of benchmarks, trying to find a game that can tax this card.

SPECIFICATIONS

Let’s compare the HD6990 with the previous cards in the HD6xxx-series.

The HD6990 uses the same VLIW4 core architecture as the HD6970 and the HD6950. This is an improved design that allows better utilization than the previous VLIW5 design. Some of the advantages over the previous design are a 10% improvement in performance per square millimeter, simplified scheduling and register management, and extensive logic re-use.

| | HD6990 | HD6990 OC | HD6970 | HD6950 |
|---|---|---|---|---|
| Process | 40 nm | 40 nm | 40 nm | 40 nm |
| Engine clock | 830 MHz | 880 MHz | 880 MHz | 800 MHz |
| Stream Processors | 3072 | 3072 | 1536 | 1408 |
| Compute Performance | 5.1 TFLOPs | 5.4 TFLOPs | 2.7 TFLOPs | 2.25 TFLOPs |
| Texture Units | 192 | 192 | 96 | 88 |
| Tex. Fillrate | 159.4 GTex/s | 169 GTex/s | 84.5 GTex/s | 70.4 GTex/s |
| Memory | 4 GB GDDR5 | 4 GB GDDR5 | GDDR5 | GDDR5 |
| Memory clock | 1250 MHz | 1250 MHz | 1375 MHz | 1250 MHz |
| PowerTune Max Power | 375W | 450W | 250W | 200W |
| Typical Gaming Power | 350W | 415W | 190W | 140W |
| Typical Idle Power | 37W | 37W | 20W | 20W |

The HD6990 has a default core clock of 830 MHz, putting it between the HD6950 and the HD6970. However, it also has an enhanced-performance BIOS that raises its clockspeed to 880 MHz, the same as the HD6970. The memory is clocked at 1250 MHz, the same as the HD6950. The card has 4 GB of GDDR5 memory (2 GB for each GPU). AMD is not planning a 2 GB version at this time.

In fact, if we look closely at the specifications, we can see that this card mostly has twice what is found on the HD6970, and a little more than twice what is found on the HD6950. The HD6990 has 3072 stream processors compared to 1536 on the HD6970; it has 192 texture units while the HD6970 has 96 (notice a pattern here?); and the texture fillrate is either 159.4 GTex/s or 169 GTex/s depending on the BIOS setting, roughly double the 84.5 GTex/s of the HD6970 and more than double the 70.4 GTex/s of the HD6950. This also extends to the power consumption: the typical idle power sits at around 37W (as compared to 20W for the HD6950 and HD6970), while the typical load power during gaming is around 350-415W (as compared to 140-190W for the HD6950 and HD6970).
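The compute and fillrate figures in the table follow from the unit counts and the engine clock. The short Python sketch below recomputes them, assuming the usual peak-throughput convention of one multiply-add (two floating-point operations) per stream processor per clock and one texel per texture unit per clock. The function names are ours, purely for illustration.

```python
# Peak-throughput arithmetic using the values from the specification table.
# Assumption: each stream processor retires one multiply-add (2 FLOPs) per
# clock, and each texture unit filters one texel per clock.

CARDS = {
    # name: (stream processors, texture units, engine clock in MHz)
    "HD6990": (3072, 192, 830),
    "HD6990 OC": (3072, 192, 880),
    "HD6970": (1536, 96, 880),
    "HD6950": (1408, 88, 800),
}


def peak_tflops(stream_processors, clock_mhz):
    """Peak single-precision throughput in TFLOPs."""
    return stream_processors * 2 * clock_mhz * 1e6 / 1e12


def peak_gtexels(texture_units, clock_mhz):
    """Peak texture fillrate in GTex/s."""
    return texture_units * clock_mhz * 1e6 / 1e9


for name, (sps, tmus, clock) in CARDS.items():
    print(f"{name:10s} {peak_tflops(sps, clock):.2f} TFLOPs  "
          f"{peak_gtexels(tmus, clock):.1f} GTex/s")
```

Under these assumptions the results match the table to within rounding, which is why the HD6990 at 880 MHz lands at exactly twice the HD6970.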

FEATURES

The HD6990 has the same features as the other HD6xxx cards, plus a trick or two up its sleeve.

Dual-BIOS with factory overclocking

One cool feature that we saw introduced on the HD6950/HD6970 cards is the use of a dual-BIOS on the card. While the BIOS switch on the HD6950/HD6970 cards was used to select a backup BIOS chip, AMD has used the dual-BIOS in a different way on the HD6990. The read-only BIOS is still a backup, running at 830 MHz with a voltage of 1.12V, which is the stock speed for this card. However, when the switch is changed to the second setting, the card is overclocked to 880 MHz and the voltage is increased to 1.175V. The BIOS switch is covered with a label advising users to first read the manual.

For those wondering why AMD doesn’t use the overclocked setting as default, part of the problem could be that overclocked settings use a lot of power, which pushes the limit of what the PCI-Express specifications permit.
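To put numbers on that limit, here is a rough Python sketch of the power budget. It assumes the commonly cited PCI Express limits of 75 W from the x16 slot, 75 W per 6-pin connector and 150 W per 8-pin connector, and it uses the card's two 8-pin connectors and the PowerTune maximum power figures from the specification table. The helper name is hypothetical.

```python
# Power available to a card within the PCI Express limits.
# Assumed limits: 75 W from the x16 slot, 75 W per 6-pin connector,
# 150 W per 8-pin connector.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def spec_power_budget(connectors):
    """Total in-spec power for a card with the given auxiliary connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


# The HD6990 takes power from two 8-pin connectors.
budget = spec_power_budget(["8-pin", "8-pin"])

# PowerTune maximum power figures from the specification table.
powertune_max = {
    "HD6990 (830 MHz BIOS)": 375,
    "HD6990 OC (880 MHz BIOS)": 450,
}

for name, watts in powertune_max.items():
    status = "within" if watts <= budget else "over"
    print(f"{name}: {watts} W is {status} the {budget} W budget "
          f"({watts - budget:+d} W)")
```

With these assumed limits the stock BIOS sits exactly at the budget, while the overclocked BIOS is allowed to exceed it.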

For those who want to overclock the card even further, AMD has increased the maximum available in Overdrive to 1200 MHz for the GPU cores.

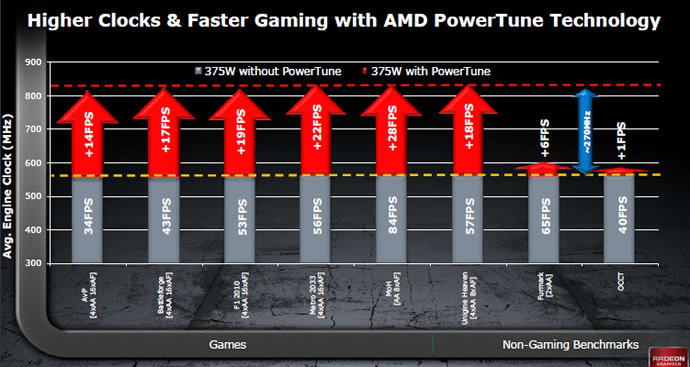

PowerTune

PowerTune was introduced with the HD6950/HD6970, and is an interesting technology that tries to get the most out of the cards while still staying under TDP. PowerTune dynamically adjusts the clock frequency so that the power usage never exceeds the TDP limit. This allows the card to automatically fine-tune its settings depending on the kind of application a user is running.

Manufacturers who build a card without any kind of power-saving technology must design it to cope with a situation where it draws the highest amount of power possible. A few examples of this situation are “worst-case” programs like Furmark or OCCT, which are designed to load the GPU completely. However, the simple fact is that the GPU is rarely (if ever) at full load in games. With PowerTune, the GPU can be clocked at a much higher frequency; in the rare instances where the GPU is loaded so much that it consumes too much power (breaking the TDP limit), PowerTune can downclock the GPU (or GPUs) until the whole card is within the TDP limit. AMD says that without PowerTune, the HD6990 would never have been able to run at 830/880 MHz, instead having to settle for around 600 MHz. While PowerTune might throttle the GPUs a bit when using Furmark or OCCT, this benefits games, as the card can be clocked much higher.
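The idea can be illustrated with a toy model in Python. This is not AMD's algorithm: it simply assumes that power scales linearly with clock and with load, and the 500 W full-load figure, the load values and the function name are made up for the example.

```python
def powertune_clock(target_mhz, load, watts_at_target, tdp_watts,
                    floor_mhz=500, step_mhz=10):
    """Return the highest clock, in step_mhz steps below target_mhz,
    whose estimated power stays at or below tdp_watts.

    Toy model: power scales linearly with clock and with load (0.0-1.0).
    Real hardware also depends on voltage, temperature and leakage.
    """
    clock = target_mhz
    while clock > floor_mhz:
        estimated_watts = watts_at_target * load * clock / target_mhz
        if estimated_watts <= tdp_watts:
            break
        clock -= step_mhz
    return max(clock, floor_mhz)


# Hypothetical card: 500 W at 830 MHz under a 100% synthetic load,
# with a 375 W power limit.
gaming = powertune_clock(830, load=0.7, watts_at_target=500, tdp_watts=375)
stress = powertune_clock(830, load=1.0, watts_at_target=500, tdp_watts=375)
print(f"Gaming load: {gaming} MHz, stress test: {stress} MHz")
```

With these invented numbers the gaming load keeps the full 830 MHz, while the stress test is pulled down to 620 MHz. A card without this kind of throttling would have to ship at the lower clock for every workload.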

Updated Eyefinity

The HD6990 supports the new Portrait 5×1 mode that was introduced in the Catalyst drivers in late 2010.

With the right monitors, this setup should be one of the best uses of Eyefinity, as it allows users to get a much better aspect ratio when using 5 monitors in vertical orientation.
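A quick Python sketch shows why the portrait layout gives a friendlier aspect ratio. It assumes identical 1920×1080 panels and ignores bezel compensation; the function name is ours.

```python
from fractions import Fraction


def eyefinity_surface(columns, panel_width, panel_height, portrait=False):
    """Combined resolution of `columns` identical panels placed side by side.

    Bezel compensation is ignored.
    """
    if portrait:
        # Rotating a panel swaps its width and height.
        panel_width, panel_height = panel_height, panel_width
    width, height = columns * panel_width, panel_height
    return width, height, Fraction(width, height)


layouts = [
    ("3x1 landscape", 3, False),
    ("5x1 landscape", 5, False),
    ("5x1 portrait", 5, True),
]

for label, columns, portrait in layouts:
    w, h, aspect = eyefinity_surface(columns, 1920, 1080, portrait)
    print(f"{label}: {w}x{h}, aspect ratio {float(aspect):.2f}:1")
```

Five such panels in portrait come out at 5400×1920, roughly 2.8:1, compared with about 8.9:1 for the same five panels in landscape.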

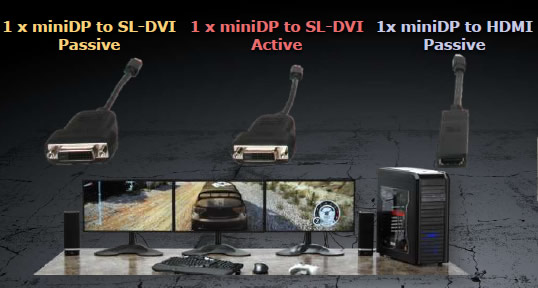

One problem for AMD with Eyefinity is that it requires at least one monitor with DisplayPort. Even though the prices for DisplayPort monitors are coming down, they still are more expensive than regular HDMI/DVI monitors. AMD has decided to get around this by including an active DVI to DisplayPort adapter with every HD6990 card. This means users will be able to set up a 3-monitor Eyefinity setup using 3 DVI monitors. This will drastically decrease the entry-price for Eyefinity.

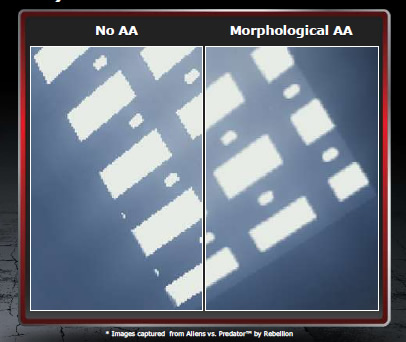

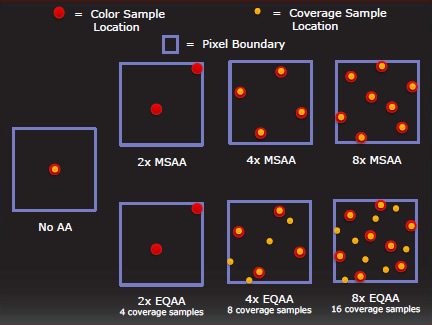

EQAA and MLAA

The HD6990 also supports the new anti-aliasing methods called Enhanced Quality Anti-aliasing (EQAA) and Morphological Anti-aliasing (MLAA).

EQAA is a new set of MSAA modes with up to 16 coverage samples per pixel. It has custom sample patterns and filters, and according to AMD, offers better quality at the same memory footprint. It is compatible with adaptive AA, Super-sampling AA and Morphological AA.

Morphological AA is a post-process filtering technique accelerated with DirectCompute. It delivers full-scene anti-aliasing, meaning it is not limited to polygon edges and alpha-tested surfaces. Performance is similar to edge-detect CFAA, though morphological AA applies to all edges. It can even be used on still images. Games do not need AA-support to use MLAA, and it can be turned on in the Catalyst Control Center.

Due to logistical restrictions, we could not more closely examine these different AA techniques and other image optimizations, but we hope to revisit this and include comparisons to Nvidia’s cards. As far as we can see in our testing, the image quality still is great.

3D

While AMD is not pushing 3D as much as Nvidia, they still do support 3D gaming. Instead of doing it with their own closed technique, they are supporting open standards, and many games can be played in 3D using a middleware program like DDD. During their presentation of the HD6990, AMD discussed 2 upcoming games: Dragon Age 2 and Deus Ex: Human Revolution. Both games will support 3D with AMD’s cards. Dragon Age 2 uses DDD to support AMD HD3D, and Deus Ex will actually have native support for HD3D. At this time we do not know much more, but it will be interesting to see how well they play in 3D on AMD’s hardware compared to Nvidia’s more mature 3D technology.

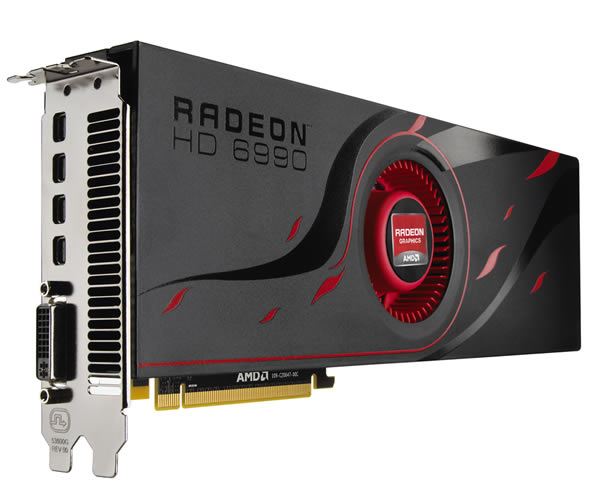

Pictures & Impressions

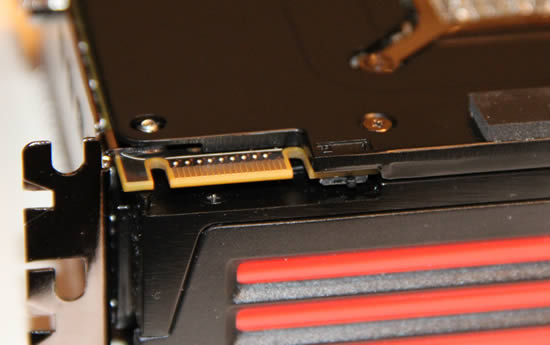

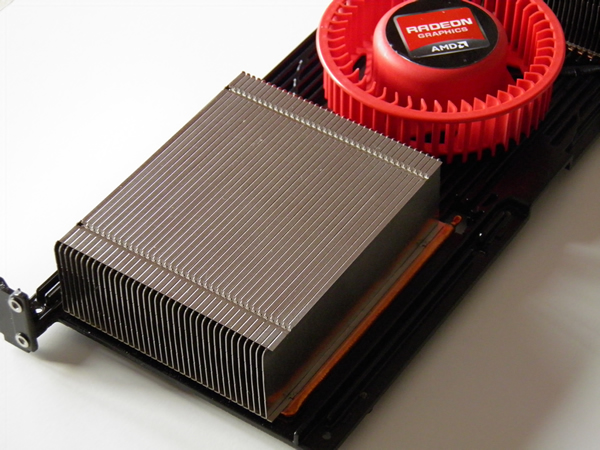

At first glance this card looks remarkably similar to the other cards of the HD6xxx series, just longer. It is a huge card–30.5 centimeters long, the same as the HD5970. As expected, the card needs power from two 8-pin PCI-Express connectors.

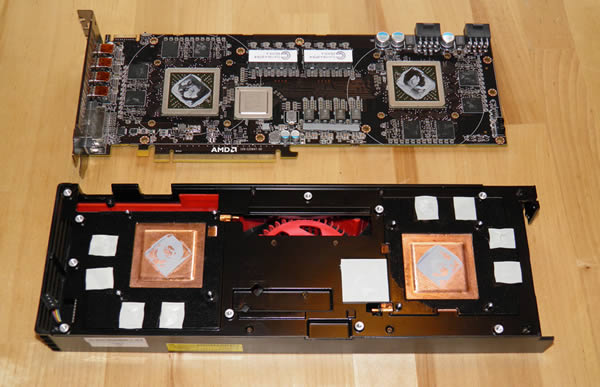

AMD uses a thermal interface material (TIM) paste for the coolers that changes phase when it is heated up for the first time. Unfortunately, this means we cannot remove the heatsink and cooler to take a look at the chips without decreasing the performance of the cooling system. AMD therefore supplied us with a few images from an opened card.

The GPUs now sit on each side of the big fan and are angled.

Two large vapor chambers, one on top of each GPU, help carry away up to 450W of heat.

The card comes with 4 mini-DP connectors (DP1.2) and one DL-DVI connector. As we mentioned earlier, all cards will come with one active DP to DVI adapter, one passive DP to DVI adapter, and one passive DP to HDMI adapter. This allows users to use 3 DVI monitors for Eyefinity. For those who want more monitors, at least one needs to be a monitor with a DisplayPort.

Testing Methodology

We tested this card on two base systems, with an additional system for comparison.

System 1: Intel [email protected] GHz, 8 GB DDR3, HD 6990, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

System 2: Intel [email protected] GHz, 8 GB DDR3, HD 6990, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

System 3: Intel [email protected], 16 GB DDR3, GTX580, Gigabyte P67A-UD7, Win7 Home Premium

SYNTHETIC BENCHMARKS

Our first batch of benchmarks is synthetic; these tests try to simulate a real-life situation.

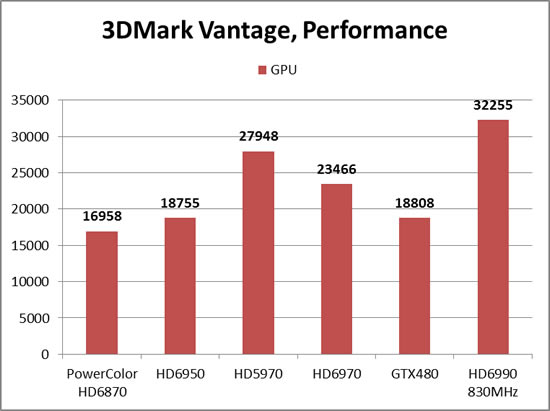

3DMark Vantage

3DMark Vantage is still one of the most popular benchmarks even though it is getting a bit old now. It lets us benchmark the DX10 performance of the cards.

Intel [email protected] GHz, 8 GB DDR3, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

In our first system the HD6990 excels in 3DMark Vantage’s Performance test. The GPU score for the HD6990 is 15% better than the card it is replacing, the HD5970.

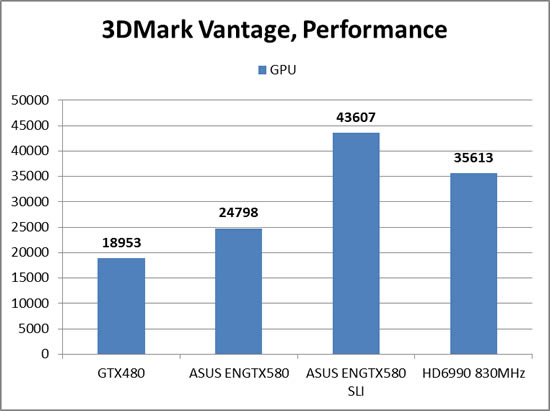

HD6990: Intel [email protected] GHz, 8 GB DDR3, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

GTX580: Gigabyte P67A-UD7, 16 GB memory. We could not manage to get a GTX580 to our tester in Europe, which is why we have tried to match up these two systems as closely as possible.

As we switch to a new motherboard that allows for more overclocking and compare the HD6990 to the GTX580 and two GTX580s in SLI, we see that the HD6990 still performs very well. The dominance of the GTX580 in SLI does give us a hint as to how the GTX590 could perform.

3DMark 11

“3DMark 11 is the latest version of the world’s most popular benchmark for measuring the graphics performance of gaming PCs. Designed for testing DirectX 11 hardware running on Windows 7 and Windows Vista the benchmark includes six all new benchmark tests that make extensive use of all the new features in DirectX 11 including tessellation, compute shaders and multi-threading. After running the tests 3DMark gives your system a score with larger numbers indicating better performance. Trusted by gamers worldwide to give accurate and unbiased results, 3DMark 11 is the best way to test DirectX 11 under game-like loads.”

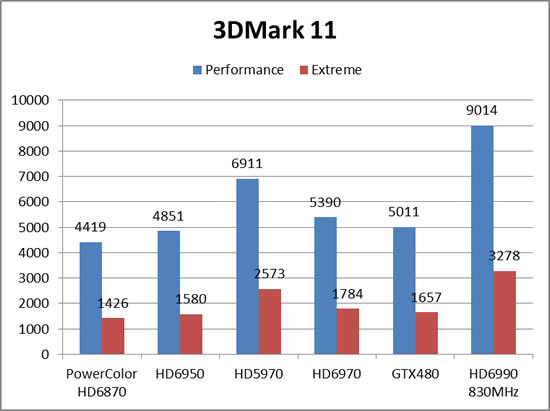

Intel [email protected] GHz, 8 GB DDR3, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

When we switch over to demanding DX11 benchmarks, the HD6990 is almost 30% faster than the HD5970. We see an almost 2x increase from the HD6970 to the HD6990.

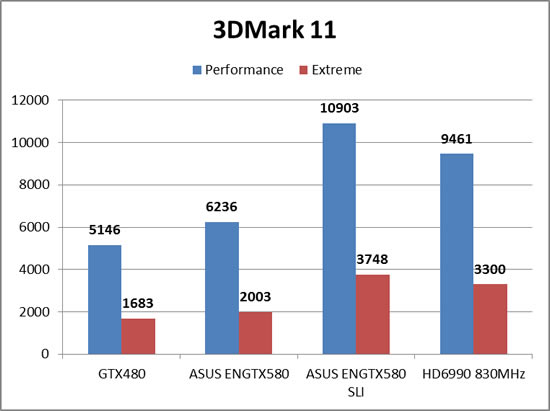

HD6990: Intel [email protected] GHz, 8 GB DDR3, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

GTX580: Gigabyte P67A-UD7, 16 GB memory. We could not manage to get a GTX580 to our tester in Europe, which is why we have tried to match up these two systems as closely as possible.

The two GTX580s in SLI barely beat the single card HD6990. Considering the price difference between one HD6990 and two GTX580s, it is a great result for the HD6990.

DX10 GAMING: CRYSIS WARHEAD

So how does the HD6990 handle older games like Crysis: Warhead?

Crysis Warhead is the much anticipated standalone expansion to Crysis, featuring an updated CryENGINE™ 2 with better optimization. It was one of the most anticipated titles of 2008.

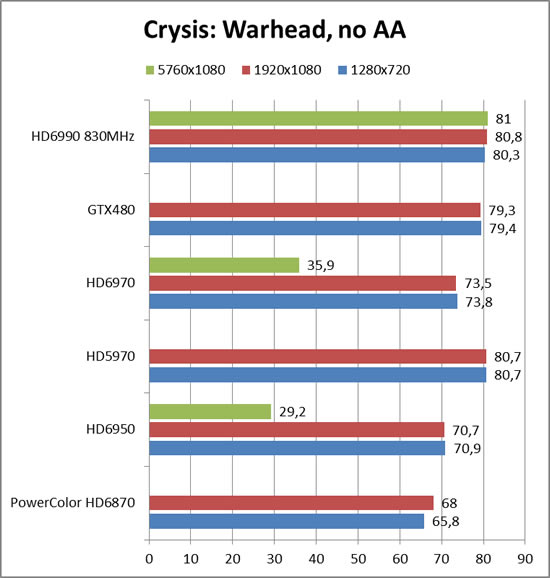

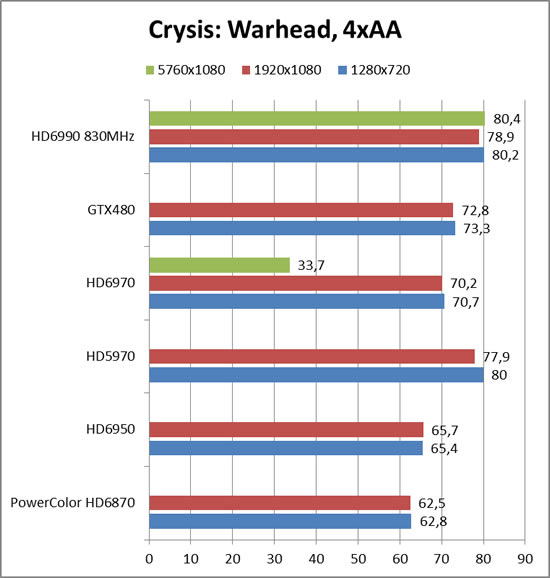

Intel [email protected] GHz, 8 GB DDR3, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

It is amazing to see that a game like Crysis Warhead, which was once considered too demanding for any video card, can now run on the Enthusiast settings at 5760×1080 (3 monitors in Eyefinity) and top out at 80 FPS regardless of the resolution.

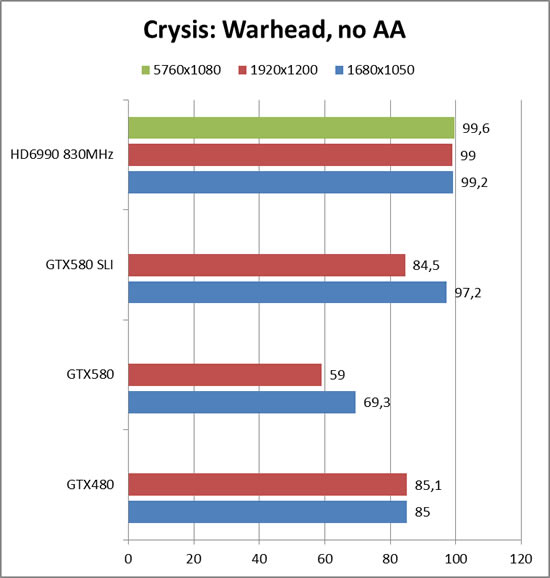

HD6990: Intel [email protected] GHz, 8 GB DDR3, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

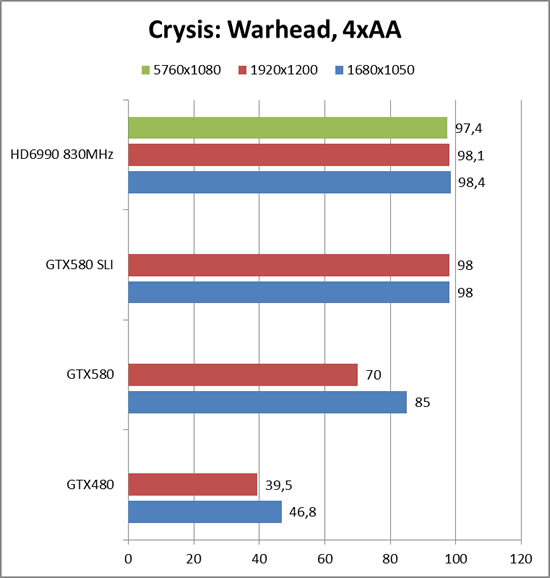

GTX580: Gigabyte P67A-UD7, 16 GB memory. We could not manage to get a GTX580 to our tester in Europe, which is why we have tried to match up these two systems as closely as possible.

The extra CPU clockspeed of this system seems to boost performance. While the HD6990 still holds its own with the 2 extra monitors, the GTX580 SLI setup actually loses some performance as we move up to 1920×1200. As this does not happen at 4xAA, we suspect that something else may have affected the runs for the GTX580.

Intel [email protected] GHz, 8 GB DDR3, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

When we turn on 4xAA, all cards except the HD6990 drop in performance.

HD6990: Intel [email protected] GHz, 8 GB DDR3, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

GTX580: Gigabyte P67A-UD7, 16 GB memory. We could not manage to get a GTX580 to our tester in Europe, which is why we have tried to match up these two systems as closely as possible.

Both the GTX580s in SLI and the HD6990 easily stay around 100 fps when we turn on 4xAA. It is obvious the video cards are not the limiting factor here.

DX11 GAMING: LOST PLANET 2

While it is nice to see that the HD6990 performs well in older games, it is even more important that it performs well in recent games.

“Lost Planet 2 is a third-person shooter video game developed and published by Capcom. The game is the sequel to Lost Planet: Extreme Condition, taking place ten years after the events of the first game, on the same fictional planet.”

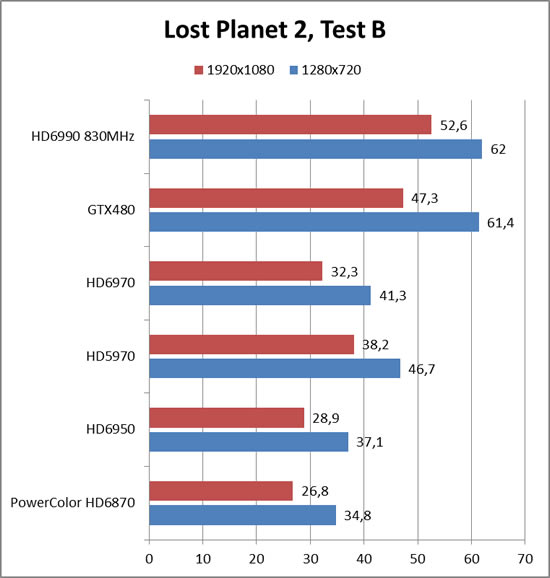

Intel [email protected] GHz, 8 GB DDR3, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

The engine that powers Capcom’s various games, including Lost Planet 2, seems to prefer Nvidia cards. Even though the HD6990 is a huge step up from the previous AMD cards, including the HD5970, it just barely manages to beat the GTX480. On the other hand the performance is still great.

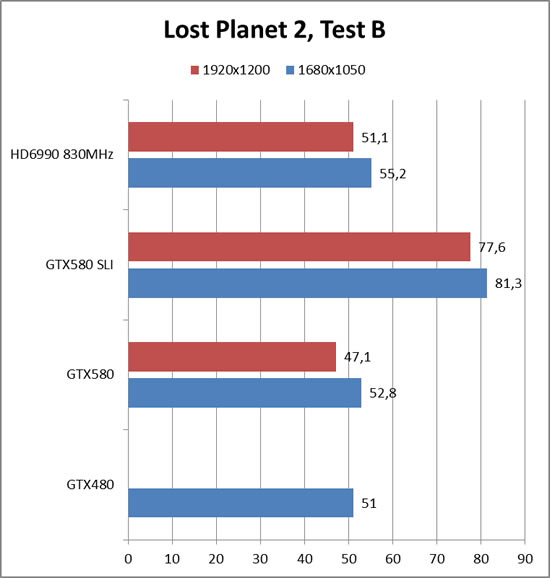

HD6990: Intel [email protected] GHz, 8 GB DDR3, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

GTX580: Gigabyte P67A-UD7, 16 GB memory. We could not manage to get a GTX580 to our tester in Europe, which is why we have tried to match up these two systems as closely as possible.

When comparing to the GTX580 and GTX580 in SLI configuration, we see that the HD6990 just barely manages to beat the GTX580 and comes far behind the GTX580 in SLI configuration. The performance still is great but it is obvious this engine favors Nvidia cards.

DX11 GAMING: JUST CAUSE 2

“Just Cause 2 is an open world action-adventure video game. It was released in North America on March 23, 2010, by Swedish developer Avalanche Studios and Eidos Interactive, and was published by Square Enix. It is the sequel to the 2006 video game Just Cause.

Just Cause 2 employs the Avalanche Engine 2.0, an updated version of the engine used in Just Cause. The game is set on the other side of the world from the original Just Cause, on the fictional island of Panau in Southeast Asia. Panau has varied terrain, from desert to alpine to rainforest. Rico Rodriguez returns as the protagonist, aiming to overthrow the evil dictator Pandak “Baby” Panay and confront his former mentor, Tom Sheldon.”

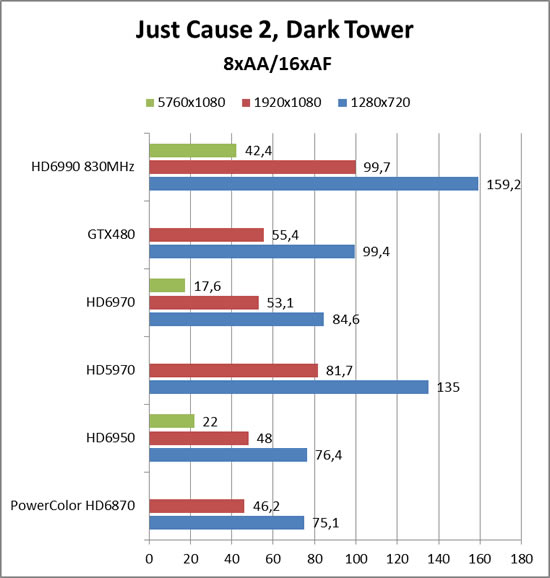

Intel [email protected] GHz, 8 GB DDR3, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

The HD6990 easily dispatches all other cards in this system. Its performance of 42 FPS with 3 monitors is impressive.

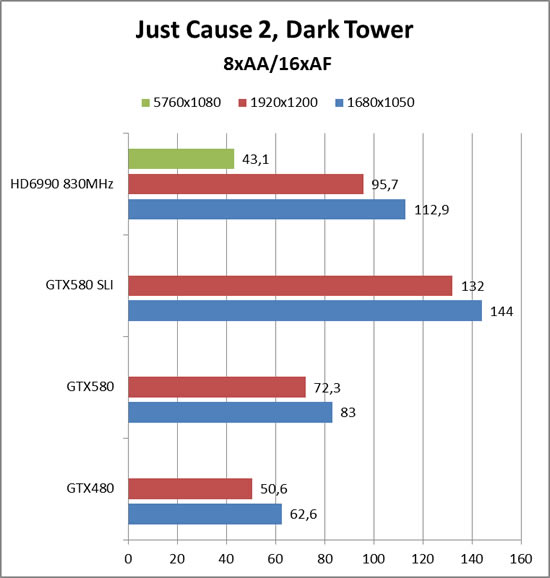

HD6990: Intel [email protected] GHz, 8 GB DDR3, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

GTX580: Gigabyte P67A-UD7, 16 GB memory. We could not manage to get a GTX580 to our tester in Europe, which is why we have tried to match up these two systems as closely as possible.

While the HD6990 cannot outperform the GTX580s in SLI, it still pumps out some impressive framerates.

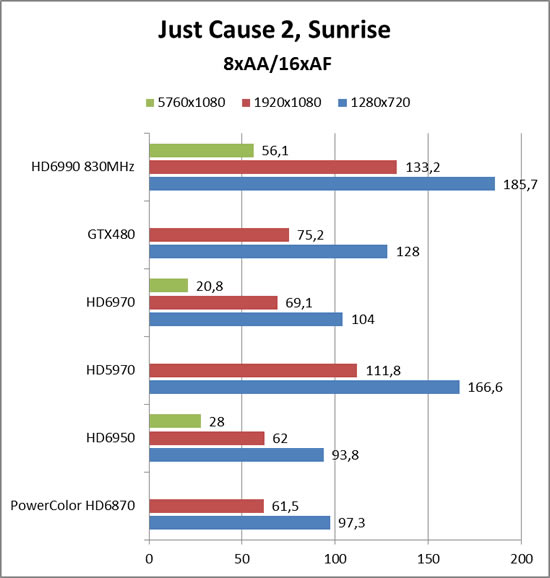

Intel [email protected] GHz, 8 GB DDR3, Gigabyte H67MA-UD2H, OCZ Vertex2, Win7 Home Premium

Switching to the Sunrise test gives us the same result as the Dark Tower test on this system. The HD6990 crushes all the other cards when it comes to performance.

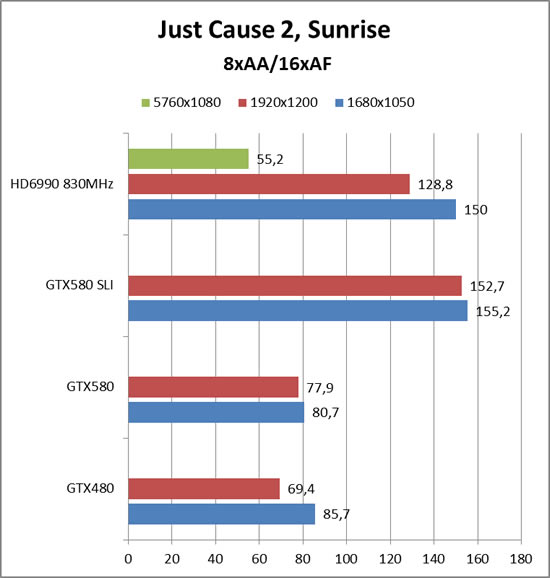

HD6990: Intel [email protected] GHz, 8 GB DDR3, ASUS P8P67 Pro, OCZ Vertex2, Win7 Home Premium

GTX580: Gigabyte P67A-UD7, 16 GB memory. We could not manage to get a GTX580 to our tester in Europe, which is why we have tried to match up these two systems as closely as possible.

On this system, the HD6990 almost reaches the GTX580 in SLI. The extra clockspeed of the CPU helps us reach 55 frames per second with 3 monitors.

OVERCLOCKING

As we mentioned earlier, the HD6990 comes with a second BIOS that increases both the clockspeed and the voltage of the GPUs. It is not a huge jump (from 830 MHz to 880 MHz), but it should help. Unfortunately, it doesn’t. We ran the card not only at 880 MHz but also at 905 MHz, and the benefits were very small. In fact, in some cases, the framerate actually dropped a bit. As far as our own testing goes, we do not see any direct benefit in going from 830 MHz to 880 MHz.
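For context, the clock increases themselves are small. The Python sketch below computes the best-case gain, assuming performance scales linearly with the core clock and nothing else (memory bandwidth, CPU or PowerTune throttling) gets in the way. The function name is ours.

```python
def clock_uplift_percent(base_mhz, new_mhz):
    """Percentage increase in core clock from base_mhz to new_mhz."""
    return (new_mhz - base_mhz) / base_mhz * 100


# Clock speeds we tested against the 830 MHz default.
for mhz in (880, 905):
    uplift = clock_uplift_percent(830, mhz)
    print(f"830 -> {mhz} MHz: at most {uplift:.1f}% faster")
```

Even in this ideal case the ceiling is about 6% at 880 MHz and about 9% at 905 MHz, so small or inconsistent gains in real games are not that surprising.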

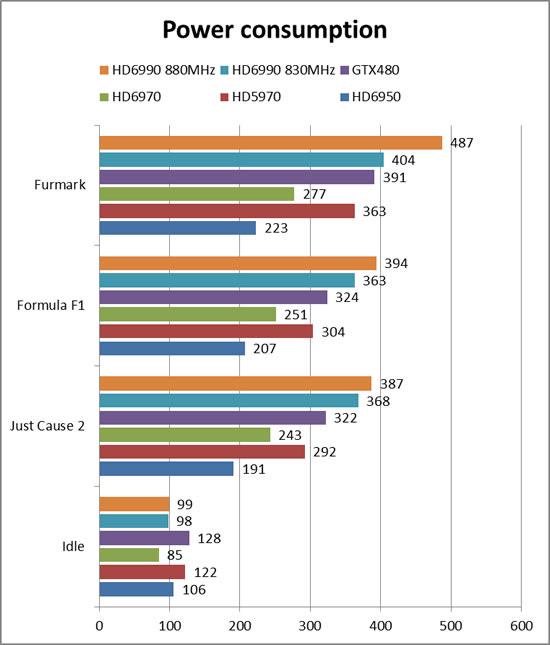

POWER CONSUMPTION

We measured the power consumption at the wall for the whole system except the monitor. The PSU installed was a Thermaltake GRAND 750W PSU.

As expected, the HD6990 needs a lot of power. When idling, it is actually just as energy efficient as any of the slower cards, but as we start to play games, we quickly see that the HD6990 needs the most power of all the tested cards. It is interesting to see that switching to the overclocked BIOS setting results in a lot more power being drawn even though our testing does not show any more performance.

TEMPERATURES

We ran Furmark with the card for an hour and noticed that the fan started running quicker when the GPU cores were around 80 degrees Celsius, keeping the temperature around 80-84C during the whole run. Users will certainly hear this card when gaming, as the fan is loud, and needs to spin fast to keep the card cool.

CONCLUSION

We had high expectations of the HD6990 and the card definitely delivered. It is extremely gratifying to know that we can play just about any game at the maximum setting and still get great framerates. Needless to say, this card is fast. We liked Eyefinity, and the fact that it is possible to set up a 3-monitor Eyefinity setup with DVI monitors is great news, as it means that creating such a cool multi-monitor setup will no longer be as expensive. While the dual-BIOS solution with the overclocked BIOS is an interesting idea, we are not sure it is that useful, since our testing showed a negligible performance increase.

When it comes to price, AMD has told us the recommended price will be $699. Compared to the GTX580, which starts at USD 470, this is an excellent price. This is especially true as the HD6990 almost outperforms two GTX580s in SLI in certain games. Here in Sweden, e-tailers have said that the card will cost around 5500-5995 SKr with tax (USD 730 – 800). Again, that is a great price, as a single GTX580 costs around 4000 SKr (USD 530). If anything, this should put some pressure on Nvidia to decrease prices on the GTX580, and also to bring out their own dual-GPU card at a competitive price.

The HD6990 is an impressive successor to the HD5970. AMD has once again shown that they still hold the crown of fastest overall video card.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
