Nvidia fielded two variants of the GTX-460, a 768MB model and a 1GB model. Both have 336 shaders, but there are subtle differences in the architecture.

Nvidia GTX-460(s) 768 & 1GB

Nvidia did things a little differently with these GPUs. Both models have 336 shaders, but they differ subtly in design. The 1GB model has a wider 256-bit memory bus, while the 768MB card has a 192-bit memory bus. Having two GTX-460s is a little confusing, so we will refer to them as the GTX-460 768MB and the GTX-460 1GB. The GTX-460 1GB has 32 ROPs and the 768MB model has 24 ROPs. So the major differences between the two cards are that the 1GB card has 256MB more memory, 8 more ROPs and a 64-bit wider memory bus. The projected prices are $199 for the 768MB model and $229 for the 1GB model.

If you are stuck on a monitor with 1680×1050 resolution, the 768MB model will probably be great. If you have a 1920×1200 monitor or plan on moving to one, it’s likely you’ll want the 1GB model. For the extra $30 we’d say hold out and get the 1GB model; thirty bucks is a small price to pay for a measure of future proofing.
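To put the $30 premium in perspective, the short sketch below works out the relative gain for each spec quoted above. The dictionary layout and the function name are our own, chosen for illustration; the numbers are the ones listed in this article.

```python
# Specs quoted in this article for the two GTX-460 variants.
gtx460_768 = {"memory_mb": 768, "rops": 24, "bus_bits": 192, "price_usd": 199}
gtx460_1gb = {"memory_mb": 1024, "rops": 32, "bus_bits": 256, "price_usd": 229}


def percent_gain(base, upgraded):
    """Return the percentage increase of each spec going from base to upgraded."""
    return {key: (upgraded[key] - base[key]) / base[key] * 100 for key in base}


gains = percent_gain(gtx460_768, gtx460_1gb)
for spec, gain in gains.items():
    print(f"{spec}: +{gain:.1f}%")
```

Memory, ROPs and bus width each grow by about a third, while the price rises by roughly 15%.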

From the outside you can’t tell the two GPUs apart. Retail models will have a sticker telling you which is which, but the reference cards look identical.

| GPU | GTX-480 | GTX-470 | GTX-465 | GTX-460 1GB | GTX-460 768MB | GTX-295 | 5970 |
|---|---|---|---|---|---|---|---|
| Shader units | 480 | 448 | 352 | 336 | 336 | 2x 240 | 2x 1600 |
| ROPs | 48 | 40 | 32 | 32 | 24 | 2x 28 | 2x 32 |
| GPU core | GF100 | GF100 | GF100 | GF104 | GF104 | 2x GT200b | 2x Cypress |
| Transistors (approx) | 3200M | 3200M | 2336M | 2240M | 2240M | 2x 1400M | 2x 2154M |
| Memory Size | 1536 MB | 1280 MB | 1024 MB | 1024 MB | 768 MB | 2x 896 MB | 2x 1024 MB |
| Memory Bus Width | 384 bit | 320 bit | 256 bit | 256 bit | 192 bit | 2x 448 bit | 2x 256 bit |
| Core Clock | 700 MHz | 607 MHz | 607 MHz | 675 MHz | 675 MHz | 576 MHz | 725 MHz |
| Memory Clock | 924 (x4) MHz | 837 (x4) MHz | 802 (x4) MHz | 900 (x4) MHz | 900 (x4) MHz | 999 MHz | 1000 (x4) MHz |
| Price | $499 | $319 | $250 | $229 | $199 | $500 | $599 |

In addition to the differences mentioned above, the 768MB model has a 384KB cache and the 1GB model has a 512KB cache. While the differences might seem subtle, at higher resolutions the 1GB model is sure to be the better choice in some games. What we’ve seen is that most gamers live at 1680×1050 and below, and the percentage of users on 1920×1200 is fairly small. It’s a hard choice to make, but we feel the extra $30 for the better equipped GTX-460 1GB is well worth the money. Demanding games using the latest technology need lots of memory, and even at 1680×1050 you’ll reap benefits from the 1GB model.
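The bus-width gap translates directly into peak memory bandwidth. Here is a rough sketch, assuming the usual GDDR5 convention that the effective data rate is four times the listed memory clock (the "x4" in the table) and that theoretical peak bandwidth is the bus width in bytes multiplied by that rate. The function name is ours, and real-world throughput will be lower than this theoretical peak.

```python
def peak_bandwidth_gb_s(bus_width_bits, memory_clock_mhz, transfers_per_clock=4):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


bw_1gb = peak_bandwidth_gb_s(256, 900)  # GTX-460 1GB: 256-bit bus, 900 MHz
bw_768 = peak_bandwidth_gb_s(192, 900)  # GTX-460 768MB: 192-bit bus, 900 MHz
print(f"GTX-460 1GB:   {bw_1gb:.1f} GB/s")
print(f"GTX-460 768MB: {bw_768:.1f} GB/s")
```

With identical memory clocks, the 1GB card's wider bus gives it about a third more theoretical bandwidth (115.2 GB/s against 86.4 GB/s).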

General Features

Powered by NVIDIA® GeForce GTX460

GeForce CUDA™ Technology

Microsoft® DirectX® 11 Support

Bring new levels of visual realism to gaming on the PC and get top-notch performance

Microsoft® Windows® 7 Ready

Enable PC users to enjoy an advanced computing experience and to do more with their PC

SLI Support

Multi-GPU technology for extreme performance

Take Your Game Beyond HD

Dual-link DVI able to drive the industry’s largest & highest resolution flat-panel displays up to 2560×1600

The Evolution of Multi-Display Gaming powered by GeForce® GTX 400 Series GPUs

Imagine expanding your gaming real estate across three displays in Full HD 3D for a completely immersive gaming experience. With the introduction of NVIDIA GeForce GTX 400 GPUs, you can now use the award winning NVIDIA 3D Vision to build the world’s first multi-display 3D gaming experience on your PC.

EXPAND YOUR GAMING REAL-ESTATE FOR THE ULTIMATE IMMERSIVE EXPERIENCE.

> Get a complete view of the battlefield in real-time strategy games.

> Manage your inventory windows, quest logs, and track your party in your favorite MMORPGs.

> See your enemy’s movement quicker and react first in first-person-shooters.

> Immerse yourself in the driver seat of your favorite racing game and be a part of the action.

3D GAMING ACROSS THREE 1080P DISPLAYS, FOR A BREATHTAKING GAMING EXPERIENCE.

> Advanced NVIDIA software automatically converts over 425 games to stereoscopic 3D without the need for special game patches.

> GeForce GTX 400 GPUs deliver the graphics horsepower to drive 750M pixels/second for 3D Vision gaming across three Full HD 1080p screens, for an incredible 5760×1080 experience.

> Compatible with all 3D Vision-Ready desktop monitors and projectors.

GET UP AND GAMING IN MINUTES WITH THE WORLD’S MOST ADVANCED MULTI-DISPLAY SOFTWARE.

> Works with all standard monitor connectors, without requiring special display adapter dongles.

> Simple to use setup wizard guides users through setup and allows bezel correction to enable a seamless display experience.

> Advanced GPU synchronization ensures seamless support and maximum framerate.

> Use Accessory Displays to watch movies, browse the web, or chat with friends.
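The 750M pixels/second figure quoted above can be sanity-checked with simple arithmetic. This sketch assumes a 120 Hz refresh rate (60 frames per eye), which is how 3D Vision displays operate; the refresh rate is not stated in the text above, so treat it as our assumption.

```python
# Three 1080p panels side by side, matching the 5760x1080 figure above.
width, height = 3 * 1920, 1080
refresh_hz = 120  # assumption: 3D Vision runs at 120 Hz (60 Hz per eye)

pixels_per_frame = width * height
pixels_per_second = pixels_per_frame * refresh_hz
print(f"{width}x{height} @ {refresh_hz} Hz = {pixels_per_second / 1e6:.0f}M pixels/second")
```

Under that assumption the result is about 746M pixels/second, which lines up with the rounded 750M claim.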

BEST IN CLASS 2D SURROUND GAMING

> Game across three non-3D displays with resolutions up to 2560×1600.

> Supports landscape or portrait mode for ultimate display flexibility.

AWARDS: Popular Mechanics Editor’s Choice, CES 2010

REVIEWS: Tech Report, “Nvidia’s 3D Vision Surround: to Eyefinity and beyond?” January 14, 2010

CUDA

What is CUDA?

CUDA is NVIDIA’s parallel computing architecture that enables dramatic increases in computing performance by harnessing the power of the GPU (graphics processing unit).

With millions of CUDA-enabled GPUs sold to date, software developers, scientists and researchers are finding broad-ranging uses for CUDA, including image and video processing, computational biology and chemistry, fluid dynamics simulation, CT image reconstruction, seismic analysis, ray tracing and much more.

Computing is evolving from “central processing” on the CPU to “co-processing” on the CPU and GPU. To enable this new computing paradigm, NVIDIA invented the CUDA parallel computing architecture that is now shipping in GeForce, ION, Quadro, and Tesla GPUs, representing a significant installed base for application developers.

In the consumer market, nearly every major consumer video application has been, or will soon be, accelerated by CUDA, including products from Elemental Technologies, MotionDSP and LoiLo, Inc.

CUDA has been enthusiastically received in the area of scientific research. For example, CUDA now accelerates AMBER, a molecular dynamics simulation program used by more than 60,000 researchers in academia and pharmaceutical companies worldwide to accelerate new drug discovery.

In the financial market, Numerix and CompatibL announced CUDA support for a new counterparty risk application and achieved an 18X speedup. Numerix is used by nearly 400 financial institutions.

An indicator of CUDA adoption is the ramp of the Tesla GPU for GPU computing. There are now more than 700 GPU clusters installed around the world at Fortune 500 companies ranging from Schlumberger and Chevron in the energy sector to BNP Paribas in banking.

And with the recent launches of Microsoft Windows 7 and Apple Snow Leopard, GPU computing is going mainstream. In these new operating systems, the GPU will not only be the graphics processor, but also a general purpose parallel processor accessible to any application.


PhysX

Some Games that use PhysX (Not all inclusive)

Batman: Arkham Asylum

Watch Arkham Asylum come to life with NVIDIA® PhysX™ technology! You’ll experience ultra-realistic effects such as pillars, tile, and statues that dynamically destruct with visual explosiveness. Debris and paper react to the environment and the force created as characters battle each other; smoke and fog will react and flow naturally to character movement. Immerse yourself in the realism of Batman Arkham Asylum with NVIDIA PhysX technology.

Darkest of Days

Darkest of Days is a historically based FPS where gamers will travel back and forth through time to experience history’s “darkest days”. The player uses period and future weapons as they fight their way through some of the epic battles in history. The time travel aspects of the game lead the player on missions where they at times need to fight on both sides of a war.

Sacred 2 – Fallen Angel

In Sacred 2 – Fallen Angel, you assume the role of a character and delve into a thrilling story full of side quests and secrets that you will have to unravel. Breathtaking combat arts and sophisticated spells are waiting to be learned. A multitude of weapons and items will be available, and you will choose which of your character’s attributes you will enhance with these items in order to create a unique and distinct hero.

Dark Void

Dark Void is a sci-fi action-adventure game that combines an adrenaline-fuelled blend of aerial and ground-pounding combat. Set in a parallel universe called “The Void,” players take on the role of Will, a pilot dropped into incredible circumstances within the mysterious Void. This unlikely hero soon finds himself swept into a desperate struggle for survival.

Cryostasis

Cryostasis puts you in 1968 at the Arctic Circle, Russian North Pole. The main character, Alexander Nesterov, is a meteorologist incidentally caught inside an old nuclear ice-breaker, North Wind, frozen in the ice desert for decades. Nesterov’s mission is to investigate the mystery of the ship captain’s death – or, as it may well be, a murder.

Mirror’s Edge

In a city where information is heavily monitored, agile couriers called Runners transport sensitive data away from prying eyes. In this seemingly utopian paradise of Mirror’s Edge, a crime has been committed and now you are being hunted.

What is NVIDIA PhysX Technology?

NVIDIA® PhysX® is a powerful physics engine enabling real-time physics in leading edge PC games. PhysX software is widely adopted by over 150 games and is used by more than 10,000 developers. PhysX is optimized for hardware acceleration by massively parallel processors. GeForce GPUs with PhysX provide an exponential increase in physics processing power taking gaming physics to the next level.

What is physics for gaming and why is it important?

Physics is the next big thing in gaming. It’s all about how objects in your game move, interact, and react to the environment around them. Without physics in many of today’s games, objects just don’t seem to act the way you’d want or expect them to in real life. Currently, most of the action is limited to pre-scripted or ‘canned’ animations triggered by in-game events like a gunshot striking a wall. Even the most powerful weapons can leave little more than a smudge on the thinnest of walls; and every opponent you take out, falls in the same pre-determined fashion. Players are left with a game that looks fine, but is missing the sense of realism necessary to make the experience truly immersive.

With NVIDIA PhysX technology, game worlds literally come to life: walls can be torn down, glass can be shattered, trees bend in the wind, and water flows with body and force. NVIDIA GeForce GPUs with PhysX deliver the computing horsepower necessary to enable true, advanced physics in the next generation of game titles making canned animation effects a thing of the past.

Which NVIDIA GeForce GPUs support PhysX?

The minimum requirement to support GPU-accelerated PhysX is a GeForce 8-series or later GPU with a minimum of 32 cores and a minimum of 256MB dedicated graphics memory. However, each PhysX application has its own GPU and memory recommendations. In general, 512MB of graphics memory is recommended unless you have a GPU that is dedicated to PhysX.
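The requirement above boils down to a simple check. The sketch below is only an illustration: the function names and the boolean flag for "8-series or later" are our own simplification, not part of any NVIDIA tool or API.

```python
def meets_physx_minimum(is_geforce_8_or_later, cores, memory_mb):
    """Minimum for GPU-accelerated PhysX as quoted above:
    GeForce 8-series or later, at least 32 cores, at least 256MB of memory."""
    return is_geforce_8_or_later and cores >= 32 and memory_mb >= 256


def meets_general_recommendation(memory_mb, dedicated_to_physx=False):
    """512MB is recommended unless the GPU is dedicated to PhysX."""
    return dedicated_to_physx or memory_mb >= 512


# GTX-460 768MB from this review: 336 cores, 768MB.
print(meets_physx_minimum(True, 336, 768))
print(meets_general_recommendation(768))
```

Both GTX-460 variants clear the minimum and the general 512MB recommendation comfortably. Individual PhysX titles may still ask for more, as noted above.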

How does PhysX work with SLI and multi-GPU configurations?

When two, three, or four matched GPUs are working in SLI, PhysX runs on one GPU, while graphics rendering runs on all GPUs. The NVIDIA drivers optimize the available resources across all GPUs to balance PhysX computation and graphics rendering. Therefore users can expect much higher frame rates and a better overall experience with SLI.

A new configuration that’s now possible with PhysX is 2 non-matched (heterogeneous) GPUs. In this configuration, one GPU renders graphics (typically the more powerful GPU) while the second GPU is completely dedicated to PhysX. By offloading PhysX to a dedicated GPU, users will experience smoother gaming.

Finally we can put the above two configurations all into 1 PC! This would be SLI plus a dedicated PhysX GPU. Similarly to the 2 heterogeneous GPU case, graphics rendering takes place in the GPUs now connected in SLI while the non-matched GPU is dedicated to PhysX computation.

Why is a GPU good for physics processing?

The multithreaded PhysX engine was designed specifically for hardware acceleration in massively parallel environments. GPUs are the natural place to compute physics calculations because, like graphics, physics processing is driven by thousands of parallel computations. Today, NVIDIA’s GPUs have as many as 480 cores, so they are well-suited to take advantage of PhysX software. NVIDIA is committed to making the gaming experience exciting, dynamic, and vivid. The combination of graphics and physics impacts the way a virtual world looks and behaves.

NVIDIA PureVideo Technology

NVIDIA® PureVideo® technology brings movies to life. PureVideo uses advanced techniques found only on very high-end consumer players and TVs to make Blu-ray, HD DVD, standard-definition DVD movies, PC and mobile device content look crisp, clear, smooth and vibrant. Regardless of whether you’re watching on an LCD monitor or a plasma TV, with PureVideo the picture will always be precise, vivid and lifelike.

PureVideo is included on the processing cores on NVIDIA GPUs and handheld devices for the decoding and playback of video. If your PC is equipped with one of these GPUs, you can take advantage of PureVideo and PureVideo HD. NVIDIA applications processors also leverage this technology to deliver top class video quality to mobile devices.

NVIDIA PureVideo HD

Available on select NVIDIA® GeForce® 7, 8, and 9 series and NVIDIA Quadro® GPUs, PureVideo HD is a superset of the PureVideo functionality that is essential for the ultimate Blu-ray and HD DVD movie experience on a desktop PC or notebook computer.

NVIDIA PureVideo

Available on most NVIDIA GeForce, NVIDIA APX applications processors and NVIDIA Quadro GPUs, PureVideo is for playback of standard-definition DVDs, PC video and mobile content.

Direct Compute

DirectCompute Support on NVIDIA’s CUDA Architecture GPUs

Microsoft’s DirectCompute is a new GPU Computing API that runs on NVIDIA’s current CUDA architecture under both Windows Vista and Windows 7. DirectCompute is supported on current DX10 class GPUs and DX11 GPUs. It allows developers to harness the massive parallel computing power of NVIDIA GPUs to create compelling computing applications in consumer and professional markets.

As part of the DirectCompute presentation at the Game Developer Conference (GDC) in March 2009 in San Francisco, CA, NVIDIA showed three demonstrations running on a currently available NVIDIA GeForce GTX 280 GPU.

As a processor company, NVIDIA enthusiastically supports all languages and APIs that enable developers to access the parallel processing power of the GPU. In addition to DirectCompute and NVIDIA’s CUDA C extensions, there are other programming models available including OpenCL™. A Fortran language solution is also in development and is available in early access from The Portland Group.

NVIDIA has a long history of embracing and supporting standards since a wider choice of languages improves the number and scope of applications that can exploit parallel computing on the GPU. With C and Fortran language support here today and OpenCL and DirectCompute available this year, GPU Computing is now mainstream. NVIDIA is the only processor company to offer this breadth of development environments for the GPU.

OpenCL

OpenCL (Open Computing Language) is a new cross-vendor standard for heterogeneous computing that runs on the CUDA architecture. Using OpenCL, developers will be able to harness the massive parallel computing power of NVIDIA GPUs to create compelling computing applications. As the OpenCL standard matures and is supported on processors from other vendors, NVIDIA will continue to provide the drivers, tools and training resources developers need to create GPU accelerated applications.

In partnership with NVIDIA, OpenCL was submitted to the Khronos Group by Apple in the summer of 2008 with the goal of forging a cross platform environment for general purpose computing on GPUs. NVIDIA has chaired the industry working group that defines the OpenCL standard since its inception and shipped the world’s first conformant GPU implementation for both Windows and Linux in June 2009.

NVIDIA has been delivering OpenCL support in end-user production drivers since October 2009, supporting OpenCL on all 180,000,000+ CUDA architecture GPUs shipped since 2006.

NVIDIA’s Industry-leading support for OpenCL:

2010

March – NVIDIA releases updated R195 drivers with the Khronos-approved ICD, enabling applications to use OpenCL on NVIDIA GPUs and other processors at the same time

January – NVIDIA releases updated R195 drivers, supporting developer-requested OpenCL extensions for Direct3D9/10/11 buffer sharing and loop unrolling

January – Khronos Group ratifies the ICD specification contributed by NVIDIA, enabling applications to use multiple OpenCL implementations concurrently

2009

November – NVIDIA releases R195 drivers with support for optional features in the OpenCL v1.0 specification such as double precision math operations and OpenGL buffer sharing

October – NVIDIA hosts the GPU Technology Conference, providing OpenCL training for an additional 500+ developers

September – NVIDIA completes OpenCL training for over 1000 developers via free webinars

September – NVIDIA begins shipping OpenCL 1.0 conformant support in all end user (public) driver packages for Windows and Linux

September – NVIDIA releases the OpenCL Visual Profiler, the industry’s first hardware performance profiling tool for OpenCL applications

July – NVIDIA hosts first “Introduction to GPU Computing and OpenCL” and “Best Practices for OpenCL Programming, Advanced” webinars for developers

July – NVIDIA releases the NVIDIA OpenCL Best Practices Guide, packed with optimization techniques and guidelines for achieving fast, accurate results with OpenCL

July – NVIDIA contributes source code and specification for an Installable Client Driver (ICD) to the Khronos OpenCL Working Group, with the goal of enabling applications to use multiple OpenCL implementations concurrently on GPUs, CPUs and other types of processors

June – NVIDIA releases industry-first OpenCL 1.0 conformant drivers and developer SDK

April – NVIDIA releases industry first OpenCL 1.0 GPU drivers for Windows and Linux, accompanied by the 100+ page NVIDIA OpenCL Programming Guide, an OpenCL JumpStart Guide showing developers how to port existing code from CUDA C to OpenCL, and OpenCL developer forums

2008

December – NVIDIA shows off the world’s first OpenCL GPU demonstration, running on an NVIDIA laptop GPU at

June – Apple submits OpenCL proposal to Khronos Group; the OpenCL Working Group is formed and NVIDIA volunteers to chair it

2007

December – NVIDIA Tesla product wins PC Magazine Technical Excellence Award

June – NVIDIA launches first Tesla C870, the first GPU designed for High Performance Computing

May – NVIDIA releases first CUDA architecture GPUs capable of running OpenCL in laptops & workstations

2006

November – NVIDIA released first CUDA architecture GPU capable of running OpenCL

Pictures

As we mentioned earlier, the unbranded cards are impossible to tell apart until you plug them in and check with GPU-Z, but the GTX-460 768MB is on top and the GTX-460 1GB is on the bottom in all the pictures.

Nvidia went with a larger fan and the temperatures reflect that. With fewer active transistors, the temperatures we got were quite reasonable and the acoustics ran in the 30 decibel range.

Both sport dual DVI and a mini HDMI. The one retail model we have (Gigabyte) provides HDMI by adapter (from mini HDMI).

Except for the stickers, the backs of both cards are identical and remind us of a small populated city more than anything. The GTX-460 lineup is SLI capable, but you can see from the single connector that Nvidia is only going to allow 2 of these in SLI. So, if you’re looking at triple SLI you’ll need to move to the GTX-465 or above.

To show the relative size, the GTX-480 is on top, the next row left is the GTX-470, right the GTX-465, bottom left the GTX-460 768MB, then bottom right the GTX-460 1GB. Compared to their bigger brothers, the GTX-460s almost look like toys. Rest assured that in your hands they feel solid and durable. With this size reduction, almost any chassis out there should accommodate the new GPUs.

Same lineup here: the mighty GTX-480 on top, then the GTX-470, GTX-465, and the two GTX-460s.

The GTX-460s are on the left in this shot, and you can see you will need two 6 pin PCI-E connectors to drive one.

Testing & Methodology

To test the Nvidia GTX-460s, we did a fresh load of Windows 7 Ultimate, then applied all the updates we could find. We installed the latest motherboard drivers for the Asus Rampage 3, updated the BIOS, and loaded our test suite. We didn’t load graphics drivers because we wanted to clone the HD with the fresh load of Windows 7 without graphics drivers. That way we have a complete OS load with the testing suite that isn’t contaminated with GPU drivers. Should we need to switch GPUs, or run some Crossfire action later, all we have to do is clone from our cloned OS, install GPU drivers, and we are good to go.

We ran each test a total of 3 times and reported the average of the three scores. In the case of a screenshot of a benchmark, we ran that benchmark 3 times, tossed out the high and low scores, then posted the median result. If we received any seriously weird results, we kicked out that run and redid it. Say we got 55FPS in two benchmark runs and the third came in at 25FPS: we kick out the 25FPS run and repeat the test. That doesn’t happen very often, but anomalous runs do occasionally occur.
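The scoring procedure above can be sketched in a few lines. This is an illustration rather than the tooling actually used for this review: the function names are ours, and the 25% tolerance for flagging an anomalous run is a hypothetical threshold, since no exact cutoff is given.

```python
from statistics import mean, median


def find_anomalous_runs(scores, tolerance=0.25):
    """Return runs that deviate from the median by more than `tolerance`.

    `tolerance` is a fraction of the median; 0.25 is a hypothetical value.
    """
    mid = median(scores)
    return [s for s in scores if abs(s - mid) > tolerance * mid]


def summarize(scores):
    """Average for charted results, median for screenshot-style results."""
    return {"average": mean(scores), "median": median(scores)}


runs = [55, 55, 25]                 # the example from the text
print(find_anomalous_runs(runs))    # the 25 FPS run is flagged for a rerun

clean_runs = [55, 55, 54]           # hypothetical scores after the rerun
print(summarize(clean_runs))
```

With three runs, tossing the high and low scores leaves exactly the median, which is why `median` covers the screenshot case.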

Please note that due to new driver releases with performance improvements, we rebenched every card shown from the HD5870 and up for this review. The results here will differ from previous reviews due to the performance increases in drivers. So hold the “In the last Review” E-Mails and try not to overflow my E-Mail box yet again.

Test Rig

| Test Rig “HexZilla” | |
|---|---|
| Case Type | Silverstone Raven 2 |
| CPU | Intel Core I7 980 Extreme |
| Motherboard | Asus Rampage 3 |
| Ram | Kingston HyperX 12GB 1600 MHz 9-9-9-24 |
| CPU Cooler | Thermalright Ultra 120 RT (Dual 120mm Fans) |
| Hard Drives | 2x Corsair P128 Raid 0, 3x Seagate Constellation 2x RAID 0, 1x Storage |
| Optical | Asus BD Combo |
| GPU Tested | Nvidia GTX-460 768MB |
| Case Fans | 120mm Fan cooling the mosfet CPU area |
| Docking Stations | Thermaltake VION |
| Testing PSU | Silverstone Strider 1500 Watt |
| Legacy | Floppy |
| Mouse | Razer Lachesis |
| Keyboard | Razer Lycosa |
| Gaming Ear Buds | Razer Moray |
| Speakers | Logitech Dolby 5.1 |
| Any Attempt to Copy This System Configuration May Lead to Bankruptcy | |

Synthetic Benchmarks & Games

| Synthetic Benchmarks & Games | |
|---|---|
| 3DMark Vantage | |
| Metro 2033 | |
| Battlefield Bad Company 2 | |
| Dirt 2 | |
| Stone Giant | |
| Unigine Heaven v.2.1 | |
| Crysis | |
| Crysis Warhead | |
| Stalker COP | |

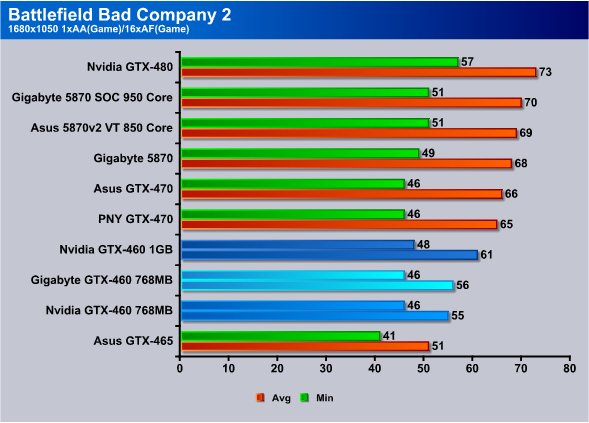

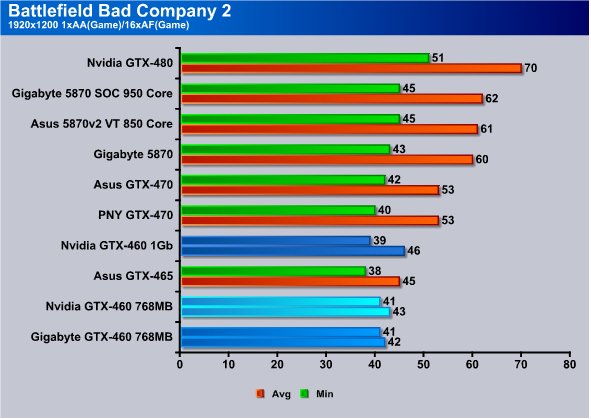

Battlefield Bad Company 2

Battlefield Bad Company™ 2 brings the spectacular Battlefield gameplay to the forefront of next-gen consoles and PC, featuring best-in-class vehicular combat set across a wide range of huge sandbox maps each with a different tactical focus. New vehicles like the All Terrain Vehicle (ATV) and the UH-60 transport helicopter allow for all-new multiplayer tactics in the warzone. Extensive fine-tuning ensures that this will be the most realistic vehicle combat experience to date. Tactical destruction is taken to new heights with the updated DICE Frostbite engine. Players can now take down entire buildings or create their own vantage points by blasting holes through cover, thereby delivering a unique dynamic experience in every match.

Players can also compete in 4-player teams in 2 exclusive squad-only game modes, fighting together to unlock exclusive team awards and achievements. Spawn on your squad to get straight into the action and use gadgets such as the tracer dart in conjunction with the RPG to devastating effect. Excellence in the battlefield is rewarded with an extensive range of pins, insignias and stars to unlock along with 50 dedicated ranks to achieve. Variety also extends into the range of customizable kits weapons and vehicles available. With 4 distinct character classes, dozens of weapons, several gadgets and specializations, players have over 15,000 kit variations to discover and master. Players will be able to fine-tune their preferred fighting style to give them the edge in combat.

The GTX-460 has a core revision advantage and edged out the GTX-465 in the 1680×1050 test.

Moving to 1920×1200, the GTX-460 1GB shows the advantage it has with extra memory. The GTX-460 768MB card falls behind the GTX-465 in average FPS, likely because the GTX-465 has 256MB more GDDR5 and a wider memory bus to work with.

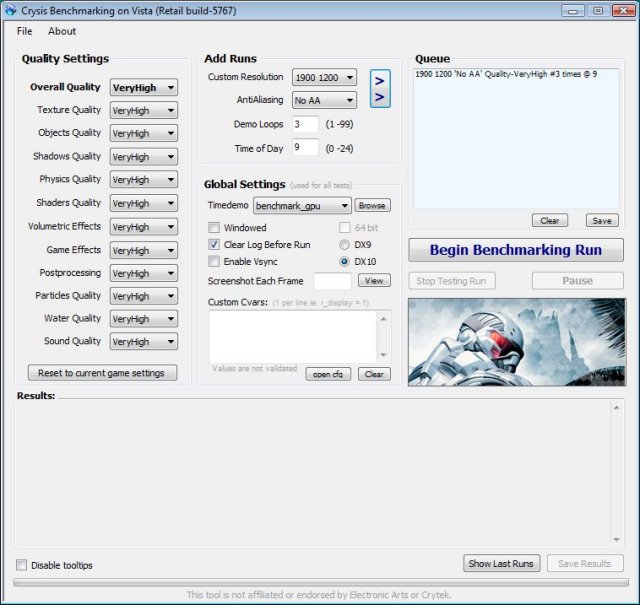

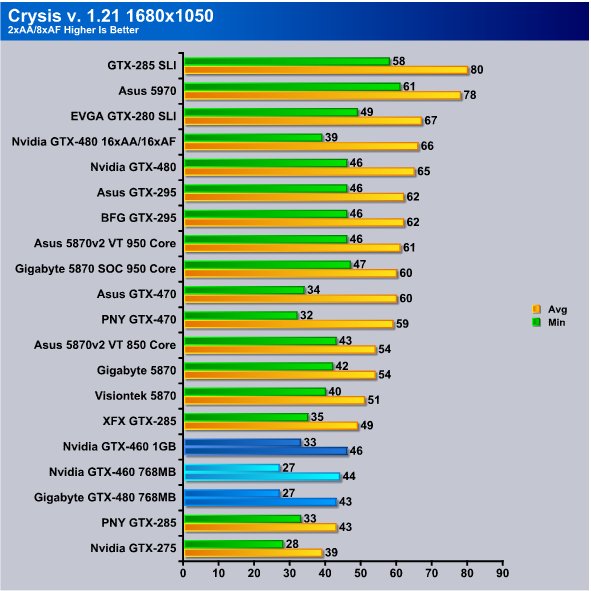

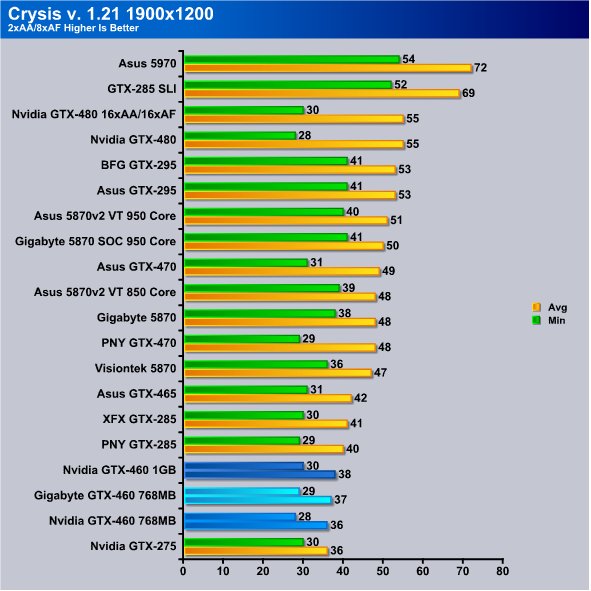

Crysis v. 1.21

Crysis is the most highly anticipated game to hit the market in the last several years. Crysis is based on the CryENGINE™ 2 developed by Crytek. The CryENGINE™ 2 offers real time editing, bump mapping, dynamic lights, network system, integrated physics system, shaders, shadows, and a dynamic music system, just to name a few of the state-of-the-art features that are incorporated into Crysis. As one might expect with this number of features, the game is extremely demanding of system resources, especially the GPU. We expect Crysis to be a primary gaming benchmark for many years to come.

With DX11 cards all over the e-tailers’ shelves, Crysis is one of the few DX9/DX10 games left that stresses the new generation of GPUs, and it allows us to show the power of the new generation against the old.

The GTX-460s sit above the GTX-275 and one of the GTX-285s, and just under the XFX GTX-285. They also fall below the 5870s. Think of it this way: at 1680×1050 the GTX-480 hit 46/65 FPS at $499, while the GTX-460s landed in the 27 to 33 FPS range (minimum FPS) and are playable at less than half the cost of the GTX-480.
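The value argument can be made concrete by dividing minimum FPS by price. A minimal sketch using only the figures quoted above; the metric (minimum FPS per $100) and the function name are our own choice. The text does not say which GTX-460 scored 27 and which scored 33, so the sketch brackets the result with the least and most favorable pairings instead of guessing.

```python
def min_fps_per_100_usd(min_fps, price_usd):
    """Minimum FPS delivered per $100 of card price."""
    return min_fps / price_usd * 100


gtx480 = min_fps_per_100_usd(46, 499)
# Least favorable pairing: lowest score with the higher GTX-460 price.
gtx460_worst = min_fps_per_100_usd(27, 229)
# Most favorable pairing: highest score with the lower GTX-460 price.
gtx460_best = min_fps_per_100_usd(33, 199)

print(f"GTX-480: {gtx480:.2f} min FPS per $100")
print(f"GTX-460: {gtx460_worst:.2f} to {gtx460_best:.2f} min FPS per $100")
```

Even in the least favorable pairing, the GTX-460 delivers more minimum FPS per dollar than the GTX-480 in this Crysis test.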

Crysis is somewhat bottlenecked by the CPU at lower resolutions, but at 1920×1200 we were amazed that the GTX-460s actually picked up a few FPS and made a good showing of it. We played Crysis at this resolution for about half an hour with a lot of enemy AI on the level, and it was entirely playable.

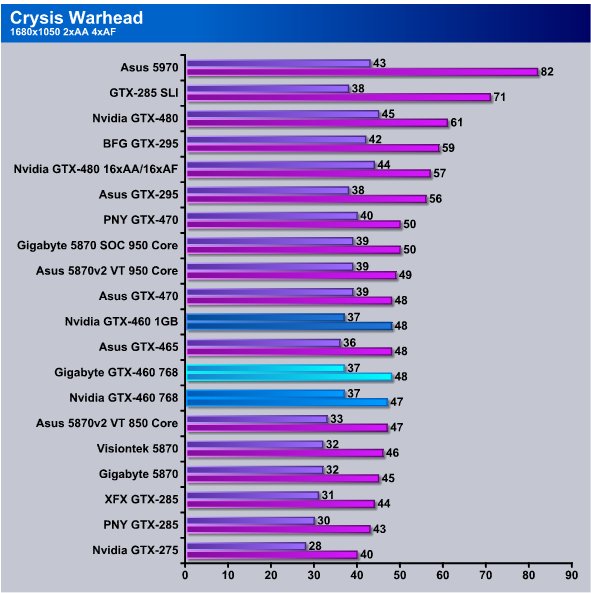

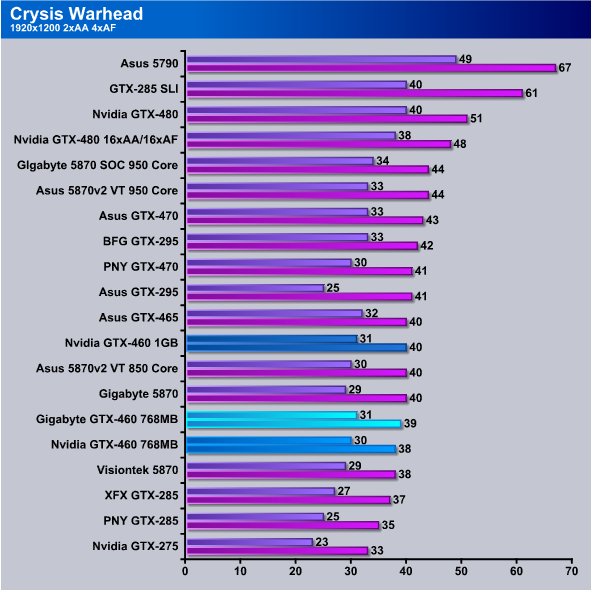

CRYSIS WARHEAD

Crysis Warhead is the much anticipated sequel of Crysis, featuring an updated CryENGINE™ 2 with better optimization. It was one of the most anticipated titles of 2008.

Warhead liked the GTX-460s, and they topped the stock clocked HD5870s. The 768MB models came in right below the GTX-465, and the 1GB model managed to squeak into the lead ahead of the GTX-465.

Here we see the GTX-460s ahead of the older 275s and 285s which, if you remember correctly, retailed for $499 and $350 respectively just a short time ago. The 460 768MB cards even managed to slide past the Visiontek 5870 but came in below the Gigabyte HD5870. The GTX-460 1GB scored a little better than the 768MB models, but not by much.

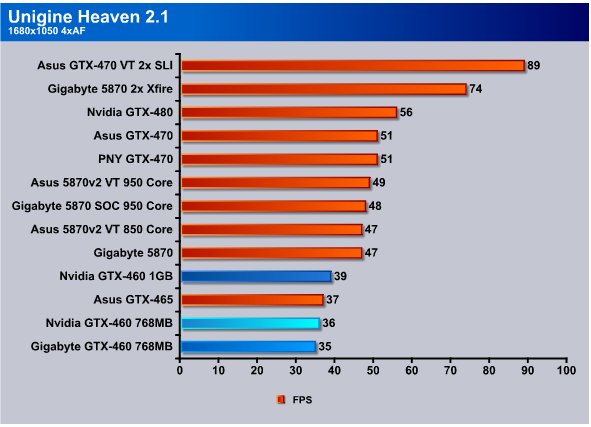

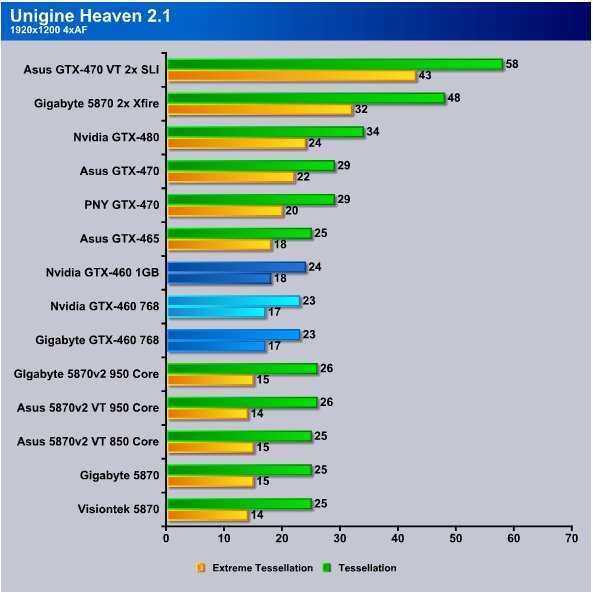

Unigine Heaven 2.1

Unigine Heaven is a benchmark program based on Unigine Corp’s latest engine, Unigine. The engine features DirectX 11, Hardware tessellation, DirectCompute, and Shader Model 5.0. All of these new technologies combined with the ability to run each card through the same exact test means this benchmark should be in our arsenal for a long time.

At the lower resolution of 1680×1050 we tested with normal Tessellation, and the GTX-460s were well into the playable FPS range and made a good showing for their price point.

Even at 1920×1200 with normal Tessellation, things looked pretty good, and despite dropping below 30 FPS the benchmarks didn’t look choppy. Crank Extreme Tessellation on and they didn’t hold up very well, but consider that the mighty GTX-480 hit 24FPS and the 460s hit 17 and 18. That’s not bad for GPUs that cost less than half what the GTX-480 will set you back.

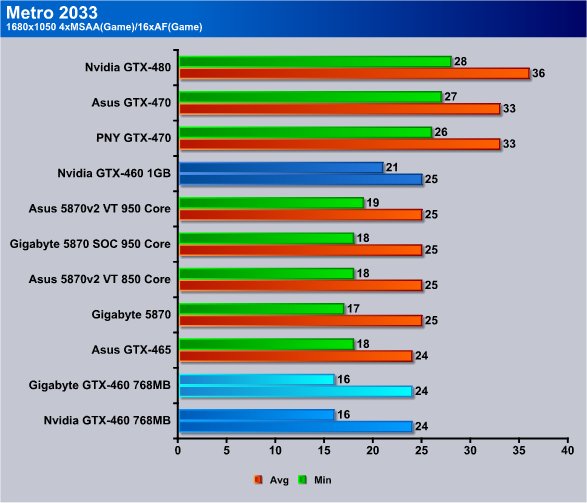

Metro 2033

Metro 2033 is an action-oriented video game with a combination of survival horror, and first-person shooter elements. The game is based on the novel Metro 2033 by Russian author Dmitry Glukhovsky. It was developed by 4A Games in Ukraine and released in March 2010 for the Xbox 360 and Microsoft Windows. In March 2009, 4A Games announced a partnership with Glukhovsky to collaborate on the game. The game was announced a few months later at the 2009 Games Convention in Leipzig; a first trailer came along with the announcement. When the game was announced, it had the subtitle The Last Refuge but this subtitle is no longer being used by THQ.

The game is played from the perspective of a character named Artyom. The story takes place in post-apocalyptic Moscow, mostly inside the metro system where the player’s character was raised (he was born before the war, in an unharmed city), but occasionally the player has to go above ground on certain missions and scavenge for valuables.

The game’s locations reflect the dark atmosphere of real metro tunnels, albeit in a more sinister and bizarre fashion. Strange phenomena and noises are frequent, and mostly the player has to rely only on their flashlight to find their way around in otherwise total darkness. Even more lethal is the surface, as it is severely irradiated and a gas mask must be worn at all times due to the toxic air. Water can often be contaminated as well, and short contacts can damage the player, or even kill outright.

Often, locations have an intricate layout, and the game lacks any form of map, leaving the player to try and find its objectives only through a compass – weapons cannot be used while visualizing it. The game also lacks a health meter, relying on audible heart rate and blood spatters on the screen to show the player what state they are in and how much damage was done. There is no on-screen indicator to tell how long the player has until the gas mask’s filters begin to fail, save for a wristwatch that is divided into three zones, signaling how much the filter can endure, so players must continue to check it every time they wish to know how long they have until their oxygen runs out, requiring the player to replace the filter (found throughout the game). The gas mask also indicates damage in the form of visible cracks, warning the player a new mask is needed. The game does feature traditional HUD elements, however, such as an ammunition indicator and a list of how many gas mask filters and adrenaline shots (med kits) remain.

Another important factor is ammunition management. As money lost its value in the game’s setting, cartridges are used as currency. There are two kinds of bullets that can be found, those of poor quality made by the metro-dwellers themselves, which are fairly common but less effective against targets, especially mutants, and those made before the nuclear war, which are rare and highly powerful, but are also necessary to purchase gear or items such as filters for the gas mask and med kits. Thus, the player is forced to manage their resources with care.

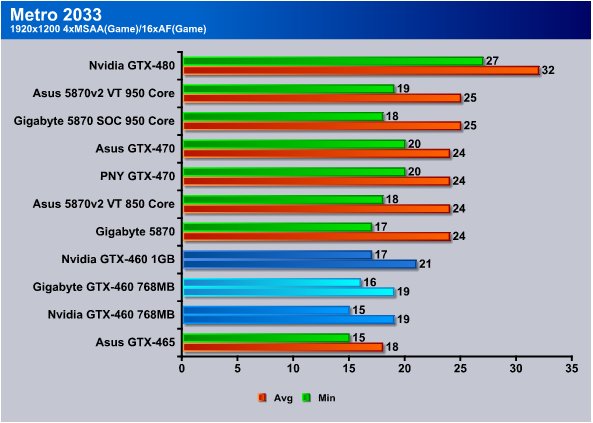

We left Metro 2033 on all high settings with Depth of Field on. We’ll get a screenshot as time allows.

Metro 2033 is the toughest game we bench and the charts reflect that. The 768MB models hit 16/24 FPS and the 1GB model hit 21/25. Now here’s the strange part. We play any game that looks bad on the charts to see about real life performance. Metro 2033 played seamlessly with no lag, drag or micro-stutters on both models of the GTX-460. We had considered benching it at lower settings but decided to let the chips fall where they may, and we are glad we did.

Kick things up to 1920×1200 and the 460’s hit 15 to 17 FPS minimum and 18/19 average, and to our amazement the game still played without lag or drag. Often when FPS drops the mouse will jump on the screen or scenes will stutter; we saw no evidence of that.

3DMark Vantage

For complete information on 3DMark Vantage, please follow this link:

www.futuremark.com/benchmarks/3dmarkvantage/features/

The newest video benchmark from the gang at Futuremark. This utility is still a synthetic benchmark, but one that more closely reflects real world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently, there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, thereby putting the ATI brand at a disadvantage. With the PhysX driver installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. If you look at the Driver Approval Policy for 3DMark Vantage it states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations or are they perfectly within their right since they own Ageia and all their IP? I think this point will quickly become moot once Futuremark releases an update to the test.

3DMark Vantage needs an update badly at this point and doesn’t do the GTX-4xx lineup justice, but people expect it, so we are still using it until the new version is released.

The GTX-460 768’s hit 11.4k and 11.5k and the 1GB model hits 12.4k. Even though they consistently trounce the GTX-285 and 275 in game benchmarks, they score lower on this aging benchmark.

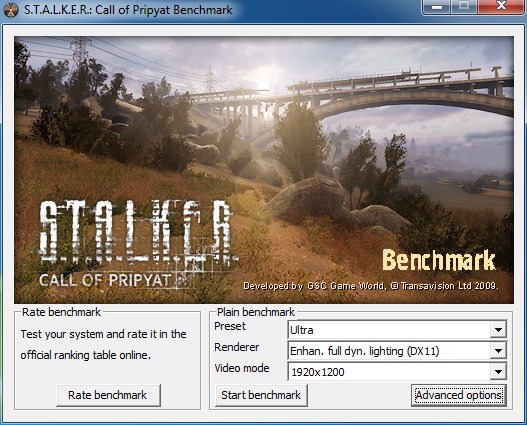

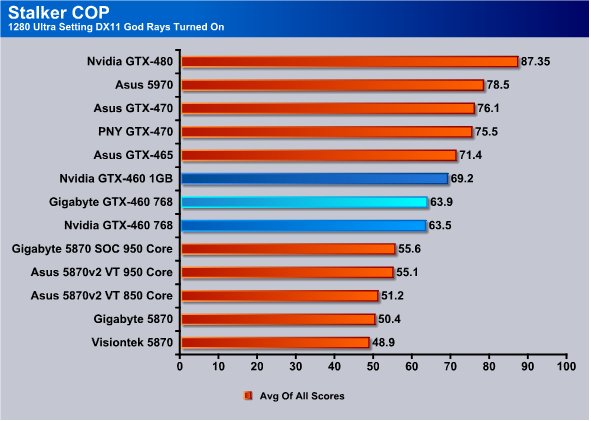

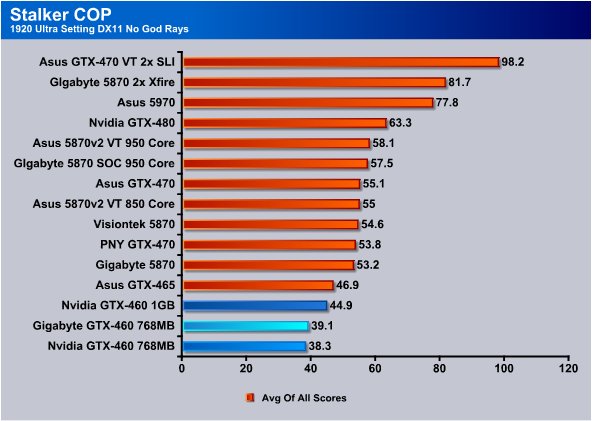

S.T.A.L.K.E.R.: Call of Pripyat

Call of Pripyat is the latest addition to the S.T.A.L.K.E.R. franchise. S.T.A.L.K.E.R. has long been considered the thinking man’s shooter, because it gives the player many different ways of completing the objectives. The game includes new advanced DirectX 11 effects as well as the continuation of the story from the previous games.

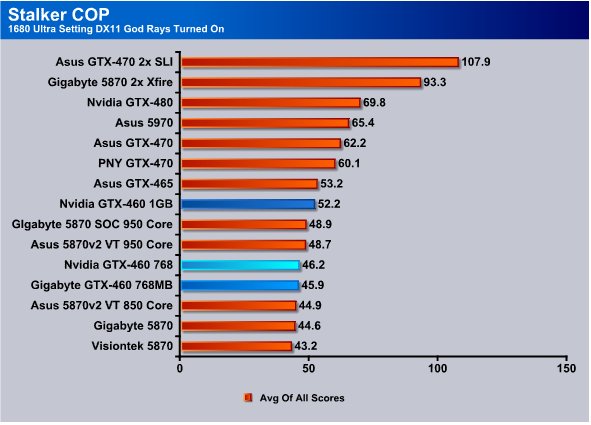

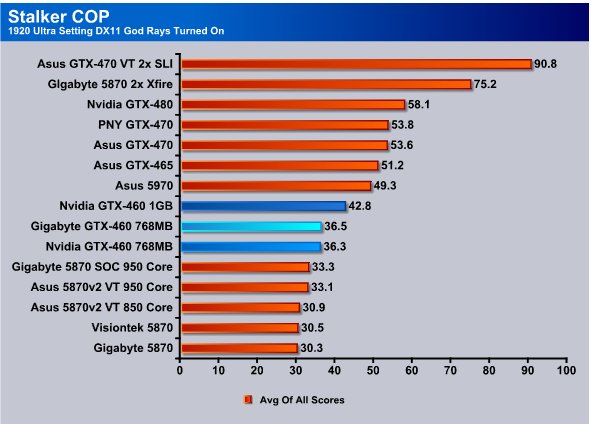

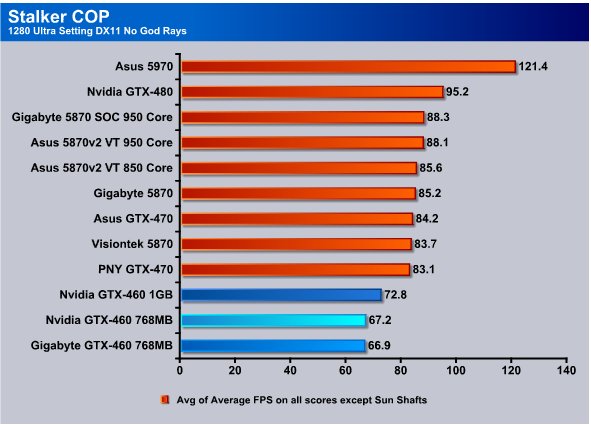

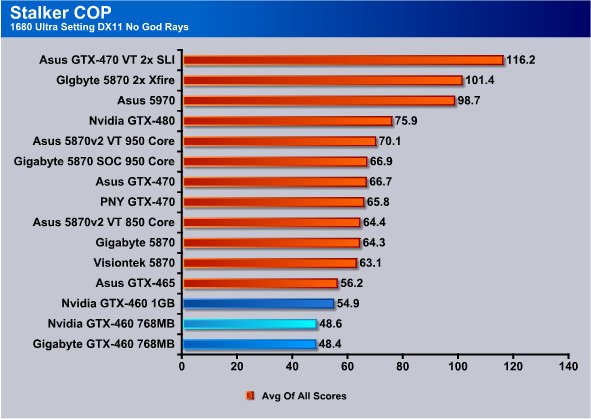

We bench Stalker with the Sun Shafts (AKA God Rays) figured in, then we remove the Sun Shafts from the results and show that in the last 3 charts. What you will generally see is that with Sun Shafts Nvidia’s design comes out ahead; without Sun Shafts it’s a race between Nvidia and ATI.

The GTX-460’s come out ahead of the HD5870’s in this test by a comfortable margin and they are right at their price point in the Nvidia lineup but way ahead of the curve for the ATI price point lineup.

Kick it up to 1680×1050 and the HD5870’s fight back. The GTX-460 1GB lands above the stock-clocked HD5870’s and below the overclocked HD5870’s.

At the top resolution of 1920×1200 the GTX-460 lineup tops all the HD5870’s and falls right below the HD5970, which is a dual-GPU top end ATI offering. Not too shabby for GPU’s that run $199 – $229.

With Sun Shafts out of the equation the ATI offerings jump up on top. The problem for us is that we like eye candy, mounds and mounds of eye candy. With Tessellation and Ray Tracing the Nvidia lineup just performs better and does so at a better price point. What we’ve been seeing is that DX11, Tessellation and, to some degree, Ray Tracing are picking up (mainstreaming) faster than any previous generation of GPU technologies, so unless ATI catches up they could be in a serious performance hole.

With the eye candy turned down and God Rays out of the game the HD5870’s top the 460 lineup, but let’s face it, in Stalker all the GPU’s run at acceptable levels. The GTX-460’s are doing it at less expense than any of the other offerings, so that’s a big big plus.

Here again at 1920×1200 with no God Rays all the GPU’s are chugging along like champions. We can’t help reiterating that we like eye candy, so the charts with Sun Shafts (AKA God Rays) will weigh heavier in our scoring. When we remove Sun Shafts for benching we just want to show that by adjusting (accepting less) eye candy you can still enjoy games. Not that we ever accept less ourselves, we are eye candy hogs.

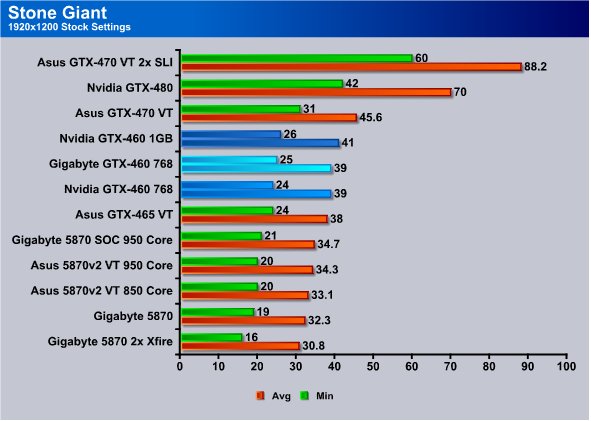

Stone Giant

We used a 90 second Fraps run and recorded the Min/Avg/Max FPS rather than rely on the built in utility for determining FPS. We started the benchmark, triggered Fraps and let it run on stock settings for 90 seconds without making any adjustments or changing camera angles. We just let it run at default and had Fraps record the FPS and log them to a file for us.
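For readers who want to reproduce the Min/Avg/Max figures from a logged run, here is a minimal sketch of the arithmetic. It assumes you already have the per-second FPS readings as a list of numbers. The function name `summarize_fps` and the sample values are hypothetical illustrations, not data from our runs and not Fraps' own file format.

```python
from statistics import mean


def summarize_fps(samples):
    """Return (min, avg, max) for a list of per-second FPS readings."""
    if not samples:
        raise ValueError("need at least one FPS sample")
    return min(samples), mean(samples), max(samples)


# Hypothetical per-second readings, NOT measured data from this review.
example_samples = [31, 35, 42, 38, 29, 44]

lowest, average, highest = summarize_fps(example_samples)
print(f"Min {lowest} / Avg {average:.1f} / Max {highest}")
```

In practice the list would hold one reading per second of the 90 second run. The minimum is the number to watch, since that is where stutter shows up first.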

Key features of the BitSquid Tech (PC version) include:

- Highly parallel, data oriented design

- Support for all new DX11 GPUs, including the NVIDIA GeForce GTX 400 Series and AMD Radeon 5000 series

- Compute Shader 5 based depth of field effects

- Dynamic level of detail through displacement map tessellation

- Stereoscopic 3D support for NVIDIA 3dVision

“With advanced tessellation scenes, and high levels of geometry, Stone Giant will allow consumers to test the DX11-credentials of their new graphics cards”, said Tobias Persson, Founder and Senior Graphics Architect at BitSquid. “We believe that the great image fidelity seen in Stone Giant, made possible by the advanced features of DirectX 11, is something that we will come to expect in future games.”

“At Fatshark, we have been creating the art content seen in Stone Giant”, said Martin Wahlund, CEO of Fatshark. “It has been amazing to work with a bleeding edge engine, without the usual geometric limitations seen in current games”.

Stone Giant throws a wrench at ATI GPU’s. With Tessellation as part of its toolbox, the ATI GPU’s fall behind. The GTX-460 1GB and the 768MB models beat the minimum FPS ATI can offer by a wide margin.

Crank Stone Giant up to 1920×1200 and the GTX-460’s are still going strong and have moved completely ahead of the HD5870 pack. This is another case where the GTX-460’s GF104 core beat out the GTX-465.

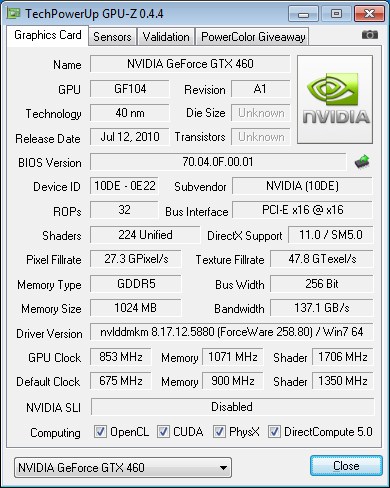

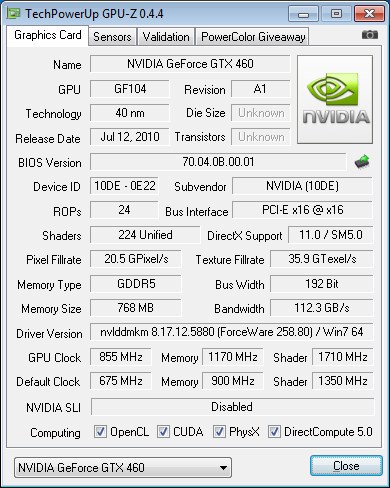

Overclocking

For Overclocking we used the latest version of MSI Afterburner and for some strange reason it would adjust the core clocks and memory clocks but not the fan. Every time we tried to adjust the fan it just reset. The good news is that the GTX-460’s ran cool enough we didn’t need the fan kicked up.

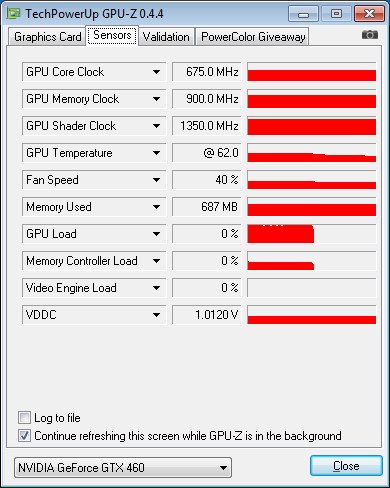

GPU-Z was reading everything right except the number of shaders. Factory specs say 336 and GPU-Z is showing 224. We contacted Nvidia and they assure us it’s 336.

We went from a 675 MHz core speed to 853MHz which is a core speed increase of 178MHz. We also managed a memory overclock of 1071 up from 900 and considering that GDDR5 is quad pumped that’s pretty darn amazing. That’s an increase of 171 but it’s quad pumped so 171 x 4 = 684MHz real memory OC.

GPU-Z is reading both 460’s as having 224 Shaders but once again that’s a glitch with GPU-Z and the GPU contains 336 Shaders. Core clock starts at 675MHz and memory at 900MHz.

We hit 855MHz core and 1170MHz memory, so we got a 180MHz core speed increase and a whopping 270MHz memory speed increase. A 270MHz GDDR5 memory speed increase is insane, but we ran both GPU’s at the OC speed in Vantage and games and saw no evidence of corruption or artifacts. They both also tested clean in ATITool, so we know that these are legit clean overclocks.
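The overclocking arithmetic above can be checked with a short sketch. The clock speeds are the ones quoted in this section. The helper name `overclock_gain` and its `pump` parameter are our own illustration, with `pump=4` standing in for the quad pumped GDDR5 multiplier.

```python
def overclock_gain(stock_mhz, oc_mhz, pump=1):
    """Return (raw MHz gain, effective MHz gain, percent gain).

    pump is the data-rate multiplier: 1 for the core clock,
    4 for quad pumped GDDR5 as described in the text.
    """
    delta = oc_mhz - stock_mhz
    percent = 100.0 * delta / stock_mhz
    return delta, delta * pump, percent


# Clocks quoted in this section: (label, stock MHz, overclocked MHz, pump).
runs = [
    ("core, first card", 675, 853, 1),
    ("memory, first card", 900, 1071, 4),
    ("core, second card", 675, 855, 1),
    ("memory, second card", 900, 1170, 4),
]

for label, stock, oc, pump in runs:
    raw, effective, percent = overclock_gain(stock, oc, pump)
    print(f"{label}: +{raw} MHz (+{effective} MHz effective, {percent:.1f}%)")
```

The percentage is the same whether you compare raw or effective clocks, since the multiplier applies to both the stock and the overclocked speed.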

TEMPERATURES

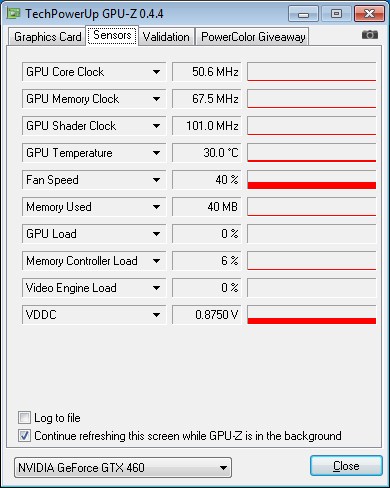

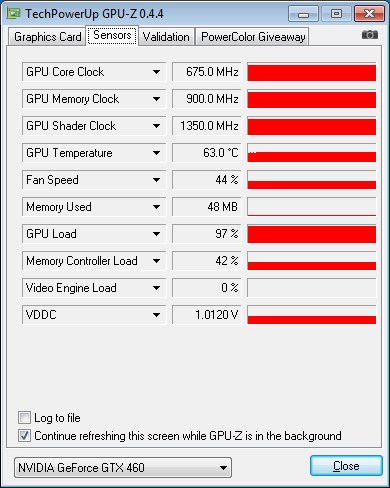

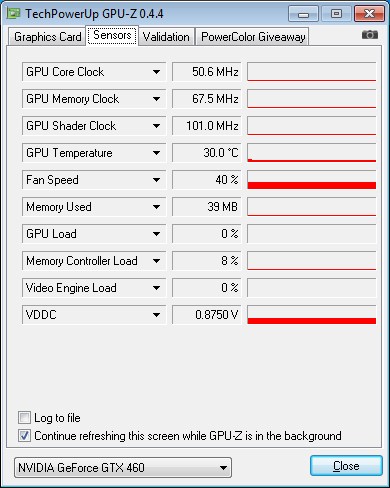

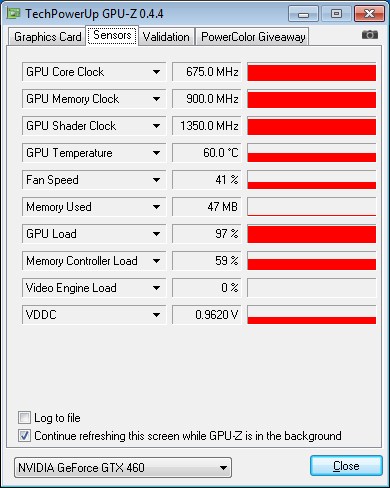

We went with screenshots of GPU-Z for temperatures and used ATITool for ten minutes to heat them up.

This is the 1GB model and we idled at the desktop for half an hour and took the reading. All that the GTX-460 1GB hit was 30°C which is really cool for a modern GPU.

Ten minutes of ATITool and all we saw was 63°C and the temperature leveled out at 5 minutes.

Here again the 768MB model only hit 30°C and remained at that for several hours until we remembered we had the idle temperature test running. We did get one little spike to 32°C when we came to take the reading and were messing with the mouse and opening the screen shot utility, but the normal idle was 30°C.

On the 768MB model we got an amazing 60°C after 10 minutes and the thermal properties of the GTX-460 lineup are looking good.

We fired up the 1GB model for a couple of hours of Metro 2033 and when we exited what we saw was 62°C. We were running Level 4 in the tunnel area and spreading death and destruction on every AI in sight so this is a pretty good indication of performance in the most demanding games.

POWER CONSUMPTION

To get our power consumption numbers we plugged in our Kill A Watt power measurement device and took the Idle reading at the desktop during our temperature readings. We left it at the desktop for about 15 minutes and took the idle reading. Then we ran Furmark for 10 minutes and recorded the highest power usage.

GPU Power Consumption

| GPU | Idle | Load |
|---|---|---|
| Gigabyte HD5870 SOC | 169 Watts | 391 Watts |
| Gigabyte GTX-460 768 | 172 Watts | 462 Watts |
| Nvidia GTX-460 768 | 170 Watts | 465 Watts |
| Nvidia GTX-460 1GB | 175 Watts | 469 Watts |
| Asus GTX-470 | 181 Watts | 488 Watts |
| PNY GTX-470 | 178 Watts | 487 Watts |
| Nvidia GTX-480 | 196 Watts | 521 Watts |
| Gigabyte HD5870 | 167 Watts | 379 Watts |
| Asus EAH5970 | 182 Watts | 412 Watts |
| Gigabyte 4890 OC | 239 Watts | 358 Watts |
| Asus EAH4770 | 131 Watts | 205 Watts |
| BFG GTX-275 OC | 216 Watts | 369 Watts |
| Nvidia GTX-275 Reference | 217 Watts | 367 Watts |
| Asus HD 4890 Voltage Tweak Edition | 241 Watts | 359 Watts |
| EVGA GTS-250 1 GB Superclocked | 192 Watts | 283 Watts |
| XFX GTX-285 XXX | 215 Watts | 369 Watts |
| BFG GTX-295 | 238 Watts | 450 Watts |
| Asus GTX-295 | 240 Watts | 451 Watts |
| EVGA GTX-280 | 217 Watts | 345 Watts |
| EVGA GTX-280 SLI | 239 Watts | 515 Watts |
| Sapphire Toxic HD 4850 | 183 Watts | 275 Watts |
| Sapphire HD 4870 | 207 Watts | 298 Watts |
| Palit HD 4870×2 | 267 Watts | 447 Watts |

Total System Power Consumption

Here’s the only downside we see to the GTX-460. The power consumption isn’t much lower than the GTX-470 or GTX-480, but that may partly reflect minor changes to our test system, since what we record is total system power consumption.
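Because the readings are total system power, one rough way to compare cards is the gap between load and idle. The sketch below uses a handful of rows from the table. The `load_delta` helper is our own illustration, and the result is only a loose proxy, since the rest of the system also draws more under load and the test rig changed over time.

```python
def load_delta(idle_watts, load_watts):
    """Extra total-system draw (in watts) when going from idle to load."""
    return load_watts - idle_watts


# (idle, load) total system readings in watts, copied from the table.
readings = {
    "Nvidia GTX-460 768": (170, 465),
    "Nvidia GTX-460 1GB": (175, 469),
    "Asus GTX-470": (181, 488),
    "Nvidia GTX-480": (196, 521),
    "Gigabyte HD5870": (167, 379),
}

for name, (idle, load) in readings.items():
    print(f"{name}: +{load_delta(idle, load)} W from idle to load")
```

By this measure the two GTX-460's sit within a watt of each other and only a little under the GTX-470, which matches what the raw load numbers suggest.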

Conclusion

The price performance ratio of the GTX-460 lineup is almost off the charts. They overclock like demons and while they don’t offer the performance of the GTX-480 or even the GTX-470, they offer the best price performance punch of the GTX-4xx family.

If you’re a hardcore enthusiast and have been for a while you’ll remember the 8800GT, which was affordable, offered a great price performance ratio and overclocked like mad. That’s what the GTX-460 lineup reminds us of. It’s not quite enthusiast grade but it’s a little more than performance grade. Two of these in SLI would be a great combination that should handle anything you throw at them. One of them will be more than enough for all but the most demanding gamers. At $199 for the 768MB model and $229 for the 1GB model it’s a deal that’s hard to beat. For our money, go for the 1GB model for the added advantage Nvidia gave it.

It’s nice to see a fairly affordable Fermi out there and Nvidia did an outstanding job with it considering the price.

OUR VERDICT: Nvidia GTX-460’s 768MB & 1GB

Summary: Price performance wise the GTX-460 lineup is hard to beat. It brings to mind the 8800GT, which was the sweet spot for the 8800 generation. Performance was high on the 460 lineup, and when you combine that with the $199 – $229 launch price, the 460 is a rock star. The 1GB model offers a little better performance and some hardware that the 768MB model doesn’t, so that would be the pick of the litter. If you are running at 1680×1050 the 768MB model would be great. For future proofing and higher resolutions the 1GB model is a better choice. Fermi is a good design and the “Mighty Mini” Fermi’s are here to stay. (We like it enough that it’s still in the rig even though we have more powerful Fermi GPU’s right next to it; that should tell you something.)

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
