Gigabyte’s latest Super Overclock series graphics cards feature cherry-picked GPUs paired with a 2 oz copper PCB, low RDS(on) MOSFETs, ferrite chokes, and solid capacitors. The promised result is a highly overclocked card with lower power consumption and cooler operating temperatures. Let’s check it out as we put the GV-N26SO-896I from Gigabyte to the test.

INTRODUCTION

Unlike motherboards, where manufacturers have options to add features and components that differentiate one board from another, graphics cards are usually built to the same reference design. To stand out in a sea of vendors, manufacturers often resort to aftermarket coolers that provide better cooling. They also sell cards with higher clock speeds than the reference speeds, or bundle them with different accessories and games. They may also offer different warranty options, such as a lifetime or even a double lifetime warranty.

Thus, choosing a GeForce GTX 260 from manufacturer A that sells for $30~$40 more than the same card from manufacturer B often means that you are getting more bundled gear or a longer warranty, but the physical hardware from both companies is virtually identical. To stand out, a company needs to be inventive and willing to take some initiative. This is where Gigabyte comes in with its latest line of graphics cards.

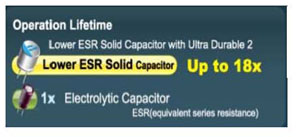

Gigabyte has taken the technologies it pioneered on its motherboards and built them into its graphics cards. Its latest family, the Super Overclock Series, features Gigabyte’s Ultra Durable VGA technology. All cards in the series use a 2 oz copper PCB, first-tier memory, Japanese solid capacitors, ferrite core chokes, and low RDS(on) MOSFETs.

If you have read any of the Gigabyte motherboard reviews Bjorn3D has done recently, you should be familiar with the impact these technologies have on a motherboard. It is easy to see why Gigabyte would bring its motherboard technologies to its graphics cards: a graphics card, like a motherboard, is a PCB populated with electronic components such as capacitors, voltage regulators, and memory chips. It is only logical to apply the same technology to a video card if it brings the same benefits that have been proven on motherboards.

The 2 oz copper PCB that Gigabyte was spearheading back when we reviewed its P35 boards has since become standard on many high-end motherboards and on all Gigabyte boards. Why 2 oz of copper? The thicker copper layers lower impedance, which in turn reduces component temperatures and improves overclocking. As an added benefit, it also means better power efficiency. The extra copper also lowers EMI and improves ESD protection.
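To put the impedance claim into rough numbers, here is a minimal sketch of the DC resistance of a single PCB trace at 1 oz versus 2 oz copper, using R = ρL/(w·t). It assumes the common approximation that 1 oz/ft² of copper is about 34.8 µm thick (2 oz is about 69.6 µm). The trace length, width, and current are hypothetical illustration values, not measurements from Gigabyte’s boards, and the sketch models only DC resistance; real impedance at switching frequencies also depends on layout.

```python
# Rough DC-resistance comparison of a PCB trace at 1 oz vs 2 oz copper.
# The trace dimensions and current used below are hypothetical illustration values.

COPPER_RESISTIVITY_OHM_M = 1.68e-8        # resistivity of copper at about 20 °C
THICKNESS_M = {1: 34.8e-6, 2: 69.6e-6}    # approximate copper thickness per oz/ft²


def trace_resistance(length_m: float, width_m: float, copper_oz: int) -> float:
    """DC resistance R = rho * L / (w * t) of a rectangular copper trace."""
    thickness_m = THICKNESS_M[copper_oz]
    return COPPER_RESISTIVITY_OHM_M * length_m / (width_m * thickness_m)


def conduction_loss(current_a: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) power dissipated in the trace, in watts."""
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    length, width, current = 0.05, 1e-3, 10.0  # hypothetical: 50 mm long, 1 mm wide, 10 A
    for oz in (1, 2):
        r = trace_resistance(length, width, oz)
        print(f"{oz} oz copper: R = {r * 1000:.1f} mOhm, "
              f"loss = {conduction_loss(current, r):.2f} W")
```

Doubling the copper thickness halves the trace resistance, so at a fixed current the I²R loss in that trace is also halved.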

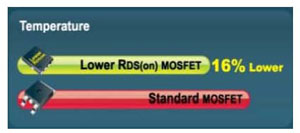

The ferrite chokes, low RDS(on) MOSFETs, and solid capacitors are all features we are quite familiar with and pretty much take for granted on high-end motherboards. Essentially, all of these components are more efficient and prevent energy loss, which lowers power consumption, reduces heat output, and increases component lifespan. In addition, Gigabyte uses high-quality Samsung and Hynix memory on the video cards to provide better overclockability.

For the consumer, all this technical jargon translates into a cooler-running, more energy-efficient, better-overclocking, and better-performing video card. Gigabyte claims that, on average, the cards run 5~10% cooler, overclock 10~30% higher, and have 10~30% lower power switching loss.

FEATURES

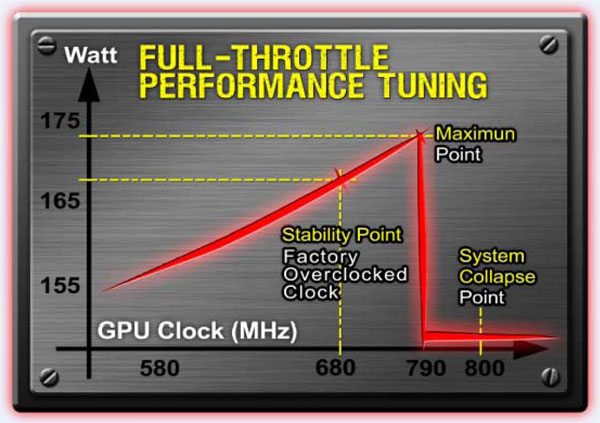

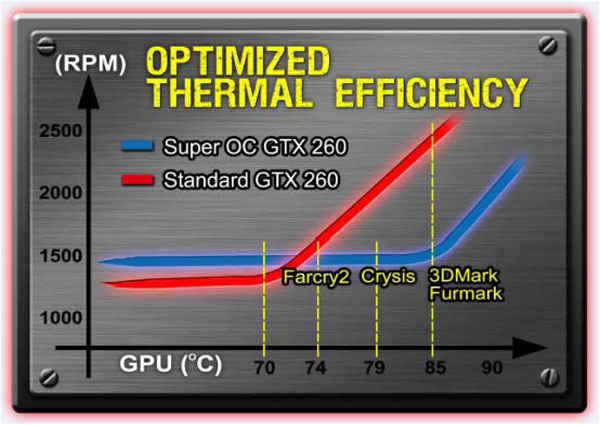

Every card in the Super Overclock Series uses a cherry-picked GPU, selected through a process Gigabyte calls GPU Gauntlet Sorting. Each GPU goes through extensive testing of its core engine, shader engine, and memory. GPUs that pass are then tested for maximum overclocking ability. The card is then run through Furmark and 3DMark Vantage to ensure its stability. Once it has passed, Gigabyte tests its power switching and stability. Only the GPUs with the best power efficiency and lowest power consumption qualify for the Super Overclock Series.

The Super Overclock is not all about the highest clock speed, but rather about balancing performance and power consumption. Each card is tested to reach a high overclocked frequency while keeping the thermal envelope within an acceptable range. Because the card’s components are hand-picked, Gigabyte says they can deliver 5% to 10% lower temperatures.

2 oz Copper PCB

Benefits:

- Helps spread heat across the board
- Lower impedance

1st Tier Samsung and Hynix Memory

Benefit:

- High-quality memory that delivers a 10% guard band

Japanese Solid Capacitors

Benefits:

- Made by Japanese manufacturers
- Longer lifespan under extreme conditions
- Boosts system stability

Ferrite Core Chokes

Benefit:

- Store energy longer and prevent rapid energy loss at high frequencies

Low RDS(on) MOSFET

Benefits:

- Less switching loss
- Lower power consumption
- Less heat generation

SPECIFICATION

| Specification | Value |
|---|---|
| Chipset | NVIDIA GeForce GTX 260 |
| Core Clock | 680 MHz |
| Memory Clock | 2500 MHz |
| Memory | 896 MB |
| Memory Bus | 448-bit |
| Memory Type | GDDR3 |
| Form Factor | ATX |
| Bus Type | PCI-E 2.0 |
| Bus Speed | x16 |
| Shader Clock | 1466 MHz |
| Maximum Digital Resolution | 2560×1600 |
| Maximum VGA Resolution | 2048×1536 |
| D-SUB | Yes |
| TV-OUT | No |
| DVI Port | Yes |
| VIVO | No |
| Multi View | Yes |
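The memory bus width and memory clock in the table together set the card’s peak theoretical memory bandwidth: bytes per transfer (bus width divided by 8) times the effective data rate. This minimal sketch assumes the listed 2500 MHz is the effective (double-data-rate) GDDR3 figure, as spec sheets usually quote it; because the review text elsewhere quotes 2350 MHz, both are computed. The 1998 MHz value is NVIDIA’s effective memory clock for the reference GTX 260, included only for comparison.

```python
# Peak theoretical memory bandwidth from bus width and effective memory clock.

def memory_bandwidth_gbs(bus_width_bits: int, effective_clock_mhz: float) -> float:
    """Return peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8            # 448-bit bus -> 56 bytes per transfer
    transfers_per_second = effective_clock_mhz * 1e6   # effective rate in MHz -> transfers/s
    return bytes_per_transfer * transfers_per_second / 1e9


if __name__ == "__main__":
    for label, clock in [("Spec table (2500 MHz)", 2500),
                         ("Review text (2350 MHz)", 2350),
                         ("Reference GTX 260 (1998 MHz)", 1998)]:
        print(f"{label}: {memory_bandwidth_gbs(448, clock):.1f} GB/s")
```

At either quoted memory clock, the 448-bit bus gives the Gigabyte card noticeably more bandwidth than a reference GTX 260.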

PICTURES AND IMPRESSIONS

Gigabyte’s first Super Overclock Series cards will be the mainstream GTX 260 series. We think it’s a good place to start introducing such technology. Mainstream users often cannot afford high-end graphics cards, so spending a few bucks more than the cost of a mid-range card to gain an extra 10%~30% performance is certainly a good investment.

Our sample, the GV-N26SO-896I from Gigabyte, has the highest factory overclock we have seen from the major video card manufacturers. The card is clocked at 680 MHz for the core, 1466 MHz for the shader, and 2350 MHz for the memory, up from the reference GTX 260’s 576 MHz/1242 MHz/1998 MHz (core/shader/memory).

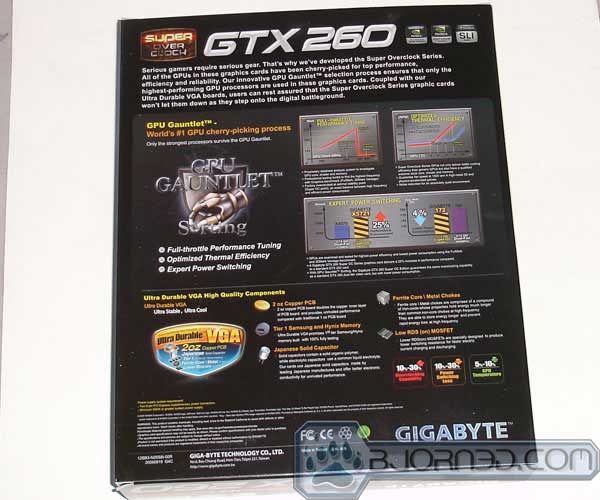

Gigabyte packages the card in a standard cardboard box whose front carries eye-catching red lettering reading “Super Over Clock”. Information such as 2 oz copper, 25% overclocking performance gain, Optimized Thermal Efficiency, and Expert Power Switching technology is neatly printed on the outside.

Inside the cardboard box, we find another box with padded Styrofoam to protect the video card. The accessories are placed in their own compartment next to the video card so they won’t rattle around in the box and damage your $200 investment.

In addition to the video card, we get a very nice manual, a driver CD, two Molex-to-PCIe power adapters, a DVI-to-HDMI adapter, an SPDIF audio bypass cable, and a DVI-to-VGA adapter. Gigabyte chooses not to include any games with the GV-N26SO-896I.

Gigabyte uses the reference heatsink to keep the video card cool. GTX 260s generally do not get too hot, and our tests have shown that NVIDIA’s reference heatsink and fan design does a good job of keeping the card cool and the noise level down. With the added benefit of 2 oz copper and better power efficiency, the card should be expected to run cooler than a reference card, so there is no need for aftermarket cooling.

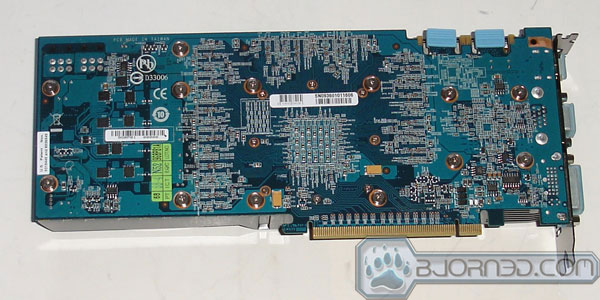

It is quite refreshing to see a blue PCB on a video card; it is not a color we see often. Although some people may prefer a black PCB, we like to see some variety and choice.

On the top of the card you will find the two gold connectors for two-way or three-way SLI. The two 6-pin PCIe power connectors and the SPDIF audio connector are in their usual places. As you can see, Gigabyte includes plastic covers for all of the connectors to prevent damage, a very nice touch.

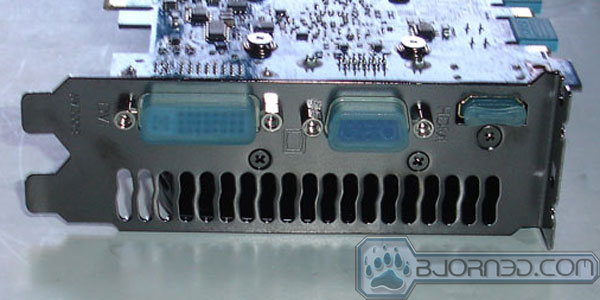

The card comes with DVI, VGA, and HDMI (with HDCP) ports. We like the fact that it has HDMI, but would also like to see two DVI ports instead of the VGA port.

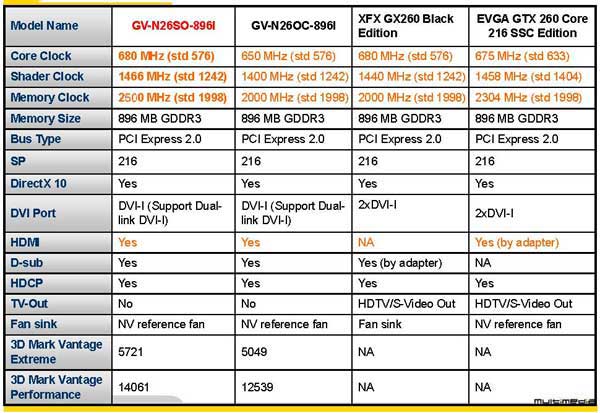

Let’s compare the Gigabyte Super Overclock GTX 260 against other overclocked cards on the market:

As you can see, the Gigabyte card clearly has the highest level of overclocking. One thing to note, however, is that the card cannot output S-Video. This should not really be an issue, because S-Video is largely obsolete; most people have moved to HDTVs with DVI, VGA, or HDMI inputs.

TESTING & METHODOLOGY

To test the Gigabyte card, we did a fresh install of Vista 64-bit and updated the operating system and drivers with all the latest versions and patches. Then we loaded the testing software and ran the machine for a few days to confirm everything was installed correctly and to give the machine a little burn-in time.

Once again, we’d like to remind you that we use the same supporting hardware that we have used on all our motherboards in this series of reviews. We use the same hard drive and the same CPU cooler, and keep all hardware other than the motherboard, RAM, and CPU as consistent as possible. Keeping the hardware as close to the same as possible across the spectrum of tests makes for better benchmarking.

| Test Rig | |
|---|---|
| Case Type | None |
| CPU | Intel Core i7 920 |
| Motherboard | Gigabyte EX58-UD4P (BIOS F8) |
| RAM | Kingston HyperX KHX12800D3LLK3/6GX |
| CPU Cooler | Prolimatech Megahalems |
| Hard Drives | Seagate Barracuda 7200.11 1.5TB |
| Optical | NEC DVD-RW ND-3520AW |
| GPUs Tested | Asus ENGTX260 Matrix; Gigabyte GV-N26OC-896I; EVGA GTX 285 FTW |
| Testing PSU | Cooler Master UCP 900W |
| Legacy | Floppy |
| Mouse | Logitech G7 |
| Keyboard | Logitech Media Keyboard Elite |

Synthetic Benchmarks & Games

| Synthetic Benchmarks & Games |
|---|
| 3DMark06 v. 1.10 |
| 3DMark Vantage |
| Company of Heroes v. 1.71 |
| Crysis v. 1.2 |
| World in Conflict |
| FarCry 2 |
| Crysis Warhead |

3DMARK06 V. 1.1.0

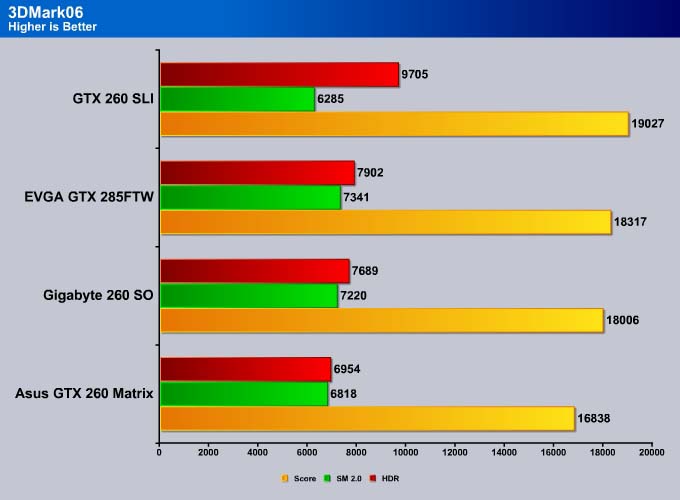

3DMark06, developed by Futuremark, is a synthetic benchmark used for universal testing of all graphics solutions. 3DMark06 features HDR rendering, complex HDR post-processing, dynamic soft shadows for all objects, a water shader with HDR refraction, HDR reflection, depth fog and Gerstner wave functions, a realistic sky model with cloud blending, and approximately 5.4 million triangles and 8.8 million vertices, to name just a few. The measurement unit “3DMark” is intended to give a normalized means of comparing different GPUs/VPUs. It has been accepted as both a standard and a mandatory benchmark throughout the gaming world for measuring performance.

3DMark06 shows that the Gigabyte card delivers 7% higher performance than the Asus ENGTX260 Matrix in the overall and SM2 scores, and a healthy 10% in the HDR score. The Gigabyte card posts a very nice overall score, coming in just a tad below the GTX 285 FTW with 18006 points. For a card that sells for $150 less, it’s a very nice score. We shall see whether this translates to real game performance.

3DMark Vantage

www.futuremark.com/benchmarks/3dmarkvantage/features/

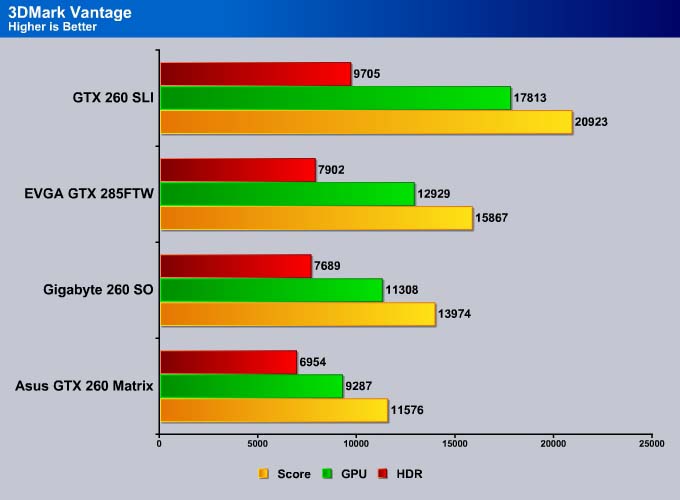

3DMark Vantage is the newest video benchmark from the gang at Futuremark. It is still a synthetic benchmark, but one that more closely reflects real-world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently, there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, which puts the ATI brand at a disadvantage. When the PhysX driver is installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. The Driver Approval Policy for 3DMark Vantage states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations, or is it perfectly within its rights since it owns Ageia and all of its IP?

Just as we observed with 3DMark06, Vantage also shows the Gigabyte card yielding a better result than the Asus GTX 260 Matrix. Here the Gigabyte card offers a 20% performance gain in the overall and GPU scores. 3DMark Vantage is more demanding on the system than 3DMark06, so the performance difference between the GTX 260 and the GTX 285 is wider, but the Gigabyte card still scores a respectable 90% of the GTX 285’s performance.

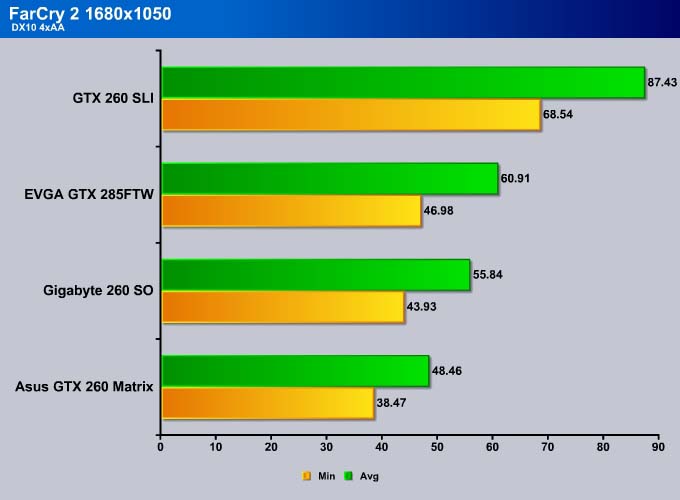

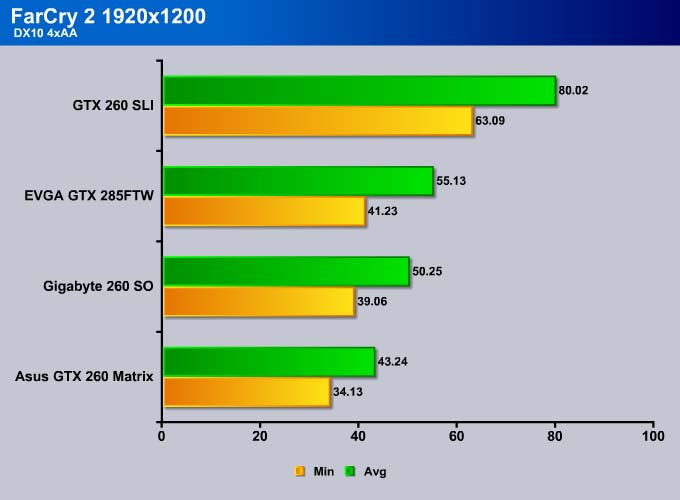

Far Cry 2

Far Cry 2, released in October 2008 by Ubisoft, was one of the most anticipated titles of the year. It’s an engaging, state-of-the-art first-person shooter set in an unnamed African country. Caught between two rival factions, you’re sent to take out “The Jackal”. Far Cry 2 ships with a full-featured benchmark utility, and it is one of the most well-designed, well-thought-out game benchmarks we’ve ever seen. One big difference between this benchmark and others is that it leaves the game’s AI (Artificial Intelligence) running while the benchmark is being performed.

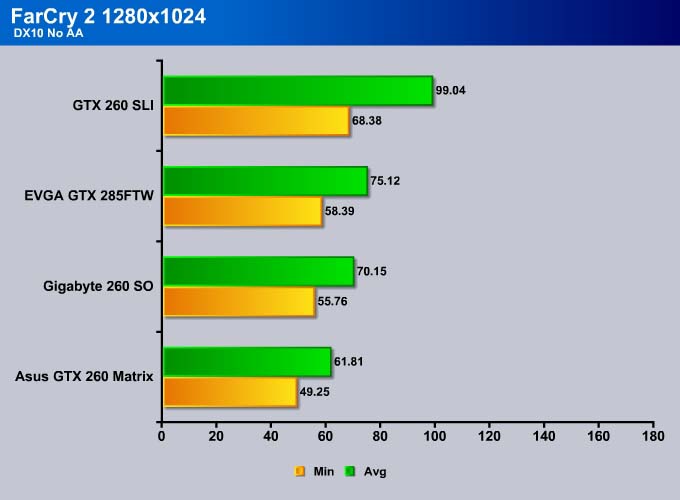

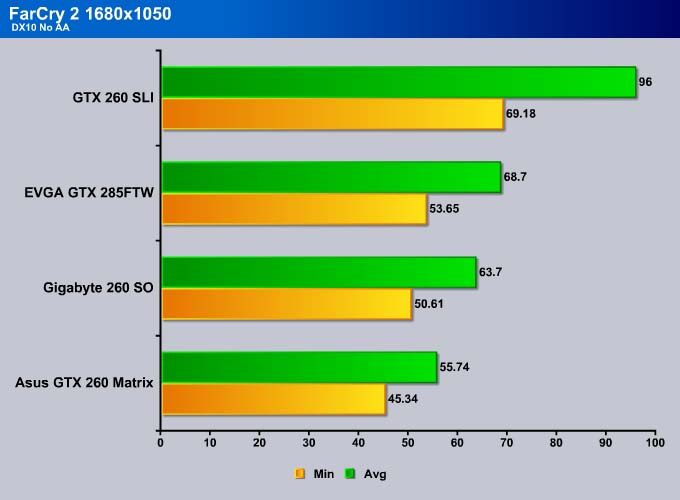

Let’s take a look at the actual gaming performance. With the 3DMark benchmarks putting the Gigabyte card ahead of the Asus card, we expect it to come out ahead of its competitor. Without any AA or AF, the Gigabyte card provides a healthy 20% performance gain over the Asus card. Compared against the GTX 285 FTW from EVGA, the Gigabyte card’s performance is very nice: it delivers 90% of the performance of a card that sells for $150 more, just as we saw in 3DMark Vantage.

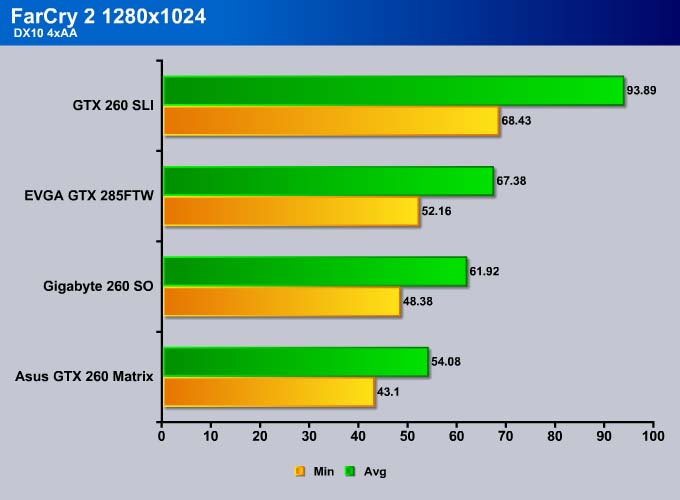

All of the cards tested are able to play the game above the magical 30 FPS minimum with 4xAA enabled. As we turn on the eye candy, the performance advantage of the Gigabyte card narrows slightly, to only a 10% gain over the Asus card. Still, a 10% gain is something customers will surely appreciate.

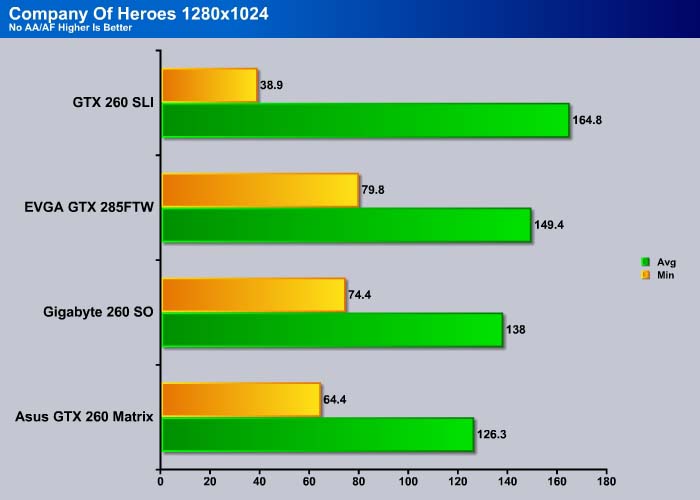

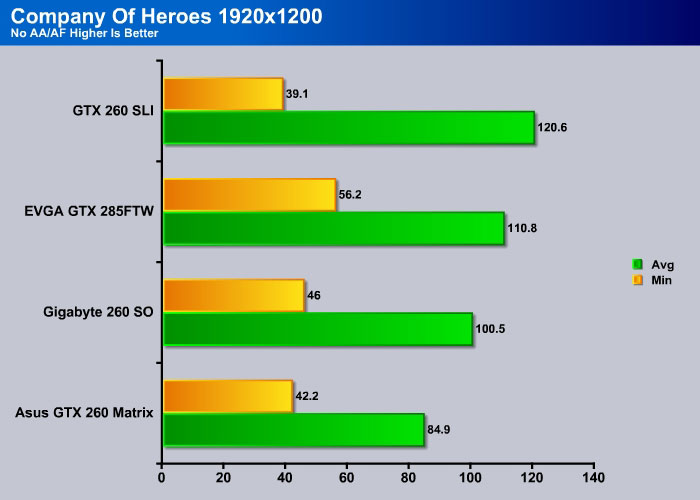

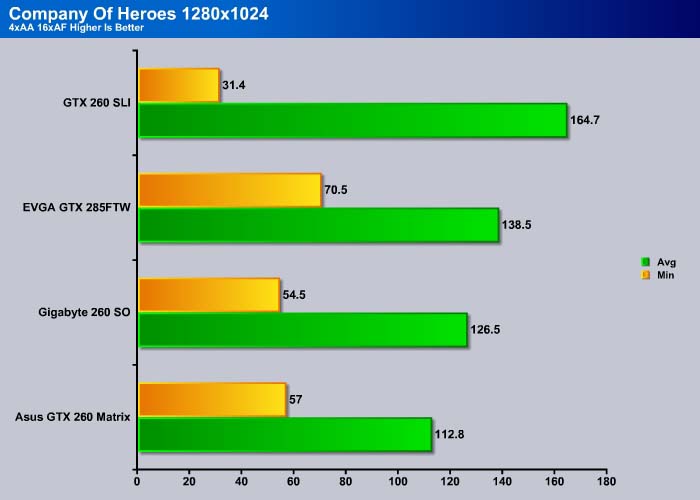

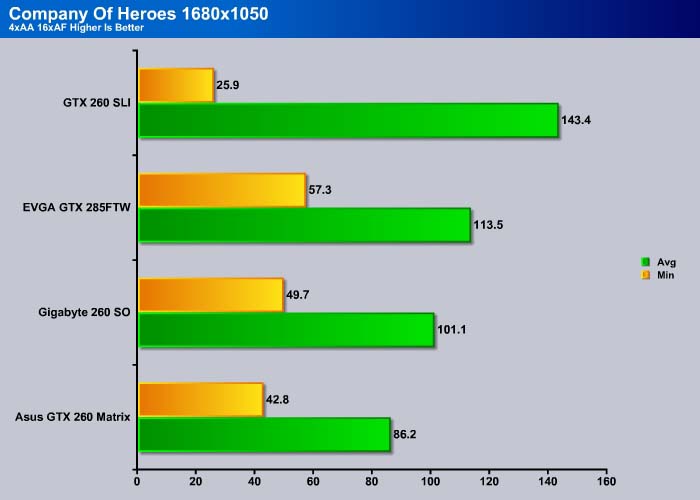

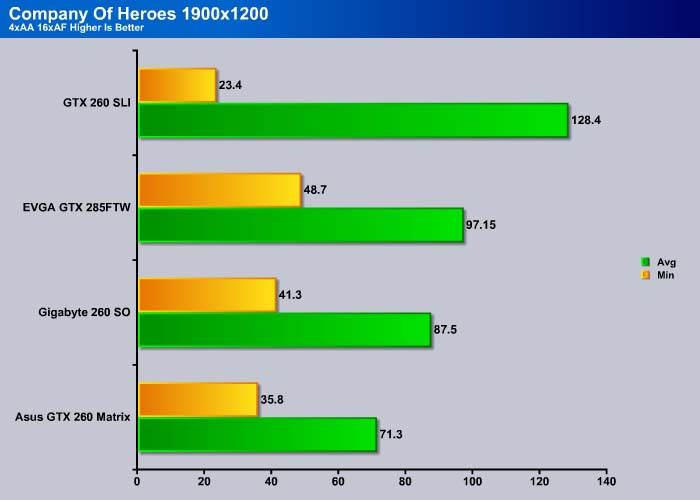

Company of Heroes

Company of Heroes (COH) is a real-time strategy (RTS) game for the PC, announced in April 2005. It was developed by the Canadian company Relic Entertainment and published by THQ. COH is an excellent game that is incredibly demanding on system resources, which makes it an excellent benchmark. Like F.E.A.R., the game contains an integrated performance test that can be run to determine your system’s performance based on the graphical options you have chosen. Letting the game’s benchmark handle the chore takes the human factor out of the equation and ensures that each run of the test is exactly the same, producing more reliable results.

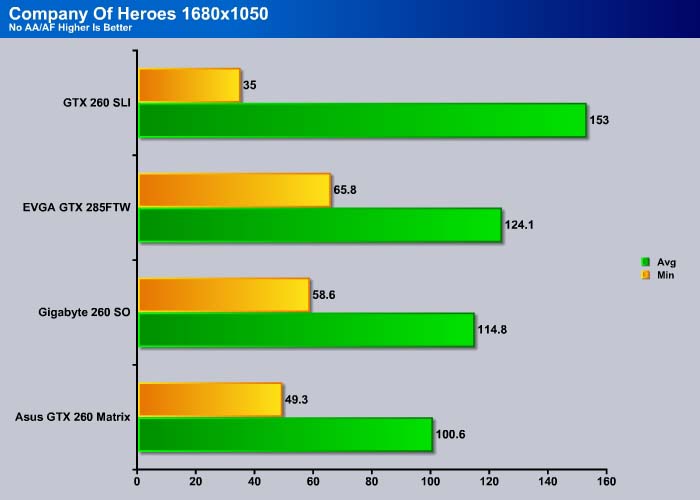

Company of Heroes is quite playable with all of our cards. Again, we see the same performance gain from the Gigabyte card.

As we turn on AA and AF, we can get a sense of what the highly overclocked GTX 260 is capable of.

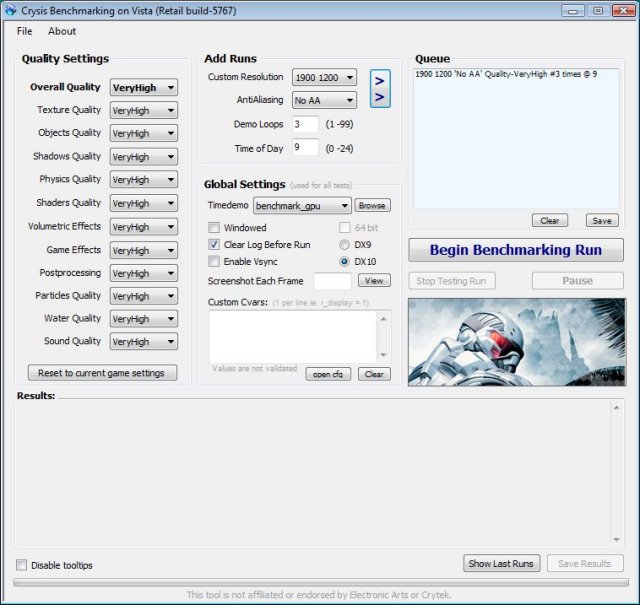

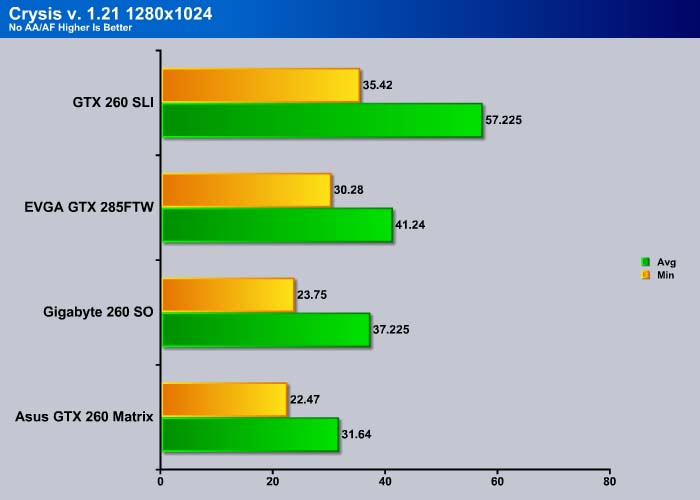

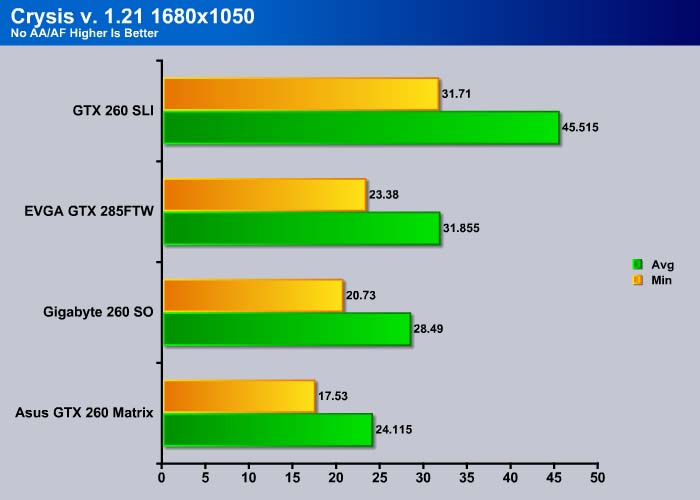

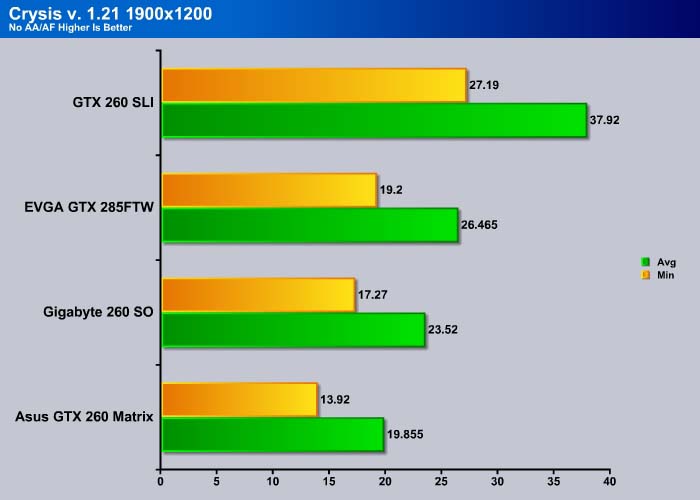

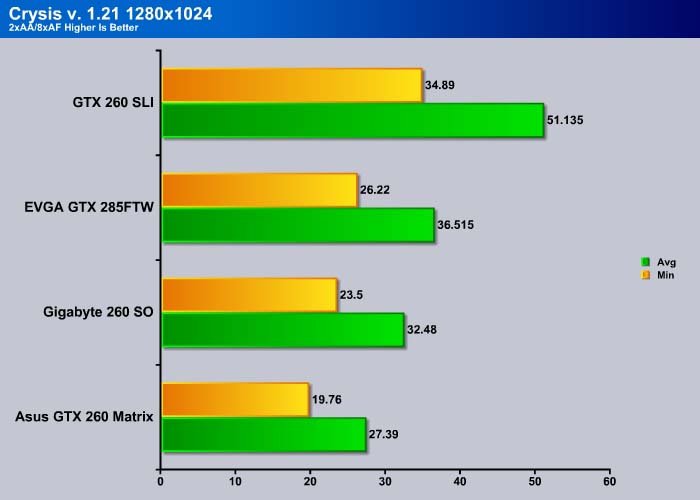

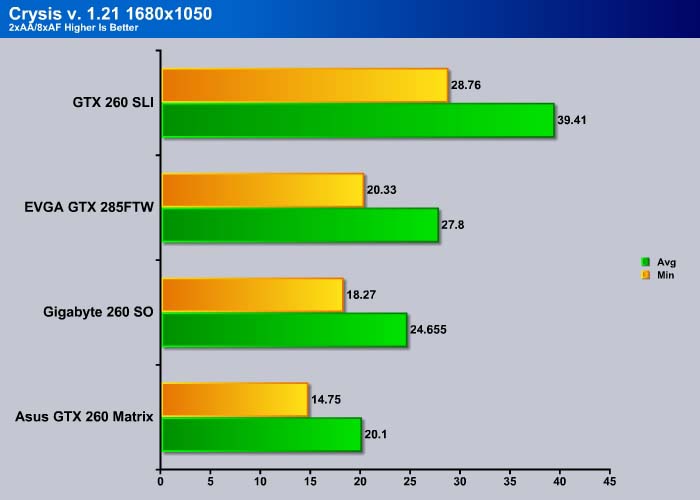

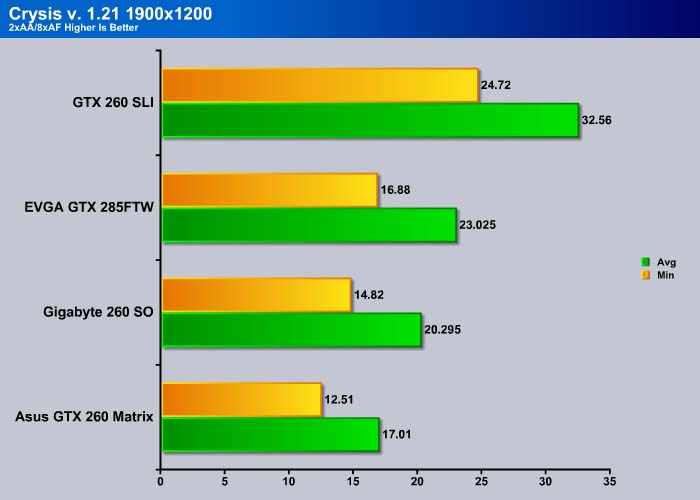

Crysis v. 1.21

Crysis is the most highly anticipated game to hit the market in the last several years. Crysis is based on the CryENGINE™ 2 developed by Crytek. The CryENGINE™ 2 offers real time editing, bump mapping, dynamic lights, network system, integrated physics system, shaders, shadows, and a dynamic music system, just to name a few of the state-of-the-art features that are incorporated into Crysis. As one might expect with this number of features, the game is extremely demanding of system resources, especially the GPU. We expect Crysis to be a primary gaming benchmark for many years to come.

As we turn on AA and AF, the Gigabyte card again offers a 20% performance gain at the lower resolution and 10% at 1920×1200 over a regular GTX 260 in our Crysis benchmark. Crysis is still one of the most demanding games on the market, even though it has been available since 2007. The Gigabyte card barely makes the magical 30 FPS cut at 1280×1024 with 2xAA/8xAF, while the reference GTX 260 does not. As the resolution increases, only the SLI setups offer playable frame rates with AA and AF enabled.

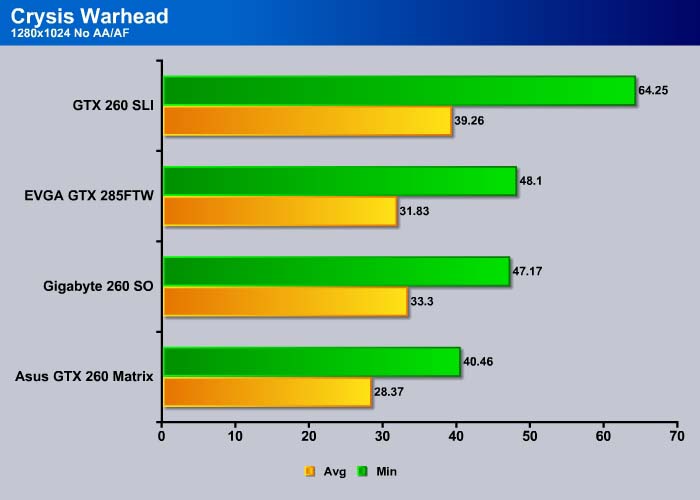

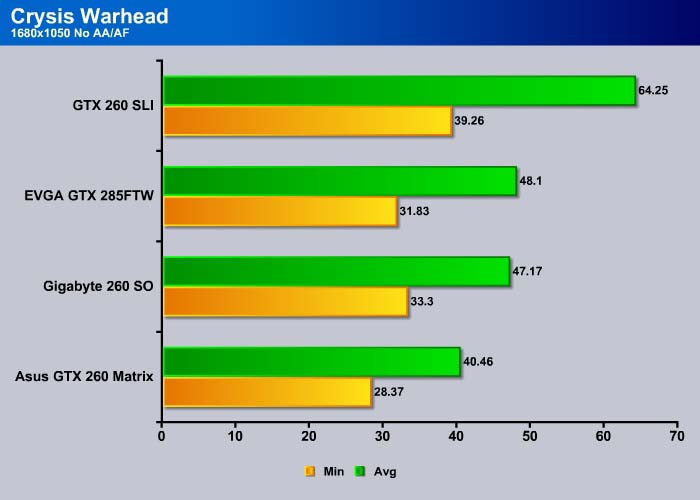

CRYSIS WARHEAD

Crysis Warhead, the follow-up to Crysis and one of the most anticipated titles of 2008, features an updated CryENGINE™ 2 with better optimization.

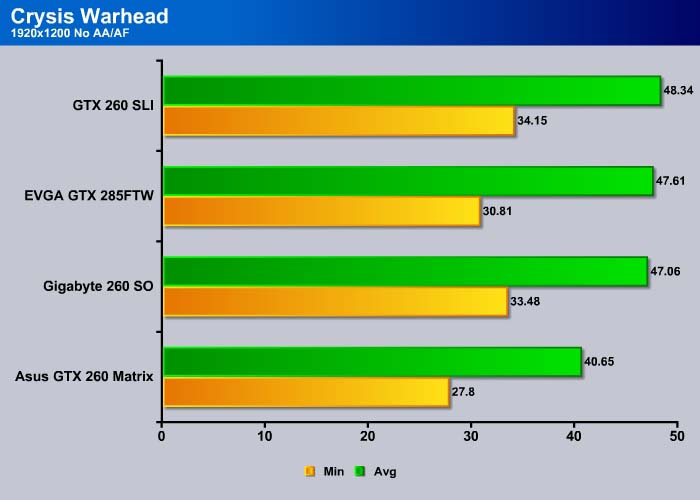

Crysis Warhead’s updated CryEngine 2 delivers more balanced performance by distributing the workload more evenly between the GPU and CPU. Here the Gigabyte card comes in just 1 FPS behind the GTX 285.

Even as we raise the resolution with AA and AF disabled, the Gigabyte card matches the more expensive GTX 285 and the GTX 260 SLI setup, while the Asus card trails behind.

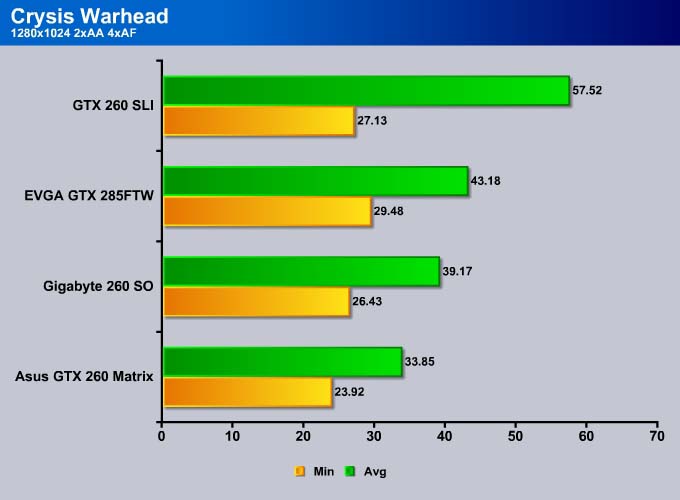

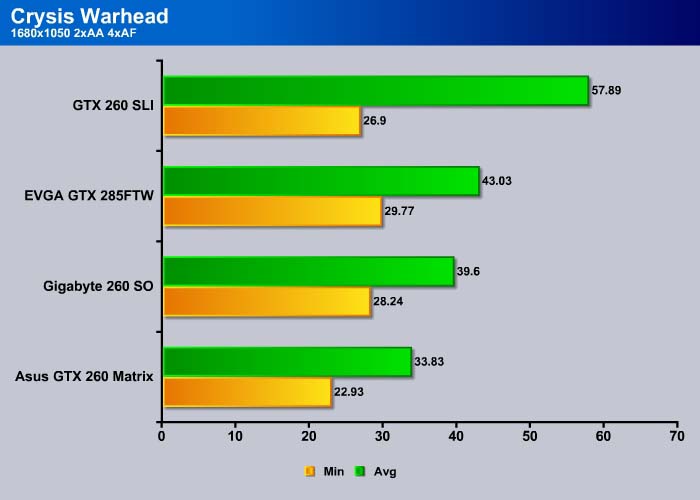

Once we enable AA and AF, we can clearly see the benefit of the more powerful card and of SLI. Even so, the Gigabyte card still performs very strongly.

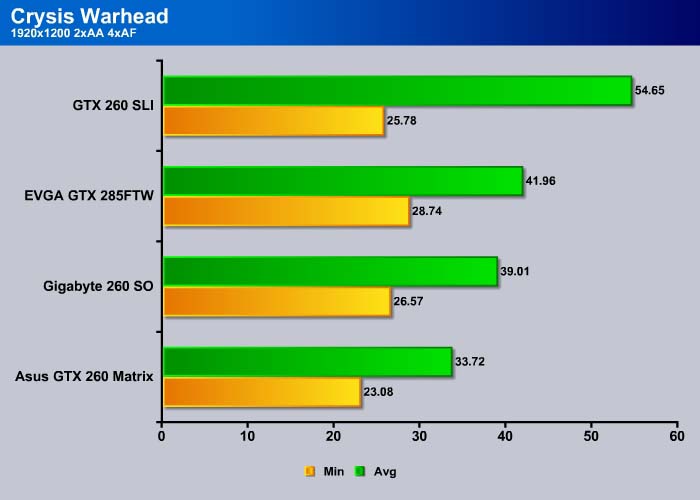

Even at 1920×1200, the Gigabyte card holds up very well against the GTX 285, coming in just 2 FPS short of it.

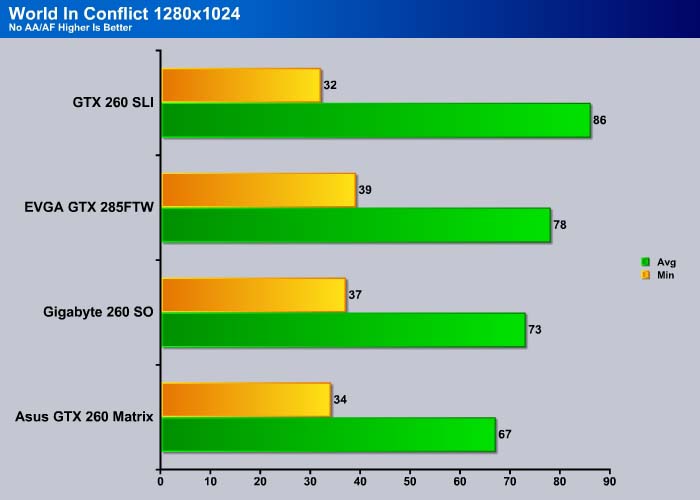

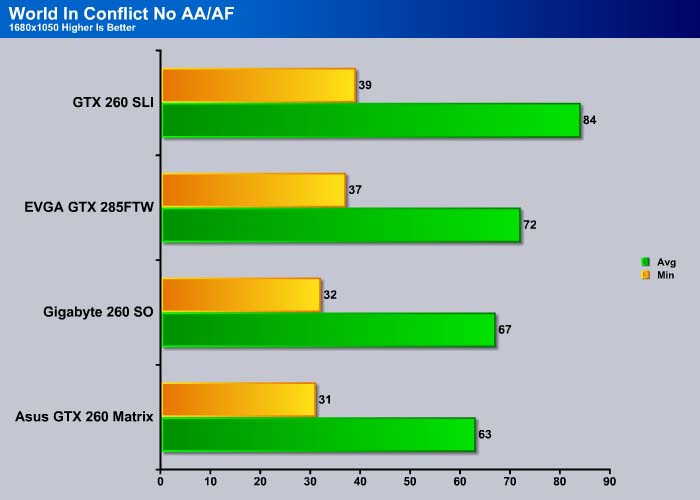

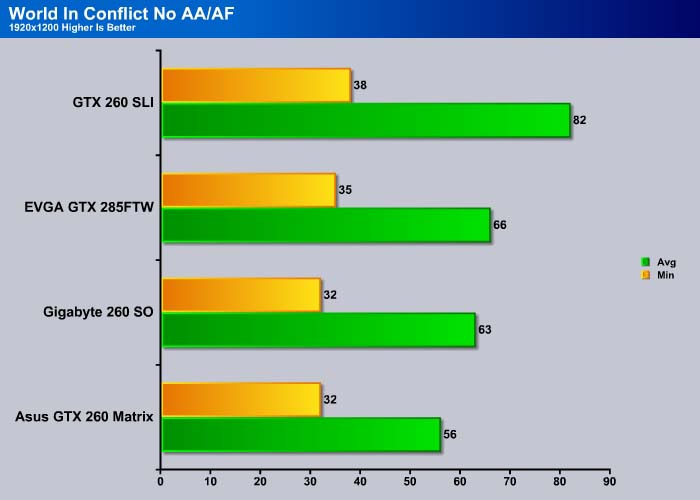

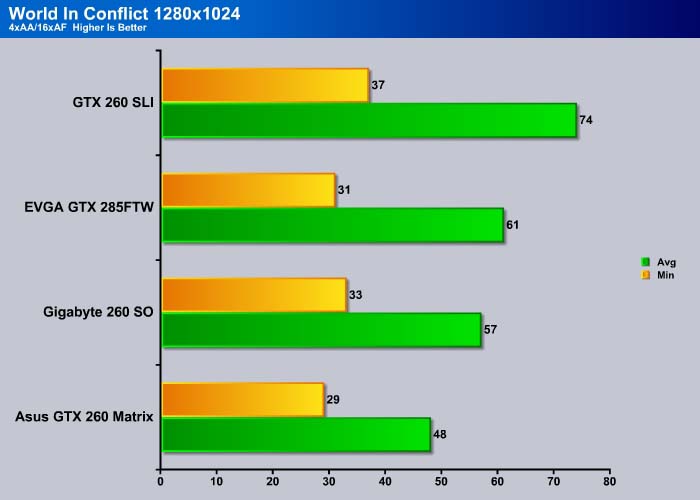

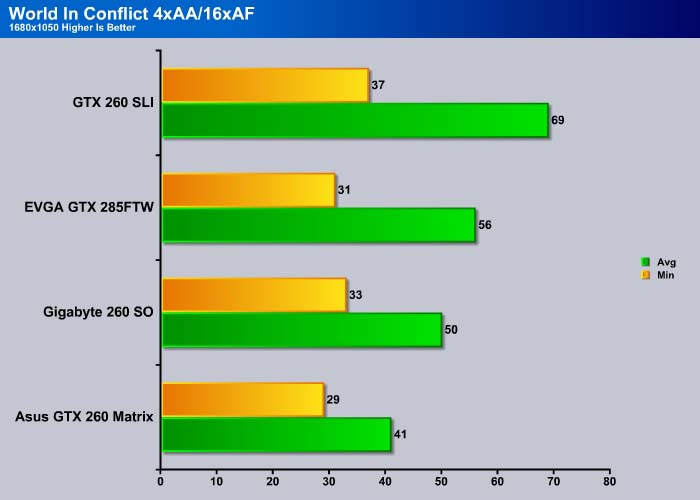

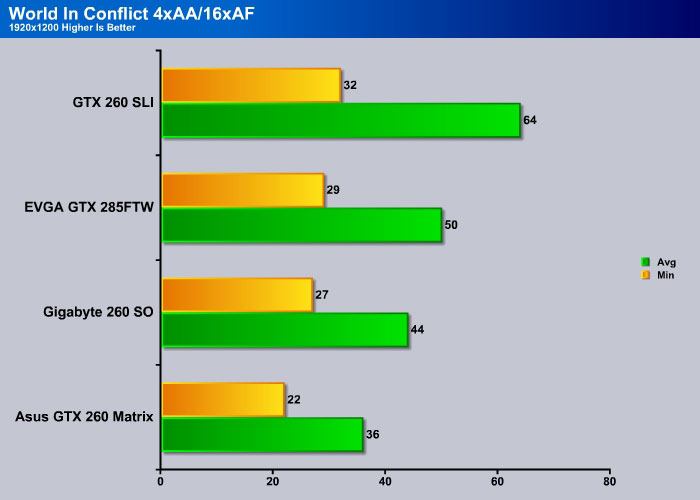

World in Conflict Demo

World in Conflict is a real-time tactical video game developed by the Swedish company Massive Entertainment and published by Sierra Entertainment for Windows PCs. It was released in September 2007. The game is set in 1989, during the social, political, and economic collapse of the Soviet Union, but it postulates an alternate history in which the Soviet Union pursued a course of war to remain in power. World in Conflict has superb graphics, is extremely GPU-intensive, and has built-in benchmarks. Sounds like benchmark material to us!

TEMPERATURES

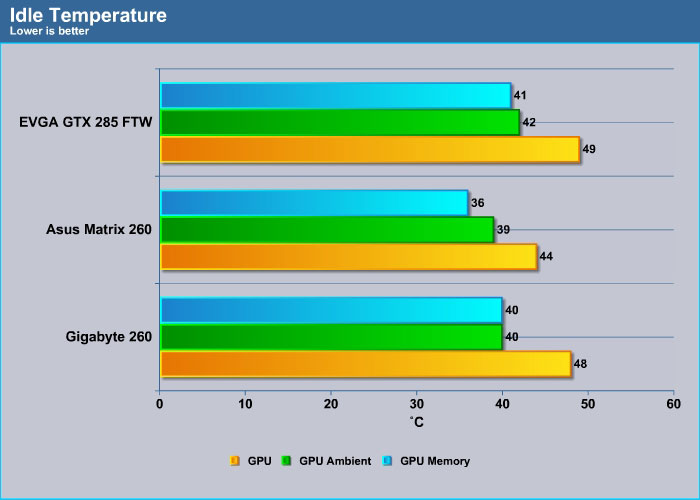

To get our load temperature readings, we ran 3DMark06 in a loop for 30 minutes. To get the idle temperature, we let the machine idle at the desktop for 30 minutes with no background tasks that would drive the temperature up. Please note that this is on an open test station, so your chassis and cooling will affect the temperatures you see.

All three cards run pretty cool at idle. The Asus card runs cooler than the others because it uses an aftermarket cooler, which is more effective at keeping the card cool than the stock cooler found on the GTX 260 and GTX 285.

Under load, the Asus card again runs the coolest. The Gigabyte card actually runs slightly hotter than the GTX 285, most likely because of its high overclock. Although Gigabyte claims that the 2 oz copper helps cooling performance, the high overclock probably offsets that added benefit.

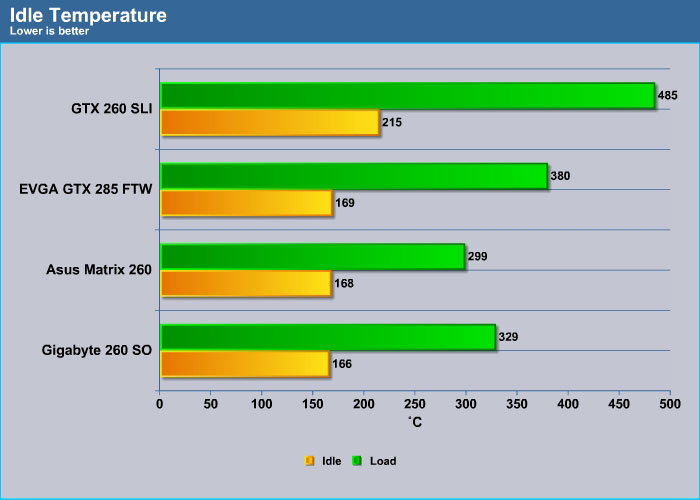

POWER CONSUMPTION

To get our power consumption numbers, we plugged in our Kill A Watt meter, which measures total system power draw at the wall, and took the idle reading at the desktop during our temperature runs, after leaving the system at the desktop for about 15 minutes. Then, during a 30-minute loop of 3DMark Vantage, we watched for the peak power consumption and recorded the highest reading.

At idle, the Gigabyte card draws the least power, two watts less than the Asus card. Under load, however, it actually draws 30 watts more than the regular GTX 260. We think 30 watts of extra power draw for a 10~20% performance gain is not too bad.

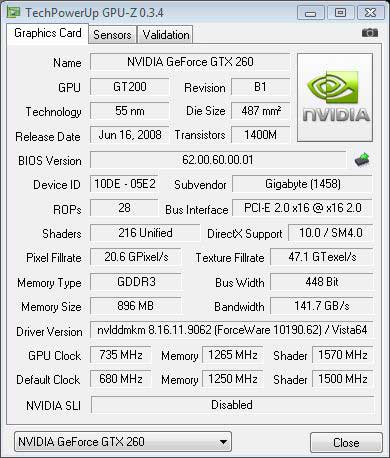

OVERCLOCKING

Given that this card is already highly overclocked, we really did not expect much overclocking headroom. However, we were quite surprised to find that we could push the core clock to 735 MHz, the memory clock to 1265 MHz (2530 MHz effective), and the shader clock to 1570 MHz. We stopped testing when the card reached 90 ˚C, as that is too hot for the card. We kept the fan at its stock setting, so cranking up the fan speed may allow even higher clocks.
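To put the overclocking results in perspective, here is a minimal sketch that computes the percentage clock increases from the figures quoted in this review: the 576 MHz reference core clock, Gigabyte’s 680 MHz/1466 MHz factory core/shader clocks, and our 735 MHz/1570 MHz manual results. Memory is left out because the stock memory clock is quoted differently in the spec table and in the text.

```python
# Percentage clock increases for the GV-N26SO-896I, using clocks quoted in this review.

def percent_gain(base_mhz: float, new_mhz: float) -> float:
    """Percentage increase going from base_mhz to new_mhz."""
    return (new_mhz - base_mhz) / base_mhz * 100


REFERENCE_CORE_MHZ = 576    # reference GTX 260 core clock
FACTORY_CORE_MHZ = 680      # Gigabyte factory overclock
MANUAL_CORE_MHZ = 735       # our manual overclock
FACTORY_SHADER_MHZ = 1466   # Gigabyte factory shader clock
MANUAL_SHADER_MHZ = 1570    # our manual shader overclock

if __name__ == "__main__":
    print(f"Factory core OC over reference: "
          f"{percent_gain(REFERENCE_CORE_MHZ, FACTORY_CORE_MHZ):.1f}%")   # 18.1%
    print(f"Manual core headroom over factory: "
          f"{percent_gain(FACTORY_CORE_MHZ, MANUAL_CORE_MHZ):.1f}%")      # 8.1%
    print(f"Manual core over reference: "
          f"{percent_gain(REFERENCE_CORE_MHZ, MANUAL_CORE_MHZ):.1f}%")    # 27.6%
    print(f"Manual shader headroom over factory: "
          f"{percent_gain(FACTORY_SHADER_MHZ, MANUAL_SHADER_MHZ):.1f}%")  # 7.1%
```

Keep in mind that clock-speed gains do not translate one-to-one into frame-rate gains.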

CONCLUSION

The Gigabyte Super Overclock GTX 260 delivers a consistent 10~20% performance gain across all of our tests. At 680 MHz, 1466 MHz, and 2350 MHz (core/shader/memory), it is one of the most highly factory-overclocked GTX 260s we have seen. Even so, we experienced no issues whatsoever at these speeds, and the card sailed through every test we threw at it.

The technologies Gigabyte builds into the card, such as 2 oz copper, low RDS(on) MOSFETs, and ferrite core chokes, are supposed to reduce heat output and energy consumption, and they do help keep the card stable at such high clock speeds with room to spare. Although Gigabyte claims that the card consumes less power, our tests show that it actually draws 30 W more under load than a regular GTX 260. It also does not run significantly cooler, since it uses NVIDIA’s reference heatsink and fan. However, these technologies help the card achieve such high overclocks without the need for any aftermarket cooling or voltage tweaks.

The card’s array of connectors, including HDMI, VGA, and DVI ports, is a very welcome feature, especially the HDMI port. Although we would love to see two DVI ports instead of one VGA and one DVI, we really love the native HDMI port.

What is nice about the Gigabyte Super Overclock GTX 260 is that it offers this performance gain without much additional cost. The card is currently selling at Newegg for $199.99, which is $20~$30 more than other GTX 260s (before rebates). We think it is a very good investment, as its performance clearly warrants the extra $30. With its 10~20% performance gain, the card can compete against the GTX 275, which sells for $50 more. It even comes very close to the GTX 285, delivering 90% of the performance at 60% of the cost. We think this is one of the best cards in its price bracket, and we would not hesitate to recommend it to anyone looking for a card at the sub-$200 mark.
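The “90% of the performance at 60% of the cost” claim can be checked with a quick performance-per-dollar calculation. This is a minimal sketch under stated assumptions: the GTX 285 FTW price is taken as $199.99 plus the “$150 more” quoted in this review, relative performance is fixed at the 0.90 seen in 3DMark Vantage and Far Cry 2, and rebates and street-price swings are ignored.

```python
# Rough price/performance comparison using figures quoted in this review.

GIGABYTE_PRICE_USD = 199.99
GTX285_PRICE_USD = GIGABYTE_PRICE_USD + 150   # assumption: "$150 more", per the review
RELATIVE_PERFORMANCE = 0.90                   # Gigabyte card at ~90% of the GTX 285 FTW


def value_ratio(rel_perf: float, price: float, ref_price: float) -> float:
    """Performance per dollar relative to the reference card (1.0 = equal value)."""
    return rel_perf / (price / ref_price)


if __name__ == "__main__":
    cost_share = GIGABYTE_PRICE_USD / GTX285_PRICE_USD
    ratio = value_ratio(RELATIVE_PERFORMANCE, GIGABYTE_PRICE_USD, GTX285_PRICE_USD)
    print(f"Cost relative to GTX 285: {cost_share * 100:.1f}%")      # 57.1%
    print(f"Performance per dollar vs GTX 285: {ratio:.2f}x")        # 1.58x
```

The cost share works out to roughly 57%, in line with the “60% of the cost” figure, which means roughly 1.6 times the performance per dollar of the GTX 285 FTW under these assumptions.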

OUR VERDICT: GV-N26SO-896I

Summary: The Gigabyte GTX 260 Super Overclock Edition earned our Golden Bear Award for delivering a consistent 10~20% performance gain and being the best-performing card in its price segment.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
