Looking for a capable video card, but don’t want to break the bank? Look no further, as Leadtek brings us the Nvidia GTS 250.

INTRODUCTION

If you’re anything like me, it’s time to upgrade your PC again. I know this because if you’re anything like me, it’s ALWAYS time to upgrade your computer. The current economic slump has hampered the market somewhat, because most of us can’t afford to spend $400 to $600 on a new video card these days. Well, Nvidia identified the problem and has presented the GTS 250 as the solution.

The GTS 250 is the latest addition to Nvidia’s Performance class of GPUs. It is no secret that this GPU is a direct descendant of the 9800GTX series of cards. The core is a G92b, a die-shrink of the G92 from the older 65nm process to 55nm, which reduces power consumption and cuts back on heat a little.
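To give a rough feel for what a 65nm to 55nm shrink buys, the sketch below computes the ideal die-area scaling. It assumes every feature shrinks linearly with the process node, which real chips never fully achieve, so the result is an optimistic bound and not the actual G92b die size.

```python
def ideal_area_ratio(old_node_nm: float, new_node_nm: float) -> float:
    """New/old die area if every feature shrank linearly (an idealization)."""
    linear_factor = new_node_nm / old_node_nm
    return linear_factor ** 2  # area scales with the square of the linear factor


ratio = ideal_area_ratio(65, 55)
print(f"Ideal 55nm area as a fraction of 65nm: {ratio:.3f}")
print(f"Ideal area saving: {1 - ratio:.1%}")
```

A smaller die does not translate one-to-one into lower power or heat; it only shows why a shrink gives the engineers room to cut both.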

Nvidia was hammered the last time it offered a rebranded card under a different name, and this time it has avoided that by being completely up-front with customers. The card was named GTS 250 to help eliminate confusion on the consumer’s end: under the new naming, a higher-numbered card simply performs better.

In addition to making things easier for the consumer, Nvidia offers two versions of the card: a 512MB version, which is a rebranded 9800GTX+, and a 1GB version, which is the one we are testing today. Nvidia has stated that the 512MB version will SLI with a 9800GTX, while the 1GB version will not.

As for price, Nvidia has set the MSRP of the 512MB version at $129.99 and the 1GB version at $149.99. What this means to you is that you can get the 512MB card for less than a new 9800GTX+ would cost you, and the 1GB version for about the same price.

ABOUT LEADTEK

From the Leadtek website:

“Innovation and Quality” are all an intrinsic part of our corporate policy. We have never failed to stress the importance of strong R&D capabilities if we are to continue to make high quality products with added value.

By doing so, our products will not only go on winning favorable reviews in the professional media and at exhibitions around the world but the respect and loyalty of the market.

For Leadtek, our customers really do come first and their satisfaction is paramount important to us.

Using all digital means of communication available – such as our regularly updated Website- customers, current and potential, are easily able to refer to our catalogue of products with information on prices, services and future developments.

Looking ahead, Leadtek will continue to maintain its status on the Asia-Pacific market while extending its global sales network. By combining our trend-setting graphics, multimedia audio and video, communications technologies, we remain dedicated to cutting edge technologies and value added products.

We will work toward further integration of computer and communications technologies with our sights set on combining multimedia videophony, and GPS (Global Positioning System) in a single product. Also to extend the market for broadband network devices.

Our ultimate goal is to facilitate and improve human life through new technology and applications without harming the environment.

Together we can dream of a world where we enjoy the convenience of modern technology without compromising our natural heritage for future generations.

And turn even this Dream into a Reality with Leadtek.

SPECIFICATIONS

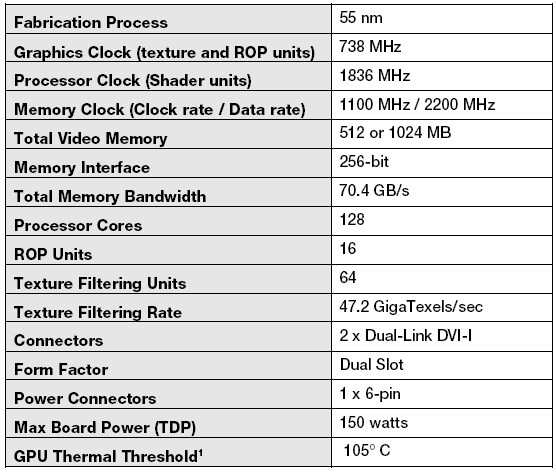

We’ll start out with a comparison between the GTS 250 and the other two cards that are at the same relative price point.

Specifications

| GPU | 9800 GTX+ | Leadtek WinFast GTS-250 1 GB | HD 4850 |
|---|---|---|---|
| GPU Frequency | 740 MHz | 738 MHz | 750 MHz |
| Memory Frequency | 1100 MHz | 1101 MHz | 993 MHz |
| Memory Bus Width | 256-bit | 256-bit | 256-bit |
| Memory Type | GDDR3 | GDDR3 | GDDR3 |
| # of Stream Processors | 128 | 128 | 800 |
| Texture Units | 64 | 64 | 40 |
| ROPs | 16 | 16 | 16 |
| Bandwidth (GB/sec) | 70.4 | 70.4 | 63.6 |
| Process | 55nm | 55nm | 55nm |
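The bandwidth row follows directly from the memory frequency and bus width rows. Here is a minimal sketch of that arithmetic, assuming the listed frequencies are the actual GDDR3 clocks (two transfers per clock) and that 1 GB/s means 10^9 bytes per second.

```python
def memory_bandwidth_gbs(mem_clock_mhz: float, bus_width_bits: int,
                         transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in GB/s (10**9 bytes per second).

    GDDR3 is double data rate, so by default it moves data twice per clock.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = mem_clock_mhz * 1e6 * transfers_per_clock
    return transfers_per_second * bytes_per_transfer / 1e9


cards = {
    "9800 GTX+": (1100, 256),
    "Leadtek WinFast GTS-250 1 GB": (1101, 256),
    "HD 4850": (993, 256),
}
for name, (clock_mhz, width_bits) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(clock_mhz, width_bits):.1f} GB/s")
```

The 1100 MHz and 993 MHz clocks reproduce the table’s 70.4 and 63.6 GB/s. The Leadtek’s 1101 MHz clock works out to about 70.46 GB/s, which the table lists as 70.4.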

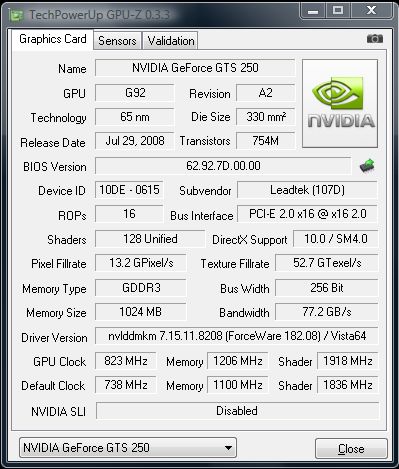

And here’s a quick look at some more detailed specifications.

FEATURES

- PCI Express 2.0 GPU
- GPU/memory clock at 738/1101 MHz
- HDCP capable
- 1024 MB, 256-bit memory interface for smooth, realistic gaming at ultra-high resolutions with AA/AF
- Supports dual dual-link DVI with awe-inspiring 2560×1600 resolution
- The ultimate Blu-ray and HD DVD movie experience on a gaming PC
- Smooth playback of H.264, MPEG-2, VC-1 and WMV video, including WMV HD
- Industry-leading 3-way NVIDIA SLI technology offers amazing performance
- NVIDIA PhysX™ Ready
- Alloy core chokes, allowing for a higher working temperature, higher current saturation, lower power consumption and higher reliability than ferrite core chokes

- PCI Express 2.0 Support: Designed to run perfectly with the new PCI Express 2.0 bus architecture, offering a future-proofing bridge to tomorrow’s most bandwidth-hungry games and 3D applications by maximizing the 5 GT/s PCI Express 2.0 bandwidth (twice that of first-generation PCI Express). PCI Express 2.0 products are fully backwards compatible with existing PCI Express motherboards for the broadest support.
- 2nd Generation NVIDIA® unified architecture: Second-generation architecture delivers 50% more gaming performance than the first generation through enhanced processing cores.
- GigaThread™ Technology: Massively multi-threaded architecture supports thousands of independent, simultaneous threads, providing extreme processing efficiency in advanced, next-generation shader programs.
- NVIDIA PhysX™-Ready: Enables a totally new class of physical gaming interaction for a more dynamic and realistic experience with GeForce.
- High-Speed GDDR3 Memory on Board: Enhanced memory speed and capacity ensure smoother video quality in the latest games, especially when processing large-scale textures.
- Dual Dual-Link DVI: Supports displays with awe-inspiring 2560-by-1600 resolution, such as 30-inch HD LCD displays; driving that massive load of pixels requires a graphics card with dual-link DVI connectivity.
- Dual 400MHz RAMDACs: Blazing-fast RAMDACs support dual QXGA displays with ultra-high, ergonomic refresh rates up to 2048×1536@85Hz.
- Triple NVIDIA SLI technology: Offers amazing performance scaling by implementing AFR (Alternate Frame Rendering) under Windows Vista with solid, state-of-the-art drivers.
- Microsoft® DirectX® 10 Shader Model 4.0 Support: DirectX 10 GPU with full Shader Model 4.0 support delivers unparalleled levels of graphics realism and film-quality effects.
- OpenGL® 2.1 Optimizations and Support: Full OpenGL support, including OpenGL 2.1.
- Integrated HDTV encoder: Provides world-class TV-out functionality up to 1080p resolution.
- NVIDIA® Lumenex™ Engine: Delivers stunning image quality and floating point accuracy at ultra-fast frame rates.
- Dual-stream Hardware Acceleration: Provides ultra-smooth playback of H.264, VC-1, WMV and MPEG-2 HD and Blu-ray movies.
- High dynamic-range (HDR) Rendering Support: The ultimate lighting effects bring environments to life.
- NVIDIA® PureVideo™ HD technology
- HybridPower Technology support: Delivers graphics performance when you need it and low-power operation when you don’t. HybridPower technology lets you switch from your graphics card to your motherboard GeForce GPU when running less graphically-intensive applications for a silent, low power PC experience.
- HDCP Capable: Allows playback of HD DVD, Blu-ray Disc, and other protected content at full HD resolutions with integrated High-bandwidth Digital Content Protection (HDCP) support. (Requires other compatible components that are also HDCP capable.)
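The PCI Express 2.0 and dual-link DVI entries are both bandwidth claims, and the arithmetic behind them is short. This is a minimal sketch, assuming the 8b/10b line encoding used by PCIe 1.x and 2.0 and the 165 MHz pixel-clock ceiling of a single DVI link. The visible-pixel rate ignores blanking intervals, so it is only a lower bound on the real pixel clock.

```python
SINGLE_LINK_DVI_MHZ = 165.0  # maximum pixel clock carried by one DVI link


def pcie_lane_mb_per_s(gigatransfers_per_s: float) -> float:
    """Usable MB/s per lane, per direction, for PCIe 1.x/2.0 (8b/10b encoding)."""
    line_bits_per_s = gigatransfers_per_s * 1e9
    data_bits_per_s = line_bits_per_s * 8 / 10  # 8 data bits per 10 line bits
    return data_bits_per_s / 8 / 1e6


def active_pixel_rate_mhz(width: int, height: int, refresh_hz: float) -> float:
    """Visible pixels per second, in millions; real timings add blanking on top."""
    return width * height * refresh_hz / 1e6


def definitely_needs_dual_link(width: int, height: int, refresh_hz: float) -> bool:
    """True if even the blanking-free pixel rate exceeds one DVI link.

    A False result is inconclusive, because blanking raises the real clock.
    """
    return active_pixel_rate_mhz(width, height, refresh_hz) > SINGLE_LINK_DVI_MHZ


for name, rate in [("PCIe 1.x", 2.5), ("PCIe 2.0", 5.0)]:
    lane = pcie_lane_mb_per_s(rate)
    print(f"{name}: {lane:.0f} MB/s per lane, {lane * 16 / 1000:.0f} GB/s each way on x16")

print(f"2560x1600@60Hz: {active_pixel_rate_mhz(2560, 1600, 60):.2f} MHz of visible pixels,"
      f" dual-link needed: {definitely_needs_dual_link(2560, 1600, 60)}")
```

Doubling the transfer rate from 2.5 to 5 GT/s is what makes PCIe 2.0 “twice that of first-generation PCI Express.” A 2560×1600 panel at 60Hz needs about 246 MHz of visible pixels alone, well beyond one DVI link.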

PICTURES & IMPRESSIONS

Leadtek’s packaging is fairly standard: nice graphics on the front and all the important info on the back.

The GTS 250 shipped wrapped in a static bag, of course, and packed in the standard closed-cell foam, which is nice and rigid to prevent any damage.

The included bundle is nice but simple, with the standard DVI-to-VGA adapter, the component video adapter and the PCIe power adapter. The box didn’t say that it shipped with a game, but this one had Overlord inside, which is always a nice surprise.

Click to enlarge

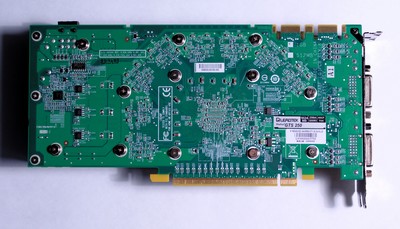

The back of the card is fairly straightforward, comparing it to the 9800GTX+, everything is laid out in similar fashion. Not sure if you could use a 9800GTX+ heatsink for this card, but I would imagine it would be a pretty close fit, depending on the IC’s and memory layout.

Click to enlarge

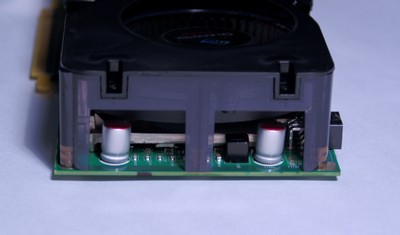

Looking at the card from the side, you can see the heatsink setup for this card does not fully encapsulate it, which may or may not be a bad thing, depending on how abusive you are with your cards.

Here you can see the power input, a standard 6-pin, and the triple-SLI connector. That’s right, you can run three of these.

Here’s a closer look at the exposed circuitry. Again, it’s not an issue if you’re careful, but if you’re tight on space in your case, don’t forget that you’ve got components exposed.

TESTING & METHODOLOGY

To test the Leadtek WinFast GTS 250, we used a fresh install of Vista 64. We loaded the latest drivers for all components, then downloaded and installed all available Vista updates. Once all that was done, we installed all the games and utilities needed to test the card.

When we booted back up, we ran into no issues with the card or any of the games. The only recurring error was having the wrong game disc in the drive, since we switched benchmarks back and forth so often. It’s a rough life when your biggest problem is the wrong disc being in the drive, believe me. All testing was done at stock clock speeds on all cards.

| LEADTEK WINFAST GTS 250 TEST RIG | |
|---|---|
| Case | Danger Den Tower 26 |
| Motherboard | EVGA 790i Ultra |
| CPU | Q6600 1.400v @ 3.0 GHz |
| Memory | |
| GPU | Leadtek WinFast GTS 250; EVGA 8800GT SC 714/1779/1032; GTS 250 SLI |
| HDD | 2 X WD Caviar Black 640GB RAID 0 |
| PSU | In-Win Commander 1200W |
| Cooling | Laing DDC 12V 18W pump; MC-TDX 775 CPU Block; Ione full coverage GPU block G92; Black Ice GTX480 radiator |

Synthetic Benchmarks & Games

- 3DMark06 v. 1.1.0
- 3DMark Vantage
- Crysis v. 1.2
- World in Conflict
- Far Cry 2
- Crysis Warhead

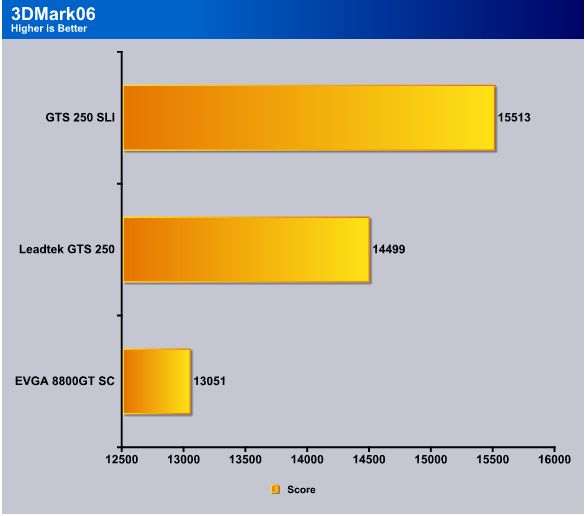

3DMARK06 V. 1.1.0

No big surprises here. The 8800 GT falls by the wayside as the GTS 250 jumps out in front. 3DMark06 is not known for SLI-friendly features, because it has none. Still, the SLI setup pulls ahead nicely.

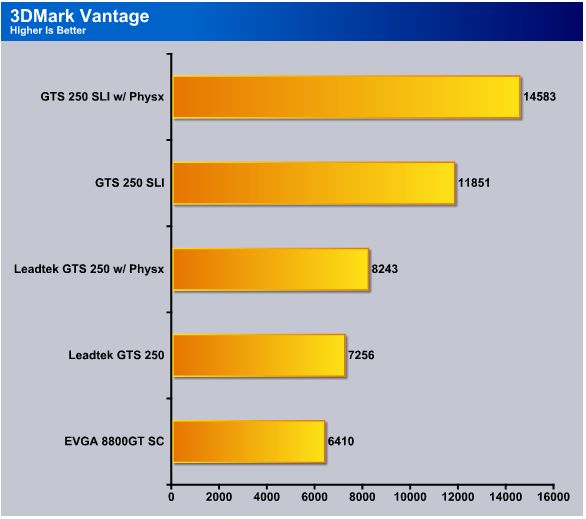

3DMark Vantage

www.futuremark.com/benchmarks/3dmarkvantage/features/

3DMark Vantage is the newest video benchmark from the gang at Futuremark. It is still a synthetic benchmark, but one that more closely reflects real-world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently, there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, which puts the ATI brand at a disadvantage. With the PhysX driver installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. The Driver Approval Policy for 3DMark Vantage states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations, or is it perfectly within its rights, since it owns Ageia and all of Ageia’s IP? I think this point will quickly become moot once Futuremark releases an update to the test.

This is where things get interesting. Some people like to see PhysX results, and for all the talk of “PhysX is unfair, it cheats the system,” and so on, it’s worth noting that the splash screen on the first Vantage bench shows the Ageia PhysX logo as one of its sponsors.

As you can see from the results, PhysX with a single card doesn’t make much of a difference, about 1000 points. But SLI with PhysX turned on is a monster. A 2700-point increase with the flick of a radio button is not something that can be ignored, and a score of 14,583 with a little old Q6600 is not too shabby!
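To put the SLI PhysX jump in proportion, here is the arithmetic as a short sketch. It assumes that 14,583 is the SLI score with PhysX enabled and that the roughly 2,700-point increase is measured against the same SLI setup with PhysX off; both figures are the approximate ones quoted above.

```python
def percent_gain(final_score: float, increase: float) -> float:
    """Percentage improvement over the baseline implied by final - increase."""
    baseline = final_score - increase
    return 100 * increase / baseline


# Assumption: 14,583 is SLI with PhysX on; 2,700 is the gain from enabling PhysX.
sli_physx_on = 14_583
physx_gain = 2_700

print(f"Implied SLI score without PhysX: about {sli_physx_on - physx_gain:,}")
print(f"PhysX gain in SLI: {percent_gain(sli_physx_on, physx_gain):.1f}%")
```

Measured this way, flipping that radio button is worth a bit over a fifth of the SLI score.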

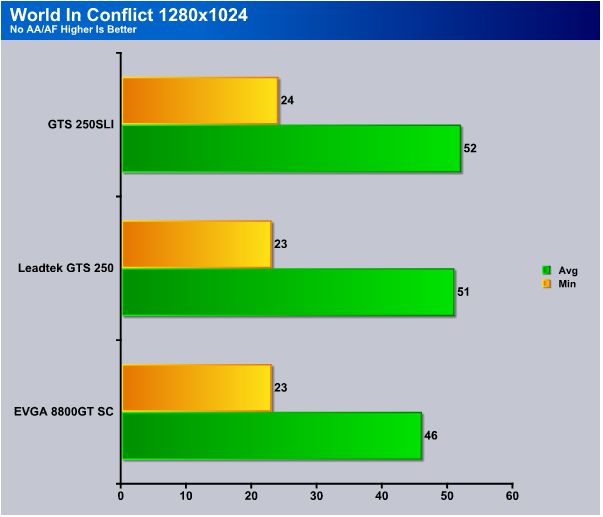

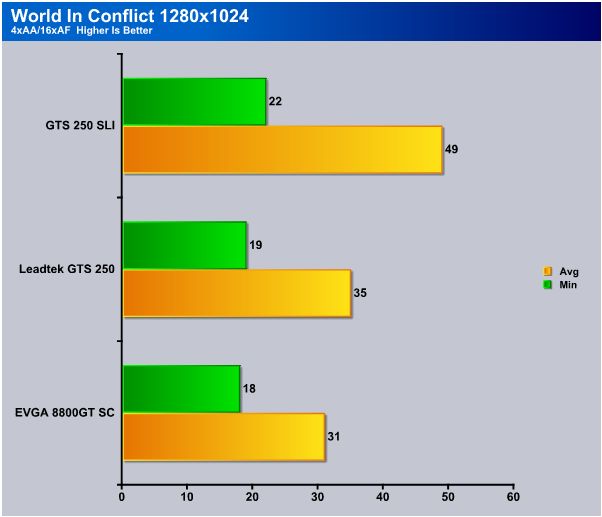

World in Conflict

At this resolution we’re seeing the CPU limit the game somewhat. In one particular scene the frame rate dropped no matter what our settings were, so pay more attention to the averages in this test to see what the card is capable of.
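Why the averages tell you more than the minimum here: the minimum is set by the single worst moment, such as that one CPU-bound scene, while the average reflects the whole run. The sketch below uses made-up frame times and one common pair of definitions (average = frames divided by elapsed time, minimum = the rate implied by the slowest frame). The built-in benchmarks used in this review may compute their minimums differently, for example from per-second samples.

```python
def fps_stats(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average_fps, minimum_fps) from per-frame render times in ms.

    Average FPS is frames rendered divided by total seconds elapsed.
    Minimum FPS here is the rate implied by the single slowest frame.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000
    average_fps = len(frame_times_ms) / total_seconds
    minimum_fps = 1000 / max(frame_times_ms)
    return average_fps, minimum_fps


PLAYABLE_FPS = 30  # the playability threshold used throughout this review

# Hypothetical frame times: mostly smooth, with one CPU-bound spike.
frames = [20.0, 25.0, 40.0, 25.0]
avg, low = fps_stats(frames)
print(f"average {avg:.1f} FPS, minimum {low:.1f} FPS")
print("average playable:", avg >= PLAYABLE_FPS, "| minimum playable:", low >= PLAYABLE_FPS)
```

One slow frame drags the minimum below 30 FPS even though the average stays comfortably above it, which is the pattern this game shows.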

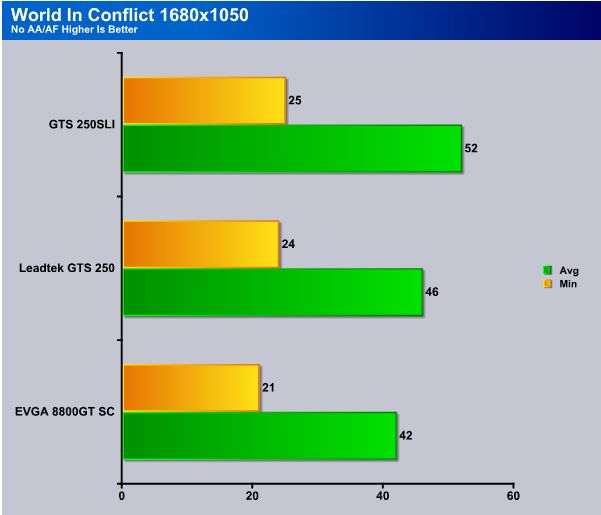

This resolution is the first where you can see a difference between the cards, as the 8800GT starts to become somewhat limited. The GTS 250 is still going strong in single and SLI mode, though it remains CPU limited.

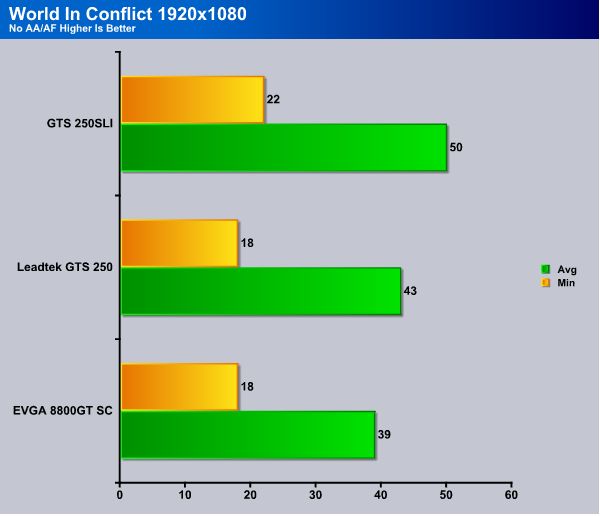

At this resolution, we lost a few FPS off the minimum, most likely due to the CPU again. The average frame rate is still strong across all three cards, but the 8800GT does show its age somewhat.

With the eye candy turned up, you can see that the game is not entirely playable. It is still somewhat CPU limited, but the GPU can’t quite handle all the eye candy either. The average frame rate is still acceptable.

The difference in this test was actually about 0.4 FPS on the minimum side, with the minimum frame rate again being limited by the CPU. The GTS 250’s average is a little better than the 8800GT’s, and the SLI setup dominates both.

This is perhaps the most telling test. Any time you test the highest resolution with the eye candy turned up, you’ll separate the good from the bad. As you can see, the single card’s average dips below 30 FPS for the first time in all of our testing. Setting aside the CPU limitations, the SLI setup still doesn’t break a sweat.

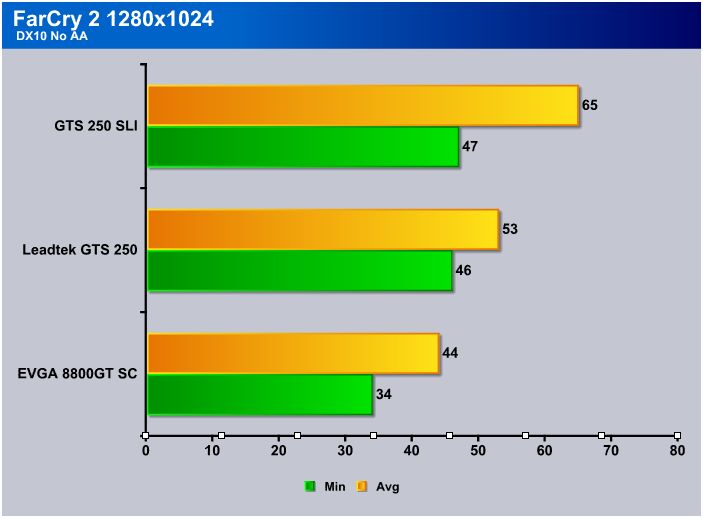

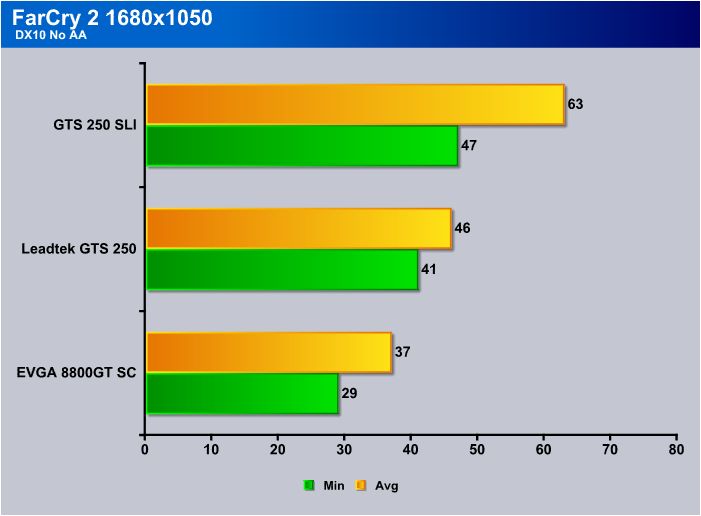

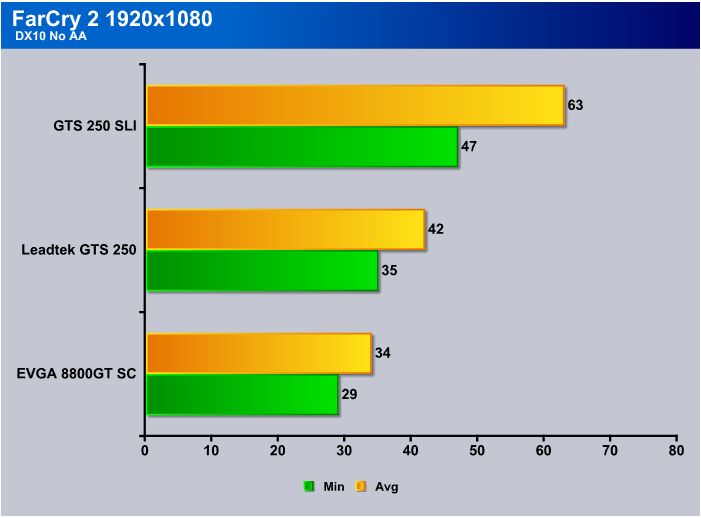

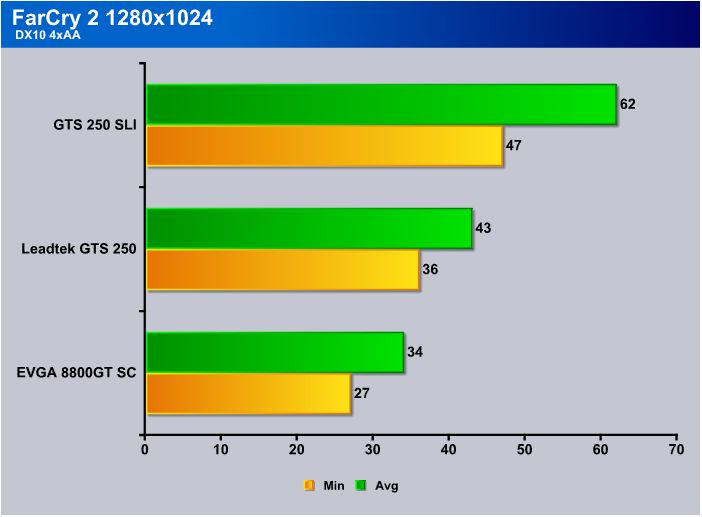

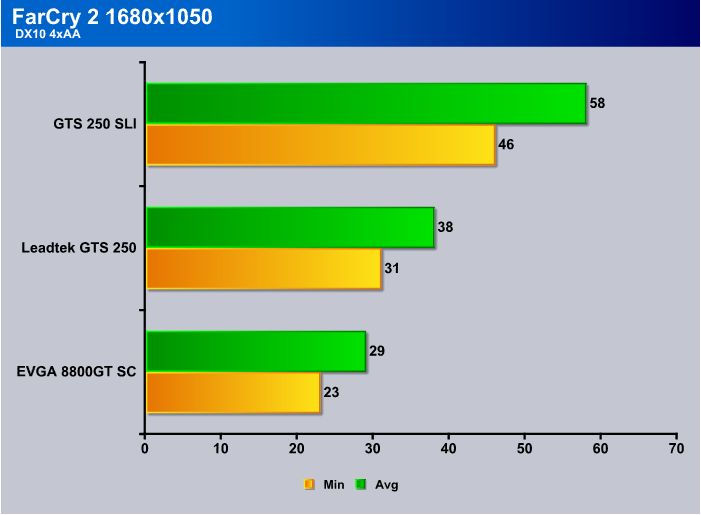

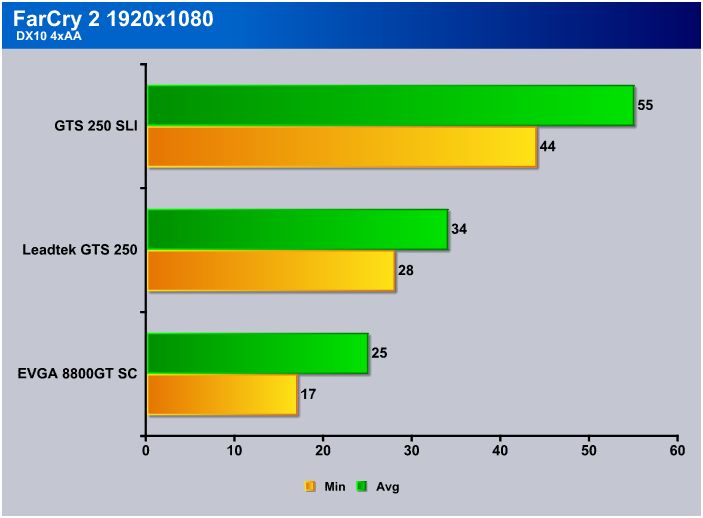

Far Cry 2

At this resolution, none of the cards had trouble keeping up, with the GTS 250 staying about 12 FPS ahead of the 8800GT on the minimum and 9 FPS ahead on average. SLI didn’t help the minimum FPS much, but it bumped the average up 21 FPS over the 8800GT.

For this test, the 8800GT dropped below 30 FPS and had a pretty low average. The GTS 250 only lost about 5 FPS, which isn’t too bad at this resolution. SLI remained impressive, and this is what we like to see: no issues with SLI, and a direct reflection of how it’s supposed to work.

Even at the highest resolutions the GTS 250 kept the game playable with no skips or stutters. Again though, it dropped about 5FPS, which is no problem as long as it stays over 30.

This is where the real testing begins. With all that eye candy turned up, the GTS 250 keeps on truckin’. The 8800GT couldn’t hang, though, dropping below 30 FPS on the very first test. Notice that the SLI setup matched its scores from the previous test at 1920×1080 with no eye candy. That 4xAA puts quite a load on a card.
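One way to see why 4xAA hurts so much is to count samples. The sketch below multiplies pixels per frame by the MSAA sample count. With multisampling, pixel shading runs roughly once per pixel, so this number is a proxy for framebuffer, memory-bandwidth and ROP load rather than for shader work.

```python
def samples_per_frame(width: int, height: int, msaa: int = 1) -> int:
    """Framebuffer samples stored per frame: pixels times MSAA samples per pixel."""
    return width * height * msaa


base = samples_per_frame(1280, 1024)
settings = {
    "1280x1024, no AA": (1280, 1024, 1),
    "1920x1080, no AA": (1920, 1080, 1),
    "1920x1080, 4xAA": (1920, 1080, 4),
}
for label, (w, h, aa) in settings.items():
    n = samples_per_frame(w, h, aa)
    print(f"{label}: {n:,} samples ({n / base:.2f}x the 1280x1024 load)")
```

Going from 1280×1024 to 1920×1080 adds about 58% more pixels, but adding 4xAA at 1920×1080 means storing over six times as many samples as the 1280×1024 no-AA run.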

Here, the 8800GT is all but out of the race, unable to maintain even a 30 FPS average. The GTS 250 stayed just barely on the better side of 30 FPS, for a solid score. Note how well FC2 does with SLI, though.

And once again, the 1920×1080 resolution (which is 1080p, HD gaming by the way) with 4xAA turned on finally drags our test card under 30 FPS and brings the average dangerously close to the mark. The 8800GT is all but forgotten, while the SLI setup is still going strong.
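The SLI comments above are really statements about scaling. A minimal sketch of the usual way to quantify it is speedup over a single card, plus that speedup divided by the GPU count (scaling efficiency). The input frame rates below are hypothetical placeholders, not our measured results; read the real ones off the charts.

```python
def sli_scaling(single_fps: float, sli_fps: float, gpus: int = 2) -> tuple[float, float]:
    """Return (speedup, efficiency) of a multi-GPU result over one card.

    An efficiency of 1.0 means perfect scaling, e.g. 2x the frame rate with 2 GPUs.
    """
    if single_fps <= 0:
        raise ValueError("single-card FPS must be positive")
    speedup = sli_fps / single_fps
    return speedup, speedup / gpus


# Hypothetical numbers, for illustration only.
speedup, efficiency = sli_scaling(single_fps=28.0, sli_fps=49.0)
print(f"speedup {speedup:.2f}x, scaling efficiency {efficiency:.0%}")
```

When a game is CPU limited, as World in Conflict was, efficiency drops well below 1.0 no matter how many cards you add; a GPU-bound game like FC2 gets much closer.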

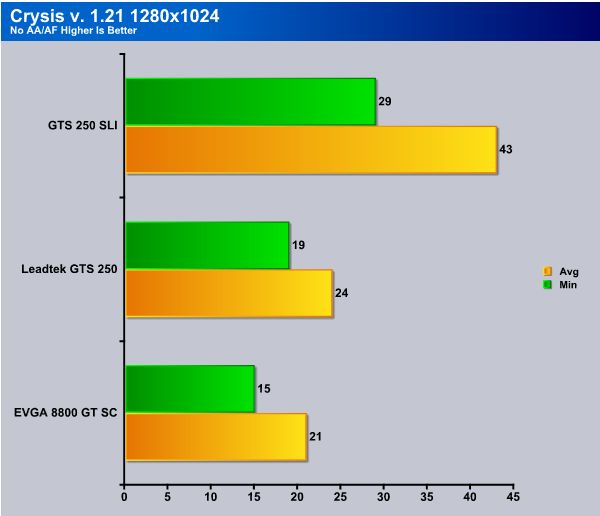

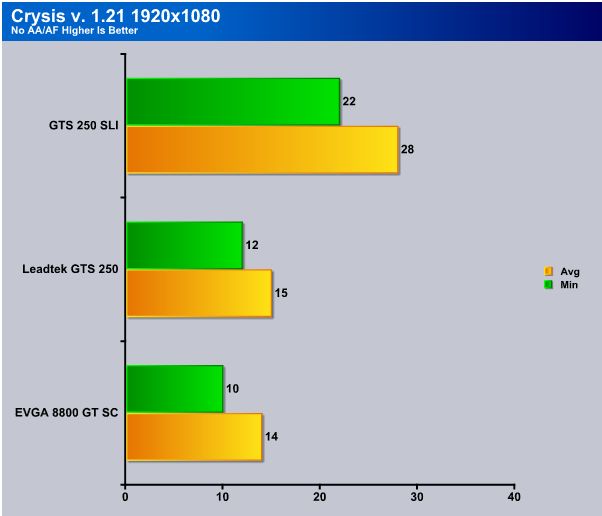

Crysis v. 1.2

Let’s start this section by saying that we don’t expect, and you shouldn’t either, to be able to play Crysis or Crysis Warhead with a performance-class GPU without giving up some eye candy. Most enthusiast-class GPUs will fold in Crysis.

Perhaps the greatest thing about using Crysis as a benchmark is that it does a great job of offloading work from the CPU, avoiding the CPU limitations you see with a game like World in Conflict. As you can see above, this test is going to be rough on our GPUs, with even the SLI setup falling just below a 30 FPS minimum.

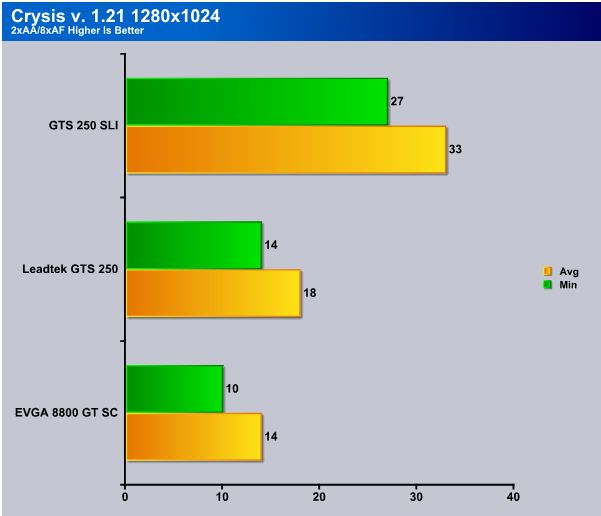

Now you’re starting to see what we mean by “rough.” The 8800GT was begging for mercy, the GTS 250 was screaming and the SLI setup was trying to comfort them both, to no avail, as it’s only going to get worse.

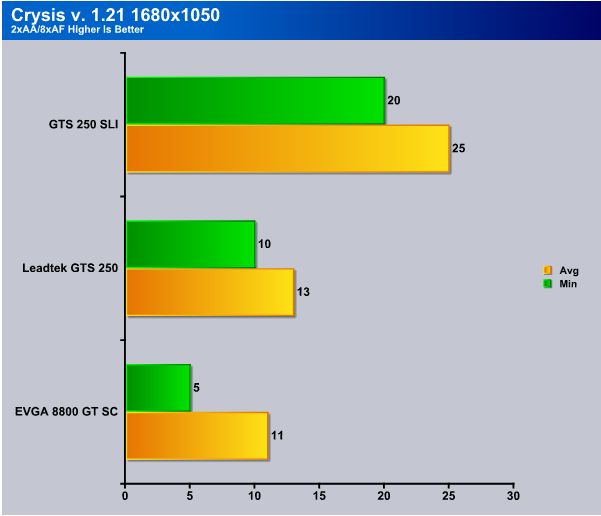

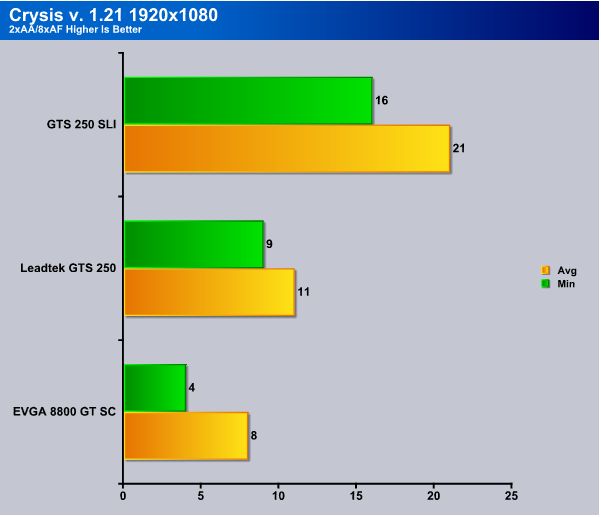

Okay, this is just brutal. Fans are running at full speed, the computer sounds like it’s rotating for lift-off, and we haven’t even gotten to the AA tests yet. Keep in mind that cards at this price-point just aren’t going to run all the eye candy on Crysis. Enthusiast grade cards still have a hard time with this bench.

Here you can see the 8800GT running the same FPS as in the high-resolution, no-AA test above. The GTS 250 picked up a couple of FPS over that last test, but the game is still not playable with all the eye candy on.

Okay, the 8800GT is in single digits now, that should give you an idea of how intense this test is. The SLI setup can’t even maintain 30 FPS here, which is to be expected.

This is the test, again, that really speaks to the intensity of the benchmark. Both cards are in single digits, with the 8800GT unable to even pull out a two-digit average. The SLI setup does pretty well here, but the game still isn’t playable.

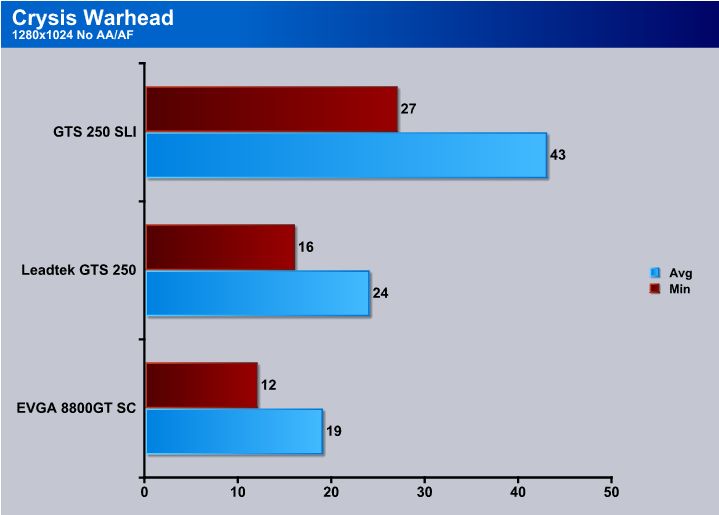

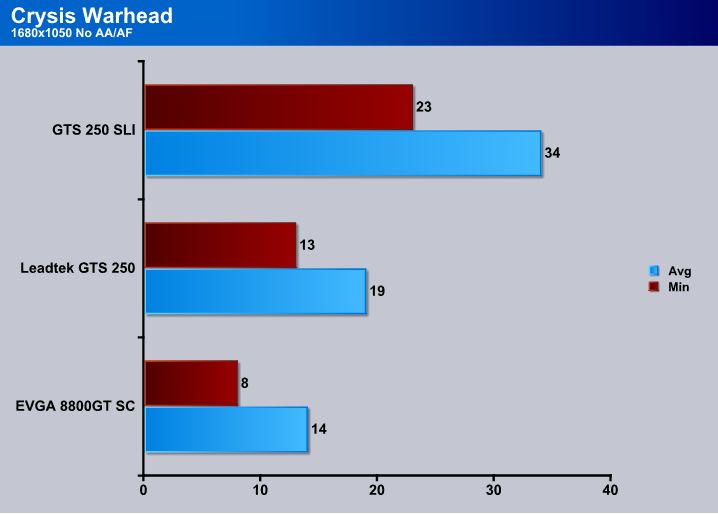

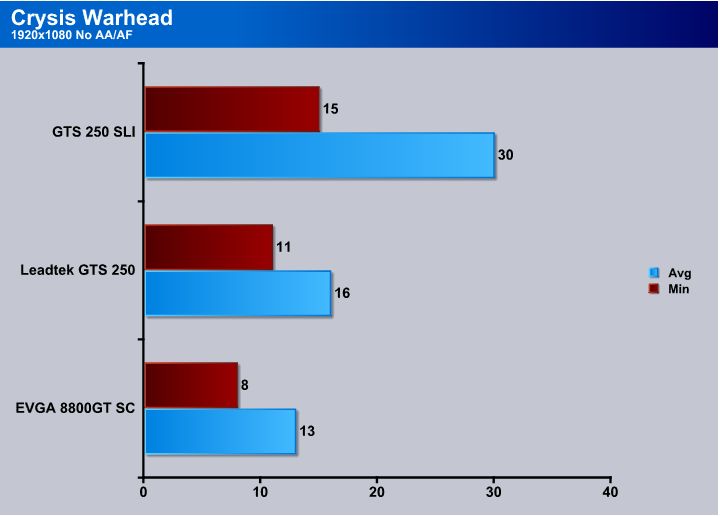

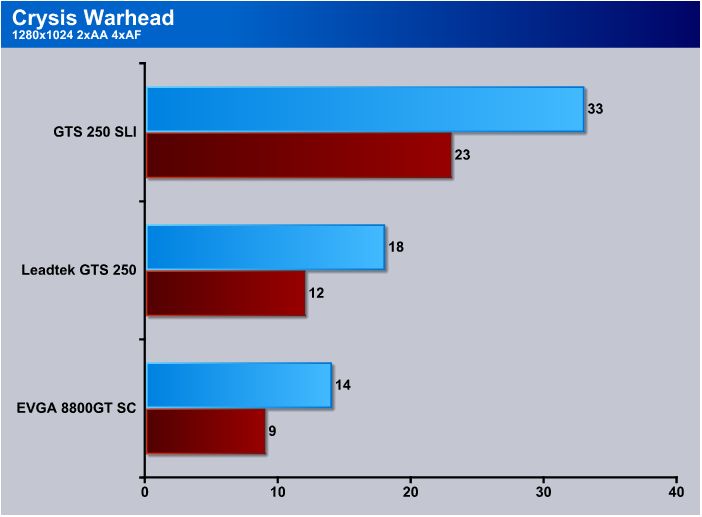

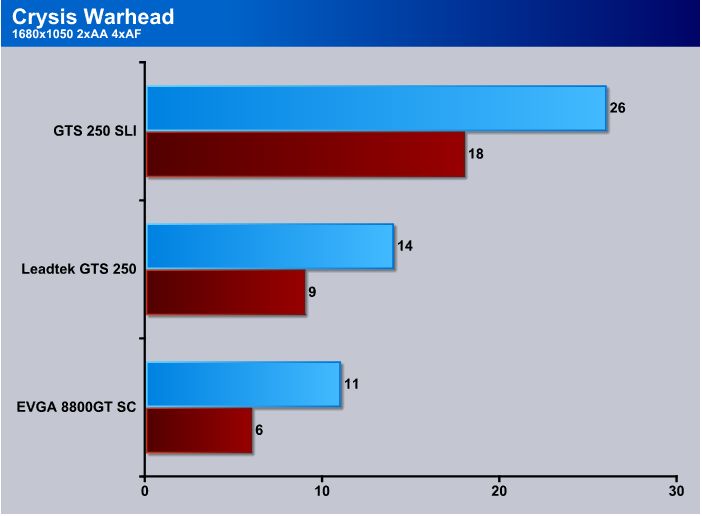

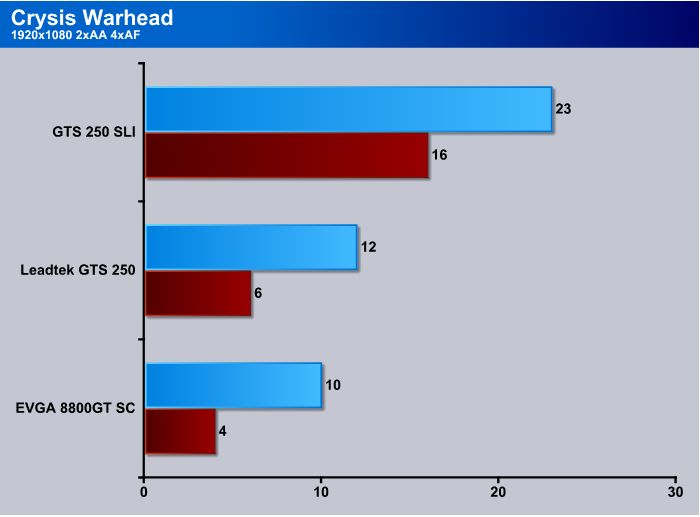

CRYSIS WARHEAD

Crysis Warhead is the follow-up to Crysis, featuring an updated CryENGINE™ 2 with better optimization, and it was one of the most anticipated titles of 2008. Interestingly, in many tests Warhead produced the same minimum FPS as Crysis, with averages that were close to the same.

Here you can see results pretty close to what we achieved with Crysis. None are playable, and none were really expected to be. Remember, these tests are designed to make our GPUs beg for mercy.

Again, pretty similar results to Crysis, with the 8800GT already dropping out of the race. SLI keeps the average above thirty, but the minimum falls below the magic number.

Don’t even consider trying to game at this resolution on Ultra High settings, even without the AA/AF on. The SLI setup keeps our average just over 30, but we spent enough time under 30 to make it unplayable.

The AA/AF turned on kept the 8800GT on its knees, even at a resolution of 1280×1024. The GTS 250 fared pretty well (relatively speaking, of course).

I don’t know about you, but I’m wondering just what they meant when they said the CryENGINE™ 2 was better optimized. It’s optimal for benching, for sure, as there’s not a card out there that will dominate these tests.

This is it, the mother of all tests: Crysis Warhead with AA and AF turned on. You would need an SLI setup to run this effectively, and you’d spend a whole lot more money than two or three GTS 250s would cost you.

TEMPERATURES

To get our load temperature, we looped 3DMark Vantage for 30 minutes. To get the idle temperature, we let the machine sit at the desktop for 30 minutes with no background tasks that would drive the temperature up. Please note that this is in a Danger Den Tower 26, which has a lot of room inside, so your chassis and cooling will affect the temperatures you see.

GPU Temperatures

| Idle | Load |
|---|---|
| 50°C | 66°C |

We were quite happy with the temperatures on the Leadtek GTS 250. It came in at 50°C idle and 66°C load. Temperatures like that mean you’re not going to need an aftermarket cooler, which would increase the cost.

POWER CONSUMPTION

To get our power consumption numbers, we plugged the system into our Kill A Watt power meter. For the idle reading, we left the machine at the desktop for about 15 minutes during our temperature testing. Then, while 3DMark Vantage looped for 30 minutes, we watched for the peak power consumption and recorded the highest reading.

GPU Power Consumption (Total System Power Consumption)

| GPU | Idle | Load |
|---|---|---|
| Leadtek WinFast GTS 250 1GB | 224 Watts | 322 Watts |
| Leadtek WinFast GTS 250 SLI | 254 Watts | 427 Watts |
| EVGA GTS-250 1 GB Superclocked | 192 Watts | 283 Watts |
| XFX GTX-285 XXX | 215 Watts | 322 Watts |
| BFG GTX-295 | 238 Watts | 450 Watts |
| Asus GTX-295 | 240 Watts | 451 Watts |
| EVGA GTX-280 | 217 Watts | 345 Watts |
| EVGA GTX-280 SLI | 239 Watts | 515 Watts |
| Sapphire Toxic HD 4850 | 183 Watts | 275 Watts |
| Sapphire HD 4870 | 207 Watts | 298 Watts |
| Palit HD 4870×2 | 267 Watts | 447 Watts |

Power consumption on the Leadtek is pretty reasonable. Keep in mind that most of these readings were taken on a Core i7 system, while the GTS 250 was run on a Q6600 system, which uses more power. Look at the difference between the load and idle readings to get a good idea of each card’s power requirements. By that measure, this card drew 98 watts more at load than at idle, about 7 watts more than the 91-watt difference for the EVGA GTS 250.
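Because the test platforms differ, the load-minus-idle difference is the fairer comparison. The short sketch below computes it from the table. It treats the figures as total-system readings, so each delta still includes whatever extra power the CPU draws while the benchmark runs.

```python
# Total system draw in watts from the power table: (idle, load).
readings = {
    "Leadtek WinFast GTS 250 1GB": (224, 322),
    "Leadtek WinFast GTS 250 SLI": (254, 427),
    "EVGA GTS-250 1 GB Superclocked": (192, 283),
    "XFX GTX-285 XXX": (215, 322),
    "BFG GTX-295": (238, 450),
    "Asus GTX-295": (240, 451),
    "EVGA GTX-280": (217, 345),
    "EVGA GTX-280 SLI": (239, 515),
    "Sapphire Toxic HD 4850": (183, 275),
    "Sapphire HD 4870": (207, 298),
    "Palit HD 4870×2": (267, 447),
}


def load_delta(idle_w: int, load_w: int) -> int:
    """Load minus idle: removes the platform's idle baseline (CPU load remains)."""
    return load_w - idle_w


# Print cards from smallest to largest load delta.
for card, (idle, load) in sorted(readings.items(), key=lambda kv: load_delta(*kv[1])):
    print(f"{card:32s} +{load_delta(idle, load)} W")
```

Sorted this way, the single GTS 250 cards sit near the HD 4850 and HD 4870 at the low end, while the GTX-295s and SLI setups add well over 150 watts under load.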

OVERCLOCKING

What good would a video card review be without overclocking the thing? Not much, in our opinion. Most people that are looking into buying these cards will probably do a little overclocking anyhow, so we might as well show you what it’s capable of.

As you can see above, we managed to obtain a nice overclock on the Leadtek GTS 250. All clock settings were first checked for artifacts with ATITool, and then we ran the FC2 benchmark again to see what difference, if any, they made. Overall, we got a 12.5% increase on the core clock. I did have to unlink the shader clock from the core, because raising the shader any higher than where it is now produced lots of artifacts. When I dialed back the shader clock, all of the artifacts went away, so I clocked the core a little higher. Memory came in at a respectable 2.4GHz. What all of this means is that the FC2 benchmark, at 1920×1080 with 4xAA turned on, kept the minimum above 31 FPS, making the game entirely playable at the highest resolution. Overall, the overclock gave us an extra 3 FPS, and sometimes 3 FPS makes the difference between an enjoyable playing experience and a frustrating one.
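Since the final clocks are only shown in the screenshot, here is a small sketch of what the quoted figures imply. It assumes that the 12.5% gain is relative to the 738 MHz stock core from the spec table and that “2.4GHz” is the effective DDR memory rate, i.e. twice the 1101 MHz stock memory clock. Both are interpretations, not readings from the screenshot.

```python
def pct_increase(stock: float, overclocked: float) -> float:
    """Percentage increase of an overclocked value over stock."""
    return 100 * (overclocked - stock) / stock


STOCK_CORE_MHZ = 738   # stock core clock from the spec table
STOCK_MEM_MHZ = 1101   # stock memory clock from the spec table

# Assumption: the quoted 12.5% is relative to the stock core clock.
core_oc = STOCK_CORE_MHZ * 1.125
print(f"Implied core clock: {core_oc:.2f} MHz")

# Assumption: "2.4GHz" is the effective (double data rate) memory speed.
stock_effective = STOCK_MEM_MHZ * 2
mem_gain = pct_increase(stock_effective, 2400)
print(f"Memory gain: {mem_gain:.1f}% over {stock_effective} MHz effective")
```

Under those assumptions the core lands around 830 MHz and the memory gain is roughly 9%, smaller than the core gain.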

Added Value Features

Windows 7

There are a few things we’d like to cover that don’t have a set place in the review. We mentioned earlier that we’d been meeting with Nvidia on a semi-regular basis to cover different topics.

We have it on good authority that in Windows 7 the core operating system will also, for the first time, take advantage of parallel computing: Windows 7 will be able to offload part of its tasks to the GPU. That’s a really exciting development, and we expect to see more of the computing load shifted to the GPU as Windows evolves. Given the inherent power of GPU computing, as anyone who has run Folding@home on a GPU knows, this opens up whole new vistas of computing possibilities. Who knows, we may see the day when the GPU does the bulk of the computing and the CPU just initiates the process. That is, of course, made possible by close cooperation between Nvidia and Microsoft, and it may be an early indication of widespread acceptance of parallel computing and CUDA.

The easiest and most coherent way (we’ve had a hard few days coding and benching the GTS-250) to get you the information is to clip it from the press release PDF and give it to you unmolested.

That’s right: Nvidia and Microsoft are already working together and have delivered functional drivers for Nvidia GPUs running under Windows 7, and they will be publicly available in early March.

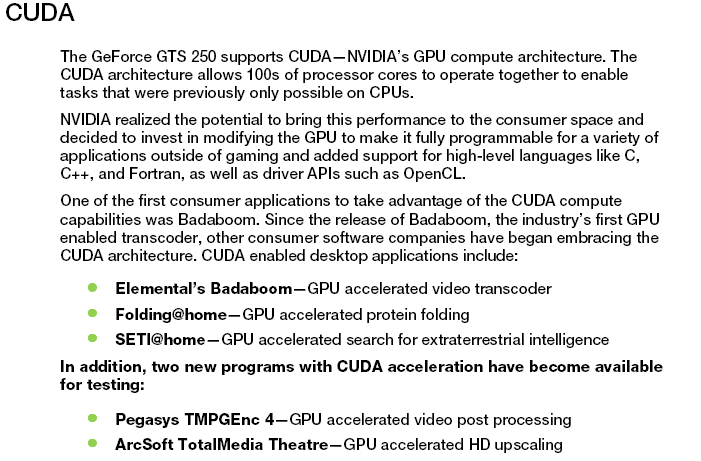

CUDA

Two new CUDA applications are available for testing.

3D Vision

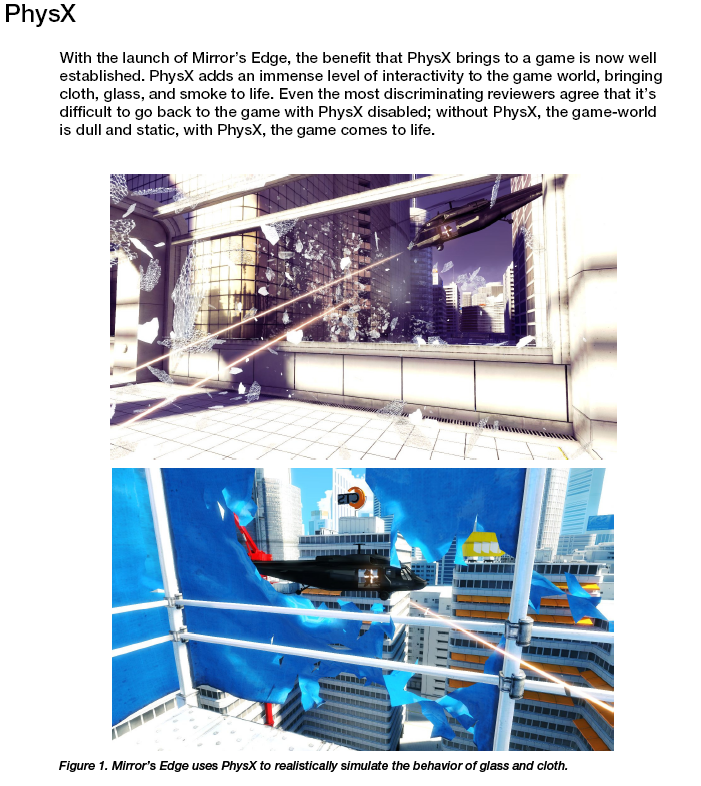

PhysX

CONCLUSION

Overall, we were very happy with the Leadtek Winfast GTS 250. It is a solid performer in its class and we didn’t run into any issues with it. Leadtek has been around long enough that you should have no worries when buying their version of this card. They do a pretty good job of standing behind their products from what we have seen.

A lot of you are going to ask, “Why should I buy one of these instead of a 9800GTX+?” Let me just say this: the price point for this card is incredible. You still can’t get a 512MB 9800GTX+ for $129, like you can the GTS 250 512MB. If I were you, though, I’d skip the 512MB version (unless you plan to SLI with a 9800GTX+ you already have) and go straight to the 1GB version. For the 1GB flavor, expect to pay about $149 USD, which is still cheaper than the 9800GTX+. Heck, a few months ago I bought my wife a 9600GSO 1GB and it cost $149.99, and it’s not even in the same class! Also, the die shrink to 55nm is a big plus for power draw and heat production.

The list of drawbacks for this card is short. I think the biggest one is the heatsink, which does not fully enclose the card and leaves some of the circuitry open to damage. Second, it’d be nice to see a single-slot version of this card somewhere. I know that big, bad heatsinks are all the rage right now, but this card produces less heat than the EVGA 8800GT it was tested against, partly because of the die shrink, yet the 8800GT uses a single-slot, fully shrouded heatsink. I ended up having to drain my custom water loop and pull the waterblock off the 8800GT: the hoses made it impossible to remove the GTS 250, and because the GTS 250 has a dual-slot cooler, I couldn’t reach inside to remove the 8800GT either. I know that’s not really a typical problem, but having to pull two other cards out if you have a problem with the bottom one is a bit of a hassle, especially since these cards are designed to be Tri-SLI capable.

The Leadtek version of the card comes with Leadtek’s standard 3-year warranty.

We are trying out a new addition to our scoring system to provide additional feedback beyond a flat score. Please note that the final score isn’t an aggregate average of the new rating system.

- Performance 7

- Value 8.5

- Quality 8

- Warranty 7

- Features 10

- Innovation 7.5

Pros:

+ Runs cool for a stock heatsink

+ Tri-SLI Ready

+ Outperforms Other GPUs At The Same Price Point

+ Quiet fan, at reasonable speeds

+ Lower Power Consumption

+ Alloy core chokes

Cons:

– Exposed edges on fan shroud leave components open to damage

– G92 Core Variant

The Leadtek WinFast 1 GB GTS 250 does an excellent job of providing sufficient graphics processing power at a reasonable price. Because of its low price point and good performance, it has earned 7.0 out of 10 and the Bjorn3D.com Seal Of Approval.

Bjorn3D.com – Satisfying Your Daily Tech Cravings Since 1996
