The GTX275 is Nvidia's answer to the ATI HD 4890. Will the Leadtek version of this card be able to strike fear into the HD 4890?

Introduction

With the recent introduction of the ATI HD 4890, Nvidia needed something to compete directly with it. Their answer was the GTX275, and today we have Leadtek's version of the card. Essentially all Nvidia partners' cards are the same apart from the sticker on top, so what's different about the Leadtek version? For one, Leadtek does not officially operate in North America, which means that if you live there it will be hard to get your hands on one of these cards without importing it from another country. That makes this primarily a review for our European friends, but if you want to see the GTX275 go head to head with the HD 4890, you might as well read on!

Leadtek

Founded in 1986, Leadtek is doing something slightly different from other manufacturers: the company has invested tremendous resources in research and development. In fact, it states that “Research and development has been the heart and soul of Leadtek corporate policy and vision from the start. Credence to this is born out in the fact that annually 30% of the employees are engaged in, and 5% of the revenue is invested in, R&D.”

Furthermore, the company focuses on its customers and on providing high-quality products with added value:

“Innovation and Quality ” are all and intrinsic part of our corporate policy. We have never failed to stress the importance of strong R&D capabilities if we are to continue to make high quality products with added value.

By doing so, our products will not only go on winning favorable reviews in the professional media and at exhibitions around the world but the respect and loyalty of the market.

For Leadtek, our customers really do come first and their satisfaction is paramount important to us.

Features

PCI Express 2.0 Support

Designed to run perfectly with the new PCI Express 2.0 bus architecture, offering a future-proofing bridge to tomorrow’s most bandwidth-hungry games and 3D applications by maximizing the 5 GT/s PCI Express 2.0 bandwidth (twice that of first generation PCI Express). PCI Express 2.0 products are fully backwards compatible with existing PCI Express motherboards for the broadest support.
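The "twice that of first generation" claim is easy to check with a little arithmetic. The sketch below assumes the 8b/10b line encoding used by PCI Express 1.x and 2.0 (10 bits on the wire for every 8 bits of data) and ignores packet and protocol overhead, so the results are theoretical per-direction ceilings for an x16 slot, not measured throughput.

```python
# Per-direction PCI Express bandwidth for a slot, assuming the 8b/10b line
# encoding used by PCIe 1.x and 2.0 (10 bits on the wire per 8 bits of data).
# Packet/protocol overhead is ignored, so these are theoretical ceilings.

ENCODING_EFFICIENCY = 8 / 10


def pcie_bandwidth_gb_s(transfer_rate_gt_s: float, lanes: int = 16) -> float:
    """Approximate one-direction bandwidth in gigabytes per second."""
    data_gbit_per_lane = transfer_rate_gt_s * ENCODING_EFFICIENCY  # payload Gbit/s per lane
    return data_gbit_per_lane * lanes / 8  # bits -> bytes across all lanes


gen1 = pcie_bandwidth_gb_s(2.5)  # first-generation PCI Express, x16
gen2 = pcie_bandwidth_gb_s(5.0)  # PCI Express 2.0 at 5 GT/s, x16
print(f"PCIe 1.x x16: {gen1:.1f} GB/s per direction")
print(f"PCIe 2.0 x16: {gen2:.1f} GB/s per direction")
```

This prints 4.0 GB/s for first-generation x16 and 8.0 GB/s for PCI Express 2.0 x16, which is where the factor of two comes from.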

2nd Generation NVIDIA® unified architecture

Second Generation architecture delivers 50% more gaming performance over the first generation through enhanced processing cores.

GigaThread™ Technology

Massively multi-threaded architecture supports thousands of independent, simultaneous threads, providing extreme processing efficiency in advanced, next generation shader programs.

NVIDIA PhysX™ -Ready

Enable a totally new class of physical gaming interaction for a more dynamic and realistic experience with GeForce.

NVIDIA® Lumenex™ Engine

Delivers stunning image quality and floating point accuracy at ultra-fast frame rates.

- 16x Anti-aliasing: Lightning fast, high-quality anti-aliasing at up to 16x sample rates obliterates jagged edges.

- 128-bit floating point High Dynamic-Range (HDR): Twice the precision of prior generations for incredibly realistic lighting effects

High-Speed GDDR3 Memory on Board

Enhanced memory speed and capacity ensure smoother video quality in the latest games, especially when processing large-scale textures.

Dual Dual-Link DVI

Supports displays with an awe-inspiring 2560-by-1600 resolution, such as 30-inch HD LCDs; driving that massive number of pixels requires a graphics card with dual-link DVI connectivity.

Dual 400MHz RAMDACs

Blazing-fast RAMDACs support dual QXGA displays with ultra-high, ergonomic refresh rates up to 2048×1536@85Hz.
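Both the dual-link DVI and RAMDAC claims come down to pixel rate. The sketch below compares only the visible pixels per second of a display mode against a connection's pixel-clock ceiling, assuming the standard 165 MHz single-link DVI limit (doubled for dual link) and the 400 MHz RAMDAC rating quoted above. Real video timings add blanking intervals on top, so this is a lower bound: a mode that fails it cannot work at all, while one that passes still needs its full timings checked. The helper names are hypothetical.

```python
# Lower-bound check: compare the *visible* pixel rate of a display mode with
# a link's or RAMDAC's maximum pixel clock. Real timings add blanking on top,
# so a mode whose visible rate alone exceeds the limit cannot work.

SINGLE_LINK_DVI_MHZ = 165.0                  # single-link DVI pixel clock ceiling
DUAL_LINK_DVI_MHZ = 2 * SINGLE_LINK_DVI_MHZ  # two TMDS links
RAMDAC_MHZ = 400.0                           # RAMDAC rating from the feature list


def active_pixel_rate_mhz(width: int, height: int, refresh_hz: int) -> float:
    """Visible pixels drawn per second, in millions (ignores blanking)."""
    return width * height * refresh_hz / 1e6


def exceeds(limit_mhz: float, width: int, height: int, refresh_hz: int) -> bool:
    """True if even the blanking-free pixel rate is over the limit."""
    return active_pixel_rate_mhz(width, height, refresh_hz) > limit_mhz


# 2560x1600 at 60 Hz is about 245.8 million visible pixels per second
print(exceeds(SINGLE_LINK_DVI_MHZ, 2560, 1600, 60))  # True: single link is not enough
print(exceeds(DUAL_LINK_DVI_MHZ, 2560, 1600, 60))    # False
# 2048x1536 at 85 Hz over VGA is about 267.4 million visible pixels per second
print(exceeds(RAMDAC_MHZ, 2048, 1536, 85))           # False
```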

3-way NVIDIA SLI technology

Offers amazing performance scaling by implementing AFR (Alternate Frame Rendering) under Windows Vista with solid, state-of-the-art drivers.
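Alternate Frame Rendering splits the work by whole frames rather than by parts of the screen. A minimal sketch, assuming a plain round-robin hand-off of frames to GPUs and perfect scaling with no driver, synchronization, or CPU overhead (which real multi-GPU setups never reach); `afr_schedule` and `ideal_afr_fps` are hypothetical helpers, not part of any Nvidia API.

```python
from __future__ import annotations

from collections import defaultdict

# Sketch of Alternate Frame Rendering (AFR): consecutive frames go to the
# GPUs in round-robin order, so with N GPUs each one renders every Nth frame.


def afr_schedule(num_frames: int, num_gpus: int) -> dict[int, list[int]]:
    """Map each GPU index to the list of frame numbers it renders."""
    schedule: dict[int, list[int]] = defaultdict(list)
    for frame in range(num_frames):
        schedule[frame % num_gpus].append(frame)
    return dict(schedule)


def ideal_afr_fps(single_gpu_fps: float, num_gpus: int) -> float:
    """Theoretical upper bound: every GPU works on its own frame in parallel."""
    return single_gpu_fps * num_gpus


print(afr_schedule(6, 3))    # {0: [0, 3], 1: [1, 4], 2: [2, 5]}
print(ideal_afr_fps(30, 3))  # 90: a ceiling, not a measured result
```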

Microsoft® DirectX® 10 Shader Model 4.0 Support

DirectX 10 GPU with full Shader Model 4.0 support delivers unparalleled levels of graphics realism and film-quality effects.

Dual-stream Hardware Acceleration

Provides ultra-smooth playback of H.264, VC-1, WMV and MPEG-2 HD and Blu-ray movies.

High dynamic-range (HDR) Rendering Support

The ultimate lighting effects bring environments to life.

NVIDIA® PureVideo™ HD technology

The combination of high-definition video decode acceleration and post-processing that delivers unprecedented picture clarity, smooth video, accurate color, and precise image scaling for movies and video.

HDCP Capable

Allows playback of HD DVD, Blu-ray Disc, and other protected content at full HD resolutions with integrated High-bandwidth Digital Content Protection (HDCP) support. (Requires other compatible components that are also HDCP capable.)

OpenGL® 2.1 Optimizations and Support

Full OpenGL support, including OpenGL 2.1

NVIDIA CUDA™ Technology support

NVIDIA® nView® multi-display technology

Specifications

| GPU | GTX275 | HD 4850 1GB | HD 4870 1GB | HD 4890 |
|---|---|---|---|---|
| GPU Frequency | 633 MHz | 625 MHz | 750 MHz | 850 MHz |
| Shader Frequency | 1404 MHz | 625 MHz | 750 MHz | 850 MHz |
| Memory Frequency | 1134 MHz | 993 MHz | 900 MHz | 975 MHz |
| Memory Bus Width | 448-bit | 256-bit | 256-bit | 256-bit |
| Memory Type | GDDR3 | GDDR3 | GDDR5 | GDDR5 |
| # of Stream Processors | 240 | 800 | 800 | 800 |
| Texture Units | 80 | 40 | 40 | 40 |
| ROPs | 28 | 16 | 16 | 16 |
| Bandwidth (GB/s) | 127 | 63.6 | 115.2 | 124.8 |
| Process | 55nm | 55nm | 55nm | 55nm |

Looking at the chart, it seems like the GTX275 is at a bit of a disadvantage, with 240 stream processors to ATI's 800. Nvidia, however, goes about stream processors differently from ATI: it uses fewer of them but clocks them much higher (1404 MHz on the GTX275 versus 625–850 MHz on the ATI cards), matching ATI's performance with fewer stream processors. Other than that, the GPUs are fairly evenly matched, apart from the GTX275's wider 448-bit memory bus, which ATI makes up for by using GDDR5 on the HD 4870 and HD 4890.
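The Bandwidth row in the table follows directly from the memory rows above it, and working it out shows how a 448-bit GDDR3 bus and a 256-bit GDDR5 bus end up within a few GB/s of each other. This sketch assumes GDDR3 moves two transfers per listed memory clock and GDDR5 moves four, which is the convention the table's own figures follow; it gives theoretical peaks, not measured throughput.

```python
# Peak memory bandwidth = memory clock x transfers per clock x bus width / 8.
# Assumption: GDDR3 makes 2 transfers per listed clock and GDDR5 makes 4.

TRANSFERS_PER_CLOCK = {"GDDR3": 2, "GDDR5": 4}

cards = {
    # name: (memory clock in MHz, memory type, bus width in bits), from the table
    "GTX275": (1134, "GDDR3", 448),
    "HD 4850 1GB": (993, "GDDR3", 256),
    "HD 4870 1GB": (900, "GDDR5", 256),
    "HD 4890": (975, "GDDR5", 256),
}


def bandwidth_gb_s(clock_mhz: float, mem_type: str, bus_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    transfers_per_s = clock_mhz * 1e6 * TRANSFERS_PER_CLOCK[mem_type]
    return transfers_per_s * bus_bits / 8 / 1e9


for name, spec in cards.items():
    print(f"{name}: {bandwidth_gb_s(*spec):.1f} GB/s")
```

The output (127.0, 63.6, 115.2 and 124.8 GB/s) matches the table, so the GTX275's wider bus only buys it about 2 GB/s over the GDDR5-equipped HD 4890.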

Keynote Features

We’ve encountered some features we feel are worth expanding on, so we’re including a Keynote Features page in this review. These are features that often get overlooked or glossed over, or new features we want you to know about.

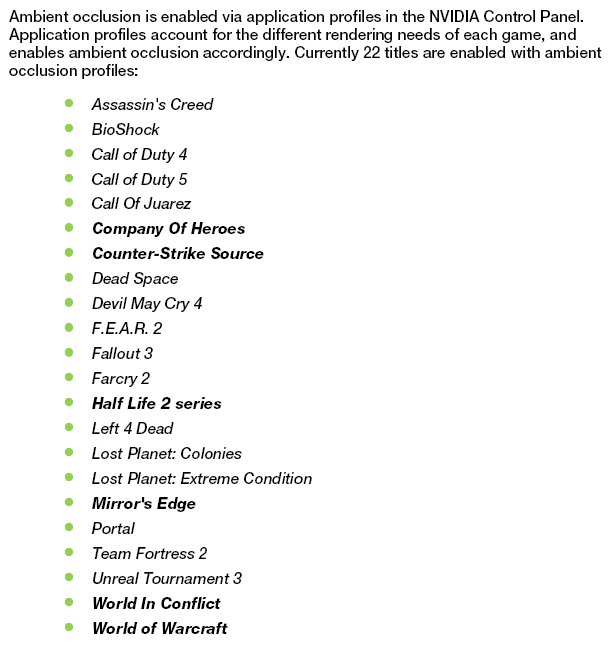

Ambient Occlusion

Ambient Occlusion is an exciting new feature specific to Nvidia GPUs: it inserts shadows into games at the driver level and adds some really nice eye candy to gaming. Let’s throw out a few before-and-after Ambient Occlusion shots and let the pictures do most of the talking.

World In Conflict Before Ambient Occlusion

World In Conflict After Ambient Occlusion

What you’ll notice most in these shots is that the grass is more detailed, but that’s not all Ambient Occlusion does for you: it calculates and adds shadows in games.

Half Life 2 Before Ambient Occlusion

Half Life 2 After Ambient Occlusion

Take a look at the phone booth in the first picture, as well as the corner next to it; in the second picture, the Ambient Occlusion feature has calculated and inserted shadows. Light sources that don't throw shadows are a glaring omission in some games, and Ambient Occlusion addresses that. Currently it can only be switched on or off in the Nvidia Control Panel and is available in a limited number of games, but we expect that list to grow as time progresses. Here’s a graphic of the games Ambient Occlusion is available in now; the highlighted games are the ones it works best in.

We know one big question on your mind is whether Ambient Occlusion comes with a performance hit on frame rates. Yes: like all eye candy, it does. You can expect a 20–40% decrease in frame rates, so depending on the game you might need a pretty beefy GPU to drive the added eye candy. Many of the games it’s available in, though, run at frame rates high enough that the Ambient Occlusion overhead mostly comes out of frames your monitor can’t display anyway. A monitor with a 60 Hz refresh rate, which is typical for most LCDs, can only display 60 FPS, so if a game runs at 80–100 FPS, your FPS will go down on paper, but you won’t lose any displayable frames as long as the result stays at or above 60 FPS. Only at the bottom of that range does a full 40% hit matter: 80 FPS would drop to 48 FPS.
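The refresh-rate argument above can be made concrete with a small model. It assumes output is capped at the monitor's refresh rate (for example with v-sync) and treats the Ambient Occlusion cost as a flat percentage of the frame rate; both are simplifications, and the function names are hypothetical.

```python
# Simplified model: a monitor can only show as many frames per second as its
# refresh rate, so rendered frames beyond that limit are never displayed.
# Assumes output is capped at the refresh rate and the AO cost is a flat %.


def displayed_fps(rendered_fps: float, refresh_hz: float) -> float:
    """Frames per second the monitor can actually show."""
    return min(rendered_fps, refresh_hz)


def visible_ao_loss(base_fps: float, hit_pct: int, refresh_hz: float = 60):
    """Return (frame rate with AO, displayable frames per second lost to AO)."""
    with_ao = base_fps * (100 - hit_pct) / 100
    lost = displayed_fps(base_fps, refresh_hz) - displayed_fps(with_ao, refresh_hz)
    return with_ao, lost


for base in (80, 100):
    for hit in (20, 40):
        with_ao, lost = visible_ao_loss(base, hit)
        print(f"{base} FPS, {hit}% hit -> {with_ao:.0f} FPS, {lost:.0f} visible FPS lost")
```

Of the four combinations, only 80 FPS with a 40% hit falls below 60 FPS (to 48), and that is the only case that loses frames you could actually see.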

Windows 7 Ready

Nvidia and Microsoft have been working closely together to ensure that when (or if) you make the move to Windows 7, drivers for your Nvidia GPU will be there and ready for you. We’ve done some quite successful testing with drivers in Windows 7, and you’ll see the results starting to appear in reviews very soon. Here’s a little information on it gleaned from an Nvidia product information packet.

Not only are Nvidia GPUs Windows 7 ready; the operating system itself will use GPUs to accelerate performance. This is going to happen in two ways: through CUDA, which we’ll cover in a few minutes, and through Intel endorsing parallel computing with OpenCL in Havok. While OpenCL in Havok is still pretty much a paper concept, we have no doubt that Intel has the resources to make it work. Here’s the interesting point: OpenCL and Havok will run on both Nvidia and ATI GPUs, so Windows 7 should (if OpenCL Havok gets off paper and into code) be able to use Nvidia and ATI GPUs for parallel processing. There’s still a downside: if you want both PhysX in games (PhysX, not Havok) and GPU acceleration in Windows 7, you can only get both on an Nvidia GPU. With no fewer than 10 new PhysX titles slated for release in the remainder of 2009, we’re seeing a growing number of game developers turn to PhysX to reach the next level of gaming. With Intel endorsing parallel computing, we’re pretty sure GPU parallel computing is going to come of age, and the pioneer of that, and the company with the most experience in it, is of course Nvidia.

CUDA

Now that we’ve opened the parallel computing and CUDA can of worms, we need to expand on that just a bit.

With humble beginnings in Folding@Home and SETI@home, CUDA has expanded its base to other endeavors. Right now that seems to be mainly video processing, but you can bet that as programmers catch up and are given the tools to use CUDA, you’ll see more parallel computing applications, including the operating systems of the future. We can’t stress enough how exciting and important the inclusion of parallel computing in the OS itself is. Here’s a graphic of the road map of already released and soon-to-be-released CUDA-capable applications.

We included the graphic of CUDA applications so that you can see that programmers and applications are picking it up and having great success with it. If major companies like the ones in the graphic are picking up CUDA, you can bet others will follow. CUDA offers faster processing, and programs and applications that don’t embrace CUDA and parallel programming won’t run as fast. People are speed oriented from birth: we hate waiting on things, and in a lot of cases we just won’t wait. CUDA will help reduce those wait times. Right now video editing is reaping the bulk of the CUDA benefits, but as time progresses the technology will trickle down.

PhysX

PhysX is more than just the ability of your GPU to take the load off the CPU for boring calculations. The effects you’ll see in game are the result of lengthy, difficult PhysX calculations that, without a PhysX-enabled GPU, will drag your frame rates down to unplayable levels. In most games you can turn off PhysX and still play, but let’s examine the differences you’ll see with and without PhysX in a newly patched PhysX title, Sacred 2: Fallen Angels.

We’ll be doing a separate review of Sacred 2 soon, because we could only cover so much ground in the limited time we had to get the GTX275 benched, charted and posted, so we snagged some screenshots from the game for you to take a gander at. Believe us when we tell you the screenshots don’t do the technology justice.

In the pictures, the left side is without PhysX and the right side is with PhysX. The left side of the graphic shows the Untouchable Force spell without PhysX, and it lacks any environmental effects. The top right shows the same spell with PhysX: the effect itself looks better, and leaves and rocks are strewn about by PhysX, making it frankly more realistic. The next spell is Ground Dig; on the left side of the graphic you just see a blue ring of magical energy, while on the right side you actually get to see the spell interacting with the environment. In other words, without PhysX the ground doesn’t really get dug. With PhysX, the objects surrounding you during the spell interact with it and with the ambient environment. We’re not sure how you feel about it, but look at it this way: take a shotgun, aim it some distance in front of you and shoot a pile of leaves (yes, go get a real shotgun). Do you expect to see the shot just hit the leaves, or do you expect it to hit the leaves and blow the pile to the four corners of the Earth? You expect the leaves to scatter, and that’s what in-game PhysX does for you. Objects are no longer static props; they interact with the environment and with you. Glass doesn’t just fade away; it becomes a persistent object and, unlike in non-PhysX games, it can hurt you and, properly used, hurt enemies. Once again, if glass falls on you in real life you kind of expect to get hurt, not to see the glass fade into the ground and melt away.

The top graphic in this one is Dust Devil; in the left pane we might call it Dustless Devil. You get a little flash of light and a purple haze. In the right pane, with PhysX applied, you get dirt, dust and debris scattering away from you, which is what you’d expect from a spell called Dust Devil.

The second pane shows the spell Frentic Fervor. In the left pane, without PhysX, you get a golden flash of light; in the right pane, with PhysX, you get a trail of magic particles, and the force shield pushes all physical particles out and away from the spell. It might seem like a minor difference until you’ve seen it in practice, and we’ve yet to see anyone experience the difference who wasn’t impressed. We wish the effects were available on all GPUs, but at this time you can only experience them fully on Nvidia GPUs. On any other GPU, even with a good CPU taking the PhysX load, frame rates drop to a choppy, nasty-looking 10 FPS; with lesser CPUs it gets even more painful to watch.

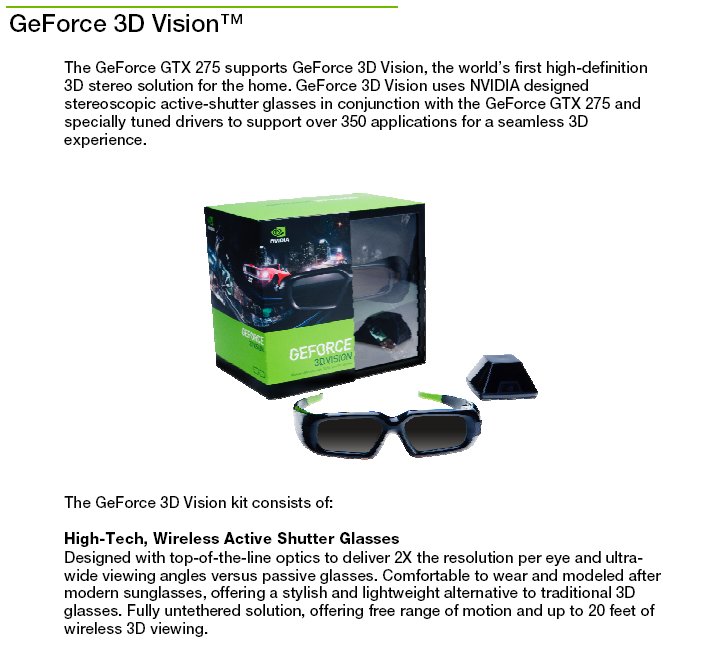

3D Vision

That brings us to our last keynote feature, Nvidia 3D Vision. As the name implies, this version of 3D gaming is only available on Nvidia GPUs, and you’ll need additional hardware to experience it.

To experience Nvidia 3D Vision, you’ll need the 3D Vision kit with the hardware that comes in it, plus a true 120 Hz monitor.
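The 120 Hz requirement follows from how the glasses work. Assuming the usual active-shutter scheme, in which the display alternates left-eye and right-eye frames and the glasses black out the other eye in sync, each eye receives only half of the panel's refresh rate. The helper below is a hypothetical illustration of that arithmetic.

```python
# Active-shutter stereo: frames alternate between the left and right eye,
# so each eye sees half of the panel's refresh rate.


def per_eye_refresh_hz(panel_hz: float) -> float:
    """Refresh rate each eye sees when frames alternate between eyes."""
    return panel_hz / 2


print(per_eye_refresh_hz(120))  # 60.0 per eye on a true 120 Hz panel
print(per_eye_refresh_hz(60))   # 30.0 per eye on a typical 60 Hz LCD
```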

The initial requirements might be a little steep, but once you have the equipment, 3D Vision works at the driver level and currently supports more than 300 games out of the box. Unlike some 3D systems, you don’t pay for 3D by the game: once you have the hardware, you can experience true 3D gaming in the comfort of your own gaming shrine at no additional cost.

Nvidia 3D Vision is another one of those things that words can’t describe well. We’ve reviewed the 3D Vision system, and believe us when we tell you it’s an evolution in gaming. Once you’ve gamed in 3D, chances are you’re spoiled for life.

Pictures & Impressions

The box was pretty high quality. Leadtek used very thin stickers to deliver much higher quality than printing on the box alone can achieve. On the front, Leadtek points out all of the card's basic headline features, and when you flip the box over there is the usual more detailed feature list. Leadtek has chosen to advertise all of the Nvidia technologies such as PhysX and SLI, and there is also a small section mentioning its own overclocking tool, which I will cover in more depth later in the review.

Inside the box we see just as much care as outside. There is a thick layer of foam to ensure that even if the package is punted to your door, the card will take no damage. Included right on top are the manual and driver CD, and Leadtek has made a small compartment to house the other accessories, such as the DVI to VGA adapter. Which brings us to what's included with this card. Sadly, there is no software included. I feel this is a bit lacking, as other cards in this price range come with at least a version of DVD playback software. There is an instruction manual for those who have never installed a graphics card before, along with the better style of Molex to six-pin PCIe adapters, which use two Molex connectors to power the card. This is much better, because the card is able to draw the power it should.

When you pull the card out of the box, you will notice the anti-static bag around it. This ensures that no stray static discharge jumps to the card and ruins it. The bag also protects against any scratches the manual or driver CD might cause by sitting on top of the card. Much to my surprise, after removing the anti-static bag there was another layer of protection: a plastic covering that, while not as thick as the bag, still helps prevent scratches on the card. To me, this is going above and beyond. The HD 4890 sure wasn’t packaged this nicely.

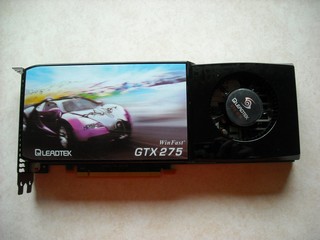

When you’ve finally dug through all that packaging and see the bare card, it all becomes worth it. I would like to point out that my card had a few minor scratches on it; I am not sure whether this is because it was a lower-quality review sample or just the one that got away. The sticker shows an interesting car, but I feel it's not quite as cool as some of the stickers other manufacturers have used. Not that it really matters, since this side of the card will be facing down.

One thing many are probably wondering is whether this card takes an eight-pin PCIe connector. It does not: it takes two six-pin connectors, just like the GTX260. This is good news for anyone with a PSU that has only two six-pin PCIe connectors and no eight-pin connectors. As with almost all cards made in recent history, the GTX275 has dual DVI ports. This card comes with one DVI to VGA adapter, so if you're still using two VGA monitors, you will have to either buy a second adapter or use an old one you have lying around. Cards like this usually also have a port for component, composite (RCA), or S-Video output, but this card strangely lacks one. I don’t use any of these connections, so it doesn’t bother me, but it may turn away anyone who uses one of them to hook up to a TV.

The size of the card is very comparable to other Nvidia cards, but it is about an inch longer than the ATI HD 4890. This is bad news for those with a small case, but on the plus side the GTX275's PCIe power connectors are on the side, so your case only needs to fit the length of the card, not the card plus the PCIe connectors. The cage fans on the GTX275 and HD 4890 are pretty much the same size, but the GTX275 is much quieter. If you're building a quiet system, you will definitely want the GTX275.

Testing & Methodology

To test the Leadtek GTX275, I used Windows Vista Ultimate 64-bit. I ran each test three times to make sure my data was accurate; running a test multiple times makes it easy to spot an outlier that would otherwise skew the final results. All of the graphics cards were tested on the same machine so that no card had an advantage. I have included a table with my full system specs.

| Test Rig | |
|---|---|
| Case Type | Cooler Master HAF922 |
| CPU | Intel Core i7 920 @ 3.7 GHz |
| Motherboard | Intel SmackOver X58 |
| RAM | (2×3) Mushkin HP3-12800 @ 1480, 8-8-8-20 |
| CPU Cooler | Cooler Master V10 |
| Hard Drives | WD SE16 640 GB, WD SE16 750 GB |
| Optical | Lite-On DVD R/W |
| GPU | Sapphire HD 4890 1GB |
| Case Fans | One top 200mm exhaust |
| Testing PSU | Corsair HX1000W |

In 3DMark06, the default settings were used at a resolution of 1280×1024. For 3DMark Vantage I used the Performance preset. For Company of Heroes I used the maximum settings, including the Model Detail slider all the way to the right. For Crysis, I used the highest settings. In World in Conflict I used the Ultra Detail setting along with 4xAA and 4xAF. In Far Cry 2 I used the highest settings along with the DX10 renderer. In Clear Sky I used the maximum settings and the automatic tester to ensure that all the test runs were the same.

For HD 4850 Crossfire, I paired the 1GB HD 4870 with the 1GB HD 4850, and for HD 4870 Crossfire I paired the HD 4870 with the HD 4890.

| Synthetic Benchmarks & Games |
|---|
| 3DMark06 |
| 3DMark Vantage |
| Company of Heroes |
| Crysis |
| World in Conflict |
| Far Cry 2 |
| S.T.A.L.K.E.R. Clear Sky |
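The methodology runs every benchmark three times but doesn't say how the runs are combined. One reasonable approach, sketched here as an assumption rather than the method behind these charts, is to report the median, which a single bad run cannot drag around, and flag any run that strays more than an arbitrary 5% from it. `summarize_runs` and the sample frame rates are hypothetical.

```python
from __future__ import annotations

from statistics import mean, median

# Combine three benchmark runs: report mean and median, and flag any run
# that deviates from the median by more than `tolerance` (5% by default,
# an arbitrary illustrative threshold).


def summarize_runs(fps_runs: list[float], tolerance: float = 0.05):
    """Return (mean, median, outliers) for a list of per-run FPS results."""
    mid = median(fps_runs)
    outliers = [r for r in fps_runs if abs(r - mid) > tolerance * mid]
    return mean(fps_runs), mid, outliers


print(summarize_runs([60.1, 59.8, 60.4]))  # no outliers flagged
print(summarize_runs([60.1, 59.8, 48.0]))  # the 48.0 run is flagged
```

If a run is flagged, re-running that test is usually more trustworthy than averaging it in.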

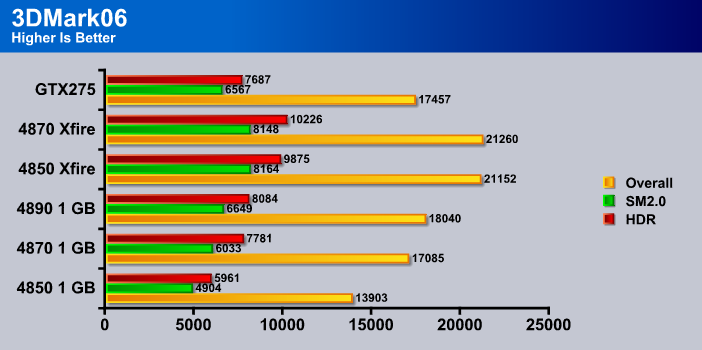

3DMark 06

3DMark06, developed by Futuremark, is a synthetic benchmark used for universal testing of all graphics solutions. 3DMark06 features HDR rendering, complex HDR post processing, dynamic soft shadows for all objects, a water shader with HDR refraction, HDR reflection, depth fog and Gerstner wave functions, a realistic sky model with cloud blending, and approximately 5.4 million triangles and 8.8 million vertices, to name just a few. The measurement unit “3DMark” is intended to give a normalized means of comparing different GPUs/VPUs. It has been accepted as both a standard and a mandatory benchmark throughout the gaming world for measuring performance.

The GTX275 gets off to a bit of a stumble. The HD 4890 was able to score over 500 points more in 3DMark06. The GTX275 did at least keep ahead of the HD 4870, falling halfway between the HD 4890 and HD 4870.

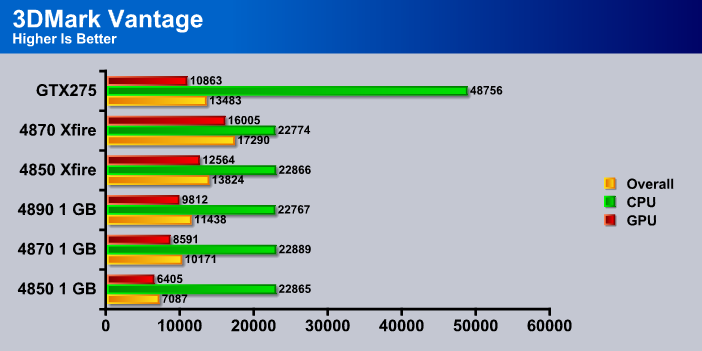

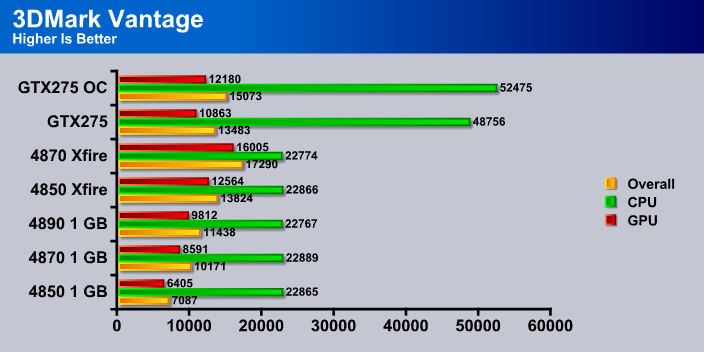

3DMark Vantage

For complete information on 3DMark Vantage, please follow this link: www.futuremark.com/benchmarks/3dmarkvantage/features/

The newest video benchmark from the gang at Futuremark. This utility is still a synthetic benchmark, but one that more closely reflects real world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, which puts the ATI brand at a disadvantage. With the PhysX driver installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. The Driver Approval Policy for 3DMark Vantage states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations, or is it perfectly within its rights since it owns Ageia and all of Ageia's IP? I think this point will quickly become moot once Futuremark releases an update to the test.

No surprise here: the GTX275 easily won. This is due to Nvidia’s use of PhysX to calculate the CPU score. While it isn't shown on the graph, with PhysX turned off the GTX275 scores a little more than the HD 4890.
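Why does a faster CPU test lift the overall result so much? The sketch below assumes the overall 3DMark Vantage score is a weighted harmonic mean of the GPU and CPU scores with weights 0.75 and 0.25, which is our reading of Futuremark's Vantage white paper rather than something measured here. The input scores are hypothetical round numbers, not results from this review.

```python
# Assumption: overall = 1 / (0.75 / GPU score + 0.25 / CPU score), a weighted
# harmonic mean. The scores below are hypothetical inputs, not review data.


def vantage_overall(gpu_score: float, cpu_score: float,
                    gpu_weight: float = 0.75, cpu_weight: float = 0.25) -> float:
    """Weighted harmonic mean of the GPU and CPU sub-scores."""
    return 1.0 / (gpu_weight / gpu_score + cpu_weight / cpu_score)


# Same hypothetical GPU score; CPU score without and with GPU-accelerated PhysX
without_physx = vantage_overall(gpu_score=10_000, cpu_score=20_000)
with_physx = vantage_overall(gpu_score=10_000, cpu_score=40_000)
print(round(without_physx), round(with_physx))
```

Under these assumptions, doubling only the CPU sub-score raises the overall figure by almost 8% even though the GPU score is identical, which is why the overall Vantage number flatters PhysX-capable cards.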

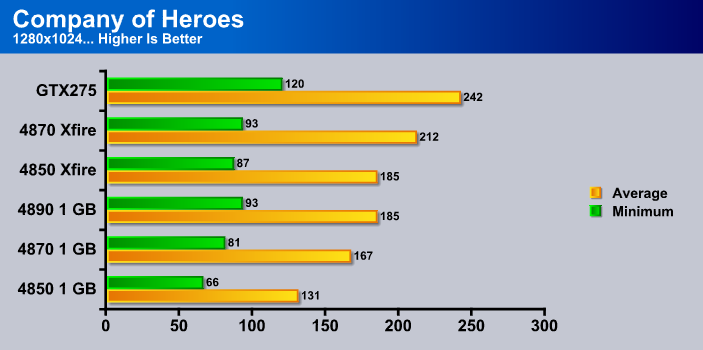

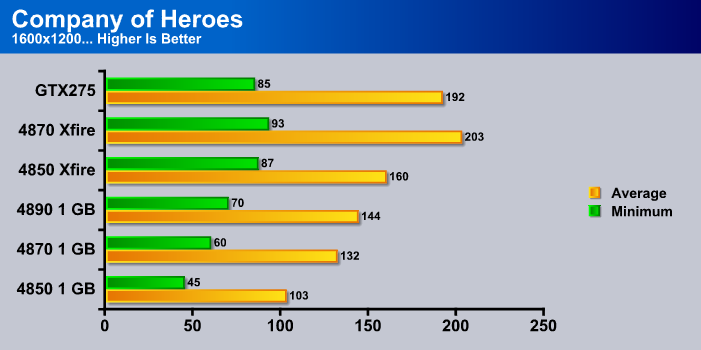

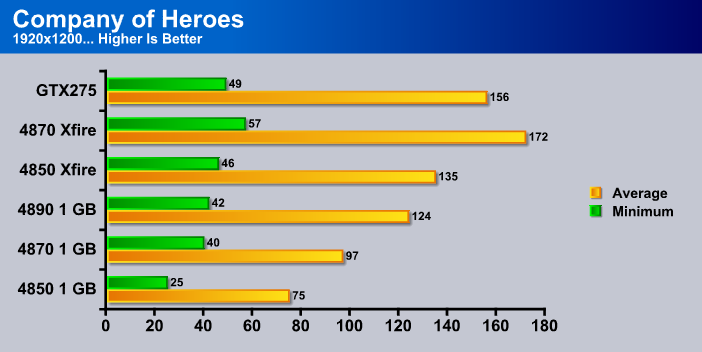

Company of Heroes

Company of Heroes (COH) is a real-time strategy (RTS) game for the PC, announced in April 2005. It is developed by the Canadian-based company Relic Entertainment and published by THQ. COH is an excellent game that is incredibly demanding on system resources, which makes it an excellent benchmark. Like F.E.A.R., the game contains an integrated performance test that can be run to determine your system’s performance based on the graphical options you have chosen. Letting the game's benchmark handle the chore takes the human factor out of the equation and ensures that each run of the test is exactly the same, producing more reliable results.

The GTX275 really scores here. It easily disposed of the much more expensive HD 4870 Crossfire setup. I am not sure the GTX275 can keep this up, though; this seems like an awfully high score, even though Company of Heroes is optimized for Nvidia cards.

The GTX275 didn’t live up to its previous triumph, though it came pretty close to HD 4870 Crossfire. It even managed to beat HD 4850 Crossfire, not an easy accomplishment.

Once again the GTX275 didn’t beat HD 4870 Crossfire, but you can't really complain about these results: it was able to beat a much more costly HD 4850 Crossfire setup.

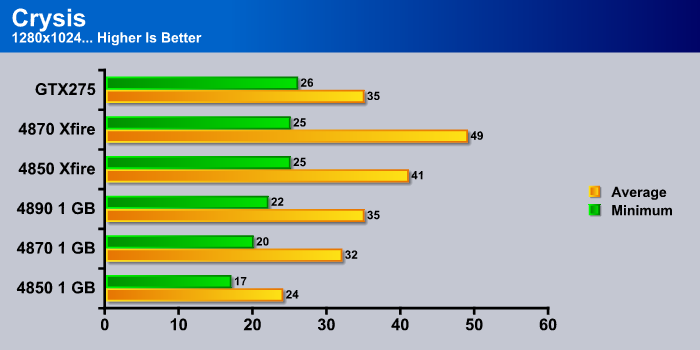

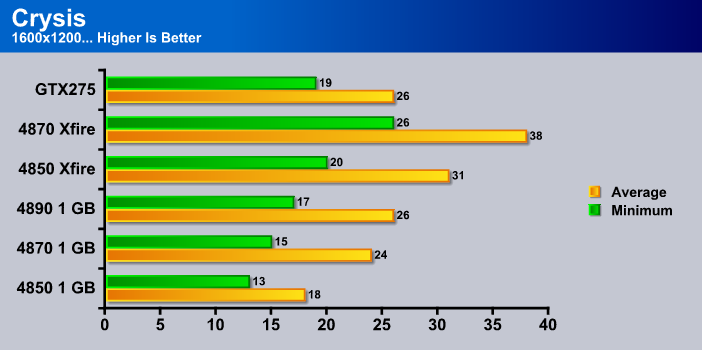

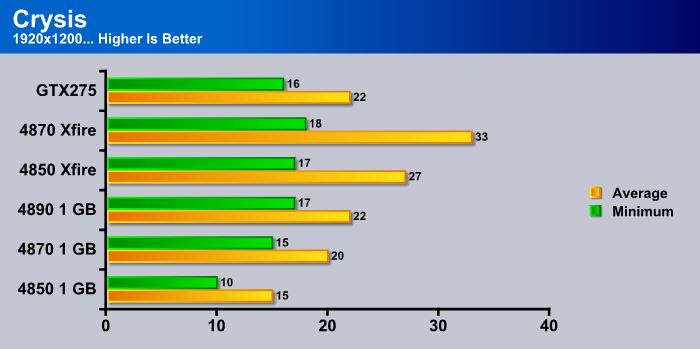

Crysis

Crysis is the most highly anticipated game to hit the market in the last several years. Crysis is based on the CryENGINE™ 2 developed by Crytek. The CryENGINE™ 2 offers real time editing, bump mapping, dynamic lights, network system, integrated physics system, shaders, shadows, and a dynamic music system, just to name a few of the state-of-the-art features that are incorporated into Crysis. As one might expect with this number of features, the game is extremely demanding of system resources, especially the GPU. We expect Crysis to be a primary gaming benchmark for many years to come.

Here we see the GTX275 just barely pulling ahead of the HD 4890. In fact, the HD 4890 was able to match the GTX275’s average frame rate, but it dipped a few frames lower than the 275 during the tests.

Once again the HD 4890 is right on the GTX275’s tail. I am pretty surprised that the HD 4890 was able to keep up: like Company of Heroes, Crysis is a game optimized for Nvidia cards.

When the resolution is increased, the HD 4890 pulls ahead of the GTX275 by just a tiny bit. The GTX275 is the overall winner here, but not by very much.

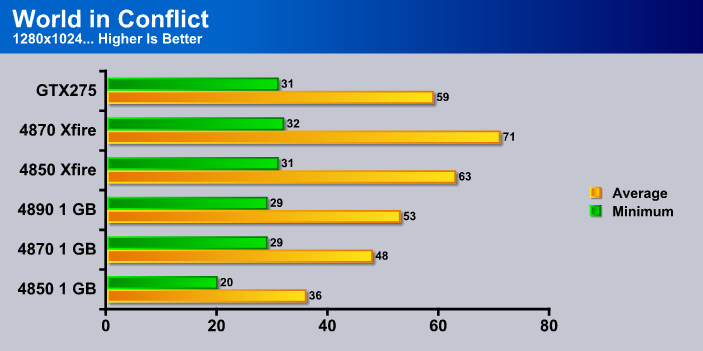

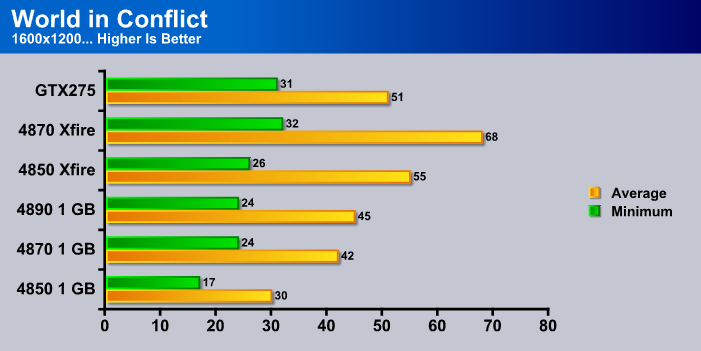

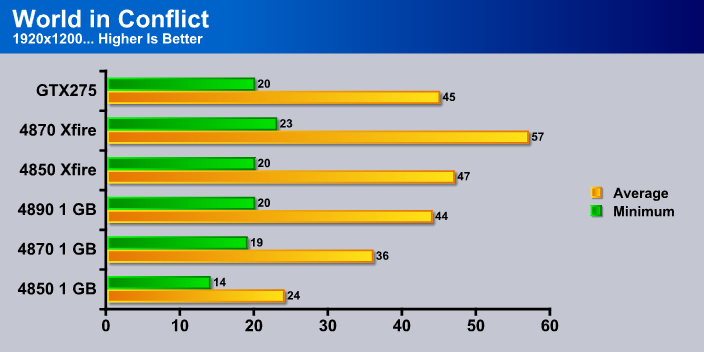

World in Conflict

World in Conflict is a real-time tactical video game developed by the Swedish video game company Massive Entertainment, and published by Sierra Entertainment for Windows PC. The game was released in September of 2007. The game is set in 1989 during the social, political, and economic collapse of the Soviet Union. However, the title postulates an alternate history scenario where the Soviet Union pursued a course of war to remain in power. World in Conflict has superb graphics, is extremely GPU intensive, and has built-in benchmarks. Sounds like benchmark material to us!

The GTX275 has come back to the top. This time it comes close to matching HD 4850 Crossfire, but still falls a bit short.

Once again the GTX275 is close behind the HD 4850 Crossfire, and even has a higher minimum frame rate. In fact the 275 almost matched the minimum frame rate of the HD 4870 Crossfire.

Here we have another close match. This time the HD 4890 is right in the thick of things, almost matching HD 4850 Crossfire. World in Conflict is definitely not a game that benefits much from multi-GPU solutions.

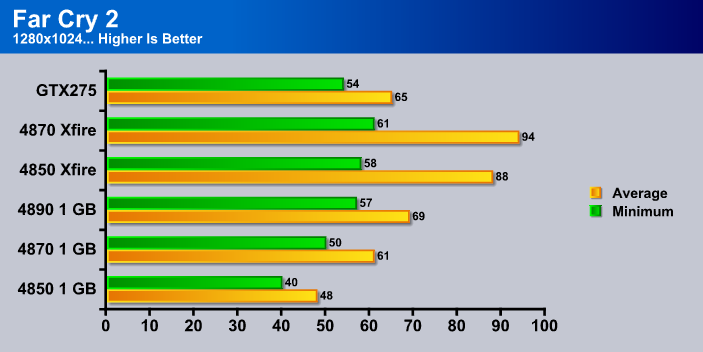

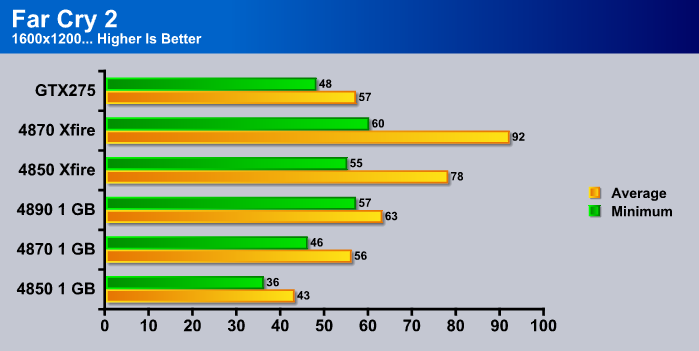

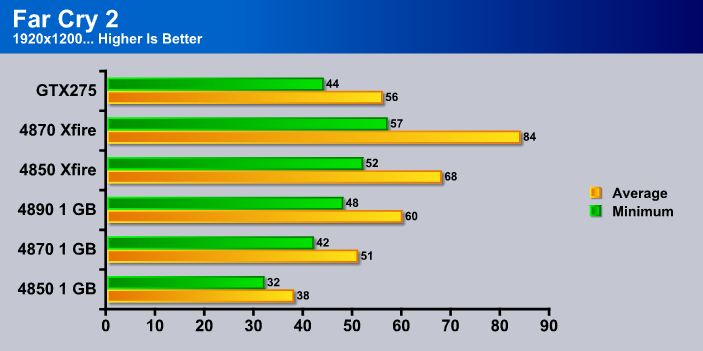

Far Cry 2

Far Cry 2, released in October 2008 by Ubisoft, was one of the most anticipated titles of the year. It’s an engaging, state-of-the-art first-person shooter set in an unnamed African country. Caught between two rival factions, you’re sent to take out “The Jackal”. Far Cry 2 ships with a full-featured benchmark utility, and it is one of the most well designed, well thought out game benchmarks we’ve ever seen. One big difference between this benchmark and others is that it leaves the game’s AI (artificial intelligence) running while the benchmark is being performed.

Once again the HD 4890 manages to pull ahead of the GTX275, even though, just like the previous games, Far Cry 2 is optimized for Nvidia cards.

The HD 4890 continues to hold off the GTX275 by just a few frames. The HD 4870 was almost able to match the GTX275's performance; so far this game is not looking very well optimized for Nvidia cards.

Even when the resolution is increased, the HD 4890 still manages to beat the GTX275. This is a pretty bad place to lose, given that this game is made for Nvidia cards.

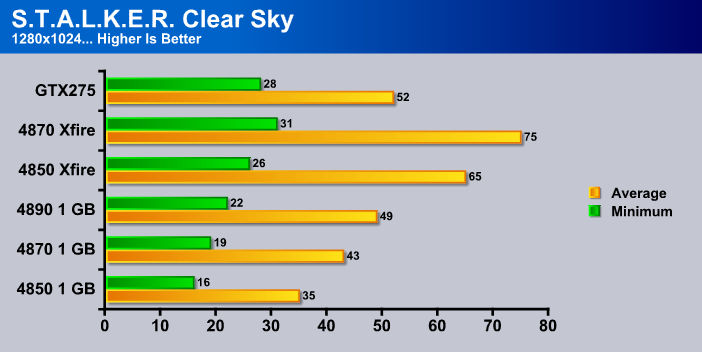

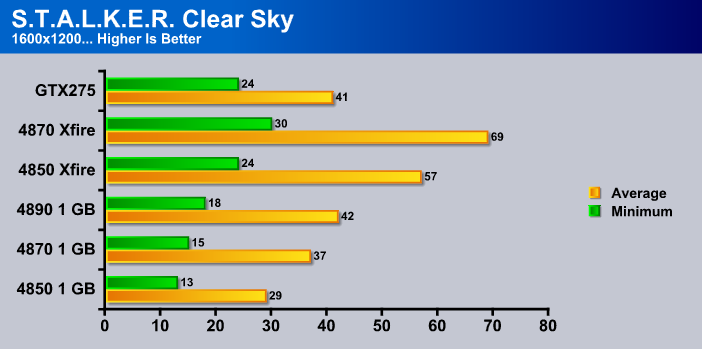

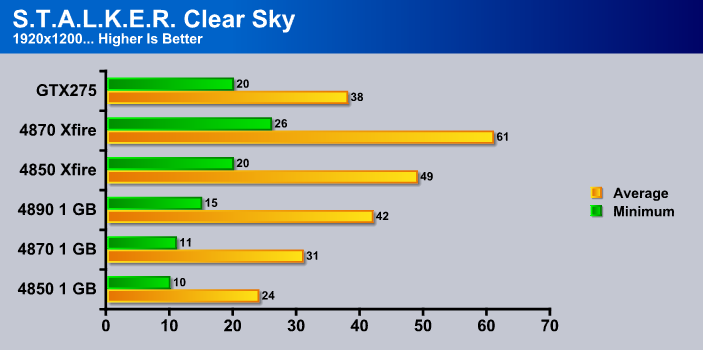

S.T.A.L.K.E.R. Clear Sky

S.T.A.L.K.E.R. Clear Sky is the latest game from the Ukrainian developer GSC Game World. The game is a prequel to the award-winning S.T.A.L.K.E.R. Shadow of Chernobyl, and expands on the idea of a thinking man’s shooter. There are many ways you can accomplish your mission, but each requires a meticulous plan, and some thinking on your feet if that plan takes a turn for the worse. S.T.A.L.K.E.R. is a game that will challenge you with intelligent AI and reward you for beating those challenges. Recently GSC Game World released an automatic tester for the game, making it easier than ever to obtain an accurate benchmark of Clear Sky’s performance.

The GTX275 gets off to a pretty good start here. It beats out the HD 4890 by a few frames, which is always good.

Then the GTX275 falls on its face a bit. The HD 4890 was able to beat the 275 in average frame rate, though it lost by a fair amount in minimum frame rate.

The HD 4890 actually manages to increase its lead a little here. The minimum frame rate is a bit low on all of the cards, so gameplay would be sluggish at the slowest points, but the average is good enough that you could play without noticing it.

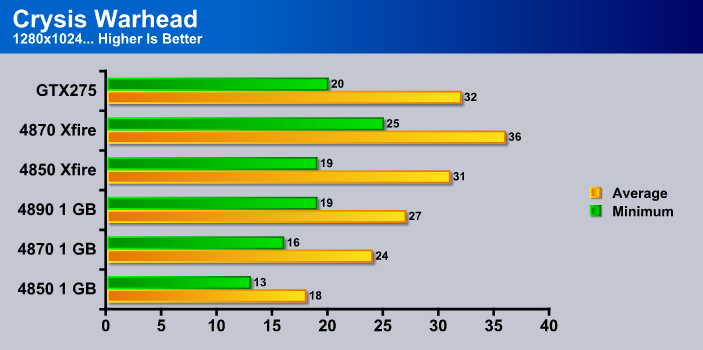

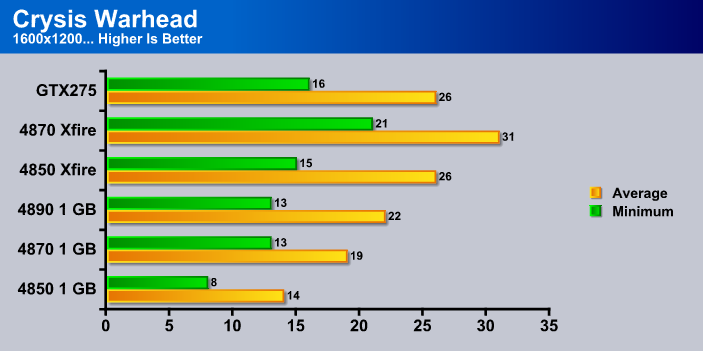

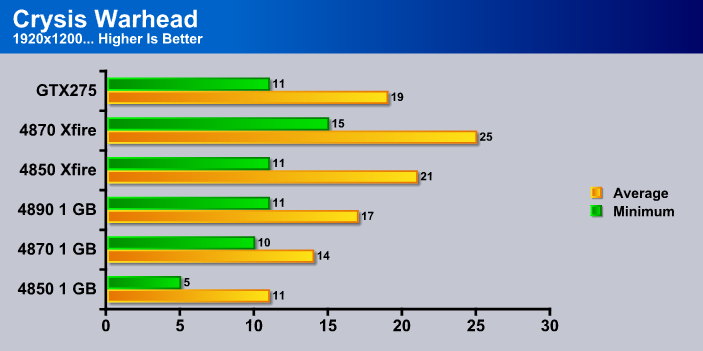

Crysis Warhead

Crysis Warhead is the much anticipated sequel to Crysis, featuring an updated CryENGINE™ 2 with better optimization. The primary reason for this game was to improve Crysis's image, as the first game needed huge resources to run. While Warhead is better optimized, current technology still has not advanced enough to play the game at its higher settings. It was one of the most anticipated titles of 2008.

The GTX275 once again starts with a bang, this time easily disposing of HD 4850 Crossfire. Hopefully this will not turn out like the last test, which ended with the GTX275 losing to the HD 4890.

This time the HD 4850 Crossfire managed to catch up a bit to the GTX275, but the 275 just manages to squeeze out a win.

Unfortunately the GTX275 didn’t have quite enough juice to hold off HD 4850 Crossfire. This is not really a bad thing, because one GTX275 is much cheaper than two HD 4850s in Crossfire.

Temperatures

The GTX275’s fan was much quieter than the HD 4890’s, which is always welcome. To obtain the idle temperature, I let the computer sit idle for about an hour before taking the reading. For the load temperature, I ran FurMark 1.6.5 for about another hour and recorded the highest temperature. I left the fan on automatic control so that the results reflect what an average user would see.

| Idle | Load |
|---|---|
| 54°C | 90°C |

I am not going to blame Leadtek for this one, but someone has to fix the automatic fan control. 90 degrees is completely unacceptable. It's no surprise to me when I hear about a dead GPU; these cards run this hot on auto fan control just to save some noise. I recommend manually setting your fan to at least 60% to help keep your GPU from burning up.

Overclocking

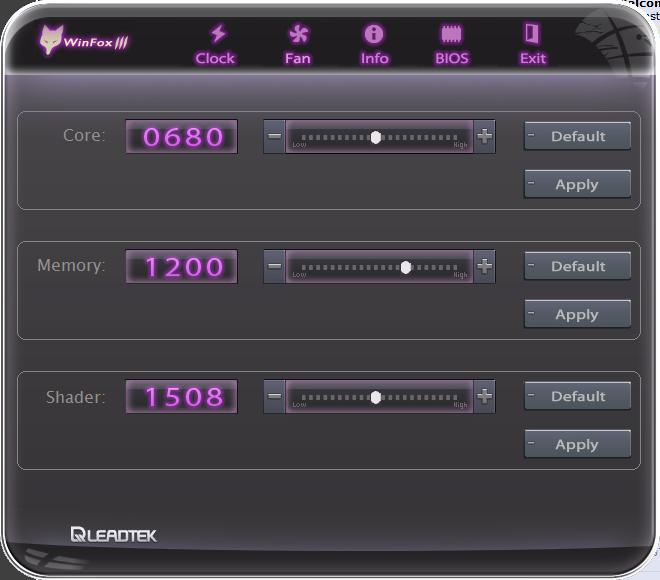

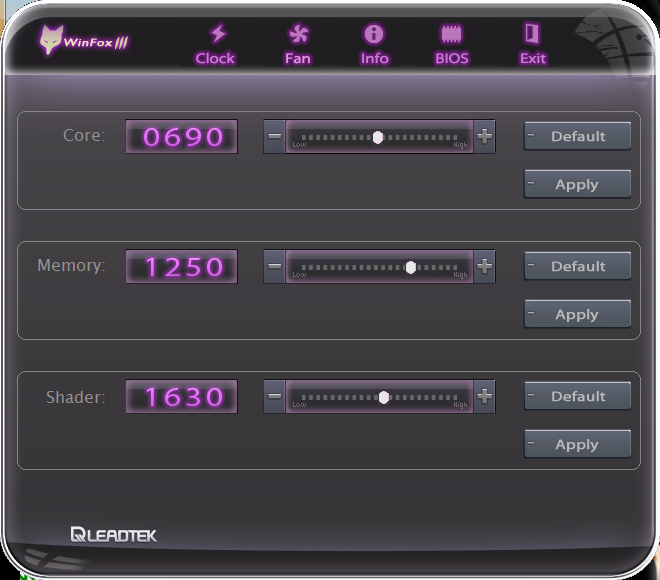

For overclocking, I kept raising the card's core speed until it became unstable, then proceeded to increase the RAM speed. Once the card artifacted, I backed the clocks down a bit to ensure stability. I used the included overclocking program, Winfox3, and then tested the overclock with an hour or so of playing Crysis to make sure it was completely stable.

First, I would like to look at the layout of Leadtek's own overclocking software. As you can see from the first screen, you can set each of the card's clocks individually. The fan tab allows you to adjust fan speed, the info tab shows information about your card, the BIOS tab allows you to update the card's BIOS, and the exit tab, obviously, exits the window. Leadtek does try to be a bit sneaky: instead of following Nvidia's default shader clock link, Leadtek has opted to raise the shader clock by even more than it is supposed to, which could give an unwary observer inflated benchmark results.

The maximum overclock I was able to achieve was 690 MHz core (up from 633), 1250 MHz memory (up from 1134), and 1630 MHz shader (up from 1404). That should prove to be a decent increase; now on to Vantage to see exactly how big.
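To put these clocks in percentage terms, and to compare the shader setting with a clock that simply tracks the core, here is a small sketch. It assumes a "linked" shader clock keeps the stock 1404:633 shader-to-core ratio; that is a simplification, since real clock domains also snap to discrete steps, so treat the linked figure as approximate.

```python
# Overclock arithmetic for the clocks reported above (all in MHz).
# Assumption: a "linked" shader clock keeps the stock shader:core ratio.

STOCK = {"core": 633, "memory": 1134, "shader": 1404}
OVERCLOCKED = {"core": 690, "memory": 1250, "shader": 1630}


def pct_gain(old: float, new: float) -> float:
    """Percentage increase from old to new."""
    return (new - old) / old * 100


for domain in STOCK:
    print(f"{domain}: +{pct_gain(STOCK[domain], OVERCLOCKED[domain]):.1f}%")

# Shader clock if it only tracked the core at the stock ratio
linked_shader = OVERCLOCKED["core"] * STOCK["shader"] / STOCK["core"]
print(f"ratio-linked shader: {linked_shader:.0f} MHz vs {OVERCLOCKED['shader']} MHz set")
```

The core rises about 9.0%, the memory about 10.2% and the shader about 16.1%; a ratio-linked shader would sit near 1530 MHz, roughly 100 MHz below the 1630 MHz used here.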

As you can see the GTX275 gets a pretty big increase from overclocking. It was almost able to match the HD 4850 Crossfire’s GPU score, and because of the inflated CPU score, was able to increase its overall score by a lot.

Conclusion

The GTX275 is an unexpected surprise. With Nvidia’s highly optimized drivers, it was able to keep up with, and many times beat, the ATI HD 4890. Currently the Leadtek GTX275 can be had for around $250 USD (249.90 EUR). This puts it at the same price level as the HD 4890, but it is able to squeeze out more performance and has support for CUDA and other Nvidia technologies.

While it's still a little early to determine whether CUDA will become a complete industry standard, this card also supports parallel computing, which will allow the GPU to help the CPU with the operating system; this is currently a planned feature of Windows 7. Not only do you get parallel computing, you also get PhysX technology, which adds advanced physics simulation to all games that support it, making them look better.

The Leadtek GTX275 is also backed by Leadtek's 2-year warranty in Europe (3 years in the US). This is not the best warranty out there, but it's not too bad considering the card will probably be obsolete by the end of the warranty. The Leadtek GTX275 is a powerful card, able to stay ahead of its class in nearly everything, and its support for all of the latest Nvidia innovations makes it one of the best cards in its class.

We are trying out a new addition to our scoring system to provide additional feedback beyond a flat score. Please note that the final score isn’t an aggregate average of the new rating system.

- Performance 10

- Value 10

- Quality 10

- Warranty 8

- Features 8

- Innovation 8

Pros:

+ Great Price

+ Great Performance

+ 240 Stream Processors

Cons:

– Autofan Needs Adjustment

– Larger Than The HD 4890

With a final score of 8.5 out of 10 the Leadtek GTX275 receives the Bjorn3D Seal of Approval.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
