Famous for their non-reference video card designs, Gigabyte announces their Nvidia GTS 250, with Zalman cooling and native HDMI support.

INTRODUCTION

With all the video cards on the market today, which is the right one for you? Even on cards with the same GPU, you have lots of different choices. Clock speeds, outputs, fan noise, cooling efficiency, even PCB color! Gigabyte has long been famous for their non-reference design cards. They take a video card and make it their own.

They’ve done it again with the latest GTS 250. They’ve added Zalman cooling for lower noise and better cooling, and they’ve put an HDMI port right on the card, so there’s no HDTV dongle to mess with here. They’ve used a 2oz copper PCB, Tier 1 Samsung/Hynix memory, solid capacitors, lower RDS(on) MOSFETs, and ferrite core chokes. In addition, they’ve overclocked the memory to 2.2GHz.

The GTS 250 is a recent update to the 9800GTX+ card. The 512MB version is essentially a renamed 9800GTX+ (Nvidia has even stated that the two can be run together in SLI), while the 1GB version of the GTS 250 is built on the 55nm process, which produces less heat and consumes less energy.

Nvidia also managed to keep the price down with this card: it retails for $129 for the 512MB version and $149 for the 1GB version. Gigabyte’s version of the 1GB card retails for $139.

About GIGABYTE

1986 / 7,070: As of 2009, GIGABYTE has built a worldwide network of 7,070 employees on almost every continent to offer the most thorough and timely customer care. GIGABYTE truly believes delighted customers are the basis for a successful brand.

100%: GIGABYTE aims for nothing but excellence. With its outstanding products and designs, GIGABYTE has received numerous professional awards and media recognition over the years. Worth mentioning is GIGABYTE’s unmatched record of having 100% of its entries qualify for the Taiwan Excellence Award in 11 consecutive years of entry.

1/10: At least 1 in 10 PCs around the world uses a GIGABYTE motherboard.

M1022: The GIGABYTE Booktop M1022 is equipped with a 10.1-inch LED-backlit screen and a docking station exclusive to GIGABYTE. By attaching the M1022 to this docking station, which is also a battery charger, users can turn the M1022 into a desktop while recharging the Booktop’s battery. Shaped like a book and slim enough to take up little space, the M1022 is a fashionable home accessory with a 92% full-size keyboard and 17.5 mm key pitch for a comfortable typing experience.

No.1 2oz: GIGABYTE leads the industry with high-quality, innovative motherboard design, including the latest Ultra Durable 3™ technology, which doubles the amount of copper in the power and ground layers of the PCB. A 2oz copper layer design also provides improved signal quality and lower EMI (electromagnetic interference), for better system stability and greater overclocking headroom. GIGABYTE is committed to delivering motherboards of the highest quality and leading design.

180°: In June 2008, GIGABYTE launched the market’s first touch-screen, swivel-screen mobile PC, the M912. Built with innovative technology and a minimalist design for carefree mobile lifestyles, and aimed at professional and personal users with different budgets, the M912 features a touch screen with stylus and a 180-degree swivel design that transforms it into a Tablet PC. Combining notebook and tablet PCs, the M912 delivers multiple modes of use for convenience and has led the industry to follow.

Every day, GIGABYTE aims to “Upgrade Your Life” by building a global network that honors its commitment to customers worldwide. More importantly, GIGABYTE wants all users to see and feel the brand through its products and through every touch point between GIGABYTE and its users.

SPECIFICATIONS

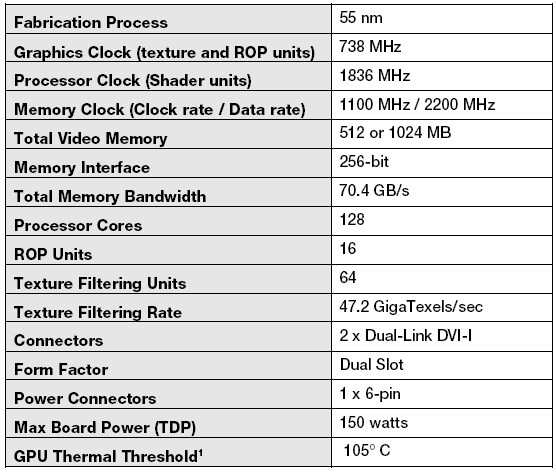

We’ll start out with a comparison between the GTS 250 and the other two cards that are at the same relative price point.

Specifications

| GPU | 9800 GTX+ | GIGABYTE GTS 250 1 GB | HD 4850 |
|---|---|---|---|
| GPU Frequency | 740 MHz | 738 MHz | 750 MHz |
| Memory Frequency | 1100 MHz | 1100 MHz | 993 MHz |
| Memory Bus Width | 256-bit | 256-bit | 256-bit |
| Memory Type | GDDR3 | GDDR3 | GDDR3 |
| # of Stream Processors | 128 | 128 | 800 |
| Texture Units | 64 | 64 | 40 |
| ROPs | 16 | 16 | 16 |
| Bandwidth (GB/sec) | 70.4 | 70.4 | 63.6 |
| Process | 55nm | 55nm | 55nm |
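The bandwidth row follows directly from the memory clock and bus width. Here is a quick sketch of that arithmetic in Python, assuming GDDR3’s double data rate (two transfers per memory clock, which is also where the “2.2GHz” effective memory speed comes from: 1100 MHz × 2). The helper name `memory_bandwidth_gbs` is ours, purely for illustration.

```python
def memory_bandwidth_gbs(mem_clock_mhz: float, bus_width_bits: int,
                         transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in GB/s (10^9 bytes per second).

    GDDR3 is double data rate, so it moves data twice per memory clock
    (transfers_per_clock=2). Bandwidth = clock (MHz) * transfers per clock
    * bus width in bytes, divided by 1000 to go from MB/s to GB/s.
    """
    bytes_per_transfer = bus_width_bits / 8
    return mem_clock_mhz * transfers_per_clock * bytes_per_transfer / 1000


# Memory clock (MHz) and bus width (bits) from the specifications table.
cards = {
    "9800 GTX+": (1100, 256),
    "GIGABYTE GTS 250 1 GB": (1100, 256),
    "HD 4850": (993, 256),
}

for name, (clock, width) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(clock, width):.1f} GB/s")
```

Run as-is, this prints 70.4 GB/s for both GeForce cards and 63.6 GB/s for the HD 4850, matching the table.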

And here’s a quick look at some more detailed specifications, at least for the reference card. Because Gigabyte’s version is non-reference, you don’t get the reference card’s two dual-link DVI-I connectors; more on that later.

FEATURES

Dual-link DVI: Able to drive two of the industry’s largest and highest-resolution flat-panel displays, up to 2560×1600.

SLI™ Technology: Delivers up to 2X the performance of a single GPU configuration for unparalleled gaming experiences by allowing two graphics cards to run in parallel. The must-have feature for performance PCI Express graphics, SLI dramatically scales performance on 60 top PC games.

RoHS Compliant: As a citizen of the global village, GIGABYTE strives to be a pioneer in environmental care. We promise the whole Earth that our products do not contain any of the restricted substances in the concentrations and applications banned by the RoHS Directive, and that they can withstand the higher temperatures required for lead-free solder. One Earth and GIGABYTE Cares!

Windows Vista®: Enjoy powerful graphics performance, improved stability, and an immersive HD gaming experience on Windows Vista. The driver software is designed for quick setup of graphics, video, and multiple displays, and automatically configures optimal system settings for lifelike DirectX 10 gaming and the visually stunning Windows Aero™ user interface.

OpenGL 2.1® Optimizations: Ensure top-notch compatibility and performance for all OpenGL 2.1 applications.

Shader Model 4.1: Shader Model 4.1 adds support for indexed temporaries, which can be quite useful for certain tasks. Regular direct temporary access is preferable in most cases. One reason is that indexed temporaries are hard to optimize: the shader optimizer may not be able to identify optimizations across indexed accesses that could otherwise have been detected. Furthermore, indexed temporaries tend to increase register pressure a lot. An ordinary shader that contains, for instance, a few dozen variables will seldom consume a few dozen temporaries in the end; it is likely to be optimized down to a handful, depending on what the shader does. This is because the shader optimizer can easily track all variables and reuse registers. This is typically not possible for indexed temporaries, so the register pressure of the shader may increase dramatically. This can be detrimental to performance, as it reduces the hardware’s ability to hide latencies.
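To make that register-pressure argument concrete, here is a toy model in Python. It is a simplification we are assuming for illustration, not how any real shader compiler allocates registers: each variable is a scalar with a live range (the first and last instruction that use it), an allocator that can see every variable needs only as many registers as there are values alive at the same time, and an indexable array read through a runtime index is conservatively kept whole for the entire shader. The function names are hypothetical.

```python
from typing import Dict, List, Tuple

# Toy model (assumption): live range = (first instruction index, last instruction index).
LiveRange = Tuple[int, int]


def registers_needed(live_ranges: Dict[str, LiveRange]) -> int:
    """Peak number of values alive at once: what a perfect allocator needs."""
    events: List[Tuple[int, int]] = []
    for first_use, last_use in live_ranges.values():
        events.append((first_use, +1))     # value becomes live at its first use
        events.append((last_use + 1, -1))  # its register is free after the last use
    live = peak = 0
    # Sorting puts a release (-1) before an acquire (+1) at the same index,
    # so a freed register can be reused immediately.
    for _, delta in sorted(events):
        live += delta
        peak = max(peak, live)
    return peak


def registers_needed_with_indexed_array(live_ranges: Dict[str, LiveRange],
                                        array_len: int) -> int:
    """Same shader, plus an indexable array of array_len elements.

    With a runtime index, the optimizer cannot prove which element is dead,
    so in this toy model every element stays allocated for the whole shader.
    """
    return registers_needed(live_ranges) + array_len


# 24 short-lived temporaries: variable i is used at instructions i and i + 1.
direct = {f"t{i}": (i, i + 1) for i in range(24)}
print(registers_needed(direct))                     # 2
print(registers_needed_with_indexed_array({}, 24))  # 24
```

The same 24 values cost two registers when the allocator can track each one, but all 24 when they sit in an array it can only address indirectly.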

PCI-E 2.0: With PCI Express® 2.0 support, you are ready for the most demanding graphics applications, since it allows up to twice the throughput of current PCI Express® cards. PCIe 2.0 doubles the per-lane signaling rate of the bus standard from 2.5 GT/s (PCIe 1.1) to 5 GT/s.

HDCP Support: High-Bandwidth Digital Content Protection (HDCP) is a form of copy protection technology designed to prevent transmission of non-encrypted high-definition content as it travels across DVI or HDMI digital connections.

HDMI Ready: High Definition Multimedia Interface (HDMI) is a new interface standard for consumer electronics devices that combines HDCP-protected digital video and audio into a single, consumer-friendly connector.

PureVideo™ HD: Available on HD DVDs and Blu-ray discs, high-definition movies are bringing an exciting new video experience to PC users. NVIDIA® PureVideo™ HD technology lets you enjoy cinematic-quality HD DVD and Blu-ray movies with low CPU utilization and power consumption, allowing higher quality movie playback and picture clarity. PureVideo HD technology provides a combination of powerful hardware acceleration, content security, and integration with movie players, plus all the features found in PureVideo.

CUDA Technology: NVIDIA® CUDA™ technology unlocks the power of the hundreds of cores in your NVIDIA® GeForce® graphics processor (GPU) to accelerate some of the most performance-hungry computing applications. CUDA™ technology has already been adopted by thousands of programmers to speed up those performance-hungry applications.

PhysX Technology: NVIDIA® PhysX™ is the next big thing in gaming! The best way to get real-time physics, such as explosions that cause dust and debris, characters with lifelike motion, or cloth that drapes and tears naturally, is with an NVIDIA® PhysX™-ready GeForce® processor.

PICTURES & IMPRESSIONS

Here’s a good look at the packaging from Gigabyte. The back of the box describes the features and specifications pretty well, and you even get a nice set of thermal images showing the difference between reference-cooled cards and the Zalman-cooled edition.


Inside you’ll find the video card packaged up all nice and tight with the normal closed cell foam surrounding it.

Well, it’s not exactly an expansive bundle, but it does include everything necessary to get started. If you need a second DVI port, an HDMI-to-DVI adapter is included. Native HDMI support is great to have, especially with the wide array of monitors that include HDMI inputs nowadays.


As you can see, the Zalman fan really opens the card up into plain view, allowing you to see the blue PCB that is a Gigabyte favorite.

Here’s a good look at the back of the PCB. Pretty normal stuff here: you can see the memory laid out across the top and to the left of the four GPU cooler screws, just like on the G92, which makes sense for a G92b.

This picture makes it pretty evident why there is only one DVI-I output on this card: with the HDMI output there, there just isn’t room for another. The included adapter will take care of that, however, should the need arise.


Even with a small footprint, the Zalman cooler sticks out high enough to be considered a double-slot cooler.


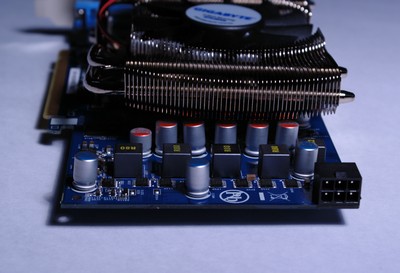

Here you get a good look at all those solid capacitors, along with the single 6-pin PCIe power connector. Be careful with this card, as it’d be a shame to knock one of those caps off.

If you look closely, you’ll see the downside to a non-reference, HDMI-ready card: no Tri-SLI.

TESTING & METHODOLOGY

To test the Gigabyte GTS 250, we used a fresh install of Vista 64. We loaded the updated drivers for all components, then downloaded and installed all available updates for Vista. Once all that was accomplished, we installed all the games and utilities necessary for testing the card.

When we booted back up, we ran into no issues with the card or any of the games. The only error encountered was the recurring one of having the wrong game disk inserted, due to switching benches back and forth in the middle of the night. It’s a rough life when your biggest problem is the wrong disk being in the drive, believe me. All testing was completed at stock clock speeds on all cards.

| GIGABYTE GTS 250 TEST RIG | |
|---|---|
| Case | Danger Den Tower 26 |
| Motherboard | EVGA 790i Ultra |
| CPU | Q6600 @ 3.0 GHz (1.400 V) |
| Memory | |
| GPU | Gigabyte GTS 250 OC; Leadtek WinFast GTS 250; EVGA 8800GT SC 714/1779/1032; GTS 250 SLI |
| HDD | 2 × WD Caviar Black 640GB, RAID 0 |
| PSU | In-Win Commander 1200W |
| Cooling | Laing DDC 12V 18W pump; MC-TDX 775 CPU block; Ione full-coverage GPU block (G92); Black Ice GTX480 radiator |

Synthetic Benchmarks & Games

| Benchmark |
|---|
| 3DMark06 v. 1.10 |
| 3DMark Vantage |
| Crysis v. 1.2 |
| World in Conflict |
| FarCry 2 |
| Crysis Warhead |

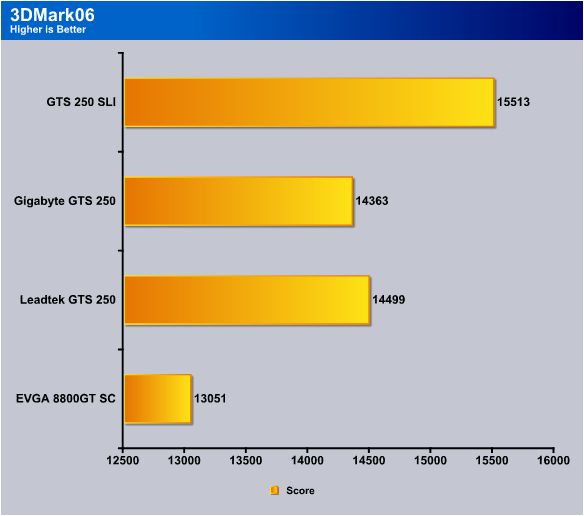

3DMARK06 V. 1.1.0

As you can see, the Gigabyte version of the GTS 250 comes in just behind the Leadtek. This is due more to variation in 3DMark06 scores than to differences between the cards themselves: expect a 200-300 point fluctuation between 3DMark06 runs on the same system.
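One way to act on that caveat is to run the benchmark several times per card and compare the averages against the expected noise band. A minimal sketch in Python, assuming the 300-point upper bound quoted above as the noise band; `compare_runs` and the averaging approach are our illustration, not a feature of 3DMark06.

```python
from statistics import mean
from typing import Sequence, Tuple


def compare_runs(runs_a: Sequence[float], runs_b: Sequence[float],
                 noise_band: float = 300.0) -> Tuple[float, str]:
    """Compare two cards using repeated 3DMark06 runs on the same system.

    Returns the difference of the mean scores (a minus b) and a verdict.
    A gap no larger than the run-to-run noise band is not evidence that
    one card is faster than the other.
    """
    diff = mean(runs_a) - mean(runs_b)
    if abs(diff) <= noise_band:
        verdict = "within run-to-run noise"
    else:
        verdict = "likely a real difference"
    return diff, verdict
```

Feed it each card’s list of scores from several back-to-back runs; a single run per card can’t separate the cards from the benchmark’s own scatter.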

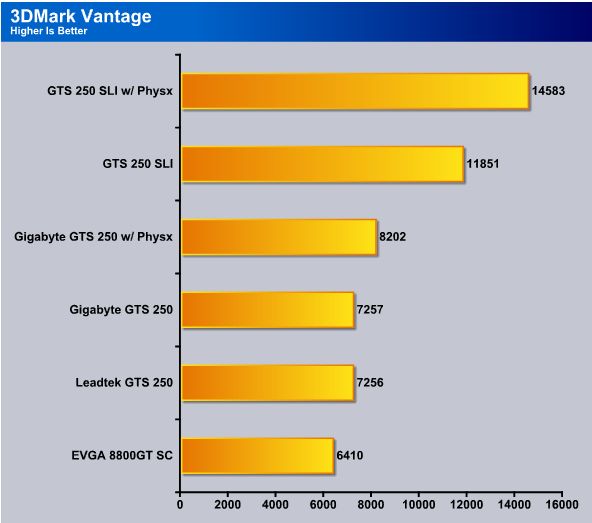

3DMark Vantage

www.futuremark.com/benchmarks/3dmarkvantage/features/

The newest video benchmark from the gang at Futuremark. This utility is still a synthetic benchmark, but one that more closely reflects real world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently, there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, which puts the ATI brand at a disadvantage. With the PhysX driver installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. The Driver Approval Policy for 3DMark Vantage states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations, or are they perfectly within their rights since they own Ageia and all its IP? I think this point will quickly become moot once Futuremark releases an update to the test.

The Vantage benchmark is much more consistent than 3DMark06. The Gigabyte card came out 1 point higher, and the PhysX scores (not shown for the Leadtek card) were identical.

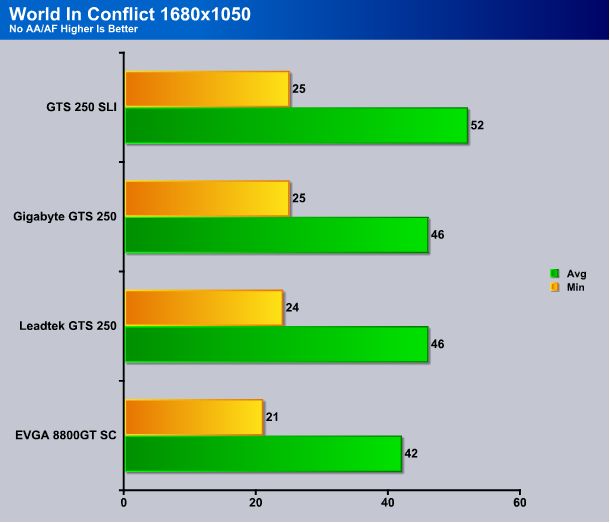

World in Conflict Demo

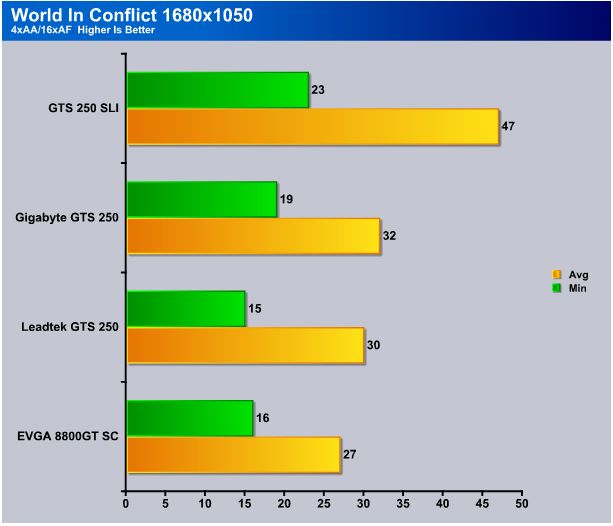

Both cards performed almost identically. At this resolution the game seems to be CPU-limited rather than GPU-limited, though SLI did pick up a few FPS on the average score.

Turning on the AA and AF at 1680×1050 gets us out of the CPU limitations and really stresses the GTS 250. The Gigabyte card performed noticeably better than the Leadtek flavor in this test, perhaps due to the factory overclocked memory.

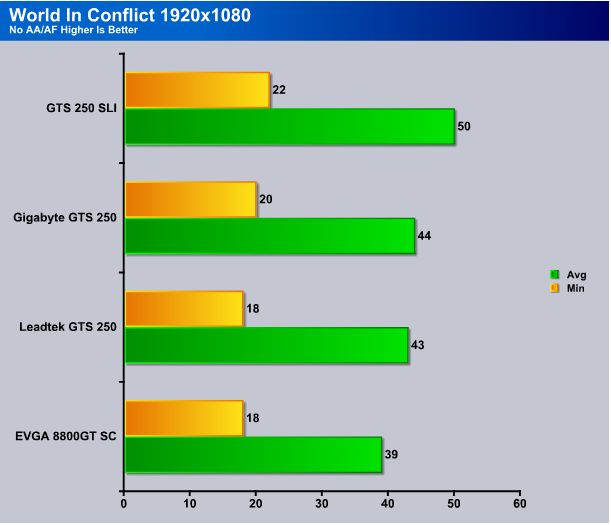

At 1920×1080 without any AA or AF, the SLI setup lost only a few FPS compared with the lower resolution while still maintaining a respectable average. The two GTS 250 cards also performed well individually, with the Gigabyte taking a slight advantage.

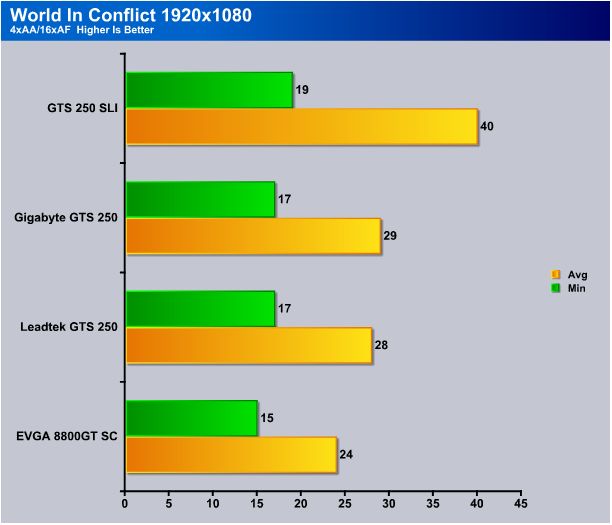

This resolution/AA/AF combo is what really separates performance-series cards from enthusiast-level ones. SLI did fairly well here; remember that if you turn down just a little of the eye candy, the game is entirely playable at this resolution.

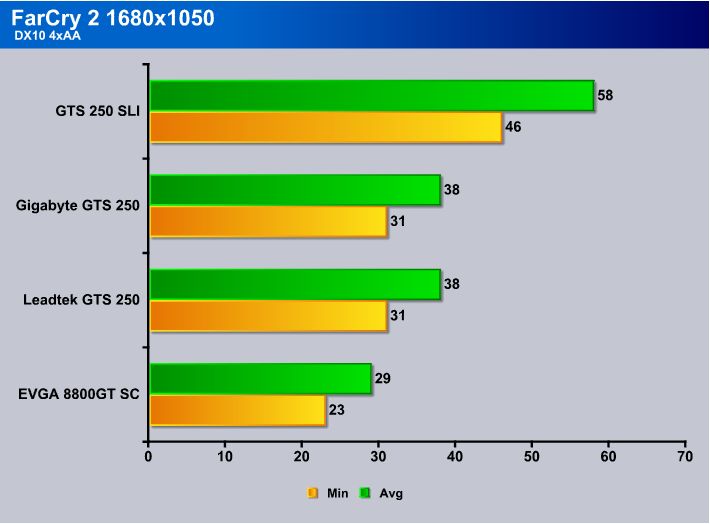

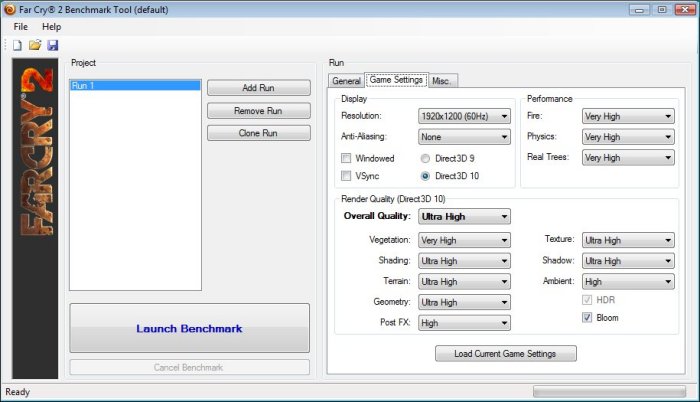

Far Cry 2

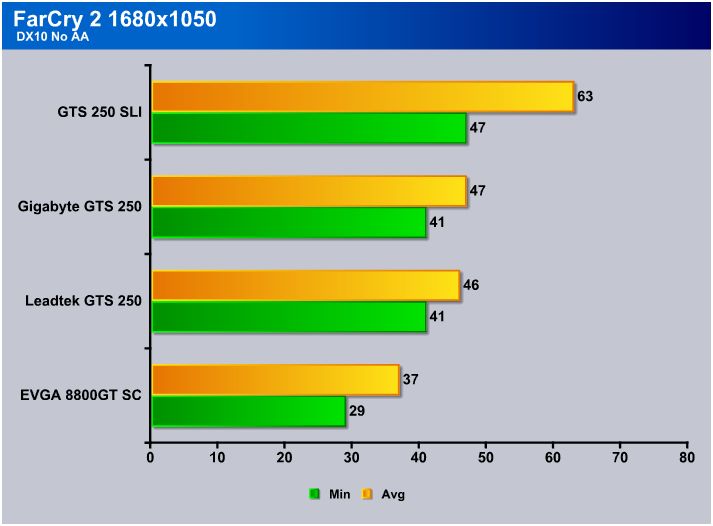

This test started off very well, with all the GTS 250 cards maintaining fully playable frame rates. SLI scaling is excellent in FC2: pushing the minimum FPS up to the average FPS of a single card is no small feat.
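Scaling like this can be put into a number: divide the SLI frame rate by the single-card frame rate to get the speedup, then divide by the number of cards to get the efficiency. A minimal sketch in Python; the actual chart values are in the images above, so the inputs are whatever you read off them, and `sli_scaling` is a hypothetical helper.

```python
from typing import Tuple


def sli_scaling(single_fps: float, sli_fps: float, gpus: int = 2) -> Tuple[float, float]:
    """Return (speedup, efficiency) of a multi-GPU setup over one card.

    speedup    = multi-GPU FPS / single-card FPS
    efficiency = speedup / number of GPUs (1.0 would be perfect linear scaling)
    """
    if single_fps <= 0 or gpus <= 0:
        raise ValueError("single_fps and gpus must be positive")
    speedup = sli_fps / single_fps
    return speedup, speedup / gpus
```

Comparing SLI minimum FPS against single-card average FPS, as we do above, is a different and stricter check: it asks whether the worst moments with two cards are as smooth as the typical moments with one.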

Even with AA turned on, we remained entirely playable at this resolution. The SLI results show some CPU limitation on the minimum FPS side, as the minimum didn’t drop at all from the previous test.

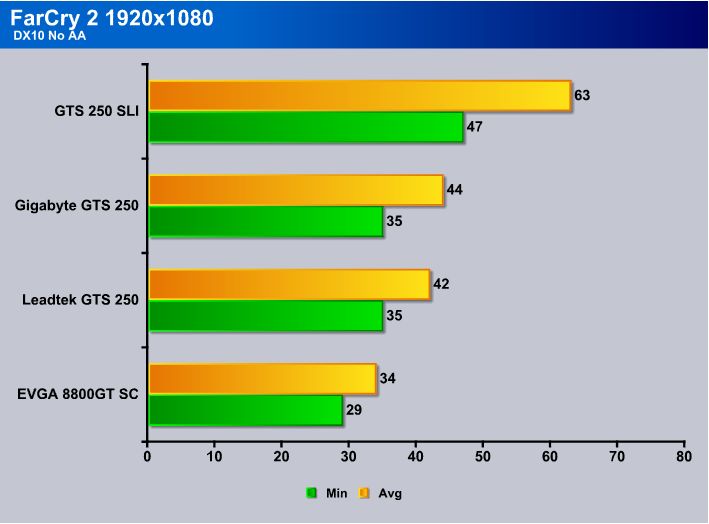

While we lost a few FPS here on both cards, both do a great job of maintaining playability. As we go through more testing, the CPU seems more and more to be the limiting factor when running the SLI testing, at least for FarCry 2.
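The reasoning here is a common rule of thumb: if raising the GPU load (higher resolution, AA turned on) barely moves the frame rate, the GPU wasn’t the bottleneck at the lighter setting. A minimal sketch of that check in Python; the 5% tolerance is our assumption rather than any standard threshold, and `looks_cpu_limited` is a hypothetical name.

```python
def looks_cpu_limited(fps_light: float, fps_heavy: float,
                      tolerance: float = 0.05) -> bool:
    """Rule-of-thumb CPU bottleneck check.

    fps_light: frame rate at the lighter GPU setting
    fps_heavy: frame rate after increasing GPU load (resolution, AA)
    Returns True if the frame rate dropped by no more than `tolerance`
    (as a fraction), suggesting the GPU was not the limiting factor.
    """
    if fps_light <= 0:
        raise ValueError("fps_light must be positive")
    drop = (fps_light - fps_heavy) / fps_light
    return drop <= tolerance
```

It is only a heuristic: a flat result is consistent with a CPU limit, but driver or engine frame caps can produce the same pattern.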

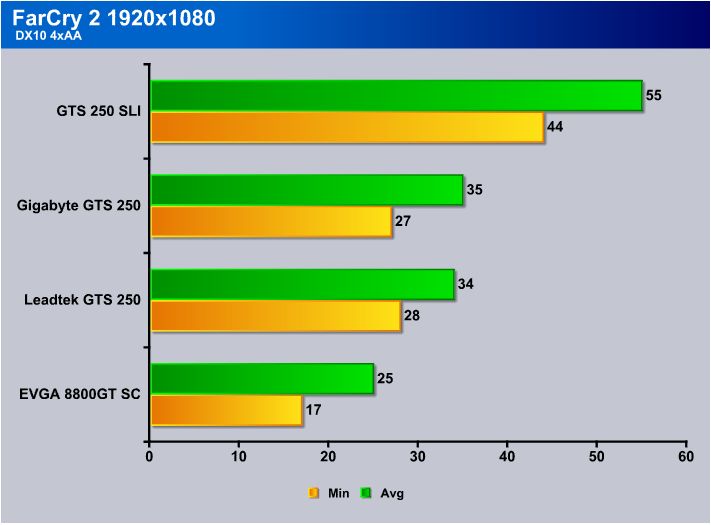

Down to our last FC2 test, the cards finally get pushed below the playable 30 FPS mark. High resolutions with AA turned on are supposed to be stressful, and both cards did an excellent job maintaining their average frame rates.

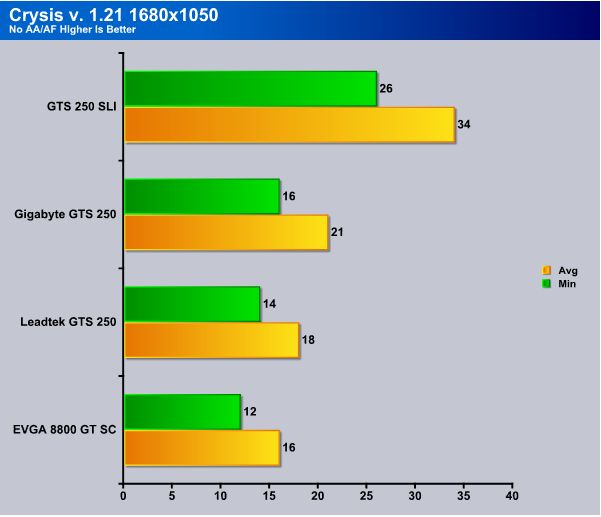

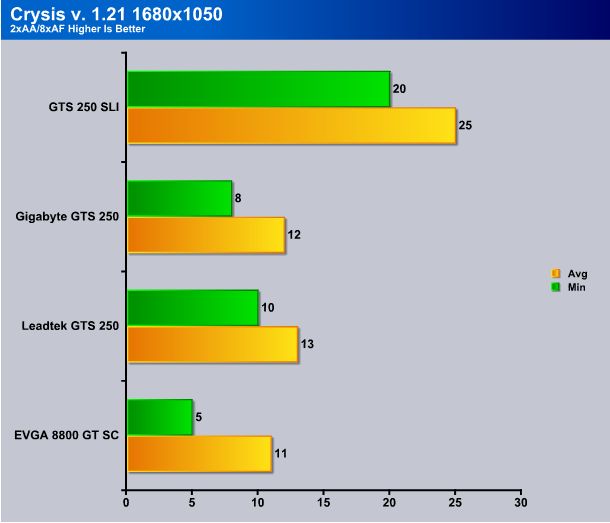

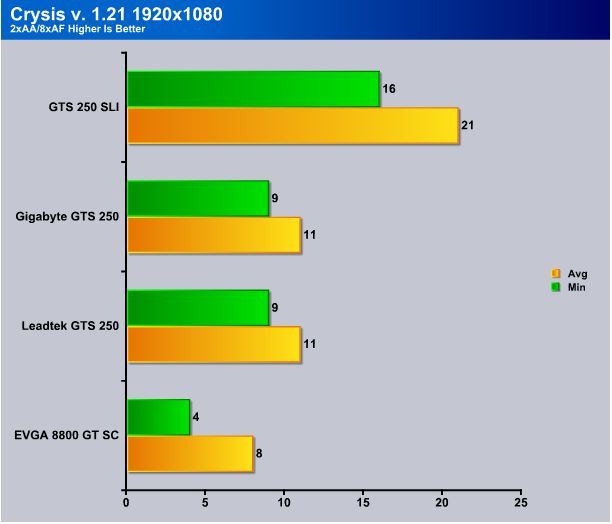

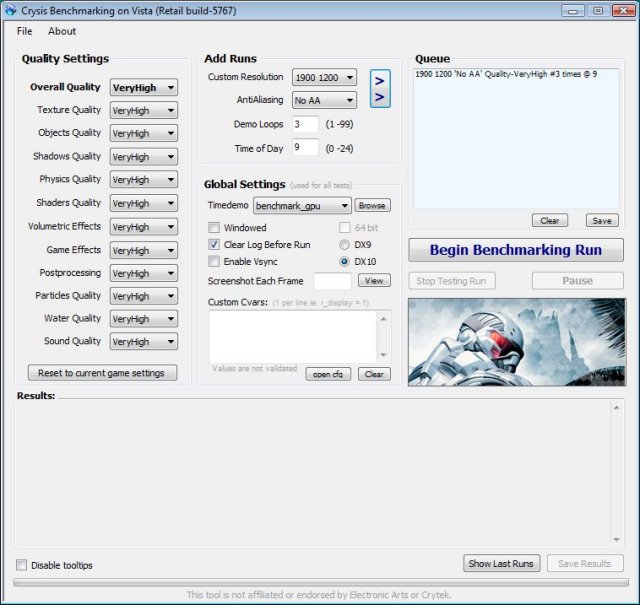

Crysis v. 1.21

Okay, right off the bat: Crysis is an extremely stressful benchmark and will continue to be used for quite some time. Keep in mind that to play this game, or at least run the benchmark, at maxed-out settings while maintaining 30 FPS, you’re going to spend at least $600 on GPUs, probably closer to $1000. These results are actually quite good considering these two cards can be had for under $300 USD.

On the plus side, the SLI setup is still holding above 20 FPS; lose a little bit of the eye candy and you’re completely playable again. Our Gigabyte card comes in a little behind the Leadtek card here, by 2 FPS.

To prove my point about the eye candy, we actually gained a couple of FPS back at the higher resolution with AA/AF off. If you were to drop the shadows and water quality from Very High to High or even Medium, the SLI rig would be completely playable. It’d take a few more settings turned down to bring a single card into the playable range, but not many more.

Again, this is the most stressful resolution/eye candy combo, and it shows. The SLI test rig drops below 20 FPS for the first time, and the single cards are down in the single digits. They’re not as far down as the 8800GT, of course, but they’re down there a ways.

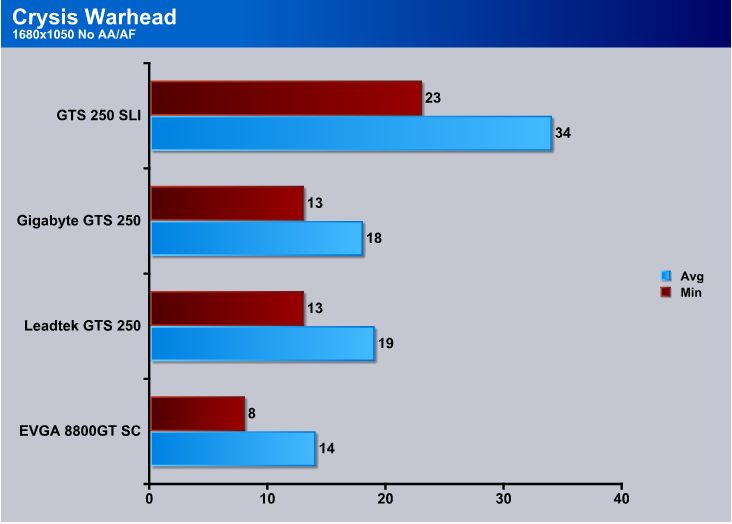

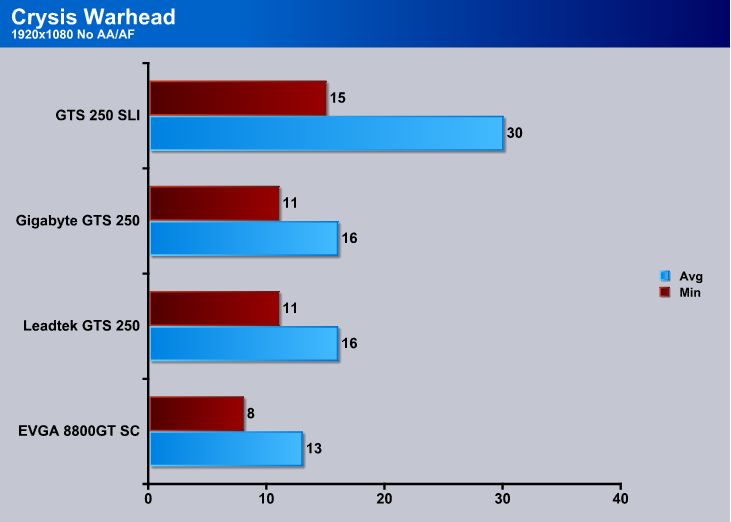

CRYSIS WARHEAD

Crysis Warhead is the much anticipated sequel of Crysis, featuring an updated CryENGINE™ 2 with better optimization. It was one of the most anticipated titles of 2008.

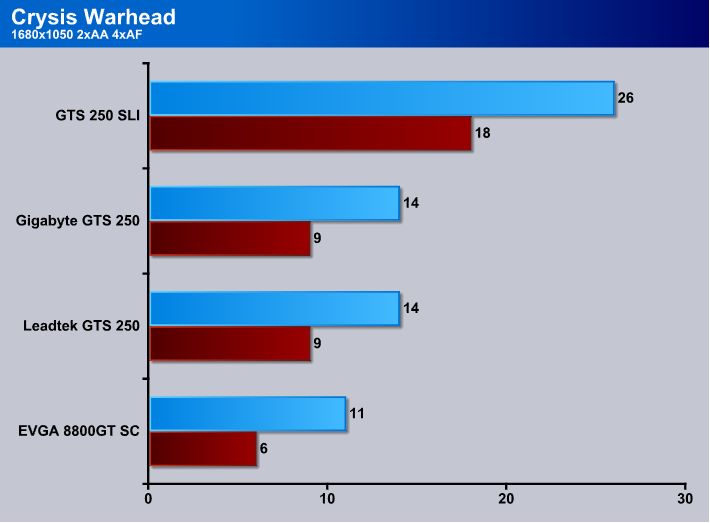

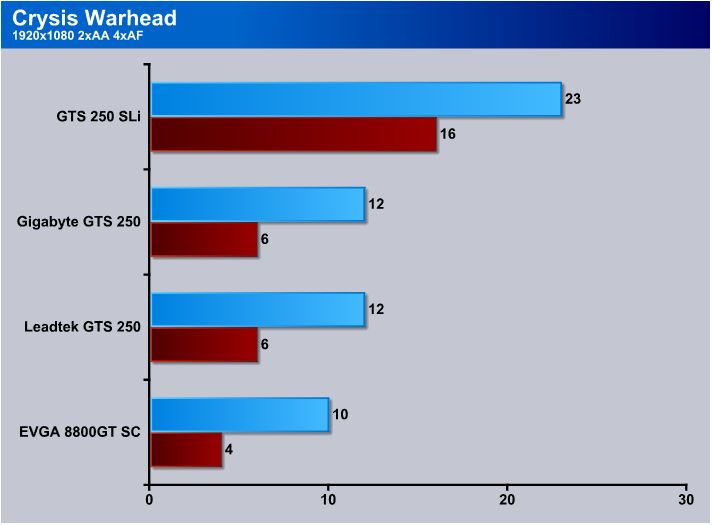

Like Crysis, Warhead is extremely hard on GPUs. You can see this above, with our individual GTS 250s starting out under 15 FPS on the minimum side. The 8800GT doesn’t have a prayer. SLI does pretty well here; drop a little eye candy and you’re playable again.

Stepping up to the higher resolution, still with the AA/AF turned off, the SLI setup takes a pretty big hit, bringing the minimum FPS down to 15. Still, take notice that the SLI setup keeps the minimum FPS at about the same level as the average framerate of the individual cards, showing decent scaling.

Back at 1680×1050, this time with AA/AF turned on, the cards are by no means playable, but did anybody really expect them to be at this point? SLI does okay here; I have to wonder how it would do in Tri-SLI.

This is perhaps the most demanding test among the entire benchmarking suite of tools that we use here at Bjorn3D.com. These cards perform far better than this test would lead you to believe. Remember what I said about $1000 worth of GPUs to run over 30FPS? Well you’d still be pushing that boundary here.

S.T.A.L.K.E.R. Clear Sky

S.T.A.L.K.E.R. Clear Sky is the latest game from the Ukrainian developer GSC Game World. The game is a prequel to the award-winning S.T.A.L.K.E.R. Shadow of Chernobyl, and it expands on the idea of a thinking man’s shooter. There are many ways to accomplish your mission, but each requires a meticulous plan, and some thinking on your feet if that plan takes a turn for the worse. S.T.A.L.K.E.R. is a game that will challenge you with intelligent AI and reward you for beating those challenges. GSC Game World recently released an automatic tester for the game, making it easier than ever to obtain an accurate benchmark of Clear Sky’s performance.

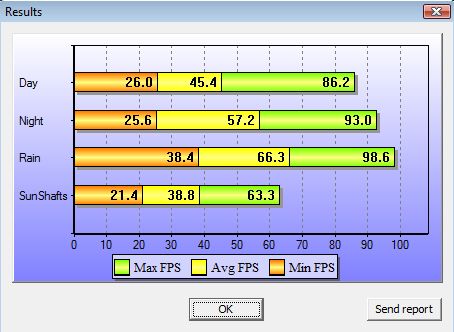

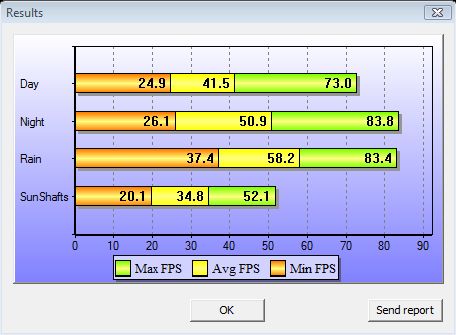

1680×1050 0xAA

The SunShafts test is typically the most stressful for our cards, while the Rain test seems to be the easiest; in this case it was the only test that maintained a frame rate above 30 FPS. The averages are all highly playable, while the minimum frame rates will lead to a few issues.

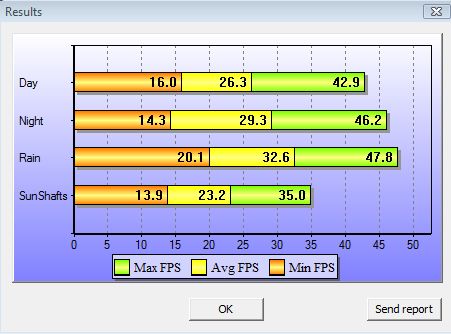

1680×1050 4xAA

With 4xAA turned on, this benchmark brings the GTS 250 below playable levels, spending enough time under 30 FPS to make playing undesirable.
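Our charts report minimum, average, and maximum frame rates, but “time spent under 30 FPS” can also be measured directly from a per-frame log. A minimal sketch in Python, assuming a list of frame times in milliseconds (the Clear Sky tester’s own log format isn’t shown here, so the input format is an assumption and `share_of_time_below` is a hypothetical name). Weighting by time means one long hitch counts for more than one quick frame.

```python
from typing import Sequence


def share_of_time_below(frame_times_ms: Sequence[float],
                        fps_threshold: float = 30.0) -> float:
    """Fraction of total run time spent on frames slower than fps_threshold.

    A frame counts as "below threshold" if it took longer than
    1000 / fps_threshold milliseconds (33.3 ms for 30 FPS).
    """
    limit_ms = 1000.0 / fps_threshold
    total = sum(frame_times_ms)
    slow = sum(t for t in frame_times_ms if t > limit_ms)
    return slow / total if total else 0.0
```

For example, frame times of 20, 20, 40 and 20 ms give 0.4: only one frame in four is slow, but it accounts for 40% of the elapsed time.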

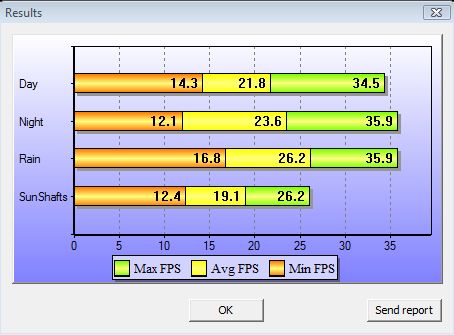

1920×1080 0xAA

At 1920×1080 the game stutters somewhat, but still manages to maintain a high average framerate, implying that it dips below 30FPS a few times in each category but is for the most part playable.

1920×1080 4xAA

Anti-aliasing strikes again! This test brought the card to its knees, much like the Crysis benchmark, though the beating was not quite so severe. The game is not playable at this resolution, barely reaching a maximum frame rate over 30 FPS. Even the Rain test, which has been pretty good to us, laughed in our faces on this one.

TEMPERATURES

To get our temperature readings, we ran 3DMark Vantage looping for 30 minutes to get the load temperature. To get the idle temperature, we let the machine idle at the desktop for 30 minutes with no background tasks that would drive the temperature up. Please note that this is on a DD Tower 26, which has a lot of room inside, so your chassis and cooling will affect the temps you’re seeing.

| GPU Temperatures | Idle | Load |
|---|---|---|
| Gigabyte GTS 250 OC | 41°C | 59°C |

We were extremely pleased with the temps shown by the Gigabyte GTS 250. The Zalman cooler certainly kept temps down.

POWER CONSUMPTION

To get our power consumption numbers, we plugged in our Kill A Watt power measurement device and took the idle reading at the desktop during our temperature readings. We left it at the desktop for about 15 minutes and took the idle reading. Then, while 3DMark Vantage was looping for 30 minutes, we watched for the peak power consumption and recorded the highest usage.

GPU Power Consumption

| GPU | Idle | Load |
|---|---|---|
| Gigabyte GTS 250 OC | 213 Watts | 294 Watts |
| Leadtek WinFast GTS 250 1GB | 224 Watts | 322 Watts |
| Leadtek WinFast GTS 250 SLI | 254 Watts | 427 Watts |
| EVGA GTS-250 1 GB Superclocked | 192 Watts | 283 Watts |
| XFX GTX-285 XXX | 215 Watts | 322 Watts |
| BFG GTX-295 | 238 Watts | 450 Watts |
| Asus GTX-295 | 240 Watts | 451 Watts |
| EVGA GTX-280 | 217 Watts | 345 Watts |
| EVGA GTX-280 SLI | 239 Watts | 515 Watts |
| Sapphire Toxic HD 4850 | 183 Watts | 275 Watts |
| Sapphire HD 4870 | 207 Watts | 298 Watts |
| Palit HD 4870×2 | 267 Watts | 447 Watts |

Total System Power Consumption

Power consumption on this card is outstanding. Keep in mind that most of these readings were taken on a Core i7 system, while the GTS 250 was run on a Q6600 system, which uses more power. Look at the difference between load and idle to get a good idea of the power requirements. The Gigabyte GTS 250 came in almost 30 watts below the Leadtek at load on the same system.
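The load-minus-idle delta can be computed straight from the power table above. A short sketch in Python using a few of the measured rows; keep in mind that a Kill A Watt measures the whole system at the wall, so these deltas include CPU load and power-supply losses, not just the graphics card, and the HD 4850 row comes from the Core i7 system. `load_delta` is a hypothetical helper.

```python
# Total-system readings (idle W, load W) copied from the power table.
readings = {
    "Gigabyte GTS 250 OC": (213, 294),
    "Leadtek WinFast GTS 250 1GB": (224, 322),
    "Leadtek WinFast GTS 250 SLI": (254, 427),
    "Sapphire Toxic HD 4850": (183, 275),
}


def load_delta(idle_w: int, load_w: int) -> int:
    """Extra watts the whole system draws at load compared with idle."""
    return load_w - idle_w


for card, (idle, load) in readings.items():
    print(f"{card}: +{load_delta(idle, load)} W under load")

# Same test rig, same benchmark: compare the two single GTS 250 cards at load.
gigabyte_load = readings["Gigabyte GTS 250 OC"][1]
leadtek_load = readings["Leadtek WinFast GTS 250 1GB"][1]
print(f"Gigabyte vs. Leadtek at load: {leadtek_load - gigabyte_load} W less")  # 28 W
```

The Gigabyte card adds 81 W from idle to load against 98 W for the Leadtek, and draws 28 W less at load.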

OVERCLOCKING

What good would a video card review be without overclocking the thing? Not much, in our opinion. Most people that are looking into buying these cards will probably do a little overclocking anyhow, so we might as well show you what it’s capable of.

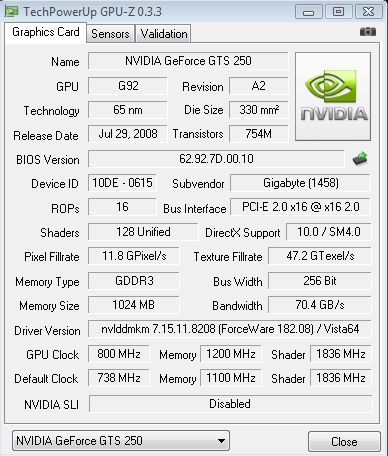

Not too shabby, eh? It didn’t overclock quite as well as the Leadtek, but an 800 MHz core is nothing to sneeze at. I couldn’t touch the shader clock on this card, however, as anything higher produced artifacts almost instantly. 1200 MHz GDDR3 on a GPU is always a nice little touch, though.
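To put those numbers in proportion, here is the arithmetic against the stock 738 MHz core and 1100 MHz memory clocks listed in the specifications table. A quick sketch in Python; `overclock_gain` is a hypothetical helper, and bandwidth is computed as memory clock × 2 (GDDR3 is double data rate) × 256-bit bus ÷ 8 bits per byte.

```python
def overclock_gain(stock_mhz: float, overclocked_mhz: float) -> float:
    """Percentage increase of an overclocked frequency over stock."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100


core = overclock_gain(738, 800)      # core clock, MHz
memory = overclock_gain(1100, 1200)  # GDDR3 memory clock, MHz

# Peak bandwidth at the overclocked memory speed, in GB/s.
bandwidth_gbs = 1200 * 2 * (256 / 8) / 1000

print(f"core +{core:.1f}%, memory +{memory:.1f}%, bandwidth {bandwidth_gbs:.1f} GB/s")
```

This prints `core +8.4%, memory +9.1%, bandwidth 76.8 GB/s`. Clock gains like these rarely translate one-for-one into frame rates, since other parts of the system (including the shader clock we left alone) also limit performance.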

Added Value Features

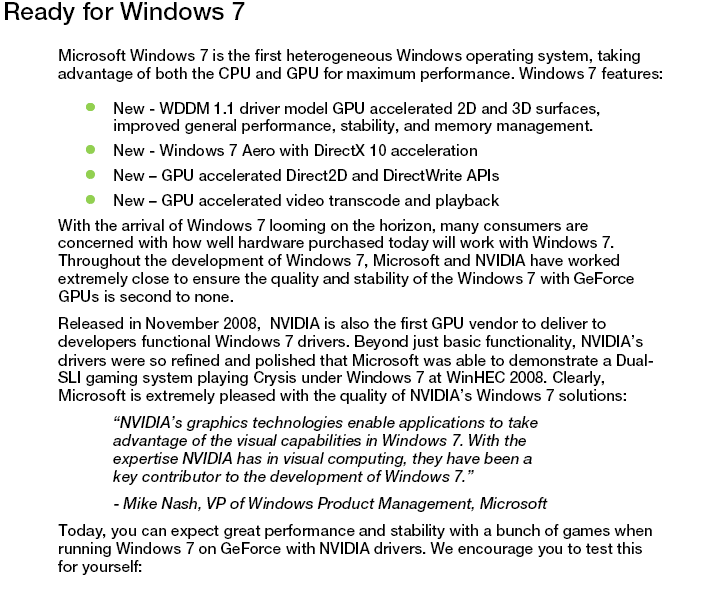

Windows 7

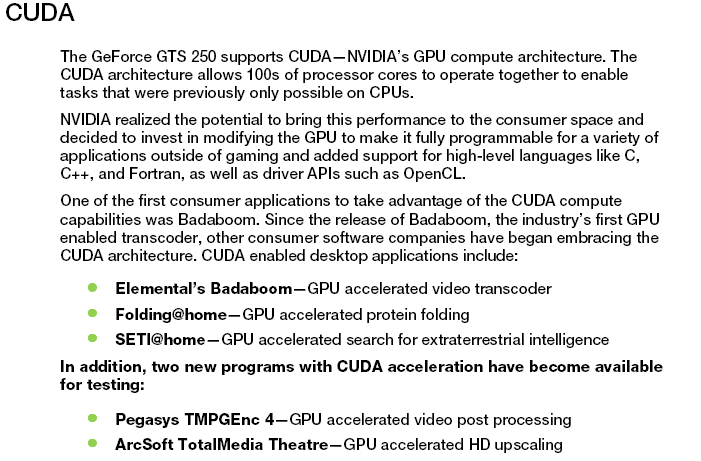

There are a few things we’d like to cover that don’t have a set place in the review. We mentioned earlier that we’d been meeting with Nvidia on a semi-regular basis to cover different topics.

We have it on good authority that in Windows 7 the core operating system will also, for the first time, take advantage of parallel computing: Windows 7 will be able to offload part of its tasks to the GPU. That’s a really exciting development, and we expect to see more of the computing load shifted to the GPU as Windows evolves. Given the inherent power of GPU computing, as anyone who has run Folding@home on a GPU knows, this opens up whole new vistas of computing possibilities. Who knows, we may see the day when the GPU does the bulk of the computing and the CPU just initiates the process. That is, of course, made possible by close cooperation between Nvidia and Microsoft, and it may be an early indication of widespread acceptance of parallel computing and CUDA.

The easiest and most coherent way to get you the information (we’ve had a hard few days coding and benching the GTS 250) is to clip it from the press release PDF file and give it to you unmolested.

That’s right: Nvidia and Microsoft are already working together and have delivered functional drivers for Nvidia GPUs running under Windows 7, which will be publicly available in early March.

CUDA

Two new CUDA applications are available for testing.

3D Vision

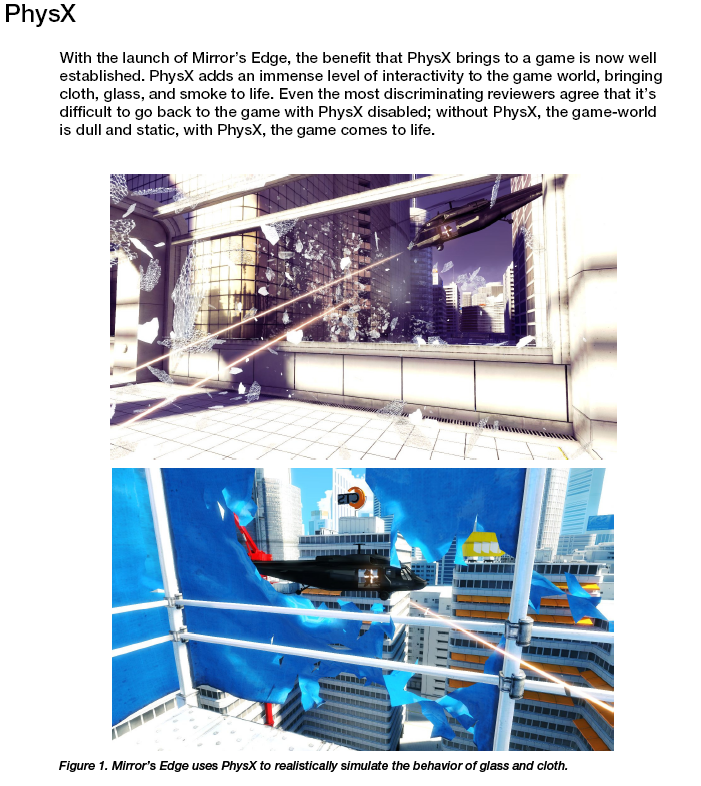

PhysX

CONCLUSION

All in all, the Gigabyte GTS 250 is an excellent card. Gigabyte has long been famous for its non-reference motherboards and video cards, and it has delivered another winner. The solid capacitors are a nice touch, as is the HDMI port on the card itself. Perhaps the feature that makes this card a better value than the rest of the GTS 250 series, however, is the Zalman heatsink/fan combo. Heat is always an issue in a modern PC, and many users shell out good money for aftermarket cooling solutions, which just add to the overall cost of the machine. Getting this cooler with the factory warranty intact (aftermarket coolers installed by the end user often void it) at the same price point as the reference card adds a lot of value; those Zalman coolers aren’t cheap, you know!

I came across two downsides while working with this card. The first one, and the most apparent, is the lack of Tri-SLI compatibility. The reference design includes this feature while the Gigabyte card does not, most likely due to the lack of space around the HDMI port for the Tri-SLI interface. The other problem is only having one DVI-I output. This is mitigated with the inclusion of an HDMI-DVI adapter, which was a nice touch.

One of the benefits of this card was its lower power consumption. It used almost 30 watts less than the Leadtek card on the same test rig; my guess is that this is due in part to the Zalman cooler, which is much more efficient and allows the fan to stay at 35%. The card also includes Gigabyte’s standard 3-year limited warranty. You can find them online for $149.

We are trying out a new addition to our scoring system to provide additional feedback beyond a flat score. Please note that the final score isn’t an aggregate average of the new rating system.

- Performance 7

- Value 8.5

- Quality 9

- Warranty 7

- Features 10

- Innovation 8.5

Pros:

+ Cool-running Zalman heatsink/fan

+ HDMI output on-board instead of a typical HDTV dongle

+ Extremely low power consumption

+ Ferrite core chokes

+ Solid capacitors

+ Overclocked memory

Cons:

– Exposed components due to aftermarket cooling solution

– G92 Core Variant

– Not Tri-SLI capable

– Only one DVI-I output

The Gigabyte 1 GB GTS 250 does an excellent job of keeping costs down while maximizing value for the end user. This card would be well suited to an HTPC because of its lower power consumption and small size. For its performance here today, it has received a final score of 7.5 out of 10 and the Bjorn3D.com Seal Of Approval.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
