INTRODUCTION

For the last couple of months, computer enthusiast forums around the world have been buzzing with discussion about the forthcoming release of NVIDIA’s new 8800 GT graphics card. We have read every imaginable rumor, from “The 8800 GT will be faster than the 8800 GTX” to “The 8800 GT will replace the 8800 GTS”, and all the speculation in between. Fast forward to today, and the NVIDIA 8800 GT is now reality rather than speculation!

In our review of the XFX 8800 GT Alpha Dog XXX Edition, we fully intend to either confirm or dispel many of the myths that have circulated about this graphics solution; “Graphics Myth Busters,” if you will. We have also changed some of the hardware, as well as the operating system, on our test system to bring you the most up-to-date and accurate results possible. These changes are discussed in detail in the “Testing” segment of the review. Please join us as we continue Bjorn3D’s tradition of bringing you not only the most, but the best graphics card reviews on the Net!

XFX: The Company

XFX®, a company well known not only to general computer consumers but also to the computer enthusiast community, operates on the following corporate philosophy: “XFX® dares to go where the competition would like to, but can’t. That’s because, at XFX®, we don’t just create great digital video components–we build all-out, mind-blowing, performance crushing, competition-obliterating video cards and motherboards.” “Oh, and not only are they amazing, you don’t have to live on dry noodles and peanut butter to afford them.”

FEATURES & SPECIFICATIONS

Features

- NVIDIA® unified architecture with GigaThread™ technology: Massively multi-threaded architecture supports thousands of independent, simultaneous threads, providing extreme processing efficiency in advanced, next generation shader programs.

- NVIDIA® Lumenex™ Engine: Delivers stunning image quality and floating point accuracy at ultra-fast frame rates.

- Full Microsoft® DirectX® 10 Support: World’s first DirectX 10 GPU with full Shader Model 4.0 support delivers unparalleled levels of graphics realism and film-quality effects.

- Dual 400MHz RAMDACs: Blazing-fast RAMDACs support dual QXGA displays with ultra-high, ergonomic refresh rates–up to 2048 x 1536 @ 85Hz.

- Dual Link DVI: Capable of supporting digital output for high resolution monitors (up to 2560×1600).

- NVIDIA® SLI™ Technology: Delivers up to 2x the performance of a single GPU configuration for unparalleled gaming experiences by allowing two graphics cards to run in parallel. The must-have feature for performance PCI Express graphics, SLI dramatically scales performance on over 60 top PC games.

- PCI Express™ Support: Designed to run perfectly with the next-generation PCI Express bus architecture. This new bus doubles the bandwidth of AGP 8X delivering over 4 GB/sec. in both upstream and downstream data transfers.

- 16x Anti-aliasing: Lightning fast, high-quality anti-aliasing at up to 16x sample rates obliterates jagged edges.

- NVIDIA® PureVideo™ Technology: The combination of high-definition video processors and NVIDIA DVD decoder software delivers unprecedented picture clarity, smooth video, accurate color, and precise image scaling for all video content to turn your PC into a high-end home theater. (Feature requires supported video software.)

- OpenGL™ 2.0 Optimizations and Support: Ensures top-notch compatibility and performance for all OpenGL applications.

- NVIDIA® nView® Multi-Display Technology: Advanced technology provides the ultimate in viewing flexibility and control for multiple monitors.

XFX 8800 GT Alpha Dog XXX Edition Top of the Line 8800 Series … Comparative Specifications

| Specification | XFX 8800GT XXX PVT-88P-YDD4 | XFX 8800GTS 320MB Fatal1ty PV-T80G-G1D4 | XFX 8800GTX XXX PV-T80F-SHD9 |
| --- | --- | --- | --- |
| RAMDACs | Dual 400 MHz | Dual 400 MHz | Dual 400 MHz |
| Memory Bus | 256 bit | 320 bit | 384 bit |
| Memory Bandwidth | 64 GB/sec | 80 GB/sec | 86.4 GB/sec |
| Memory | 512 MB | 320 MB | 768 MB |
| Memory Type | DDR3 | DDR3 | DDR3 |
| Memory Clock | 1.95 GHz | 2 GHz | 2 GHz |
| Pixel Processors | 28 | 24 | 32 |
| Vertex Processors | 16 | 20 | 24 |
| Stream Processors | 112 | 96 | 128 |
| Shader Clock | 1675 MHz | 1500 MHz | 1350 MHz |
| Clock Rate | 670 MHz | 650 MHz | 630 MHz |
| Chipset | GeForce™ 8800 GT (G92) | GeForce™ 8800 GTS (G80) | GeForce™ 8800 GTX (G80) |
| Bus Type | PCI-E 2.0 | PCI-E | PCI-E |
| Fabrication Process | 65nm | 90nm | 90nm |
| Highlighted Features | HDCP Ready, Dual DVI Out, RoHS, HDTV ready, SLI ready, TV Out | HDCP Ready, Dual DVI Out, RoHS, HDTV ready, SLI ready, TV Out | HDCP Ready, Dual DVI Out, RoHS, HDTV ready, SLI ready, TV Out |
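The memory bandwidth row follows from two other rows in the comparison table: bytes moved per transfer (bus width divided by 8) multiplied by the effective memory clock. The short Python sketch below applies that textbook formula to the listed bus widths and memory clocks. It is our own illustration, not XFX’s or NVIDIA’s method, and it assumes the “Memory Clock” figures are effective (DDR) rates.

```python
# Peak memory bandwidth = (bus width in bits / 8) bytes per transfer
#                         x effective memory clock (billions of transfers per second).
# Assumption: the "Memory Clock" row lists effective (DDR) rates in GHz.

def peak_bandwidth_gb_s(bus_width_bits: int, effective_clock_ghz: float) -> float:
    """Return theoretical peak memory bandwidth in GB/sec (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_clock_ghz

# Bus widths and memory clocks copied from the comparison table.
cards = {
    "XFX 8800GT XXX": (256, 1.95),
    "XFX 8800GTS 320MB Fatal1ty": (320, 2.0),
    "XFX 8800GTX XXX": (384, 2.0),
}

for name, (bus, clock) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_s(bus, clock):.1f} GB/sec")
```

Run as-is, this prints 62.4, 80.0 and 96.0 GB/sec. Only the GTS figure matches the quoted value exactly; the quoted 64 GB/sec for the GT corresponds to a 256-bit bus at 2.0 GHz, and the quoted 86.4 GB/sec for the GTX corresponds to a 384-bit bus at 1.8 GHz, so the quoted numbers may have been derived from memory clocks other than the ones listed (for example, reference-board clocks).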

CLOSER LOOK

The NVIDIA 8800 GT is the first card in the 8800 series to shrink the die from the 90nm process used by the other 8800 cards down to the 65nm process used for the G92 core. This die shrink has allowed the G92 chip to pack roughly 754 million transistors, as opposed to around 681 million in its G80 counterpart. It is also our understanding that the 8800 GT moves much of the video processing (VP2) onto the GPU, making it somewhat less demanding of the CPU. We also understand that some other new and innovative processes are at work that will help maintain higher FPS across the full range of resolutions supported by the 8800 GT (2560 x 1600 max). In theory, we should also see a marginal drop in temperatures with the G92, which is also directly related to shrinking the die to 65nm.

Packaging

XFX describes their Alpha Dog Editions for both the 8800 GTS and 8800 GT as follows:

Killer instincts matched with lightning fast speed, the 8800 GT and 8800 GTS 640 MB graphics cards are true alpha dogs in every sense of the word. These snarling pack animals deliver unprecedented performance and supreme realism; with these cards users aren’t playing the game, they’re in the game.

We feel the artistic rendering on the exterior packaging for these products expresses this mood and sentiment quite aptly.

Exterior Packaging: Front View

The rear and sides of the package also display some very useful information for the potential consumer. Another thing we find quite impressive is that XFX takes a minimalist approach to the size of its new graphics card packages. While smaller than previous versions, they still offer significant protection for the valuable cargo inside.

Exterior Packaging: Rear View

Anyone who orders products from an online vendor that must ship them via one of the major delivery services either has experienced shipping damage or will at some point. We at Bjorn3D have seen our share of products that were pummeled during the shipping process. We are proud to say that XFX, along with a few other manufacturers whose products we review, has always gotten its merchandise to us reasonably unscathed, even when the outer carton looked as though it had been through a war zone. We feel this is primarily due to the way they protect their cards with the interior packaging.

Interior Packaging

The Card: Images & Impressions

The first thing that will probably strike you when you view the single-slot XFX 8800 GT XXX is just how small it appears in comparison to its dual-slot predecessors. Its dimensions are (W) 0.7 inches, (D) 9.1 inches, and (H) 4.3 inches. The card’s compact size alone opens up case choices that were previously unavailable because of the sheer size of high-performance graphics solutions.

XFX 8800 GT XXX … Front View

The next thing you will probably notice is that the cooler runs the entire length of the card but, unlike the dual-slot behemoths that preceded it, has no rear exhaust for hot air. For this reason, you must make sure that your case has good to excellent airflow to help exhaust the heat that builds up inside it.

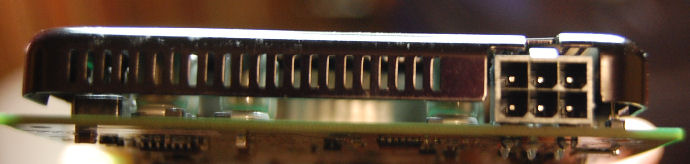

We are happy to see that the XFX 8800 GT XXX continues the tradition of using only one PCI-e power connector, even with its added features. Another important feature is that the PCI-e power connector is mounted at the end of the card, allowing for better cable management than on the XFX 8800 GTX XXX, where the connectors are mounted on the side of the PCB.

XFX 8800 GT XXX … PCI-e Connector

One thing we learned about the entire NVIDIA 8800 GT series that was a bit disconcerting is that, while it readily supports DirectX 10 and Shader Model 4.0, the current version of the card does not support the forthcoming DirectX 10.1 and Shader Model 4.1. We’re hoping this can be rectified with a firmware upgrade, but at this point we are uncertain whether that will be the case. In fairness to NVIDIA, it will still be some time before the vast majority of gamers use DX10 on a regular basis, as only a handful of games currently support the API.

Looking at the rear of the card and the four mounting positions around the chip, it would appear that some older aftermarket cooling products would fit this card. This is speculation on our part, as we have none immediately available to test.

XFX 8800 GT XXX … Rear View

The XFX 8800 GT Alpha Dog XXX Edition provides two DVI connectors, both of which natively support HDCP, and supports maximum resolutions of up to 2560 x 1600 @ 60Hz. The business end of this card looks a bit barren, as we have rapidly grown used to dual-slot cards. Let’s face it: everything in our computer systems will shrink as time goes on, and we’ll just have to change our mindset about bigger always being better.

XFX 8800 GT XXX … Side View

Bundled Accessories

About a year ago, when the NVIDIA 8800 series first arrived on the market, we noticed the size and number of bundled accessories dwindling and repeatedly mentioned this in our product reviews. We are glad to see this trend has begun to reverse itself, and we feel the accessories included with the XFX 8800 GT Alpha Dog XXX Edition are back on the right track.

- 2 – DVI to VGA connectors

- 1 – S-Video to S-Video connector

- 1 – S-Video Dongle

- 1 – Dual 4-pin molex power to PCI-e power connector

- 1 – Instruction manual

- 1 – Quick install guide

- 1 – “I’m Gaming: Do not disturb” Door sign

- 1 – S-Video connector sheet

- 1 – Driver Disc

- 1 – Company of Heroes (full edition) with DirectX 10 patch

TESTING

Every time we receive a new graphics card to review, we struggle with the dilemma of which other card(s) to compare it to. In the case of the XFX 8800 GT Alpha Dog XXX Edition there was no dilemma! We knew it was the fastest (on paper) of all the 8800 GT graphics cards available from XFX, so we opted to test it against the two fastest 8800 series cards we had tested to date (excluding the Ultra): the XFX 8800 GTX XXX and the XFX 8800 GTS Fatal1ty.

As we alluded to in the introduction, we decided to make some changes to both our testing platform and our operating system. We had just received an Asus Maximus Extreme motherboard for review, and we could think of no better way to break it in than to put it through a massive graphics card review. The hardware changes for this review were that motherboard, some extremely fast DDR-3 1600 memory we received from Crucial, and a move to the Antec P190 as our enclosure. We also know that quite a few gamers are using the 64-bit version of Windows Vista with quite good success, so we made this change as well.

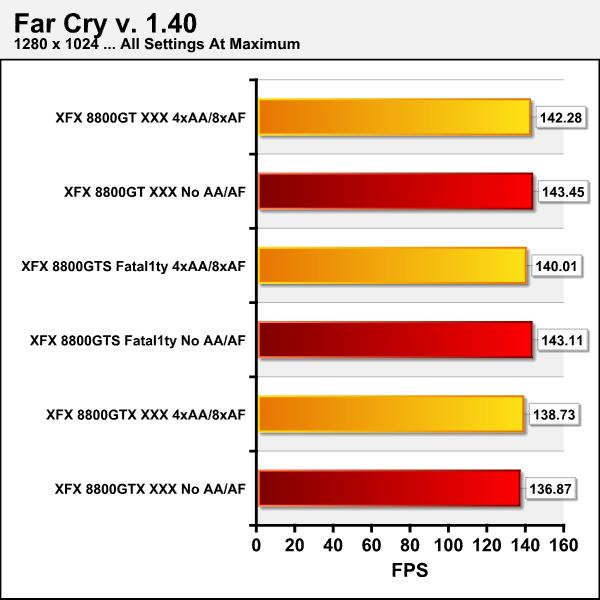

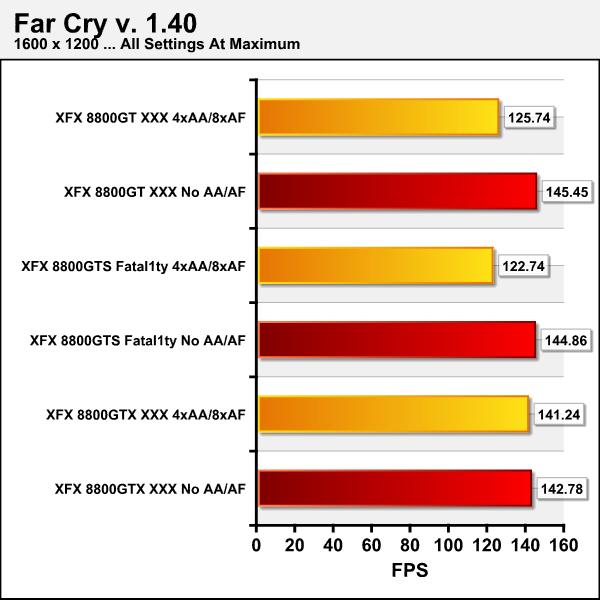

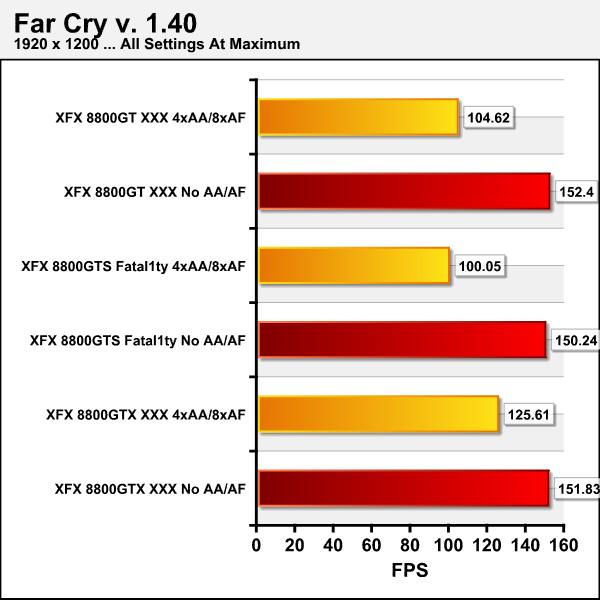

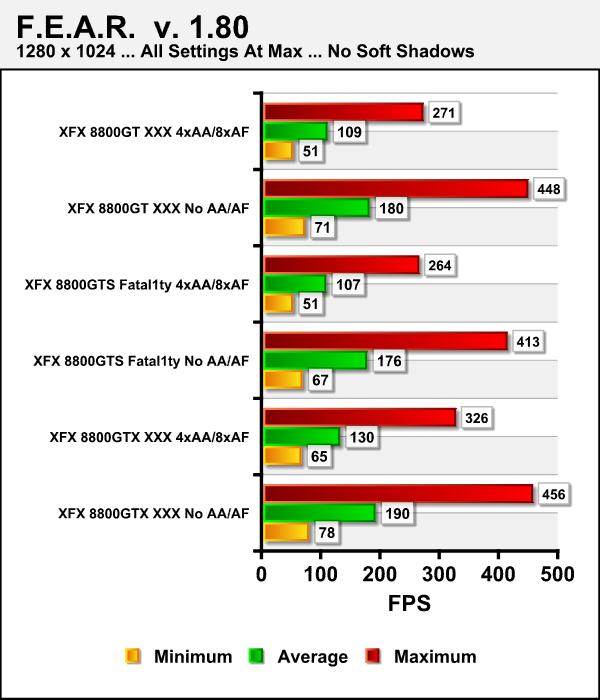

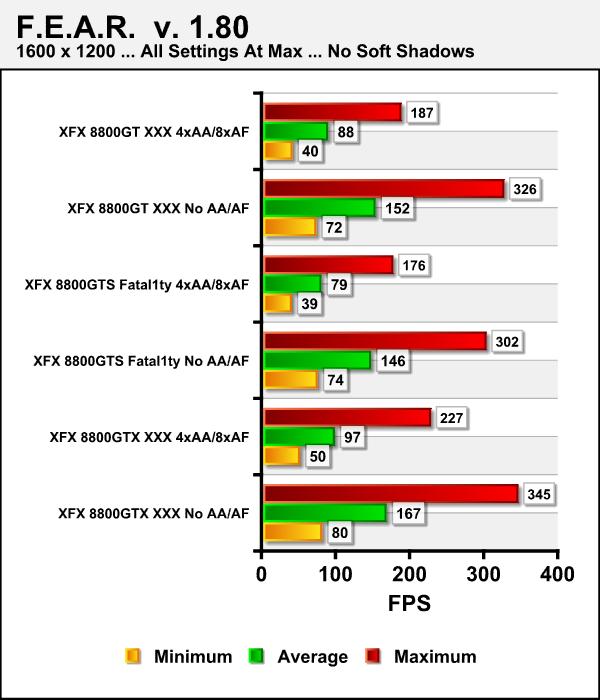

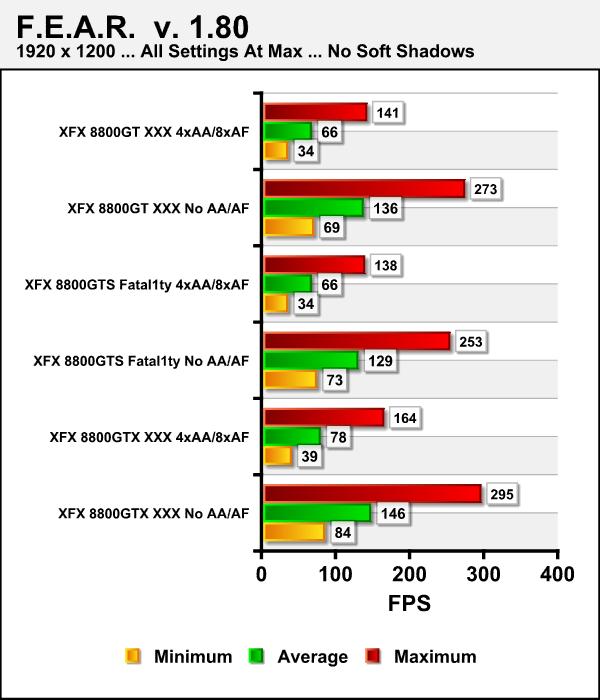

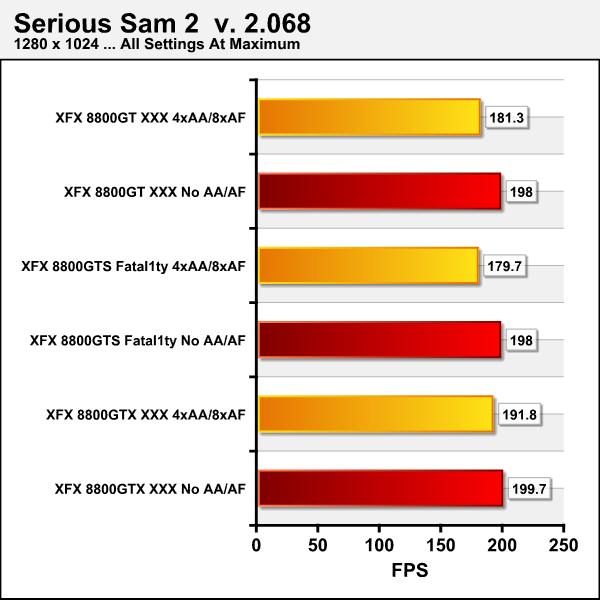

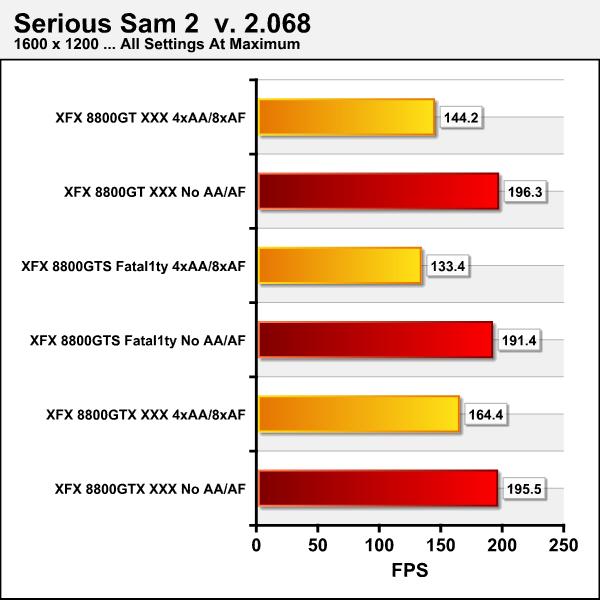

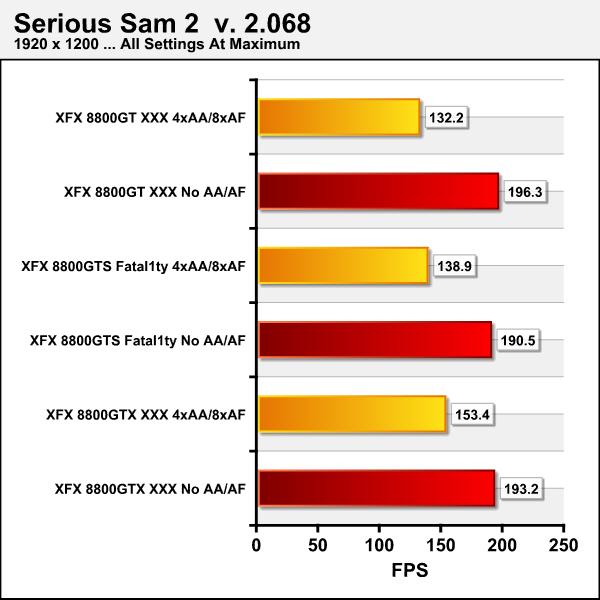

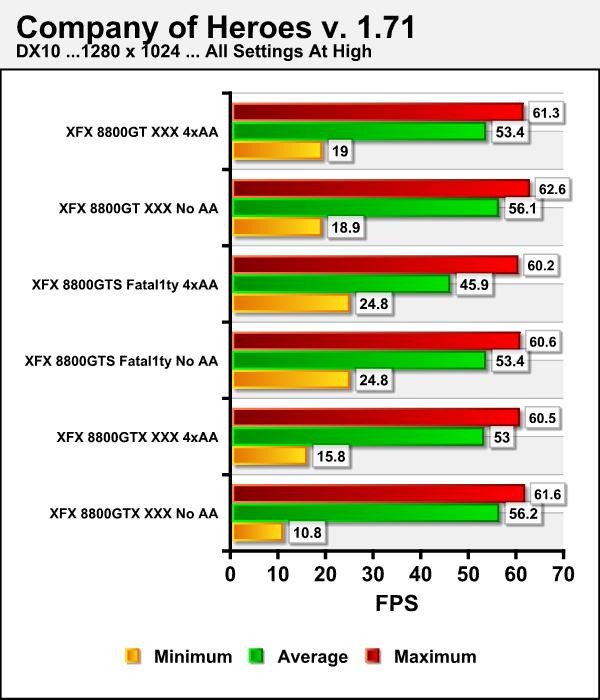

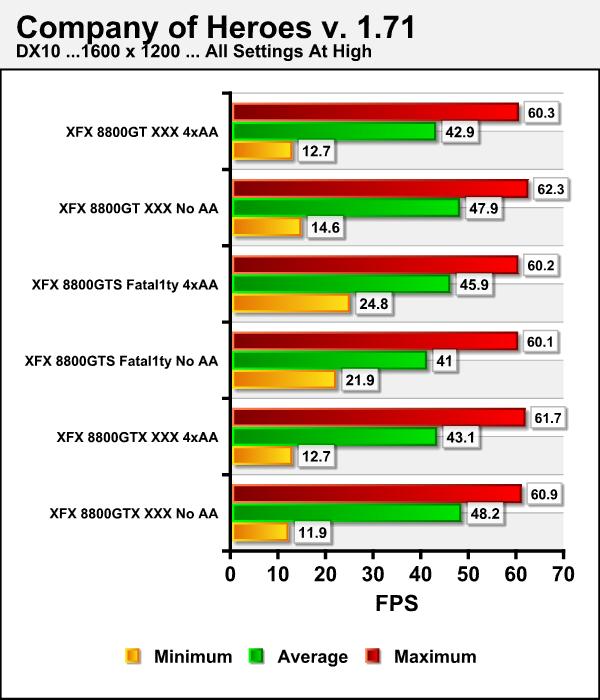

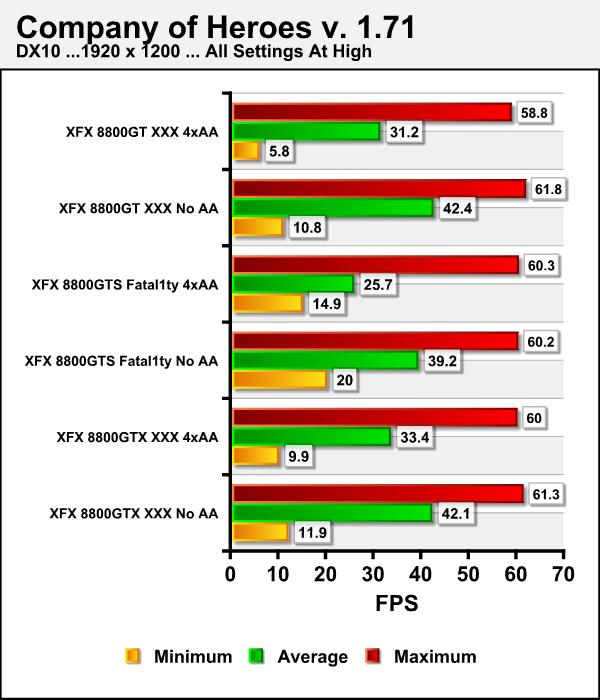

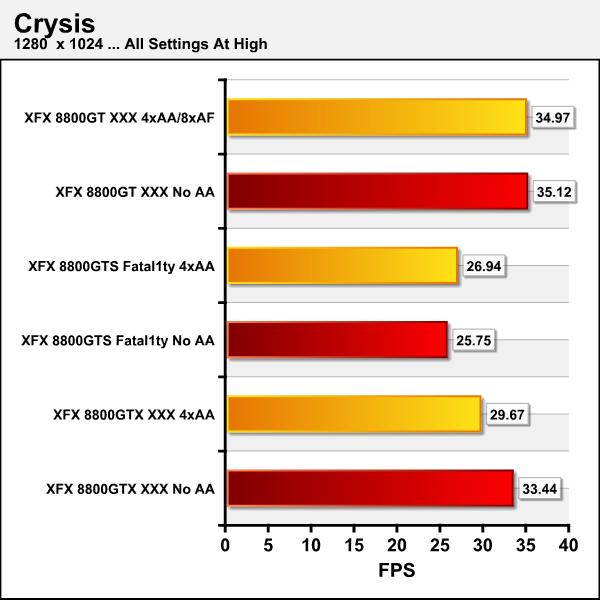

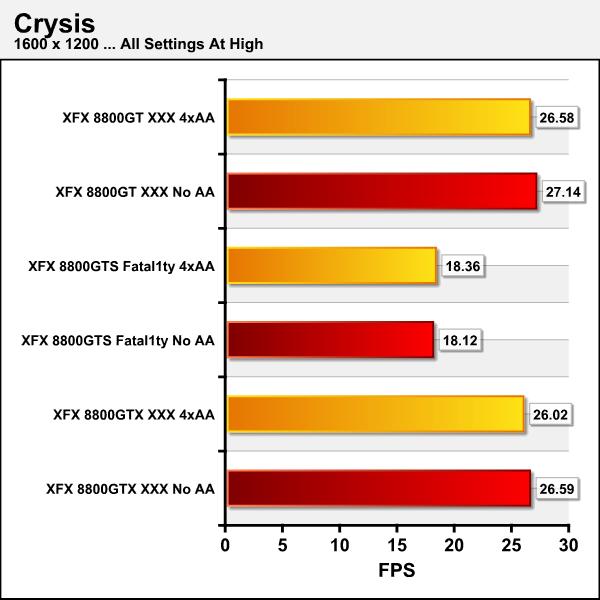

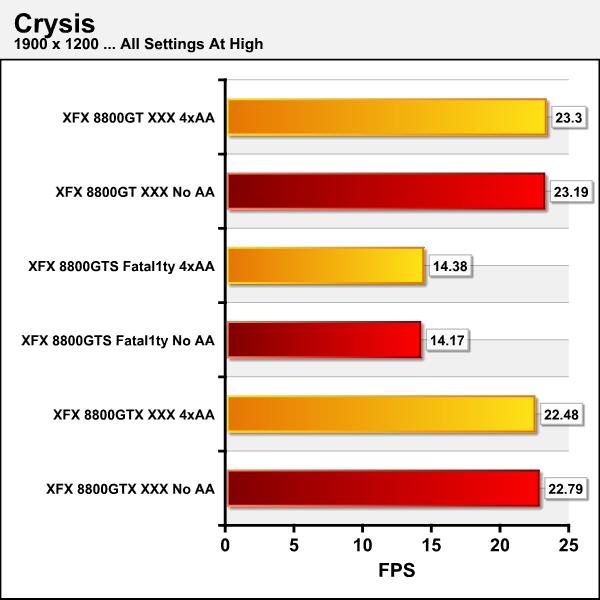

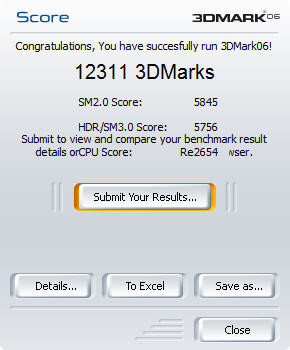

We ran all of the benchmarks listed below with each graphics card at its default (factory) clock speeds. Our synthetic benchmarks, 3DMark06 and PCMark Vantage, were run in default mode only, at a resolution of 1280 x 1024 with no anti-aliasing or anisotropic filtering. All other gaming tests were run at 1280 x 1024, 1600 x 1200, and 1920 x 1200, first without AA or AF and then repeated with anti-aliasing set to 4X and anisotropic filtering set to 8X, where available. Each test was run three times in succession, and the average of the three results is reported. F.E.A.R. benchmarks were also run with soft shadows disabled.
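The game-test methodology amounts to a small test matrix: three resolutions, each tested once without AA/AF and once with 4X AA and 8X AF, with every configuration run three times and the average reported. A minimal Python sketch of that bookkeeping follows. The function names and the “0xAA”/“4xAA” labels are hypothetical, and the code only organizes results; it does not launch or control any benchmark.

```python
from itertools import product
from statistics import mean

# Resolutions and quality presets taken from the methodology described above.
RESOLUTIONS = [(1280, 1024), (1600, 1200), (1920, 1200)]
# First pass without AA/AF, second pass with 4x anti-aliasing and 8x anisotropic filtering.
QUALITY_PRESETS = [("0xAA", "0xAF"), ("4xAA", "8xAF")]
RUNS_PER_TEST = 3

def game_test_matrix():
    """Every (resolution, AA, AF) combination run for each game benchmark."""
    return [(res, aa, af) for res, (aa, af) in product(RESOLUTIONS, QUALITY_PRESETS)]

def reported_score(run_results):
    """Average of the three back-to-back runs of one configuration."""
    if len(run_results) != RUNS_PER_TEST:
        raise ValueError(f"expected {RUNS_PER_TEST} runs, got {len(run_results)}")
    return mean(run_results)

if __name__ == "__main__":
    for (width, height), aa, af in game_test_matrix():
        print(f"{width} x {height}, {aa}, {af}")
```

Six configurations per game, at three runs each, means eighteen runs per card for every title that supports AA and AF.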

Test Platform

| Component | Details |
| --- | --- |
| Processor | Intel E6850 Core 2 Duo at 3.0GHz |
| Motherboard | ASUS Maximus Extreme |
| Memory | 2GB Crucial BL2KIT12864BA1608 DDR-3 1600, 8-8-8-24 |
| Drive(s) | 2 – Seagate 1TB Barracuda ES SATA Drives |
| Graphics | Test Card #1: XFX GeForce® 8800GT XXX, ForceWare 169.09 |
| Cooling | Enzotech Ultra w/120mm Delta Fan |
| Power Supply | Antec 650 Watt Neo Power & Antec 550 Watt Neo Power |
| Display | Dell 2407 FPW |
| Case | Antec P190 |
| Operating System | Windows Vista Ultimate 64-bit |
| Synthetic Benchmarks & Games | 3DMark06 v. 1.1.0; PCMark Vantage; Company of Heroes v. 1.71; F.E.A.R. v. 1.08; Serious Sam 2 v. 2.068; Far Cry v. 1.40; Crysis: Full Edition |

The Competitors

TEST RESULTS

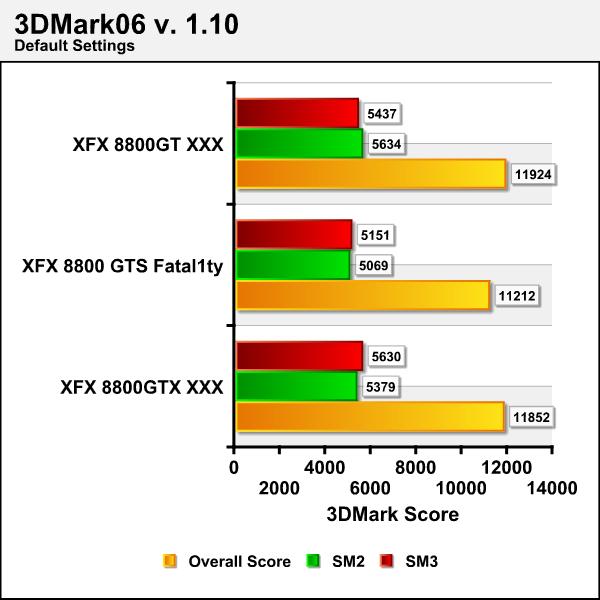

3DMark06 v. 1.1.0

3DMark06, developed by Futuremark, is a synthetic benchmark used for universal testing of all graphics solutions. 3DMark06 features HDR rendering, complex HDR post-processing, dynamic soft shadows for all objects, a water shader with HDR refraction, HDR reflection, depth fog and Gerstner wave functions, a realistic sky model with cloud blending, and approximately 5.4 million triangles and 8.8 million vertices, to name just a few. The “3DMark” score is intended to give a normalized measure for comparing different GPU/VPUs, and it has been widely accepted as a standard benchmark for measuring gaming performance.

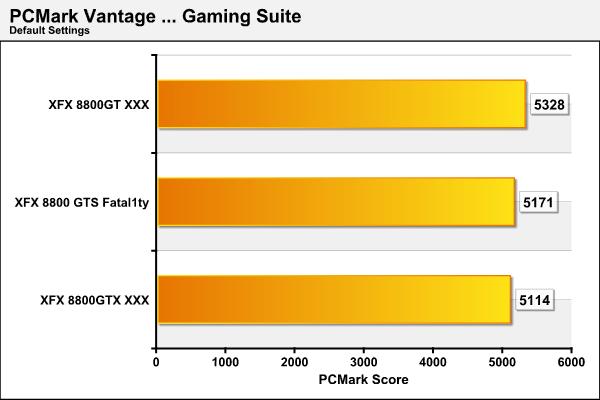

PCMark Vantage x64

PCMark® Vantage is the first objective hardware performance benchmark for PCs running 32-bit and 64-bit versions of Microsoft® Windows Vista®. PCMark Vantage is perfectly suited for benchmarking any type of Microsoft® Windows Vista PC, from multimedia home entertainment systems and laptops to dedicated workstations and high-end gaming rigs. PCMark® Vantage comes with both 32-bit and 64-bit testing modules; due to the nature of the product we’re reviewing, the 64-bit Gaming Suite was selected for testing.

Far Cry

Far Cry is a first-person shooter developed by Crytek Studios and published by Ubisoft in March 2004. Most still consider the game an excellent test and benchmark of a graphics card’s capabilities. Crytek Studios developed their CryEngine, which uses “polybump” technology to increase the detail of low-polygon models through the use of normal maps. Far Cry comes with several standard benchmarks built in, but they must be run manually from the game’s console, so we automate the process using benchmarking software from HardwareOC.

F.E.A.R.

F.E.A.R. (First Encounter Assault Recon) is a first-person shooter developed by Monolith Productions and released for Windows in October 2005. It is one of the most resource-intensive first-person shooters ever released. The game contains an integrated performance test that determines your system’s performance based on the graphical options you have chosen. The beauty of this test is that it reports maximum, average, and minimum frame rates, along with the percentage of the test your system spent in each frame-rate category. F.E.A.R. rocks both as a game and as a benchmark!
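To illustrate what a report of this kind contains, here is a minimal Python sketch that reduces a series of per-second frame-rate samples to minimum, average and maximum values plus the percentage of samples falling in each frame-rate band. The band limits of 25 and 40 fps are assumptions chosen for illustration, not necessarily the thresholds F.E.A.R. itself uses, and this sampling approach is ours rather than Monolith’s.

```python
from statistics import mean

# Assumed frame-rate band limits (illustrative only).
LOW_FPS = 25
HIGH_FPS = 40

def summarize_fps(samples):
    """Return min/avg/max fps and the share of samples in each band, in percent."""
    if not samples:
        raise ValueError("need at least one fps sample")
    n = len(samples)
    below = sum(1 for s in samples if s < LOW_FPS)
    above = sum(1 for s in samples if s > HIGH_FPS)
    between = n - below - above  # samples from LOW_FPS to HIGH_FPS inclusive
    return {
        "min": min(samples),
        "avg": mean(samples),
        "max": max(samples),
        "pct_below_low": 100 * below / n,
        "pct_between": 100 * between / n,
        "pct_above_high": 100 * above / n,
    }

print(summarize_fps([20, 30, 50, 60]))
```

The percentage bands add information the average hides: two cards with the same average frame rate can spend very different amounts of time below a playable threshold.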

Serious Sam 2

Serious Sam 2 is a first-person shooter released in 2005 and the sequel to the 2002 computer game Serious Sam. It was developed by Croteam using an updated version of their Serious Engine known as “Serious Engine 2”. We feel this game serves as an excellent benchmark that provides a variety of challenges for the GPU/VPU being tested. Once again, we automate and refine the benchmarking process using benchmarking software from HardwareOC.

Company of Heroes

Company of Heroes (COH) is a real-time strategy (RTS) game for the PC, announced in April 2005. It was developed by the Canada-based Relic Entertainment and published by THQ. We gladly switch from the first-person shooters that make up the rest of our gaming benchmarks to this RTS title. Why? COH is an excellent game that is incredibly demanding of system resources, which makes it an excellent benchmark. Like F.E.A.R., the game contains an integrated performance test that determines your system’s performance based on the graphical options you have chosen, and it uses the same multi-staged performance ratings as the F.E.A.R. test. We salute you, Relic Entertainment!

Crysis

Crysis is the most highly anticipated game to hit the market in the last several years. It is based on CryENGINE™ 2, developed by Crytek. CryENGINE™ 2 offers real-time editing, bump mapping, dynamic lights, a network system, an integrated physics system, shaders, shadows, and a dynamic music system, to name just a few of the state-of-the-art features incorporated into Crysis. As one might expect with this many features, the game is extremely demanding of system resources, especially the GPU. We expect Crysis to be a primary gaming benchmark for many years to come.

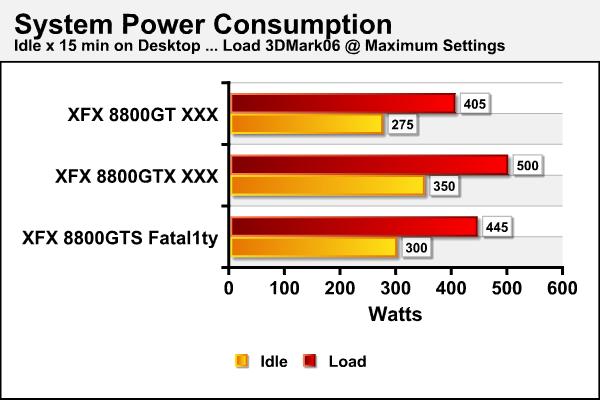

Power Consumption

We decided to measure both the power consumption and the temperatures of each of these cards. To measure power we used our Seasonic Power Angel, a nifty little tool that measures a variety of electrical values. We used a high-end UPS as our power source to eliminate any power spikes and to condition the current supplied to the test systems. The Seasonic Power Angel was placed in line between the UPS and the test system to measure total system power draw in Watts. Idle power was measured after the system had sat completely idle at the desktop for 15 minutes, with no processes running that would add to the power demand. Load power was measured after looping 3DMark06 for 15 minutes at maximum settings.

As expected, the 8800 GTX XXX consumes more power than the 8800 GT XXX. What we did not expect, given the degree of the 8800 GT XXX’s factory overclock, was a difference of 50 Watts at idle and 95 Watts at load. Again, excellent results!

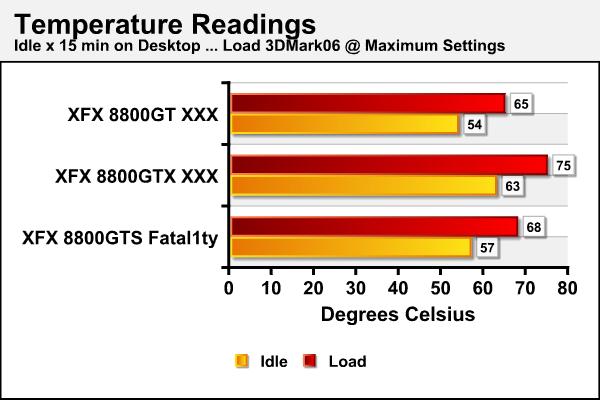

Temperatures

The temperatures of the cards tested were measured using Everest Ultimate Edition v. 4.20.1170 to ensure consistency and to avoid any bias that the cards’ own utilities might introduce. The temperature measurements used the same idle and load procedure as the power consumption measurements.

To say that we were amazed by how cool this card runs would be a vast understatement! It was 3 degrees Celsius cooler at both idle and load than the XFX 8800 GTS Fatal1ty, which was one of the coolest 8800 series cards we had tested to date. We must mention that we tested this group of cards in the Antec P190 case, which has a side-mounted 200mm fan that we ran at medium speed (107 CFM) throughout all of our testing. We are certain this helped us attain these lower temperatures, yet the results are still quite impressive.

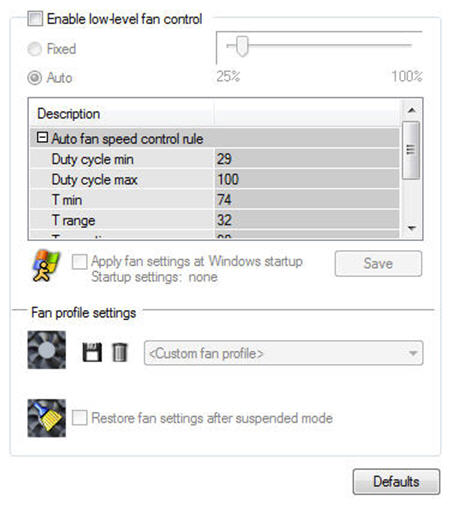

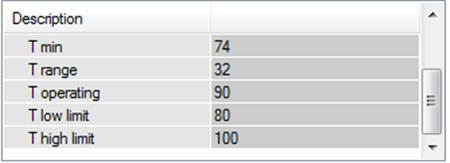

Now I must rain on this parade just a bit. In researching this review, I digested everything, both good and bad, regarding the NVIDIA 8800 GT (G92). One of the major issues many users seem to have with this product is how hot it runs in their enclosures. It seems that NVIDIA has set the default fan speed on the 8800 GT’s cooler at 30% of maximum. Why, you might ask? In a word: noise. The fan is loud at anything above 80% and very loud at 100%. You might also be asking yourself whether the fan speed is temperature controlled. It is, but NVIDIA chose to set the temperature at which the fan ramps up somewhere between 90 and 100 degrees Celsius. As this was at best hearsay, I confirmed it using Riva Tuner v. 2.06.

Now, again in fairness to NVIDIA, I never saw temperatures go above 65 degrees Celsius, even when overclocking, and I stressed the hell out of this card. I’m also using a very well ventilated case with a 200mm fan blowing directly on the video card. I’m trying to be as objective as possible here, and I’m sure NVIDIA would not set limits the card cannot tolerate! It would be very costly if they suddenly had to replace a huge number of cards due to this issue. IMHO, we’ve just got to realize that these cards are going to run very hot when stressed and do our best to provide them with the best cooling possible.
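The fan behavior described above can be pictured as a simple temperature-to-duty-cycle curve. The Python sketch below is a hypothetical model, not NVIDIA’s BIOS or driver logic: it holds the fan at 30% until an assumed ramp-up point of 90 degrees Celsius, then ramps linearly to 100% at an assumed 100 degrees Celsius. We did not measure the actual shape of the curve above the threshold.

```python
# Hypothetical fan curve modelled on the behaviour described in this review:
# a fixed 30% duty cycle until the GPU reaches a ramp-up threshold, then a
# linear ramp to 100%. The 90 C and 100 C points are assumptions taken from
# the 90-100 C range reported above.
IDLE_DUTY_PCT = 30.0
RAMP_START_C = 90.0
RAMP_END_C = 100.0

def fan_duty_pct(gpu_temp_c: float) -> float:
    """Return the fan duty cycle (percent of maximum) for a GPU temperature."""
    if gpu_temp_c <= RAMP_START_C:
        return IDLE_DUTY_PCT
    if gpu_temp_c >= RAMP_END_C:
        return 100.0
    # Linear interpolation between 30% at RAMP_START_C and 100% at RAMP_END_C.
    fraction = (gpu_temp_c - RAMP_START_C) / (RAMP_END_C - RAMP_START_C)
    return IDLE_DUTY_PCT + fraction * (100.0 - IDLE_DUTY_PCT)

for temp in (65, 90, 95, 100):
    print(f"{temp} C -> {fan_duty_pct(temp):.0f}% fan")
```

Under this model, the 65 degree peak we observed never leaves the flat 30% region, which is consistent with the card staying quiet in our well-ventilated case while running hotter in cramped ones.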

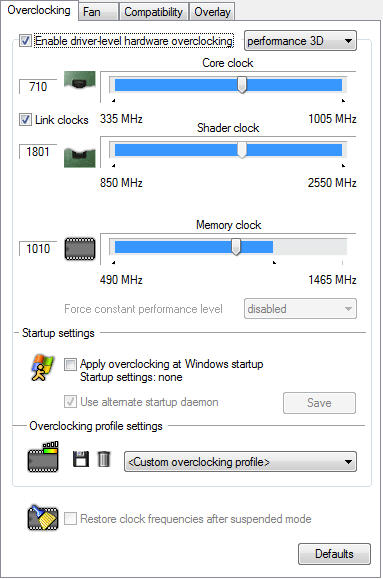

Overclocking

One can only question the logic of attempting to further overclock an already factory-overclocked graphics card. We pursued this endeavor using Riva Tuner v. 2.06, primarily to find out how much headroom this card actually had. We realized our results would be somewhat limited by the fact that we are using Vista Ultimate 64-bit as our operating system. We have had reasonably good luck with Vista in most regards when testing products, but we will be the first to admit that overclocking any component on your system is not nearly as well supported in Vista as it is in Windows XP. That being said, we were able to reach a core clock of 710MHz, a memory clock of 2020MHz, and a shader clock of 1801MHz.

The XFX 8800 GT Alpha Dog XXX Edition does in fact have a moderate amount of headroom, as demonstrated by the results in the images above. We feel this card has more headroom than our tests show, and again we believe the OS used for testing was the primary limiting factor.
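To put that headroom in perspective, the overclocked speeds can be expressed as percentage gains over the factory settings. The short Python sketch below does that arithmetic using the factory clocks from the specification table (670 MHz core, 1.95 GHz memory, 1675 MHz shader) and the overclocked speeds we reached; it treats both memory figures as effective (DDR) rates.

```python
# Factory clocks from the specification table; overclocked results from our testing (MHz).
factory = {"core": 670, "memory": 1950, "shader": 1675}
overclocked = {"core": 710, "memory": 2020, "shader": 1801}

def headroom_pct(base_mhz: float, oc_mhz: float) -> float:
    """Percentage increase of an overclocked speed over its factory setting."""
    return 100.0 * (oc_mhz - base_mhz) / base_mhz

for domain, base in factory.items():
    gain = headroom_pct(base, overclocked[domain])
    print(f"{domain}: {base} -> {overclocked[domain]} MHz (+{gain:.1f}%)")
```

This works out to roughly 6.0% on the core, 3.6% on the memory and 7.5% on the shader clock, on top of the factory overclock.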

CONCLUSION

This has been a very long and intense review covering every aspect of the XFX 8800 GT Alpha Dog XXX Edition that we could possibly think of. A few weeks ago we reviewed the XFX 8800 GTS Fatal1ty Edition and at the time stated: “In short this is the best bang for the buck card we’ve ever tested and we can only hope XFX brought boatloads into the US, because supplies will probably dwindle quickly.” Well, that has changed, as this graphics solution blows it out of the water, and in most cases the 8800 GTX XXX as well. My rationale for this statement follows:

- For a street price of around $299.99 USD, you get a graphics solution that, in almost all of the scenarios we tested, blows away competition that costs significantly more!

- The only substantive differences are that the GTX XXX has a 384-bit memory bus as opposed to the GT XXX’s 256-bit bus, higher memory bandwidth at 86.4GB/sec as opposed to 64GB/sec for the GT XXX, and more memory at 768MB compared to 512MB for the GT XXX.

- The 8800 GT XXX performs on par with the 8800 GTX XXX and in most cases surpasses it!

- The 8800 GT XXX is 10 degrees Celsius cooler at full load than the 8800 GTX XXX and draws around 50 to 95 Watts less power.

- The 8800 GT XXX is a single-slot graphics card of normal size that doesn’t limit the user’s enclosure choices nearly as much as the 8800 GTX XXX does.

- There are a couple of very small issues with the 8800 GT XXX but in the grand scheme of things these are almost meaningless

We could not say what we feel any better than we said it the last time! In short, this is the best bang for the buck card we’ve ever tested, and we can only hope XFX brought boatloads into the US, because supplies will probably dwindle quickly. We can only hope, for the computer enthusiasts out there, that this scenario is like the movie Groundhog Day and every few weeks we’re able to say the same thing about a new and even better graphics solution. It goes without saying that we recommend the XFX 8800 GT Alpha Dog XXX Edition to anyone who wants the best currently available at an extremely reasonable price!

Pros:

+ Vibrant and very life-like image rendering

+ 1675MHz Shader clock speed

+ 64GB/sec memory bandwidth

+ 670MHz core clock

+ 1.95GHz memory clock

+ SLI™ certified

+ Decreased CPU utilization in video processing due to the work being transferred to the GPU

+ NVIDIA® unified architecture with GigaThread™ technology

+ NVIDIA® Lumenex™ Engine

+ Runs extremely cool and quiet even under heavy load in our test system

+ A quality product

+ Double lifetime warranty

Cons:

– Doesn’t support the forthcoming release of DirectX 10.1 and Shader Model 4.1

– Factory fan RPMs are set at 30% of maximum and this doesn’t increase until temps of >90 degrees Celsius are reached

Final Score: 9.5 out of 10 and the prestigious Bjorn3D Golden Bear Award.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996