The HD2000 series of GPUs is a first for AMD in many ways. They are the first GPUs AMD has released since it bought ATI, its first GPUs with DX10 support and its first GPUs with a unified shader architecture. We have taken some time to examine all the new features and to benchmark AMD’s new enthusiast card, the HD2900XT.

INTRODUCTION

Maybe it is just me, but after covering video card launches for 11 years it is not always easy to get excited about new products anymore. Most of the time all we get is slightly faster clock speeds and a couple of new features. The last year, however, has been different. Several events have set the stage for an interesting launch of AMD’s new products.

First, we had the acquisition of ATI by AMD. This caught me by surprise, as I would have thought a marriage between AMD and NVIDIA was the more logical choice. What plans would AMD have for ATI? Would they still want to participate in the speed race? Or would AMD rather concentrate on merging the CPU and the GPU?

Second, we had the launch of Vista and DirectX 10. It has been a while since we had a new DirectX version, and after listening to several briefings on the changes it is quite obvious that Microsoft has done a lot with DirectX to give game developers even more tools to create cool games.

Third, we have had the decline in AMD/ATI’s market share and NVIDIA’s success with its GeForce 8 video cards. It is no secret that AMD is not doing as well as it could right now, and this makes it extra interesting to see how it will match the GeForce 8.

At a launch event in Tunis, Tunisia, AMD revealed everything, and in this article I hope to at least give you a general overview of what AMD has in store for us. In addition to this article, we have also released a review of the first retail HD2900XT card we have received, the Jetway HD2900XT.

THE RADEON HD2000 GPUS

The new GPUs being revealed today go by the name Radeon HD2000. As you may have noticed, AMD has dropped the X prefix and put HD in front of the model number instead. The reason for this is probably to reinforce the feeling that these new cards are the ones to get for the “HD experience” that everyone is talking about now.

AMD unveiled ten HD2x00 products (five desktop cards and five Mobility Radeon GPUs) that are available now or will be in the near future.

The HD2900XT (R600)

This is AMD’s high-end card. With 320 stream processing units, a 512-bit memory interface and HDMI, this is the card that takes on NVIDIA’s flagship products. HD2900XT cards will ship with a voucher for Valve’s Black Box (Team Fortress 2, HL2: Episode 2 and Portal), most likely as a download from Steam, but this is up to the vendors to decide.

Recommended price: $399

The HD2600Pro and HD2600XT (RV630)

In the performance corner we find the HD2600Pro and the HD2600XT. These cards come with 120 stream processing units, a 128-bit memory interface, Avivo HD and HDMI, and are built on a 65nm process. Eagle-eyed readers will probably also notice from the image above that they do not need an extra power connector.

Recommended price: $99-$199 (depending on the configuration)

The HD2400 Pro and HD2400XT (RV610)

At the bottom of the scale we have the “Value” segment. The HD2400Pro and HD2400XT are the cards AMD will use to compete for market share with NVIDIA’s low-end cards. They come with “only” 40 stream processing units, a 64-bit memory interface, Avivo HD and HDMI, and are also built on a 65nm process.

Recommended price: sub $99

In addition to these five desktop cards, AMD also unveiled five Mobility Radeon GPUs: the HD 2300, the HD 2400, the HD 2400XT, the HD 2600 and the HD 2600XT. I will not go into much detail about these in this article, but they should start to pop up in notebooks pretty soon.

SO WHAT IS NEW?

AMD has both added new features and improved on features from previous generations.

Unified architecture

Some might think that NVIDIA was first with a unified shader architecture in the GeForce 8, but ATI was actually first, with the Xenos GPU in the Xbox 360. We will discuss the unified architecture later in this article. Very briefly, the idea behind a unified architecture is that you no longer have separate vertex and pixel shaders. Instead, the shader units can handle both kinds of shaders, in addition to a new type called geometry shaders.

Fully distributed memory controller

With the X1000 series, ATI created a Ring Bus architecture with a partially distributed memory controller. In the HD2000 series, AMD finishes the work that was started and now has a fully distributed memory controller.

DirectX 10 support

DirectX 10 introduces more ways to process, access and move data, and it significantly raises the programming limits for shader instructions, registers, constants and outputs. DirectX 10 also adds support for the unified shader architecture, making it possible for developers to take full advantage of it right away in their DirectX 10 applications. Finally, DirectX 10 introduces a new shader type, the geometry shader. It can create and destroy geometry and sits between the vertex and the pixel shader in the pipeline.
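To make “create and destroy geometry” concrete, here is a minimal sketch in Python rather than HLSL. It mimics a classic geometry shader use case: each input point (a particle) is amplified into a screen-aligned quad made of two triangles, and points the shader wants to discard emit nothing. The function name, the 2D coordinates and the size-based culling rule are all assumptions for illustration; real geometry shaders are written in HLSL and run per primitive on the GPU.

```python
def expand_point(center, size):
    """Toy 'geometry shader': one input point in, zero or two triangles out.

    A point with size <= 0 is destroyed (nothing is emitted); otherwise the
    point is amplified into a square quad built from two triangles.
    """
    if size <= 0:
        return []                        # destroy the primitive
    x, y = center
    h = size / 2
    a, b = (x - h, y - h), (x + h, y - h)
    c, d = (x + h, y + h), (x - h, y + h)
    return [(a, b, c), (a, c, d)]        # create new geometry

# Three particles; the middle one has faded out (size 0) and is culled.
particles = [((0.0, 0.0), 1.0), ((5.0, 5.0), 0.0), ((2.0, 1.0), 0.5)]
triangles = [tri for center, size in particles for tri in expand_point(center, size)]
print(len(triangles))  # 4
```

The output count depends on the data, which is exactly what fixed vertex and pixel stages cannot do: a vertex shader always produces one vertex per input vertex.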

New and improved Image Quality features

AMD has continued to improve image quality by introducing new anti-aliasing and texture filtering capabilities. It has also added a so-called tessellation unit to its cards to help amplify geometry.

Avivo HD technology

Avivo has been updated to make it possible for even the lower-end cards to play back 40 Mbps HD material. This is done with a new dedicated hardware block called the UVD (Unified Video Decoder). AMD has also added HDMI v1.2 support as well as HDCP support over dual-link DVI.

Native Crossfire

Yay! No more master card and dongle on the high-end cards. AMD has also improved the AFR detection algorithm, so more games will automatically use this method.

THE UNIFIED SHADER ARCHITECTURE

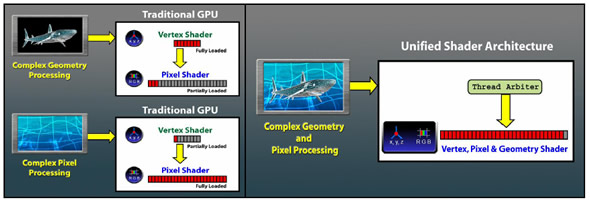

The idea behind a unified shader architecture is that it is a waste of resources to have shaders dedicated to either vertex or pixel operations. I think we have all seen these examples several times before, but they are well worth repeating.

Image from AMD

In a unified architecture each shader unit can act as either a pixel or a vertex shader, so you can keep the pipeline full at all times. In addition, DirectX 10 introduces a new type of shader: the geometry shader. If you built a GPU the traditional way, you would have to add yet another type of shader unit. A better solution is to create a single type of shader unit that can handle all three shader types.
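A toy calculation makes the utilization argument concrete. The unit counts (8 vertex plus 24 pixel units versus 32 unified units) and the workloads are invented for illustration, and the unified case assumes perfect load balancing, which real hardware only approximates.

```python
def ceil_div(a, b):
    return -(-a // b)

def dedicated_cycles(vertex_work, pixel_work, vertex_units, pixel_units):
    """Cycles to finish a batch when units are fixed-function (vertex OR pixel).

    Both pools work in parallel, so the busier pool decides the total time.
    Work is counted in unit-cycles (one unit busy for one cycle).
    """
    return max(ceil_div(vertex_work, vertex_units), ceil_div(pixel_work, pixel_units))

def unified_cycles(vertex_work, pixel_work, units):
    """Cycles when every unit can run either kind of work (ideal load balancing)."""
    return ceil_div(vertex_work + pixel_work, units)

def busy_fraction(total_work, units, cycles):
    return total_work / (units * cycles)

# Hypothetical GPU: 8 vertex + 24 pixel units vs. 32 unified units.
for name, (v, p) in {"vertex-heavy": (240, 48), "pixel-heavy": (16, 480)}.items():
    d = dedicated_cycles(v, p, 8, 24)
    u = unified_cycles(v, p, 32)
    print(f"{name}: dedicated {d} cycles ({busy_fraction(v + p, 32, d):.0%} busy), "
          f"unified {u} cycles ({busy_fraction(v + p, 32, u):.0%} busy)")
```

With these made-up numbers the vertex-heavy scene takes 30 cycles on the dedicated design, because the 8 vertex units are the bottleneck while most pixel units idle, but only 9 cycles when all 32 units share the work.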

The AMD unified shader architecture is built in this way:

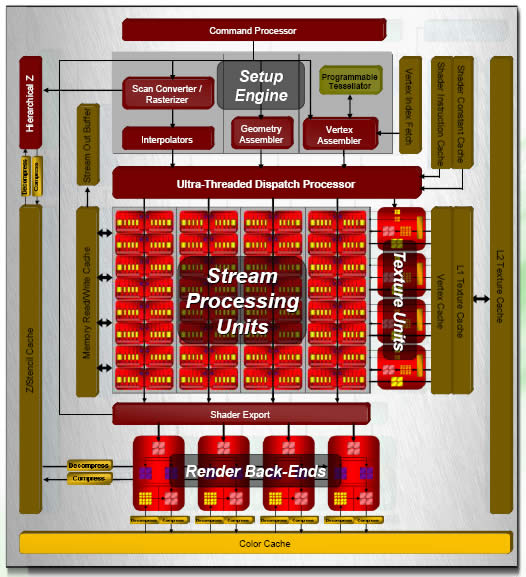

Image from AMD

The Command Processor processes commands from the graphics driver. It also performs state validation, offloading this work from the CPU.

The Setup Engine prepares the data for processing by the stream processing units. It has three different functions: vertex assembly and tessellation (for vertex shaders), geometry assembly (for geometry shaders) and scan conversion and interpolation (for pixel shaders). Each function can submit threads to the dispatch processor.

The Ultra-threaded Dispatch Processor acts as a kind of traffic cop. It is responsible for dispatching the threads (a thread being a number of instructions that will operate on a block of data) that are waiting for execution. Each shader type has its own command queue.

Inside the Ultra-threaded Dispatch Processor we also find arbiter units that determine which thread to process next. Each array of stream processors has two arbiter units, allowing the array to have two operations in flight at a time. Each arbiter unit in turn has a sequencer unit that determines the optimal instruction issue order for each thread.

Phew, are you still with me? We are not done with the Dispatch Processor yet. Connected to the Ultra-threaded Dispatch Processor are dedicated shader caches. The instruction cache allows for unlimited shader length, while the constant cache allows for an unlimited number of constants.
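The thread arbitration described above can be sketched as a toy model. Everything here is a hypothetical illustration: the `Thread` fields, the `ready_at` cycle standing in for a pending texture fetch, and the oldest-ready-first policy are assumptions, since AMD did not describe the actual arbitration rules. The sketch only shows the structure: one queue per shader type and two arbiters per SIMD array, each picking one thread per cycle.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Thread:
    tid: int
    kind: str      # "vertex", "geometry" or "pixel"
    arrival: int   # cycle when the thread was queued
    ready_at: int  # cycle when its inputs (e.g. a texture fetch) are available

class DispatchModel:
    """Hypothetical dispatcher: one queue per shader type, N arbiters per SIMD."""

    def __init__(self, arbiters_per_simd=2):
        self.queues = {"vertex": deque(), "geometry": deque(), "pixel": deque()}
        self.arbiters_per_simd = arbiters_per_simd

    def submit(self, thread):
        self.queues[thread.kind].append(thread)

    def arbitrate(self, cycle):
        """Each arbiter picks the oldest thread that is ready to run this cycle."""
        picked = []
        for _ in range(self.arbiters_per_simd):
            ready = [t for q in self.queues.values() for t in q if t.ready_at <= cycle]
            if not ready:
                break                      # nothing runnable: the arbiter idles
            oldest = min(ready, key=lambda t: (t.arrival, t.tid))
            self.queues[oldest.kind].remove(oldest)
            picked.append(oldest)
        return picked

d = DispatchModel()
d.submit(Thread(0, "vertex", arrival=0, ready_at=0))
d.submit(Thread(1, "pixel", arrival=1, ready_at=5))     # waiting on a texture fetch
d.submit(Thread(2, "geometry", arrival=2, ready_at=0))
d.submit(Thread(3, "pixel", arrival=3, ready_at=0))
print([t.tid for t in d.arbitrate(cycle=0)])  # [0, 2]: thread 1 is not ready yet
print([t.tid for t in d.arbitrate(cycle=5)])  # [1, 3]
```

Skipping a stalled thread and running a younger, ready one is the point of having many threads in flight: it hides texture latency instead of idling the SIMD.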

So, we have processed the commands and submitted threads, and now we are ready to execute the instructions. This is where the Stream Processing Units come into play. AMD has divided the stream processing units into arrays called SIMDs. The HD2900 has 4 SIMDs with 80 stream processing units each (320 in total), the HD2600 has 3 SIMDs with 40 stream processing units each (120 in total) and the HD2400 has 2 SIMDs with 20 stream processing units each (40 in total).

Image from AMD

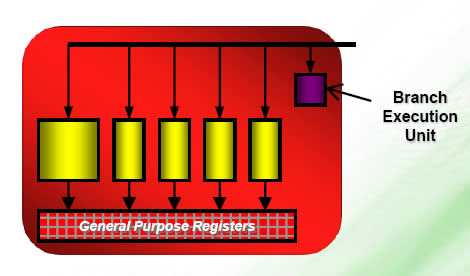

Each SIMD can execute the same instruction thread on multiple data elements in parallel. Each instruction word can include up to 6 independent, co-issued operations (5 math + flow control). Texture fetch and vertex fetch instructions are issued and executed separately.

The Stream Processing Units are arranged in 5-way superscalar shader processors. A branch execution unit handles flow control and conditional operations. General-purpose registers store input data, temporary values and output data. Up to 5 scalar MAD (multiply-add) instructions per clock can be co-issued to a shader processor. One of the 5 stream processing units also handles transcendental instructions (SIN, COS, LOG, EXP, etc.).
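Because each shader processor issues up to five scalar operations per clock, the shader compiler has to pack independent operations into 5-wide instruction words. The sketch below illustrates that constraint with greedy list scheduling in program order. The rules are simplified assumptions (one slot per op, results usable only in a later bundle, at most one transcendental per bundle); ATI’s real compiler is certainly more sophisticated.

```python
from dataclasses import dataclass

TRANSCENDENTAL = {"sin", "cos", "log", "exp"}

@dataclass
class Op:
    dest: str            # result name, e.g. "r0"
    opcode: str          # "mad", "mul", "sin", ...
    srcs: tuple = ()     # results of other ops this op reads (shader inputs omitted)

def pack_bundles(ops, width=5):
    """Greedily pack scalar ops into instruction words of up to `width` slots.

    Assumed rules: an op may only read results produced in an *earlier*
    bundle, and a bundle holds at most one transcendental op, since only one
    of the five units handles SIN/COS/LOG/EXP.
    """
    done, pending, bundles = set(), list(ops), []
    while pending:
        bundle, has_trans = [], False
        for op in list(pending):
            if len(bundle) == width:
                break
            if not all(s in done for s in op.srcs):
                continue                  # a source has not been computed yet
            is_trans = op.opcode in TRANSCENDENTAL
            if is_trans and has_trans:
                continue                  # transcendental slot already used
            bundle.append(op)
            has_trans = has_trans or is_trans
            pending.remove(op)
        if not bundle:
            raise ValueError("dependency on an op that is never computed")
        done.update(op.dest for op in bundle)
        bundles.append(bundle)
    return bundles

program = [Op("a", "mad"), Op("b", "mad"), Op("c", "mul"), Op("d", "sin"),
           Op("e", "cos"), Op("f", "mad", ("a", "b")), Op("g", "add", ("c", "d"))]
for i, bundle in enumerate(pack_bundles(program)):
    print(i, [op.dest for op in bundle])
# 0 ['a', 'b', 'c', 'd']
# 1 ['e', 'f', 'g']
```

Seven operations fill 7 of 10 available slots here; a long chain of dependent operations would fill only one slot per bundle, which is why real-world utilization of a 5-way design depends heavily on the shader code.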

The HD2900XT has four texture units, each with 8 texture address processors, 20 FP32 texture samplers and four FP32 texture filters. The HD2600 and HD2400 have the same texture units, just fewer of them (the HD2600 has two and the HD2400 has one). Behind the texture units are multi-level texture caches. The large L2 cache stores data retrieved on L1 cache misses. The L2 texture cache is 256 kB on the HD2900 and 128 kB on the HD2600. The HD2400 uses a single-level vertex/texture cache. All texture units can access both the vertex cache and the L1 texture cache.

So, what are the improvements to the texture units? The HD2000 can now bilinearly filter 64-bit HDR textures at full speed, 7x faster than the Radeon X1000 series could. AMD has also improved high-quality anisotropic filtering and even made the X1000 series’ high-quality mode the default setting. Soft shadow rendering performance has also been improved, and there is now support for textures up to 8192×8192.

The last stop on our tour of the HD2000 unified shader architecture takes us to the Render Back-Ends. The HD2900 has four Render Back-Ends, while the HD2600 and HD2400 have one each. One of the highlights of the HD2000 Render Back-End is its programmable MSAA resolve functionality, which is what makes AMD’s new AA method, Custom Filter AA, possible.

So how does this stack up against NVIDIA’s architecture? Aren’t 320 stream processing units more than 128? Well, it isn’t that easy. In fact, at the event even AMD said that you cannot just compare the numbers. While NVIDIA has 128 standard ALUs, it also has 128 special-function ALUs, which together make 256. As AMD counts everything in its total of 320 stream processing units, it is fairer to compare 320 to 256. The stream processing units in the GeForce 8 are also allegedly clocked faster than those in the HD2900XT, so in the end it looks like NVIDIA still has the edge there.

There are of course other differences, and NVIDIA was very happy to provide a PDF pointing them out (and of course claiming its design was better and smarter, something AMD then countered was not true, sigh). To be honest, I do not have the knowledge and experience with this kind of architecture to figure out who is right and who is wrong, so in the end it looks like AMD and NVIDIA have simply chosen slightly different paths to a unified shader architecture.

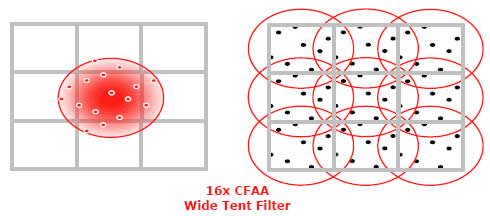

CUSTOM FILTER ANTI-ALIASING

ATI Radeon cards have always had good-quality anti-aliasing. Alongside a new method, the HD2000 GPUs continue to support the AA technologies we have gotten used to:

- Multisampling

- Programmable sample patterns

- Gamma correct resolve

- Temporal AA

- Adaptive Supersampling/Multisampling

- Super AA (Crossfire)

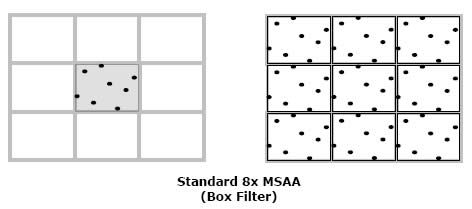

The new technology AMD is introducing here is called CFAA: Custom Filter Anti-Aliasing. Regular anti-aliasing methods are restricted to pixel boundaries and fixed weights. By also sampling from outside the pixel boundaries, AMD claims it can get better quality per sample than supersampling, with better performance.
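The difference between a standard resolve and one that reaches outside the pixel can be shown on a single row of pixels. This is a minimal 1D sketch: the sample positions and the linear tent-shaped filter are assumptions chosen for clarity, not AMD’s actual filter kernels.

```python
def resolve_row(samples, weight):
    """Resolve one row of multisampled pixels with a given filter.

    samples[i] lists the sample values of pixel i; sample k of an n-sample pixel
    sits at x = i + (k + 0.5) / n. weight(d) is the filter weight for a sample
    at distance d from the pixel centre x = i + 0.5.
    """
    out = []
    for i in range(len(samples)):
        centre, total, wsum = i + 0.5, 0.0, 0.0
        for j, pixel in enumerate(samples):
            n = len(pixel)
            for k, value in enumerate(pixel):
                w = weight(abs(j + (k + 0.5) / n - centre))
                total += w * value
                wsum += w
        out.append(total / wsum)
    return out

def box(d):
    """Standard resolve: only the pixel's own samples, equal weights."""
    return 1.0 if d < 0.5 else 0.0

def tent(d, radius=1.0):
    """Wider filter: weight falls off linearly and reaches into neighbouring pixels."""
    return max(0.0, 1.0 - d / radius)

row = [[0, 0], [0, 1], [0, 0]]   # 2 samples per pixel; one sample hit by a thin line
print(resolve_row(row, box))     # [0.0, 0.5, 0.0]
print(resolve_row(row, tent))
```

With the tent filter, pixel 1 drops to 0.375 and pixel 2 picks up about 0.14 from its neighbour’s sample. That extra information per sample is what AMD is after, and the same leakage is the softening NVIDIA’s blur argument refers to.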

Old people like me are probably now trying to remember whether we have heard about something like this before. And it turns out we have. NVIDIA was kind enough to claim that CFAA is nothing more than the Quincunx AA method it used on the GeForce 3, which also sampled outside the pixel boundaries to get more effective samples. NVIDIA stopped using this method “as gamers thought it blurred the image”.

According to AMD, the CFAA method it uses should avoid blurring fine details. An edge detection filter performs an edge detection pass on the rendered image. The filter then resolves using more samples along the direction of an edge, while using fewer samples and a box filter on other pixels.
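The edge-adaptive idea can be sketched on already resolved pixels. This is a deliberately crude, hypothetical illustration: AMD’s filter works on the MSAA samples themselves, and its edge detector and filter shapes are not public in this article. Here a central-difference gradient marks edges, non-edge pixels keep their box value, and edge pixels are averaged only with neighbours along the edge.

```python
def edge_aware_resolve(img, threshold=0.25):
    """Smooth pixels along detected edges; leave everything else untouched.

    img is a 2D list of box-resolved pixel values. For each pixel a central-
    difference gradient is computed. Below `threshold` the pixel keeps its box
    value; on an edge it is averaged with its two neighbours *along* the edge
    (perpendicular to the gradient), so the edge is smoothed without being
    blurred across.
    """
    h, w = len(img), len(img[0])

    def px(y, x):                          # clamp reads at the image border
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            gx = (px(y, x + 1) - px(y, x - 1)) / 2
            gy = (px(y + 1, x) - px(y - 1, x)) / 2
            if max(abs(gx), abs(gy)) < threshold:
                continue                   # not an edge: keep the box result
            if abs(gx) > abs(gy):          # mostly vertical edge: neighbours above/below
                a, b = px(y - 1, x), px(y + 1, x)
            else:                          # mostly horizontal edge: neighbours left/right
                a, b = px(y, x - 1), px(y, x + 1)
            out[y][x] = (img[y][x] + a + b) / 3
    return out

stairs = [[0, 0, 1, 1],
          [0, 0, 1, 1],
          [0, 1, 1, 1],
          [0, 1, 1, 1]]
for row in edge_aware_resolve(stairs):
    print([round(v, 2) for v in row])
```

A perfectly straight vertical edge passes through unchanged, because averaging along it only mixes identical values, while the step in the staircase picks up intermediate shades.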

CFAA works together with in-game AA-settings as well as together with both Adaptive and Temporal AA, HDR and stencil shadows.

So who is right? Is the new CFAA method crap, or is NVIDIA just sour because AMD has managed to improve on a technique NVIDIA discarded long ago? The proof is in the pudding, so to speak. Later in this article I have taken screenshots in several games on both the HD2900XT and the GeForce 8800GTX to see how good the quality really is.

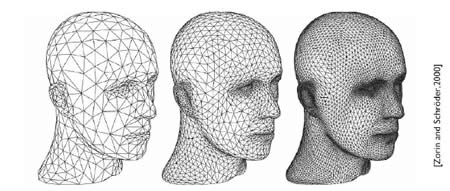

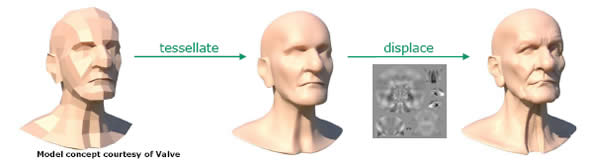

TESSELLATION

Ever since the release of the first 3D video cards there has been a race between the GPU makers to introduce features the competition does not have. In many cases these features help set the cards apart from the opposition and help sell them. NVIDIA beat ATI to SM3.0 support, while ATI was first to support AA together with HDR. With the HD2000, AMD is introducing a new feature that it hopes will set its cards apart from NVIDIA’s GPUs: tessellation on the GPU. All the Radeon HD2000 GPUs feature a new programmable tessellation unit.

Tessellation: Subdivision Surface

Basically, tessellation is a way of taking a relatively low-polygon mesh and using subdivision on the surface to create a finer mesh. After the finer mesh has been created, a displacement map can be applied to create a detailed surface. The cool part is that all of this can be done on the GPU. There is no need to involve the CPU at all; the GPU takes care of it. As the tessellation unit sits before the shaders, there is also no need to write any new shaders.
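The two steps described above, subdivision followed by displacement, can be sketched in a few lines. This uses plain midpoint (1-to-4) subdivision and a sine function standing in for a displacement map; both are assumptions for illustration, since the article does not say which subdivision scheme AMD’s tessellator implements, and schemes such as Loop subdivision would also smooth the surface.

```python
import math

def subdivide(triangles):
    """Split every triangle into four by inserting edge midpoints (1-to-4 split).

    Vertices are (x, y, z) tuples; shared edges produce identical midpoints,
    so neighbouring triangles stay connected.
    """
    def mid(a, b):
        return tuple((p + q) / 2 for p, q in zip(a, b))
    out = []
    for a, b, c in triangles:
        ab, bc, ca = mid(a, b), mid(b, c), mid(c, a)
        out += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return out

def displace(triangles, height):
    """Move every vertex along +z (the normal of this flat mesh) by height(x, y)."""
    return [tuple((x, y, z + height(x, y)) for x, y, z in tri) for tri in triangles]

# A flat quad made of two triangles: the "low-polygon" input mesh.
mesh = [((0, 0, 0), (1, 0, 0), (1, 1, 0)), ((0, 0, 0), (1, 1, 0), (0, 1, 0))]
for _ in range(3):                       # three tessellation levels: 2 -> 128 triangles
    mesh = subdivide(mesh)
bumpy = displace(mesh, lambda x, y: 0.1 * math.sin(2 * math.pi * x))
print(len(bumpy))  # 128
```

Each level multiplies the triangle count by four, which is why doing the amplification on the GPU saves so much bus traffic: only the two input triangles and the displacement function have to be sent.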

Image from AMD

This technology comes from the Xbox 360 and is used, for instance, in the game Viva Piñata. A guy from Microsoft/Rare showed us how they used it in the game, and it looked really cool.

So, this does sound cool, doesn’t it? So what is the catch? Well, the catch is that AMD is alone in supporting this right now, and it is not even in DirectX. This means developers have to add support for AMD hardware themselves. AMD of course hopes that games ported from the Xbox 360, or developed for both PC and Xbox 360, will support it, but you never know.

It does, however, look like Microsoft will add support for tessellation in a future revision of DirectX. During a GDC 2007 presentation named “The Future of DirectX”, Microsoft even presented a possible implementation that looks exactly like what AMD has put on its chip. So odds are we will see tessellation support sooner rather than later.

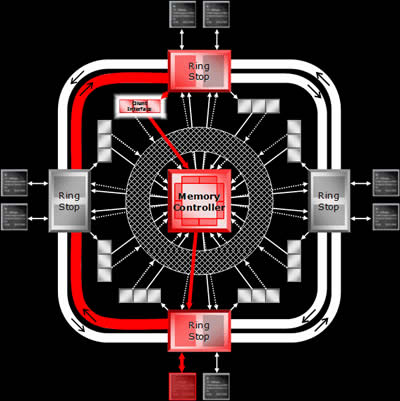

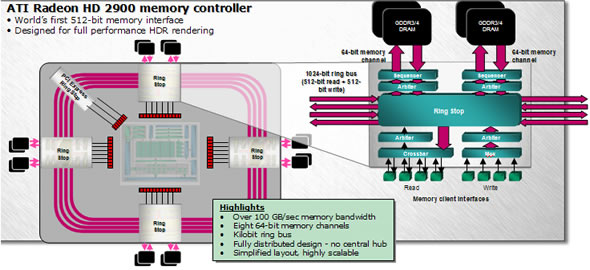

THE UPDATED MEMORY CONTROLLER

With the X1000 series, ATI introduced the ring-bus configuration for the memory controller. In this configuration, requests went to the memory controller, and the responses then went out to one of the “ring stops” around the controller.

The X1000 series memory controller.


While this was a great idea, it still wasn’t perfect. For instance, while the bus was built from two internal 256-bit rings, creating a 512-bit ring bus, the external memory interface was still just 256 bits wide. AMD found that it was quite hard to widen the interface to 512 bits.

AMD’s solution was to move from a partially distributed memory controller, where the memory controller still sat in the middle of the ring bus, to a fully distributed memory controller, where everything it needs resides in the ring itself. The benefits are simpler routing (which improves scalability), reduced wire delay and fewer repeaters required. By doubling the I/O density of previous designs, AMD was able to fit 512 bits into the same area that previously held 256 bits.

The HD2000 series memory controller


Instead of two 256-bit rings, as in the X1000 memory controller, there are now eight 64-bit memory channels. At each ring stop there is a 1024-bit ring bus (512-bit read, 512-bit write). This makes the architecture very flexible, and it is easy to add or remove memory channels.
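One way to picture why independent channels scale so easily is address interleaving, a common technique for spreading memory traffic over several channels. The mapping below (round-robin with a 256-byte granularity) is purely a hypothetical example; AMD has not said how the HD2000 actually maps addresses to its eight channels.

```python
def channel_for(address, channels=8, interleave=256):
    """Memory channel that owns `address` under simple round-robin interleaving."""
    return (address // interleave) % channels

def channel_load(num_bytes, channels=8, interleave=256):
    """Bytes served by each channel for a linear read of num_bytes from address 0."""
    load = [0] * channels
    for start in range(0, num_bytes, interleave):
        load[channel_for(start, channels, interleave)] += min(interleave, num_bytes - start)
    return load

print(channel_load(4096))              # each of the eight 64-bit channels serves 512 bytes
print(channel_load(4096, channels=4))  # four channels (a 256-bit bus): 1024 bytes each
```

Changing the number of channels only changes one parameter of the mapping, which mirrors the flexibility AMD describes: a narrower derivative simply has fewer ring stops.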

The benefit of a 512-bit interface is that AMD can get more bandwidth out of existing memory technology, and at a lower memory clock. As you might have noticed, AMD has chosen to go with GDDR3 only on the HD2900XT. One reason is that AMD did not see any big advantages with GDDR4 that would justify the added cost.
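The bandwidth argument is simple arithmetic: peak bandwidth is the bus width in bytes times the memory clock times the transfers per clock. The sketch assumes double-data-rate memory (two transfers per clock). The 828 MHz clock is not stated in this section; it is back-calculated from the spec table’s 106 GB/s and 512-bit figures.

```python
def bandwidth_gb_s(bus_bits, mem_clock_mhz, transfers_per_clock=2):
    """Peak bandwidth in GB/s (1 GB = 10**9 bytes) for double-data-rate memory."""
    return bus_bits / 8 * mem_clock_mhz * 1e6 * transfers_per_clock / 1e9

def clock_needed_mhz(target_gb_s, bus_bits, transfers_per_clock=2):
    """Memory clock required to reach target_gb_s on a bus of the given width."""
    return target_gb_s * 1e9 / (bus_bits / 8 * transfers_per_clock) / 1e6

print(round(bandwidth_gb_s(512, 828), 1))   # 106.0 GB/s on the 512-bit bus
print(round(clock_needed_mhz(106, 256)))    # 1656 MHz would be needed on a 256-bit bus
```

The same formula reproduces the table’s other figures, for example 35.2 GB/s for the HD2600 with a 128-bit bus at 1100 MHz. Reaching 106 GB/s on a 256-bit bus would need memory clocked twice as high, which is where pricier GDDR4 would have come in.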

THE ULTIMATE HD EXPERIENCE – AVIVO HD

I doubt anyone has missed the HD craze of the last year or so. While we PC gamers have been gaming in HD for quite some time now, the rest of the gaming world is finally catching up. We are also finally getting new high-definition video formats that put new demands on the hardware.

AMD has always been at the forefront of video quality with Avivo, and it has of course improved it for the HD2000 series.

Support for HD playback is really nothing new. Even the X1000 series cards supported accelerated H.264 playback. What is new is that AMD has improved that support in many ways and added more support for Blu-ray and HD-DVD playback.

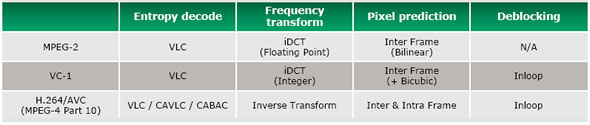

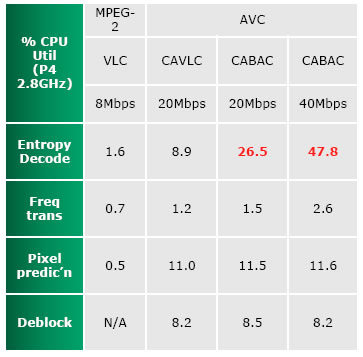

The challenges with HD

As new HD content becomes more widespread, the demands on the hardware increase. While a regular DVD has a maximum bit-rate of ~9.5 Mbps, the maximum bit-rate is ~30 Mbps for HD-DVD and ~40 Mbps for Blu-ray. In addition to higher bit-rates, both Blu-ray and HD-DVD introduce new codecs that put even more demands on the hardware.

New codecs use more decode stages and more computationally complex stages.

CABAC-based compression offers very high compression rates while minimizing information loss. The tradeoff is that it is very computationally expensive.

Another challenge with HD content is how it can be played back. Regardless of how we feel about it, the movie industry has put conditions on how you can watch HD-DVD and Blu-ray on your computer, specifically in Vista.

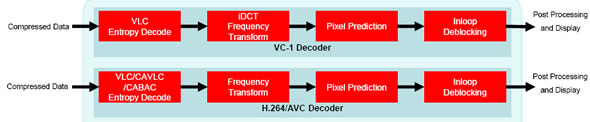

The Unified Video Decoder

While higher-specced video cards should have no problem playing back video even at the higher bit-rates, it has been difficult to get lower-end cards to play back that material at full HD (1080p). Also, until now GPU video acceleration has not covered the first step of the decode process, entropy decoding, which had to be left to the CPU.

AMD has solved these problems with the new Unified Video Decoder, a dedicated decoding block built into both the HD2400 and the HD2600 (the HD2900XT does not have one, as it can handle all this by itself).

The UVD handles the entire decode process, offloading both the CPU and the GPU. It supports optical HD playback at the full 40 Mbps bit-rate. The decoded data is also handled internally, which removes the need for passes to memory (system or GPU) between the decode stages.

In comparison, NVIDIA’s G80 only assists with the last two stages of the decode process, while the new G84/86 handle all stages on the GPU (except with the VC1 codec, where the first stage is still done by the CPU).

On the G80/G7X, the first two steps are done on the CPU. The G84/86, however, can handle almost everything, with the exception of VC1 decoding.

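The comparison above boils down to a small lookup table of which decode stages stay on the CPU. The stage names below are an assumption (the commonly cited H.264/VC-1 decode steps); the article itself only refers to “the first” and “the last two” stages, and the HD2900XT is left out because its exact split is not described.

```python
# Stage names are an assumption (the commonly cited H.264/VC-1 decode steps);
# the article itself only talks about "the first" and "the last two" stages.
STAGES = ("entropy decode", "inverse transform", "motion compensation", "deblocking")

def stages_on_cpu(gpu, codec="H.264"):
    """Decode stages left to the CPU, following the article's description."""
    if gpu in ("G7X", "G80"):
        return STAGES[:2]                  # GPU only assists with the last two stages
    if gpu in ("G84", "G86"):
        return STAGES[:1] if codec == "VC-1" else ()
    if gpu in ("HD2400", "HD2600"):
        return ()                          # UVD handles the entire decode process
    raise ValueError(f"not covered by the article: {gpu}")

for gpu in ("G80", "G84", "HD2600"):
    for codec in ("H.264", "VC-1"):
        print(gpu, codec, stages_on_cpu(gpu, codec) or "nothing on the CPU")
```

Entropy decoding (CABAC in particular) is the stage that weighs most heavily on the CPU, which is why taking it off the CPU matters most for battery life and low-end systems.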

One benefit of UVD is that, since the CPU is used less, overall power consumption goes down. Laptop users will welcome this, as AMD claims it means you will be able to watch an HD-DVD or Blu-ray movie on a single charge.

In addition to the UVD, the HD2400 and HD2600 have custom logic for Avivo Video Post Processing (AVP). It is capable of deinterlacing with edge enhancement, vertical and horizontal scaling, and color correction, all on HD content.
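AMD does not say which deinterlacing algorithm AVP uses. As an illustration of what edge-aware deinterlacing means, here is edge-based line averaging (ELA), a classic textbook technique, swapped in purely as an example: each missing line is interpolated along the direction where the lines above and below match best, instead of always straight down.

```python
def ela_deinterlace(field):
    """Rebuild a frame from one field with edge-based line averaging (ELA).

    field is a list of rows from one field. Each missing row between two field
    rows is interpolated pixel by pixel along whichever direction (vertical or
    one of the two diagonals) has the smallest difference between the row above
    and the row below, so diagonal edges are followed instead of smeared.
    """
    frame = []
    for above, below in zip(field, field[1:]):
        frame.append(list(above))
        w = len(above)
        row = []
        for x in range(w):
            best_diff, best_val = None, None
            for d in (0, -1, 1):               # vertical first, so it wins ties
                xa, xb = x + d, x - d          # pixels on a line through (x, missing row)
                if 0 <= xa < w and 0 <= xb < w:
                    diff = abs(above[xa] - below[xb])
                    if best_diff is None or diff < best_diff:
                        best_diff, best_val = diff, (above[xa] + below[xb]) / 2
            row.append(best_val)
        frame.append(row)
    frame.append(list(field[-1]))
    return frame

field = [[0, 0, 0, 1, 1],
         [0, 1, 1, 1, 1]]
print(ela_deinterlace(field)[1])   # [0.0, 0.0, 1.0, 1.0, 1.0]
```

Plain vertical averaging would give [0.0, 0.5, 0.5, 1.0, 1.0] for the missing line, turning the sharp diagonal edge into a grey smear; following the edge keeps it crisp.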

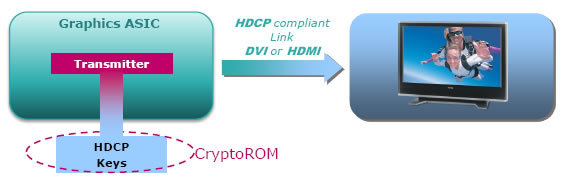

Digital Content Protection

Like it or not, the simple fact is that to play back protected video content you need hardware that “follows the rules”. On the PC this means that each link in the chain from the optical drive to the screen needs to be “protected” so that no one can access the video and audio stream at any stage. For video cards it means they need to support HDCP, over either HDMI or DVI. Until now, HDCP-compliant solutions have stored the encryption key table on an external CryptoROM. The downside is that this adds extra cost to the boards, and you cannot be sure all board makers will make their cards HDCP-compliant.

This is how HDCP has been implemented until now

On the HD2000, AMD has chosen to put the HDCP encryption key table inside the GPU instead. This means board makers no longer need to add an extra chip to the board, which both lowers cost and ensures that all HD2000 boards will be fully HDCP-compliant.

HDCP on HD2000

In addition, the HD2000 cards all have as many HDCP ciphers as they have output links. This means you will be able to run HD content at the native resolution of dual-link panels (no need to drop the desktop resolution to HD or single-link).

HDMI

The HDMI-connector has basically taken over the TV-market. It is easy to understand why. Instead of having to use different cables for video and audio, HDMI combines everything into one cable. The connector itself also is much smaller and more convenient than a DVI-connector.

The HD2000 of course supports HDMI, albeit ‘only’ v1.2 (NVIDIA supports 1.3). I googled a bit on the differences between v1.2 and v1.3, and this is what I found:

- Increases single-link bandwidth to 340 MHz (10.2 Gbps).

- Optionally supports 30-bit, 36-bit, and 48-bit xvYCC with Deep Color, or over one billion colors, up from 24-bit sRGB or YCbCr in previous versions.

- Incorporates automatic audio syncing (lip sync) capability.

- Supports output of Dolby TrueHD and DTS-HD Master Audio streams for external decoding by AV receivers. TrueHD and DTS-HD are lossless audio codec formats used on HD DVDs and Blu-ray Discs. If the disc player can decode these streams into uncompressed audio, then HDMI 1.3 is not necessary, as all versions of HDMI can transport uncompressed audio.

- Availability of a new mini connector for devices such as camcorders.

Source: Wikipedia
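The 10.2 Gbps figure in the list follows directly from the TMDS link: three data channels, each sending a 10-bit symbol per TMDS clock. The sketch below also uses two values that are not from the article: the 165 MHz maximum TMDS clock of HDMI 1.2 and the standard 148.5 MHz pixel clock of 1080p60.

```python
TMDS_CHANNELS = 3      # HDMI carries pixel data on three TMDS data channels
BITS_PER_SYMBOL = 10   # TMDS sends each 8-bit value as a 10-bit symbol

def link_gbps(tmds_clock_mhz):
    """Raw single-link bit rate in Gbit/s at a given TMDS clock."""
    return tmds_clock_mhz * 1e6 * TMDS_CHANNELS * BITS_PER_SYMBOL / 1e9

def tmds_clock_mhz(pixel_clock_mhz, bits_per_pixel=24):
    """Deep Color sends more bits per pixel, so the TMDS clock scales up with depth."""
    return pixel_clock_mhz * bits_per_pixel / 24

print(link_gbps(165))             # 4.95 (HDMI 1.2 maximum TMDS clock: 165 MHz)
print(link_gbps(340))             # 10.2 (HDMI 1.3 maximum TMDS clock: 340 MHz)
print(tmds_clock_mhz(148.5, 36))  # 222.75: 1080p60 at 36-bit Deep Color exceeds 165 MHz
```

In other words, the extra bandwidth in v1.3 mainly matters for Deep Color; standard 24-bit 1080p60 fits comfortably within what HDMI 1.2 can carry.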

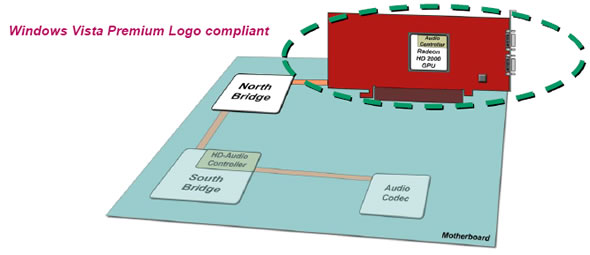

The “correct” way to support HDMI with both sound and video in Vista is to make sure there is no unprotected path for either. Some current solutions use an extra cable between the video card and the motherboard, but that is actually not allowed by Vista. Right now Vista seems to accept it anyway, but if Microsoft were to enforce the protected path requirement, you could run into issues when trying to get sound over HDMI.

On the HD2000, AMD has built an audio controller into the GPU. The controller still needs an audio codec on the motherboard, but there is no need for an extra cable or connection between them. Everything is handled over the north and south bridges, making the cards fully compliant with Vista.

The HD Audio system supports the following:

- Microsoft UAA driver (Vista)/ AMD driver (XP)

- 32kHz, 44.1kHz, 48kHz 16-bit PCM stereo

- AC3 (5.1) compressed multi-channel audio streams such as Dolby Digital & DTS

Those of you who were paying attention will have noticed that all the images of the cards show DVI connectors. That is right. Instead of putting an HDMI connector on the cards, AMD has opted to include a DVI-to-HDMI adapter.

This is not your everyday cheap $5 adapter though. Those usually only carry the video portion of the stream and not any audio. The adapter AMD includes takes care of both audio and video.

RADEON HD2000 – THE LINEUP

I briefly introduced the cards at the beginning of this article. Now it is time to look a bit more closely at their specifications.

| | ATI Radeon HD 2400 | ATI Radeon HD 2600 | ATI Radeon HD 2900 |
|---|---|---|---|
| Stream Processing Units | 40 | 120 | 320 |
| Clock Speed | 525-700 MHz | 600-800 MHz | 740 MHz |
| Math Processing Rate (Multiply-Add) | 42-56 GigaFLOPS | 144-192 GigaFLOPS | 475 GigaFLOPS |
| Pixel Processing Rate | 4.2-5.6 Gigapixels/sec | 14.4-19.2 Gigapixels/sec | 47.5 Gigapixels/sec |
| Triangle Processing Rate | 262-350 Mtri/sec | 600-800 Mtri/sec | 740 Mtri/sec |
| Texture Units | 4 | 8 | 16 |
| Typical Board Power | ~25W | ~45W | ~215W |
| Memory Frame Buffer | 256MB GDDR3, 128/256MB DDR2 | 256MB GDDR4, 256MB GDDR3, 256MB DDR2 | 512MB GDDR3 |
| Memory Interface Width | 64 bits | 128 bits | 512 bits |
| Memory Clock | 400-800 MHz | 400-1100 MHz | 828 MHz |
| Memory Bandwidth | 6.4-12.8 GB/sec | 12.8-35.2 GB/sec | 106 GB/sec |
| Transistors | 180 million | 390 million | 700 million |
| Process Technology | 65G+ | 65G+ | 80HS |
| Outputs | sVGA+DDVI+VO (HDMI adaptor) | D+DL+DVI (HDMI adaptor) | D+DL+DVI w/HDCP (HDMI adaptor) |
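The math and pixel rates in the table can be reproduced from the SIMD layout and clocks given earlier. The GFLOPS formula (one multiply-add, i.e. 2 FLOPs, per stream processing unit per clock) matches the table exactly for the HD 2400 and HD 2600. The pixel-rate rule (one pixel per 5-wide shader processor per clock) is an inference that happens to fit the numbers, not something AMD stated.

```python
# (SIMD arrays, stream processing units per SIMD, core clocks in MHz) from the text.
CHIPS = {
    "HD 2400": (2, 20, (525, 700)),
    "HD 2600": (3, 40, (600, 800)),
    "HD 2900": (4, 80, (740,)),
}

def peak_rates(simds, sps_per_simd, mhz):
    sps = simds * sps_per_simd
    gflops = sps * 2 * mhz / 1000       # one multiply-add (2 FLOPs) per SP per clock
    gpixels = (sps // 5) * mhz / 1000   # assumed: one pixel per 5-wide shader processor per clock
    return sps, gflops, gpixels

for name, (simds, per_simd, clocks) in CHIPS.items():
    for mhz in clocks:
        sps, gflops, gpix = peak_rates(simds, per_simd, mhz)
        print(f"{name} @ {mhz} MHz: {sps} SPs, {gflops:.1f} GFLOPS, {gpix:.2f} Gpixels/s")
```

At the listed 740 MHz the HD 2900 comes out at 473.6 GFLOPS and 47.36 Gpixels/s rather than the table’s 475 and 47.5, which suggests the table figures were computed from a clock slightly above the rounded 740 MHz.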

HD2400

We will start at the lower end of the scale. The HD2400Pro and HD2400XT are the cards positioned for the Value segment. The GPUs are made with a 65nm process. The recommended price is sub-$99, and the HD2400 will be released at the beginning of July.

Two different versions of the HD2400

While the specifications are pretty modest, the card can still boast much the same features as the other cards:

- DX10 support

- CFAA support

- Unified Shading Architecture

- ATI Avivo HD – provides smooth playback for HD-content

- Built-in 5.1 surround audio designed for an easy HDMI connection to big-screen TVs

- Tessellation unit

- The HD2400XT should also support Crossfire

The Radeon HD2400 is of course intended only for casual gamers. Its true potential lies in its low power usage and Avivo HD support. I can see these cards being put in many HTPCs.

HD2600

In the Performance segment we find two cards, the HD2600XT and the HD2600Pro. These GPUs are also built with the new 65nm process. The recommended price is $99-$199 (depending on the configuration). The HD2600 will be released at the beginning of July.

Look! No extra PCI-E power connector needed

These cards support the same features as the others:

- DX10 support

- CFAA support

- Unified Shading Architecture

- ATI Avivo HD – provides smooth playback for HD-content

- Built-in 5.1 surround audio designed for an easy HDMI connection to big-screen TVs

- Tessellation unit

- Native Crossfire support

These are the cards I think will sell best and bring in the money for AMD. On paper the performance seems very good for the asking price, even though a 256-bit interface would have been even nicer. Even more impressive is that these cards do not need any extra power. At the event we saw several HD2600-based cards, and they were all single-slot solutions without any additional power connector. I do not think it is far-fetched to expect a few fanless versions of the HD2600 in the future.

HD2900

Last but far from least we have the HD2900XT. Positioned in the Enthusiast segment of the market, this is the card that takes on the GeForce 8800GTS and GeForce 8800GTX. It is not built on the 65nm process; the older 80nm process was used instead. The recommended price is $399. The HD2900 is released on May 14th (so by the time you read this, it should already be available in shops).

Being top of the line, this GPU has all the new features of the HD2000 series:

- DX10 support

- 512 bit memory controller

- CFAA support

- Unified Shading Architecture

- ATI Avivo HD – provides smooth playback for HD-content (through the GPU and not UVD)

- Built-in 5.1 surround audio designed for an easy HDMI connection to big-screen TVs

- Tessellation unit

- Native Crossfire support

As AMD has packed so much power into the HD2900 GPU, it did not need a dedicated UVD (Unified Video Decoder) to offload the GPU. So while the CPU is still offloaded, the work is done by the GPU itself instead of a UVD.

The HD2900 is a dual-slot solution. The reference board is very similar to the X1950XT reference board; the biggest difference I have noticed is that the HD2900XT weighs more.

While the HD2400 and the HD2600 excel when it comes to low power consumption, the HD2900 unfortunately disappoints in that regard. AMD says the power consumption is around 225W, and as you will see, my own testing confirms this. This is also the first card I have seen that uses an 8-pin PCI-E power connector in addition to a regular 6-pin one. You can still plug a regular 6-pin connector into the 8-pin socket, but if you want to overclock, an 8-pin PCI-E power connector is required. Right now not many PSUs include one, and those that do cost a small fortune.
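A quick power-budget calculation shows why the 8-pin connector matters. The per-connector limits used here (75 W from the x16 slot, 75 W per 6-pin and 150 W per 8-pin connector) come from the PCI Express power specifications, not from the article.

```python
SLOT_W = 75        # PCI Express x16 graphics slot
SIX_PIN_W = 75     # 6-pin PCI-E auxiliary power connector
EIGHT_PIN_W = 150  # 8-pin PCI-E auxiliary power connector

def power_budget_w(*aux_connectors_w):
    """In-spec board power: slot power plus every auxiliary connector."""
    return SLOT_W + sum(aux_connectors_w)

print(power_budget_w(SIX_PIN_W, SIX_PIN_W))    # 225: 6-pin plugged into both sockets
print(power_budget_w(SIX_PIN_W, EIGHT_PIN_W))  # 300: 8-pin cable in the 8-pin socket
```

With around 225W drawn at stock clocks, two 6-pin cables leave essentially no in-spec headroom, which would explain why AMD requires the 8-pin connector for overclocking.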

If the power consumption is disappointing, the price is not. At $399 it sits squarely at the same price as the cheapest 640MB GeForce 8800GTS cards. Considering that this is the recommended price, I think you can expect to find it even cheaper in a few months.

TESTING – HD2900XT

AMD provided us with an HD2900XT for benchmarking. The card was benchmarked on the following system:

| Review System | |
|---|---|
| CPU | Intel Core 2 Duo |
| Motherboard | EVGA 680i SLI (nForce 680i) |
| Memory | Corsair XMS2 Xtreme 2048MB DDR2 XMS-1066 |
| HDD | 1×320 GB SATA |
| Video cards | ASUS 8800GTS, Sparkle Calibre 8800GTX, Reference HD2900XT, Reference X1950XT |
| Software | Windows Vista (32 bit), Windows XP SP2 |
| Drivers | HD2900XT: 8.37; X1950XT: 7.4; 8800GTS/GTX: 158.22 (XP) / 158.24 (Vista) |

The following games and synthetic benchmarks were used to test the cards:

| Software | Settings |
|---|---|
| 3DMark05 | Default |
| 3DMark06 | Default, 1600×1200, 1920×1200, Feature tests |
| Quake4 | Ultra Setting, 4xAA/16xAF |
| Prey | Ultra Setting, 4xAA/16xAF |
| Company of Heroes SP Demo | All settings to max, in-game AA turned on |
| Supreme Commander | All settings to max, 4xAA/16xAF |
| HL2: Lost Coast | Highest Quality, 4xAA/16xAF |
| PowerDVD Ultra | HD-DVD playback |

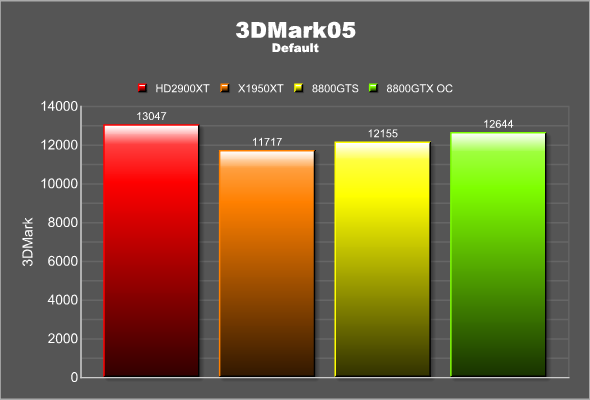

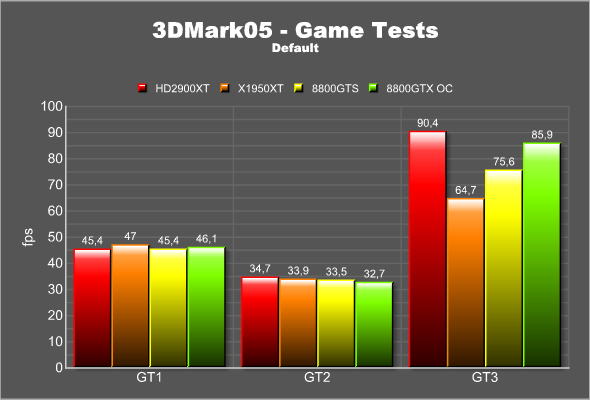

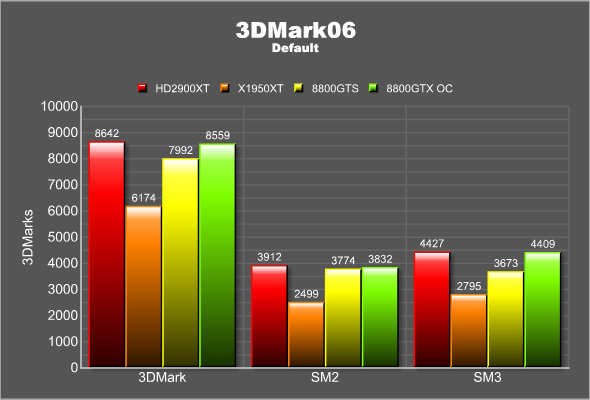

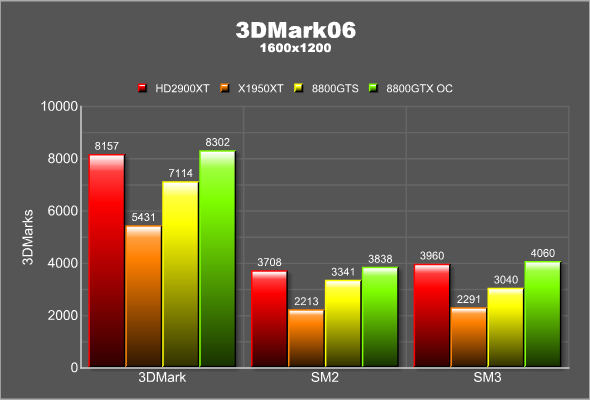

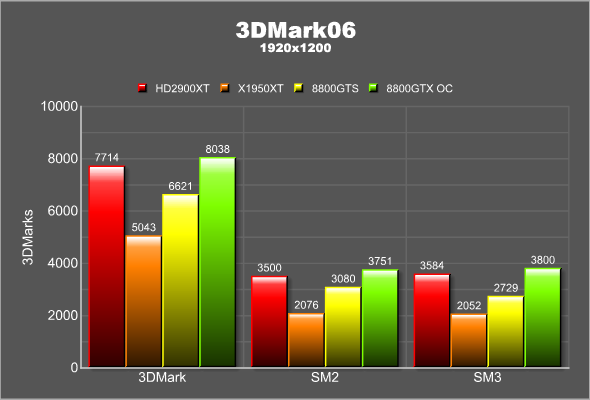

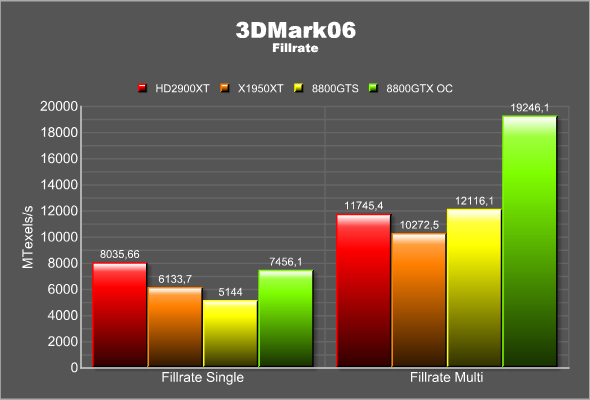

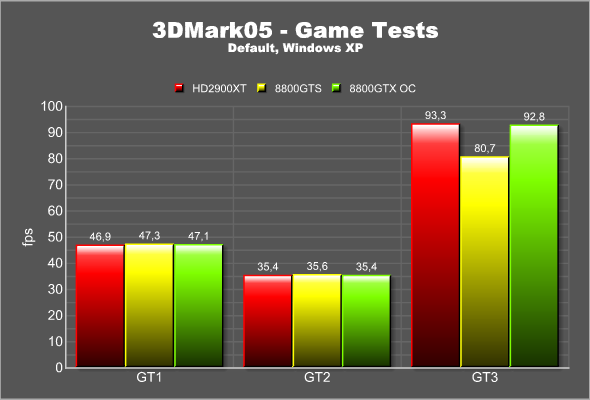

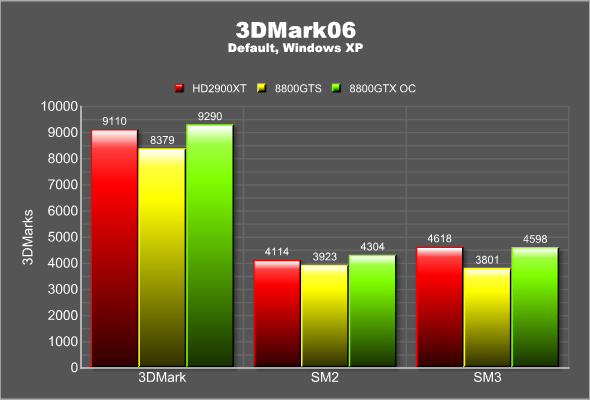

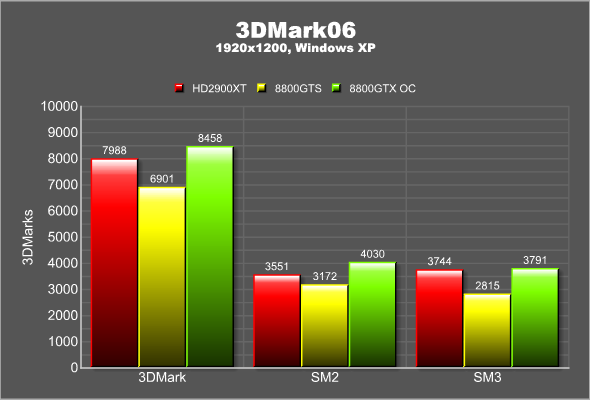

TESTING – 3DMARK05 AND 3DMARK06

These two synthetic benchmarks from Futuremark should be familiar to everyone by now. They let us test the cards in a variety of scenarios created to mimic situations the cards can encounter in games.

3DMark05

The benchmark runs through three different game tests and then presents a score derived from the results of each of them. This benchmark requires support for Shader Model 2.0. If you are interested in what each game test actually tests, take a look at the explanation over at Futuremark.

3DMark06

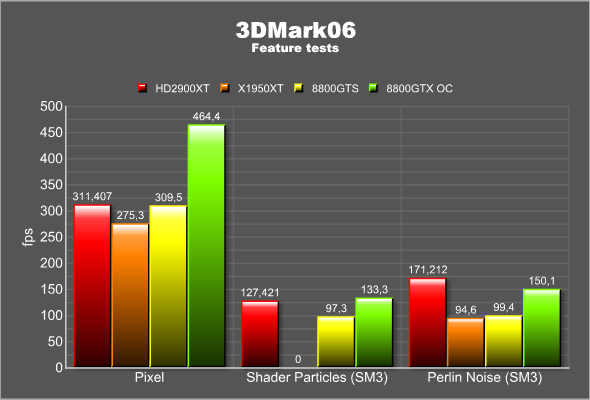

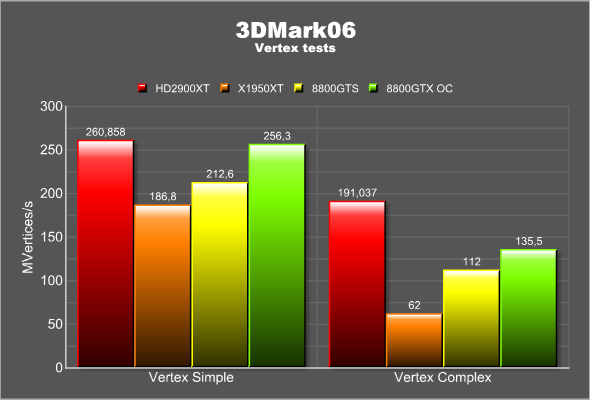

Released last year, the latest version from Futuremark runs through a set of gaming benchmarks as well as some CPU benchmarks. A combined score is calculated from the individual results. Futuremark has also posted a more detailed explanation of what each test does.
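The way the individual results are combined boils down to a weighted harmonic mean in which the graphics tests carry far more weight than the CPU tests. The sketch below uses the constants as I remember them from Futuremark's 3DMark06 whitepaper; treat them as an assumption and check Futuremark's own explanation before relying on exact numbers.

```python
import math

# Weighting constants as given in Futuremark's 3DMark06 whitepaper, to the
# best of my knowledge; verify them against Futuremark's documentation.


def sm2_score(gt1_fps, gt2_fps):
    """SM2.0 graphics score from the two SM2.0 game tests."""
    return 120 * 0.5 * (gt1_fps + gt2_fps)


def hdr_sm3_score(gt1_fps, gt2_fps):
    """HDR/SM3.0 graphics score from the two HDR/SM3.0 game tests."""
    return 100 * 0.5 * (gt1_fps + gt2_fps)


def cpu_score(cpu1_fps, cpu2_fps):
    """CPU score: scaled geometric mean of the two CPU tests."""
    return 2500 * math.sqrt(cpu1_fps * cpu2_fps)


def overall_score(sm2, hdr_sm3, cpu):
    """Combined score: weighted harmonic mean of graphics (1.7) and CPU (0.3)."""
    graphics = 0.5 * (sm2 + hdr_sm3)
    return 2.5 * 1.0 / ((1.7 / graphics + 0.3 / cpu) / 2)
```

Because of the 1.7 versus 0.3 weighting, doubling the graphics score moves the overall result far more than doubling the CPU score, which is why the overall number still works as a yardstick for video cards tested on the same system.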

In addition to the general test, 3DMark06 also features special tests that allow you to benchmark more specific aspects of your video card. Again the information about each individual test can be found at Futuremark.

TESTING – SUPREME COMMANDER AND COMPANY OF HEROES

These two excellent RTS games are not only fun to play, they also demand a lot from the video cards.

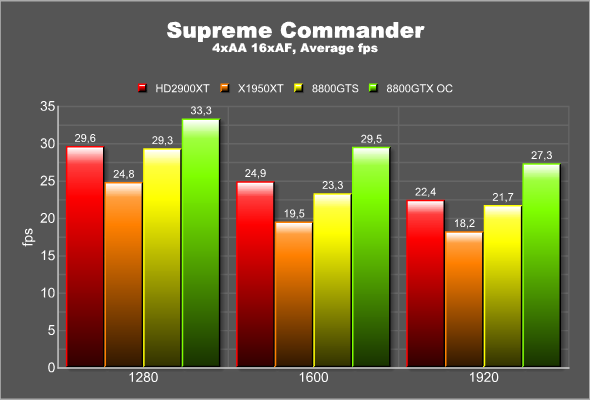

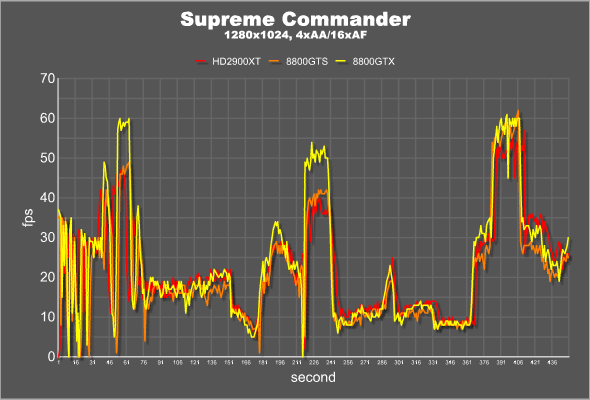

Supreme Commander

– 4xAA/16xAF

– Everything set to the highest quality setting

– Built-in benchmark used. It runs a map with four opponents blasting each other for about 7.5 minutes.

The HD2900XT performs very similarly to the GeForce 8800GTS, beating it by a few frames per second at the higher resolutions. Looking at a graph of the actual fps during the whole benchmark, recorded with Fraps, it is obvious that there really is not much difference between the cards in this benchmark. The game relies heavily on the CPU, and only in a few instances can you see a definite difference between the cards.
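Turning a Fraps recording into an fps-over-time graph is mostly bookkeeping. The sketch below assumes a frametimes-style CSV with a header row and the cumulative time in milliseconds in the second column; that layout is an assumption, so check the header of your own log. Frames are then counted per whole second.

```python
import csv
import io
from collections import Counter


def read_frametimes(csv_text):
    """Parse cumulative frame timestamps (ms) from a frametimes-style CSV.

    Assumes a header row and the timestamp in the second column.
    """
    rows = csv.reader(io.StringIO(csv_text))
    next(rows)  # skip the header row
    return [float(row[1]) for row in rows if row]


def fps_per_second(frame_times_ms):
    """Count how many frames were presented in each whole second."""
    if not frame_times_ms:
        return []
    start = frame_times_ms[0]
    buckets = Counter(int((t - start) // 1000) for t in frame_times_ms)
    return [buckets[second] for second in range(max(buckets) + 1)]


if __name__ == "__main__":
    log = "Frame, Time (ms)\n1, 0.0\n2, 400.0\n3, 900.0\n4, 1300.0\n"
    print(fps_per_second(read_frametimes(log)))  # [3, 1]
```

Plotting that list for each card on the same axes gives the kind of graph described above; dips that show up on every card at the same moment point to the CPU rather than the video card.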

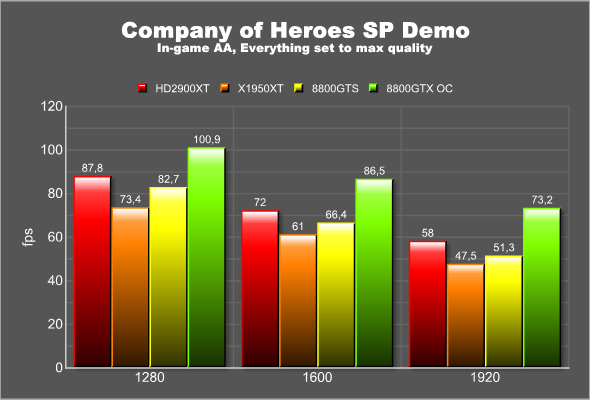

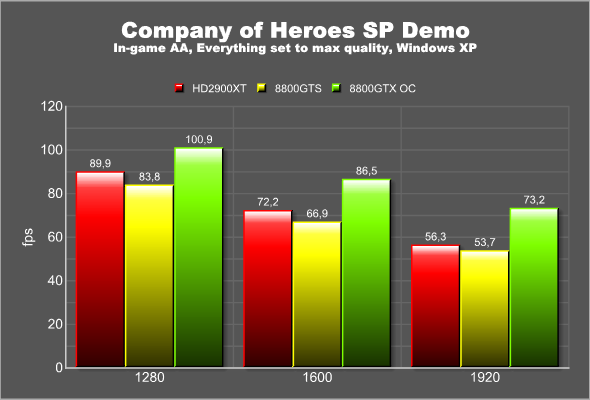

Company of Heroes Single Player Demo

– AA turned up to max inside the game

– All settings set to the highest quality setting

TESTING – HALF-LIFE 2: LOST COAST AND CALL OF JUAREZ DX10 DEMO

For our second batch of games I have selected Half-Life 2: Lost Coast and the DX10 demo of Call of Juarez. The demo was supplied by Techland and AMD, but unfortunately it has a bug that prevents NVIDIA cards from running it with AA turned on. As we do not yet have a patch or an updated demo, I decided to run it without AA so that we can at least get some preliminary results.

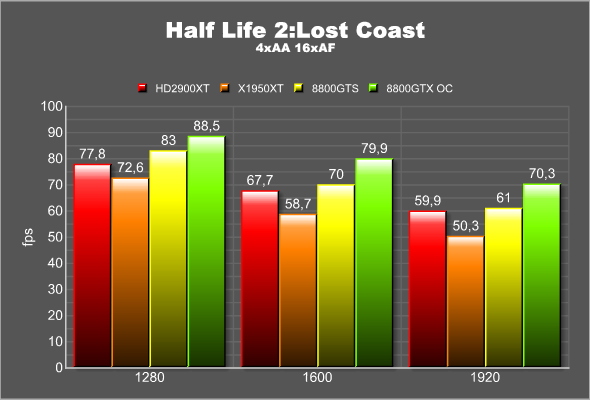

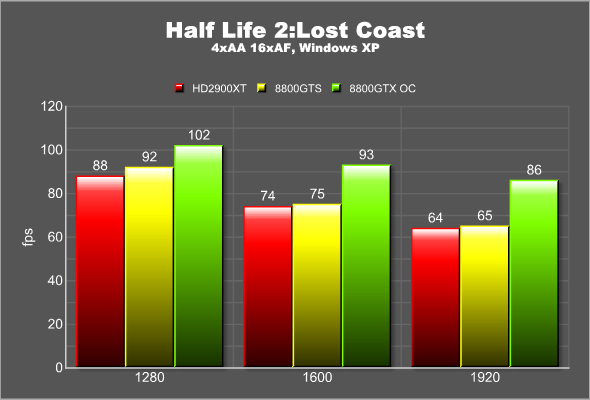

Half-Life 2: Lost Coast

– 4xAA/16xAF

– All settings set to the highest quality settings

– HDR turned on

– The HL2:LC benchmark utility from HoC was used

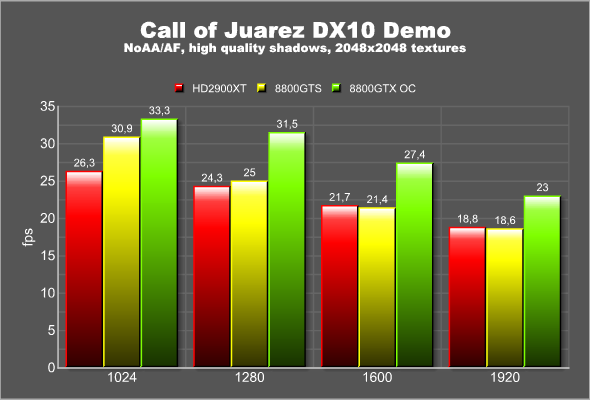

Call of Juarez DX10 Demo

– No AA/AF

– Shadow quality: Highest

– Textures: 2048×2048

The Call of Juarez demo does not run especially fast, even on these high-end cards. I can't help but wonder how well optimized the code really is. While the HD2900XT falls behind the 8800GTS at the lower resolutions, it catches up as we increase the resolution.

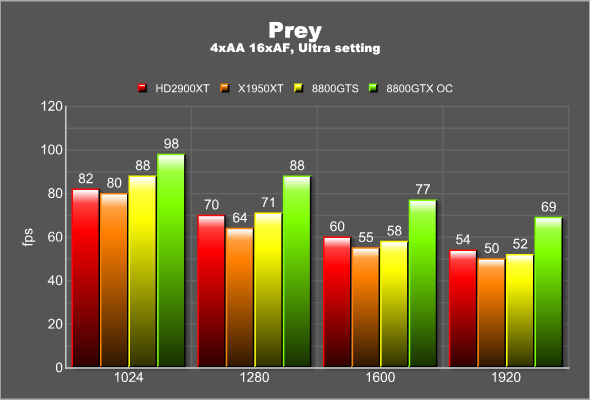

TESTING – QUAKE 4 AND PREY

Even though Direct3D is used in most games these days, some games still use OpenGL. For this article I have tested two of them: Quake 4 and Prey.

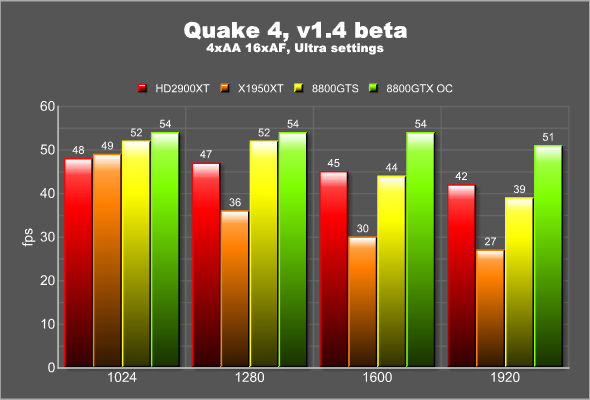

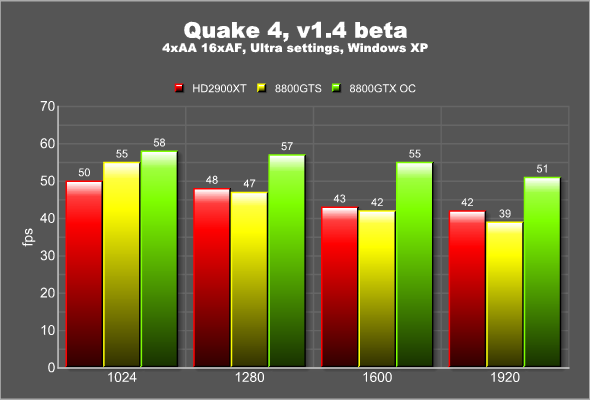

Quake 4

– 4xAA/16xAF

– Ultra setting

Prey

– 4xAA/16xAF

– Ultra setting

TESTING – WINDOWS XP PERFORMANCE

All the previous results have come from Windows Vista 32 bit. Since a lot of people are still on Windows XP and have no intention of switching in the near future, I also ran some benchmarks under Windows XP. Overall the picture is similar to Vista, albeit with slightly lower results.

TESTING – HD MEDIA PLAYBACK, POWER CONSUMPTION

After all the games, it is time to look at two other types of performance: HD media playback and power consumption.

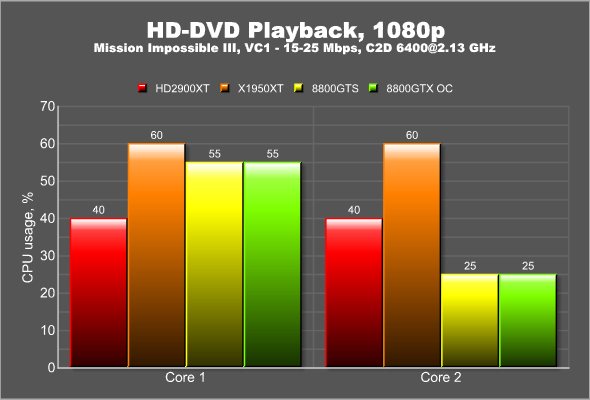

HD Media playback

As high-definition media becomes more common, it is important that the cards we use can handle it without relying too much on the CPU. I used PowerDVD Ultra and an Xbox 360 HD-DVD drive to test playback of two HD-DVD movies: Mission Impossible III and King Kong. Mission Impossible III was played with the commentaries turned on. According to PowerDVD Ultra, both movies run at around 15-25 Mbps with occasional spikes towards 30 Mbps. I measured the CPU usage by watching the Performance view in the Task Manager during roughly 15 minutes of each movie. This gives a rough estimate of the CPU usage while the movies were running. As I got similar results from both movies, I chose to plot only the results from Mission Impossible III below.

The HD2900XT performs well and has no problems playing back the movie, even at 1080p. CPU usage stays low at around 40% on both cores. In comparison, the X1950XT taxes the CPU a bit more, and you end up at around 60% usage on both cores. The GeForce 8800 cards behave a bit differently. While one core runs at around 55%, the other only runs at 25%, which means that overall they also use only about 40% of the whole CPU. This is of course a pretty rough estimate, and in the future I plan to record the exact usage of both cores during the whole movie and plot them against each other to get a better view of CPU usage over a longer period of time.
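The "about 40% overall" figure for the GeForce cards is simply the mean of the per-core numbers: (55% + 25%) / 2 = 40%. For the more thorough per-core recording, a small helper along these lines could summarize the sampled readings. How the samples are collected (for example by logging from Windows' Performance Monitor) is left open, and the function name is illustrative.

```python
def summarize_cpu_samples(samples):
    """Summarize per-core CPU usage samples.

    samples: a list of readings, each a list with one percentage per core,
    e.g. [[55, 25], [57, 24], ...].
    Returns (average per core, average over the whole CPU).
    """
    if not samples:
        raise ValueError("need at least one sample")
    n_cores = len(samples[0])
    per_core = [sum(s[i] for s in samples) / len(samples) for i in range(n_cores)]
    overall = sum(per_core) / n_cores
    return per_core, overall


if __name__ == "__main__":
    # A single reading resembling the GeForce 8800 result described above.
    print(summarize_cpu_samples([[55, 25]]))  # ([55.0, 25.0], 40.0)
```

Keeping the per-core averages alongside the overall figure matters because, as seen here, two cards can end up at the same overall usage while loading the individual cores very differently.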

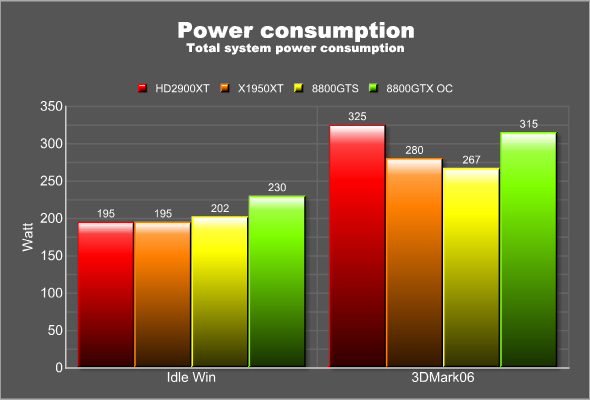

Power Consumption

Maybe it is because I live in my own house now and have to pay the electric bills myself, but while I never cared about power consumption before, I am now starting to take more notice of it. To compare the power consumption of these high-end cards, I measured it at the wall socket with the Etech PM300 Energy Meter, which measures total system power usage. I first let the system sit at the Windows desktop for 1 hour and recorded the power usage. I then started 3DMark06 at 1920×1200 and recorded the peak power usage during the benchmark.

A little reminder of what I have in the system:

| Review System | |
|---|---|
| CPU | Intel Core 2 Duo |
| Motherboard | EVGA 680i SLI (nForce 680i) |
| Memory | Corsair XMS2 Xtreme 2048MB DDR2 XMS-1066 |
| HDD/Optical | 1×320 GB SATA; external USB DVD/RW (powered separately, so not included in the measured power usage) |
| Video cards | ASUS 8800GTS, Sparkle Calibre 8800GTX, Reference HD2900XT, Reference X1950XT |
| PSU | High Power 620W |
| Software | Windows Vista (32 bit), Windows XP SP2 |
| Drivers | HD2900XT: xxx; X1950XT: xxx; 8800GTS/GTX: xxx |

Note – the Sparkle 8800GTX that I am using is factory overclocked. It also has a TEC cooler, which draws some extra power through a separate Molex connector.

At idle the HD2900XT draws just as little power as the other cards. In fact, I was surprised to see the Sparkle 8800GTX use 35W more at idle; maybe the TEC cooler needs it. When running the cards at full load, things change. As expected, the HD2900XT draws the most power, although the 8800GTX is not that far behind, and total system power usage peaks at 325W.

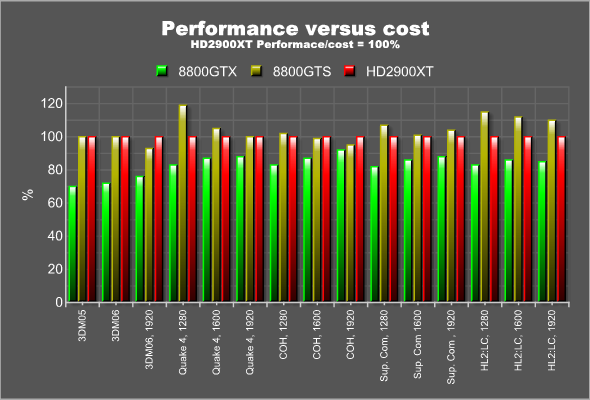

TESTING – PERFORMANCE PER COST RATIO

In addition to the regular benchmarks, it is also interesting to see what you really get for your hard-earned cash when you buy one of these cards. Which card gives you the most “bang for the buck”?

The first problem when determining this is deciding which price to use for each card. After looking at prices for the three cards in both the US and Europe, I settled on these prices:

HD2900XT: $399

GeForce 8800GTS 640 MB: $370

GeForce 8800GTX: $550

As far as I can tell, these are roughly what you will have to pay for each card.


The chart was created as follows: the result in each of the included benchmarks was divided by the card's cost. The HD2900XT's performance/cost value was then set to 100% and the other values expressed relative to it. A score below 100% therefore means the card gives you less performance for your money, while a score above 100% means it gives you more performance for your dollar (or euro).
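The normalization described above is easy to reproduce for a single benchmark. A minimal sketch, using the prices listed earlier; the benchmark numbers in the example are hypothetical placeholders, not my measured results, and the function name is illustrative.

```python
def perf_per_cost_index(results, prices, reference):
    """Performance per dollar for each card, relative to a reference card.

    results: {card: benchmark result (fps or points)} for one benchmark.
    prices:  {card: price in USD}.
    Returns {card: percentage}, where the reference card is 100%.
    """
    value = {card: results[card] / prices[card] for card in results}
    ref_value = value[reference]
    return {card: 100.0 * v / ref_value for card, v in value.items()}


PRICES = {"HD2900XT": 399, "8800GTS 640MB": 370, "8800GTX": 550}

if __name__ == "__main__":
    # Hypothetical results: all three cards score the same 60 fps.
    same_fps = {card: 60.0 for card in PRICES}
    for card, pct in perf_per_cost_index(same_fps, PRICES, "HD2900XT").items():
        print(f"{card}: {pct:.1f}%")
```

With identical results the cheaper 8800GTS lands at about 108% and the 8800GTX at about 73%, so at these prices the 8800GTX has to be roughly 38% faster than the HD2900XT (550 / 399 ≈ 1.38) just to match its value.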

Overall it is obvious that the real battle is between the 8800GTS and the HD2900XT. In this type of comparison, the 8800GTX simply does not deliver enough extra performance to justify its extra cost. Overall the 8800GTS offers slightly better value than the HD2900XT. The 8800GTS has been out for a longer period and its prices have had a chance to come down. The HD2900XT, on the other hand, is a brand-new card that enters at approximately the same price. If HD2900XT prices start to go down as well, the chart above can change pretty quickly.

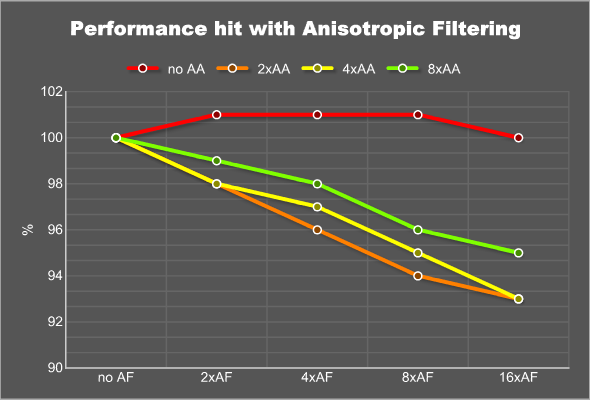

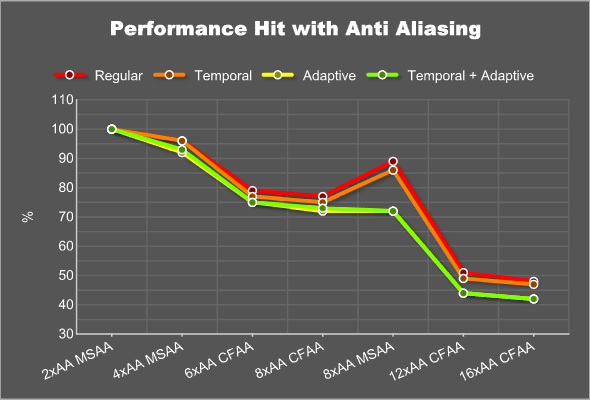

TESTING – AA AND AF PERFORMANCE

As we now have a wealth of different anti-aliasing and anisotropic filtering settings to turn on and off, I wanted to see how they affect performance. All the tests were run at 1280×1024 in Half-Life 2: Lost Coast using HoC's benchmark utility.

Please note that the scale of the chart runs from 90% to 102%. Without any AA there is basically no performance hit from enabling anisotropic filtering. With the different anti-aliasing settings there is a slight drop in performance as we go up in anisotropic filtering levels. In the end you only lose 5-7%, which is nothing compared to the increase in image quality.
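Each point on the chart is a relative figure rather than a raw frame rate. Assuming each AF level is compared against the no-AF run at the same AA setting (my reading of how the chart is built), the conversion looks like this; values slightly above 100% most likely just mean a run was marginally faster than its baseline. The frame rates below are hypothetical.

```python
def relative_performance(fps_by_setting, baseline):
    """Express each setting's frame rate as a percentage of the baseline setting."""
    base = fps_by_setting[baseline]
    return {setting: 100.0 * fps / base for setting, fps in fps_by_setting.items()}


if __name__ == "__main__":
    # Hypothetical frame rates for one AA level, not measured results.
    runs = {"0xAF": 80.0, "4xAF": 79.0, "8xAF": 77.5, "16xAF": 75.0}
    for setting, pct in relative_performance(runs, "0xAF").items():
        print(f"{setting}: {pct:.1f}%")
```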

This chart instead shows the performance hit when enabling the various AA levels as well as the different AA enhancements. Regardless of whether we turn on any of the enhancements (temporal and adaptive AA) or keep them off, we see a fairly similar drop in performance. The only odd result is that at 8xMSAA, with regular AA or with temporal AA turned on, we get a better score than with 8xCFAA, which is nothing more than 4xAA with a wide custom filter. I would have expected the opposite.

TESTING – IMAGE QUALITY

As AMD has introduced a new anti-aliasing method, CFAA, it is interesting to see how well it works compared to their earlier methods as well as NVIDIA's solutions. To compare the two GPUs, I took several screenshots with both the HD2900XT and the GeForce 8800GTX in Half-Life 2: Lost Coast at 1280×1024 with different AA and AF settings.

Anti Aliasing comparison

To compare the different AA settings, I cut out a part of each image and zoomed in 200% so that we can more easily see the pixels. Temporal AA and Transparent AA were turned on.

First let us compare the different AA-settings on the HD2900XT.

No AA

2X MSAA

4X MSAA

6X CFAA (2XAA + wide filter)

6X CFAA (4xAA + narrow filter)

8X CFAA (4XAA + wide filter)

8X MSAA

12X CFAA (8XAA + narrow filter)

16X CFAA (8XAA + wide filter)

Next let us compare the GeForce 8800GTX and the HD2900XT.

HD2900XT 4xAA

8800GTX 4xAA

HD2900XT 8xCFAA

HD2900XT 8xAA

8800GTX 8xAA

HD2900XT 16xCFAA

8800GTX 16xAA

As you might recall from earlier in this article, NVIDIA has claimed that CFAA is nothing more than their old Quincunx AA method, which they abandoned several years ago as it was considered too blurry. If you take a look at the images above, you will notice that CFAA does indeed blur the image a bit. This can be seen on the stairs and the mountain. When you look at the full, un-zoomed image, however, it is far less obvious, and I actually did not notice it myself until I zoomed in and compared the images side by side. The important thing for me personally is that the image was very nice and free from “shimmer” and other aliasing artifacts.

Note! If you compare the original images from the HD2900XT and the 8800GTX, you will notice that there is more fog on the HD2900XT. This is a known issue.

There is a known issue with Half Life 2 and the way fog is being rendered on the ATI Radeon HD 2900 XT. This is a common problem to all DirectX 10 hardware and involves the order of operations when fogging into an sRGB render target and the way gamma is applied. The order of operations on DirectX 10 hardware results in more accurate gamma than in DirectX 9, and Valve had adjusted their algorithm accordingly. AMD is working with Valve on this and will implement a fix for this in a future driver release.
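The effect of the order of operations can be reproduced numerically. The sketch below blends a dark surface toward a light fog color in two ways: directly on the sRGB-encoded values, roughly what many DX9-class GPUs do when blending into an sRGB render target, and in linear space with re-encoding afterwards, which is the behavior DirectX 10 hardware is expected to have. It uses the standard sRGB transfer functions on a single channel and is an illustration only, not Valve's fog shader; the sample values are arbitrary.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel value (0..1) to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode one linear channel value (0..1) with the sRGB curve."""
    return c * 12.92 if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def fog_blend_gamma_space(surface, fog, f):
    """Blend on the encoded values (DX9-style sRGB blending)."""
    return surface * (1.0 - f) + fog * f


def fog_blend_linear_space(surface, fog, f):
    """Decode, blend in linear light, re-encode (DX10-style sRGB blending)."""
    lin = srgb_to_linear(surface) * (1.0 - f) + srgb_to_linear(fog) * f
    return linear_to_srgb(lin)


if __name__ == "__main__":
    surface, fog, amount = 0.1, 0.8, 0.5  # dark surface, light fog, 50% fog
    print(fog_blend_gamma_space(surface, fog, amount))
    print(fog_blend_linear_space(surface, fog, amount))
```

With these values the gamma-space blend gives 0.45 while the linear-space blend gives roughly 0.59, so the same fog factor looks noticeably denser when blending happens in linear space. A fog algorithm tuned for one ordering will therefore look different under the other, which is why a driver or game-side fix is needed.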

Anisotropic Filtering

Next we compare the anisotropic filtering between the two cards. Here I just cut out a smaller part of the larger image without any zooming.

HD2900XT No AF

8800GTX No AF

HD2900XT 16xAF

8800GTX 16xAF

Both cards produce a very nice image with 16xAF turned on. As the performance loss with 16xAF is minimal, as seen earlier in this article, I see no reason ever to use a lower setting.

THE FUN STUFF – VALVE AND MORE

In addition to getting our heads filled with technical info about the new GPUs, we were also shown some fun stuff that is hard to fit into any of the other pages in this article.

Valve

Jason Mitchell visited the event and gave a presentation on character performance in Source. We were shown some videos showing how character animation, specifically facial expressions, has progressed from the original Half-Life, through Half-Life 2 and up to Team Fortress 2. Below are some of the videos we were shown. The Team Fortress 2 video is really funny. I can't wait for that game to be released!

Small trailer introducing Heavy Guy in Team Fortress 2

HL1 had skeletal animation and 1 bone for the jaw

HL2 had additive morphing and 44 facial morphs

Team Fortress 2 has combinatorial morphing and 663 facial morphs
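The difference between the HL2 and TF2 approaches can be sketched with morph targets. In additive morphing each facial shape adds its weighted vertex offsets independently; a combinatorial scheme additionally applies corrective shapes when certain shapes are active together, so combinations such as a smile with an open jaw can look right. This is a generic illustration under simplifying assumptions (one coordinate per vertex, correctives weighted by the product of the two shape weights, hypothetical shape names), not Valve's Source implementation.

```python
def additive_morph(base, deltas, weights):
    """Additive morphing: base shape plus each target's weighted offsets."""
    out = list(base)
    for name, w in weights.items():
        for i, d in enumerate(deltas[name]):
            out[i] += w * d
    return out


def combinatorial_morph(base, deltas, weights, correctives):
    """Additive morphing plus corrective shapes for pairs of targets.

    Each corrective is weighted by the product of the two weights, so it only
    kicks in when both shapes are active (an assumed, common convention).
    """
    out = additive_morph(base, deltas, weights)
    for (a, b), delta in correctives.items():
        w = weights.get(a, 0.0) * weights.get(b, 0.0)
        for i, d in enumerate(delta):
            out[i] += w * d
    return out


if __name__ == "__main__":
    base = [0.0, 0.0]  # two vertices, one coordinate each
    deltas = {"smile": [1.0, 0.0], "jaw_open": [0.0, 1.0]}
    fix = {("smile", "jaw_open"): [-0.5, 0.0]}  # hypothetical corrective
    both = {"smile": 1.0, "jaw_open": 1.0}
    print(additive_morph(base, deltas, both))  # [1.0, 1.0]
    print(combinatorial_morph(base, deltas, both, fix))  # [0.5, 1.0]
```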

As you may know by now, Valve’s Black Box (Team Fortress 2, Portal, Half-Life 2: Episode Two) is bundled with the HD2900XT (or at least a voucher for it).

Techland – Call of Juarez

Techland held a short presentation on their DX10 update of last year's Call of Juarez. Below is a video that shows some of the differences between the two versions.


Ruby

Of course AMD had a new Ruby demo to show off some of the new features in their GPUs. Below are some screenshots from the demo.


While the actual demo has still not been released to us, I can at least provide you with a recorded movie of it. In addition to the original 200 MB .mov file, I also encoded it to a smaller 114 MB WMV file.

CONCLUSION

At every launch you expect to see new GPUs that beat the competition, if only for a little while. In fact, at every launch I’ve been to, both ATI and NVIDIA have stressed how important the absolute high end is for driving sales of the cards that actually bring in the money: the performance and value segments. It was therefore a bit odd to leave the Tunis event realizing that AMD this time has chosen not to compete with the GeForce 8800GTX or the GeForce 8800 Ultra. In fact, when talking to AMD people, they said several times that they did not want to bring out cards costing more than $500-$600, as so few people are going to buy them. Is there a genuine change in strategy at AMD, or are they just trying to explain away the fact that they could not release the X2900XTX with 1 GB of GDDR4 as we know they had intended at some point? That is something we will find out over the next year or so.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
