
Introduction

The high-end video card war continues with ATI’s latest GPU, the X1900 (R580). With three times as many pixel shader processors as the X1800XT and twice as many as the GeForce 7800GTX, ATI has released a real powerhouse. Even more important, the cards are already in stock at some online stores, which means this isn’t a paper launch.

Introducing the R580

We’ve heard a lot of rumours lately about the new chip, and today we can finally publish the details of the new GPU, the R580, and the cards based on it. Let’s start with the specs for the GPU.

R520: 90nm, 321M transistors, 16 pixel shaders

R580: 90nm, 384M transistors, 48 pixel shaders

By increasing the transistor count by about 20%, ATI has managed to fit three times as many pixel shaders as the R520. That’s pretty impressive.
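The two percentages can be checked with a few lines of Python. This is only arithmetic on the figures in the spec list above; the dictionary layout and the `growth` helper are our own illustrative choices.

```python
from fractions import Fraction

# Spec figures quoted in the list above
R520 = {"transistors_m": 321, "pixel_shaders": 16}
R580 = {"transistors_m": 384, "pixel_shaders": 48}

def growth(old: dict, new: dict) -> tuple[float, Fraction]:
    """Return (transistor increase in percent, pixel shader multiplier)."""
    transistor_pct = (new["transistors_m"] / old["transistors_m"] - 1) * 100
    shader_ratio = Fraction(new["pixel_shaders"], old["pixel_shaders"])
    return transistor_pct, shader_ratio

pct, ratio = growth(R520, R580)
print(f"transistors: +{pct:.1f}%, pixel shaders: x{ratio}")
```

The transistor budget grows by just under 20% while the pixel shader count triples.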

Cards using this GPU will be named X1900. So far four different cards have been announced:

X1900XT

The X1900XT is the ‘slowest’ (if you can call it that) of the announced cards. The core runs at 625 MHz and the memory at 1.45 GHz. It has 512 MB of GDDR memory. The MSRP is $549.

X1900XTX

ATI has moved away from the PE suffix it previously used for its premium card and has instead adopted a naming convention similar to NVIDIA’s. It will probably be easier to teach consumers that an X1900XTX compares to a 7800/7900GTX than to explain what an XT PE is.

The X1900XTX has a core that runs at 650 MHz and memory that runs at 1.55 GHz. It also has 512 MB of GDDR memory. The MSRP is $649.

X1900 Crossfire

All X1000 cards are Crossfire ready, which means they can be used together with a Crossfire master card. The X1900 Crossfire is such a master card. The core runs at 625 MHz and the memory at 1.45 GHz, so it’s basically an X1900XT. The MSRP is $599.

AIW X1900

Last but not least, we have the All-In-Wonder X1900. It might seem like a waste to build an AIW card around such a powerful GPU, but there’s a reason behind it. In an age where HDTV is becoming more and more important, the X1000 series can decode H.264 material with hardware acceleration. The R580 gives the AIW X1900 enough power to hardware-decode a 1080i stream in real time.

The technology

Why the increase in pixel shaders?

ATI didn’t increase the number of pixel shaders at random; there is real reasoning behind the decision.

Since shaders were first introduced in gaming video cards back in 2001, their use has increased a lot. Today basically every game uses shaders in one way or another. The way shaders are used has also changed. Back in 2001 they were used roughly 50/50 for texture operations and arithmetic operations. As shader models have evolved, shaders have been used more and more for arithmetic. According to ATI, the ratio today is about 5:1 between arithmetic operations and texture operations, and it keeps growing.
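Why does a 5:1 mix favour adding shader processors? A deliberately simplified throughput model makes the point: assume each pixel needs a fixed number of arithmetic and texture operations, each ALU retires one arithmetic operation per clock, each texture unit one fetch per clock, and whichever resource is slower sets the pixel rate. The 16 texture units on both chips and the one-op-per-clock rule are our assumptions for illustration (ATI says each X1900 shader processor can handle 1 to 5 instructions per clock), so read the result as a ratio, not a real fill rate.

```python
from fractions import Fraction

def pixels_per_clock(alus: int, tex_units: int, alu_ops: int, tex_ops: int) -> Fraction:
    """Pixels finished per clock when the slower of ALUs and texture units is the limit.

    Simplified model: one arithmetic op per ALU per clock, one fetch per
    texture unit per clock, perfect scheduling.
    """
    return min(Fraction(alus, alu_ops), Fraction(tex_units, tex_ops))

# Assumed unit counts: (pixel shader ALUs, texture units)
chips = {"R520": (16, 16), "R580": (48, 16)}

for label, (alu_ops, tex_ops) in {"1:1 mix": (1, 1), "5:1 mix": (5, 1)}.items():
    r520 = pixels_per_clock(*chips["R520"], alu_ops, tex_ops)
    r580 = pixels_per_clock(*chips["R580"], alu_ops, tex_ops)
    print(f"{label}: R580/R520 speed-up = {r580 / r520}")
```

With a 1:1 mix the extra ALUs sit idle because the texture units are the limit; at 5:1 the ALUs become the limit, so tripling them triples throughput in this model.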

So what does this have to do with the number of shader processors? It turns out that texture operations depend heavily on external factors such as memory size and bandwidth, while arithmetic operations depend only on the number of processing units. To take further advantage of the extra processing units, developers can use them to reduce the amount of memory and bandwidth needed. One way to do this is a technique called procedural textures, where pixel shader programs generate textures mathematically from a set of artist-controlled input parameters. Alternatively, shader programs can add variation and detail to existing textures, reducing the number of different texture maps that need to be stored in memory.
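To make the procedural-texture idea concrete, here is a minimal Python sketch of the kind of per-pixel function a pixel shader would evaluate. A real game would write this in a shading language such as HLSL; the stripe pattern, its parameters (`stripes`, `color_a`, `color_b`) and the 1024×1024 RGBA8 comparison are illustrative assumptions. The point is that a handful of artist-controlled numbers stand in for a texture stored in memory.

```python
import math

Color = tuple[int, int, int, int]  # 8-bit RGBA

def stripe_texel(u: float, v: float, stripes: int, color_a: Color, color_b: Color) -> Color:
    """Compute a texel from texture coordinates (u, v) in [0, 1) instead of reading memory.

    Blends smoothly between two artist-chosen colors along u, `stripes` times.
    v is unused by this particular pattern.
    """
    t = 0.5 + 0.5 * math.sin(2 * math.pi * stripes * u)  # blend weight in 0..1
    return tuple(round(a + (b - a) * t) for a, b in zip(color_a, color_b))

def stored_texture_bytes(width: int, height: int, bytes_per_texel: int = 4) -> int:
    """Memory an uncompressed texture of the same size would occupy (no mipmaps)."""
    return width * height * bytes_per_texel

print(stripe_texel(0.0, 0.0, 8, (0, 0, 0, 255), (200, 200, 200, 255)))
print(stored_texture_bytes(1024, 1024))  # 4 MiB for a 1024x1024 RGBA8 texture
```

The trade is the one described above: the procedural version costs arithmetic per pixel but needs no texture memory or bandwidth.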

Increasing the shader processing power can also be used to offload some calculations from the CPU. As we discussed in our X1000 article, today’s GPUs actually have a lot of processing power compared to CPUs, so it is possible to move some of the CPU’s work to the GPU. The more shader processing power the GPU has, the more room developers have to use some of it for this purpose.

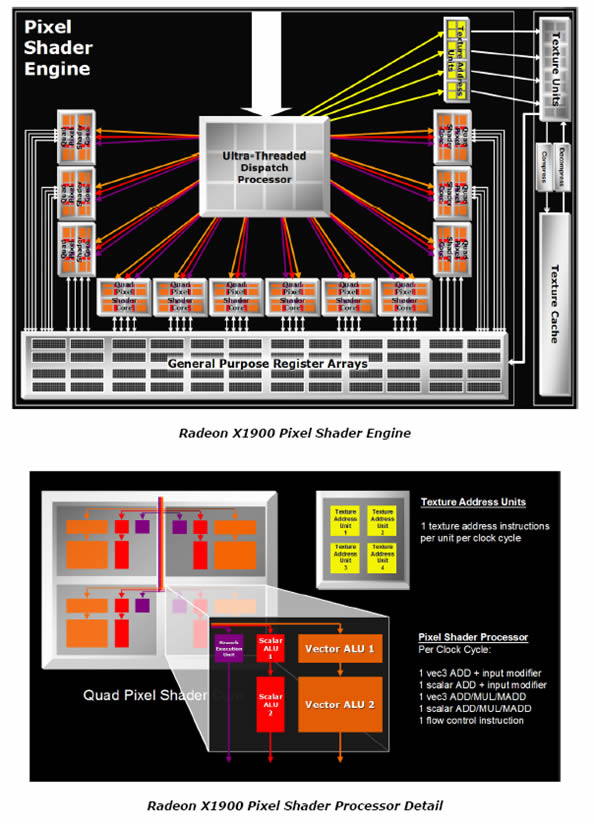

Let’s take a look at the Pixel Shader Architecture of the X1900.

This is how it works according to ATI:

Each pixel shader processor in the Radeon X1900 can handle anywhere from 1 to 5 shader instructions per clock cycle in its various ALUs. Dedicated branch execution units are included to reduce the performance overhead of flow control instructions. Each texture unit and texture address unit can process up to 4 texture fetches per clock cycle.

These units are assigned tasks by the Ultra-Threaded Dispatch Processor, which is constantly seeking opportunities to re-order instructions to achieve maximum utilization of these ALUs. It also makes use of a large number of simultaneous threads to hide texture fetch latency, which can occur when attempting to access data that is not immediately available in the texture cache. Thread sizes are kept small to maximize the benefits of branching operations.

Source: ATI X1900 Whitepaper
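The latency-hiding part of that description can be illustrated with a toy simulation. This is not ATI’s dispatcher: it assumes a single ALU, threads that each run a fixed burst of arithmetic and then wait a fixed number of cycles for a texture fetch, and a scheduler that simply issues from the first ready thread. Even so, it shows why keeping many threads in flight keeps the ALU busy.

```python
def alu_utilization(threads: int, alu_cycles: int, fetch_latency: int,
                    total_cycles: int = 10_000) -> float:
    """Fraction of cycles in which one ALU does useful work.

    Each thread runs `alu_cycles` of arithmetic, then issues a texture fetch
    and sleeps for `fetch_latency` cycles before it can run again.
    """
    ready_at = [0] * threads            # first cycle each thread may issue again
    remaining = [alu_cycles] * threads  # ALU work left before the next fetch
    busy = 0
    for cycle in range(total_cycles):
        for t in range(threads):
            if ready_at[t] <= cycle:    # first thread not waiting on memory
                busy += 1
                remaining[t] -= 1
                if remaining[t] == 0:   # burst done: issue the fetch and sleep
                    ready_at[t] = cycle + 1 + fetch_latency
                    remaining[t] = alu_cycles
                break
    return busy / total_cycles

for n in (1, 8, 26):
    print(n, "threads:", f"{alu_utilization(n, alu_cycles=4, fetch_latency=100):.0%}")
```

With these assumed numbers a single thread keeps the ALU busy only about 4% of the time, while 26 threads, enough to cover the 100-cycle wait, keep it fully busy.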

In short, it’s simply more efficient to increase the number of shader processing units than to try to add more and faster memory. By tripling the arithmetic processing power over the X1800XT, ATI has created a card that should work great in both older and future games.

Shadow map acceleration and Fetch4

Shadows: an image doesn’t look right without them. There are many ways to create good-looking shadows, and one of the more common is shadow maps. Their downside is that they create hard-edged shadows. Real shadows don’t have perfectly hard edges, so the result doesn’t look as good as it could. To get rid of those hard edges you can filter the shadow maps by taking a number of samples and combining them in a pixel shader. Using more samples can give higher quality shadows, but it also requires a large number of texture lookups, which can hurt performance.

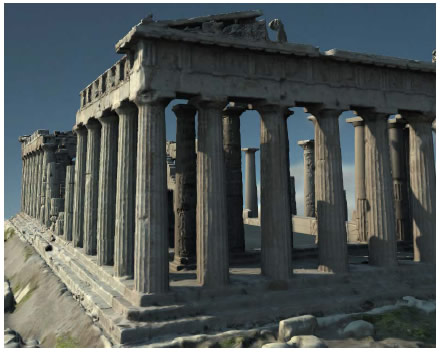

Look at those soft shadows
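The shadow-map filtering described above is commonly known as percentage-closer filtering (PCF). The article doesn’t say exactly which method games use, so take this as a minimal Python sketch of the general idea: compare the fragment’s depth against every shadow-map sample in a square window and average the pass/fail results, which yields fractional values (soft edges) instead of just 0 or 1. The number of lookups grows with the square of the window size, which is the performance problem mentioned above.

```python
def pcf_shadow(shadow_map: list[list[float]], x: int, y: int,
               fragment_depth: float, radius: int = 1, bias: float = 0.0) -> float:
    """Return the lit fraction (0.0 = fully shadowed, 1.0 = fully lit) at texel (x, y).

    shadow_map holds, per texel, the depth of the closest occluder seen from
    the light. A (2*radius + 1)^2 window of samples is compared and averaged.
    """
    height, width = len(shadow_map), len(shadow_map[0])
    lit = samples = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            sx = min(max(x + dx, 0), width - 1)   # clamp to the map edges
            sy = min(max(y + dy, 0), height - 1)
            if fragment_depth - bias <= shadow_map[sy][sx]:  # nothing closer to the light
                lit += 1
            samples += 1
    return lit / samples

# 3x3 map: an occluder at depth 0.2 covers the left column, nothing (1.0) elsewhere
shadow_map = [[0.2, 1.0, 1.0] for _ in range(3)]
print(pcf_shadow(shadow_map, 1, 1, fragment_depth=0.5))  # 6 of 9 samples lit
```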

Dynamic branching can be used to improve the performance of this shadow rendering technique. The X1900 also includes a new texture sampling feature aimed at the same problem, called Fetch4.
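One common way to apply dynamic branching to soft shadows, sketched below in Python as an assumption rather than a description of any particular game, is to take a few cheap samples first and skip the full filter when they all agree, since the pixel is then very likely fully lit or fully shadowed. Only pixels near a shadow edge pay for the full set of lookups. The four-corner probe and the returned lookup count are illustrative choices.

```python
def soft_shadow_with_early_out(shadow_map: list[list[float]], x: int, y: int,
                               fragment_depth: float, radius: int = 2) -> tuple[float, int]:
    """Return (lit fraction, number of shadow-map lookups used).

    First probes the four corners of the filter window; if they agree, the
    full (2*radius + 1)^2 filter is skipped (the 'dynamic branch').
    """
    height, width = len(shadow_map), len(shadow_map[0])

    def lit(dx: int, dy: int) -> bool:
        sx = min(max(x + dx, 0), width - 1)
        sy = min(max(y + dy, 0), height - 1)
        return fragment_depth <= shadow_map[sy][sx]

    corners = [lit(dx, dy) for dx in (-radius, radius) for dy in (-radius, radius)]
    if all(corners):
        return 1.0, 4   # fully lit: branch out early
    if not any(corners):
        return 0.0, 4   # fully shadowed: branch out early
    window = range(-radius, radius + 1)
    results = [lit(dx, dy) for dy in window for dx in window]
    return sum(results) / len(results), 4 + len(results)

flat = [[1.0] * 8 for _ in range(8)]                # no occluders anywhere
edge = [[0.2] * 4 + [1.0] * 4 for _ in range(8)]    # occluder over the left half
print(soft_shadow_with_early_out(flat, 4, 4, 0.5))
print(soft_shadow_with_early_out(edge, 4, 4, 0.5))
```

The trade-off is that a shadow detail smaller than the window can slip between the corner probes, so real implementations tune the probe pattern.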

It works by exploiting the fact that most textures are composed of color values, each consisting of four components (Red, Green, Blue, and Alpha or transparency). The texture units are designed to sample and filter all four components from one texture address simultaneously. However, when looking up different types of textures with single-component values (such as shadow maps), Fetch4 instead allows four values from adjacent addresses to be sampled simultaneously. This effectively increases the texture sampling rate by a factor of 4.

Source: ATI X1900 Whitepaper
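The factor-of-four claim is easy to see by counting lookups. In the hypothetical Python sketch below, a plain fetch from a single-component shadow map returns one value, while a Fetch4-style fetch returns four neighbouring values in the RGBA slots. The 2×2 footprint and the function names are our assumptions for illustration, not ATI’s API.

```python
def fetch1(tex: list[list[float]], x: int, y: int) -> float:
    """Ordinary single-component fetch: one value per lookup."""
    return tex[y][x]

def fetch4(tex: list[list[float]], x: int, y: int) -> tuple[float, float, float, float]:
    """Fetch4-style lookup: a 2x2 block of neighbours returned as (R, G, B, A)."""
    return tex[y][x], tex[y][x + 1], tex[y + 1][x], tex[y + 1][x + 1]

def gather_block(tex: list[list[float]], size: int, use_fetch4: bool) -> tuple[list[float], int]:
    """Read a size x size block (size must be even) and count the lookups needed."""
    values, lookups = [], 0
    step = 2 if use_fetch4 else 1
    for y in range(0, size, step):
        for x in range(0, size, step):
            if use_fetch4:
                values.extend(fetch4(tex, x, y))
            else:
                values.append(fetch1(tex, x, y))
            lookups += 1
    return values, lookups

tex = [[float(4 * row + col) for col in range(4)] for row in range(4)]
plain, n_plain = gather_block(tex, 4, use_fetch4=False)
quad, n_quad = gather_block(tex, 4, use_fetch4=True)
print(n_plain, n_quad, sorted(plain) == sorted(quad))  # 16 4 True
```

Both paths read the same 16 shadow-map values, but the Fetch4-style path needs a quarter of the lookups.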

Performance – System

After all the tech talk it’s time to get down to the cool stuff: performance. ATI was kind enough to lend me two cards, an X1900XTX and a Crossfire X1900XT. Unfortunately I didn’t get a Crossfire motherboard, so I went out and bought an ASUS A8R-MVP just to be able to test the cards in Crossfire mode.

What I couldn’t get hold of in time for this article was an NVIDIA GeForce 7800GTX 512 MB card. There’s currently only one available to the press in Sweden, and it wasn’t available. As soon as I get hold of it, the scores will be updated.

The test system:

| Category | Details |
|---|---|
| Components | ASUS A8R-MVP Crossfire |
| Software | Windows XP SP2; DirectX 9.0c; nForce4 6.53 drivers; Beta Catalyst drivers / CATALYST 6.1 |
| Synthetic Benchmarks | 3DMark 2005 v1.2.0; 3DMark 2006 v1.0.2 |
| Gaming Benchmarks | Quake 4; Half-Life 2; FEAR 1.2 |
| Notes | HardOCP’s benchmark utilities were used for HL2 and Q4, and the built-in test was used for FEAR. |

Performance – 3DMark05 and 3DMark06

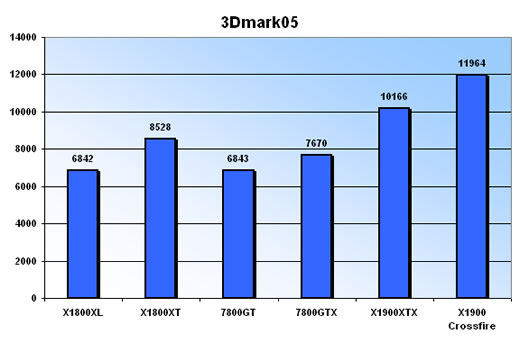

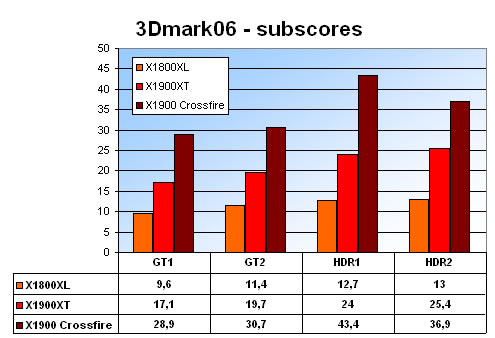

We’ll start with the usual synthetic benchmarks.

Note: The X1800XT, the GeForce 7800GT and the GeForce 7800GTX were benchmarked on an nForce4 motherboard. The memory, CPU and the rest of the system were the same as for the other cards.

As expected, the X1900XTX scores higher than all its competitors. It beats the 7800GTX by 32% and the reference X1800XT by 20%. Adding a second X1900XT in Crossfire mode increases the score by almost 20%. That’s a far cry from doubling the performance, but at the default setting the CPU becomes a big bottleneck. I tried to run the Crossfire setup at 1600×1200 with 6xAA/16xAF but ran into problems, which you can read more about in the Crossfire section of this preview.
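Why doesn’t a second card come close to doubling the score? A simple bottleneck model helps: each frame costs some CPU time and some GPU time, and the slower of the two sets the frame rate. A second GPU, idealised here as splitting the GPU work perfectly, only shrinks the GPU part. The millisecond figures below are made-up placeholders, not measurements from this test.

```python
def fps(cpu_ms: float, gpu_ms: float, gpus: int = 1) -> float:
    """Frames per second when CPU and GPU work overlap and the slower one limits.

    Assumes the GPU work splits perfectly across `gpus` cards.
    """
    return 1000.0 / max(cpu_ms, gpu_ms / gpus)

# Hypothetical per-frame costs
cpu_ms, gpu_ms = 10.0, 12.0
single = fps(cpu_ms, gpu_ms)
dual = fps(cpu_ms, gpu_ms, gpus=2)
print(f"single: {single:.1f} fps, Crossfire: {dual:.1f} fps, gain: {dual / single - 1:.0%}")
```

In this model the second card only pays off fully once the GPU cost per frame is much larger than the CPU cost, which is why higher resolutions and more AA are needed to show what Crossfire can do.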

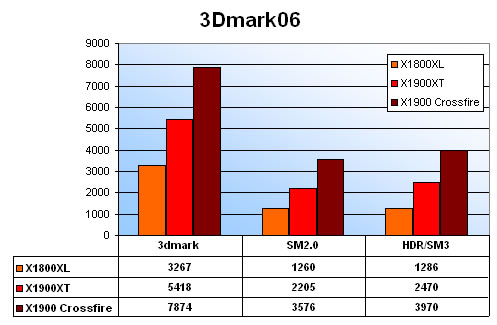

A quick benchmark shows that the X1900XTX performs very well in 3DMark06. In earlier reviews we’ve seen that the X1800XT is approximately 20% faster than the X1800XL with everything turned on at high resolutions. That lets us estimate a score for the X1800XT in this system, and by that estimate the X1900XTX is around 50-60% faster than the X1800XT.
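The estimate works like this: scale the X1800XL score by the assumed 20% advantage to get a stand-in X1800XT score, then compare the X1900XTX against it. The scores in the example call are placeholders, not the results from the chart.

```python
def estimated_speedup(xtx_score: float, xl_score: float, xt_over_xl: float = 0.20) -> float:
    """Return how much faster (as a fraction) the X1900XTX is than an estimated X1800XT.

    The X1800XT score is not measured; it is estimated as xl_score * (1 + xt_over_xl).
    """
    estimated_xt = xl_score * (1 + xt_over_xl)
    return xtx_score / estimated_xt - 1

# Placeholder scores for illustration only
print(f"{estimated_speedup(xtx_score=5500, xl_score=3000):.0%}")
```

Because the X1800XT figure rests on an approximate 20% factor, the result is best read as a range rather than an exact number.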

Performance – Quake 4

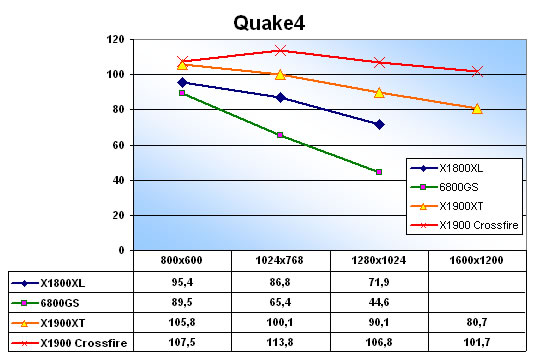

To benchmark Quake 4 we used the HardOCP Q4 utility and the included demo.

Settings: Everything set to high + 4xAA and 8xAF

The X1900XT is CPU-bound most of the time, only starting to drop off at 1600×1200. Running the cards in Crossfire mode makes the system completely CPU-bound. It will be interesting to see how it compares to the 7800GTX 512 MB in my system.

Half-Life 2

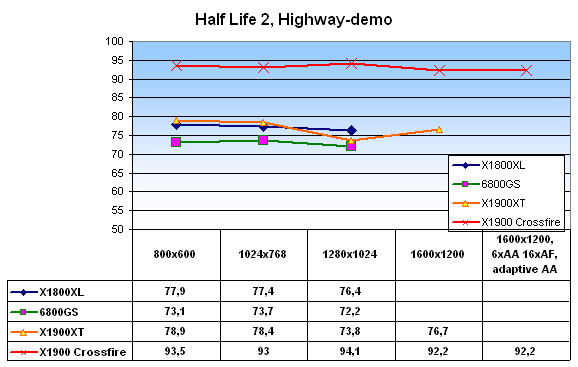

To benchmark HL2 we used the HardOCP HL2 utility and the Highway demo.

Settings: Everything set to max + 4xAA and 8xAF.

I expected this demo to be CPU-bound and wasn’t planning on including it in the article, but then I saw the Crossfire results. I thought it was interesting to see the system pumping out almost 25% more frames per second. For the Crossfire system, turning on 6x Adaptive AA and 16xAF makes no difference, even at 1600×1200.

Performance – FEAR

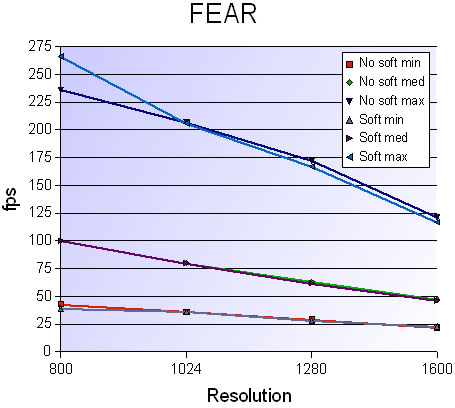

FEAR is a great game. It is also a very demanding one. One of its more demanding features is soft shadows: turn them on and performance usually dips a lot. As I explained earlier, the X1900 supports a new texture sampling technique that promises better performance when soft shadows are used heavily. I used the built-in video test in FEAR to see how the X1900XTX would handle soft shadows.

Settings: Everything turned on and set to max. The only thing I changed was turning soft shadows on or off.

It seems the new technique is doing what it is supposed to do: there isn’t a big difference between the scores with and without soft shadows.

Crossfire

Since ATI was kind enough to lend me two X1900 cards, including a Crossfire master card, I had to do my first test with Crossfire. I’ve tested NVIDIA SLI before, but so far not ATI’s Crossfire. The first obstacle was getting hold of a Crossfire-capable motherboard. Until recently they haven’t been easy to find here, but lately boards from ASUS and Abit have been released, and I ended up getting an ASUS A8R-MVP.

As you probably already know, ATI has solved the problem of using two cards a bit differently than NVIDIA. While NVIDIA forces you to use two identical cards, ATI’s Crossfire lets you pair different cards. You could, for example, pair an X1900 with an X1600XT if you wanted to. That would be a waste, of course, since the X1900 would scale back to match the X1600XT in pixel pipelines etc., although it would still run at a higher core and memory speed. On the other hand, with NVIDIA you can just buy two SLI-certified cards, while ATI forces you to buy a special Crossfire master card.

The ASUS A8R-MVP has two PCI-E slots. For when you are not running cards in Crossfire mode, ASUS includes a small switch card, similar to what we have seen on nForce4 motherboards, that you insert into one of the PCI-E slots. This makes sure the slot you are using gets the full x16 bandwidth instead of the x8 that each slot gets in Crossfire mode.
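For reference, the x16 versus x8 difference can be put in numbers. First-generation PCI Express runs each lane at 2.5 GT/s with 8b/10b encoding, which works out to 250 MB/s per lane in each direction; the sketch below just multiplies that out.

```python
def pcie1_bandwidth_mb_s(lanes: int, megatransfers_per_s: int = 2500) -> int:
    """One-direction bandwidth in MB/s of a first-generation PCI Express link.

    Each lane carries 2500 MT/s (one bit per transfer); 8b/10b encoding spends
    10 line bits per data byte, so every 10 transfers deliver one byte.
    """
    return lanes * megatransfers_per_s // 10

for lanes in (16, 8):
    print(f"x{lanes}: {pcie1_bandwidth_mb_s(lanes)} MB/s per direction")
```

In Crossfire mode each card therefore gets half the link bandwidth a single card gets in an x16 slot.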

Oh, so many problems

Setting up the Crossfire system was pretty straightforward, and I had both cards up and running quickly. You turn on Crossfire in the Catalyst Control Center, where a special Crossfire tab appears. While NVIDIA lets you tweak how SLI works in different games, ATI has chosen to hide that and only lets you turn Crossfire on or off.

My first test was 3DMark05. The first game test worked fine, but as soon as the second game test (the one in the forest) finished, my system shut itself down. The same thing happened in 3DMark06: even at the default setting, the machine shut itself down when the forest game test was almost done.

My PSU was a NUUO 500W PSU from Sunbeam, which had served me well until then. Since I also had three SATA drives, two DVD burners, an X-Fi sound card and an AMD X2 4400+ in the system, I figured the X1900s simply needed more power than my PSU could deliver. While I don’t have any solid figures on the power consumption of the X1900XTX and X1900 Crossfire, I saw a mention in another article of about 175W per card at maximum load. That’s a lot: two cards alone would draw 350W.

A Silverstone 650W Zeus ST65ZF PSU (quad 12V rails, SLI-certified with two PCI-E power connectors, http://www.silverstonetek.com/products-65zf.htm) was bought and testing resumed. 3DMark05 now worked fine at the default setting. To really stress the system I cranked the resolution up to 1600×1200 with 6xAA and 16xAF. Unfortunately the system shut down again at this setting. 3DMark06 could only be completed after unplugging both DVD burners and two of the SATA drives, and FEAR wouldn’t finish even at 800×600.

All the other benchmarks worked fine, though, and with Crossfire turned off everything worked normally.

Scott mentioned that he’s had similar issues with his 7800GTX 512 MB SLI system; in his review of the Antec Truecontroll II 550W he ran into the same problem in FEAR.

It’s clear that you need a really beefy, high-quality PSU to run the X1900 in Crossfire mode. I’m not sure what to get, since the Silverstone is supposed to be a high-quality PSU. I’ve seen one or two 700W PSUs here from lesser-known brands, but nothing bigger, unlike in the US where you can apparently get 800W-1000W PSUs now.

Cool when it works

When Crossfire works in my system it is really cool (although noisy). You don’t have to think about anything, since it runs transparently in the background. The performance gain obviously depends on the game, but in my limited testing the video card has in most cases ceased to be the bottleneck, even at 1600×1200 with all the various image quality enhancements turned on. Running the cards in Crossfire mode also adds more anti-aliasing modes that make the image look even better.

In the coming weeks we will receive cards from HIS so we can do more Crossfire testing with retail boards. Hopefully I will have a PSU that can handle the cards by then.

Conclusion

The downside, of course, is the price, but if you want the best you have to pay for it. It will be interesting to see whether ATI releases cut-down versions of the X1900 in the future.

The absolute best part of this launch, however, is that the cards are available as we speak.

So far we know that we can look forward to cards from ASUS, GeCube, Club3D, Connect3D, Gigabyte, HIS, MSI, Sapphire and TUL. Rafal is currently busy reviewing the Powercolor X1900XT, and I’m waiting for a few cards from HIS myself, so you can look forward to reviews with more detailed benchmarks soon. As mentioned before, we will also update this review with GeForce 7800GTX 512 MB scores as soon as that card is sent to us.

Bjorn3D.com – Satisfying Your Daily Tech Cravings Since 1996
