With varieties of the GTX-5xx lineup appearing at an astonishing rate, we are glad to see the GIGABYTE GTX-560 OC in the lab and on the test bench.

Introduction


After introducing the GTX 560 Ti, with 384 CUDA cores and a retail price between $243 and $260 (USD) depending on the model, Nvidia wanted to hit yet another price point and make the technology available to a wider target market. Enter the GIGABYTE GTX 560 OC with 336 CUDA cores and an attractive MSRP of $199. While a single GTX-560 Ti will handle most applications with no problem, two GIGABYTE GTX-560 OCs in SLI will run in the $400 range and should dominate nearly any application or game. We’re looking at a single card today but have an eye toward an SLI setup as soon as possible.

Out of the box, the GIGABYTE GTX 560 OC runs at 830 MHz, or 20 MHz above the stock-clocked cards, so no fussing with OC tools is required. We will, of course, overclock the GIGABYTE GTX-560 OC until it begs for mercy. The 1 GB of GDDR5 on the GTX-560 OC runs at a blazing 4008 MHz and it sports a hefty 1660 MHz shader clock. As with all modern video cards, users will need a PCI-E slot; the card is PCI-E 2.0 ready but is backwards compatible with PCI-E 1.0 with very little performance loss. In other words, running it in a PCI-E 1.0 slot will still provide a lot of bang for a little buck, and it’ll be a nice upgrade, even on older motherboards. GIGABYTE and Nvidia recommend using a good quality 500W power supply with two 6-pin PCI-E power connectors.

The GIGABYTE GTX 560 OC supports DirectX 11 like the GTX-4xx lineup. The GTX-4xx lineup was based on the GF100 chip, and the newer GTX-5xx lineup uses a refined GF110. So is the GF100 to GF110 change just a shell game to sell technology-hungry users another video card? The answer is a definitive no. The GIGABYTE GTX 560 OC should use 21% less power (and produce less heat) than GTX 460 models that have the same 336 CUDA core design. The 560 should also be about 33% faster than the 460, even with the same number of CUDA cores. We’re going to put this to the test, because benchmarking is like sunshine to us. A day without benchmarking is like a day without sunshine.
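Taken together, those two claims imply a sizable efficiency gain. As a rough worked example, here is the arithmetic in Python. It assumes the 21% and 33% figures are exact and apply to the same workload, which is our simplification and not something Nvidia or GIGABYTE state.

```python
# Claimed figures from the paragraph above, relative to a GTX 460 = 1.0.
# Treating them as exact and simultaneous is an assumption for illustration.
relative_performance = 1.33      # "about 33% faster"
relative_power = 1.00 - 0.21     # "21% less power"


def perf_per_watt_gain(perf: float, power: float) -> float:
    """Return relative performance per watt (1.0 means no change)."""
    return perf / power


gain = perf_per_watt_gain(relative_performance, relative_power)
print(f"Performance per watt vs. GTX 460: {gain:.2f}x")
```

If both claims hold at once, the card would deliver roughly two thirds more performance per watt than its predecessor.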

Features

- GIGABYTE Ultra Durable VGA Series

- Powered by NVIDIA GeForce GTX 560 GPU

- Integrated with industry’s best 1 GB GDDR5 memory and 256-bit memory interface

- Features Dual link DVI-I*2/ mini HDMI with HDCP protection

- OC edition

- GIGABYTE WINDFORCE 2X Parallel-inclined anti-turbulence cooling design

- GIGABYTE UDV material

- Support NVIDIA® SLI™ Technology

- Support NVIDIA® 3D Vision™

- Support NVIDIA® CUDA™ Technology

- Support NVIDIA® PhysX™ Technology

- *Minimum 500W or greater system power supply with two 6-pin external power connectors

Features & Benefits

GPU Temperature 5%~10% Down

Ultra Durable VGA board provides a dramatic cooling effect, lowering both GPU and memory temperature by doubling the copper inner layer of the PCB. GIGABYTE Ultra Durable VGA can lower GPU temperature by 5% to 10%.

Overclocking Capability 10%~30% Up

Ultra Durable VGA board reduces voltage ripples in normal and transient states, which effectively lowers noise and ensures higher overclocking capability. GIGABYTE Ultra Durable VGA graphic accelerators improve overclocking capability by 10% to 30%.

Power Switching Loss 10%~30% Down

Ultra Durable VGA board allows more bandwidth for electron passage and reduces circuit impedance. The lower the circuit impedance, the more stable the flow of current, which can effectively improve power efficiency. GIGABYTE Ultra Durable VGA can lower power switching loss by 10% to 30%.

WINDFORCE™ 2X parallel-inclined fin design is equipped with 2 ultra quiet PWM fans and two copper heat pipes to enlarge the air channel on the graphics card vents and create a more effective airflow system in the chassis.


Gold plated, durable large contact area connectors have been used for optimum signal transfer between connections

* Advertised performance is based on maximum theoretical interface values from respective Chipset vendors or organization who defined the interface specification. Actual performance may vary by system configuration.

* All trademarks and logos are the properties of their respective holders.

* Due to standard PC architecture, a certain amount of memory is reserved for system usage and therefore the actual memory size is less than the stated amount.

Specifications

| Chipset | GeForce GTX 560 |

| Core Clock | 830 MHz |

| Shader Clock | 1660 MHz |

| Memory Clock | 4008 MHz |

| Process Technology | 40 nm |

| Memory Size | 1 GB |

| Memory Bus | 256 bit |

| Card Bus | PCI-E 2.0 |

| Memory Type | GDDR5 |

| DirectX | 11 |

| OpenGL | 4.1 |

| PCB Form | ATX |

| Digital max resolution | 2560 x 1600 |

| Analog max resolution | 2048 x 1536 |

| Multi-view | 2 |

| Tools | N/A |

| I/O | DVI-I*2, mini HDMI*1, HDMI (by adapter), D-sub (by adapter) |

| Card size | 241 mm x 134 mm x 43 mm |

| Power requirement | 500W (with two 6-pin external power connectors) |
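The memory figures in the table translate directly into peak theoretical bandwidth. The short sketch below shows the calculation. It assumes the listed 4008 MHz is the effective GDDR5 data rate (transfers per second per pin) and uses 1 GB = 10^9 bytes; the function name is ours, not GIGABYTE's.

```python
def memory_bandwidth_gb_s(effective_clock_mhz: float, bus_width_bits: int) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes).

    Assumes `effective_clock_mhz` is the effective data rate, i.e. the
    number of transfers per second on each pin of the memory bus.
    """
    transfers_per_second = effective_clock_mhz * 1_000_000
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# Values taken from the specification table above.
bandwidth = memory_bandwidth_gb_s(4008, 256)
print(f"{bandwidth:.1f} GB/s")
```

Real-world throughput will be lower than this ceiling, as the vendor footnote on advertised performance already warns.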

Pictures & Impressions

The front of the GIGABYTE GTX 560 (336 CUDA Core Variant) shows typical GIGABYTE flair and we see that the card uses an Ultra Durable design with a whopping 3 year warranty. This version has 4 all-copper heat pipes and two 100mm inclined fans. Previous designs from GIGABYTE were dubbed “dual inclined fan” and were primarily on the GTX 4xx lineup. The cowling on the GTX 4xx lineup was a little more substantial and restricted the airflow more. This design will provide more air flow, removing more heat than previous designs.

The back of the box contains a lot of the same information as the front, and it should be plenty of info for the end user to make a purchasing decision. One thing we would like to see in future box-back advertising is a simple 3DMark Vantage chart showing the different models of the current lineup so consumers can get an idea of relative power in a synthetic benchmark.

Looking down on the video card, we see a sleek, sexy design that would excite any true geek: a blue PCB with two 100mm inclined fans that remove heat from the full body heat sink. Once again we see wires on the video card, and though there’s probably nothing wrong with that, having them routed along the reinforcing cross members would have made the look a little sleeker.

The back of the card is fairly unremarkable and the only difference we see between the GTX-4xx and GTX-5xx lineup is a slightly smaller space occupied by the components.

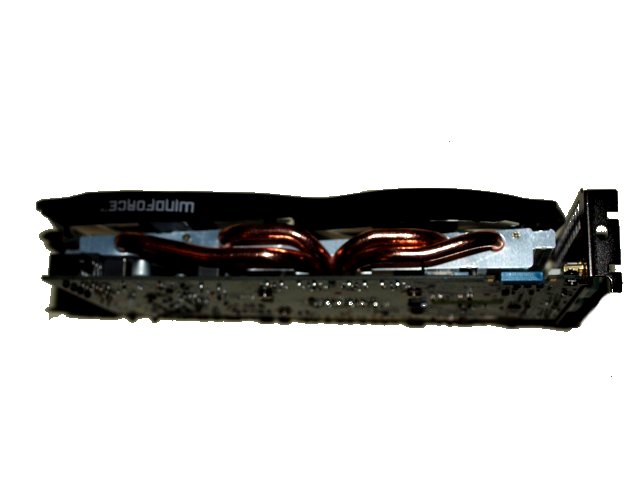

Looking top down on the GTX-560 we see 4 heat pipes, and we verified that they are solid copper and not merely steel coated with copper.

The card uses the same two slot design as its predecessors. Notice that natively, the card only has two DVI video ports, but GIGABYTE included VGA and HDMI Mini adaptors.

On the back edge of the card we find the expected two six pin PCI-E power connectors and a collection of neatly placed wires. A heat shrink sleeve covering the wires would have been beneficial.

Here again Nvidia and GIGABYTE recognize that putting one SLI connector on the GTX-560 will prevent too much of a good thing. Had they put two SLI connectors on this model, users could have run three GTX-560s in triple SLI for around $600 (USD), which might have drawn away sales of their top end models, which have two connectors on them. We wish they would get past that and include two connectors on all models down to mainstream cards, so that when prices drop, users could extend the price-efficiency factor.

Getting a different angle on the GIGABYTE GTX-560, we can see the full body heat sink. The dual fans extend slightly over the edges of the heat sink.

While there are 4 heat pipes and they all originate from directly above the GF114 core, GIGABYTE has done a good job of running them far enough out into the full body heat sink to spread out the thermal load. Given the two 100mm whisper-quiet fans, we should be able to tweak the core and make the card run a little faster without having to worry about overheating.

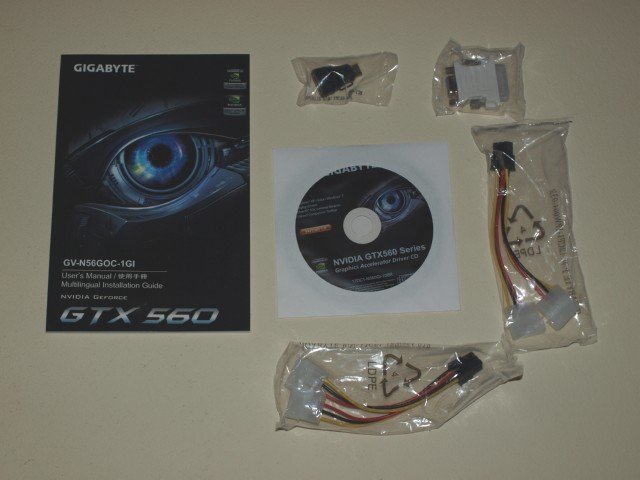

The GTX 560 has a very limited bundle, which was disappointing. We would have gladly paid an extra $15 for an included video game. With the card comes a user manual, DVI to Mini HDMI Adaptor, DVI to VGA Adaptor, driver disk, and two Molex to six pin PCIE power adapters. Most power supplies around and above the 500W range come with native PCI-E connectors, and we advise users to connect these instead of using the Molex to PCI-E adapters included with the card. This eliminates the risk of underpowering the card in case the Molex adapters don’t have enough amps per rail to power the card during overclocking.

Testing & Methodology

To test the Gigabyte GTX-560, we did a fresh load of Windows 7 Ultimate, then applied all the updates we could find. We installed the latest motherboard drivers for the ASUS Rampage 3, updated the BIOS, and loaded our test suite. We didn’t load graphics drivers because we wanted to clone the HD with a fresh load of Windows 7 without graphics drivers. That way we have a complete OS load with the testing suite that isn’t contaminated with GPU drivers. Should we need to switch GPUs, or run some SLI/Crossfire action later, all we would have to do is boot from our cloned OS, install GPU drivers, and run the tests.

We ran each test a total of 3 times and reported the average of all three scores. In the case of a screenshot of a benchmark, we ran that benchmark 3 times, tossed out the high and low scores, then posted the median result from that benchmark. Erroneous results were discarded and the tests re-run.
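For readers who want to apply the same scoring rule to their own runs, here is a minimal sketch in Python. The function name and the sample numbers are hypothetical and are not taken from our results; the logic simply mirrors the rule described above (mean of three for charted scores, median of three for screenshots).

```python
from statistics import mean, median


def reported_score(runs: list[float], screenshot: bool = False) -> float:
    """Reduce three benchmark runs to the single number we would report.

    Charted results use the mean of all three runs. Screenshot results
    drop the high and low runs, which leaves the median.
    """
    if len(runs) != 3:
        raise ValueError("expected exactly three runs")
    return median(runs) if screenshot else mean(runs)


# Hypothetical FPS values for illustration only.
example_runs = [31.0, 33.0, 32.0]
print(reported_score(example_runs))                   # mean of the three runs
print(reported_score(example_runs, screenshot=True))  # median of the three runs
```

The two rules only diverge when one run is an outlier, which is exactly the case where the median is the safer number to show in a screenshot.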

Test Rig

| Test Rig “HexZilla” |


| Case Type | Silverstone Raven 2 |

| CPU | Intel Core i7 980 Extreme |

| Motherboard | Asus Rampage 3 |

| Ram | Kingston HyperX 12GB 1600 MHz 9-9-9-24 |

| CPU Cooler | Thermalright Ultra 120 RT (Dual 120mm Fans) |

| Hard Drives | 2x Corsair P128 Raid 0, 3x Seagate Constellation 2x RAID 0, 1x Storage |

| Optical | Asus BD Combo |

| GPU Tested | Gigabyte GTX-560 1GB |

| Case Fans | 120mm Fan cooling the MOSFET CPU area |

| Docking Stations | Thermaltake VION |

| Testing PSU | Silverstone Strider 1500 Watt |

| Legacy | Floppy |

| Mouse | Razer Lachesis |

| Keyboard | Razer Lycosa |

| Gaming Ear Buds | Razer Moray |

| Speakers | Logitech Dolby 5.1 |

| Any Attempt to Copy This System Configuration May Lead to Bankruptcy | |

Synthetic Benchmarks & Games

| Synthetic Benchmarks & Games | |

| 3DMark Vantage | |

| Metro 2033 | |

| Dirt 2 | |

| Stone Giant | |

| Unigine Heaven v.2.1 | |

| Crysis Warhead | |

| S.T.A.L.K.E.R.: COP | |

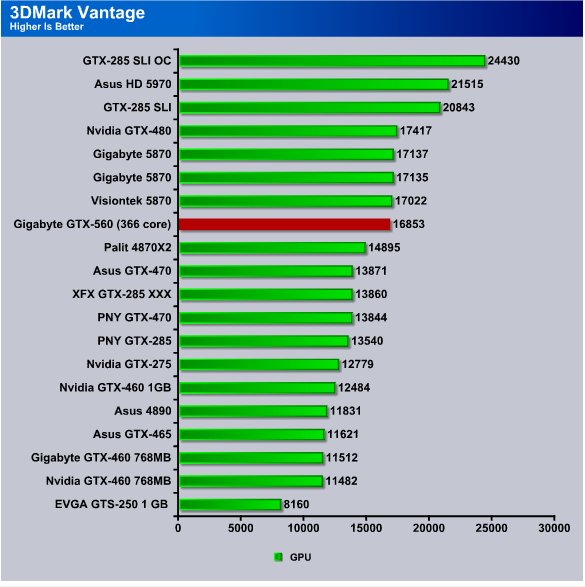

3DMark Vantage

For complete information on 3DMark Vantage, please follow this link:

www.futuremark.com/benchmarks/3dmarkvantage/features/

3DMark Vantage is the video benchmark from the gang at Futuremark. This utility is still a synthetic benchmark, but one that more closely reflects real-world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

The GIGABYTE GTX 560 comes out ahead of some major contenders, squeaking ahead of the GTX 470 and ending up slightly behind the HD 5870s. The previous generation’s GTX 480 was priced around $499 depending on model, but at $199, the GIGABYTE GTX 560 performed about as well.

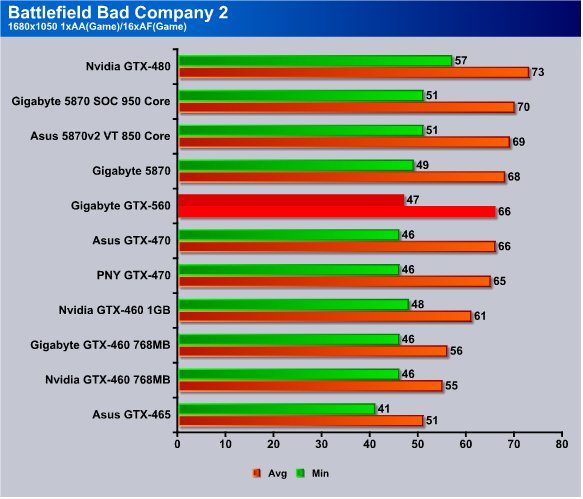

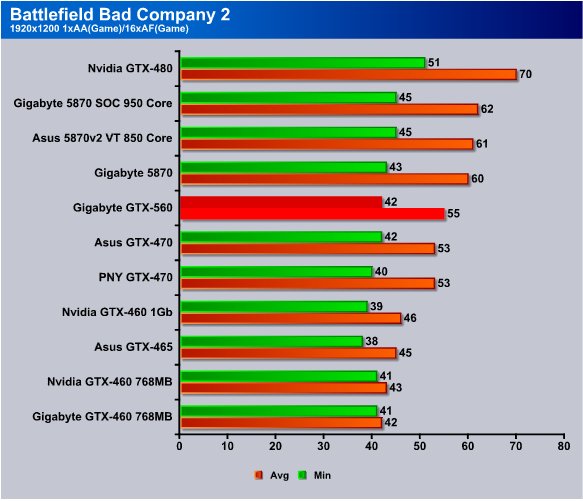

Battlefield Bad Company 2

Coming in ahead of last generation’s GTX 470 by a hair and just behind the HD 5870 lineup is quite a feat for this card, considering its price.

Considering that the GTX 470 lineup ran in the $350 range, we don’t have any complaints. The GTX 560 holds its position above the GTX 470 and competes pretty well with last generation’s HD 5870 lineup.

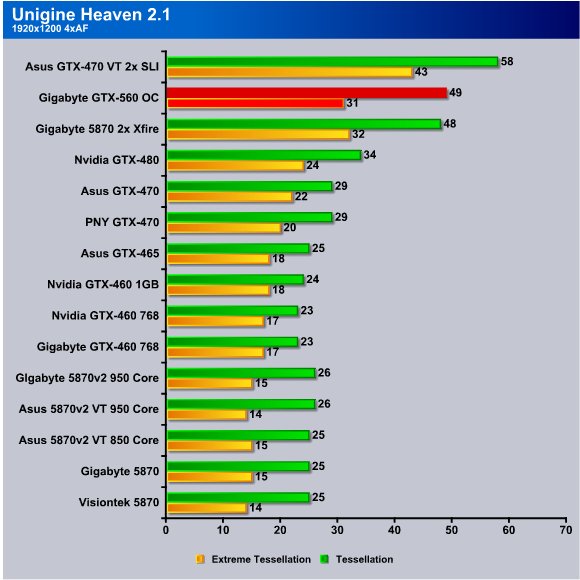

Unigine Heaven 2.1

Unigine Heaven is a benchmark program based on Unigine Corp’s latest engine, Unigine. The engine features DirectX 11, Hardware tessellation, DirectCompute, and Shader Model 5.0. All of these new technologies combined with the ability to run each card through the same exact test means this benchmark should be in our arsenal for a long time.

The GTX 480 lineup originally had driver problems on Unigine Heaven, which might explain why the GIGABYTE GTX 560 jumped out ahead of it. We ran the test 3 times and averaged the scores, so we’ll have to look into the results a little later. For now we are presenting the results of the test, but they won’t weigh into the final scoring of the GIGABYTE GTX 560.

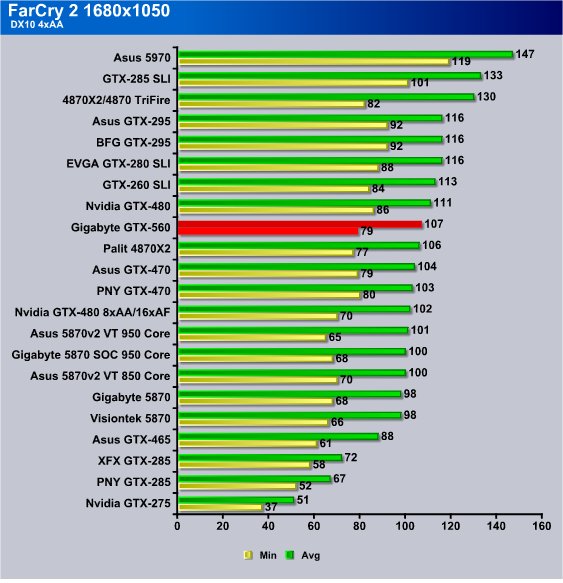

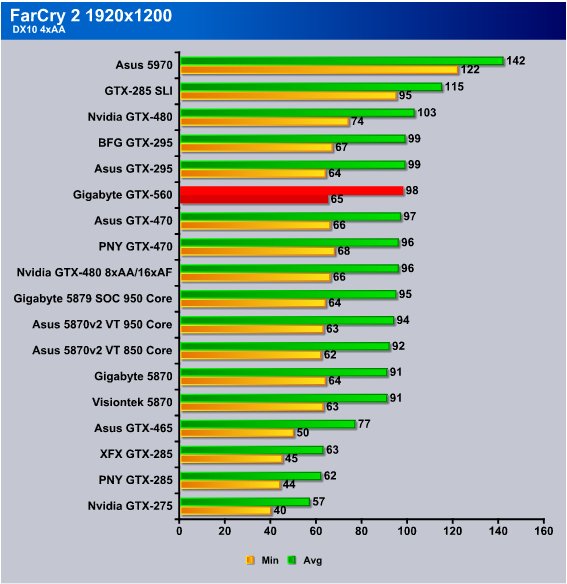

Far Cry 2

Far Cry 2, released in October 2008 by Ubisoft, was one of the most anticipated titles of the year. It’s an engaging state-of-the-art first-person shooter set in an unnamed African country. Caught between two rival factions, you’re sent to take out “The Jackal”. Far Cry 2 ships with a full-featured benchmark utility, and it is one of the most well designed, well thought out game benchmarks we’ve ever seen. One big difference between this benchmark and others is that it leaves the game’s AI (Artificial Intelligence) running while the benchmark is being performed.

What jumped out at us in the 1680×1050 DX 10 test in Far Cry 2 was that the GIGABYTE GTX 560 came in right behind the GTX 480 and just a tad behind SLI GTX 260s. That’s pretty amazing for a $199 video card.

Looking at the 1920×1200 benchmark chart brings another driver problem back to mind: Far Cry 2 had problems with the dual-GPU GTX 295 cards. The GTX 480 outperformed the 295s, and the GTX 560 fell right below the GTX 295, the top dog from the recent past. When the GTX 295 hit, it came in at a hefty $600, and the $199 GIGABYTE GTX 560 gives it a serious run for its money.

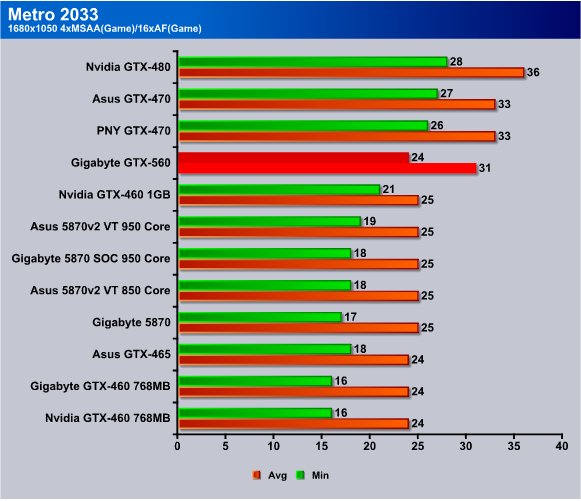

Metro 2033

Metro 2033 is an action-oriented video game with a combination of survival horror, and first-person shooter elements. The game is based on the novel Metro 2033 by Russian author Dmitry Glukhovsky. It was developed by 4A Games in Ukraine and released in March 2010 for the Xbox 360 and Microsoft Windows. In March 2009, 4A Games announced a partnership with Glukhovsky to collaborate on the game. The game was announced a few months later at the 2009 Games Convention in Leipzig; a first trailer came along with the announcement. When the game was announced, it had the subtitle The Last Refuge but this subtitle is no longer being used by THQ.

The game is played from the perspective of a character named Artyom. The story takes place in post-apocalyptic Moscow, mostly inside the metro system where the player’s character was raised (he was born before the war, in an unharmed city), but occasionally the player has to go above ground on certain missions and scavenge for valuables.

We left Metro 2033 on all high settings.

Metro 2033 is tough on video cards from a benchmark perspective, but when playing the game, all of the video cards drove it well enough that we saw no stutters. Still, it’s useful to gauge comparative performance between cards.

The Gigabyte GTX-560 turned in a respectable 24 minimum FPS and 32 average FPS, and as we mentioned, we played the game for several hours and saw no stutters or dragging during game play. Position wise, it came in tight behind the GTX-470s, which have the advantage of a more mature driver.

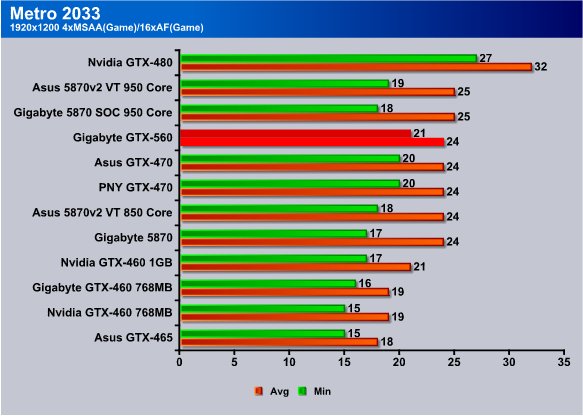

At 1920×1200 in Metro 2033, all but the mighty GTX-480 fall short of the 30 FPS we usually consider the minimum playable frame rate. However, we played Metro 2033 at this level with plenty of eye candy and saw no problems with the GTX-560 driving it.

Position wise, on these charts the HD 5870’s and the GTX-480 managed to squeak ahead, but given the GTX-560’s release price, it’s doing a stellar job.

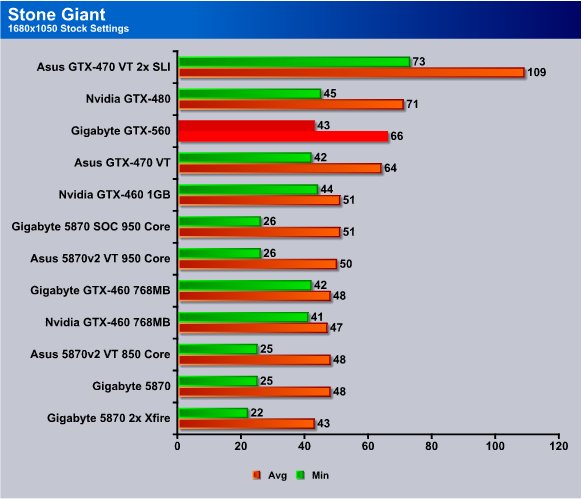

Stone Giant

We used a 90 second Fraps run and recorded the Min/Avg/Max FPS rather than rely on the built-in utility for determining FPS. We started the benchmark, triggered Fraps, and let it run on stock settings for 90 seconds without making any adjustments or changing camera angles. We just let it run at default and had Fraps record the FPS and log them to a file for us.
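Reducing a logged run to Min/Avg/Max is simple enough to do by hand or in a spreadsheet, but a small script keeps it consistent. The sketch below assumes the log has already been read into a list with one FPS sample per second; the function name and the sample values are hypothetical, and it does not parse any particular Fraps file format.

```python
def summarize_fps(samples: list[float], duration_s: int = 90) -> dict[str, float]:
    """Return min/avg/max FPS over the first `duration_s` samples.

    Assumes one FPS sample per second, so a 90 second run uses at most
    the first 90 entries of the log.
    """
    window = samples[:duration_s]
    if not window:
        raise ValueError("no FPS samples to summarize")
    return {
        "min": min(window),
        "avg": sum(window) / len(window),
        "max": max(window),
    }


# Hypothetical per-second samples for illustration only.
print(summarize_fps([30, 40, 50]))
```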

Key features of the BitSquid Tech (PC version) include:

- Highly parallel, data oriented design

- Support for all new DX11 GPUs, including the NVIDIA GeForce GTX 400 Series and AMD Radeon 5000 series

- Compute Shader 5 based depth of field effects

- Dynamic level of detail through displacement map tessellation

- Stereoscopic 3D support for NVIDIA 3dVision

“With advanced tessellation scenes, and high levels of geometry, Stone Giant will allow consumers to test the DX11 credentials of their new graphics cards”, said Tobias Persson, Founder and Senior Graphics Architect at BitSquid. “We believe that the great image fidelity seen in Stone Giant, made possible by the advanced features of DirectX 11, is something that we will come to expect in future games.”

“At Fatshark, we have been creating the art content seen in Stone Giant”, said Martin Wahlund, CEO of Fatshark. “It has been amazing to work with a bleeding edge engine, without the usual geometric limitations seen in current games”.

The GTX-560 holds its position right above the GTX-470 and approaches the performance of the GTX-480. We keep mentioning it, but it amazes us that a $200 video card can compete with last generation’s $500 offering.

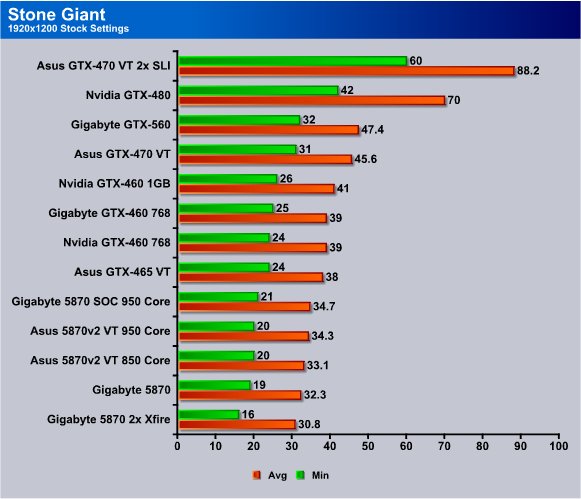

At 1920×1200, the GTX-560 slides in right behind the GTX-480, but at this level the GTX-480 did perform quite a bit better.

S.T.A.L.K.E.R.: Call of Pripyat

Call of Pripyat is the latest addition to the S.T.A.L.K.E.R. franchise. S.T.A.L.K.E.R. has long been considered the thinking man’s shooter, because it gives the player many different ways of completing the objectives. The game includes new advanced DirectX 11 effects as well as the continuation of the story from the previous games.

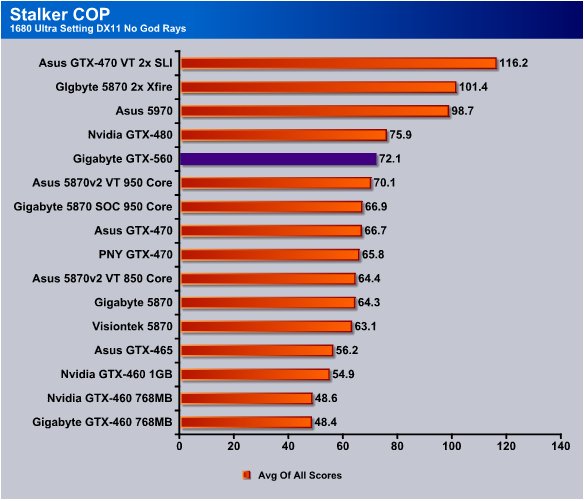

S.T.A.L.K.E.R. favors Nvidia GPUs with God Rays turned on. At 1680×1050 we hit an astonishing 72.1 FPS, more than double what you need for rock solid performance.

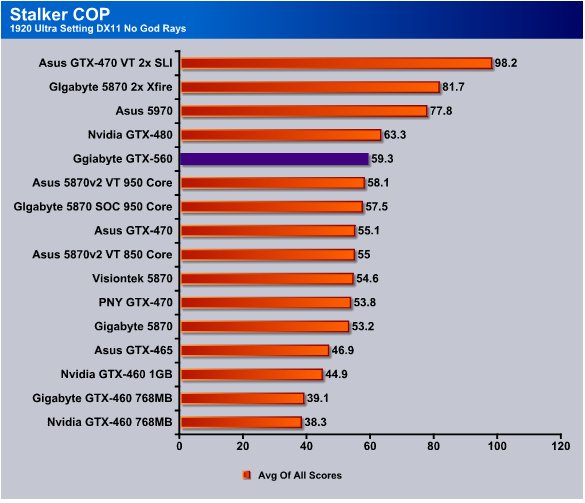

Even at 1920×1200, we got almost double the 30 FPS needed for solid looking video for the human eye. The Gigabyte GTX-560 came a mere 4 FPS behind the GTX-480 and the HD58xx lineup did very well in this test.

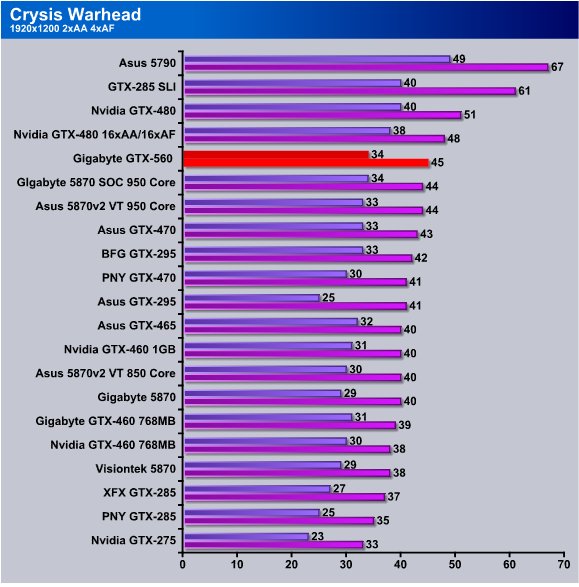

CRYSIS WARHEAD

Crysis Warhead is the much anticipated sequel of Crysis, featuring an updated CryENGINE™ 2 with better optimization. It was one of the most anticipated titles of 2008.

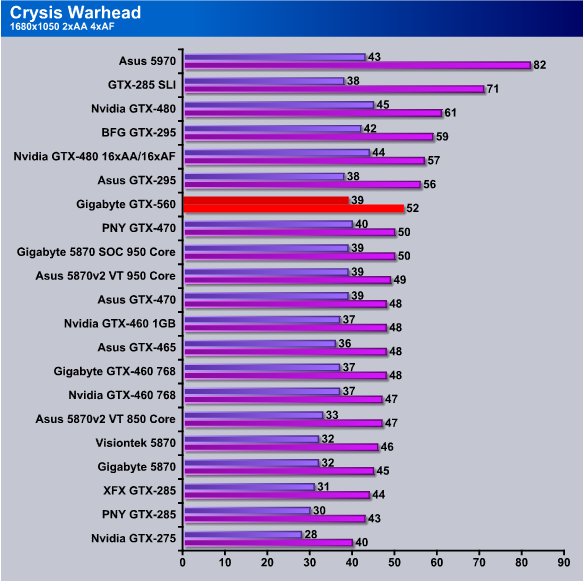

In Crysis Warhead at 1680×1050, we got a minimum FPS of 39 with an average FPS of 52, and during non-benchmark game play we saw no problems at all running this resource-hungry game on the Gigabyte GTX-560.

We’ve seen Crysis Warhead bring mighty GPUs to their knees and make them beg for mercy, but the Gigabyte GTX-560 gave us 34 FPS minimum and 45 FPS average, and we are pleased with that performance considering its $199 price point.

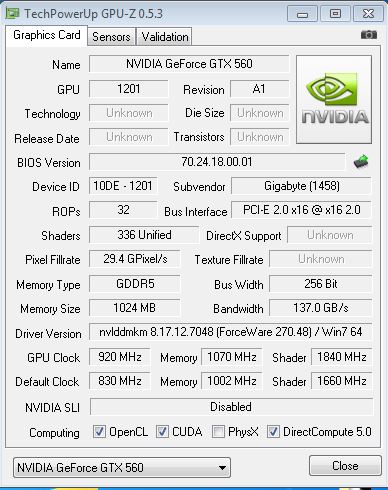

Overclocking

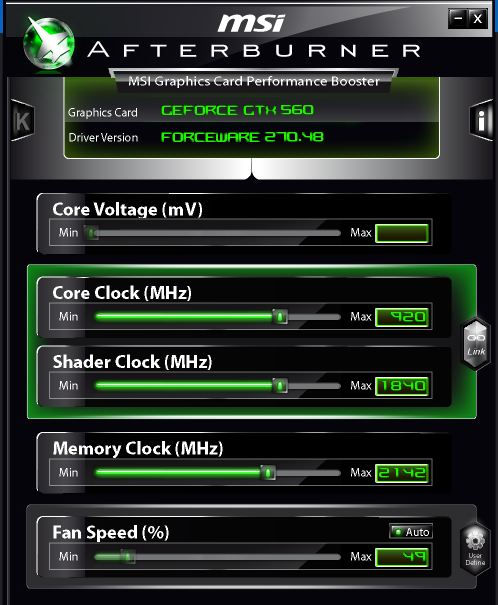

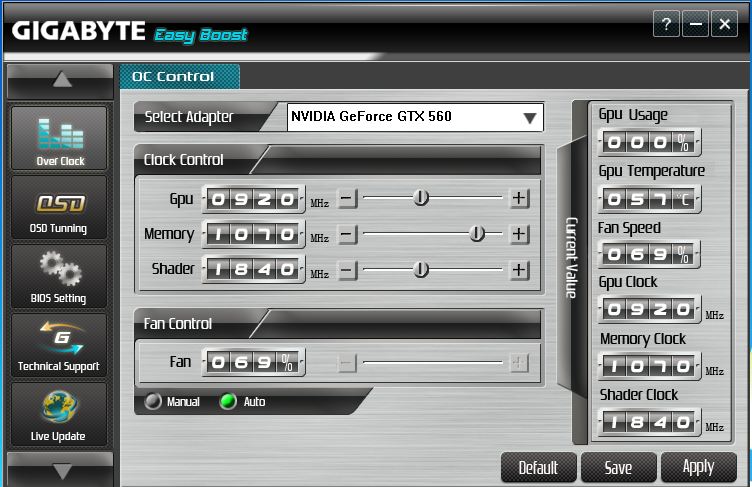

For overclocking, we used the latest version of MSI Afterburner and tried out Gigabyte’s Easy Boost. We also verified clocks with CPU-Z and quickly found that a combination of Afterburner and CPU-Z, or Easy Boost and CPU-Z, was required to keep from scrambling our grey matter. Neither MSI Afterburner nor Easy Boost shows the original clocks during the OC process, so it’s easy to lose track of the factory clocks, push the video card too far too fast, and then have to back up and go again.

We started slow, moving the core clock up 10 MHz each try and running ATITool for a minute between tries. When we hit what we felt was the maximum sustainable core clock, we ran ATITool for 10 minutes, checking temperatures and watching for artifacts. We used the same process for the GDDR5 memory overclocking, and while we love GDDR5, a part of us longs for the bigger overclocks from GDDR3. That’s probably just nostalgia creeping in, as we know GDDR5 is the best memory technology we have encountered.
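The step-and-test routine above can be written down as a simple loop. The Python sketch below is only an outline of the procedure: `is_stable` is a hypothetical callback standing in for a manual ATITool run (no overclocking tool exposes this exact function), the simulated card is made up, and stepping back down when the long soak test fails is our assumption about how to finish the search.

```python
from typing import Callable


def find_max_stable_clock(
    start_mhz: int,
    step_mhz: int,
    limit_mhz: int,
    is_stable: Callable[[int, int], bool],
    quick_minutes: int = 1,
    soak_minutes: int = 10,
) -> int:
    """Step the clock up until a quick test fails, then soak-test the result.

    `is_stable(clock_mhz, minutes)` is a hypothetical hook that should
    return True if the card ran artifact-free at that clock for that long.
    """
    best = start_mhz
    clock = start_mhz + step_mhz
    # Quick pass: raise the clock one step at a time with short tests.
    while clock <= limit_mhz and is_stable(clock, quick_minutes):
        best = clock
        clock += step_mhz
    # Soak pass: back off one step at a time until the long test passes.
    while best > start_mhz and not is_stable(best, soak_minutes):
        best -= step_mhz
    return best


def simulated_card(clock_mhz: int, minutes: int) -> bool:
    """Made-up card: passes short tests up to 900 MHz, long tests up to 890."""
    limit = 900 if minutes <= 1 else 890
    return clock_mhz <= limit


print(find_max_stable_clock(830, 10, 1000, simulated_card))
```

Keeping the quick and soak tests separate is what saves time: most of the search happens in one-minute steps, and only the final candidates get the full ten minutes.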

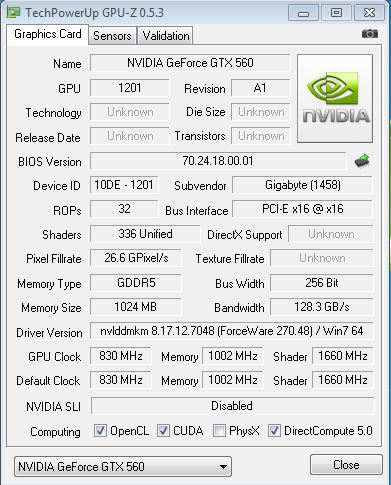

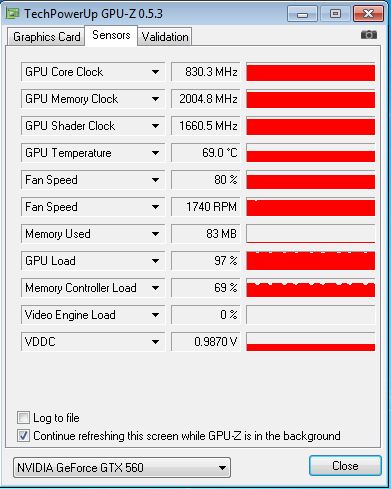

Let’s start with a GPU-Z stock clock screenshot.

Stock clocks run 20 MHz higher than non-factory-overclocked GTX-560s, a nice, safe, conservative OC that we wouldn’t even really call an overclock. It’s more like a love tap on the core than the hammer-down overclock we prefer. Keep in mind, though, that hammer-down overclocks are for short periods and are best used for bragging rights, not daily use. For daily use, if you want to keep an overclock, find the max OC and then back down 20 or 30 MHz.

The bump they gave the GDDR5 memory isn’t worth mentioning. If you ask us, a 1 MHz base increase is a marketing tool and not a true overclock. No worries though; we cranked the memory until it screamed for mercy.

We didn’t get as high a core overclock on our card as we’ve seen on other GTX-560s, but our stability testing is more strenuous. We ran stability testing with ATITool and FurMark, then ran the card through 3DMark Vantage, and 920 MHz was the highest clock that was stable in all three. Using the same tests, we pushed the memory to 1070 MHz at its highest stable point. We were able to push both a tad higher and have them stable in one test or another, but these clocks are stable in every test we ran and while gaming in several state-of-the-art video games. If it’s not stable during gaming and extended testing, it’s not a true overclock; this is the true stable overclock for our card without voltage tweaking.
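To put those clocks in perspective, here is the percentage math as a short Python sketch. The 810 MHz reference core clock is derived from the "20 MHz above stock" statement earlier in the review. The 1002 MHz memory base is an assumption on our part: it takes the 4008 MHz in the spec table to be a quad-pumped effective rate and the 1070 MHz result to be a base clock.

```python
def overclock_percent(base_mhz: float, oc_mhz: float) -> float:
    """Percentage increase of an overclocked frequency over its base."""
    return (oc_mhz - base_mhz) / base_mhz * 100


# Core: 830 MHz factory clock, 810 MHz reference (830 minus the 20 MHz bump).
core_vs_factory = overclock_percent(830, 920)
core_vs_reference = overclock_percent(810, 920)

# Memory: 1002 MHz base is assumed to be 4008 MHz effective divided by 4.
memory_gain = overclock_percent(4008 / 4, 1070)

print(f"Core vs. factory clock:   {core_vs_factory:.1f}%")
print(f"Core vs. reference clock: {core_vs_reference:.1f}%")
print(f"Memory vs. assumed base:  {memory_gain:.1f}%")
```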

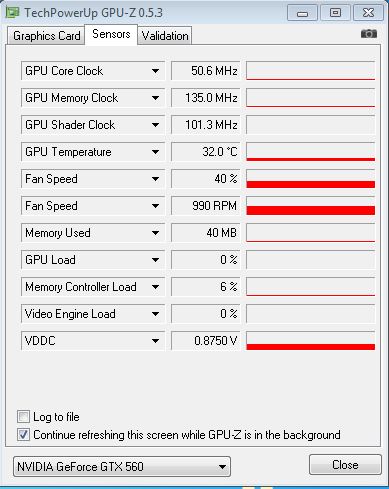

TEMPERATURES

We used GPU-Z to record temperatures and FurMark to heat the card up. We didn’t use a preset time in FurMark; instead we ran it until the card’s maximum temperature had been reached and had leveled off for a couple of minutes. Let’s face it, FurMark is unrealistic in any foreseeable case, because no game or productivity program is going to drive a GPU at 98% utilization all the time. What FurMark gives you is worst-case thermals, which makes it a useful tool for showing the extremes; seldom will you drive a GPU that hard continuously in real life.

At idle, with the core and memory at minimal power consumption levels, we got a reading of 32°C and a fan speed of 990 RPM, with the fans whisper quiet at this level.

After running FurMark until the temperatures leveled off for a couple of minutes, we hit a reasonable 69°C with the fans still whisper quiet at 1740 RPM. That’s pretty impressive considering the noise people made about fan noise on the GTX 4xx lineup.
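We judged "leveled off" by eye from the GPU-Z readout, but the same rule is easy to automate against a temperature log. The sketch below is one possible check, not the method we used: it assumes one reading per second, and the 120 sample window and 1°C tolerance are values we picked for illustration.

```python
def has_leveled_off(
    temps_c: list[float],
    window: int = 120,
    tolerance_c: float = 1.0,
) -> bool:
    """Return True once the most recent readings have stopped climbing.

    Assumes one temperature sample per second, so the default window of
    120 samples corresponds to "a couple of minutes". The tolerance is an
    arbitrary choice for this sketch.
    """
    if len(temps_c) < window:
        return False  # not enough data to judge yet
    recent = temps_c[-window:]
    return max(recent) - min(recent) <= tolerance_c


# Hypothetical log: a warm-up ramp followed by two steady minutes.
log = [32.0 + i * 0.5 for i in range(74)] + [69.0] * 120
print(has_leveled_off(log))
```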

AfterBurner and Easy Boost

We didn’t have any problem with MSI Afterburner handling the Gigabyte GTX-560, but the slider controls in MSI Afterburner make precise adjustments harder, and we had to compromise by one or two MHz on the memory to get close to the exact clocks we wanted. Again, no original clocks were shown, so we kept a stock shot of CPU-Z on screen to remind us of the factory clocks. It seems like displaying factory clocks at all times would be a no-brainer.

Easy Boost was a joy to use for overclocking the GTX-560. The plus and minus buttons let us increase or decrease clocks by a single MHz with ease, but the look of the program leaves a little to be desired. The look is utilitarian, and we like a little flash or bling in our overclocking utilities. Think of it as the difference between wearing a $99 off-the-shelf suit and a custom tailored silk suit and you’ll get our meaning.

As far as overclocking goes, we liked the functionality of Easy Boost better, but MSI Afterburner is prettier. Both lacked a display of factory clocks once the OC process began. For once, can’t we have it all: a slick, pretty interface with easy 1 MHz increases and decreases, voltage tweaking options for all cards, stock factory clocks shown after the OC begins, and a simple button that launches ATITool for stability testing? To us, these basic functions should be built into every overclocking utility so end users can avoid the inevitable search for the right utilities to do all they need when overclocking.

Conclusion

When we broke out the camera and reloaded the operating system and games to test the Gigabyte GTX-560 OC we had our doubts about how happy a $199 video card could make our refined eye candy tastes.

We deliberately ran the Gigabyte GTX-560 against a stack of last generation cards, especially since this card will most likely be bought by GTX 200 or 400 series users looking for a DX11 compatible upgrade. Throughout the review, we mentioned how the $200 Gigabyte GTX-560 has performance comparable to top offerings from the previous generation.

From an enthusiast standpoint, with the economy in one of the largest downturns in history, the Gigabyte GTX-560 makes sense to us. Buyers can pop one in now, and when the price drops with the next generation’s release, pick up a bargain GTX-560 and run SLI for a few years of eye-popping eye candy.

| OUR VERDICT: GIGABYTE GTX-560 OC |

| Summary: The GIGABYTE GTX-560 OC is priced right and gives great performance. The Gigabyte GTX-560 has a sweet price point, sleek look, excellent cooling, and chucks out frames comparable to last generation’s most expensive top dogs. Given its solid performance, we award the GIGABYTE GTX 560 OC the Bjorn3D Silver Bear Award. |

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
