Dual-GPU Card? More like Dual-GPU beast! The GTX 690 is here: sleek, sexy, and with the power to back it up. Keep reading to see just how well this monster performs.

Fastest Dual-GPU beast: The NVIDIA GeForce GTX 690

At the NVIDIA Gaming Festival 2012 in Shanghai, China, NVIDIA’s CEO Jen-Hsun Huang introduced to the world the da ge (meaning “big brother” in Chinese) of the latest GeForce 600 family—the GTX 690. Designed with dual-Kepler GPUs, the GTX 690 is the fastest gaming card available on the market today.

When the GTX 680 was released, no one had any doubt that a dual-GPU version was in the pipeline. The question left in everyone’s mind was what kind of sacrifice in terms of performance and features would be needed to cram two Kepler GPUs onto one PCB. Traditionally, a dual-GPU card has its clock speed lowered and a few processing units disabled in order to meet the power and heat requirements. With the GTX 590, NVIDIA packed two fully functional GF110 GPUs onto the card but had to dial down the clock speed; each GPU ran significantly slower than it would on a GTX 580.


Thanks to the transition to the 28nm fabrication process, NVIDIA can use the relatively small and power-efficient GK104 found in the GTX 680, despite the fact that it is packed with 3.5 billion transistors. As a result, with the GTX 690, NVIDIA is able to keep all of the stream processors on the two GK104s for a total of 3072 (2×1536) and simply reduce the core clock speed to 915MHz (vs 1006MHz on the GTX 680) to produce a card that is almost equivalent to dual GTX 680s in SLI, while staying within a 300W TDP envelope. Looking at the specs, we should expect the GTX 690 to deliver about 90-95% of the performance of dual GTX 680s in SLI while consuming less power. If we consider the performance per watt ratio, the GTX 690 should be one of the most power-efficient cards available.
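As a rough sanity check on that 90-95% estimate, the clock speeds of the two cards can be compared directly. This is only a sketch: it assumes performance scales linearly with clock speed and that the two GPUs on the GTX 690 scale exactly like two GTX 680s in SLI, neither of which is guaranteed. The boost clocks (1019MHz and 1058MHz) come from the specification table.

```python
# Clock speeds in MHz, as listed in this review's specifications.
GTX_690 = {"base": 915, "boost": 1019}
GTX_680 = {"base": 1006, "boost": 1058}


def clock_ratio(card: dict, reference: dict, key: str) -> float:
    """Return the card's clock as a fraction of the reference card's clock."""
    return card[key] / reference[key]


# Assumption: performance is proportional to clock speed (a simplification).
base_ratio = clock_ratio(GTX_690, GTX_680, "base")
boost_ratio = clock_ratio(GTX_690, GTX_680, "boost")

print(f"Base clock ratio:  {base_ratio:.1%}")
print(f"Boost clock ratio: {boost_ratio:.1%}")
```

The base clock ratio lands at about 91% and the boost clock ratio at about 96%, which brackets the estimate above reasonably well.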

| Specifications | GTX 690 | GTX 680 | GTX 590 | GTX 580 |
|---|---|---|---|---|
| CUDA Cores (Stream Processors) | 2 x 1536 (3072) (2x GK104) | 1536 (GK104) | 2 x 512 (2x GF110) | 512 (GF110) |
| SMXs | 2 x 8 (16) | 8 | 2 x 16 (32) | 16 |
| Texture Units | 2 x 128 | 128 | 2 x 64 | 64 |
| ROPs | 2 x 32 (64) | 32 | 2 x 48 (96) | 48 |
| Core Clock | 915MHz | 1006MHz | 607MHz | 772MHz |
| Shader Clock | N/A | N/A | 1214MHz | 1544MHz |
| Boost Clock | 1019MHz | 1058MHz | N/A | N/A |
| Memory Clock | 6008MHz GDDR5 | 6008MHz GDDR5 | 3414MHz GDDR5 | 4008MHz GDDR5 |
| L2 Cache Size | 1024KB (512KB per GPU) | 512KB | 1536KB (768KB per GPU) | 768KB |
| Memory Bus Width | 2 x 256-bit | 256-bit | 2 x 384-bit | 384-bit |
| Total Memory Bandwidth | 384.4GB/s | 192.2GB/s | 327.7GB/s | 192.4GB/s |
| Texture Filtering Rate (Bilinear) | 234.2 GigaTexels/s | 128.8 GigaTexels/s | 77.7 GigaTexels/s | 49.4 GigaTexels/s |
| VRAM | 2 x 2GB (4GB) | 2GB | 2 x 1.5GB | 1.5GB |
| FP64 | 1/24 FP32 | 1/24 FP32 | 1/8 FP32 | 1/8 FP32 |
| TDP | 300W | 195W | 375W | 244W |
| Recommended Power Supply | 650W | 550W | 600W | 700W |
| Power Connectors | 2 x 8-Pin | 2 x 6-Pin | 2 x 8-Pin | 1 x 6-Pin, 1 x 8-Pin |
| Transistor Count | 2 x 3.5Bn (7.08Bn) | 3.5Bn | 2 x 3Bn | 3Bn |
| Manufacturing Process | TSMC 28nm | TSMC 28nm | TSMC 40nm | TSMC 40nm |
| Thermal Threshold | 98C | 98C | 97C | 97C |
| Launch Price | $999 | $499 | $699 | $499 |

Other than the roughly 9% drop in the base GPU clock speed (915MHz vs 1006MHz), the per-GPU specifications of the GTX 690 are identical to the GTX 680. We get the same number of cores, memory speed, and memory bandwidth per GPU. The GTX 690 has a total of 16 SMXs (2x 8), 256 texture units (2x 128), and 64 ROPs (2x 32). It has a maximum texture filtering rate of 234.2 GigaTexels/s and 384.4GB/s of memory bandwidth. In addition to the pair of GK104 GPUs clocked at 915MHz, the GTX 690 comes with 4GB of 6Gbps GDDR5 (2GB per GPU) on a combined 512-bit bus (2x 256-bit). The card will boost up to 1019MHz. Right off the bat, we can already see that this card should be significantly faster than the previous generation GTX 590.
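The bandwidth and texture filtering figures quoted above follow from the other specifications. The sketch below uses the usual peak-rate formulas (bus width times data rate, and texture units times core clock); the function names are ours, and the assumption of one bilinear texel per texture unit per clock is a simplification.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mtps * 1e6 / 1e9


def texture_fill_rate_gtexels(texture_units: int, core_clock_mhz: float) -> float:
    """Peak fill rate in GigaTexels/s, assuming one texel per unit per clock."""
    return texture_units * core_clock_mhz * 1e6 / 1e9


GPUS = 2
per_gpu_bw = memory_bandwidth_gbs(256, 6008)           # 256-bit bus, 6008MHz effective
per_gpu_fill = texture_fill_rate_gtexels(128, 915)     # 128 texture units at base clock

print(f"Memory bandwidth:  {per_gpu_bw:.1f} GB/s per GPU, {GPUS * per_gpu_bw:.1f} GB/s total")
print(f"Texture fill rate: {per_gpu_fill:.1f} GT/s per GPU, {GPUS * per_gpu_fill:.1f} GT/s total")
```

The fill rate works out to the 234.2 GigaTexels/s in the table, and the bandwidth lands within about 0.1GB/s of the quoted 384.4GB/s, a difference that comes down to rounding.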

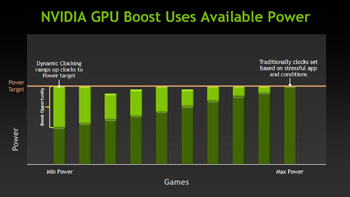

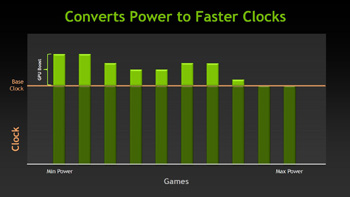

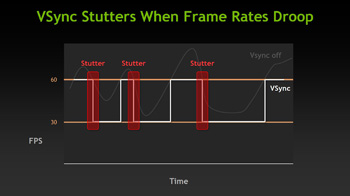

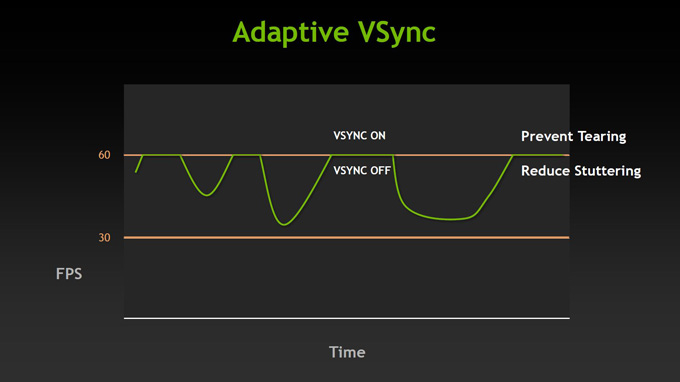

To power the card, there are 10 power phases (5 per GPU), and the card is equipped with two 8-pin PCI-E power connectors. Since each PCI-E 8-pin connector delivers up to 150W and the PCI-E slot delivers 75W, the card has a maximum of 375W at its disposal, 75W more than its 300W TDP. The additional power could allow higher overclocks, as GPU Boost operates within the 300W TDP limit. A 300W rating also does not mean that the card will use all 300W at full load; that depends on the 3D application being used and its graphics settings. For example, an older video game running with Adaptive VSync on will most likely not even get close to the 300W TDP, because the GPUs will be working at a much lower utilization.
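The power budget arithmetic above can be written out explicitly. This is a minimal sketch using only the figures quoted in this review; the constant and function names are ours.

```python
PCIE_SLOT_W = 75      # maximum power delivered through the PCI-E slot
EIGHT_PIN_W = 150     # maximum power delivered by each 8-pin connector
TDP_W = 300           # rated TDP of the GTX 690


def available_power(eight_pin_connectors: int) -> int:
    """Total power available to the card from the slot plus its connectors."""
    return PCIE_SLOT_W + eight_pin_connectors * EIGHT_PIN_W


budget = available_power(2)
headroom = budget - TDP_W

print(f"Available: {budget}W, TDP: {TDP_W}W, headroom: {headroom}W")
```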

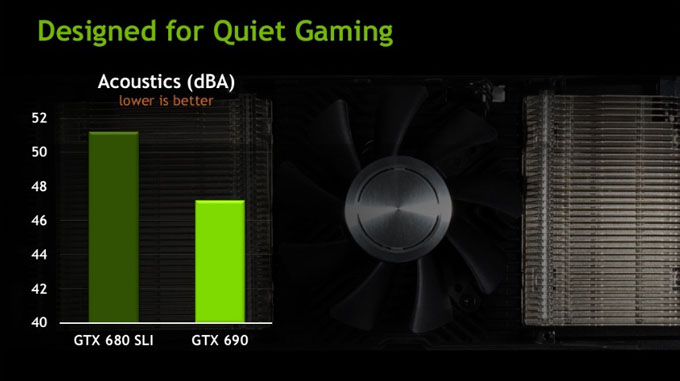

At first glance, the GTX 690 looks very similar to the GTX 590, with a single axial fan sitting in the center of the board and a GPU on either side. A dual vapor chamber heatsink with nickel-plated fins sits directly above the GPUs for maximum heat transfer. The fins are nickel-plated to avoid corrosion and abrasion. Running at 3000 RPM, the axial fan pulls cool air into the cooling fins, and the hot air is pushed out to either side of the card. The only potential flaw in this cooling design is that part of the hot air exhausted from the cooler will be blown back into the chassis, so proper air circulation inside the case is essential to making sure your video card performs to its specifications. From what we have tested so far, it seems that even a poorly ventilated system will manage to run the GTX 690 without spinning its fan up to the maximum level.

Noise level is one of the main focuses of the GTX 690. The engineers at NVIDIA have optimized the fin pitch and the angle at which the air hits the fin stack. It is carefully designed to generate a smooth airflow and reduce turbulence. All components directly underneath the fan are low-profile so that nothing protrudes and disrupts the smooth airflow. Additionally, the fan controller software has been fine-tuned so that the fan speed increases gradually as opposed to in discrete steps. Altogether, we should expect the GTX 690 to operate at a much lower noise level.

The most noticeable difference between the GTX 590 and the GTX 690 is the shroud. Gone is the plastic shroud; in its place are aluminum and an injection-molded magnesium fan housing. The metal shroud is bolted onto the card, so it should help with heat dissipation, which can further help with noise reduction. Two pieces of translucent polycarbonate cover the heatsinks. We feel that this is probably just to showcase the internal vapor chamber cooler rather than for performance.

For the displays, the GTX 690 uses the same three dual-link DVI ports and one mini DisplayPort as the GTX 590. It will continue to support 2D and 3D Surround and the accessory display setup (3+1). For 2D Surround, any three of the four display outputs can be used, but for 3D Surround, the three displays must be driven by the three DL-DVI ports. The maximum resolution supported is 5760×1080.

The GTX 690 takes home the award for the most expensive video card available with its $999 price tag. Given its specs, this price is not too surprising, since a single GTX 680 retails at $499. The card will be available today from ASUS and EVGA. Limited quantities are available, and NVIDIA expects to have more cards in stock on May 7th.

FASTER

SMX / GPU Boost


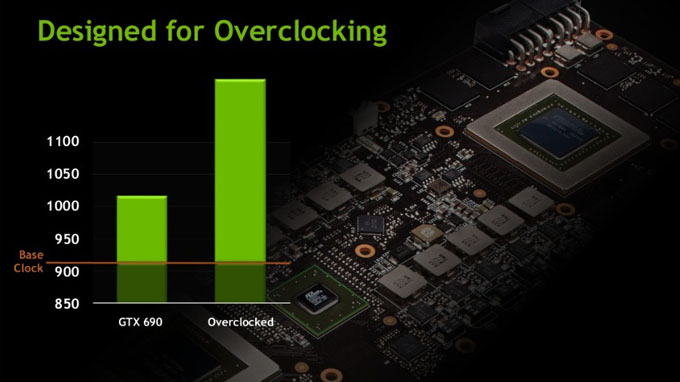

While there is no hard upper limit to GPU Boost, the GTX 690 has a rated boost clock of 1019MHz, roughly 100MHz above its 915MHz base clock. In theory, the card can run even faster than this, as there is power headroom available on the card.

Video Encoding

Kepler also features a new dedicated H.264 video encoder called NVENC. Fermi’s video encoding was handled by the GPU’s array of CUDA cores. By having dedicated H.264 encoding circuitry, Kepler is able to reduce power consumption compared to Fermi. This is an important step for NVIDIA, as Intel’s Quick Sync has proven to be quite efficient at video encoding and the latest AMD Radeon HD 7000 cards also feature a new Video Codec Engine.

NVIDIA lets software manufacturers implement support for the new NVENC engine if they wish to. They can even choose to encode using both NVENC and CUDA in parallel. This is very similar to what AMD has done with the Video Codec Engine in Hybrid mode. By combining the dedicated engine with the GPU’s CUDA cores, encoding should be much faster than with CUDA alone and possibly even faster than Quick Sync.

- Can encode full HD resolution (1080p) video up to 8x faster than real-time

- Support for H.264 Base, Main, and High Profile Level 4.1 (Blu-ray standard)

- Supports MVC (Multiview Video Coding) for stereoscopic video

- Up to 4096×4096 encoding

According to NVIDIA, besides transcoding, NVENC will also be used in video editing, wireless display, and videoconferencing applications. NVIDIA has been working with the software manufacturers to provide the software support for NVENC. At launch, Cyberlink MediaExpresso will support NVENC, and Cyberlink PowerDirector and Arcsoft MediaConverter will also add support for NVENC later.

Smoother

FXAA

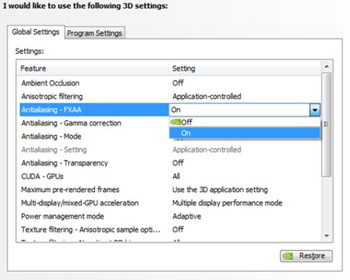

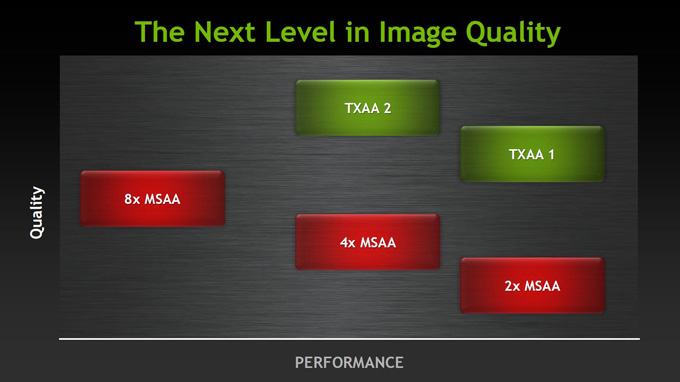

A faster card like the GeForce GTX 690 leaves more computational headroom for a better visual experience. NVIDIA once again brings FXAA to center stage for smoothing out jagged edges in games, claiming that it provides better visual quality than 4x MSAA. In fact, NVIDIA shows that FXAA on Kepler runs 60% faster than 4x MSAA while providing much smoother edges.

However, we did notice a little bit of blurring in some games when enabling FXAA in the NVIDIA ForceWare Drivers. Thankfully, NVIDIA is constantly improving this technology and will try to fix these problems in the near future.

The latest 3xx series ForceWare drivers have the ability to enable FXAA, making it possible to override internal game settings. NVIDIA informs us that this option will be available for both Fermi and Kepler cards only with the new ForceWare 3xx series drivers, while the older cards will still have to rely on individual game settings.

TXAA

At the moment, we cannot test TXAA as there are no game titles currently supporting it. NVIDIA is working with various game makers to implement TXAA in future game releases. While TXAA will be available at launch with Kepler, it will be available on Fermi as well with future driver releases.

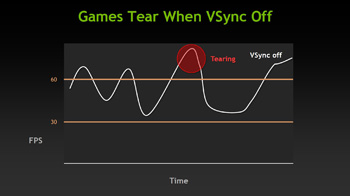

Adaptive VSync


Single GPU 3D Vision Surround

One area where Kepler really improves is multi-monitor support. NVIDIA has been falling behind AMD for a long time in this regard, and it is good to finally see some development on this front. Kepler now supports up to four displays. While AMD still has an edge with Eyefinity’s 6-display support, NVIDIA is at least closing the gap.

The GeForce GTX 690 comes with three Dual-Link DVI ports to make it easy to connect three DVI monitors in a 3D Vision Surround configuration. There will be an additional mini DisplayPort available as well for a 4th monitor. The ability to power four displays simultaneously means that it is now possible to run NVIDIA 3D Vision Surround with just 1 GPU. It is even possible to play games on 3 displays in the Surround mode and have a fourth display for other applications.

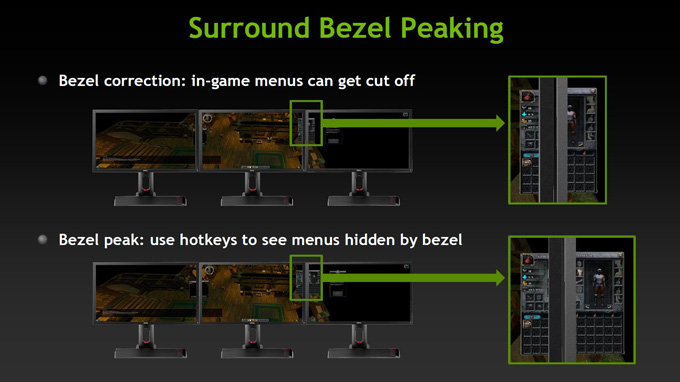

A couple of new features have been added to NVIDIA’s Surround. While Surround is a great option for games, it still has problems caused by custom resolutions. With a custom resolution, many invisible pixels are rendered in place of the monitors’ borders. This is done so that, even though users cannot see the pixels where the monitor borders are located, the world does not simply cut from one monitor to the next. It gives gaming a much more engaging feel, but it has its disadvantages as well. Because the invisible pixels are still rendered, some video games might use this area to display important in-game information. If the information is displayed right where the monitors meet, the gamer could lose a lot of valuable information.

The Surround Bezel Peeking option allows the user to press a hotkey (CTRL + ALT + B, by default) to show these hidden pixels while using custom Surround resolutions like 6040×1080. We have played games where certain menus opened up right at the borders of the monitors and it was impossible to see what was being rendered in the menus. Another instance was game subtitles that spanned multiple monitors, with some text getting lost at the borders. Surround Bezel Peeking fixes this problem with a quick hotkey that can be used whenever the user wishes to see the hidden pixels.
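To put a number on how much is hidden, the custom resolution can be compared against the native width of the panels. The sketch below assumes three 1920×1080 monitors side by side (matching the 5760×1080 Surround resolution mentioned earlier) and an equal correction for each bezel gap; the function name is ours.

```python
def hidden_bezel_pixels(surround_width: int, panel_width: int, panels: int = 3):
    """Return (total hidden columns, hidden columns per bezel gap)."""
    total_hidden = surround_width - panels * panel_width
    gaps = panels - 1  # three panels have two bezel gaps between them
    return total_hidden, total_hidden // gaps


total, per_gap = hidden_bezel_pixels(6040, 1920)
print(f"{total} pixel columns are hidden in total, {per_gap} behind each bezel gap")
```

With these assumptions, 280 columns of the 6040-pixel-wide image are never shown, which is easily enough to swallow a few characters of a subtitle or the edge of a menu.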

A Closer Look at the GTX 690


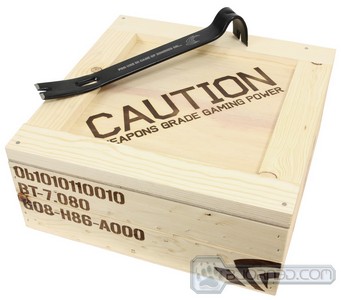

The GTX 690 is shipped in a rather eye-catching wooden military ammo crate. The NVIDIA logo is burned onto the side of the box. We can also see a very interesting code on the side of the box reading “0b1010110010”, which stands for “690” in binary. We could not decipher what the extra two lines on the box stand for, so if you think you can figure that out, let us know what you think “BT-7.080” and “808-H86-A000” might mean. NVIDIA provided us with a crowbar to pry open the box. What could actually be inside? Well, at this point, we have kind of already given it away. The GTX 690 sits inside the box in a foam cutout, fitting snugly in the padding to prevent any damage during shipment. The card we received is NVIDIA’s own reference card, so retail cards from its partners will most likely not be shipped the same way.
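The binary code on the crate is easy to verify. A quick check in Python:

```python
crate_code = "0b1010110010"

# int() with base 2 accepts the optional "0b" prefix.
model_number = int(crate_code, 2)

print(model_number)
```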


The GTX 690 has a tough but super sexy appearance thanks to its metal shroud. Many graphics card designs emphasize performance over aesthetics, because the card sits inside the PC and will rarely see the light of day. However, the GTX 690 is one of the nicer looking cards we have seen, and we have to give NVIDIA some credit for finally designing a good-looking card, with an aluminum frame and a polycarbonate window that lets us peek at the cooling fin stack.


The GEFORCE GTX logo on the top edge of the card lights up green when the card is operational. The logo sits on an injection-molded magnesium cover. NVIDIA really went all out to make the quality of the card’s design as good as possible without changing the expected price of the GTX 690. Holding the card in your hand, it feels like a very high quality product. That impression is backed up by what is underneath: we have seen how stable the new Kepler architecture was with the GTX 680, and the GeForce GTX 690 is a dual GK104 video card with an updated shroud, cooling, and power design. The GTX 690 takes up two expansion slots and is roughly 27.5cm (10.82 inches) long.


Two 8-pin PCI-E power connectors are needed to provide the necessary auxiliary power to the GTX 690. Each 8-pin connector delivers up to 150W, so the card can draw a maximum of 375W. Breaking that down, that’s a total of 300W (2x 150W) from the 8-pin power connectors and a maximum of 75W from the PCI-Express motherboard slot. A single GTX 680 has a TDP of 195W, so while NVIDIA is able to keep about 95% of the performance of dual GTX 680s in SLI, it has reduced the rated power by 90W (300W vs 2x 195W) by putting two GPUs on a single PCB with a well-designed 10-phase power design.


Our reference card is clocked at 915MHz core and is capable of Turboing to 1019MHz. Like the GTX 680, the GTX 690 has adjustable power target and clock offset. The default power target for the GTX 690 is 263W and it has a maximum power envelope of +35% which gives us 355W.
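The maximum power envelope follows directly from the default power target and the offset. The sketch below also compares the result against the 375W that the slot and the two 8-pin connectors can supply; the names are ours, and the figures are the ones quoted in this review.

```python
DEFAULT_POWER_TARGET_W = 263   # default power target of the GTX 690
MAX_OFFSET_PERCENT = 35        # maximum power target increase
CONNECTOR_BUDGET_W = 375       # PCI-E slot (75W) plus two 8-pin connectors (2x 150W)


def power_limit(target_w: float, offset_percent: float) -> float:
    """Power limit in watts after applying a percentage offset to the target."""
    return target_w * (1 + offset_percent / 100)


max_limit = power_limit(DEFAULT_POWER_TARGET_W, MAX_OFFSET_PERCENT)
margin = CONNECTOR_BUDGET_W - max_limit

print(f"Maximum power limit: {max_limit:.0f}W, margin to connector budget: {margin:.0f}W")
```

Even at the maximum offset, the limit stays about 20W under what the power connectors and slot are rated to deliver.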


The ports on the GTX 690 are identical to those on the GTX 590: three DL-DVI ports and a mini DisplayPort. With the GTX 690, the DisplayPort has been upgraded to version 1.2. The new revision essentially doubles the total link bandwidth to 21.6 Gbps (17.28 Gbps of usable data rate), which means that the port can drive higher resolutions, higher refresh rates, and greater color depth. It is now capable of supporting two 2560×1600 monitors at 60Hz or four 1920×1200 monitors.
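A back-of-the-envelope calculation shows why those monitor configurations fit. DisplayPort 1.2 uses 8b/10b line coding, so 21.6 Gbps on the wire leaves 17.28 Gbps for data. The sketch below assumes 24 bits per pixel, assumes 60Hz for the 1920×1200 case (the refresh rate is not stated above), and ignores blanking intervals, so real requirements are somewhat higher than these figures.

```python
RAW_LINK_GBPS = 21.6             # DisplayPort 1.2 total link rate
ENCODING_EFFICIENCY = 8 / 10     # 8b/10b line coding overhead
BITS_PER_PIXEL = 24              # assumption: 8 bits per color channel


def stream_gbps(width: int, height: int, refresh_hz: int,
                bpp: int = BITS_PER_PIXEL) -> float:
    """Uncompressed pixel data rate in Gbps, ignoring blanking intervals."""
    return width * height * refresh_hz * bpp / 1e9


effective = RAW_LINK_GBPS * ENCODING_EFFICIENCY
two_1600p = 2 * stream_gbps(2560, 1600, 60)
four_1200p = 4 * stream_gbps(1920, 1200, 60)   # 60Hz is an assumption

print(f"Usable link rate:  {effective:.2f} Gbps")
print(f"Two 2560x1600@60:  {two_1600p:.2f} Gbps")
print(f"Four 1920x1200@60: {four_1200p:.2f} Gbps")
```

Both configurations come in under the usable link rate, with the four-monitor case being the tighter of the two.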

Comparing the rear of the GTX 690 and GTX 590, we notice that the exhaust vent on the back has been shifted slightly. The GTX 690 has the DVI port on the bottom of the card and the exhaust vents on the top, while the GTX 590 has them switched. The newer arrangement should be more efficient than the previous design at letting hot air escape from the case.


Unlike the GTX 590, which has a partially covered back, the GTX 690 has no backplate, exposing the back of the PCB. While it’s hard to see, we can somewhat make out the 8 video memory chips (256MB each) that are hidden on the other side of the PCB right around each GPU. You can see them in the picture below. The card comes with a total of 4GB of VRAM, but do not forget that since there are two GPUs on this PCB, each GPU only has 2GB to work with. What exactly does this mean for the end user? If you are planning on playing video games in 3D Vision Surround, or just plain NVIDIA Surround mode, you might find it difficult to max out the graphics settings, especially anti-aliasing, because a massive 5760×1080 resolution with high AA will easily eat up those 2GB of video memory.


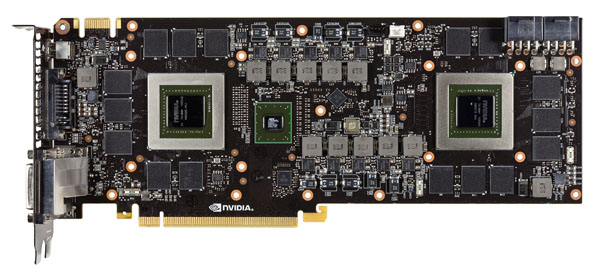

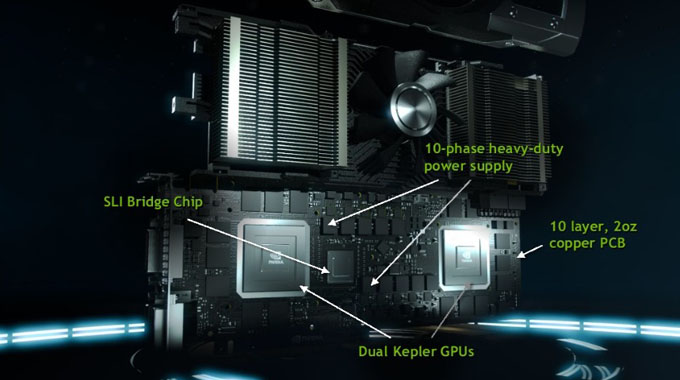

We can see the two GPU dies of the GTX 690 in the picture above. Notice the lack of a heatspreader above each die. The vapor chamber cooler sits directly on the GPU die to conduct heat away as effectively as possible. In the middle of the card, we can see NVIDIA’s 10-phase power design, which gives each GPU 5 dedicated phases. Also, note that NVIDIA’s past dual-GPU video cards always used the in-house NVIDIA NF200 PCI Express switch. However, since the NF200 is a PCI-E 2.0 switch, it cannot provide sufficient bandwidth, so NVIDIA has to use a third-party PCI-E 3.0 switch from PLX.

Here we have a closer look at the card disassembled, and arrows clearly pointing to the parts we explained above. However, we would also like to talk a little about the cooler design of the GTX 690. The GTX 690 comes with a dual vapor chamber heatsink design. This is a better solution over what the reference GTX 680 came with. Harking back to the Fermi architecture, both the GTX 580 and 590 came with a very nice vapor chamber design. This is why the GTX 580 was able to operate slightly cooler than the GTX 680 at full load. The GTX 690 comes with the same heatsink design, making it possible to run quieter, cooler and gain more GPU boost when the conditions are correct. The fan in the middle is equipped with special acoustic materials to make sure the user will never hear it running. The noise coming from the video card will be merely the noise caused by the actual air hitting against the fins and sides of the video card, causing turbulence.

Testing Methodology

The OS we use is Windows 7 Pro 64bit with all patches and updates applied. We also use the latest drivers available for the motherboard and any devices attached to the computer. We do not disable background tasks or tweak the OS or system in any way. We turn off drive indexing and daily defragging. We also turn off Prefetch and Superfetch. This is not an attempt to produce bigger benchmark numbers. Drive indexing and defragging can interfere with testing and produce confusing numbers. If a test were to be run while a drive was being indexed or defragged, and then the same test was later run when these processes were off, the two results would be contradictory and erroneous. As we cannot control when defragging and indexing occur precisely enough to guarantee that they won’t interfere with testing, we opt to disable the features entirely.

Prefetch tries to predict what users will load the next time they boot the machine by caching the relevant files and storing them for later use. We want to learn how the program runs without any of the files being cached, and we disable it so that we do not have to clear Prefetch before each test run to get accurate numbers. Lastly, we disable Superfetch. Superfetch loads often-used programs into memory. It is one of the reasons that Windows Vista occupies so much memory. Vista fills the memory in an attempt to predict what users will load. Having one test run with files cached and another test run with the files uncached would result in inaccurate numbers. Again, since we can’t control its timing precisely, we turn it off. Because these four features can potentially interfere with benchmarking and are out of our control, we disable them. We do not disable anything else.

We ran each test a total of 3 times, and reported the average score from all three scores. Benchmark screenshots are of the median result. Anomalous results were discounted and the benchmarks were rerun.
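As a small illustration of how the three runs are summarized, the sketch below computes the average that we report and the median run that the screenshots come from. The scores are hypothetical placeholders, not results from this review.

```python
from statistics import mean, median


def summarize_runs(scores):
    """Return the average and median of exactly three benchmark runs."""
    if len(scores) != 3:
        raise ValueError("expected exactly three runs")
    return {"average": mean(scores), "median": median(scores)}


# Hypothetical frame rates from three runs of the same test.
runs = [118, 120, 125]
summary = summarize_runs(runs)

print(f"Reported score: {summary['average']}, screenshot taken from run scoring {summary['median']}")
```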

Please note that due to new driver releases with performance improvements, we rebenched every card shown in the results section. The results here will be different than previous reviews due to the performance increases in drivers.

Test Rig

| Test Rig | |
|---|---|
| Case | Cooler Master Storm Trooper |
| CPUs | Intel Core i7 3960X (Sandy Bridge-E) @ 4.6GHz |
| Motherboards | GIGABYTE X79-UD5 X79 Chipset Motherboard |
| Ram | Kingston HyperX Genesis 32GB (8x4GB) 1600MHz 9-9-11-27 Quad-Channel Kit |
| CPU Cooler | Noctua NH-D14 Air Cooler |
| Hard Drives | 2x Western Digital RE3 1TB 7200RPM 3Gb/s Hard Drives |
| SSD | 1x Kingston HyperX 240GB SATA III 6Gb/s SSD |
| Optical | ASUS DVD-Burner |
| GPU | NVIDIA GeForce GTX 690 2x2GB (4GB) Video Card; 2x NVIDIA GeForce GTX 680 2GB Video Cards in 2-way SLI; NVIDIA GeForce GTX 680 2GB Video Card; 3x NVIDIA GeForce GTX 580 1.5GB Video Cards in 3-way SLI; 2x NVIDIA GeForce GTX 580 1.5GB Video Cards in 2-way SLI; NVIDIA GeForce GTX 580 1.5GB Video Card; AMD HD7970 3GB Video Card; AMD HD7950 3GB Video Card; AMD HD7870 2GB Video Card; AMD HD6990 4GB Video Card |
| PSU | Cooler Master Silent Pro Gold 1200W PSU |
| Mouse | Razer Imperator Battlefield 3 Edition |
| Keyboard | Razer Blackwidow Ultimate Battlefield 3 Edition |

Synthetic Benchmarks & Games

We will use the following applications to benchmark the performance of the NVIDIA GeForce GTX 690 video card.

| Benchmarks |

|---|

| 3DMark Vantage |

| 3DMark 11 |

| Crysis 2 |

| Just Cause 2 |

| Lost Planet 2 |

| Metro 2033 |

| Battlefield 3 |

| Unigine Heaven 3.0 |

| Batman Arkham City |

| Dirt 3 |

| H.A.W.X. 2 |

3DMark 11

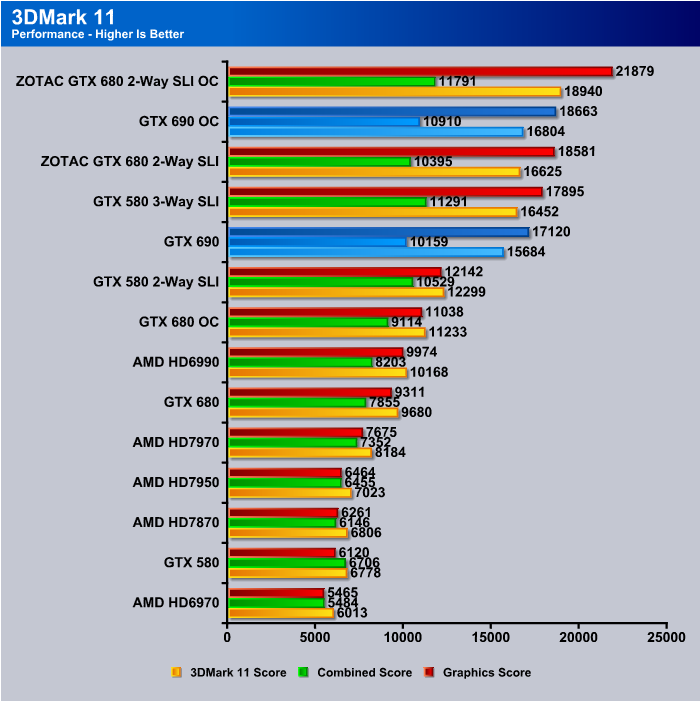

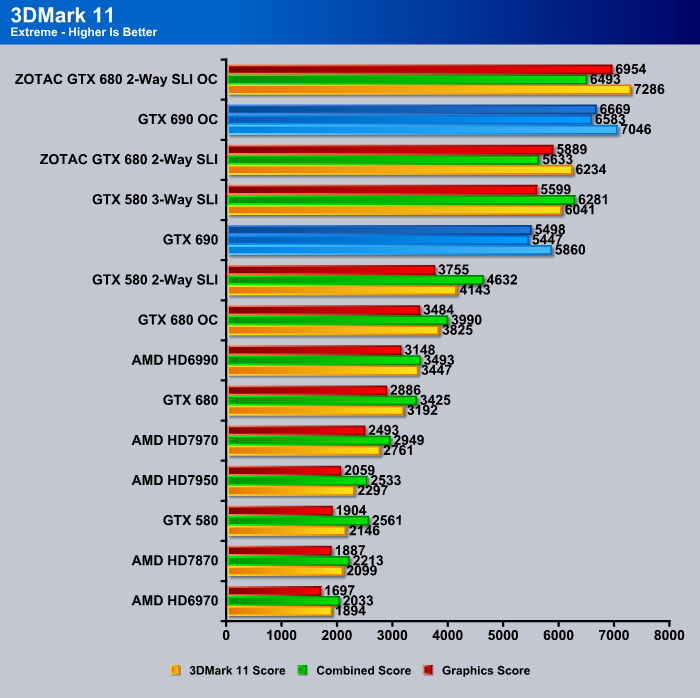

We start off with the industry standard 3DMark 11. The GTX 690 is about 7% slower than two GTX 680s in SLI. However, notice that the card trails just a tad behind the 3-way GTX 580 setup. This is a very impressive result from just one card. Compared to the fastest single-card offering from AMD, the HD 6990, the GTX 690 is 50% faster. There is no doubt that the GTX 690 is the fastest card on the market today.

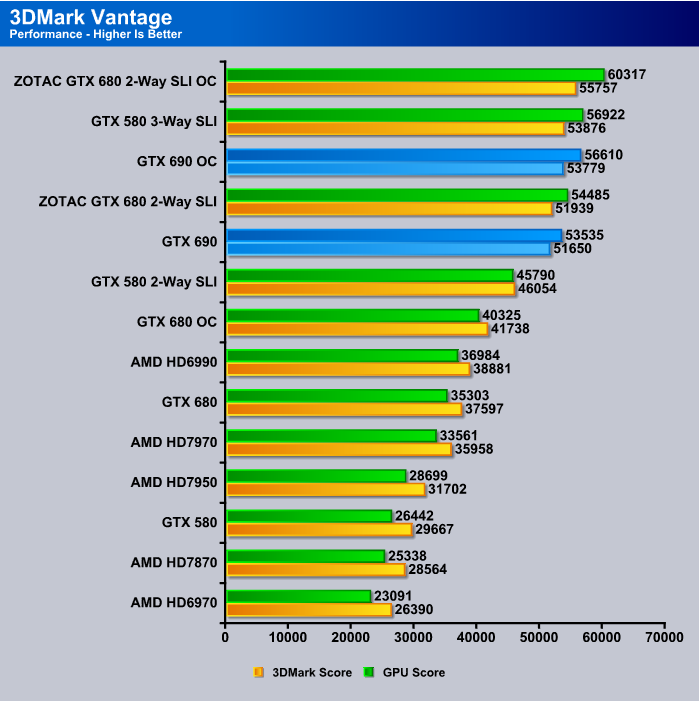

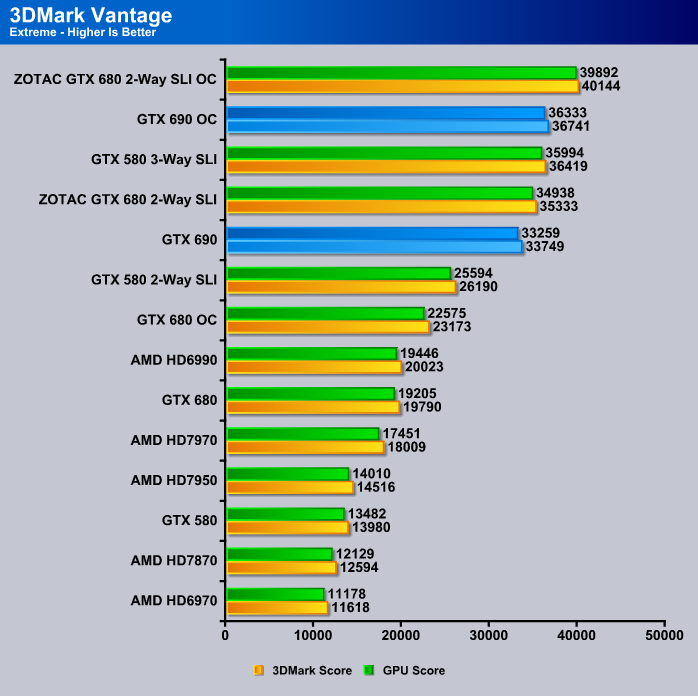

3DMark Vantage

In the Performance preset, there is about a 2% difference between the GTX 680s in SLI and the GTX 690. In the Extreme preset, the difference widens slightly to 4%.

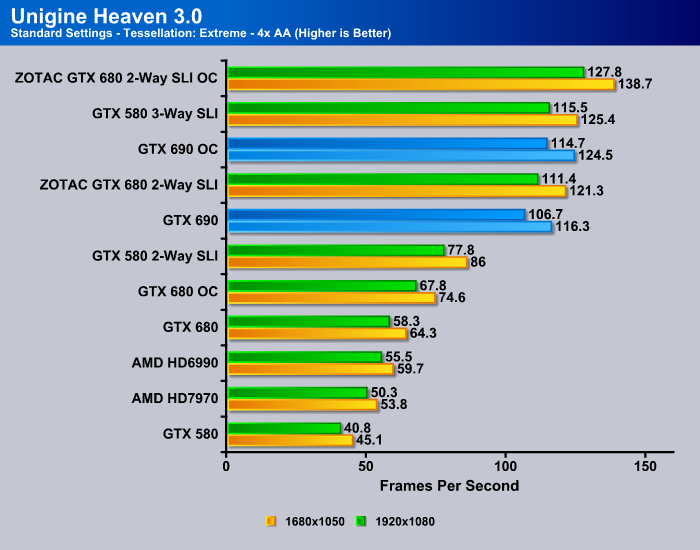

Unigine Heaven 3.0

Unigine Heaven is a benchmark program based on Unigine Corp’s latest engine, Unigine. The engine features DirectX 11, Hardware tessellation, DirectCompute, and Shader Model 5.0. All of these new technologies combined with the ability to run each card through the same exact test means this benchmark should be in our arsenal for a long time.

Unigine Heaven 3.0 also shows about a 5% difference between the GTX 680 SLI and the GTX 690. The HD 6990 is built on the older VLIW4 architecture, which is weak at heavy tessellation. Despite its dual GPUs, it manages only about half of what the GTX 690 is capable of. The HD 7000 series with the Graphics Core Next architecture is much better at tessellation, as we can see with the HD 7970, so we should expect a dual-GPU card based on the HD 7970 to perform much better. Even if we assume 100% scaling for such a card, the GTX 690 is still faster here.

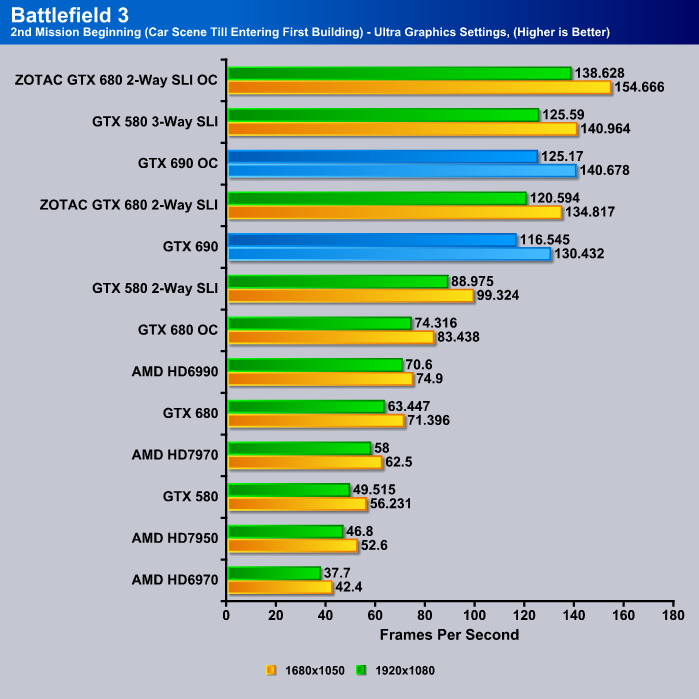

Battlefield 3

There is about a 2-3% difference between the GTX 680 SLI and the GTX 690. The 3-way GTX 580 setup is still faster than the GTX 690, but once we overclock the card, we are able to achieve the same performance with just one card.

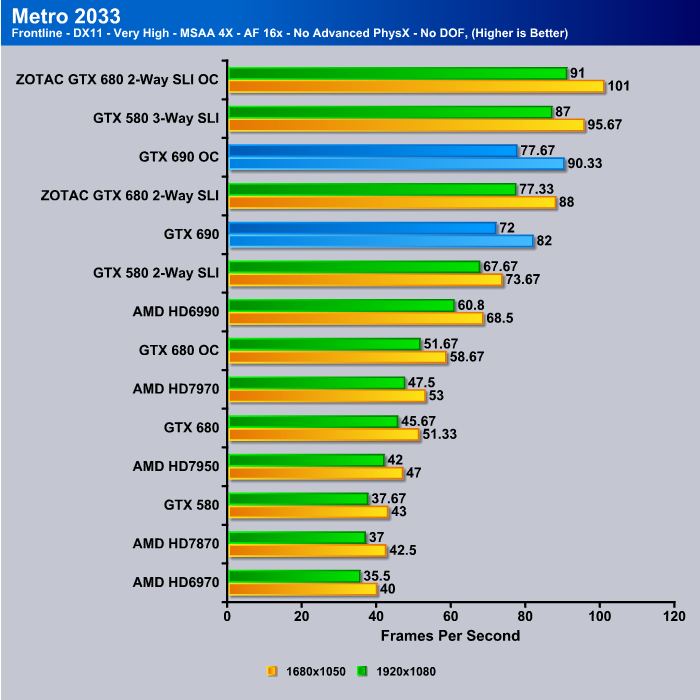

Metro 2033

With Metro 2033, the GTX 690 is about 7% slower than the GTX 680 SLI. Compared against the HD 6990, the card is about 20% faster. AMD cards traditionally do very well in this game.

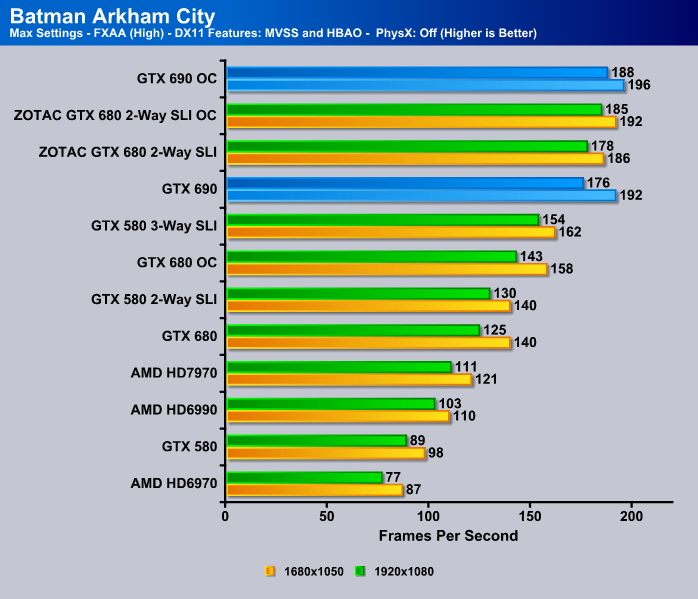

Batman Arkham City

Only two frames separate the GTX 680 SLI and the GTX 690, which works out to be about 1%. Kepler is clearly much better suited to Batman Arkham City, and we can see that even the GTX 680 is almost catching up with the GTX 580 SLI. With two Kepler GPUs, the GTX 690 is about 40% faster than one GTX 680.

Compared to the fastest card from AMD, we can see the GTX 690 is 59% faster than the HD 7970.

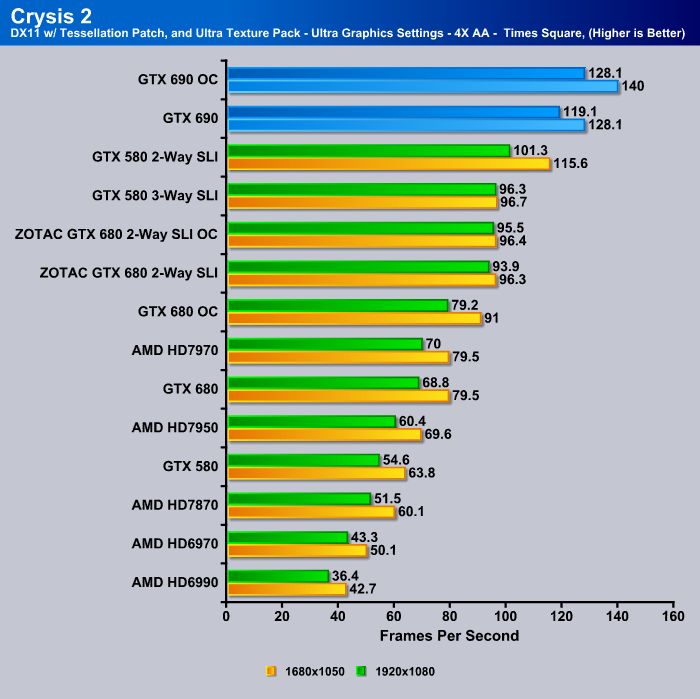

Crysis 2

In Crysis 2, we see the GTX 690 actually out-performs the GTX 680 SLI. We think the latest drivers from NVIDIA probably optimized the card better in this game.

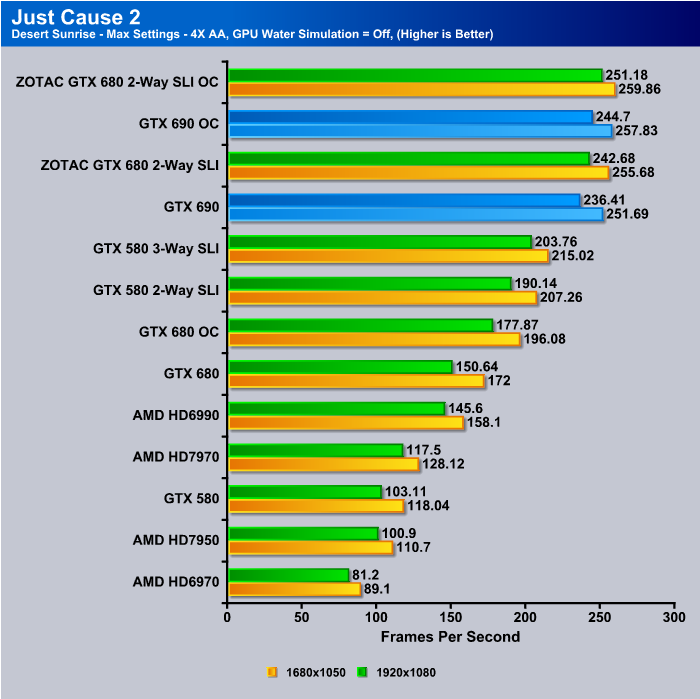

Just Cause 2

The GTX 690 is 16% faster than the 3-way GTX 580 SLI and 62% faster than the HD 6990.

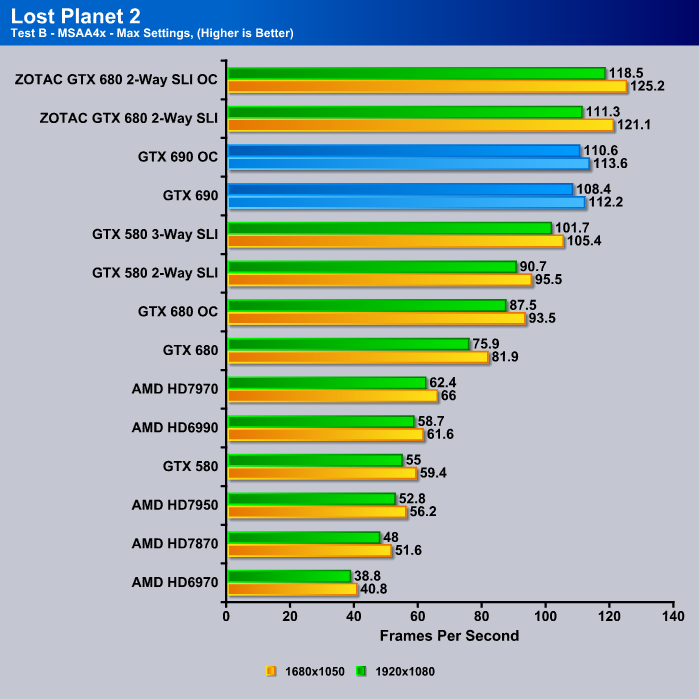

Lost Planet 2

The GTX 690 beats the GTX 580 3-way SLI again here. Compared to the fastest single-GPU AMD card, the HD 7970, the GTX 690 is 74% faster. We realize that comparing the dual-GPU GTX 690 to the single-GPU HD 7970 is very lopsided, but at the moment, there is no doubt that the GTX 690 is the fastest card on the planet.

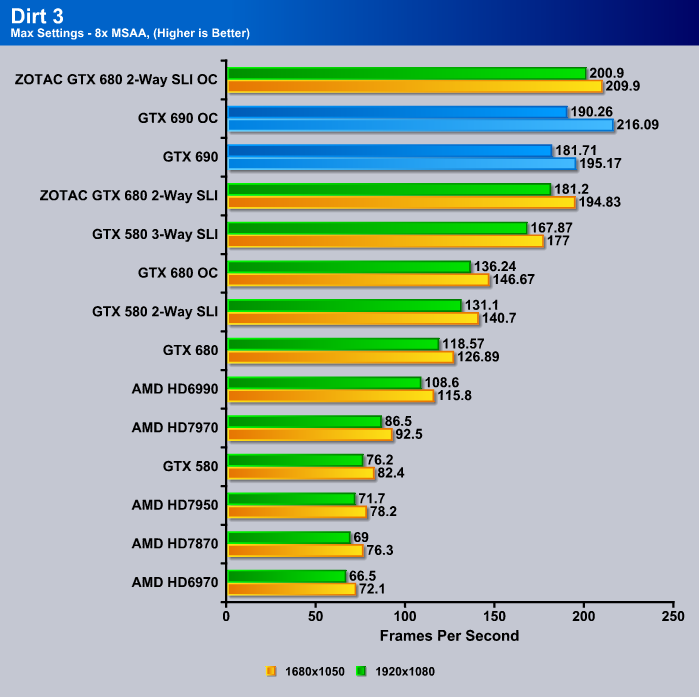

Dirt 3

NVIDIA cards traditionally perform better in Dirt 3. The GTX 680 is already faster than the HD 6990, and the GTX 690 extends the lead by 53%. Compared to the HD 7970, the GTX 690 is more than twice as fast, and if we assume AMD’s upcoming dual-GPU card has 100% scaling, the GTX 690 would still come out to be the faster card.

H.A.W.X. 2

H.A.W.X. 2 gives us a very good overview of how the NVIDIA cards scale with multiple GPUs. Here we can see the GTX 690 is about 76% faster than a single GTX 680. Compared against two GTX 680s in SLI, the card is only about 2% slower.

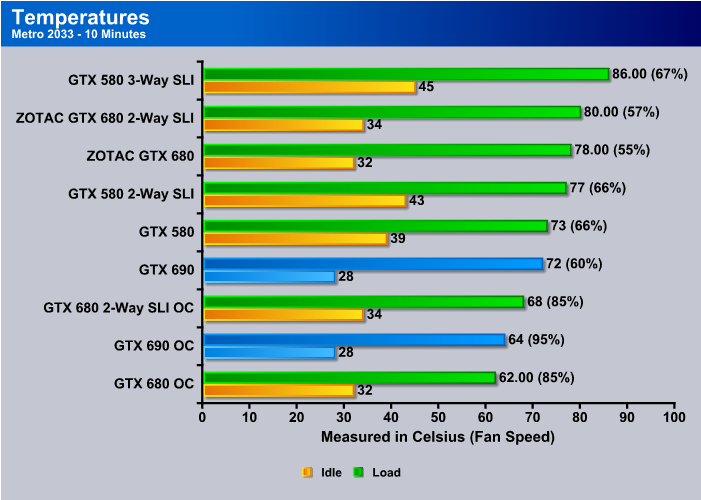

TEMPERATURES

To measure the temperature of the video card, we used Precision X and ran Metro 2033 benchmark in a loop to find the Load temperatures for the video cards. The highest temperature was recorded. After looping for 10 minutes, Metro 2033 was turned off and we let the computer sit at the desktop for another 10 minutes before we measured the idle temperatures.

We can see that the fan running in auto mode goes up to 60% on the GTX 690. However, we also noticed that 60% on the GTX 690 sounds quieter than 60% on a GTX 680. This is most likely due to the different fan design on the GTX 690. Despite housing two GPUs, the card actually runs cooler than a single GTX 580 or GTX 680. This is most likely because of the improved vapor chamber heatsink design on the GTX 690, which the GTX 680 did not have. If the GTX 680 had come with a vapor chamber design, it would likely have run cooler than the GTX 690. The better cooling could also be due to the improved airflow channeling on the GTX 690, which we did not see on the GTX 590.

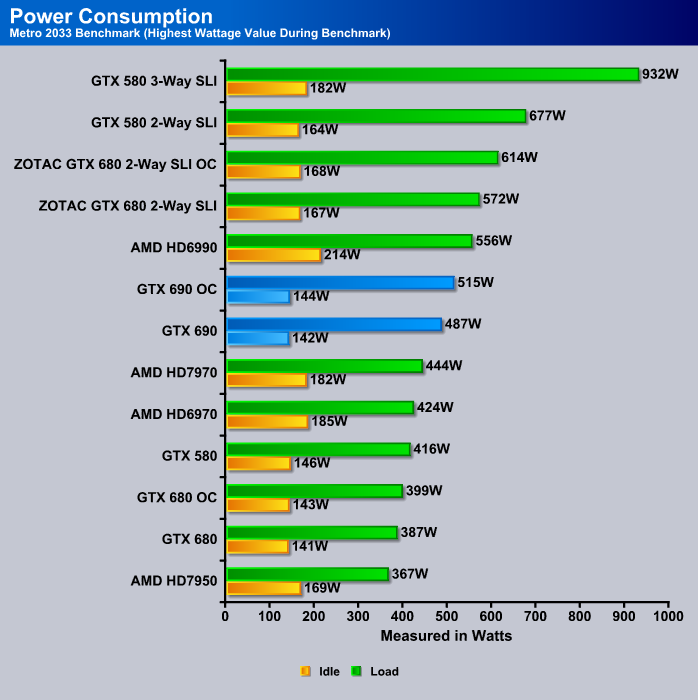

POWER CONSUMPTION

To get our power consumption numbers, we plugged in our Kill A Watt power measurement device and took the idle reading at the desktop during our temperature readings. We left the system at the desktop for about 15 minutes before taking the idle reading. Then we ran Metro 2033 for a few minutes and recorded the highest power usage.

One of the reasons that we liked the GTX 680 is its low power consumption. We can see that even with dual GPUs, the GTX 690 consumes 142W at idle, just 1 watt more than the GTX 680. Under load, the card consumes 487 watts, 100 watts more than the GTX 680 but 85 watts fewer than dual GTX 680s in SLI. Looking at the numbers, we can see that the card consumes 40 watts more than the HD 7970 and 40 watts fewer than the HD 6990.

In terms of performance per watt, there is nothing that can beat the GTX 690.
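To make the performance-per-watt comparison concrete, here is a rough sketch using the load power figures above together with the H.A.W.X. 2 scaling results (GTX 690 about 76% faster than a GTX 680 and about 2% slower than GTX 680 SLI). It is illustrative only: it relies on a single game, and the wattages are measured at the wall for the whole system, which works against the single-card configurations.

```python
# Load power in watts at the wall, derived from the readings quoted above.
LOAD_WATTS = {
    "GTX 680": 487 - 100,
    "GTX 690": 487,
    "GTX 680 SLI": 487 + 85,
}

# Performance relative to a single GTX 680, based on the H.A.W.X. 2 results.
RELATIVE_PERF = {
    "GTX 680": 1.00,
    "GTX 690": 1.76,
    "GTX 680 SLI": 1.76 / 0.98,   # the GTX 690 is about 2% slower than SLI
}


def perf_per_watt(card: str) -> float:
    """Relative performance delivered per watt of system power."""
    return RELATIVE_PERF[card] / LOAD_WATTS[card]


ranking = sorted(LOAD_WATTS, key=perf_per_watt, reverse=True)
for card in ranking:
    print(f"{card:12s} {perf_per_watt(card) * 1000:.2f} relative perf per kW")
```

Under these assumptions the GTX 690 comes out on top, followed by the GTX 680 SLI setup and then the single GTX 680.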

Overclocking

The new Kepler architecture is very friendly when it comes to overclocking. As mentioned previously, the card already comes with NVIDIA’s GPU Boost, which works very similarly to Intel’s Turbo technology on the latest Sandy Bridge or Ivy Bridge architecture. When the video card’s power target is not met during full load at stock clocks, the video card will automatically overclock itself to 1019MHz max. The card will use GPU Boost only when the operating conditions are perfect for the video card, including the temperature and the power consumption.

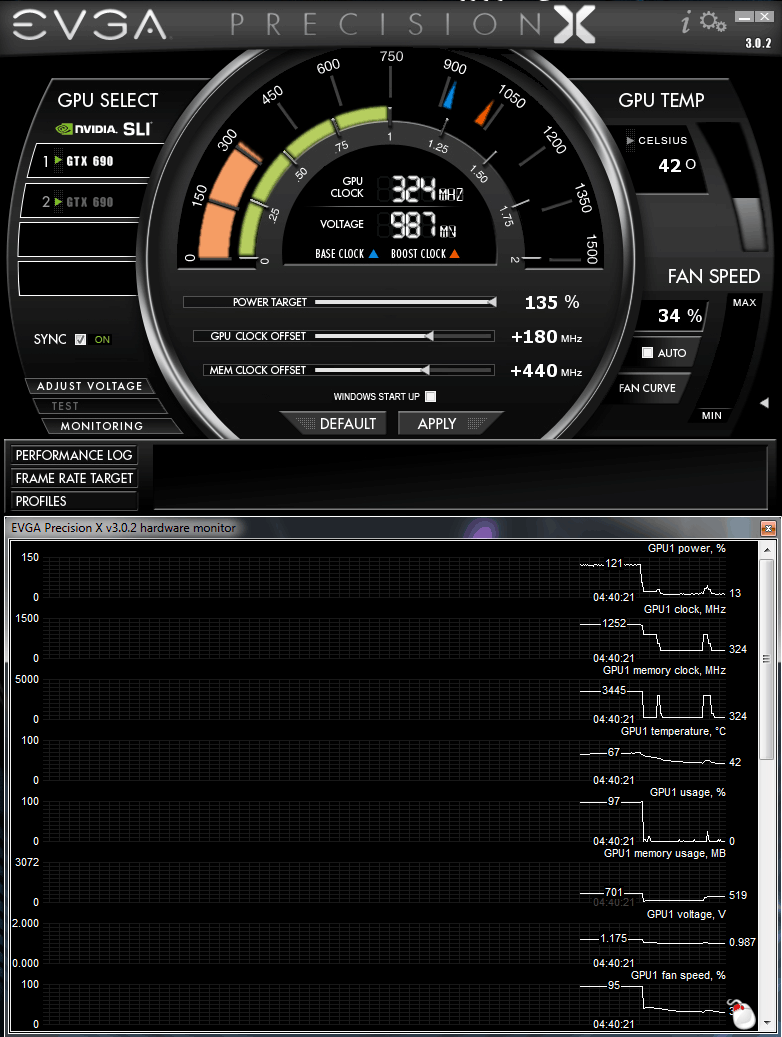

Moving on to manual overclocking, the NVIDIA GeForce GTX 690 overclocks the same way as the GTX 680. We can overclock the card easily with the help of EVGA’s Precision X overclocking utility. Precision X is very much like the MSI Afterburner overclocking utility. It has all the main features, like voltage adjustments for the GPU, manual core clock and memory frequency settings, auto or manual fan settings, and real-time monitoring of voltages, clock speeds, fan speeds, temperatures, and much more. To top it off, Precision X also has manual fan curve options, which let the user define how fast the fan will spin at each temperature.

Finally, a very interesting and useful tool in Precision X is the Frame Rate Target, which allows the user to set a maximum frame rate for their 3D applications. Suppose there is a game that sometimes runs well over 100FPS during gameplay, but also drops under 60FPS in other areas. With the Frame Rate Target limiter, the user can set a 60FPS limit, and whenever the game would otherwise run faster than 60FPS, the video card will be limited to rendering 60FPS. This limits erratic changes in frame rate and yields an overall smoother gameplay experience.
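Conceptually, a frame rate limiter just waits out the remainder of each frame's time budget. The sketch below illustrates that idea only; it is not how Precision X or the NVIDIA driver actually implements the Frame Rate Target, and the function names are ours.

```python
def limiter_delay(render_time_s: float, target_fps: float) -> float:
    """Seconds to wait after rendering so the frame rate never exceeds target_fps."""
    frame_budget = 1.0 / target_fps
    return max(0.0, frame_budget - render_time_s)


def effective_fps(render_time_s: float, target_fps: float) -> float:
    """Frame rate actually delivered once the limiter delay is applied."""
    return 1.0 / (render_time_s + limiter_delay(render_time_s, target_fps))


# A scene that renders at 100FPS is held to the 60FPS target,
# while a scene that only manages 50FPS is left untouched.
print(f"{effective_fps(1 / 100, 60):.1f} FPS")
print(f"{effective_fps(1 / 50, 60):.1f} FPS")
```

The limiter only ever slows fast frames down; it cannot help in the areas where the game already drops below the target.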

After only about 10 minutes of overclocking, we settled at a fairly high overclock with a Core Clock speed of 1251MHz and a Memory Frequency at 6888MHz. That’s roughly a 336MHz overclock on both GPUs on the Core Clock speed from a stock speed of 915MHz, and about a 880MHz overclock on the GDDR5 memory frequency. We can’t wait to see some LN2 overclocking on the GTX 690 in the near future, but for now all our tests were done just with the stock NVIDIA vapor chamber air cooler, and the results are still extremely impressive.

The GPU Core Clock frequency on the GPU-Z does not match the exact value we mentioned above, because the value for the Core Clock will depend upon how much GPU Boost is enabled in the 3D application you are running. This is just to show that this card has great overclocking potential.

Performance Comparison in Games

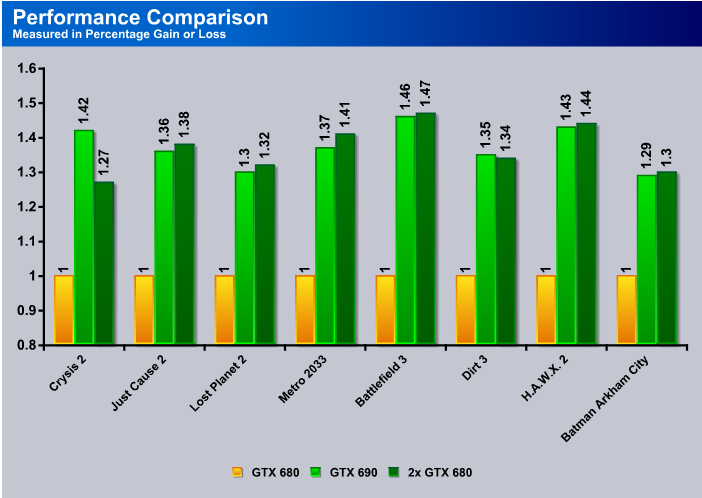

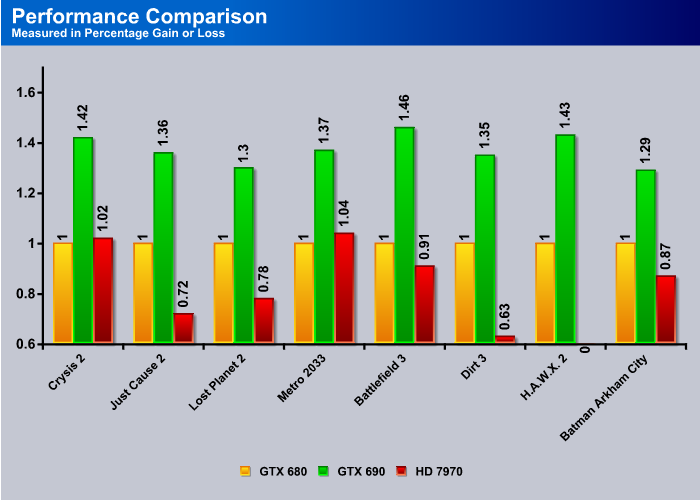

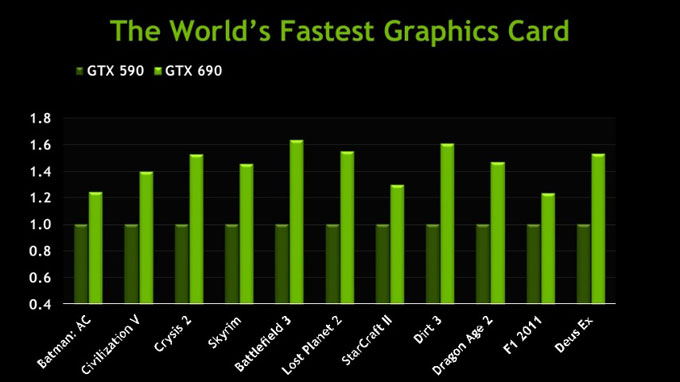

We have something new to take a look at in this review, and hopefully it will be helpful to you readers to see a more concise version of the results we got in our games. These charts should not be too hard to understand. We picked one card as our baseline for comparison; in this case, we chose the GTX 680. We set the GTX 680 at 100% performance and calculated how much faster or slower the other cards were compared to it. These figures were not calculated by simply dividing one number by another. Instead, we took each card’s result and found its difference from the GTX 680 result, then divided that difference by the result of the card we are comparing in the first place. This gives us the percentage increase or decrease for each card.
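As a rough sketch of this kind of normalization, the Python snippet below expresses a card’s result relative to a baseline set at 100%. It assumes the difference is divided by the baseline GTX 680 result, which is the usual convention and what setting the GTX 680 to 100% implies; the frame rates are made-up numbers, not our benchmark results.

```python
def relative_performance(baseline_fps, card_fps):
    """Return (percentage change vs. baseline, score with baseline = 100)."""
    change = 100.0 * (card_fps - baseline_fps) / baseline_fps
    return change, 100.0 + change

# Hypothetical frame rates for illustration only.
change, score = relative_performance(baseline_fps=60.0, card_fps=105.0)
print(f"{change:+.0f}% vs. baseline, chart value {score:.0f}%")
```

A card that scores 105FPS against a 60FPS baseline would therefore be plotted at 175%, meaning it is 75% faster than the baseline card.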

In the first set of results, we simply compared the GTX 690 and two GTX 680s in SLI to a single GTX 680. This allows us to easily see how much faster or slower the GTX 690 is compared to two GTX 680s, which cost exactly the same amount of money. Do keep in mind that, according to our testing, two GTX 680s will use more power and will end up running hotter than the GTX 690. The GTX 690 seemed to have a big gain in Crysis 2 compared to the other cards, but other than that the GTX 690 and two GTX 680s in SLI are too close to each other for any major performance difference to be visible during gameplay.

We also decided to add the HD 7970 to the mix. Keep in mind, however, that this is not an apples-to-apples comparison, as the HD 7970 is a single-GPU card, and AMD has not yet released its dual-GPU video card based on the latest 7000 series. We expect to see those cards some time around Computex, which is around the beginning of June. Because of this, we have compared AMD’s fastest single-GPU video card, the HD 7970, to the GTX 690 and the GTX 680. As we can see from the results, the HD 7970 lags behind the GTX 680 quite a bit, and the GTX 690 is obviously way ahead of AMD’s current offering.

GeForce GTX 590 Vs. GTX 690 Performance and Power

Unfortunately we do not have a GTX 590 lying around our testing lab at the moment, and we only received the GTX 690 yesterday, so it is very difficult to do a proper comparison between the older dual-GPU Fermi-based GTX 590 and the newer dual-GPU Kepler-based GTX 690. Since NVIDIA provided us with some results to check our benchmarks against, we will be using some of those results to give our readers an idea of how the GTX 690 might perform compared to its older brother, the GTX 590.

Performance-wise, the gains of the GTX 690 over the GTX 590 are similar to those we saw going from a GTX 680 to a GTX 690. Some games like F1 2011 do not show a big improvement in performance, but other more popular games like Battlefield 3 show a massive increase of about 60% in performance over a GTX 590. This is quite unheard of; we haven’t seen such a big leap in performance going from one architecture to another.

Finally, this is even more interesting. Not only did NVIDIA make a big leap in performance from the GTX 590 to the GTX 690, but they also increased power efficiency (performance per watt) with the Kepler architecture. The nice thing is that if we throw in one more GTX 690 and GTX 590 for quad-SLI power efficiency testing, it is very likely that the difference between the two cards would widen, making for an even more power efficient gaming system compared to the previous generation of cards.

Final Thoughts

The GTX 680 was the fastest single GPU card when it was launched a few weeks ago. We were quite impressed with what NVIDIA achieved with the card’s power consumption, heat output, and the performance. With the GTX 690, NVIDIA has raised the performance bar even higher.

With the GTX 690, NVIDIA is essentially able to cram two of the fastest GPUs into a single card, and the result is rather impressive. The GTX 690 delivers about 90-95% of the performance of two GTX 680s in SLI, while consuming 80 fewer watts of power. Not only is the card much more power efficient, it is also quiet thanks to the vapor chamber heatsink. While the card may not be exactly as fast as two GTX 680s, the trade-off is definitely worth it.

And let’s not forget about all the key advantages of the Kepler architecture, like the dedicated H.264 video encoder called NVENC, and GPU Boost. While FXAA, TXAA, and Adaptive VSync will be available on Fermi as well, they are still nice features to have on the Kepler architecture.

The one point that may dissuade some is the $1000 retail price. While we do like the card a lot, the asking price can be hard to swallow. The pricing of the card leaves a very difficult decision for a potential buyer shopping for the fastest card. Should one buy two GTX 680s and have slightly better performance but higher power consumption, or a single GTX 690 for its power efficiency? It is quite a tough decision to make. It is also important to note that only ASUS and EVGA will be selling GTX 690s in North America.

| OUR VERDICT: NVIDIA GeForce GTX 690 |

| Summary: The GeForce GTX 690 is the sexiest, quietest, coolest, and most powerful dual-GPU video card on the market. For an amazing package, this card definitely earns the Bjorn3D Golden Bear Award. |

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996

got mine runing 1075/1215/7500