The Seagate Constellation ES 2TB drive combined with the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card is a powerful pairing of a state-of-the-art RAID controller and high-end SAS drives.

Seagate Constellation

Seagate has always been a leader in the storage industry, and when SATA 6Gb/s came around we wondered how long it would take for SAS (Serial Attached SCSI) 6Gb/s to arrive. As it turned out, not long at all: the Seagate Constellation ES 2TB SAS 6Gb/s drives are in the test rig purring away. Of course, to run enterprise-class SAS 6Gb/s drives you need a SAS controller, and we have the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card, which handles both SATA 6Gb/s and SAS 6Gb/s drives.

Since we are looking at three 2TB drives (that's a whopping 6TB), we ran them as a single drive, 2xRAID0, 3xRAID0 and RAID5, and got some pretty amazing results. Enterprise-class drives and hardware RAID controllers don't come cheap, but the performance is very good. When you compare the storage afforded by the Seagate Constellation to the cost of a tiny 128GB SSD, it doesn't look as expensive. Figure a 2TB Constellation ES runs in the neighborhood of $299 and the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card runs $324, so for 2TB of enterprise-class storage you are out $623. For that you've upgraded to SAS 6Gb/s and SATA 6Gb/s and added 2TB of storage that's upgradable. Not just upgradable, but hardware RAIDable!
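The price arithmetic above is easy to check. This is a minimal sketch that uses only the prices quoted in this review and assumes 1TB = 1000GB (the decimal convention drive makers use); the three-drive RAID5 line is an illustrative extension assuming all three drives sit behind the one card.

```python
# Prices quoted in this review (USD, at time of writing).
DRIVE_PRICE = 299   # one Seagate Constellation ES 2TB
CARD_PRICE = 324    # LSI 3Ware SAS 9750-4i
DRIVE_GB = 2000     # drive makers count 1TB as 1000GB


def cost_per_gb(total_cost: float, usable_gb: float) -> float:
    """Dollars per usable gigabyte."""
    return total_cost / usable_gb


# One drive plus the card, as described in the text.
single = DRIVE_PRICE + CARD_PRICE
print(f"1 drive + card: ${single} -> ${cost_per_gb(single, DRIVE_GB):.3f}/GB")

# Assumption: three drives plus the card in RAID5, where one drive's worth
# of space holds parity, leaving two drives of usable capacity.
triple = 3 * DRIVE_PRICE + CARD_PRICE
raid5_gb = (3 - 1) * DRIVE_GB
print(f"3 drives + card, RAID5: ${triple} -> ${cost_per_gb(triple, raid5_gb):.3f}/GB")
```

Most of the cost per gigabyte in the single-drive case is the controller, so it drops as drives are added behind the same card.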

Key Features and Benefits

- Reduces design and support costs by building on the Seagate best-in-class enterprise storage foundation

- Features the maximum capacity available—up to 2TB—in a 3.5-inch enterprise-class hard disk drive

- Features a traditional 3.5-inch form factor with enhanced rotational vibration tolerance and high data integrity for enterprise-class reliability in a cost-effective drive

- Delivers proven reliability, with an MTBF of 1.2 million hours

- Lets you choose a 3Gb/s Enterprise SATA or 6Gb/s SAS interface which eases integration and delivers up to 2x the throughput of traditional 3Gb/s nearline hard drives

- Reduces energy and cooling costs, with the lowest power consumption of any nearline 3.5-inch drive

- Includes Seagate PowerChoice™ technology for even greater on-demand power savings during slow or idle periods

- TCG-compliant, Self-Encrypting Drive (SED) security option (SAS models only) eliminates the need to overwrite or physically destroy drives, enables safe return of drives for warranty or expired lease purposes and allows organizations to securely repurpose or sell hard disk drives

Stacked like that, it's hard to imagine that they hold 6TB of storage capacity with a sustained data throughput of 150MB/s per drive. We ran three solid days of benchmarks on these drives in every RAID0 configuration, and we also ran RAID5. We even checked whether we could marry these HDDs, then found out that marriage to hard drives is illegal in this state, so we were a little disappointed.

| Specifications | |
|---|---|
| Model Number | ST32000445SS |
| Interface | 6-Gb/s SAS |
| Cache | 16MB |
| Capacity | 2TB |
| Guaranteed Sectors | 3,907,029,168 |
| PHYSICAL | |
| Height | 26.1mm (1.028 in) |
| Width | 101.6mm (4.000 in) |
| Length | 146.99mm (5.787 in) |
| Weight (typical) | 710g (1.565 lb) |
| PERFORMANCE | |
| Spin Speed | 7200 RPM |
| Sustained data transfer rate | 150MB/s |
| Average latency | 4.16ms |
| Random read seek time | <8.5ms |
| Random write seek time | <9.5ms |
| I/O data transfer rate | 600MB/s |
| Unrecoverable read errors | 1 in 10^15 bits read |
| RELIABILITY | |
| MTBF | 1,200,000 hours |
| Annual Failure Rate | 0.73% |
| POWER | |
| Average idle power | 8.0W |
| Average operating power | 12.2W |
| Average seek power | 11.2W |
| Maximum start current, DC | 2.08A |
| ENVIRONMENT | |
| Ambient Temperature | |
| Operating | 5°–60°C |
| Nonoperating | -40°–70°C |
| Maximum operating temperature change | 20°C per hour |
| Maximum nonoperating temperature change | 30°C per hour |
| Shock | |
| Operating Shock (max) | 70 Gs for 2ms |
| Nonoperating Shock (max) | 300 Gs for 2ms |
| ACOUSTICS | |
| Acoustics (Idle Volume) | 2.7 bels |
| Acoustics (Seek Volume) | 3.0 bels |
| FEATURES | |
| Encryption | Yes |

Here's the one-breath, quick-and-dirty description: the Constellation ES is a 2TB, 7200 RPM drive with 16MB of cache in a standard 3.5-inch form factor, rated for a sustained data transfer rate of 150MB/s. Most of the tests we ran showed a substantially higher transfer rate.

With a standard 3.5-inch form factor and a SAS 6Gb/s interface, if you're not lucky enough to have a motherboard with a native SAS controller you'll need an add-in card that supports that interface. The LSI 3Ware SAS 9750-4i 6Gb/s RAID Card is ideal for these drives. It can also handle SATA 6Gb/s, but it takes either SAS or SATA drives, and you can't mix the two on the same controller at the same time.

LSI 3Ware SAS 9750-4i 6Gb/s RAID Card – Introduction

As we mentioned on the previous page, we will be using LSI's 3Ware SAS+SATA 9750-4i 4-port 6Gb/s RAID Controller Card to connect our three Seagate Constellation ES 2TB SAS drives to the system. LSI offers numerous RAID controller cards depending on the needs of the customer, but two of its main series are the 3Ware and MegaRAID cards. According to LSI's site, the new 6.0Gb/s MegaRAID series cards provide excellent performance with SAS and SATA drives, but they are entry-level 6.0Gb/s RAID cards. The new 3Ware RAID controller cards are designed to meet the needs of a wide range of applications. The 3Ware 9750-4i delivers exceptional video performance for surveillance and video editing. But LSI did not stop there: the card also targets high-performance computing, including medical imaging and digital content archiving, as well as file, web, database and email servers.

LSI breaks its RAID controller cards into three categories:

- 3ware® RAID controller cards are a good fit for multi-stream and video streaming applications.

- MegaRAID® entry-level RAID controllers are a good choice for general purpose servers (OLTP, WEB, File-servers and High-performance Desktops).

- High-port-count HBAs are good for supporting a large number of drives within a storage system.

We just got a short note from LSI, and we are glad to let you know that LSI also offers Value Line and Feature Line MegaRAID cards. If you take a look at those, we are sure you can find a more affordable RAID solution that fits your needs.

Features

- Four internal SATA+SAS ports

- One mini-SAS SFF-8087 x4 connector

- 6Gb/s throughput per port

- LSISAS2108 ROC

o 800MHz PowerPC®

- Low-profile MD2 form factor (6.6” X 2.536”)

- x8 PCI Express® 2.0 host interface

- 512MB DDRII cache (800MHz)

- Optional battery backup unit (direct attach)

- Connect up to 96 SATA and/or SAS devices

- RAID levels 0, 1, 5, 6, 10, 50 and Single Disk

- Auto-resume on rebuild

- Auto-resume on reconstruction

- Online Capacity Expansion (OCE)

- Online RAID Level Migration (RLM)

- Global hot spare with revertible hot spare support

o Automatic rebuild

- Single controller multipathing (failover)

- I/O load balancing

- Comprehensive RAID management suite

Benefits

- Superior performance in multi-stream environments

- PCI Express 2.0 provides faster signaling for high-bandwidth applications

- Support for 3Gb/s and 6Gb/s SATA and SAS hard drives for maximum flexibility

- 3ware Management 3DM2 Controller Settings

o Simplifies creation of RAID volumes

o Easy and extensive control over maintenance features

- Low-profile MD2 form factor for space-limited 1U and 2U environments

If you are thinking about upgrading your home desktop, workstation or server, or if you want to future-proof your business for the next-generation 6.0Gb/s interface, you are in luck, because LSI made it possible to keep using your existing 3.0Gb/s SATA or SAS hard drives. Not only does this save money, since you don't need to replace your existing drives, but the new 6.0Gb/s card can also get a bit more performance out of those older 3.0Gb/s drives than was possible with onboard software-based RAID or older 3.0Gb/s hardware RAID cards.

Here is a video, made by LSI, about its new lineup of 6.0Gb/s products released this year.

We have talked about uses for the 3ware and MegaRAID cards beyond video editing and compositing, and science labs are another area where LSI's cards can provide a big performance increase. This video, provided by LSI, explains how the new 6.0Gb/s throughput helps speed up DNA research at the Pittsburgh Center for Genomic Sciences.

This setup of three Seagate Constellation ES 2TB SAS drives and the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card should provide maximum flexibility and great performance. Let's check out the software included with the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card.

LSI 3Ware BIOS

The LSI 3Ware BIOS is very simple to use, and the learning curve is short. Let's take a look at the following pictures and see how easy it is to set up your drives in a RAID5 array.


Having too many images of something can get a bit confusing, so I divided up the images to make my explanation easier to follow. Once you get past the first screen of the BIOS, you will be led to the following page, where you can expand or collapse the Available Drives section. To select drives, you can either select the whole group by pressing Space, or expand the group and select only the drives you want in a specific array. Since we are putting all the drives into a RAID5 array, we selected all of them.

Once all the drives are selected, press Tab to get to the menu settings, then click the Create Unit button. This brings up the Create Unit section, where you set up how your drives will work; the list shows all the drives you selected for the array. In the Array Name field, enter the name you want to use for the array. If you are using multiple arrays, I recommend recognizable names, so that if a drive ever goes bad you will know exactly which array it belongs to. Since we will be using these hard drives for video editing, we called ours “Video Production”.

Installation of Windows 7

Installing Windows 7 on the SAS hard drives was a bit tricky the first time. I thought I was doing something wrong when I got a message that Windows 7 could not be installed on the SAS drives, so I will walk you through the whole process of getting it to work.

To install Windows, first insert your Windows 7 DVD. When you reach the screen shown in the first image, insert your LSI 3Ware CD. You will need to load the drivers for your RAID card by clicking Load Driver, which brings up a screen asking you to locate the driver yourself. Click Browse and go to the drive containing the CD. From there, navigate to Packages -> Drivers -> Windows -> 64Bit (or 32bit, if you are installing 32-bit Windows) and click OK. You will see a list of available drivers; in our case there was one driver to install. Select it and install it, which takes a minute or two. Once that's done, your SAS drives will show up in the list of recognized hard drives. When you select the array, though, you will get a message saying that Windows cannot be installed on this drive. This happens because the LSI 3Ware CD is still in the optical drive. Take the CD out, put the Windows 7 DVD back in and click Refresh; now Windows can finally be installed.

On a side note, we found that if you already have Windows 7 installed, all you really need to do is install the RAID controller, configure the drives in the card's BIOS, boot into Windows and install the included software, and your empty hardware RAID array is ready to rock. From there we were able to clone the existing copy of Windows 7 to the arrays, and everything worked perfectly. So there's no need to wipe your existing install unless you are simply more comfortable starting fresh.

3DM2 Setup

Finally, after you install Windows 7 and the drivers for the LSI card, you will have a shortcut to the 3DM2 page, which accesses the RAID card's settings. This tool makes it easy to set up more arrays and maintain the drives straight from Windows, which comes in very handy when managing multiple servers or large numbers of hard drives. It also lets the administrator or users change how error checking and other features work.

We did notice that after installing the 3DM2 software, we couldn't enter the RAID card's BIOS directly; we kept getting an error saying we might need a motherboard BIOS upgrade or that we didn't have enough memory. When we uninstalled the 3DM2 software, the card let us right back into its BIOS. What we found, though, was that we didn't need to access the card's BIOS manually after installing 3DM2: everything we needed was available in one easy-to-use, easy-to-understand GUI.

RAID Levels Tested

RAID 1

Diagram of a RAID 1 setup.

A RAID 1 creates an exact copy (or mirror) of a set of data on two or more disks. This is useful when read performance or reliability is more important than storage capacity. Such an array can only be as big as its smallest member disk. A classic RAID 1 mirrored pair contains two disks (see diagram), which increases reliability geometrically over a single disk: since each member contains a complete copy of the data and can be addressed independently, ordinary wear-and-tear reliability is raised by the power of the number of self-contained copies.

RAID 1 performance

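Since all the data exists in two or more copies, each with its own hardware, read performance can go up roughly as a linear multiple of the number of copies. That is, a two-disk RAID 1 array can in principle service two different read requests at the same time, one from each disk.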

RAID 1 has many administrative advantages. For instance, in some environments, it is possible to “split the mirror”: declare one disk as inactive, do a backup of that disk, and then “rebuild” the mirror. This is useful in situations where the file system must be constantly available. This requires that the application supports recovery from the image of data on the disk at the point of the mirror split. This procedure is less critical in the presence of the “snapshot” feature of some file systems, in which some space is reserved for changes, presenting a static point-in-time view of the file system. Alternatively, a new disk can be substituted so that the inactive disk can be kept in much the same way as traditional backup. To keep redundancy during the backup process, some controllers support adding a third disk to an active pair. After a rebuild to the third disk completes, it is made inactive and backed up as described above.

Diagram of a RAID 0 setup.

A RAID 0 (also known as a stripe set or striped volume) splits data evenly across two or more disks (striping), with no parity information for redundancy. It is important to note that RAID 0 was not one of the original RAID levels and provides no data redundancy. RAID 0 is normally used to increase performance.

A RAID 0 can be created with disks of differing sizes, but the storage space added to the array by each disk is limited to the size of the smallest disk. For example, if a 120 GB disk is striped together with a 100 GB disk, the size of the array will be 200 GB.

RAID 0 failure rate

Although RAID 0 was not specified in the original RAID paper, an idealized implementation would split I/O operations into equal-sized blocks and spread them evenly across two disks. RAID 0 implementations with more than two disks are also possible, though the group's reliability decreases as the number of members grows.

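Reliability of a given RAID 0 set is roughly equal to the average reliability of each disk divided by the number of disks in the set. Expressed as mean time to failure (MTTF) for a set of n disks:

$$\mathrm{MTTF}_{\text{set}} \approx \frac{\mathrm{MTTF}_{\text{disk}}}{n}$$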

The reason for this is that the file system is distributed across all disks. When a drive fails the file system cannot cope with such a large loss of data and coherency since the data is “striped” across all drives (the data cannot be recovered without the missing disk). Data can be recovered using special tools (see data recovery), however, this data will be incomplete and most likely corrupt, and recovery of drive data is very costly and not guaranteed.
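To put numbers on this, here is a minimal sketch using the Constellation ES spec-table figures (1,200,000 hours MTBF, 0.73% annual failure rate). It assumes drive failures are independent and treats MTBF as an MTTF; the mirror function is a crude comparison that ignores replacing and rebuilding failed disks, which would make real mirror losses lower. The function names are illustrative only.

```python
AFR = 0.0073           # annual failure rate per drive, from the spec table (0.73%)
MTBF_HOURS = 1_200_000  # spec-table MTBF, treated here as an MTTF


def raid0_mttf(mttf_disk: float, n: int) -> float:
    """Approximate MTTF of an n-disk stripe set: the first disk failure ends the array."""
    return mttf_disk / n


def raid0_annual_loss(p: float, n: int) -> float:
    """Probability that at least one of n independent disks fails within a year."""
    return 1 - (1 - p) ** n


def mirror_annual_loss(p: float, n: int) -> float:
    """Crude estimate for an n-way mirror: every copy fails in the same year,
    ignoring replacement and rebuild of failed disks."""
    return p ** n


print(f"3xRAID0 MTTF: {raid0_mttf(MTBF_HOURS, 3):,.0f} hours")
for n in (1, 2, 3):
    print(f"RAID0 x{n}: {raid0_annual_loss(AFR, n):.2%} chance of losing the array per year")
print(f"RAID1 x2: {mirror_annual_loss(AFR, 2):.4%} chance of losing both copies per year")
```

With the 0.73% figure this works out to roughly 1.45% per year for a two-disk stripe and 2.17% for three, which is where the rule of thumb that a 2-disk RAID0 about doubles your risk comes from.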

RAID 0 performance.

While the block size can technically be as small as a byte, it is almost always a multiple of the hard disk sector size of 512 bytes. This lets each drive seek independently when randomly reading or writing data on the disk. How much the drives act independently depends on the access pattern from the file system level. For reads and writes that are larger than the stripe size, such as copying files or video playback, the disks will be seeking to the same position on each disk, so the seek time of the array will be the same as that of a single drive. For reads and writes that are smaller than the stripe size, such as database access, the drives will be able to seek independently. If the sectors accessed are spread evenly between the two drives, the apparent seek time of the array will be half that of a single drive (assuming the disks in the array have identical access time characteristics). The transfer speed of the array will be the transfer speed of all the disks added together, limited only by the speed of the RAID controller. Note that these performance scenarios are in the best case with optimal access patterns.
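The striping behavior described above comes down to a simple address calculation. This is a minimal sketch assuming a plain round-robin layout with a fixed stripe unit; the 256KB default is the stripe size reported for the card elsewhere in this review, and the function names are hypothetical, not part of any LSI tool.

```python
from __future__ import annotations

STRIPE_SIZE = 256 * 1024  # bytes per stripe unit (assumed 256KB, as used in this review's tests)


def raid0_locate(offset: int, n_disks: int, stripe: int = STRIPE_SIZE) -> tuple[int, int]:
    """Map a byte offset in a RAID 0 volume to (disk index, byte offset on that disk)."""
    unit = offset // stripe   # which stripe unit, counted across the whole volume
    disk = unit % n_disks     # units are dealt out round-robin across the disks
    row = unit // n_disks     # full rows of units that come before this one
    return disk, row * stripe + offset % stripe


def disks_touched(offset: int, length: int, n_disks: int, stripe: int = STRIPE_SIZE) -> set[int]:
    """Which disks a request of `length` bytes starting at `offset` has to hit."""
    first = offset // stripe
    last = (offset + length - 1) // stripe
    return {unit % n_disks for unit in range(first, last + 1)}


# A 4KB database-style read stays on one disk, leaving the others free to seek independently.
print(disks_touched(0, 4 * 1024, 3))
# A 1MB sequential read spans all three disks, so they all seek to the same region together.
print(disks_touched(0, 1024 * 1024, 3))
```

Requests smaller than the stripe unit land on a single disk, which is why small random I/O can overlap across drives while large transfers keep every drive busy at once.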

RAID 0 is useful for setups such as large read-only NFS servers where mounting many disks is time-consuming or impossible and redundancy is irrelevant.

RAID 0 is also used in some gaming systems where performance is desired and data integrity is not very important. However, real-world tests with games have shown that RAID 0 performance gains are minimal, although some desktop applications will benefit. Outside of gaming, striping does not always increase performance, but in most situations it yields a significant improvement.

RAID 5

Diagram of a RAID 5 setup with distributed parity with each color representing the group of blocks in the respective parity block (a stripe). This diagram shows left asymmetric algorithm.

A RAID 5 uses block-level striping with parity data distributed across all member disks. RAID 5 has achieved popularity due to its low cost of redundancy. This can be seen by comparing the number of drives needed to achieve a given capacity. RAID 1 or RAID 1+0, which yield redundancy, give only s / 2 storage capacity, where s is the sum of the capacities of the n drives used. In RAID 5, the yield is s × (n − 1) / n, the capacity of all but one drive. As an example, four 1TB drives can be made into a 2TB redundant array under RAID 1 or RAID 1+0, but the same four drives can be used to build a 3TB array under RAID 5. Although RAID 5 is commonly implemented in a disk controller, some with hardware support for parity calculations (hardware RAID cards) and some using the main system processor (motherboard-based RAID controllers), it can also be done at the operating system level, e.g., using Windows Dynamic Disks or mdadm in Linux. A minimum of three disks is required for a complete RAID 5 configuration. Some implementations allow a degraded RAID 5 set to be created (a three-disk set of which only two are online), while mdadm supports a fully functional (non-degraded) RAID 5 setup with two disks, which functions as a slow RAID 1 but can be expanded with further volumes.

In the example above, a read request for block A1 would be serviced by disk 0. A simultaneous read request for block B1 would have to wait, but a read request for B2 could be serviced concurrently by disk 1.

RAID 5 performance

RAID 5 implementations suffer from poor performance when faced with a workload which includes many writes which are smaller than the capacity of a single stripe; this is because parity must be updated on each write, requiring read-modify-write sequences for both the data block and the parity block. More complex implementations may include a non-volatile write back cache to reduce the performance impact of incremental parity updates.
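The read-modify-write penalty comes from how single parity works. This is a minimal sketch using XOR parity, the usual single-parity scheme for RAID 5, on tiny made-up two-byte blocks; it ignores parity rotation and stripe layout and is not a model of any particular controller's implementation.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a hypothetical 3-disk RAID 5: two data blocks plus their parity.
d0, d1 = b"\x0f\xf0", b"\x33\x33"
parity = xor_blocks(d0, d1)

# Rebuild: if the disk holding d1 fails, XOR of the surviving blocks recovers it.
assert xor_blocks(d0, parity) == d1

# Small write (read-modify-write): to replace d0 without touching d1, the controller
# reads old d0 and old parity, then writes new d0 and new parity = old parity ^ old d0 ^ new d0.
new_d0 = b"\xaa\x55"
new_parity = xor_blocks(parity, d0, new_d0)
assert new_parity == xor_blocks(new_d0, d1)  # same result as recomputing the whole stripe
```

One small logical write therefore costs two reads and two writes, which is why battery-backed write cache matters so much on parity arrays.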

Random write performance is poor, especially at high concurrency levels common in large multi-user databases. The read-modify-write cycle requirement of RAID 5’s parity implementation penalizes random writes by as much as an order of magnitude compared to RAID 0.

Performance problems can be so severe that some database experts have formed a group called BAARF — the Battle Against Any Raid Five.

The read performance of RAID 5 is almost as good as RAID 0 for the same number of disks. Except for the parity blocks, the distribution of data over the drives follows the same pattern as RAID 0. The reason RAID 5 is slightly slower is that the disks must skip over the parity blocks.

In the event of a system failure while there are active writes, the parity of a stripe may become inconsistent with the data. If this is not detected and repaired before a disk or block fails, data loss may ensue as incorrect parity will be used to reconstruct the missing block in that stripe. This potential vulnerability is sometimes known as the write hole. Battery-backed cache and similar techniques are commonly used to reduce the window of opportunity for this to occur.

RAID 6

Diagram of a RAID 6 setup, which is identical to RAID 5 other than the addition of a second parity block

Redundancy and Data Loss Recovery Capability

RAID 6 extends RAID 5 by adding an additional parity block; thus it uses block-level striping with two parity blocks distributed across all member disks. It was not one of the original RAID levels.

Performance

RAID 6 does not have a performance penalty for read operations, but it does have a performance penalty on write operations due to the overhead associated with parity calculations. Performance varies greatly depending on how RAID 6 is implemented in the manufacturer's storage architecture: in software, in firmware, or in firmware plus specialized ASICs for the intensive parity calculations. It can be as fast as a RAID 5 with one fewer drive (that is, the same number of data drives).

Efficiency (Potential Waste of Storage)

RAID 6 is no more space-inefficient than RAID 5 with a hot spare drive when used with a small number of drives, but as arrays grow and gain more drives, the loss in storage capacity matters less and the probability of data loss grows. RAID 6 protects against data loss during an array rebuild if a second drive is lost, a bad block is encountered on read, or a human operator accidentally removes and replaces the wrong drive while replacing a failed one.

The usable capacity of a RAID 6 array is the combined capacity of all drives minus two drives' worth, i.e. (n − 2) times the size of the smallest drive.
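The capacity rules for the levels discussed here fit in one small function. This is a minimal sketch assuming every member is treated as the size of the smallest disk and using the usual minimum disk counts (mdadm's two-disk RAID 5 special case is deliberately not modeled); `usable_capacity` is a hypothetical helper, not part of any RAID tool.

```python
from __future__ import annotations


def usable_capacity(level: str, sizes_gb: list[int]) -> int:
    """Usable capacity in GB for a few common RAID levels.

    Every member is treated as if it were the size of the smallest disk.
    """
    n = len(sizes_gb)
    smallest = min(sizes_gb)
    if level == "0":
        return n * smallest                  # pure striping, no redundancy
    if level == "1":
        return smallest                      # every disk holds the same copy
    if level == "10":
        if n < 4 or n % 2:
            raise ValueError("RAID 10 here needs an even number of disks, at least 4")
        return (n // 2) * smallest           # striped across mirrored pairs
    if level == "5":
        if n < 3:
            raise ValueError("RAID 5 normally needs at least 3 disks")
        return (n - 1) * smallest            # one disk's worth of parity
    if level == "6":
        if n < 4:
            raise ValueError("RAID 6 normally needs at least 4 disks")
        return (n - 2) * smallest            # two disks' worth of parity
    raise ValueError(f"unsupported RAID level: {level}")


print(usable_capacity("0", [120, 100]))    # 120GB striped with 100GB -> 200
print(usable_capacity("5", [2000] * 3))    # three 2TB drives in RAID5 -> 4000
```

The 120GB + 100GB stripe reproduces the 200GB example from the RAID 0 section, and the four-drive cases reproduce the 2TB (RAID 1+0) versus 3TB (RAID 5) comparison.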

Implementation

According to SNIA (Storage Networking Industry Association), the definition of RAID 6 is: “Any form of RAID that can continue to execute read and write requests to all of a RAID array’s virtual disks in the presence of any two concurrent disk failures. Several methods, including dual check data computations (parity and Reed Solomon), orthogonal dual parity check data and diagonal parity have been used to implement RAID Level 6.”

Testing & Methodology

A couple of things to keep in mind here. First, the Seagate Constellation ES drives are enterprise-class and not really designed for desktop use, although we couldn't find any reason not to use them in one ourselves. We kept an operating system on the drives at all times and used them as primary boot drives in every configuration tested, and they matched or beat the performance of any platter drive we've ever used.

Second, we are using an LSI 3Ware SAS 9750-4i 6Gb/s RAID Card, which has an 800MHz processor and 512MB of DDR2 800MHz cache. In short, we are using a very nice, performance-oriented hardware RAID controller.

Since we are testing in an extreme enthusiast desktop system and not a server environment, there are some things we can't test, like vibration (although we saw none) and noise. We are using a Silverstone Raven 2 chassis with the Constellations mounted in its native hard drive bay, which has anti-vibration technology built in. As far as noise goes, we didn't hear any: none, nada, zip. Our sound meter only registers above 50 decibels, but not being able to hear the drives during random 4k or sequential writes tells us they are truly silent (or so close to silent that it doesn't really matter).

We ran each test three times and report the median run. Normally we average all three runs, but since we wanted to use some screenshots we went with the median run. Otherwise two or three hundred people would write in to tell us the numbers on the charts don't match the screenshots, and our “Delete Email” button is almost worn out.
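The reason the median keeps charts and screenshots in agreement is that, with an odd number of runs, the median is one of the actual runs, while the mean usually is not. A minimal sketch using Python's `statistics` module; the three run values are made-up numbers for illustration only.

```python
import statistics

# Three hypothetical benchmark runs in MB/s (made-up numbers for illustration only).
runs = [230.0, 240.0, 236.0]

median_run = statistics.median(runs)  # with an odd count, this is one of the actual runs
mean_run = statistics.mean(runs)      # generally a value that no single run produced

print(f"median: {median_run} MB/s (matches a real run: {median_run in runs})")
print(f"mean:   {mean_run:.2f} MB/s (matches a real run: {mean_run in runs})")
```

The median is also less sensitive to one unusually slow or fast run, which suits a small sample of three.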

We installed the RAID card and drives and booted into a clean install of Windows 7 on a high-end SSD. Then we made sure we had the latest drivers for the Rampage 3 and that Windows 7 was fully updated. We turned off indexing and defragging to prevent interference with testing, and installed the drivers and software included with the 3ware SAS 9750-4i. Then we formed a 3xRAID0 array using the software, dropped into Acronis and cloned the OS to the array. Each new array we tested got the exact same OS cloned onto it with Acronis; otherwise we would have been looking at endless hours of OS installs, which might have let errors creep in.

Test Rig

| Test Rig “Quadzilla” | |
|---|---|
| Case Type | Silverstone Raven 2 |
| CPU | Intel Core i7 980 Extreme |
| Motherboard | Asus Rampage 3 |
| Ram | Kingston HyperX 12GB 9-9-9-24 |
| CPU Cooler | Thermalright Ultra 120 RT (Dual 120mm Fans) |
| Hard Drives | 3x Seagate Constellation ES 2TB 7200 RPM 16MB Cache |
| Optical | Asus BD-Combo |
| GPU | Asus GTX-470 |
| Case Fans | 120mm fan cooling the MOSFETs around the CPU |
| Docking Stations | None |
| Testing PSU | Silverstone Strider 1500 Watt |
| Legacy | None |
| Mouse | Razer Lachesis |
| Keyboard | Razer Lycosa |
| Gaming Ear Buds | Razer Moray |
| Speakers | None |
| Any Attempt to Copy This System Configuration May Lead to Bankruptcy | |

Test Suite

| Benchmarks |
|---|
| ATTO |
| HDTach |
| Crystal DiskMark |
| HD Tune Pro |
| PCMark Vantage |
| IOMeter |

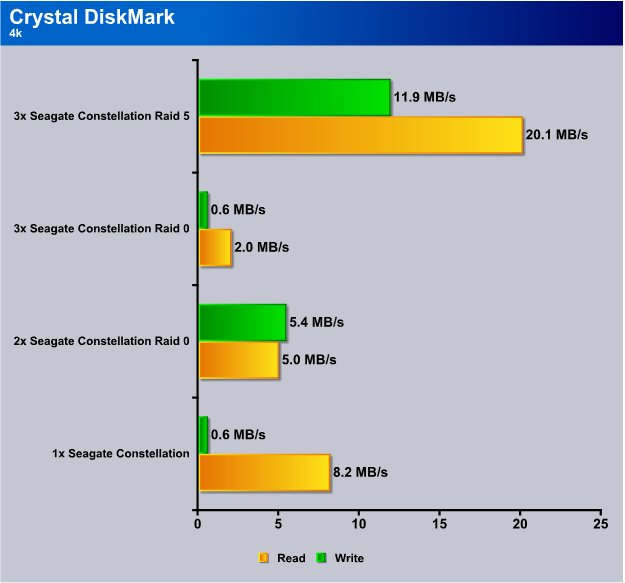

CrystalDiskMark 3.0

One thing to keep in mind here is that you are looking at a drive/controller combination: these scores are specific to these drives on this controller. You can't assume the drives will perform the same on an onboard SAS controller as they do on the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card.

Another thing to think about is the payload size of the benchmark program. Smaller payloads, like 100-500MB, often produce inflated numbers because the RAID card has 512MB of dedicated DDR2 800MHz memory, so a small test file can be served largely from the card's cache rather than from the drives.

What we can tell you is that we repeated the tests many times over a 7-day period, for a total of 27 hours of benching, and that doesn't include the time it takes IOMeter to prepare the drives. We got some pretty amazing speeds, so later we will present screenshots representative of the speeds we got, for the doubters.

We got pretty typical scores on the 4k test, but the read scores are unusually high for platter drives. When we got to RAID5, the scores were off the charts for platter drives.

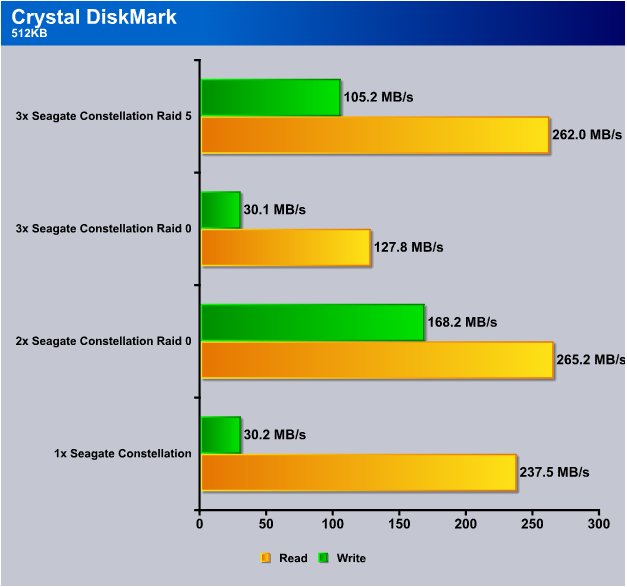

Throughout testing, some of the benchmarks plagued us with low write speeds that jumped when we moved to RAID combinations. Here, for instance, a single Constellation ES 2TB drive got 30.2MB/s; at 2xRAID0 that zooms to 168.2MB/s. At 3xRAID0 it drops back to 30MB/s(ish), and in RAID5 it soars back to 105MB/s.

As far as read speeds go, the Constellation loved CDM: a single drive got 237.5MB/s, which increased to 265.2MB/s at 2xRAID0. At 3xRAID0, read performance dropped to 127.8MB/s, probably due to the complexity of splitting data across an odd number of RAID drives. RAID5, which produced reliably high speeds and provides a safety net should one drive fail, got 262MB/s.

For those of you wondering, we used default settings on the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card, with a 256KB stripe and auto carve enabled. Auto carve presents any array larger than 2TB to the OS as volumes of at most 2TB, because 2TB is the largest disk Windows 7 can boot from on a conventional BIOS/MBR setup. You can create a larger drive in the control panel, but it may not be bootable.
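One common explanation for the 2TB figure is the MBR partition table, which records sector addresses and counts in 32-bit fields, so with 512-byte sectors it tops out at 2^32 × 512 bytes. This minimal sketch assumes 512-byte logical sectors and uses the guaranteed sector count from the spec table to check which of the tested configurations fit under that ceiling; GPT partitioning on a UEFI system does not have this limit.

```python
SECTOR_BYTES = 512                    # assumed logical sector size
DRIVE_SECTORS = 3_907_029_168         # "Guaranteed Sectors" from the spec table
MBR_MAX_BYTES = 2**32 * SECTOR_BYTES  # largest size a 32-bit MBR sector count can describe

drive_bytes = DRIVE_SECTORS * SECTOR_BYTES

# Number of drives' worth of usable space in each configuration tested in this review.
configs = [("single", 1), ("2xRAID0", 2), ("3xRAID0", 3), ("RAID5 (3 drives)", 2)]

for label, data_drives in configs:
    size = data_drives * drive_bytes
    fits = size <= MBR_MAX_BYTES
    print(f"{label:18} {size / 1e12:5.2f} TB  fits under MBR limit: {fits}")
```

A single 2TB drive fits with a little room to spare, but every multi-drive array in this review exceeds the limit, which is why a larger bootable volume would need to be split or use GPT.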

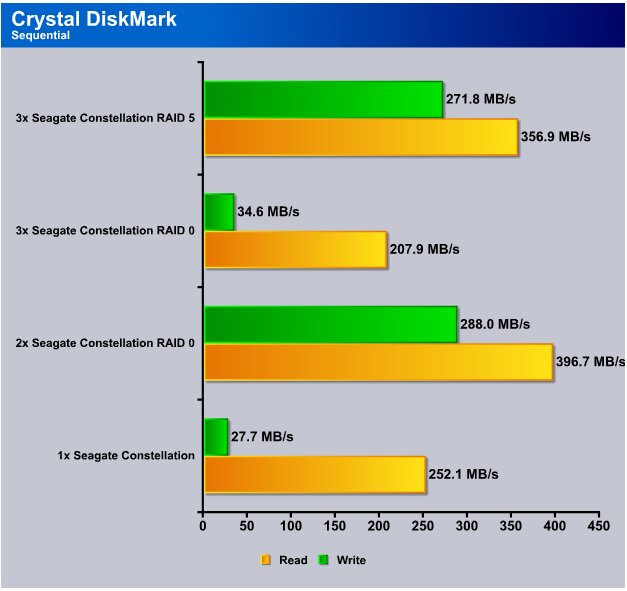

Sequential testing normally produces the highest read scores, and this was no exception. With the CDM payload set to 500MB, a single drive got 252.1MB/s. Not to worry: we have complete screenshots of CDM 3.0 with 2GB and 4GB payloads later.

The slower write speed appeared here again, but we suspect it has something to do with the smaller payload, because in large-payload testing we didn't get the slower write numbers. 2xRAID0 got the highest read speed at 396.7MB/s, 3xRAID0 dropped to 207.9MB/s, and here again RAID5 scored well in both read and write, at a slight cost in speed. To us, RAID5's small speed loss is well worth it, because unlike RAID0 it has enough redundancy to lose one drive without losing all your data or your OS install.

Whenever we run RAID0, we make Acronis backups, and so far Acronis has reliably cloned every RAID array we've tried. By every RAID array we don't mean just this review; we mean all onboard RAID, all RAID cards and every RAID setup we've tried it on. We can back up a RAID array to a single drive, then restore from that single drive back to a RAID0 array. So if you are planning on RAID0, we highly recommend backing up or cloning with Acronis. You get higher speeds from 2xRAID0 in most cases, but you roughly double the chance that a drive failure takes out your data.

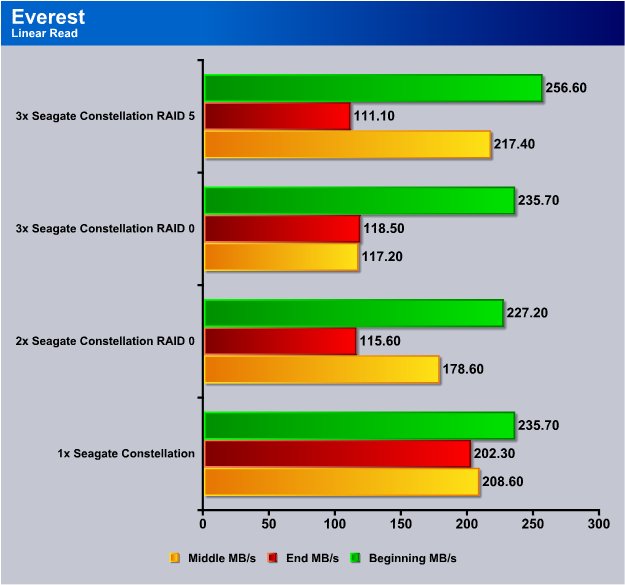

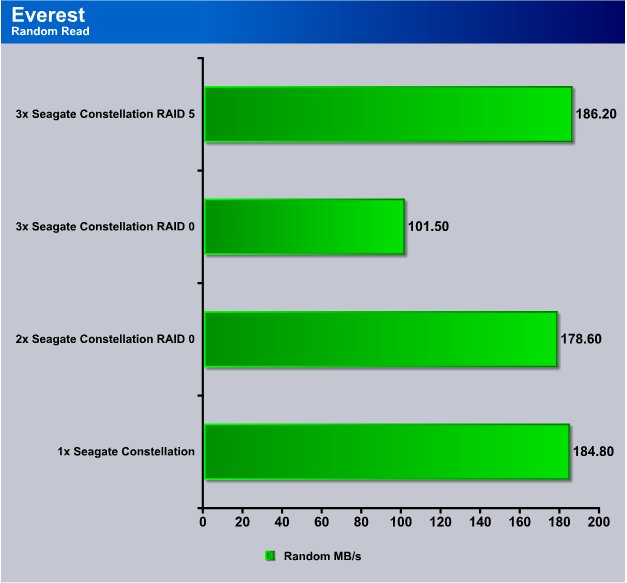

Everest Ultimate

We threw the kitchen sink at the Seagate Constellation ES/LSI 3Ware SAS 9750-4i 6Gb/s RAID Card combination. Everest was reading the setup pretty well and produced some good numbers.

Everest's linear test measures the beginning, middle and end of the drives, so we put all of those on one chart so you can see the difference.

We also realize that a lot of you want to see these drives on a chart chock-full of other hard drives. The fact is, these are the only SAS 6Gb/s drives out there, and any comparison with other drives, especially using the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card, would be totally apples and oranges. In other words, please keep those “we would have liked to see this” emails out of my inbox. Send me a stack of SAS 6Gb/s drives of various sizes and makes and I'll gladly accommodate. Oh yeah, Seagate has the only SAS 6Gb/s drives, so that would be a feat of magic at this point. Apples and oranges aside, we feel the Seagate Constellation ES drives and the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card speak for themselves, because a lot of the testing shows SSD-like speed without the SSD size limitation.

The linear read portion of Everest shows the best overall performance at the single-drive level. RAID often adds a level of complexity that can be detrimental to overall performance.

At the beginning of the drive(s), linear reads hit 230MB/s(ish) for the single drive and all the RAID0 levels, and RAID5 gave us a nice little performance increase. In the middle of the drive(s), the single drive and RAID5 are in the lead. Testing the end of the platters shows the single drive is fastest at 202.30MB/s.

Random reads showed some pretty decent speeds but here again the single drive was faster than 2 and 3xRAID0 but RAID5 took the lead by a hair.

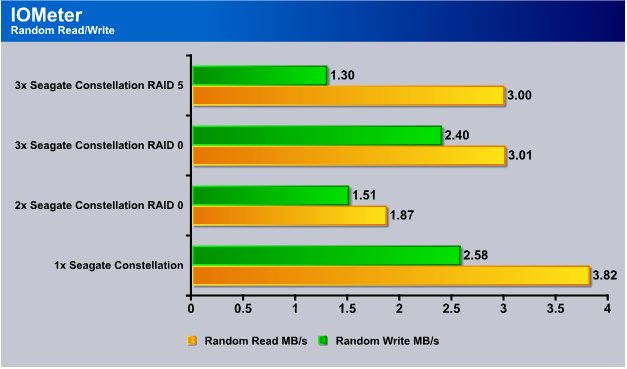

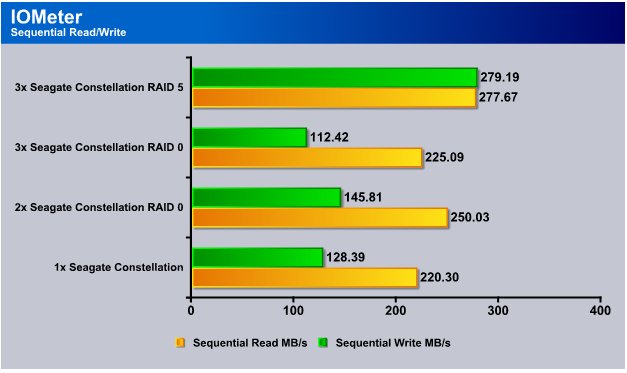

IOMeter

In IOMeter we use a fixed set of 4 configuration files to ensure no errors creep into testing. We use the same 4 test files for all drives and don’t optimize testing for any drive. In certain special cases, which we make plainly clear, we will optimize for a specific drive, but in this case no optimization of the test files was done to favor the Constellation drives. Seagate and LSI have never seen our test files and never asked to. They provided no tips or hints on how to optimize the testing and preferred to let the products speak for themselves any way we tested them. That says a lot about the faith Seagate and LSI have in both their products and our testing.

IOMeter spends hours preparing the drives for testing, in some cases overnight. You can cut that time by making smaller partitions, but that affects drive performance. We chose to let IOMeter prepare the drive(s) at their full size without reducing partition size. Then we set each test to run for half an hour to give IOMeter plenty of time to spread its accesses across the entire drive. That method is preferable to running a short 5 minute test that may only exercise part of the drive.
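A rough lower bound on prep time is how long it takes just to write each configuration’s full capacity once at its peak sequential write speed. The Python sketch below assumes IOMeter’s preparation writes across the whole usable capacity once, uses decimal terabytes, and borrows the sequential write speeds from the CrystalDiskMark 2GB results later in this review; it is an estimate, not a model of how IOMeter actually schedules its prep writes.

```python
def fill_hours(capacity_mb: float, write_mb_per_s: float) -> float:
    """Hours needed to write capacity_mb once at a constant sequential write speed."""
    return capacity_mb / write_mb_per_s / 3600.0


# (label, usable capacity in MB, sequential write MB/s from the CrystalDiskMark 2GB results)
SETUPS = [
    ("Single drive", 2_000_000, 150.4),
    ("2xRAID0", 4_000_000, 286.0),
    ("3xRAID0", 6_000_000, 379.2),
    ("RAID5", 4_000_000, 285.0),  # 3 x 2TB with one drive's worth used for parity
]

if __name__ == "__main__":
    total = 0.0
    for label, capacity, speed in SETUPS:
        hours = fill_hours(capacity, speed)
        total += hours
        print(f"{label}: {hours:.1f} h")
    print(f"Total lower bound: {total:.1f} h")
```

That comes to roughly 16 hours in total, well under the 84 hours of prep we actually logged, which suggests IOMeter’s preparation runs far below the drives’ peak sequential write speed.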

Our Random Read Write test in IOMeter is pretty brutal and uses 2k data blocks. With a 2k data block and 30 minutes to torture the drive(s), the highest score we got was on the single drive. In case any of you are wondering, preparing the drives in every combination took 3 1/2 days in total. Yes, about 84 hours just to prep the drives. Then came three runs of 30 minutes each for each setup, or another 6 hours of testing. That should give you some idea of how thorough this testing was.

After this set of tests (charted on all benchmarks) we went back and did some bare drive testing for screenshots. During testing the drive setups were also used as primary boot drives, so each RAID level had to have a freshly cloned OS, which added to the testing time. We feel that testing with an OS in place is more realistic than the bare drive testing most sites do. To keep the OS from skewing results, we disabled defragmenting and indexing during testing to prevent random interference with the tests, but no other adjustments were made.

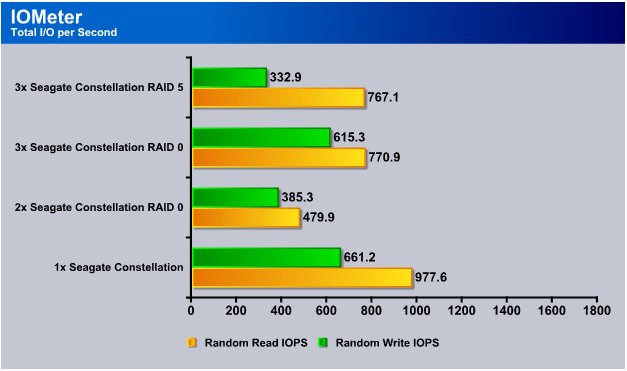

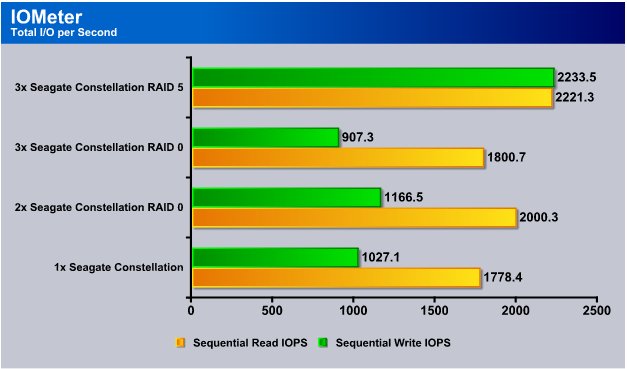

Since SSDs hit the scene, IOPS (input/output operations per second) have become a big talking point. These aren’t SSDs, but they are Enterprise class drives and server people will be interested in IOPS, so we ran IOPS testing on the drives in addition to raw speed.

With the RAID0 levels it quickly becomes apparent that an even number of drives provides better speed, and the perceived speed increases from 2xRAID0 often turn out to be just perception at this level of testing. As far as IOPS go, adding a drive to an array increases the read/write complexity, which can reduce IOPS. The RAID controller has to burp for a moment and decide how to carve up the data, and the read/write heads on the drive(s) have to travel to find the data. This is more evident with smaller reads and writes; large payloads on benchmarks like 2GB and 4GB have a different effect on IOPS and scaling. The problem is, how many of us do 2GB and 4GB sequential transfers on a daily basis?
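The carving step can be pictured with a generic RAID0 striping map: the array is cut into fixed-size stripes that are dealt out round-robin to the member drives. The Python sketch below assumes a hypothetical 64KB stripe and a simple round-robin layout; the 9750-4i’s actual stripe size and on-disk layout are not covered in this review. It illustrates why small requests, like the 2k blocks in our IOMeter test, gain little from extra drives: one small request usually lands on a single member, while a large sequential transfer keeps every drive busy.

```python
def raid0_location(lba: int, n_drives: int, stripe_sectors: int) -> tuple[int, int]:
    """Map an array LBA to (member drive, LBA on that drive) for simple RAID0 striping."""
    stripe_index, offset = divmod(lba, stripe_sectors)
    drive = stripe_index % n_drives
    drive_lba = (stripe_index // n_drives) * stripe_sectors + offset
    return drive, drive_lba


def drives_touched(lba: int, sectors: int, n_drives: int, stripe_sectors: int) -> set[int]:
    """Member drives that a request of `sectors` sectors starting at `lba` has to hit."""
    return {raid0_location(s, n_drives, stripe_sectors)[0] for s in range(lba, lba + sectors)}


if __name__ == "__main__":
    STRIPE = 128  # hypothetical 64KB stripe, counted in 512-byte sectors
    # A 2KB (4-sector) random read lands on a single member drive.
    print(drives_touched(1000, 4, n_drives=3, stripe_sectors=STRIPE))
    # A 1MB (2048-sector) sequential read is spread over every member.
    print(drives_touched(0, 2048, n_drives=3, stripe_sectors=STRIPE))
```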

Here you can see how sequential IOPS compare to random IOPS. Sequential reads give you higher IOPS, and RAID5 gave us a pretty amazing (for platter drives) 2200(ish) IOPS.

Back in the realm of numbers we are all more comfortable with, sequential reads produced 220MB/s on a single drive, and we saw scaling to 225MB/s on 3xRAID0 and 250MB/s on 2xRAID0. RAID5 produced the most remarkable numbers and hit the high 270MB/s range. Writes on RAID5 were more than double the single drive and more than double any of the RAID0 levels.

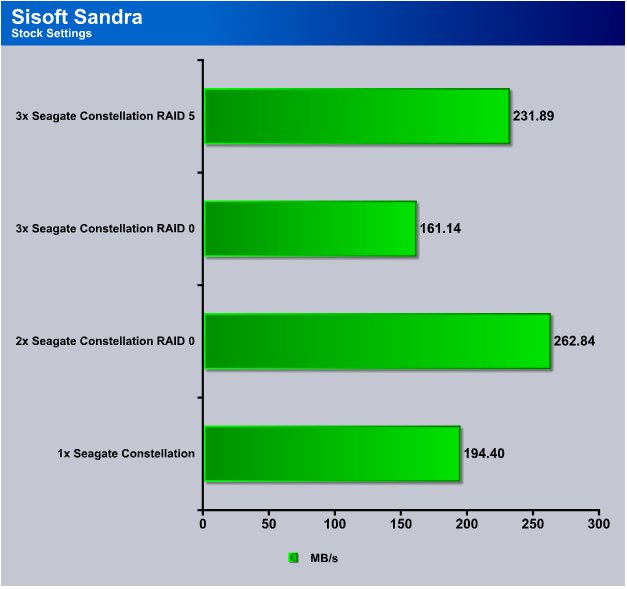

Sisoft Sandra

Sandra only produces one usable benchmark number for us: a flat MB/s score that represents average speed. It also produces a chart at the end of the test, but without displaying ginormous images the charts are unreadable.

We see about the same pattern of RAID level scaling as in Everest. On a single drive we got 194MB/s, 2xRAID0 gave us 262MB/s, 3xRAID0 dropped to 161MB/s, and RAID5 wasn’t quite as fast as 2xRAID0 but gave good performance.

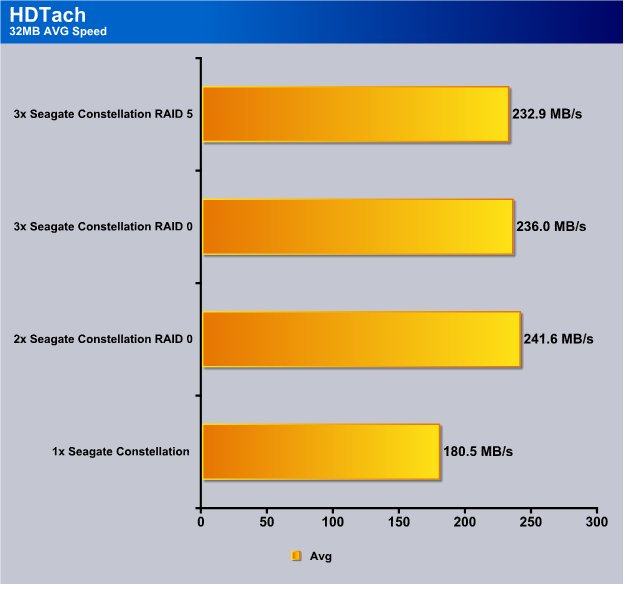

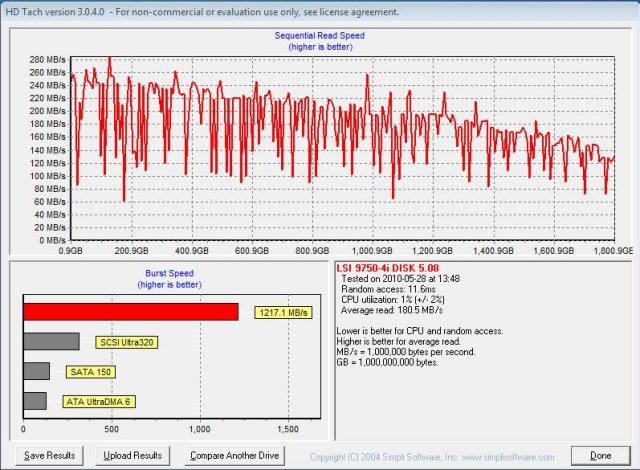

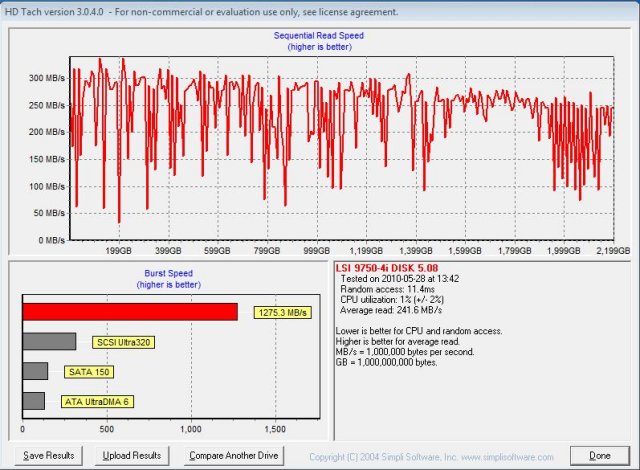

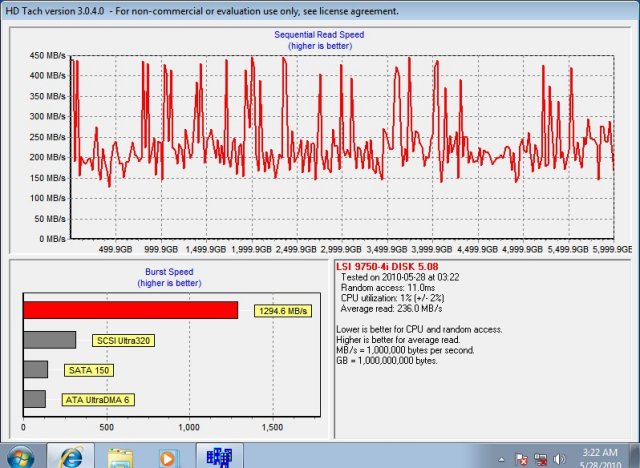

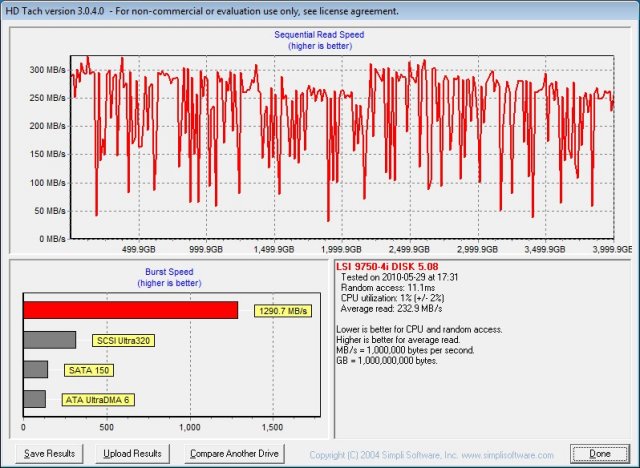

HDTach

HDTach is an old friend of ours, but you can discount the burst speeds on the HDTach screenshots we post later. Average read speeds were pretty normal, but the burst speeds were obviously cache hits.

In the 32MB long test we got 180.5MB/s on a single drive, 2xRAID0 got the highest score at 241.6MB/s, and the other RAID levels got 230(ish)MB/s. Pretty darn decent speeds for platter drives.

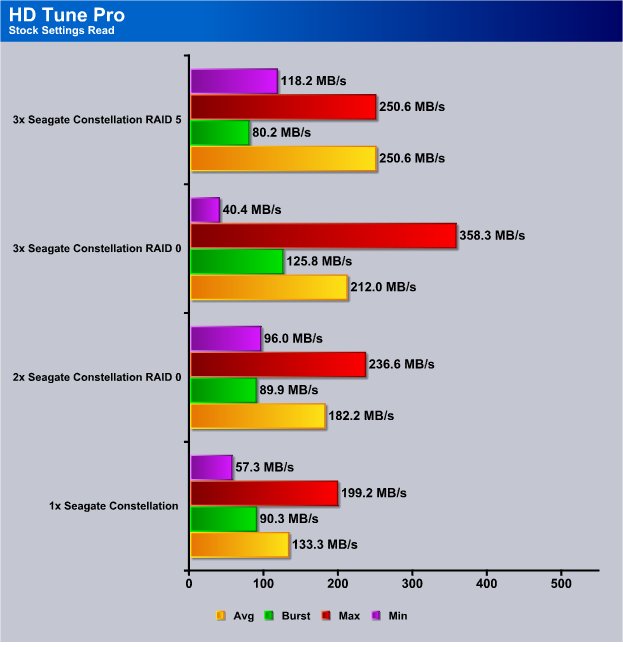

HDTune Pro

HDTune Pro produced pretty reliable, consistent numbers, and we got a 133.3MB/s average speed on a single drive. Single drive maximum speed was 199.2MB/s and minimum speed was 57.3MB/s. 2xRAID0 gave us pretty good scaling, and it got even better with 3xRAID0. Again RAID5 didn’t top the charts, but for a 3 drive setup it is probably our personal favorite because of the redundancy.

Screenshots HDTach

There’s not a lot you can say about screenshots; the pictures speak for themselves. The screenies we are using are the median results of all the tests.

In the single drive 32MB test the average speed was 180.5MB/s, which was also the median test result. Tests varied by 3 to 5MB/s as usual, so the median result is pretty typical. Notice the burst hitting 1.217GB/s: that’s a cache hit (drive or controller memory) and not representative of drive speed.
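Picking the median run rather than the best one is what keeps a screenshot typical. A minimal Python sketch of that selection; the three run averages are made up for illustration, with a spread of a few MB/s like the one described above. `statistics.median_low` is used instead of `statistics.median` because it always returns one of the actual runs, so a matching screenshot exists even when the number of runs is even.

```python
import statistics

# Hypothetical average speeds (MB/s) from three HDTach runs of one setup.
run_averages = [178.1, 180.5, 182.6]

# median_low always returns a value that is actually in the list.
representative = statistics.median_low(run_averages)
print(f"Representative run: {representative} MB/s")
```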

On 2xRAID0 we got 241.6MB/s with spikes over 300MB/s, and again the burst speed is a cache hit.

3xRAID0 shows 236.0MB/s with spikes above 400MB/s. The screenies let you see how the drive(s) perform during the entire test: the lowest speed is about 130MB/s and the top is almost 450MB/s, which shows why minimum and maximum speeds are so deceiving. Minimums and maximums are mere momentary spikes, and average speeds are much more representative of real performance.
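A tiny Python illustration of why the extremes mislead: a handful of momentary dips and spikes sets the minimum and maximum outright but barely moves the average. The sample trace below is hypothetical, shaped loosely like the 3xRAID0 run (steady around 230MB/s, a dip near 130MB/s, spikes near 450MB/s).

```python
import statistics

# Hypothetical per-sample read speeds (MB/s) from one pass over an array:
# steady around 230 MB/s with two brief dips and two brief spikes.
samples = [230.0] * 96 + [130.0, 450.0, 440.0, 150.0]

print(f"min  {min(samples):.1f} MB/s")   # 130.0, one momentary dip
print(f"max  {max(samples):.1f} MB/s")   # 450.0, one momentary spike
print(f"mean {statistics.fmean(samples):.1f} MB/s")  # 232.5, close to the steady rate
```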

RAID5 shows a 232.9MB/s average with quite a few spikes hitting 300MB/s, and the lowest dip hits about 30MB/s.

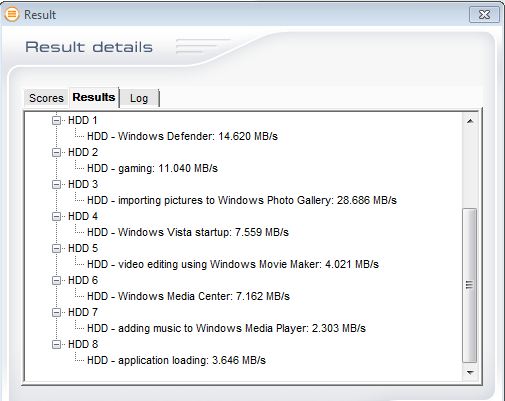

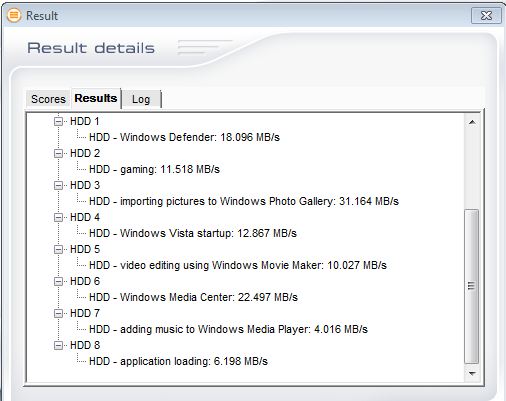

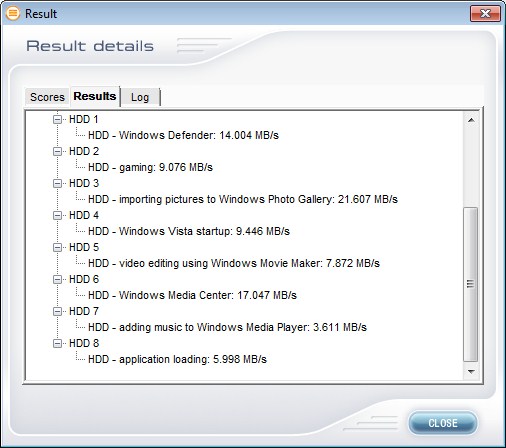

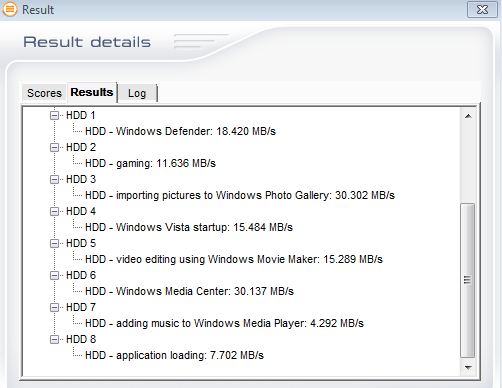

PCMark Vantage

Vantage is a strange creature when it comes to benchmarking drives: it produces very consistent numbers, but they are consistently much lower than any other benchmark. We went with screenshots for that reason, and since the results are so different from every other benchmark, take them for what they are worth.

Single drive scores ranged from 2.3MB/s to 28.6MB/s and everywhere in between.

On 2xRAID0 we get between 4.016MB/s and 31.164MB/s, so we are seeing a little RAID level scaling.

On 3xRAID0 we get 3.6MB/s to 21.607MB/s, so we are seeing the same handicap we’ve been seeing all along with 3xRAID0.

RAID5 shows the same good performance as in all the other testing: we got 4.29MB/s to 30.3MB/s, and in Vantage we see very little disadvantage to RAID5. With the inherent speed of the Constellation ES 2TB drive, it might be better to use them in single drive configurations depending on what you use them for.

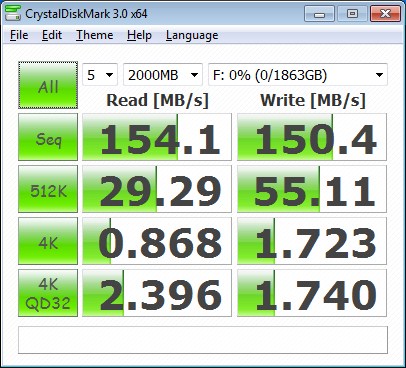

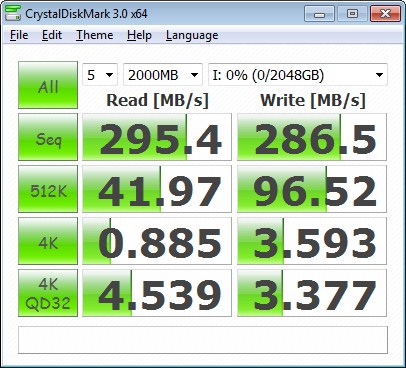

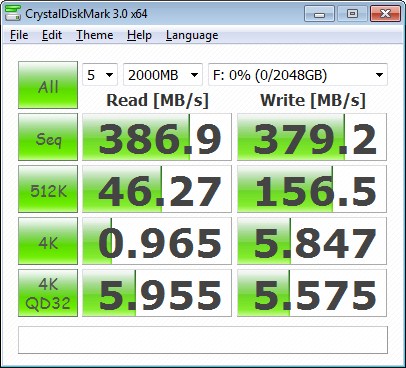

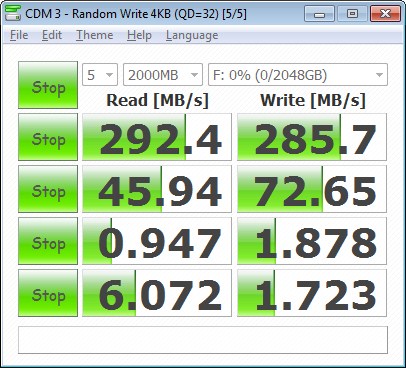

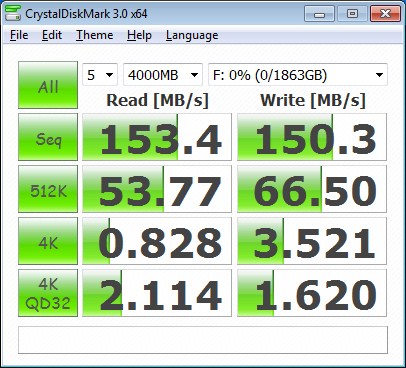

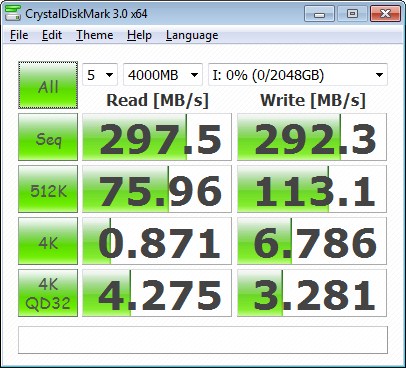

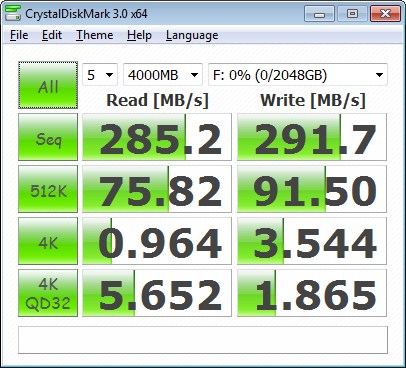

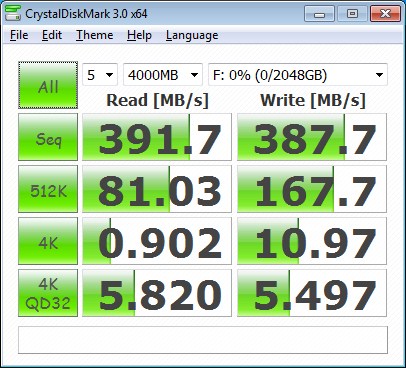

Screenshots CrystalDiskMark 3.0 64Bit

We have copious screenshots of CDM 3.0, so bear with us. We already charted the 500MB tests, so these screenies are of the 2GB and 4GB tests of all the configurations. We tested bare drive for the screenshots, and tested with an OS installed and booted to the drives for the charts. There wasn’t much difference (if any) between testing bare drive and booting to the drives, so it wouldn’t make much difference how we tested. We just feel that testing with an OS in place is more realistic. You don’t buy a drive to leave it blank; you put an OS or data on it and use it that way, which is why we test with a full benchmark load on the drives. Our typical benchmark load exceeds 150GB, games included, so it’s a pretty realistic load for a typical drive.

Large payloads (2 to 4GB) give us a chance to get past the effects the cache and drive controller have on drive speeds (for the most part). They are a little unrealistic in that most of us don’t read 2 to 4GB sequentially from a HD very often, except perhaps for video files or copies of Steam games.

On the single drive 2GB test we got 154.1MB/s sequential read and 150.4MB/s write. Moving to 512K we get 29.29MB/s read and 55.11MB/s write. On 4K and 4K Queue Depth 32 we get pretty typical 0.8 to 2.3MB/s speeds. We tend to give those scores little weight in our judgment; how many of us sit around transferring 4K blocks over and over for a run length of 2 to 4GB?
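The small-block scores are easier to relate to the IOPS discussion earlier once they are converted to operations per second: IOPS = throughput / transfer size. A Python sketch, assuming CrystalDiskMark’s 4K means 4096-byte transfers and its MB means 1,000,000 bytes (pass `bytes_per_mb=1_048_576` if the tool uses binary megabytes):

```python
def mbps_to_iops(mb_per_s: float, block_bytes: int, bytes_per_mb: int = 1_000_000) -> float:
    """Convert a throughput figure to I/O operations per second for a fixed transfer size."""
    return mb_per_s * bytes_per_mb / block_bytes


if __name__ == "__main__":
    for mbps in (0.8, 2.3):  # the single drive 4K range quoted above
        print(f"{mbps} MB/s at 4K = {mbps_to_iops(mbps, 4096):.0f} IOPS")
```

Either way you count megabytes, the single drive lands at a few hundred IOPS for 4K transfers, which is why these scores look so small next to the sequential numbers.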

Moving to 2xRAID0 we see good scaling in this test and got 295MB/s read and 286MB/s write. In the 512K test we saw a nice performance boost and got 41.97MB/s read and 96.52MB/s write. Even the 4K test benefited from 2xRAID0.

On 3xRAID0 we got 386.9MB/s read and 379.2MB/s write in the sequential test. In the 512K test we got 46.27MB/s read and 156.5MB/s write, and again the 4K tests saw a nice little performance boost from 3xRAID0.
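A quick way to read these numbers is scaling efficiency: the array’s speed divided by n times the single drive’s speed, where 100% would be perfect linear RAID0 scaling. A Python sketch using the 2GB sequential read figures above:

```python
def scaling_efficiency(single_mbps: float, array_mbps: float, n_drives: int) -> float:
    """Fraction of perfect linear RAID0 scaling achieved (1.0 = n times the single drive)."""
    return array_mbps / (single_mbps * n_drives)


if __name__ == "__main__":
    SINGLE_READ = 154.1  # CrystalDiskMark 2GB sequential read, single drive
    for n, read in ((2, 295.0), (3, 386.9)):
        eff = scaling_efficiency(SINGLE_READ, read, n)
        print(f"{n}xRAID0: {read / SINGLE_READ:.2f}x single drive, {eff:.1%} of linear")
```

By that measure 2xRAID0 reaches about 96% of perfect scaling (1.91x) and 3xRAID0 about 84% (2.51x).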

We jumped the gun on the RAID5 test and grabbed the screenshot mere seconds before the test ended. At this point we had benched these drives for 80+ hours and said good enough. The only result affected was the 4K QD32 test, which finished at 1.725MB/s once the test completed.

RAID5 again wasn’t the fastest, but at 292.4MB/s sequential read and 285MB/s write we are getting speeds faster than a lot of SSDs, so who is going to complain?

Hitting the 4GB test, the single drive got 153MB/s read, and it’s hard to find a drive that will sustain a 4GB read at that kind of speed. The sequential write was just as amazing and hit 150MB/s. The 512K test saw 53.7MB/s read and 66.5MB/s write. The 4K tests, well, you know how we feel about those, but there they are for your consideration.

At 2xRAID0 we saw almost linear scaling, and the sequential speeds almost doubled. That’s pretty good scaling for RAID0, and the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card plays a big part in that. A well-made hardware RAID controller designed for performance will kill a cheap RAID card every time.

By the time we hit 3xRAID0, our jaws needed picking up off the ground. At 391MB/s read and 387MB/s write, we are at speeds faster than any SSD we’ve seen by far. The top-end SSDs we’ve seen approach 250MB/s, but a 128GB SSD that costs $400 to $450 is just too small and expensive for practical use. The Seagate Constellation ES drives aren’t cheap at close to $400 for a 2TB drive, but you get 2TB, not 128GB like the SSDs. Some 400GB SSDs we’ve seen run $1600 at 1/5th the capacity of a single Constellation ES 2TB. So you can snatch a single 400GB drive (400 to 480GB) for $1600, or have 3 or 4 Constellations and a hardware RAID controller and still have enough left over for a nice dinner or two. The difference is 400GB vs 6 to 8TB, and most of the decent RAID combinations we’ve seen rival SSD speeds.
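The capacity argument is easiest to see as price per gigabyte. A Python sketch using the prices quoted in this paragraph; they are street prices at the time of the review, so treat them as approximate.

```python
def dollars_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Price divided by capacity, using decimal GB like drive makers do."""
    return price_usd / capacity_gb


# (description, price in USD, capacity in GB) as quoted in the paragraph above
OPTIONS = [
    ("128GB SSD, low price", 400, 128),
    ("128GB SSD, high price", 450, 128),
    ("400GB SSD", 1600, 400),
    ("Constellation ES 2TB", 400, 2000),
]

if __name__ == "__main__":
    for name, price, capacity in OPTIONS:
        print(f"{name}: ${dollars_per_gb(price, capacity):.2f}/GB")
```

That works out to roughly $3.10 to $3.50 per GB for the 128GB SSDs, $4.00 per GB for the 400GB SSD, and about $0.20 per GB for a Constellation ES at the ~$400 price quoted here (about $0.15 at the $299 figure quoted at the start of the review).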

RAID5 again performs about like 2xRAID0 but has the redundancy we like. If one drive fails, you can still run the array while you wait on a replacement (or clone it to a single drive), and rebuild the array when the replacement drive arrives.
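That redundancy comes from parity: for each stripe, RAID5 stores the XOR of the data blocks, so any one missing block can be recomputed from the survivors. A toy Python sketch for a 3 drive array follows; the 8-byte blocks are hypothetical, and real RAID5 rotates the parity block across the members and works on much larger stripes than this.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def raid5_usable(n_drives: int, drive_tb: float) -> float:
    """Usable capacity: one drive's worth of space goes to parity."""
    return (n_drives - 1) * drive_tb


if __name__ == "__main__":
    d0, d1 = b"SEAGATE!", b"LSI-9750"   # hypothetical data blocks on drives 0 and 1
    parity = xor_blocks(d0, d1)          # stored on drive 2

    # Drive 1 fails: rebuild its block from the surviving data and parity.
    rebuilt = xor_blocks(d0, parity)
    print(rebuilt == d1)                 # True
    print(raid5_usable(3, 2.0), "TB usable from three 2TB drives")  # 4.0
```

The same XOR that rebuilds a block is why RAID5 costs one drive of capacity: three 2TB Constellations give 4TB of usable space.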

Overall we are very happy with the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card and Seagate Constellation drive combo. The RAID card is super easy to use with almost no learning curve and the Seagate Constellation ES 2TB drives are huge and run as well as any platter drive we’ve seen.

Conclusion

The Seagate Constellation ES 2TB 6Gb/s drives are very capable, lightning fast drives. It’s easy to overlook the fact that they are the first SAS 6Gb/s drives on the market, and just as easy to overlook that they are SAS 6Gb/s done right. On a single drive we saw amazing speeds in our normal benchmarks; then we increased the benchmark payloads to massive sizes and performance was still high.

You really can’t separate the drives’ performance from the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card, because these are SAS 6Gb/s drives and there is no way to test them without a SAS controller. From our experience with hard drives, even 10K RPM drives, we haven’t seen performance this good from many drives. We certainly haven’t seen 10K drives with this storage capacity.

In any case, the performance of the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card and Seagate Constellation ES drives was eye-popping. Single drive, 2xRAID0, 3xRAID0 or RAID5, performance was high. High enough to make you rethink SSD lust, and that’s high enough for us.

OUR VERDICT: Seagate Constellation ES 2TB 6Gb/s SAS Drives

Summary: The Seagate Constellation ES 2TB 6Gb/s SAS drives are an amazing piece of advanced technology. They are screaming fast and provide massive storage with legendary Enterprise class reliability. 9.5 out of 10 points and Bjorn3D’s Golden Bear Award!

Conclusion – LSI 3Ware SATA+SAS 9750-4i RAID Controller Card

Like we mentioned, you can’t separate the drives’ performance from the RAID card’s performance. We have other RAID cards in house and tested the drives on a SAS 3Gb/s controller, and performance was considerably lower on that off-brand RAID card. That tells us that the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card and Constellation ES combination is a marriage made in heaven.

With the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card, we were expecting to have to boot into the card’s BIOS every time we made a change to the setup. That wasn’t the case: the included 3DM2 software let us dismantle arrays, build new arrays, and make every adjustment we needed from the comfort of a GUI inside Windows. We didn’t have to reboot for changes to take effect, and the learning curve was almost non-existent.

The LSI 3Ware SAS 9750-4i 6Gb/s RAID Card is a card we didn’t think we needed until SAS 6Gb/s came along, and now we think it’s a controller we couldn’t live without. Nothing boosts a high-end enthusiast system like good HDs and a great RAID controller, and those are often the two components that get overlooked or skimped on.

Don’t skimp on hardware RAID controllers and good drives in your enthusiast system. They make a world of difference: if it’s a “Real” enthusiast system and not a legend in your own mind, it will have great hard drives, and if it’s a hardcore enthusiast system, it’ll have hardware RAID. It would be hard to make a better choice for a hardware RAID system than the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card.

OUR VERDICT: LSI 3Ware SATA+SAS 9750-4i RAID Controller Card

Summary: The LSI 3Ware SATA+SAS 9750-4i 6Gb/s RAID Controller Card is the easiest hardware RAID controller we’ve ever seen. That ease of use didn’t come with a performance hit, and the 9750-4i helped the Constellation drive setup deliver blazing speed. With its ease of use and its role as the platform for that blazing speed, the LSI 3Ware SAS 9750-4i 6Gb/s RAID Card deserves 9.5 out of 10 points and Bjorn3D’s Golden Bear Award!

| Product Name | Price |
|---|---|
| Seagate 600GB Cheetah NS.2 SAS 6Gb/s Internal Hard Drive | $533 |
| LSI 3ware SAS 9750-4I KIT 4PORT 6GB SATA+SAS PCIE 2.0 512MB | $386 |

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996

I’m considering using the 3ware SAS 9750-4i controller with 8 SATA drives and an “ICY DOCK MB455SPF-B” as the backplane. The goal is to create efficient storage with RAID 50. My question: is it possible to locate a single drive in case of a drive failure?

Imagine this situation: there is an 8-drive RAID 50 array built in this backplane, and one day the 3ware controller notices a drive failure. How do I locate that drive (which drive is it)?

Does the SATA/SAS standard support such a feature? (assuming the backplane has LED indicators)

Thanks for any answer.