With the HD5870 AMD wants to offer twice the performance of the HD4870, full DX11 and OpenCL support while keeping the power consumption down. We’ve run the card through our test lab to see if their claims are true.

INTRODUCTION

By now we are used to seeing new video cards released by AMD and NVIDIA in the spring and fall. This year is no different, except that it coincides with the release of Windows 7 together with DirectX 11 for Windows 7 and Windows Vista. Since AMD already has experience with some of the new DX11 features through its support of DX10.1, which is a subset of DX11, it is probably no surprise that AMD looks set to be the first vendor to offer cards with DirectX 11 support.

During a hectic day in September we visited AMD in London and got all the info about the new HD5800 series of GPUs.

We went home pretty impressed by what we saw, but as we all know you can never trust marketing or the PR guys, so it was of course lucky that AMD also let us bring home a card so we could put it through its paces in our Swedish offices in Stockholm.

This is what you will find in this article/review:

Part 1: Overview of the HD5800 with specifications

Our first stop is to look more closely at the specifications and features of the two new cards that are announced today: the HD5850 and the HD5870.

Part 2: Overview of DirectX11 as well as OpenCL

Next we look into the new features of DirectX11 as well as talk a bit about OpenCL.

Part 3: Eyefinity

One of AMD’s new features is the ability to use several monitors as one huge monitor. We explain the feature and of course test it ourselves.

Part 4: The benchmarks

Of course everyone is eager to see the scores, and we’ve compared the HD5870 against the HD4870 1 GB, the HD4890, the GeForce GTX285 and the GeForce GTX295 on 2 different machines to see what the raw power of the HD5870 really can do in real games.

Part 5: The Conclusion

The HD5800

AMD has set itself a pretty ambitious goal: to deliver 4 new GPUs in the next 6 months. The first chip is “Cypress”, the codename for the HD5800, which is the chip aimed at the $300 market. It will be followed by “Hemlock”, which is the X2 part, and later this year by “Juniper”, which is aimed at $200 cards. In Q1 2010 we will then get “Redwood” and “Cedar”, which will go on the cheap $99 cards. Basically, AMD aims to have a full range of DirectX 11 cards within 6 months, from the $99 cards to the $500 cards.

In this article we will concentrate on the two cards that are announced today.

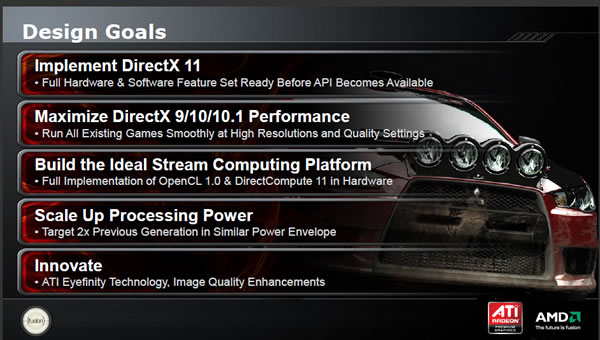

AMD set up some simple design goals for the HD5800 as shown by this slide:

Let us first concentrate on the 4th goal: “Scale up Processing Power: Target 2x Previous Generation in Similar Power Envelope”. What it basically means is that the HD5870 should be twice as fast as the HD4870 without having any increased power usage.

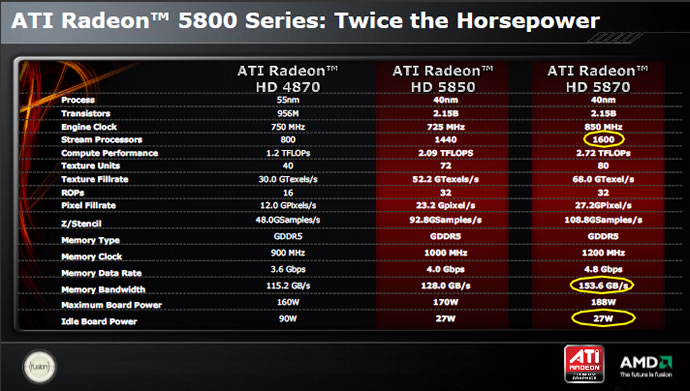

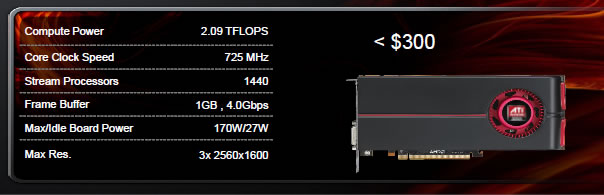

The HD5800 GPUs are AMD’s second-generation 40nm parts. They have more than double the number of transistors of the HD4870. The GPU clock speed is slightly lower on the HD5850 and higher on the HD5870. Both GPUs get a significant increase in stream processors: the HD5870 gets twice as many as the HD4870, 1600 versus 800, while the HD5850 gets a respectable 1440. This also means a significant jump in compute performance, where both the HD5850 and the HD5870 break the 2 TFLOP barrier and the HD5870 even gets close to 3 TFLOPs.
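To see where those TFLOP figures come from, here is a rough back-of-the-envelope sketch. It assumes the usual peak-throughput rule of thumb (each stream processor can do one multiply-add, counted as two floating-point operations, per clock). The core clocks in the table are the reference clocks as we understand them and are an assumption here, since they are not quoted in the text above; `peak_tflops` is just an illustrative helper name.

```python
def peak_tflops(stream_processors: int, core_clock_mhz: float) -> float:
    """Theoretical peak single-precision throughput in TFLOPs."""
    flops_per_clock = 2  # one multiply-add counts as two floating-point operations
    return stream_processors * flops_per_clock * core_clock_mhz * 1e6 / 1e12


# (stream processors, core clock in MHz); the clocks are assumed reference values.
cards = {
    "HD4870": (800, 750.0),
    "HD5850": (1440, 725.0),
    "HD5870": (1600, 850.0),
}

for name, (sps, clock) in cards.items():
    print(f"{name}: {peak_tflops(sps, clock):.2f} TFLOPs")
```

With these assumed clocks the HD5870 lands on the 2.72 TFLOPs that AMD quotes, and the HD5850 on roughly 2.1. Keep in mind that this is a theoretical peak and not something a game will ever reach.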

As you can see, the HD5870 GPU is basically at least twice as powerful in all specifications. When we look at the memory we still get GDDR5, this time however a bit faster than on the HD4870.

One major improvement is that the idle board power now is down to 27W from the quite high 90W of the HD4870.

As you have seen there are actually 2 cards that are announced today: the HD5850 and the HD5870.

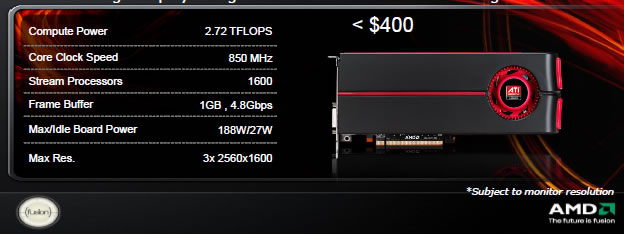

HD5870

The HD5870 is a card that will retail at around $400. It has the full 1600 stream processors and 2.72 TFLOPs of compute power. The card can drive up to 3 screens at 2560×1600 resolution, something we will look at when we talk about Eyefinity. This card does not actually have a direct competitor in the NVIDIA camp: the GTX285 is about $100 cheaper, while the GTX295 is almost $100 more expensive (at least until NVIDIA lowers its prices).

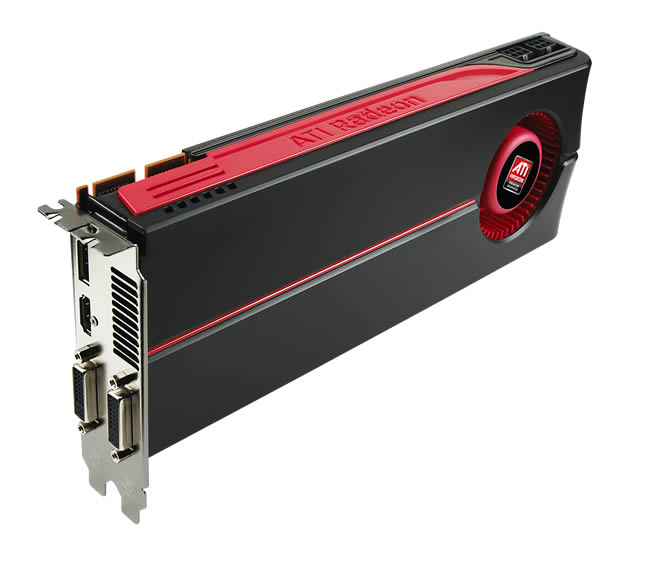

The reference card is a pretty sleek card. There is not a lot of “advertisement” on the card except for a small ATI logo on the fan and the words “ATI Radeon” written on the top. There is ample room for OEMs to put huge ugly stickers on the side of the card. The card actually looks quite cool as it is, so we hope that the OEMs just exchange the fan sticker and leave the rest of the card alone.

As you can see, the card we took home has 2 DVI-D connectors, 1 HDMI connector and 1 DisplayPort connector. As always it is up to the OEMs to choose which connectors to use. However, as Eyefinity needs these connectors, we expect most of them to ship their cards with all of them.

If we turn the card around we see that AMD has put two huge air intakes at the end (or is it the front ..?) of the card. These just help make the card look even more bad-ass; black and red is a killer color combination. One welcome improvement over earlier cards is that the power connectors now finally face upwards from the card instead of backwards. This is needed as the cards are really long, and it would otherwise be hard to fit the PCI-E power connectors.

HD5850

The HD5850 has slightly fewer stream processors than the HD5870 and thus “only” gets around 2.1 TFLOPs of compute power. It is still able to drive 3 screens at 2560×1600 resolution. This card goes head-to-head with the GTX285 at around $300.

The reference HD5850 looks just like the HD5870 but is a bit shorter.

DirectX11, OpenGL and OpenCL

Bjorn3d as a site has been around since the beginning of the 3D video card era, as we started out as a fan site for the Rendition video cards. This means we remember the time when 3D support was handled through proprietary APIs like Rendition’s RRedline and 3dfx’s Glide. The problem with this was that you could never know which games supported your card and which didn’t. In the end OpenGL and DirectX evolved and took over as “open” APIs, open in the sense that every card maker would write drivers for the OpenGL and DirectX APIs, and game developers could then write for DirectX or OpenGL and know that their game would work on all video cards, regardless of whether it was an NVIDIA TNT or an S3 Savage.

DirectX

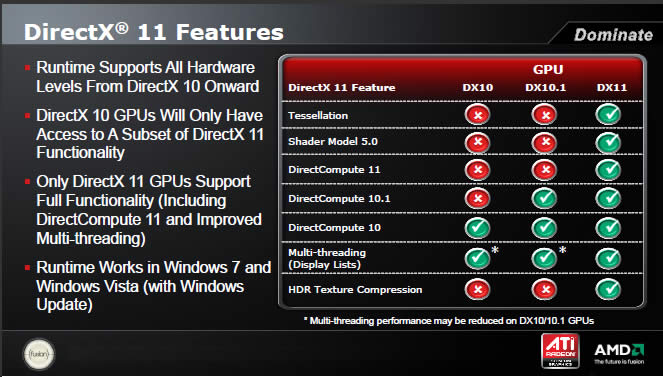

It has been quite obvious for the last year or so that AMD is keen on supporting the latest developments in DirectX, while NVIDIA has chosen to adopt a “wait and maybe do it later” attitude. Thus it is no surprise that AMD has chosen to fully support DirectX 11 even before the API has launched. AMD has already been supporting some of the new features through DirectX 10.1, which is a subset of DirectX 11, ever since it was released for Vista in Service Pack 1, so it most probably hasn’t been such a huge job to get the GPUs to fully support DirectX 11.

So what is new in DirectX 11? Let us look at a few of the new features of DirectX 11:

Tessellation

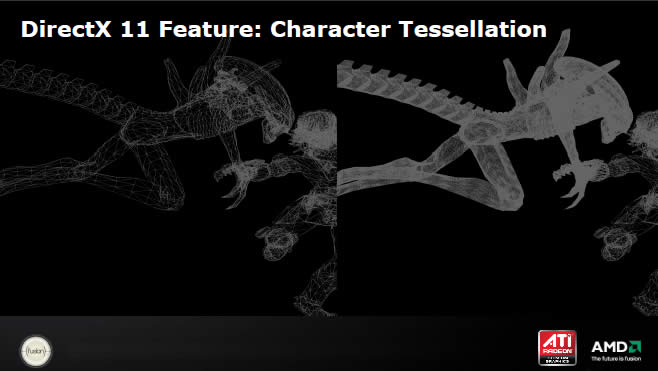

Hold on, I hear you say. AMD has supported tessellation for several years now. That is true. NVIDIA has also been talking about tessellation in some of their SDKs. The news is that tessellation support is now part of DirectX 11, meaning that developers no longer have to write any vendor-specific solutions, which hopefully means we will see lots of tessellation used in future games.

Basically, tessellation is a way of taking a relatively low-polygon mesh and using subdivision on the surface to create a finer mesh. After the finer mesh has been created, a displacement map can then be applied to create a detailed surface.

The advantage of using tessellation is that you can create much more detailed characters, more realistic clothing and more detailed terrain.
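For those who want to see the idea in code, here is a minimal sketch of “subdivide, then displace” in plain Python. It is only an illustration of the principle and not how DirectX 11 hardware tessellation is actually programmed. The midpoint subdivision scheme, the `bumps` height function (a stand-in for a displacement map texture) and all the function names are our own assumptions.

```python
import math


def midpoint(a, b):
    """Point halfway between two 3D vertices."""
    return tuple((a[i] + b[i]) / 2 for i in range(3))


def subdivide(triangles):
    """Split every triangle into four smaller ones using its edge midpoints."""
    out = []
    for a, b, c in triangles:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        out += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return out


def displace(triangles, height):
    """Push each vertex along +z by a height looked up from its (x, y) position."""
    return [
        tuple((x, y, z + height(x, y)) for x, y, z in tri)
        for tri in triangles
    ]


def bumps(x, y):
    # Hypothetical stand-in for a displacement map texture lookup.
    return 0.1 * math.sin(4 * x) * math.cos(4 * y)


# One flat, low-polygon triangle as the starting mesh.
coarse = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))]

fine = coarse
for _ in range(3):  # three subdivision levels: each level multiplies the count by 4
    fine = subdivide(fine)

detailed = displace(fine, bumps)
print(len(coarse), "->", len(detailed), "triangles")
```

The point is that the game only has to store and animate the coarse mesh, while the extra detail is generated on the fly on the GPU.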

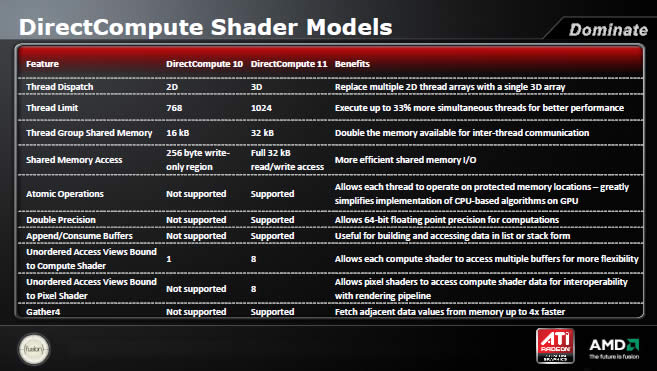

DirectCompute

As expected, Microsoft has added DirectCompute to DirectX 11, which brings GPGPU support into DirectX. It gives direct access to ATI Stream through the DirectX 11 API. This can be used for:

- Image Processing & Filtering

- Order Independent Transparency (OIT)

- Shadow Rendering

- Physics

- Artificial Intelligence

- Ray-tracing

One interesting thing about DirectCompute is that it will also work on DX10 and DX10.1 hardware, although with reduced features. So even though NVIDIA lacks a DirectX 10.1 or DirectX 11 part, they can still take some advantage of DirectCompute. Developers can start using Compute Shader 4.x kernels in their applications today, and add Compute Shader 5.x capabilities as the installed base of DirectX 11 GPUs becomes a significant portion of the market.

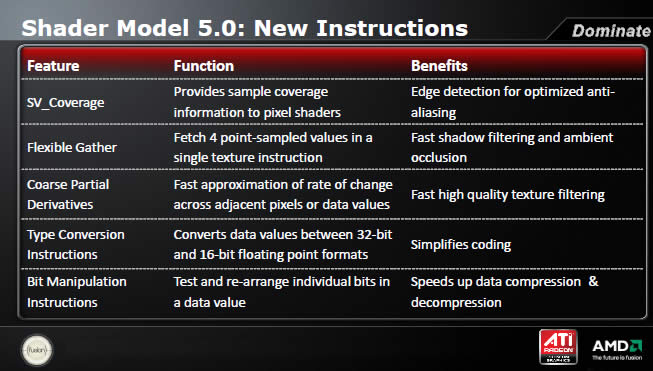

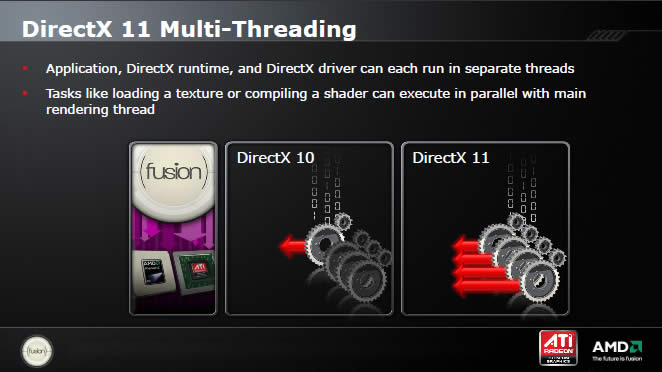

Other interesting new features are Shader Model 5, multi-threading and HDR texture compression. We’ll just post a few slides for you to read, as it feels unnecessary to rewrite everything. And just to make it clear: these are new features of DirectX 11, so when NVIDIA has DirectX 11 cards they will of course also be able to take advantage of all this.

So when will we see the first games taking advantage of DirectX 11? There actually already is a game with DX11 support: Battleforge. EA released a patch a few days ago that adds some DX11 support. AMD had of course also dug up some companies that came to the event to talk about their upcoming games.

DICE were there showing off an experimental version of its Frostbite 2 engine where they were playing around with deferred rendering and were using DirectX 11 Compute Shader 5.0 to calculate light contributions in a test scene.

Rebellion were there talking about their upcoming (Q1 2010) game Aliens vs. Predator.

This game will use a lot of character tessellation to make the Aliens even more terrifying.

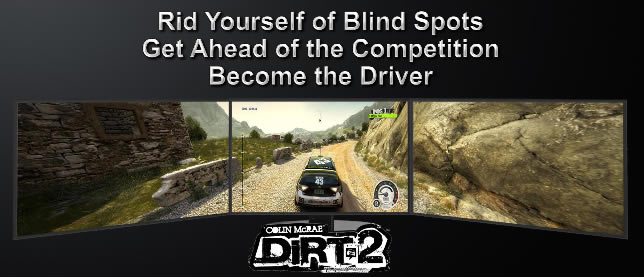

Codemasters showed off Dirt 2 for the PC which will have DirectX 11 support. The features used are:

- HW Tessellation: Dynamic Water Surface

- HW Tessellation: Dynamic Cloth

- HW Tessellation: Animated Crowd

- DirectCompute11: Optimize Post Processing Effects

- ShaderModel5.0: High Quality Shadow Filtering

- ShaderModel5.0: Depth of Field

A demo of the game was present at the event and it looked absolutely stunning.

Other games that were mentioned were S.T.A.L.K.E.R.: Call of Pripyat, which is slated for a Q4 09 release, Lord of the Rings Online (Q1 2010) and Dungeons & Dragons Online: Eberron Unlimited.

As expected it will be a while before we will see DirectX 11 games dominate but it does help that Microsoft has decided to release it not only for Windows 7 but also for Windows Vista. It would have been nice to see support also for Windows XP but Microsoft needs an incentive to get people to move to Windows 7. It would also help if NVIDIA came out with DirectX 11 supporting cards as soon as possible.

OpenGL

AMD did not say anything about OpenGL in their presentation but as far as we can tell the HD5800 currently will support OpenGL3.1 in the drivers thus being a bit behind NVIDIA which already has support for OpenGL3.2.

OpenCL

OpenCL (Open Computing Language) is a framework for writing programs that execute across heterogeneous platforms consisting of CPUs, GPUs, and other processors. OpenCL includes a language (based on C99) for writing kernels (functions that execute on OpenCL devices), plus APIs that are used to define and then control the platforms. OpenCL provides parallel computing using task-based and data-based parallelism. OpenCL is analogous to the open industry standards OpenGL and OpenAL, for 3D graphics and computer audio, respectively. OpenCL extends the power of the GPU beyond graphics (GPGPU). OpenCL is managed by the non-profit technology consortium Khronos Group. (Source: Wikipedia)

In short, OpenCL is set to become for GPGPU what OpenGL is for 3D acceleration on the GPU. The neat thing with OpenCL is that it handles the GPU, the CPU and other processors. Up until now AMD has only supported OpenCL on the CPU, but at the event AMD said they had submitted drivers to the Khronos Group that add support for the GPU. That gives them support for both the CPU and the GPU, so the framework can put the work on the chip that handles it best.
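To give a feel for the data-parallel model that OpenCL is built around, here is a small emulation in plain Python. This is not real OpenCL code and it uses no OpenCL API; `vector_add` and `run_kernel` are hypothetical names of our own. It only mimics the idea that a kernel is a small function that is run once per element, with each run identified by its own ID.

```python
def vector_add(global_id, a, b, out):
    # Kernel body: each work-item handles exactly one element.
    out[global_id] = a[global_id] + b[global_id]


def run_kernel(kernel, global_size, *args):
    # A real runtime would spread these work-items across CPU cores or GPU
    # stream processors. This stand-in simply runs them one after another.
    for gid in range(global_size):
        kernel(gid, *args)


a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(a)

run_kernel(vector_add, len(a), a, b, out)
print(out)
```

Because every work-item is independent of the others, the same kernel can in principle be handed to whichever chip is best suited for the job, which is exactly what makes combined CPU and GPU support interesting.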

Right now the Havok physics engine supports OpenCL, and work has begun on an OpenCL version of the open source physics engine Bullet Physics. This should mean that there is now a serious alternative to PhysX, one that will be supported by both AMD and NVIDIA.

Eyefinity

Eyefinity is a technology that in many ways seems to be made up just to use up some of the power that we get from these new GPU’s. In its basic elements it is a new way to use more than one monitor in games, work and entertainment.

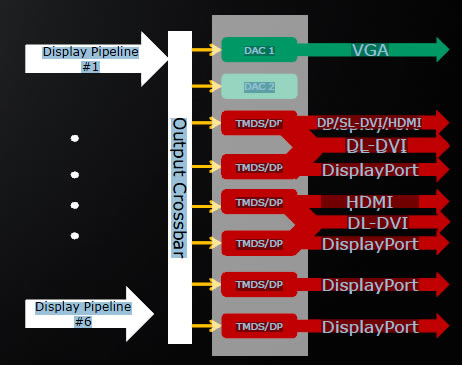

The HD5800 GPUs have a redesigned display engine that supports up to six 10-bit display pipelines. The maximum number of connectors a card can have is 6 DisplayPorts, and incidentally AMD plans to release a special edition card, the HD5870 Eyefinity6 Edition, with just that.

For us regular users the cards can support a variety of combinations. The reference HD5870 that we got for testing has 2 DVI connectors, 1 HDMI and 1 DisplayPort connector. With this combination we should be able to run at least 3 screens, one with DVI, one with HDMI and one with DisplayPort (and yes, we have done exactly that, but more about our results later).

We’ve had multi-monitor support before but this time AMD has added a small twist to it. You can now create Display Groups. This is actually the reason that AMD recently did an overhaul of their Catalyst Control Center. They simply were preparing the CCC for this new feature.

A Display Group can consist of several monitors, and what it does is make them all appear as one big monitor to the operating system and the games. Thus if you put three 1920×1080 monitors side by side, the OS and games see them as one large 5760×1080 monitor. The whole Display Group is fully 3D accelerated. Matrox has had some products (TripleHead and DualHead) that give the user a similar solution, but AMD’s version is much more flexible and powerful.
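The arithmetic behind the combined resolution is simple enough to sketch. The helper below is our own illustration, assuming a grid of identical monitors and ignoring the bezels; `display_group_resolution` is a made-up name and has nothing to do with AMD’s driver.

```python
def display_group_resolution(columns, rows, width, height):
    """Resolution the OS would see for a grid of identical monitors."""
    return columns * width, rows * height


# Three 1920x1080 monitors side by side.
print(display_group_resolution(3, 1, 1920, 1080))  # (5760, 1080)

# Two 1920x1080 monitors side by side.
print(display_group_resolution(2, 1, 1920, 1080))  # (3840, 1080)
```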

So what can you do with Eyefinity and the display groups? Here are some examples that AMD suggests:

You get the idea. We saw both HAWX and Grid 2 at the event and it was really impressive. The performance was great and the immersion effect is enormous when you sit close to the screens.

Since the games see it as one huge monitor, they of course need to support showing the extended view, but AMD has tested tons of games and so far it looks like the overwhelming majority of them work right out of the box with Eyefinity. We won’t be able to test all of these games, but we will of course test a few of them just to see how well it works.

There are of course some limitations to Eyefinity. First of all, how many people have 3 monitors or want to buy at least 3 monitors? And even more important, how many have at least one monitor with a DisplayPort? The next limitation is apparent from all the images above: the bezel of the monitor has to be thin. At the event Samsung was showing off a new monitor that is perfect for Eyefinity as it has a very thin bezel. We should hear more about that monitor soon. Still, even with a thin bezel you will get a bit annoyed by the black lines in the image. The third limitation is space. When we prepared to test Eyefinity and set up 3 monitors, we were surprised by how much space it actually took to have 3 monitors standing side by side.

In the end this feature feels like something only a true hardcore gamer or someone who really needs the screen real estate will care about.

Benchmarks

So how does it perform? To test this we put together two systems.

System 1:

CPU: AMD Phenom II 955

Memory: 4 GB Corsair 1333MHz DDR3

Motherboard: ASUS M4A7BT-E

Main disk: Corsair P128 SSD

Other harddrive: 36 GB Raptor

Optical drive: LG BR-player

System 2:

CPU: Intel Core i7 940

Memory: 6 GB Corsair 1333MHz DDR3

Motherboard: Intel DX58SO

Main Disk: Samsung F2 EcoGreen 1 TB

Optical: External ASUS BR-drive (USB)

Both systems had Windows 7 RTM installed with all the latest patches. Due to lack of time we could not also install Vista on both, but we hope to come back to some Vista testing at a later date.

The drivers used were Catalyst 9.9 for all AMD cards except the HD5870, a beta driver for the HD5870 and n… 190.62 for the NVIDIA cards.

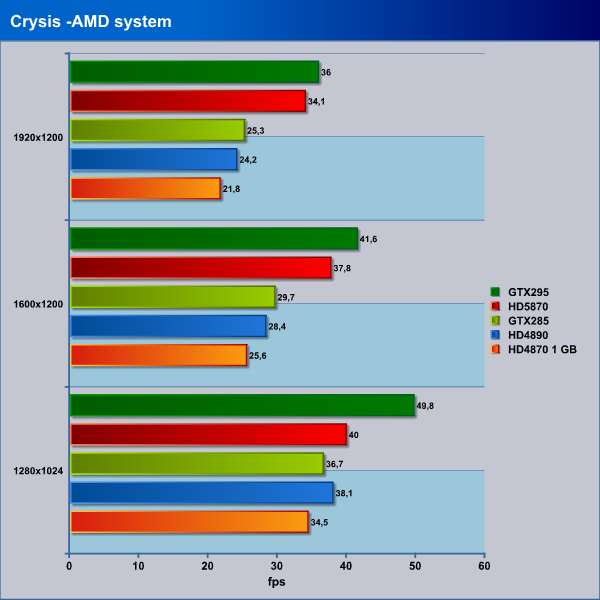

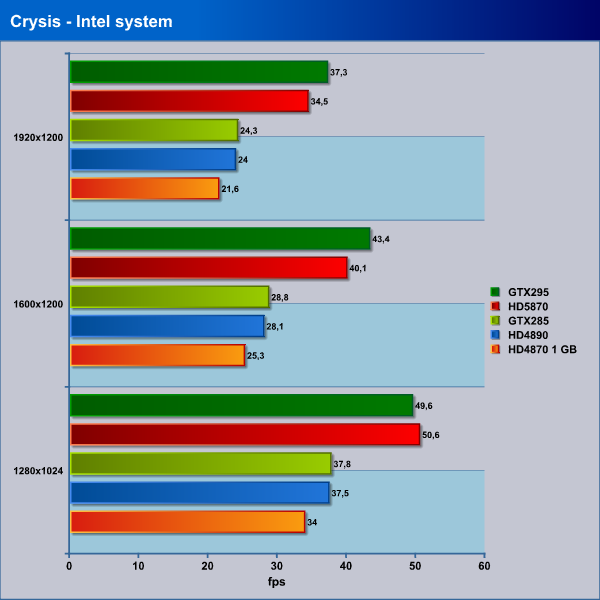

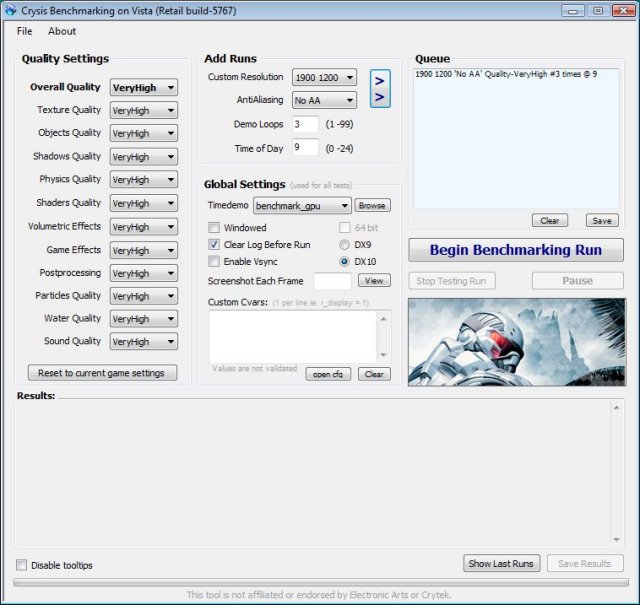

Crysis v. 1.21

Crysis is the most highly anticipated game to hit the market in the last several years. Crysis is based on the CryENGINE™ 2 developed by Crytek. The CryENGINE™ 2 offers real time editing, bump mapping, dynamic lights, network system, integrated physics system, shaders, shadows, and a dynamic music system, just to name a few of the state-of-the-art features that are incorporated into Crysis. As one might expect with this number of features, the game is extremely demanding of system resources, especially the GPU. We expect Crysis to be a primary gaming benchmark for many years to come.

As we move up in resolution the HD5870 closes in on the GTX295 and almost reaches it. It leaves all the other cards way behind.

The situation changes a bit when we use our Intel test-system as the HD5870 now performs almost as well as the GTX295 right away.

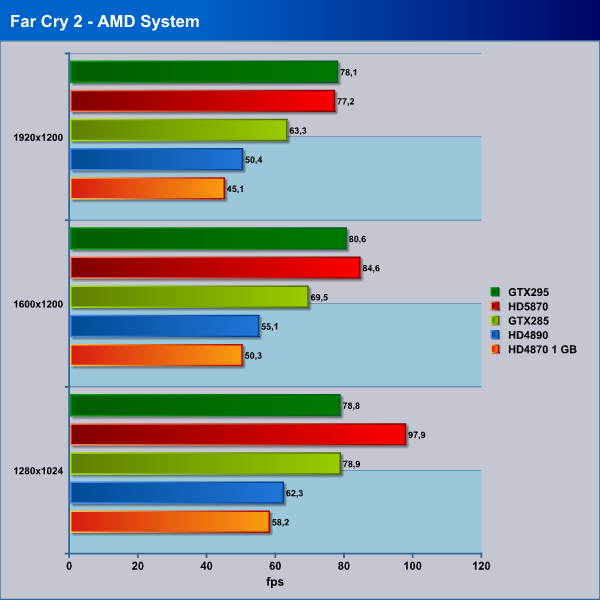

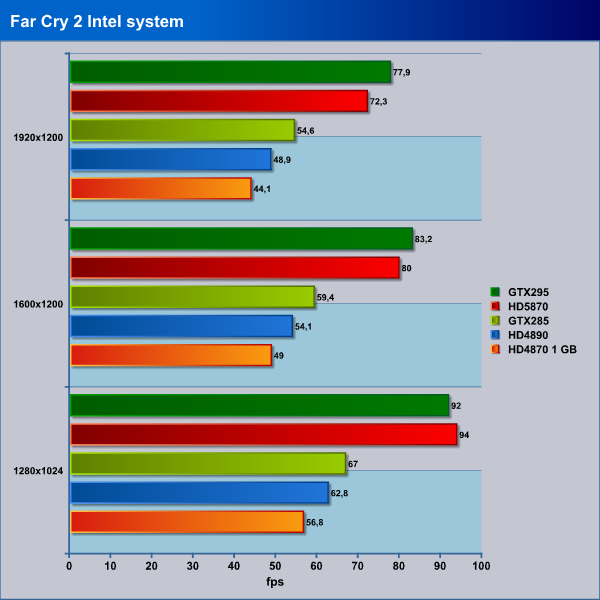

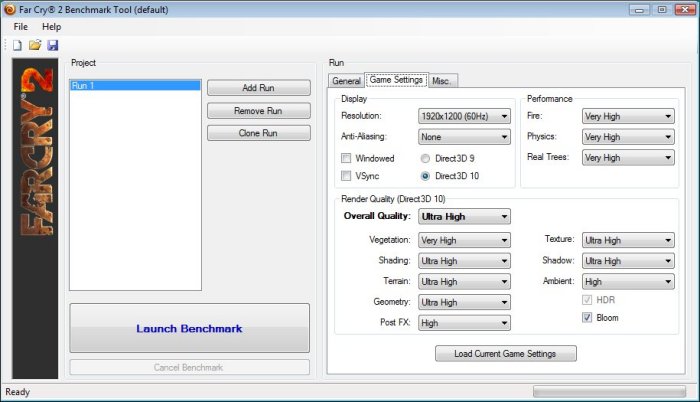

Far Cry 2

Far Cry 2, released in October 2008 by Ubisoft, was one of the most anticipated titles of the year. It’s an engaging state-of-the-art first-person shooter set in an unnamed African country. Caught between two rival factions, you’re sent to take out “The Jackal”. Far Cry 2 ships with a full-featured benchmark utility, and it is one of the most well-designed, well-thought-out game benchmarks we’ve ever seen. One big difference between this benchmark and others is that it leaves the game’s AI (Artificial Intelligence) running while the benchmark is being performed.

The settings we use for benchmarking Far Cry 2. In addition to these settings we added 4xAA for this test.

The HD5870 performs very well in Far Cry 2, beating the GTX295 in all resolutions except 1920×1200, where they are neck and neck. Again, look at the distance to the HD4890 and HD4870 1 GB!

As we switch to our Intel system things change a bit and the GTX295 manages to take a narrow win in 2 of the 3 resolutions. It is close though.

HAWX

The story begins in the year 2012. As the era of the nation–state draws quickly to a close, the rules of warfare evolve even more rapidly. More and more nations become increasingly dependent on private military companies (PMCs), elite mercenaries with a lax view of the law. The Reykjavik Accords further legitimize their existence by authorizing their right to serve in every aspect of military operations. While the benefits of such PMCs are apparent, growing concerns surrounding giving them too much power begin to mount.

Tom Clancy’s HAWX is the first air combat game set in the world-renowned Tom Clancy’s video game universe. Cutting-edge technology, devastating firepower, and intense dogfights bestow this new title a deserving place in the prestigious Tom Clancy franchise. Soon, flying at Mach 3 becomes a right, not a privilege.

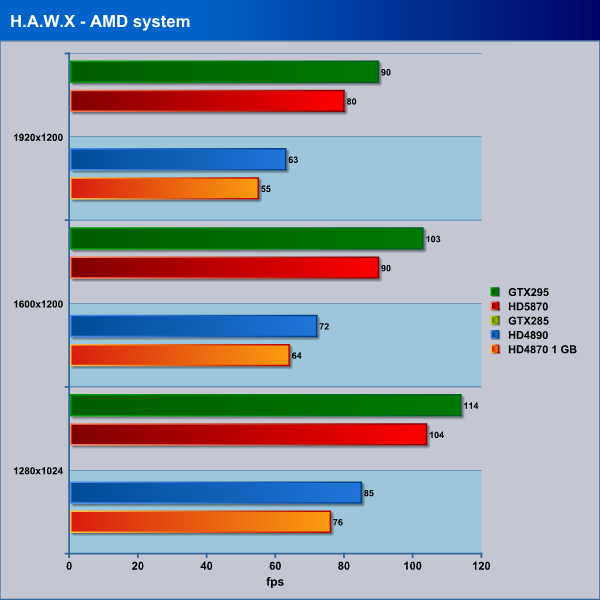

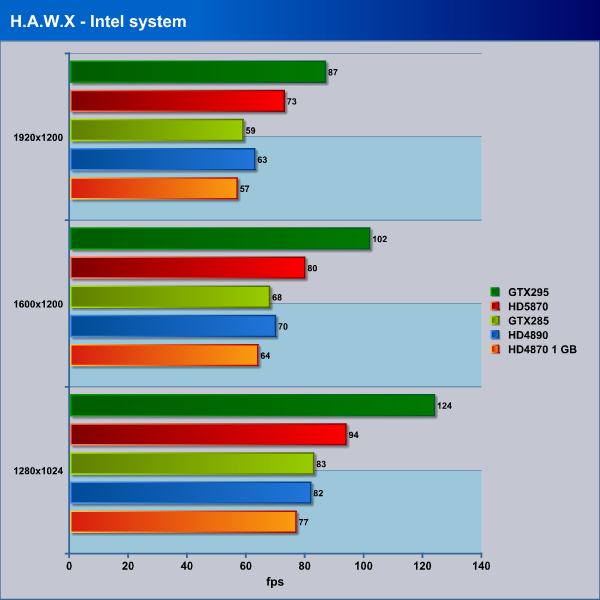

The HD5870 is again close behind the GTX295 in all resolutions, leaving the other cards behind. We unfortunately missed testing the GTX285 on this system, and we had some image corruption when testing the GTX295. We are a bit surprised by the GTX295’s result: when we tested HAWX in our HIS HD4890 review it performed much worse, so NVIDIA must have done a good job with its drivers.

On the Intel system the GTX295 draws ahead even more.

Resident Evil 5

We used the benchmark that can be downloaded for this game. All settings were set to max, motion blur was on and we used 4xAA.

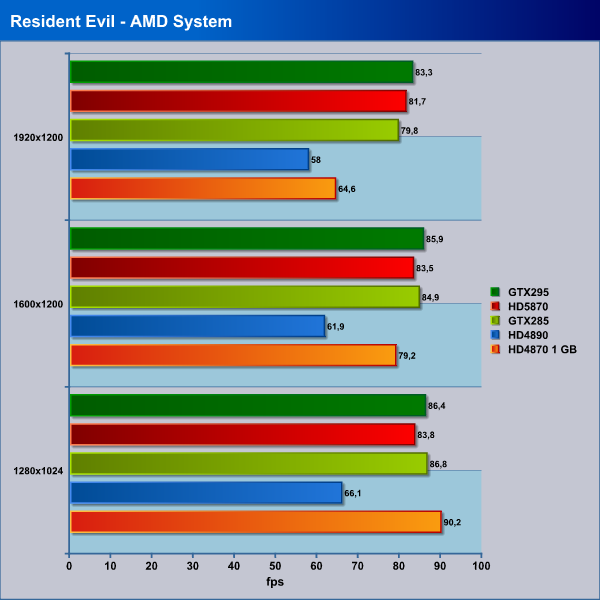

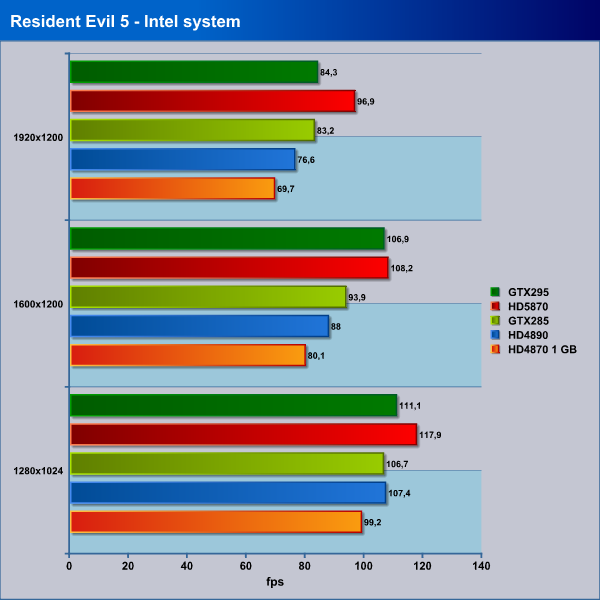

It is obvious that the game is quite CPU-limited on the Phenom II 955. While the HD4870 and HD4890 lose performance as we move up the resolutions, the GTX285, HD5870 and GTX295 just keep pumping out the same framerates.

When we move over to the Intel-system things change. The HD5870 manages to outperform the other cards in all resolutions.

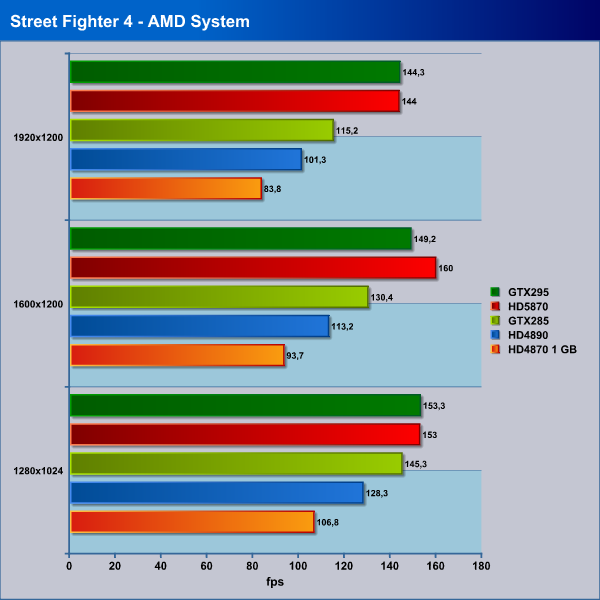

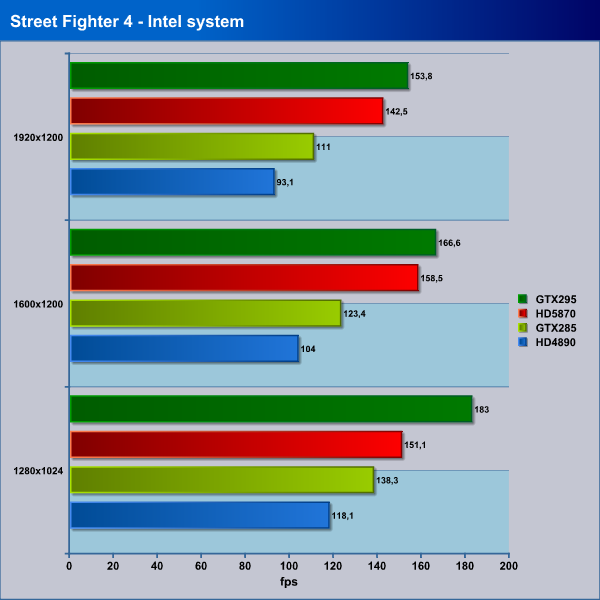

Street Fighter 4

We used the benchmark that can be downloaded for this game. All settings were set to max and we used 4xAA and 16xAF.

The GTX295 and the HD5870 are neck and neck in this game, with the HD5870 having a slight edge.

As we move to the Intel system the GTX295 gains the most performance and draws ahead of the HD5870. Still, it is these two cards that are dominating as usual.

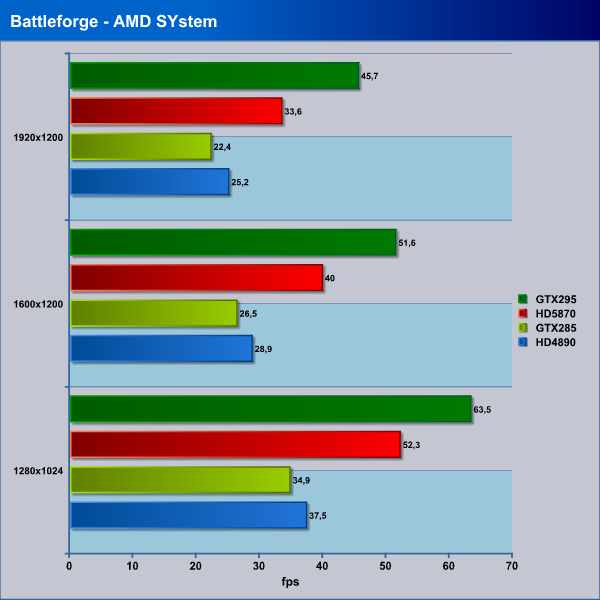

Battleforge

Just before we were ready to release this article AMD told us that EA had added DX11 support for Battleforge. Of course we wanted to try it. All settings were set to max including SSAO (Ambient Occlusion) which was set to Very High. MaxShaders were set to DX10/DX10.1-level for the HD4890, the GTX285 and GTX295 and set to DX11-level for the HD5870. This must be done manually in the config-file. We also used 4xAA.

As we prepared the graphs for release we accidentally switched the scores for the GTX295 and the HD5870 in the graph above. So the scores shown for the GTX295 are actually those of the HD5870.

The HD5870 of course dominates this game, as it clearly is one of the games AMD has worked hard on to get optimized for DX10.1 and now DX11.

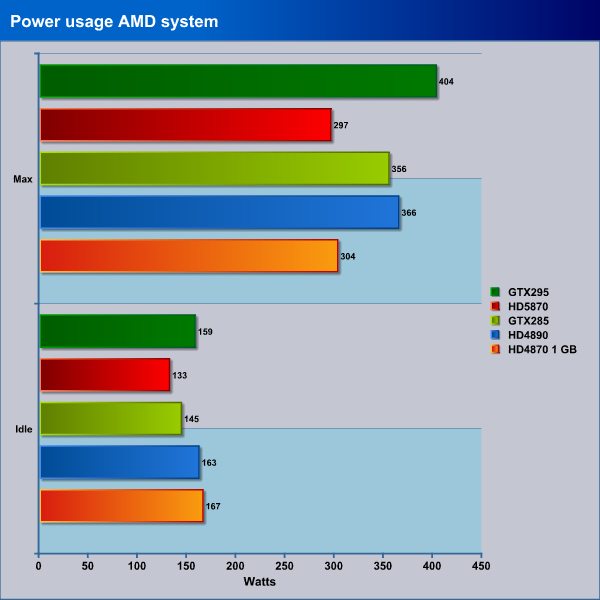

Power consumption

We measured the power consumption for each card in each system both when the system was in idle (at the Windows desktop for 30 minutes) and while we were running the GPU-test in the program OCCT which puts a 100% load on the GPU.

It is quite impressive that AMD has managed to keep the power usage so low even though they have increased the performance so much. The HD5870 draws the least juice of all the cards we are testing here.

The picture is the same for the Intel system. In fact, the power usage is even lower for the whole system compared to our AMD-system.

Eyefinity Test

We were very curious about how it would be to use Eyefinity in a wide variety of games. Unfortunately we ran into some small issues, as we could not get hold of a monitor with DisplayPort in time for the article. Also, all the adapters we found were for mini-DisplayPort while the HD5870 uses the regular version.

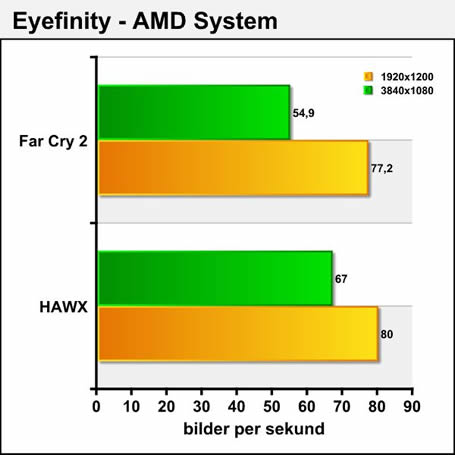

We decided to at least try it with 2 monitors and set up two BenQ 22″ 1920×1080 monitors side by side. This effectively gave us one monitor with a resolution of 3840×1080. We then ran a few benchmarks in Far Cry 2 and HAWX just to get a feel for how much performance we would lose. We wanted to bench Crysis too, but the benchmark tool would not let us choose the resolution. We did however go in and play the game, and from inside the game we could choose 3840×1080 as a resolution. It was an odd feeling to have the gun in the middle, right behind the two bezels (and this is why using 2 monitors isn’t really useful for gaming), but it actually was smooth and playable.

We apologize for the Swedish version of the chart, but we realized too late that we forgot to make an English version. Basically we see a 20-30% drop in framerate as the number of pixels increases by 80%. Not every game will work with more monitors, but it seems that the HD5870 indeed has enough horsepower to power Eyefinity. Remember that the games were tested at the highest possible quality settings.
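For the curious, the 80% figure can be checked with a few lines of arithmetic. The sketch below assumes the single-monitor baseline was 1920×1200 (the highest single-screen resolution mentioned in our benchmarks), which is what makes the numbers line up. The function names are our own.

```python
def pixels(width, height):
    return width * height


def percent_increase(old, new):
    return (new - old) / old * 100


def relative_pixel_rate(fps_drop_percent, pixel_increase_percent):
    """Pixels drawn per second relative to the single-monitor run (1.0 = same)."""
    return (1 - fps_drop_percent / 100) * (1 + pixel_increase_percent / 100)


single = pixels(1920, 1200)  # assumed single-monitor baseline
dual = pixels(3840, 1080)    # two 1920x1080 monitors as one Display Group

increase = percent_increase(single, dual)
print(f"{increase:.0f}% more pixels")

# Pixel throughput at the two ends of the 20-30% framerate drop we measured.
for drop in (20, 30):
    print(f"{drop}% fewer frames -> {relative_pixel_rate(drop, increase):.2f}x the pixels per second")
```

In other words, even with the lower framerate the card is actually pushing noticeably more pixels per second at the wider resolution, which suggests it is not purely limited by pixel count in these tests.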

A blurry image showing the problems of having just 2 displays.

This is the opening-scene of Crysis

We have ordered a Dell 2410 display which has DisplayPort and will return with a more detailed article on Eyefinity later.

Our Conclusion

Eyefinity is cool but we cannot see it as a “killer”-feature. It feels a bit like NVIDIA’s 3D-glasses; a cool feature that few will ever use.

We do like that AMD continues to move towards supporting “standards” like DirectX, OpenGL and OpenCL, as we would rather see game developers use these APIs for their games than write specifically for one card or the other.

For now the HD5870 is the fastest single-GPU card available and combined with a good price, nice features and a low power consumption we are sure that AMD has a real winner on their hands.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
