We all like kicker SKUs. They are much cheaper than previous generations of products and bring at least one new feature, be it a speed improvement or an architectural change. This is exactly what the PowerColor HD 3850 is about, but on top of that it’s Xtreme.

INTRODUCTION

The last few months were very uncomfortable and stressful for AMD. It seems the $5.4 billion merger with ATI has not done any good so far. Even worse, the current products are inferior to the competition in terms of performance. Because we can’t do a damn thing about it, we have to watch and monitor what happens next. This particular transaction brought a lot of strategic and operational changes to the company. Many wonderfully performing engineers were let go or simply moved elsewhere, which in my opinion created chaos and distraction in the departments. Whatever positive things AMD says about the merger and the wonderful products, they are just trying not to scare away investors and potential OEM customers.

Pardon the above prologue, but it’s necessary to explain why AMD products fall behind NVIDIA and Intel, and that’s across all levels. Price-wise, however, AMD gets the kudos, and that’s certainly an important factor for a lot of customers. Again, AMD needs to calm down, take a few chill pills and let ATI do what they do best: Graphics Processing Units. The coordination between both companies is as important as their isolated actions.

Before we proceed, check out what Björn cooked for you while being in Warsaw.

Going back to reality, I have another graphics card for you to check out. It’s AMD’s second attempt to win you over to their high-end Radeon HD models. The ever wonderful PowerColor, aka Tul, shipped a surprise package to my doorstep with the PowerColor HD 3850 512MB Xtreme inside. I would like to apologize to Raymen and you guys for not releasing the article as planned, but the Leadtek WinFast PX8600 GT SLI article had been in the works.

KEY FEATURES

- DirectX 10.1 support

- Shader Model 4.1

- UVD video decoder

- PCI-Express 2.0 interface

- CrossFireX support

- Factory overclocked

VPU SPECIFICATIONS

Technology-wise, the HD 3800 series doesn’t bring much except for a few little things. First is the 55nm fabrication process, hence the lower price. It’s basically a die shrink from 80nm with the transistor count reduced to 666 million (now that’s weird, eh?). The other thing that sets the RV670 apart from the R600 is the memory controller: the high-end HD 2900 came with a 512-bit memory interface, while the HD 3800 series is equipped with a 256-bit controller and a preserved 512-bit internal ring bus. As far as the rasterizer is concerned, nothing has changed: 16 ROPs, 64 shader processors, 320 stream processors, 16 texture filtering units and 32 Z samples output. Although RV670 now supports the DirectX 10.1 API, I won’t delve into a lot of details here. Basically the updated API comes with a lot of fixes, including antialiasing that may be implemented by game developers themselves. Additionally we will see mandatory 32-bit floating point filtering and Shader Model 4.1 support.

The other thing missing from R600 was the UVD processor, which is part of AVIVO HD technology. Funnily enough, it was only available on HD 2600s and lower-end cards. With the HD 3800 series you will now be able to decode H.264 and VC-1 content directly in hardware. Last but not least is CrossFireX support for more than just two GPUs.

| Video card | PowerColor HD 3850 Xtreme | PowerColor X1950 PRO | PowerColor HD 2600 XT |
|---|---|---|---|
| GPU (256-bit) | RV670 | RV570 | RV630 |
| Process | 55nm (TSMC fab) | 80nm (TSMC fab) | 65nm (TSMC fab) |
| Transistors | ~666 Million | ~330 Million | ~390 Million |
| Memory Architecture | 256-bit | 256-bit | 128-bit |
| Frame Buffer Size | 512 MB GDDR-3 | 256 MB GDDR-3 | 256 MB GDDR-3 |
| Rasterizer | Shader Processors: 64; Stream Processors: 320; Precision: FP32; Texture filtering units: 16; ROPs: 16; Z samples: 32 | Pixel Shader Units: 36 (3 ALUs); Pixel Pipelines: 12; Precision: FP32; Texture filtering units: 12; ROPs: 12; Z samples: 24 | Shader Processors: 24 |
| Bus Type | PCI-e 2.0 16x | PCI-e 16x | PCI-e 16x |
| Core Clock | 720 MHz | 575 MHz | 800 MHz |
| Memory Clock | 1800 MHz DDR3 | 1200 MHz DDR3 | 1400 MHz DDR3 |
| RAMDACs | 2x 400 MHz DACs | 2x 400 MHz DACs | 2x 400 MHz DACs |
| Memory Bandwidth | 57.6 GB / sec | 38.4 GB / sec | 22.4 GB / sec |
| Pixel Fillrate | 11.5 GPixels / sec | 6.9 GPixels / sec | 3.2 GPixels / sec |
| Texture Fillrate | 11.5 GTexels / sec | 6.9 GTexels / sec | 3.2 GTexels / sec |
| DirectX Version | 10.1 | 9.0c | 10 |
| Pixel Shader | 4.1 | 3.0 | 4.0 |
| Vertex Shader | 4.1 | 3.0 | 4.0 |
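The bandwidth and fillrate rows in the table above follow from the other rows by simple arithmetic. The sketch below shows how, assuming the usual peak formulas (bus width in bytes times effective memory clock, and ROP count times core clock). The function names are made up for illustration, and only the cards whose ROP counts appear in the table are included.

```python
def memory_bandwidth_gb_s(bus_width_bits, effective_clock_mhz):
    """Peak memory bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_clock_mhz / 1000


def fillrate_g_per_s(units, core_clock_mhz):
    """Peak fillrate in Gpixels/s or Gtexels/s: unit count times core clock."""
    return units * core_clock_mhz / 1000


# Values copied from the table: (bus bits, effective memory MHz, core MHz, ROPs)
cards = {
    "HD 3850 Xtreme": (256, 1800, 720, 16),
    "X1950 PRO": (256, 1200, 575, 12),
}

for name, (bus, mem_mhz, core_mhz, rops) in cards.items():
    bandwidth = memory_bandwidth_gb_s(bus, mem_mhz)
    pixel_fill = fillrate_g_per_s(rops, core_mhz)
    print(f"{name}: {bandwidth:.1f} GB/s, {pixel_fill:.2f} GPixels/s")
```

For the HD 3850 Xtreme this gives 57.6 GB/s and 11.52 GPixels/s, which the table rounds to 11.5.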

ATI Radeon™ HD 3800 Series – GPU Specifications

- 666 million transistors on 55nm fabrication process

- PCI Express 2.0 x16 bus interface

- 256-bit GDDR3/GDDR4 memory interface

- Ring Bus Memory Controller

- Fully distributed design with 512-bit internal ring bus for memory reads and writes

- Microsoft® DirectX® 10.1 support

- Shader Model 4.1

- 32-bit floating point texture filtering

- Indexed cube map arrays

- Independent blend modes per render target

- Pixel coverage sample masking

- Read/write multi-sample surfaces with shaders

- Gather4 texture fetching

- Unified Superscalar Shader Architecture

- 320 stream processing units

- Dynamic load balancing and resource allocation for vertex, geometry, and pixel shaders

- Common instruction set and texture unit access supported for all types of shaders

- Dedicated branch execution units and texture address processors

- 128-bit floating point precision for all operations

- Command processor for reduced CPU overhead

- Shader instruction and constant caches

- Up to 80 texture fetches per clock cycle

- Up to 128 textures per pixel

- Fully associative multi-level texture cache design

- DXTC and 3Dc+ texture compression

- High resolution texture support (up to 8192 x 8192)

- Fully associative texture Z/stencil cache designs

- Double-sided hierarchical Z/stencil buffer

- Early Z test, Re-Z, Z Range optimization, and Fast Z Clear

- Lossless Z & stencil compression (up to 128:1)

- Lossless color compression (up to 8:1)

- 8 render targets (MRTs) with anti-aliasing support

- Physics processing support

- Dynamic Geometry Acceleration

- High performance vertex cache

- Programmable tessellation unit

- Accelerated geometry shader path for geometry amplification

- Memory read/write cache for improved stream output performance

- Anti-aliasing features

- Multi-sample anti-aliasing (2, 4, or 8 samples per pixel)

- Up to 24x Custom Filter Anti-Aliasing (CFAA) for improved quality

- Adaptive super-sampling and multi-sampling

- Temporal anti-aliasing

- Gamma correct

- Super AA (ATI CrossFire™ configurations only)

- All anti-aliasing features compatible with HDR rendering

- Texture filtering features

- 2x/4x/8x/16x high quality adaptive anisotropic filtering modes (up to 128 taps per pixel)

- 128-bit floating point HDR texture filtering

- Bicubic filtering

- sRGB filtering (gamma/degamma)

- Percentage Closer Filtering (PCF)

- Depth & stencil texture (DST) format support

- Shared exponent HDR (RGBE 9:9:9:5) texture format support

- OpenGL 2.0 support

- ATI Avivo™ HD Video and Display Platform

- Dedicated unified video decoder (UVD) for H.264/AVC and VC-1 video formats

- High definition (HD) playback of both Blu-ray and HD DVD formats

- Hardware MPEG-1, MPEG-2, and DivX video decode acceleration

- Motion compensation and IDCT

- ATI Avivo Video Post Processor

- Color space conversion

- Chroma subsampling format conversion

- Horizontal and vertical scaling

- Gamma correction

- Advanced vector adaptive per-pixel de-interlacing

- De-blocking and noise reduction filtering

- Detail enhancement

- Inverse telecine (2:2 and 3:2 pull-down correction)

- Bad edit correction

- Two independent display controllers

- Drive two displays simultaneously with independent resolutions, refresh rates, color controls and video overlays for each display

- Full 30-bit display processing

- Programmable piecewise linear gamma correction, color correction, and color space conversion

- Spatial/temporal dithering provides 30-bit color quality on 24-bit and 18-bit displays

- High quality pre- and post-scaling engines, with underscan support for all display outputs

- Content-adaptive de-flicker filtering for interlaced displays

- Fast, glitch-free mode switching

- Hardware cursor

- Two integrated dual-link DVI display outputs

- Each supports 18-, 24-, and 30-bit digital displays at all resolutions up to 1920×1200 (single-link DVI) or 2560×1600 (dual-link DVI)

- Each includes a dual-link HDCP encoder with on-chip key storage for high resolution playback of protected content

- Two integrated 400 MHz 30-bit RAMDACs

- Each supports analog displays connected by VGA at all resolutions up to 2048×1536

- DisplayPort output support

- Supports 24- and 30-bit displays at all resolutions up to 2560×1600

- HDMI output support

- Supports all display resolutions up to 1920×1080

- Integrated HD audio controller with multi-channel (5.1) AC3 support, enabling a plug-and-play cable-less audio solution

- Integrated AMD Xilleon™ HDTV encoder

- Provides high quality analog TV output (component/S-video/composite)

- Supports SDTV and HDTV resolutions

- Underscan and overscan compensation

- MPEG-2, MPEG-4, DivX, WMV9, VC-1, and H.264/AVC encoding and transcoding

- Seamless integration of pixel shaders with video in real time

- VGA mode support on all display outputs

- ATI PowerPlay™

- Advanced power management technology for optimal performance and power savings

- Performance-on-Demand

- Constantly monitors GPU activity, dynamically adjusting clocks and voltage based on user scenario

- Clock and memory speed throttling

- Voltage switching

- Dynamic clock gating

- Central thermal management – on-chip sensor monitors GPU temperature and triggers thermal actions as required

- ATI CrossFireX™ Multi-GPU Technology

- Scale up rendering performance and image quality with two, three, or four GPUs

- Integrated compositing engine

- High performance dual channel bridge interconnect

THE CARD

PowerColor was one of the first companies to introduce a non-stock version of the HD 3850. Clocked at 720 MHz (715 MHz actual) core and 1800 MHz DDR3, it comes with a custom cooling system from ZEROtherm. When unpacking, I noticed the HSF was protected with foam pieces to support the sides in case the delivery guy started throwing the package around.

The flip side sports nothing unusual. There are four screws in case you need to take off the cooling system. One interesting bit: the card is now rigged with a PCI Express 2.0 interface (more bandwidth). The back of the card is equipped with an additional 6-pin PCI-e power connector, which is to be expected of higher-end GPUs. The black heat sink in the third picture is there to cool the voltage regulation circuitry. An interesting move from PowerColor was the inclusion of a direct HDMI output on the card’s I/O plate. This solution eliminates the need for a DVI-HDMI dongle.

As mentioned a few lines above, this HD 3850 is fitted with a custom cooling system from ZEROtherm. To summarize: it cools, it glows and it performs. It’s built using copper heat pipes surrounded by fins. In the middle is the fan, which blows the hot air outside, or so it should at least. The design doesn’t appeal to me as the air seems to be circulating instead of dissipating.

The last two pictures show CrossFire support with the ability to run as many as four cards in tandem, but that’s to be determined. The last picture shows the RAM chips being used: SAMSUNG K4J52324QE-BJ1A 1.0ns

BUNDLE

The artwork has changed with the new HD 3800 models. The box is no longer horizontal, which makes the overall look more than average. On the back you’ll find standard information regarding the card, its features and short notes in six languages.

The sides of the box are printed with power and system requirements. As for the bundle, it’s rather standard for PowerColor and includes only the most important things.

- Quick Installation guide

- Set of cables

- CrossFireX bridge

- DVI-VGA adapter

TESTING METHODOLOGY

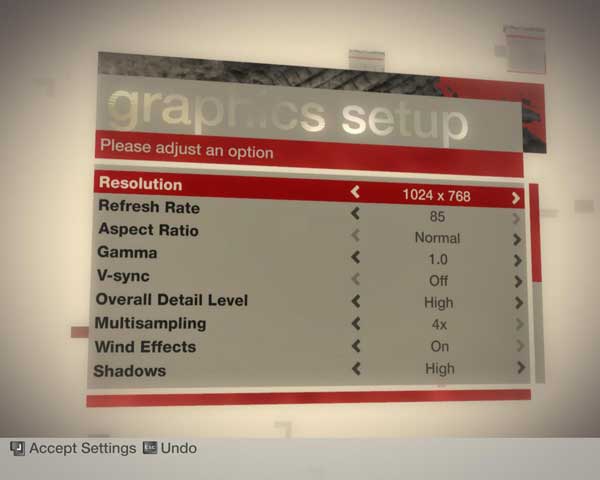

Gaming tests were performed using various image settings depending on game defaults: 0aa0af, 0aa4af, 0aa8af and 4aa8af. This is a semi high-end card so we will use the following resolutions: 1024×768, 1280×1024 and 1600×1200. As far as driver settings are concerned, the card was clocked at 720 MHz core and 1800 MHz DDR3 for memory. High quality AF was used for anisotropic filtering. All other settings were at their default states.
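The setting tags above pack the antialiasing and anisotropic filtering levels into one string, so `4aa8af` means 4x AA with 8x AF. As a rough sketch of how the test runs could be enumerated, the snippet below parses those tags and crosses them with the three resolutions. The helper names are hypothetical, and the full cross product is only an upper bound, since the actual modes used depended on each game’s defaults.

```python
import re
from itertools import product

# Tags and resolutions as listed in the methodology paragraph
MODES = ["0aa0af", "0aa4af", "0aa8af", "4aa8af"]
RESOLUTIONS = [(1024, 768), (1280, 1024), (1600, 1200)]


def parse_mode(tag):
    """Split a tag such as '4aa8af' into (aa_samples, af_level)."""
    match = re.fullmatch(r"(\d+)aa(\d+)af", tag)
    if match is None:
        raise ValueError(f"unrecognized setting tag: {tag!r}")
    return int(match.group(1)), int(match.group(2))


def test_matrix():
    """Every resolution paired with every AA/AF mode (an upper bound)."""
    runs = []
    for (width, height), tag in product(RESOLUTIONS, MODES):
        aa, af = parse_mode(tag)
        runs.append({"width": width, "height": height, "aa": aa, "af": af})
    return runs


for run in test_matrix():
    print(f"{run['width']}x{run['height']} AA {run['aa']}x AF {run['af']}x")
```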

PLATFORM

All of our benchmarks were run on an Intel Core 2 Duo platform clocked at 3.0GHz. Performance of the PowerColor HD 3850 Xtreme was measured using an ASUS P5N-E SLI motherboard. The table below shows the test system configuration as well as the benchmarks used throughout this comparison.

I didn’t experience any issues when installing the HD 3850 from PowerColor. All processes were completed successfully without any side effects. Preliminary testing showed the card to be healthy, i.e. no screen corruption, no random restarts and no overheating.

| Testing Platform | |
|---|---|
| Processor | Intel Core 2 Duo E6600 @ 3.0 GHz |
| Motherboard | ASUS P5N-E SLI |
| Memory | GeIL PC2-6400 DDR2 Ultra 2GB kit |
| Video card(s) | PowerColor HD 3850 Xtreme; Leadtek WinFast PX8600 GT TDH 512MB GDDR2 SLI; PowerColor X1950 PRO |
| Hard drive(s) | Seagate SATA II ST3250620AS; Western Digital WD120JB |
| CPU Cooling | Cooler Master Hyper 212 |
| Power supply | Thermaltake Toughpower 850W |
| Case | Thermaltake SopranoFX |
| Operating System | Windows XP SP2 32-bit |
| API, drivers | DirectX 9.0c; DirectX 10; NVIDIA Forceware 169.04; ATI CATALYST 7.11 BETA |
| Other software | nTune, RivaTuner |

| Benchmarks | |
|---|---|
| Synthetic | 3DMark 2006; D3D Right Mark |
| Games | Bioshock / FRAPS |

3DMark06

The PowerColor HD 3850 does well across all game tests; however, it’s nothing extraordinary when compared to the 8600 GT SLI, especially in GT1/GT2.

RightMark

Geometry processing (vertex processing) is much stronger on HD 3850.

DirectX 9: BIOSHOCK

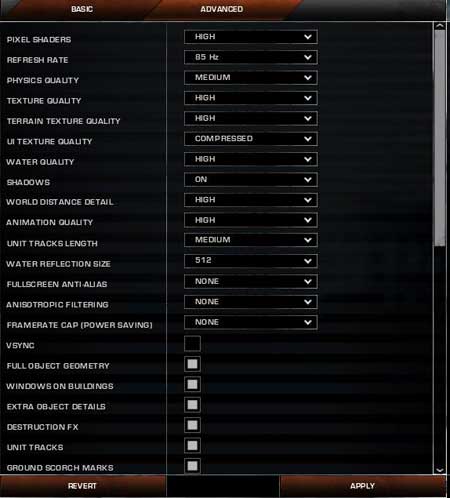

Standard settings were used with texture detail slider notched down to medium, rest at high.

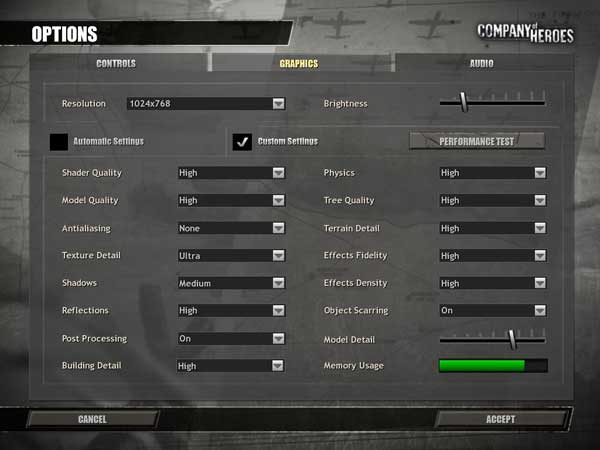

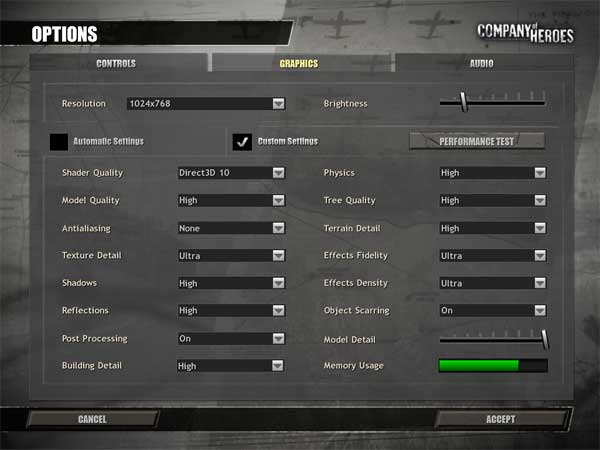

DirectX 9: COMPANY OF HEROES

Most of the settings were set to high; vsync was turned off with the -novsync flag. The PowerColor HD 3850 performed really well, though this game isn’t very graphics-intensive.

DirectX 9: CRYSIS

All tests were run with medium settings and high shader quality (DX9 effects). Because the demo does not support SLI, a single 8600 GT was running instead. It seems the HD 3850 lacks serious driver support for this title. We will conduct more tests when newer drivers are released.

DirectX 9: COLIN MCRAE DIRT

The HD 3850 performed as expected. The older RADEON, the X1950 PRO, holds its own and is on par with the 8600 GT SLI.

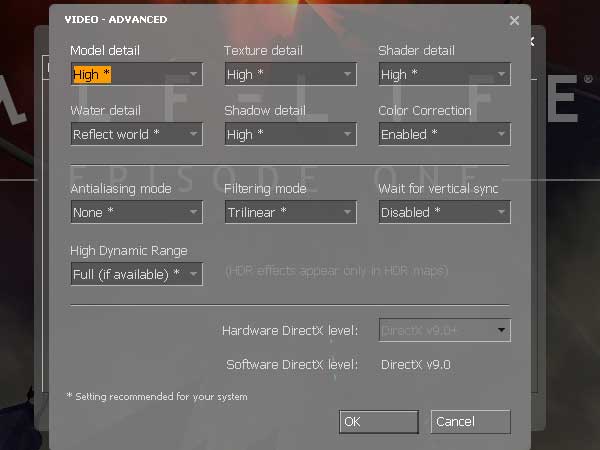

DirectX 9: HALF-LIFE 2 EPISODE ONE

Benchmarks were performed under maximum settings with additional 4AA and 8AF. I might be quite CPU limited in this title at 1024×768 though. The HD 3850 Xtreme and the 8600 GT SLI go head to head while the X1950 PRO trails behind a bit.

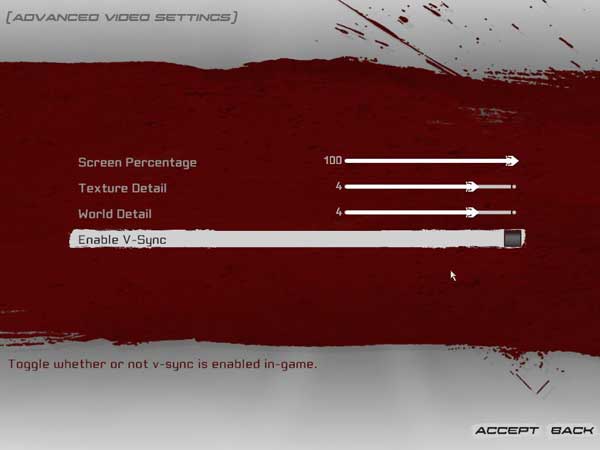

DirectX 9: UNREAL TOURNAMENT 3

The texture and world detail sliders were pushed down to level 4, but that’s still considered high settings. I didn’t bother forcing Antialiasing in this game as it required some .inf hacking. Also note that a frame limiter of 62 FPS was present during the testing.

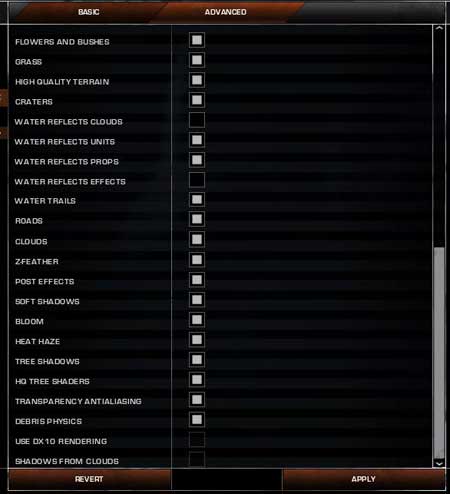

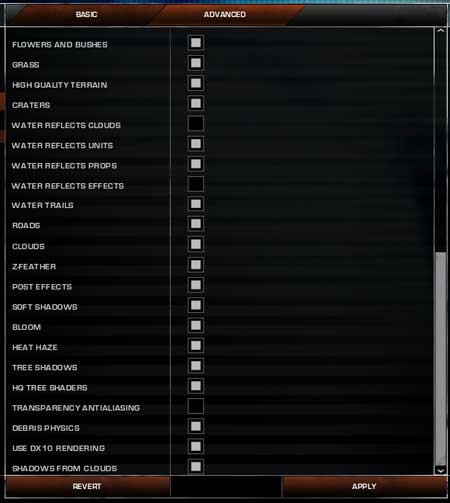

DirectX 9: WORLD IN CONFLICT

As you can see below, there were quite a few settings to play with. In the end, high quality options were chosen. Framebuffer size seems to be the culprit for the X1950 PRO in this title. While the HD 3850 from PowerColor does really well, the Leadtek 8600 GT SLI doesn’t fall far behind, at least not until we turn on AA/AF.

DirectX 10: BIOSHOCK

Standard settings were used with the texture detail slider notched down to medium, the rest at high and DirectX 10 options set to ON. The PowerColor HD 3850 Xtreme is a no-no when it comes to DX10 performance here. The pair of 8600 GTs wins hands down.

DirectX 10: COMPANY OF HEROES

Although this isn’t a very graphics-intensive game, I left most of the settings at high. Note that vsync was turned off with the -novsync flag. Even though the HD 3850 manages to outperform the 8600 GT SLI, it certainly isn’t doing its best.

DirectX 10: CRYSIS

All tests were run with medium settings and very high shader quality (DX10 effects). Because the demo does not support SLI, a single 8600 GT was running instead. Again, the HD 3850 doesn’t seem to be doing a lot of damage to a single 8600 GT. No eye candy here either, as it would require my intervention or, worse, would simply not work.

DirectX 10: UNREAL TOURNAMENT 3

The texture and world detail sliders were pushed down to level 4, but that’s still considered high settings. I didn’t bother forcing Antialiasing in this game as it required some .inf hacking. Also note that a frame limiter of 62 FPS was present during the testing. DirectX 10 effects were automatically turned on.

DirectX 10: WORLD IN CONFLICT

There were quite a few settings to play with in WiC. In the end, high quality options were chosen. This is probably the only DX10 title where the 8600 GT SLI was no match for the HD 3850 in terms of performance. A much appreciated frame rate and great scalability.

POWER & TEMPERATURE

It’s always good to know the amount of power that is needed to run a decent gaming rig these days. Usually refresh products such as the HD 3850 are shrunk to a smaller process, making them less Watt-hungry than their predecessors. It’s pretty simple math if you look at it logically. The better the card performs, the more expensive it is and the more Watts it burns. Simple as that. Obviously this isn’t true when comparing two unlike generations of chips, as they are built using totally different processes.

What we have above is a graph consisting of two different sets of information: temperature (in Celsius) and peak power (in Watts). These are the highest numbers I was able to record, not averages as with frames per second. Even though our PowerColor HD 3850 Xtreme is a rarity in its class (factory overclocked), it does produce acceptable results. In the idle state we get around 122 Watts. That is very low considering it’s an overclocked Core 2 Duo platform with not so shabby equipment. The reported temperature does not go over 37C. Running a game in the background automatically raises the power consumption to over 200 Watts. During overclocking, I didn’t notice massive power fluctuation; the system did not exceed 203W and 47C.

OVERCLOCKING

The fact that the PowerColor HD 3850 Xtreme comes factory overclocked doesn’t mean we can’t take it higher. Being clocked at 720 MHz core and 1800 MHz DDR3 already makes it a preeminent product amongst the competition. We’ve already seen how temperature and power consumption stack up for this card. It’s now time to check out what it can do beyond the already upped clocks.

Using ATITool 0.27 I was able to find the highest clocks for both core and memory. W1zzard’s application allows you to seek those maximum values automatically. Without any hesitation, I launched the application and as usual started testing. The results were there after around forty-five minutes. Our core was able to reach a maximum of 742 MHz while memory ran at 2016 MHz DDR3, all artifact free. Anything beyond these numbers resulted in either screen corruption or random freeze-ups.

Core clock difference (720 MHz stock): 3%

Memory clock difference (1800 MHz stock): 12%
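For reference, the clock differences are plain relative gains over the factory clocks. A minimal sketch, using the stock and maximum stable clocks reported above (the function name is made up for illustration):

```python
def percent_gain(stock_mhz, overclocked_mhz):
    """Relative clock increase over stock, in percent."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100


core_gain = percent_gain(720, 742)      # 22 MHz over a 720 MHz core
memory_gain = percent_gain(1800, 2016)  # 216 MHz over 1800 MHz memory

print(f"Core: +{core_gain:.1f}%")      # Core: +3.1%
print(f"Memory: +{memory_gain:.1f}%")  # Memory: +12.0%
```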

CONCLUSIONS

All wonderful things come to an end, including this article, which is slowly approaching its conclusion. We all like kicker SKUs. They are much cheaper than previous generations of products and bring at least one new feature, be it a speed improvement or an architectural change. This is exactly what the PowerColor HD 3850 is about, but on top of that it’s Xtreme. The seven most important aspects when looking at the HD 3800 series are: price, DirectX 10.1 support, Shader Model 4.1, PCI-e 2.0, UVD, CrossFireX (quad GPU support) and the rasterizer taken from R600.

In terms of performance, well, it’s a mixed bag: the HD 3800 series resembles AMD’s high-end parts while not being able to take the crown away from NVIDIA. As far as DX10 is concerned, I’m disappointed with the overall showing. Drivers should have matured by now, so that argument is off the table. We’ve seen quite a few performance charts where the card simply hiccupped and felt like a mid-range product, namely in Bioshock and Crysis.

Overall I’m satisfied with the product despite the fact that the HD 3800 is late. Not only that, it should have been the sole replacement for the HD 2900 series by now. However, I’m not judging the GPU per se, but the PowerColor model, which in my subjective opinion is simply solid. Although the cooling system might be unforgiving to some (a two-slot solution), it’s a nice addition both design- and performance-wise. The other freebie is the higher clocked core and memory, which a lot of you will enjoy, as well as the not too shabby overclocking potential.

Pros:

+ Factory overclocked

+ DX10.1 / SM 4.1 ready

+ CrossFireX support

+ UVD

+ Low power draw

+ Runs cool & quiet

+ Overclocking potential

+ Direct HDMI output

+ Good price

Cons:

– Questionable DX10 performance

For its great performance / price ratio as well as its factory overclocked clocks, the PowerColor HD 3850 Xtreme receives a rating of 9 out of 10 (Extremely Good) and the Bjorn3D Golden Bear Award.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996