NVIDIA’s roadmap for its GeForce FX family of GPUs will fill every market segment with DirectX 9 hardware. This preview looks at NVIDIA’s line-up and roadmap, and takes a quick first look at NV31 and NV34.

Introduction

Today NVIDIA is announcing the specifications of the entire GeForce FX family line-up at the Game Developers Conference (GDC). As part of this launch, we’ve prepared this preview of the specifications. Along with the announcement, NVIDIA sent us reference versions of NV31 (GeForce FX 5600 Ultra) and NV34 (GeForce FX 5200 Ultra). We’ll be presenting a full performance test of these cards next week. In the meantime, we’ll look at NVIDIA’s roadmap for these new video cards.

Making up the FX line are five GPUs:

- GeForce FX 5800 Ultra
- GeForce FX 5800
- GeForce FX 5600 Ultra
- GeForce FX 5200 Ultra
- GeForce FX 5200

The above are, obviously, listed in decreasing order of performance. The first two, the 5800s, we’ve known about for some time. The latter three comprise today’s big announcement. For today, we’ll discuss the technology differences within this line-up, look at NVIDIA’s roadmap for these cards (and beyond), and take a quick look at the NV31 and NV34 cards that we’ll be benchmarking.

Technology

To aid in discussing the differences between these GPUs, I’ve used NVIDIA’s press package to generate the tables below, which detail the technology behind the GeForce FX GPU. Please note that this is merely a summary. A full review of this technology is available in our earlier GeForce FX Preview. It’s important to reference this information, since NVIDIA has not implemented all of the FX features on every GPU model.

- CineFX Shading Architecture
- High-Performance, High-Precision 3D Rendering Engine
- High-Performance 2D Rendering Engine
- Intellisample Technology
- Advanced Display Pipeline with Full nView Capabilities
- Miscellaneous Specifications

GeForce FX Family

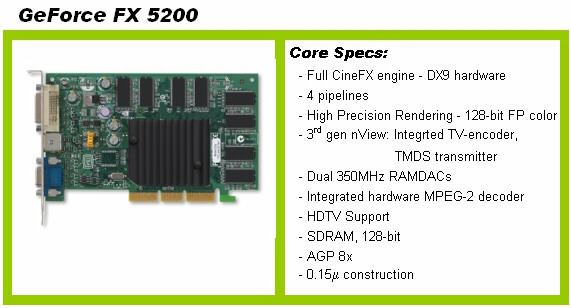

Now, for the individual GPUs, I’ve grouped the like models, where applicable, and listed the core technology NVIDIA has included on each. First and foremost, the best news is that NVIDIA has chosen to include their core CineFX engine (DX9 hardware) on every model! This is a pleasing sight, for more than one reason, as we’ll see on page 2. Also note that core and memory frequencies have not yet been released for these products. Let’s start at the bottom and work up:

The bare-bones FX 5200 looks like every GeForce 2/4 MX that I’ve ever seen. The important technical differences from the other models are the reduced number of pipelines, the lack of Intellisample, and SDRAM. Yes, the 5200 is a DX9 part, but it has half the pipelines of the 5800. However, this is still quite adequate when you consider NVIDIA’s GF3 and GF4 GPUs. Intellisample is NVIDIA’s combination of advanced color compression, dynamic gamma correction, and adaptive texture filtering, and it includes NVIDIA’s latest antialiasing modes: 6xS and 8x. At first, I didn’t like the fact that Intellisample didn’t make it onto the 5200s, but then I thought about it. Due to the reduced core and memory speeds on these products, I doubt the GPU has enough horsepower to make Intellisample worthwhile.

As for SDRAM: since this is NVIDIA’s entry-level FX, SDRAM is a great way to cut costs. I can’t blame them for making the base 5200 in this configuration. Also note that the 5200 appears to be the only card that comes in the standard AGP card size. Passive cooling appears to be a real option for this model as well.

Of lesser importance are the 350MHz RAMDACs vs. the 400MHz versions on the 5600 Ultra and 5800s. For day-to-day use and gaming, 350MHz is more than adequate. Using these also helps save on production costs, which is likely why 0.15 micron construction was used as well.

What I like about the 5200 is the entry-level cost of a true DX9 part, the first of its kind. It also has the now-standard DVI-I, VGA, and S-video ports.

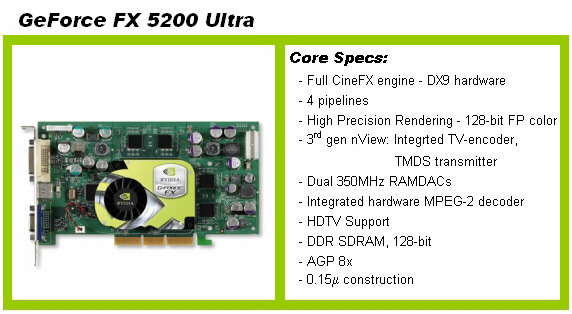

The 5200 Ultra steps up the performance a notch with DDR SDRAM. Note the small white plastic block at the top right of the card. This is an external power connector for a 4-pin Molex connection. Note that this video card will function without the additional power connected: NVIDIA has these GPUs hardwired to reduce the core and memory speeds to a level the AGP-supplied power can sustain. In reality, this should be a non-issue; just plug in the power cord. This is in sharp contrast to the Radeon 9700, which gives a few beeps and a splash screen on boot-up, reminding you that the external power is required. If a spare power connector is unavailable, at least you can get up and running until you can scare up a splitter cable. This external power connector is standard on the 5200 Ultra and above.

Beyond the DDR-SDRAM upgrade, this card will likely have the same or slightly greater core speed than the regular 5200. The memory frequency should be a nice boost over the base 5200.

HDTV support: this, you’ll note, is included in all models. We have a few questions in to NVIDIA concerning this and a few other things. I’m unsure what they specifically mean by HDTV support. It would seem to me that true HDTV support would require the GPU to output native HDTV resolutions like 1080i and 720p. We’ll update here when we get a clarification from NVIDIA.

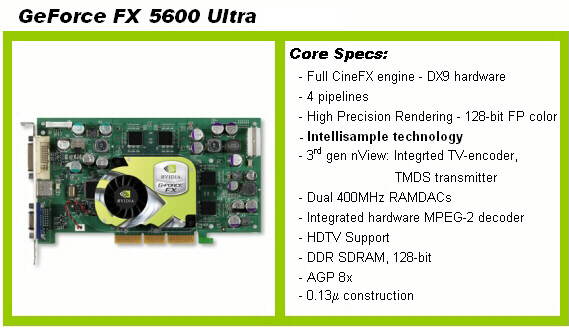

The funny thing here is that the picture for the 5600 Ultra is exactly the same as that for the 5200 Ultra. Now that we have our reference cards in hand, we can confirm the pictures don’t lie: the construction of these two cards is exactly the same.

As I’ve highlighted (bold) in the image above, the 5600 Ultra gets the Intellisample technology, now having enough fill rate to make it worthwhile. 400MHz RAMDACs arrive in this higher-line card, as does 0.13 micron construction. Unfortunately, we’re still only seeing 4 pipelines and no availability of the faster DDRII. But, again, this all comes down to how these devices are marketed. The specs provided fit the Performance market segment established for these NV31 products.

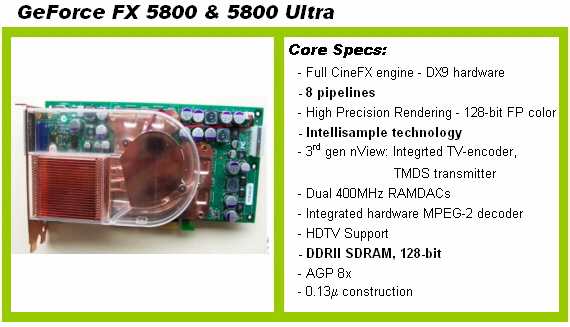

No new images of the 5800 were provided, so this image was stolen from our GeForce FX preview. The external power adapter is shown at top right, but otherwise, the configuration of this card varies from the 5600 Ultra and lower. However, this could simply be due to the fact this picture is of an older reference card. We know that the cooling solution has evolved since these original pictures. With the 5800 and 5800 Ultra, we’re seeing DDRII memory and the choicest core speed GPUs. And, the full 8 pipelines are included on this 125M transistor die.

On to page 2, with NVIDIA’s roadmap, NVIDIA’s MSRPs for these GPUs, and a look at the NV31 and NV34 cards.

GeForce FX Pricing

| GPU | Retail ASP |
| --- | --- |
| GeForce FX 5800 Ultra | $399 |
| GeForce FX 5800 | $299 |
| GeForce FX 5600 Ultra | $199 |
| GeForce FX 5200 Ultra | $149 |
| GeForce FX 5200 | $99 |
| GeForce 4 MX440 | $79 |

NVIDIA suggests these prices as actual retail sale prices. As we all know, typical on-line retailers will offer significant discounts from these prices. Unfortunately, current pricing on the 5800 Ultra is sporadic, and often above the $399 list price. However, I’d expect the 5800 non-Ultra and 5600 Ultra to see better street pricing in the short term, since these would seem to me to be the performance system builder’s bang-for-the-buck cards, while the extremely low pricing on the 5200s should garner the attention of the budget-minded system builder. NVIDIA included their GF4 MX440 as the “Value” market segment, replacing the old TNT2 Vanta, which has been on NVIDIA’s list for far too long. Here the ASP of $79 for the MX440 is way high, since street pricing is as low as $40 for an MX440.

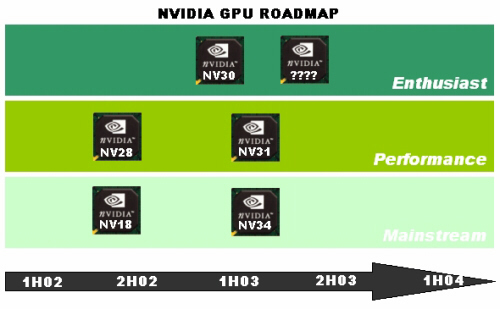

NVIDIA’s Roadmap

The reference NV31 and NV34 look more or less identical. They are the spitting image of the reference photographs, except for a chrome fan cover in place of the painted NVIDIA logo that we’re used to seeing on reference cards. The NV34 (GeForce FX 5200 Ultra) came at stock clock speeds of 325MHz core and 650MHz memory. The memory actually appears to be 2.5ns DDR, so overclocking tests will be in order. Scott has the NV31 for testing. Its default core and memory frequencies are 350MHz and 700MHz, respectively. I was surprised to see such a small difference in core and memory speeds between these products. However, Scott has already run some surprising overclocks on the NV31, all of which will be revealed in our upcoming benchmark of these two cards. All that I’ll say right now is that the NV31 will be compared to a BFG GeForce4 Ti4600, and the NV34 will be compared to a GeForce4 MX460 and a GeForce4 Ti4800SE.
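To put the reference clocks in perspective, here is a rough back-of-the-envelope sketch. It rests on several assumptions that are mine, not NVIDIA’s: that each pipeline outputs one pixel per clock, that the quoted 650MHz and 700MHz memory figures are effective DDR rates, and that the 2.5ns marking on the NV34’s memory is its minimum clock period. The function and variable names are purely illustrative.

```python
# Back-of-the-envelope figures from the reference clocks quoted in this preview.
# Assumptions (not confirmed by NVIDIA): one pixel per pipeline per clock,
# quoted memory speeds are effective DDR rates, and the "ns" rating is the
# memory chip's minimum clock period.

def peak_fill_rate_mpixels(pipelines: int, core_mhz: float) -> float:
    """Theoretical peak pixel fill rate in Mpixels/s."""
    return pipelines * core_mhz

def rated_ddr_effective_mhz(cycle_ns: float) -> float:
    """Effective DDR data rate (MHz) implied by a cycle-time rating."""
    clock_mhz = 1000.0 / cycle_ns  # period in ns -> frequency in MHz
    return 2 * clock_mhz           # DDR transfers data twice per clock

cards = {
    "NV34 (FX 5200 Ultra)": {"pipelines": 4, "core_mhz": 325, "mem_mhz": 650},
    "NV31 (FX 5600 Ultra)": {"pipelines": 4, "core_mhz": 350, "mem_mhz": 700},
}

# Theoretical fill rate for each reference card.
fill = {
    name: peak_fill_rate_mpixels(c["pipelines"], c["core_mhz"])
    for name, c in cards.items()
}

# How far apart the two cards are on paper, in percent.
fill_gap_pct = (
    fill["NV31 (FX 5600 Ultra)"] / fill["NV34 (FX 5200 Ultra)"] - 1
) * 100

# Memory headroom on the NV34 if its chips really are 2.5ns parts.
nv34_rated_mhz = rated_ddr_effective_mhz(2.5)
nv34_headroom_mhz = nv34_rated_mhz - cards["NV34 (FX 5200 Ultra)"]["mem_mhz"]

for name, rate in fill.items():
    print(f"{name}: {rate:.0f} Mpixels/s theoretical peak")
print(f"Fill rate gap between the two cards: {fill_gap_pct:.1f}%")
print(f"NV34 memory rated for {nv34_rated_mhz:.0f}MHz effective, "
      f"{nv34_headroom_mhz:.0f}MHz above stock")
```

On these assumptions, the two cards sit within a few percent of each other on paper, and the NV34’s memory is running well under its rating, which is why overclocking looks promising. Real-world results depend on far more than these two numbers, so treat this as a sanity check rather than a prediction.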

Conclusions

DX9 hardware on every GeForce FX, coupled with NVIDIA’s aggressive pricing, makes the GeForce FX family of GPUs a winner in all market segments. From the $99 GeForce FX 5200 to the $399 GeForce FX 5800 Ultra, and with its solid drivers and CineFX engine, NVIDIA is poised to get every AGP-enabled computer owner running current and future games. Look back here next week for our detailed review of the NV31 and NV34.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
