NV30 is GeForce FX

Today NVIDIA is announcing NV30. This greatly anticipated DirectX9 Graphics Processing Unit (GPU), the first to include technology acquired from 3DFX, is finally being released under the name GeForce FX. Bjorn3D was lucky enough to receive a press package from NVIDIA covering the features of this new GPU in detail. In this preview, we’ll look at the extensive features of this next-generation product and take a quick glance at the card.

The GeForce “FX” name was purposely chosen for two reasons:

1. The GeForce FX takes great steps toward bringing cinema-style effects, previously seen only on the big screen, to the home user.

2. The second reason, which may not have jumped out at you upon seeing the name, is that “FX” is taken from “3DFX”. As I mentioned in the introduction, NVIDIA has been working long and hard on integrating the wealth of knowledge (and patents) it acquired from 3DFX.

Technical Highlights

First, let’s look at the features of this new GPU before we look at the details of the video cards that are soon to come.

GeForce FX was designed from the ground up to encompass and surpass Microsoft’s DirectX9 specification. The core change from DX8 to DX9 is increased programmability: more instructions are available to the programmer, and the programming model is much more flexible, making effects easier to implement. The changes are concentrated in the pixel shaders and vertex shaders.

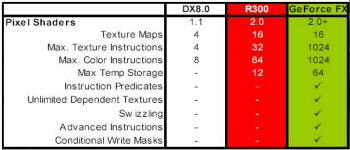

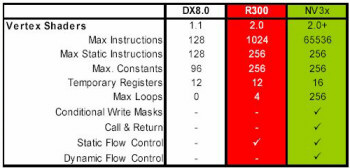

NVIDIA provided this table, which illustrates the changes in the pixel shader and vertex shader specifications. The maximum number of instructions has doubled, and the number of constant registers has more or less tripled. Also notice that NVIDIA has used the extra die real estate to support thousands of instructions and constants, calling it Version 2.0+ (more on this in the CineFX discussion below).

It is generally agreed that the DX8 pixel shaders and vertex shaders were “configurable” rather than truly “programmable.” DX9 brings a whole new level of GPU programmability, and in fact relies on programmers making use of these instructions in order to reach its potential.

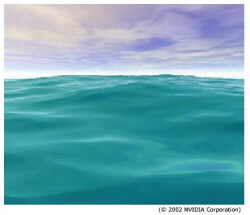

The key benefit of the Pixel Shader 2.0 feature set is that it can execute arbitrary math that the programmer would otherwise have had to leave to the CPU. The above picture shows how the pixel shaders can be programmed to vary the color of a surface, at the pixel level, based on the location of the light source, and this is just the tip of the iceberg. Procedural shading can be programmed in a similar manner, which can largely do away with the problems associated with mapping a 2D texture onto a 3D surface: rather than sampling a stored image, the pixel shader generates the detailed texture by simply following the rules established by the program.
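To make “varying the color of a surface based on the light location” concrete, here is a minimal CPU sketch of the math a pixel shader might run once per pixel. It assumes a simple Lambertian (N·L) diffuse model and uses hypothetical helper names (`normalize`, `lambert_pixel`); it is not NVIDIA’s demo shader, just an illustration of per-pixel lighting.

```python
import math

def normalize(v):
    """Scale a 3-component vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert_pixel(surface_pos, normal, light_pos, base_color):
    """Return the lit RGB color of one pixel using N.L diffuse lighting."""
    # Direction from the surface point toward the light source.
    to_light = normalize(tuple(l - p for l, p in zip(light_pos, surface_pos)))
    n = normalize(normal)
    # Surfaces facing away from the light receive no diffuse light.
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, to_light)))
    return tuple(c * n_dot_l for c in base_color)

# Shade a row of pixels on a flat floor (normal +Y) with the light directly above pixel 0.
light = (0.0, 1.0, 0.0)
row = [lambert_pixel((x * 0.5, 0.0, 0.0), (0.0, 1.0, 0.0), light, (1.0, 0.8, 0.6))
       for x in range(4)]
```

Because the calculation runs per pixel rather than per vertex, the brightness falls off smoothly across the row as the angle to the light grows.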

A similar tale is told by the new Vertex Shader 2.0 specification. With the extra instructions and constants available, programmers can generate much more complex scenes, because longer programs now fit in hardware.

A nice example of the combined power of Vertex Shader 2.0 and Pixel Shader 2.0 is the wave example. The programmer can have the vertex shader dynamically model the wave surface, while the pixel shader instructions determine, on a per-pixel level, how light reflects off of and penetrates the waves, which creates the texture on the wave surface.
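The vertex half of the wave example can be sketched as a function that moves each vertex of a flat grid onto a travelling sine wave and computes the tilted surface normal that the pixel stage would then light. This is a simplified illustration assuming a single sine wave along x; the function name and the `amplitude`, `wavelength`, and `speed` parameters are hypothetical, not taken from NVIDIA’s demo.

```python
import math

def displace_vertex(x, z, t, amplitude=0.2, wavelength=2.0, speed=1.0):
    """Move a flat-grid vertex onto a travelling sine wave; return (position, normal)."""
    k = 2.0 * math.pi / wavelength          # wave number
    phase = k * (x - speed * t)             # wave moves along +x over time t
    y = amplitude * math.sin(phase)
    # Slope of the surface along x; the normal tilts against the slope.
    dydx = amplitude * k * math.cos(phase)
    length = math.sqrt(dydx * dydx + 1.0)
    normal = (-dydx / length, 1.0 / length, 0.0)
    return (x, y, z), normal

# Animate one row of vertices at time t = 0.
row = [displace_vertex(x * 0.25, 0.0, 0.0) for x in range(9)]
```

Running this per vertex, per frame, on the GPU is what frees the CPU from updating the mesh itself.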

Also of importance in Vertex Shader 2.0 is the inclusion of flow control. Flow control lets a program branch, loop, and call subroutines instead of always running the same straight-line sequence of instructions. This is necessary to automate the construction of the near-cinematic effects that are claimed to be possible.
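As a rough illustration of why flow control matters, the sketch below lights a vertex with a loop whose trip count depends on how many lights are in the scene, plus a branch that skips lights too far away to contribute. It is written in Python for readability, not shader assembly; the function name, the `(position, radius)` light format, and the linear falloff are all assumptions made for the example.

```python
import math

def light_vertex(position, normal, lights, ambient=0.1):
    """Accumulate diffuse light at a vertex from a variable-length list of point lights."""
    total = ambient
    for light_pos, radius in lights:            # loop count set by the number of lights
        offset = [l - p for l, p in zip(light_pos, position)]
        dist = math.sqrt(sum(c * c for c in offset))
        if dist == 0.0 or dist > radius:        # branch: skip lights that cannot contribute
            continue
        n_dot_l = max(0.0, sum(n * c / dist for n, c in zip(normal, offset)))
        total += n_dot_l * (1.0 - dist / radius)  # linear falloff to zero at the radius
    return min(total, 1.0)
```

Without loops and branches, a shader writer would need a separate straight-line program for every light count, which is exactly the kind of bookkeeping flow control removes.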

Next, we’ll look at NVIDIA’s answer to DirectX9: CineFX.

CineFX

NVIDIA tackles these DirectX9 features with its CineFX engine. The CineFX engine pushes the programmer’s control down to the pixel level, in hardware, reducing the CPU bottleneck. The limitations of triangles and 2D texture mapping can be overcome by the programmability of this engine. Beyond Pixel Shader 2.0 and Vertex Shader 2.0 compliance, NVIDIA has enhanced CineFX with additional capabilities for the graphics programmer:

· Variable/switchable precision modes up to true 128-bit color

GeForceFX offers both 16-bit and 32-bit floating point formats per color component; with four components (red, green, blue, and alpha), FP32 amounts to 128-bit color. This is equivalent to the precision used in the movie industry for animated feature film development. The GeForceFX is 32-bit from start to finish, including the Z. The developer is also free to switch between these modes within a single application, so the programmer can use only as much precision as the rendered scene needs and minimize any performance slowdown. Higher precision becomes necessary, however, when advanced features like fog and bump mapping are used. Without FP32, error builds up during successive computations and can show up as artifacts such as banding and flawed specular highlights.
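The error build-up described above can be demonstrated on the CPU. This sketch assumes that rounding a running total to 16-bit or 32-bit floats after every step is a fair stand-in for a shader keeping intermediates in FP16 or FP32 registers; it uses Python’s `struct` half-precision (`'e'`) and single-precision (`'f'`) formats, and the helper names are hypothetical. It is not how the GeForceFX itself rounds internally.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack('<e', struct.pack('<e', x))[0]

def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack('<f', struct.pack('<f', x))[0]

def accumulate(step, count, rounder):
    """Add `step` to a running total `count` times, rounding after every add."""
    total = 0.0
    for _ in range(count):
        total = rounder(total + step)
    return total

# 128-bit color: four channels (R, G, B, A) of 32 bits each.
bits_per_pixel_fp32 = 4 * 32
bits_per_pixel_fp16 = 4 * 16

# Ideally both sums reach 1.0; the FP16 total drifts noticeably short of it.
fp16_sum = accumulate(0.001, 1000, to_fp16)
fp32_sum = accumulate(0.001, 1000, to_fp32)
```

Each FP16 rounding loses only a sliver, but over many dependent operations the shortfall grows large enough to become visible, which is the argument for having FP32 available when a shader needs it.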

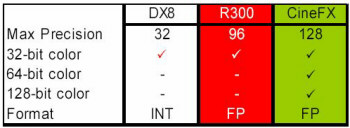

This small table compares precision across the DirectX 8 specification, the Radeon 9700 core, and the CineFX engine.

· Considerably longer shader programs can be written. Up to 1,024 instructions can be programmed for the pixel shaders, vs. DX8’s eight.

· Branching and looping within the program are dynamic and run in hardware, providing enhanced control flow that greatly increases the amount of computation available for each vertex and pixel. CineFX can execute up to 65,536 vertex instructions at each vertex.

· Finally, NVIDIA’s Cg graphics language allows the programming to be implemented quickly. For a full discussion of the power behind NVIDIA’s Cg compiler, see our Cg review. More information on Cg can also be found at: http://www.cgshaders.org/

For comparison’s sake, NVIDIA provided this diagram illustrating the number of instructions in the different DirectX specifications. A simple graph can say a thousand words. Obviously, no programmable instructions were available prior to DX8. The R300 (ATI Radeon 9700) apparently sits right at the DX9 spec. On paper, then, we see a DirectX9 GPU far, far beyond what ATI released earlier this year. But we must note that judging DirectX9 performance will have to wait until DX9 games hit the shelves (and until DX9 itself is publicly released!). The GeForceFX is, of course, backwards compatible with DX8 and fully OpenGL 1.4 compliant.

Intellisample Technology

Intellisample is NVIDIA’s patented collection of features intended to boost the picture quality of the GeForceFX GPU to near-cinematic levels. Unlike the previous features, Intellisample is entirely NVIDIA’s own design and not a derivative of the AGP 3.0 specification. Intellisample seemingly takes the place of Accuview. The features included in Intellisample technology are:

· Color Compression

The GeForceFX family of processors is set up to operate on color data in a 4:1 compression mode. NVIDIA states that the compression is completely lossless and seamless with other operations.

· Dynamic Gamma Correction

NVIDIA has included gamma correction capabilities right in the shader engines. This is a step forward from many other graphics engines, where true gamma correction was left out of the process altogether, resulting in color errors.
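A quick way to see the color error that missing gamma correction causes is to blend black and white. NVIDIA has not detailed its implementation, so this sketch assumes the standard sRGB transfer curve; the function names are hypothetical. Averaging the gamma-encoded values directly gives 0.5, which corresponds to only about 21% of white’s linear intensity, whereas averaging in linear light and re-encoding gives the perceptually correct mid-tone.

```python
import math

def srgb_encode(linear):
    """Linear light (0..1) -> gamma-encoded sRGB value (0..1)."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * math.pow(linear, 1.0 / 2.4) - 0.055

def srgb_decode(encoded):
    """Gamma-encoded sRGB value (0..1) -> linear light (0..1)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return math.pow((encoded + 0.055) / 1.055, 2.4)

black, white = 0.0, 1.0   # both stored as gamma-encoded values

# Naive: average the encoded values directly (no gamma correction).
blend_naive = (black + white) / 2

# Gamma-correct: decode to linear light, average, then re-encode for display.
blend_correct = srgb_encode((srgb_decode(black) + srgb_decode(white)) / 2)
```

Doing the decode/encode inside the shader pipeline keeps blending, filtering, and lighting math in linear space, where it belongs.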

· Adaptive Texture Filtering

GeForceFX is capable of changing the algorithms used in calculating trilinear and anisotropic filtering to less demanding routines in order to ensure reasonable frame rates for the end user.

· New Antialiasing Modes

Available either through the game/application engine or through NVIDIA’s control panel, the new 6xS and 8x antialiasing modes promise a new level of picture quality.

General Specifications

We’ve talked about the core features of the card, mainly revolving around the DirectX9 specification. Now let’s talk about the remaining, more traditional features of interest:

| | GeForce4 Ti4600 | Radeon 9700 | GeforceFX |
|---|---|---|---|
| Transistor Count | 63 million | 110 million | 125 million |
| Process | 0.15 micron | 0.15 micron | 0.13 micron |
| Core/Mem Freq. (MHz) | 300/650 | 300/600 | 500/1000 |
| Memory Type | 128-bit DDR | 256-bit DDR | 128-bit DDR II |
| DirectX | 8 | 9 | 9 |
| AGP Bus | 4x | 8x | 8x |

The Radeon 9700 packed 110 million transistors onto a 0.15 micron process, and that’s a lot. Luckily for everyone, 0.13 micron has been around long enough to become cost-effective. The new GeForceFX takes advantage of the die shrink and uses 100% copper interconnects, which NVIDIA claims use 36% less power than the older aluminum construction. But it seemingly still needs some serious cooling, as we’ll see below. The die shrink also ushers in higher core frequencies: the GeForceFX GPU will operate at 500 MHz.

The GeForceFX is the first to bite into the new DDR II SDRAM. The promoted model runs its RAM at 500 MHz, double-pumped to an effective 1.0 GHz, using 2.0 ns DDR II.
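The memory figures above translate directly into peak bandwidth: bytes per transfer times transfers per second. The sketch below works through that arithmetic using the 128-bit bus widths and memory clocks from the table; the function name is hypothetical, and it assumes decimal gigabytes (10^9 bytes). These are theoretical peaks, not measured throughput.

```python
def memory_bandwidth_gb_s(bus_width_bits, effective_mhz):
    """Peak bandwidth in GB/s = bytes per transfer x transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_mhz * 1e6 / 1e9

clock_mhz = 1000 / 2.0          # a 2.0 ns cycle time corresponds to 500 MHz
effective_mhz = clock_mhz * 2   # DDR: two transfers per clock, 1000 MHz effective

gf_fx = memory_bandwidth_gb_s(128, effective_mhz)   # GeForceFX: 16 GB/s
ti4600 = memory_bandwidth_gb_s(128, 650)            # GeForce4 Ti4600: 10.4 GB/s
```

In other words, double-pumping 2.0 ns DDR II on a 128-bit bus gives the GeForceFX roughly 54% more raw bandwidth than the Ti4600.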

Of course, AGP 8x is the latest standard for the AGP bus. Intel’s Accelerated Graphics Port has received a boost with the new AGP 3.0 specification, and AGP 8x can theoretically transfer 2.1 GB/second. This is double the AGP 4x rate (hence the doubled multiplier in the name). The new AGP 3.0 spec is also backwards compatible with the older AGP 2.0 spec, in that both AGP 2x and AGP 4x are supported. As we’ve seen in many online reviews and here at Bjorn3D, AGP 8x doesn’t seem to give much of a performance boost over AGP 4x. Considering the drastic improvement in the core features and the high operating frequencies of the GeForceFX, we’ll have to investigate the effects of AGP 8x on this card. Of any card on the market, the GeForceFX appears the most capable of using the added bandwidth.
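The 2.1 GB/second figure follows from AGP’s 32-bit bus and its roughly 66.67 MHz base clock, multiplied by the number of transfers per clock (4 for AGP 4x, 8 for AGP 8x). This small sketch, with hypothetical names and decimal gigabytes, reproduces the arithmetic.

```python
AGP_CLOCK_HZ = 200e6 / 3   # AGP base clock, about 66.67 MHz
AGP_BUS_BYTES = 4          # 32-bit wide bus

def agp_bandwidth_gb_s(multiplier):
    """Peak AGP transfer rate: bus width x base clock x transfers per clock."""
    return AGP_BUS_BYTES * AGP_CLOCK_HZ * multiplier / 1e9

agp4x = agp_bandwidth_gb_s(4)   # roughly 1.07 GB/s
agp8x = agp_bandwidth_gb_s(8)   # roughly 2.13 GB/s
```

Even at AGP 8x, the bus delivers only a fraction of the card’s local memory bandwidth, which helps explain why the faster bus has shown so little benefit so far.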

Also of note, the GeForceFX can push 8 pixels per clock cycle. This is similar to ATI’s R300, but for both cards the true performance will come from the core and memory frequencies and from the advanced DX9 features (CineFX for NV30). NVIDIA also stated that the GeForceFX is compatible with Linux, Windows, Mac OS X, and Xbox. I found it rather interesting that Xbox was intentionally added to the list. Perhaps an Xbox 2 is in the works?
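Pixels per clock times core frequency gives the theoretical peak fill rate. The sketch below applies it to the GeForceFX’s stated 8 pixels per clock at 500 MHz, then estimates a fill-rate-only frame rate ceiling at 1600x1200. The overdraw factor of 3 is purely an assumed figure, the function names are hypothetical, and real games will also be limited by memory bandwidth, geometry, and the CPU.

```python
def fill_rate_mpix(pixels_per_clock, core_mhz):
    """Theoretical peak fill rate in megapixels per second."""
    return pixels_per_clock * core_mhz

def max_fps(fill_mpix, width, height, overdraw):
    """Upper bound on frame rate if fill rate were the only limit."""
    pixels_per_frame = width * height * overdraw
    return fill_mpix * 1e6 / pixels_per_frame

gf_fx_fill = fill_rate_mpix(8, 500)             # 4000 megapixels per second
ceiling = max_fps(gf_fx_fill, 1600, 1200, 3)    # overdraw of 3 is an assumption
```

Since the R300 also processes 8 pixels per clock, this arithmetic shows why clock speed, not pipeline count, separates the two chips on paper.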

The Card

Bjorn3D hasn’t received a GeForceFX to test yet. The above picture is the best that we have to look at. The first thing you’ll notice is the reference ducted fan, which vents out through the adjacent PCI slot. YES, unfortunately, the first PCI slot is lost. But, to be honest, it’s being used to duct cooler outside air directly to the GPU, and I can’t complain about that. If it were for extra I/O jacks, I’d have been upset. The fan is located over the GPU, and the cooler appears to use heat pipes: the copper plate conducts heat to the heatsink via the two tubes that can be seen between the fan and the sink. The small dents to the right of (and above) the fan are notched down to cover and cool the memory modules. I imagine that memory is on both sides of the card, so I’m not sure what the cooling situation is on the back side. We can certainly expect the standard array of outputs on the GeForceFX: VGA, DVI, and S-video for VIVO.

No benchmarks were provided to us. However, some generalized information suggests that we’ll see a healthy increase in performance with GeForceFX:

- Approx. 3x the geometry performance of the GeForce4 Ti 4600.
- A significantly faster core and DDR II RAM running at 1 GHz promise a drastic performance increase in sub-DX9 games.
- Advanced, high-precision floating point color.
- DX9 Vertex Shaders 2.0+ and Pixel Shaders 2.0+.
- Intellisample picture quality.
- The return of NVIDIA’s multi-display technology and Digital Vibrance Control.

All of these features certainly seem to fit the mold that NVIDIA has created for near-cinematic quality PC video, and they should also see great use in the video game world. We’ve waited a long time for NV30 to deliver, and on paper, at least, the GeForceFX certainly has!

Bjorn3D looks forward to getting its hands on a GeForce FX for some in-depth, hands on testing. Look back here for a full review in the coming weeks.

Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
