Kepler is finally here. Check out how we push the new GeForce GTX 680 300MHz above its stock core clock speed and 900MHz above its stock memory frequency.

Nvidia GeForce GTX 680

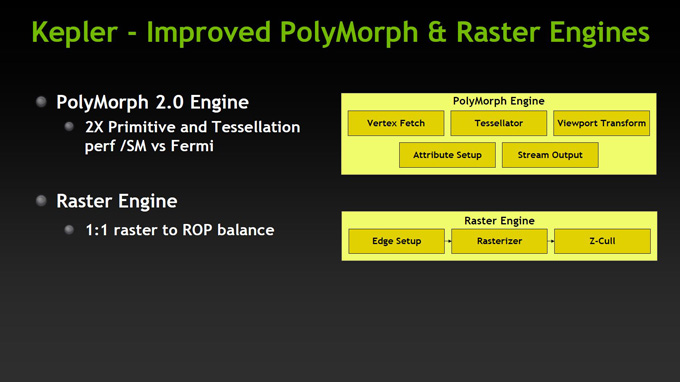

What we have here today is a GPU that improves on what it does best (tessellation) and adds features that were missing from previous generation cards.

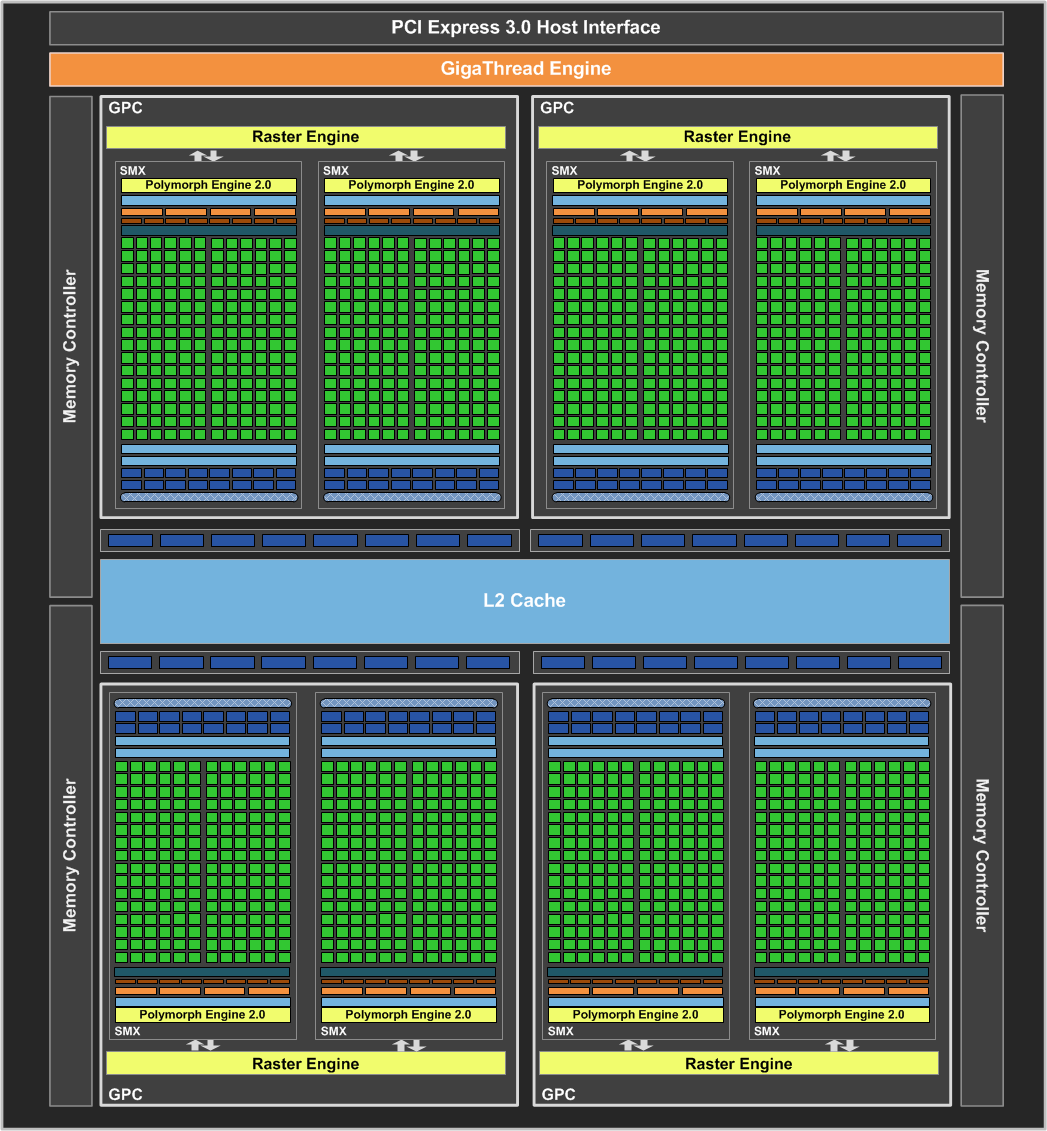

The GK104 Kepler Architecture

On the technical side, Kepler is a completely new architecture, which means a completely new chip. Nvidia's engineers went back to the drawing board and redesigned nearly all of the GPU's main components, working out problems from the previous architecture.

Kepler comes with:

- 8 SMXs

- 1536 CUDA cores

- 8 Geometry units

- 4 Raster units

- 128 Texture units

- 32 ROP units

- 256-bit GDDR5 6Gbps memory.

While Kepler still only has four GPCs, each GPC actually contains two SMX units inside. A single raster engine is shared by the two SMX units. At the SMX level, we have one PolyMorph Engine, 192 CUDA cores, 32 load/store units, 32 special function units, 16 texture units, 4 warp schedulers with 2 dispatch units each, and 64KB shared memory/L1 cache.
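As a quick consistency check, the per-SMX counts above can be scaled up to the chip totals in the list. This sketch assumes nothing beyond the figures in this section (4 GPCs, 2 SMX per GPC, one raster engine per GPC); ROP units are left out because they sit outside the SMX. The dictionary and function names are illustrative only.

```python
# Per-SMX resources for GK104, as listed in the paragraph above.
SMX_RESOURCES = {
    "cuda_cores": 192,
    "load_store_units": 32,
    "special_function_units": 32,
    "texture_units": 16,
    "warp_schedulers": 4,
    "dispatch_units": 4 * 2,  # 2 dispatch units per warp scheduler
    "polymorph_engines": 1,   # the "geometry units" in the list above
}

GPCS = 4            # Graphics Processing Clusters on GK104
SMX_PER_GPC = 2     # each GPC holds two SMX units
RASTER_PER_GPC = 1  # one raster engine shared by the two SMX units


def chip_totals(per_smx, gpcs, smx_per_gpc):
    """Scale per-SMX counts up to whole-chip totals."""
    smx_count = gpcs * smx_per_gpc
    totals = {name: count * smx_count for name, count in per_smx.items()}
    totals["smx"] = smx_count
    totals["raster_engines"] = gpcs * RASTER_PER_GPC
    return totals


totals = chip_totals(SMX_RESOURCES, GPCS, SMX_PER_GPC)
print(totals["cuda_cores"], totals["texture_units"], totals["polymorph_engines"])
# → 1536 128 8
```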

| Numbers per GPC | Kepler (GK104) | Fermi (GF110) |
|---|---|---|
| GPCs (whole chip) | 4 | 4 |
| SM/SMX | 2 | 4 |
| CUDA Cores | 192×2 | 32×4 |
| Load/store units | 32×2 | 16×4 |
| Special function units | 32×2 | 4×4 |
| Texture units | 16×2 | 4×4 |
| Warp schedulers | 4×2 | 2×4 |
| Dispatch units | 8×2 (2 per warp scheduler) | 2×4 |
| L1 cache/shared memory | 64KB×2 | 64KB×4 |
| PolyMorph Engines | 1×2 | 1×4 |
| Raster Engines | 1 | 1 |

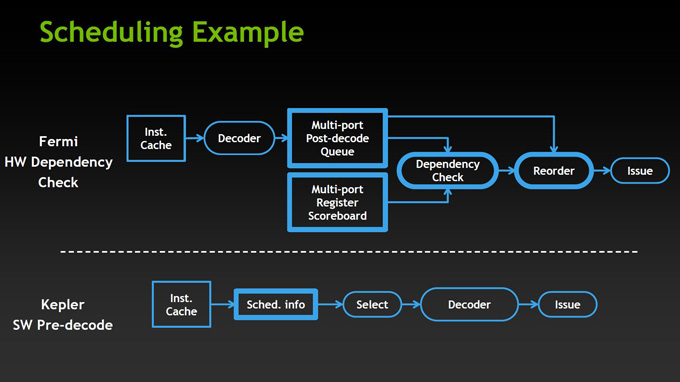

Let's do a little comparison at the GPC level. Kepler has half as many streaming multiprocessors per GPC as Fermi, but each SMX has six times as many CUDA cores as a Fermi SM (192 versus 32), so a Kepler GPC ends up with three times as many CUDA cores (384 versus 128). Per GPC, Kepler also has four times as many special function units (64 versus 16) and twice as many dispatch units (16 versus 8). Within each multiprocessor, the warp schedulers are doubled (four versus two), while each SMX, like each Fermi SM, still has a single PolyMorph Engine.
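Because Kepler has half as many multiprocessors per GPC, the ratios change depending on whether you compare one SMX with one Fermi SM or one GPC with another. This small sketch computes both from per-multiprocessor counts; the Fermi figures are the GF110 SM numbers, and the names are illustrative.

```python
from fractions import Fraction

# Per-multiprocessor counts: one Kepler SMX vs. one Fermi (GF110) SM.
KEPLER_SMX = {"cuda_cores": 192, "sfu": 32, "texture_units": 16,
              "warp_schedulers": 4, "dispatch_units": 8, "polymorph": 1}
FERMI_SM = {"cuda_cores": 32, "sfu": 4, "texture_units": 4,
            "warp_schedulers": 2, "dispatch_units": 2, "polymorph": 1}

KEPLER_SM_PER_GPC = 2
FERMI_SM_PER_GPC = 4


def ratios(per_gpc: bool) -> dict:
    """Kepler-to-Fermi ratio for each unit, per multiprocessor or per GPC."""
    k_scale = KEPLER_SM_PER_GPC if per_gpc else 1
    f_scale = FERMI_SM_PER_GPC if per_gpc else 1
    return {unit: Fraction(KEPLER_SMX[unit] * k_scale, FERMI_SM[unit] * f_scale)
            for unit in KEPLER_SMX}


for per_gpc in (False, True):
    label = "per GPC" if per_gpc else "per SM/SMX"
    print(label, {unit: str(r) for unit, r in ratios(per_gpc).items()})
```

Per multiprocessor, CUDA cores grow sixfold, but per GPC only threefold, and the PolyMorph Engine count per GPC actually halves.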

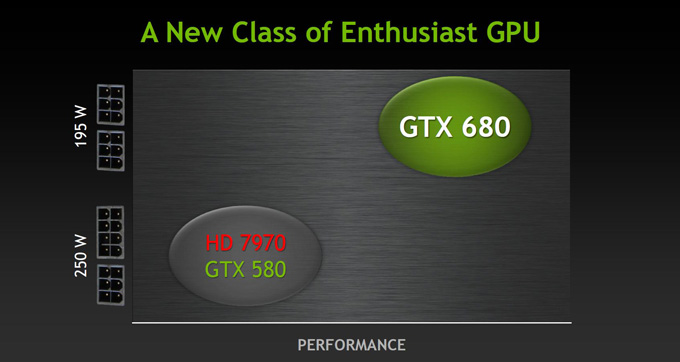

Faster

One of the main selling points behind Kepler is that, in Epic's Samaritan demo, a single GTX 680 delivers the performance level that previously required three GTX 580 cards. In terms of performance per watt, this means the card delivers the same performance at roughly 25% of the power consumption and heat output of three GTX 580s. The noise level has also dropped from 51 dBA to 46 dBA. While the difference may seem small, the decibel is a logarithmic unit, so a 5 dB reduction is quite significant and will be noticeable. Do note, however, that the Samaritan demo shown at GDC 2011 has since been heavily optimized, both in software and graphically. With the new FXAA anti-aliasing, Nvidia claims a 60% performance increase over standard MSAA.
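The decibel and performance-per-watt claims above can be worked through numerically. This sketch uses the common rule of thumb that perceived loudness doubles with every 10 dB, and it assumes a rated board power of 244 W per GTX 580, a figure not stated in this review.

```python
def power_ratio_from_db(delta_db: float) -> float:
    """Acoustic power ratio for a change of delta_db decibels."""
    return 10 ** (delta_db / 10)


def perceived_loudness_ratio(delta_db: float) -> float:
    """Rule of thumb: perceived loudness roughly doubles every +10 dB."""
    return 2 ** (delta_db / 10)


delta = 46 - 51  # dBA, using the two noise figures quoted above
print(f"sound power: {power_ratio_from_db(delta):.2f}x")             # → sound power: 0.32x
print(f"perceived loudness: {perceived_loudness_ratio(delta):.2f}x")  # → perceived loudness: 0.71x

# Assumption: 244 W rated board power per GTX 580 (not stated in this review).
GTX580_TDP_W = 244
GTX680_TDP_W = 195
print(f"power share: {GTX680_TDP_W / (3 * GTX580_TDP_W):.0%}")       # → power share: 27%
```

Three GTX 580s at 244 W each come to 732 W, so 195 W is a little over a quarter of that, in line with the roughly 25% figure.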

SMX / GPU Boost


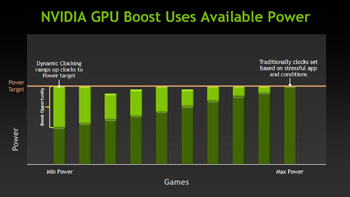

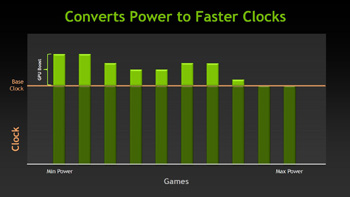

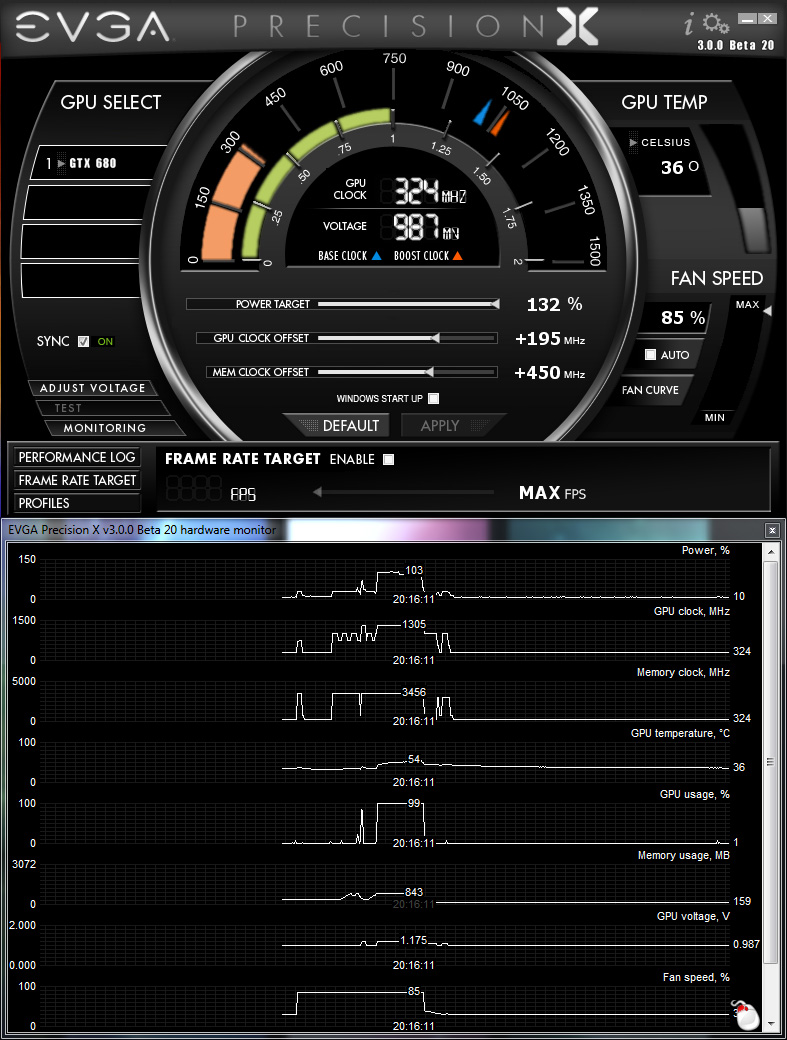

GPU Boost has no fixed upper clock limit; instead, it is limited by the GPU power target set by Nvidia. We saw the card boost by about 40-50MHz during our test routine. Nvidia does not provide a way to raise the card's maximum power target in its ForceWare drivers, though other tools such as EVGA's Precision X make it possible to reach even higher clock speeds.
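GPU Boost's exact algorithm is not public, but the behavior described here (no fixed clock ceiling, bounded by a power target) can be sketched as a simple control loop. Everything in this sketch is a hypothetical illustration: the 13 MHz step, the 170 W power target, the 80C temperature limit, and the `Sample` telemetry type are assumed values, not Nvidia's firmware parameters, and the real mechanism reacts to more inputs than these two.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical telemetry reading from the card."""
    board_power_w: float
    temperature_c: float


# All values below are illustrative assumptions, not Nvidia's parameters.
BASE_CLOCK_MHZ = 1006
CLOCK_STEP_MHZ = 13
POWER_TARGET_W = 170.0
TEMP_LIMIT_C = 80.0


def next_clock(current_mhz: int, sample: Sample) -> int:
    """Step the core clock up while under both targets, down otherwise."""
    within_limits = (sample.board_power_w < POWER_TARGET_W
                     and sample.temperature_c < TEMP_LIMIT_C)
    if within_limits:
        return current_mhz + CLOCK_STEP_MHZ  # no fixed ceiling, only the targets
    return max(BASE_CLOCK_MHZ, current_mhz - CLOCK_STEP_MHZ)  # simplification: never below base


clock = BASE_CLOCK_MHZ
for s in [Sample(150, 65), Sample(160, 70), Sample(172, 72), Sample(165, 74)]:
    clock = next_clock(clock, s)
    print(clock)  # prints 1019, 1032, 1019, 1032 (one per line)
```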

Video Encoding

Kepler also features a new dedicated H.264 video encoder called NVENC. On Fermi, video encoding was handled by the GPU's array of CUDA cores. With dedicated H.264 encoding circuitry, Kepler can encode video at lower power consumption than Fermi. This is an important step for Nvidia, as Intel's Quick Sync has proven to be quite efficient at video encoding, and AMD's latest Radeon HD 7000 cards also feature a new Video Codec Engine.

Nvidia leaves it to software developers to implement support for NVENC if they wish, and they can even choose to encode using NVENC and CUDA in parallel. This is very similar to what AMD has done with the Video Codec Engine in Hybrid mode. By combining the dedicated engine with the CUDA cores, NVENC-based encoding should be much faster than CUDA alone and possibly even faster than Quick Sync.

- Can encode full HD resolution (1080p) video up to 8x faster than real-time

- Support for H.264 Base, Main, and High Profile Level 4.1 (Blu-ray standard)

- Supports MVC (Multiview Video Coding) for stereoscopic video

- Up to 4096×4096 encoding
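To put "8x faster than real-time" into concrete terms, the arithmetic is a simple division. The two-hour 24 fps clip below is a hypothetical example, and 8x is Nvidia's upper bound rather than a result we measured.

```python
def encode_time_minutes(video_minutes: float, speedup: float) -> float:
    """Wall-clock encode time for a clip at a given multiple of real time."""
    return video_minutes / speedup


def encode_fps(source_fps: float, speedup: float) -> float:
    """Frames per second the encoder must sustain to reach that speedup."""
    return source_fps * speedup


# Hypothetical example: a two-hour 1080p clip at 24 fps, at the "up to 8x" figure.
print(encode_time_minutes(120, 8))  # → 15.0
print(encode_fps(24, 8))            # → 192
```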

According to NVIDIA, besides transcoding, NVENC will also be used in video editing, wireless display, and videoconferencing applications. NVIDIA has been working with software vendors to provide support for NVENC. At launch, CyberLink MediaEspresso will support NVENC, and CyberLink PowerDirector and ArcSoft MediaConverter will add support later.

Smoother

FXAA

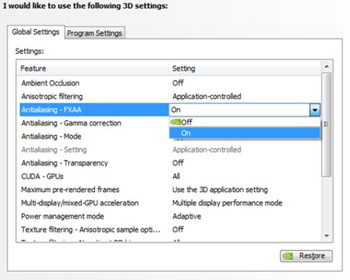

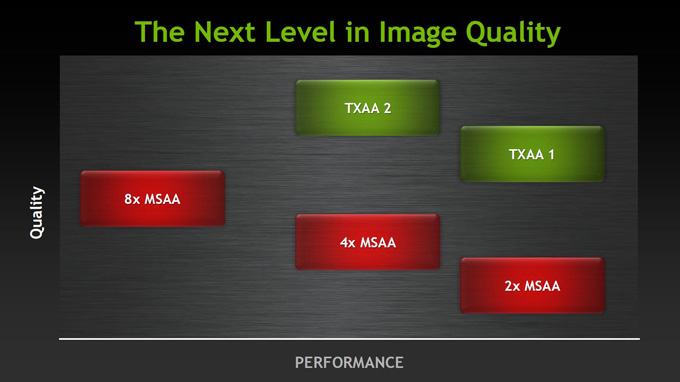

A faster card like Kepler means we can do more computational work, which leads to a better and more realistic image. Nvidia once again brings FXAA back to center stage for smoothing out jagged edges in games, and claims it provides better visual quality than 4x MSAA. In fact, Nvidia's figures show FXAA on Kepler running 60% faster than 4x MSAA while producing much smoother edges.

The latest ForceWare drivers add the ability to enable FXAA at the driver level, overriding in-game settings. Nvidia informs us that this option will be available for both Fermi and Kepler cards, but only with the new ForceWare 3xx series drivers; older cards will still have to rely on individual game settings.

TXAA

At the moment, we cannot test TXAA, as no game titles currently support it. NVIDIA is working with various game developers to implement TXAA in future releases. TXAA will be available on Kepler at launch and will come to Fermi with future driver releases.

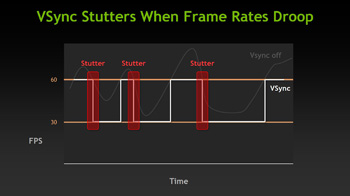

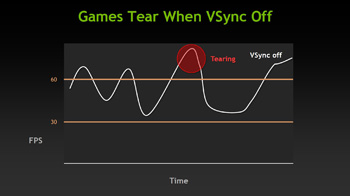

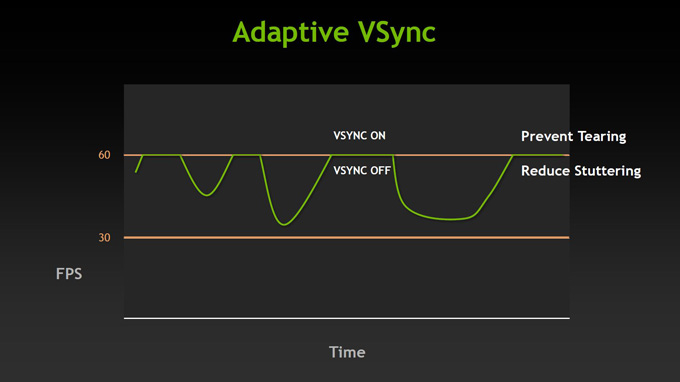

Adaptive VSync


Specifications

| Specifications | Nvidia GeForce GTX 680 (GK104) |
|---|---|
| Graphics Processing Clusters | 4 |
| Streaming Multiprocessors | 8 |
| CUDA Cores | 1536 |
| Texture Units | 128 |
| ROP Units | 32 |
| Base Clock | 1006 MHz |
| Boost Clock | 1058 MHz |
| Memory Clock (Data Rate) | 6008 MHz |
| L2 Cache Size | 512KB |
| Total Video Memory | 2048MB GDDR5 |
| Memory Interface | 256-bit |
| Total Memory Bandwidth | 192.26 GB/s |
| Total Filtering Rate (Bilinear) | 128.8 GigaTexels/sec |
| Fabrication Process | 28 nm |
| Transistor Count | 3.54 Billion |
| Connectors | 2 x Dual-Link DVI, 1 x HDMI, 1 x DisplayPort |
| Form Factor | Dual Slot |
| Power Connectors | 2 x 6-pin |
| Recommended Power Supply | 550 Watts |
| Thermal Design Power (TDP) | 195 Watts |
| Thermal Threshold | 98C |

Nvidia's GeForce GTX 680 is the first card to run GDDR5 memory at 6Gbps. A new I/O design was built specifically for Kepler to push GDDR5 signaling toward its theoretical limits. An in-depth study of Nvidia's previous silicon showed that this speed could be reached only through extensive improvements in circuit and physical design, link training, and signal integrity, and the gain was made possible by a highly integrated, cross-functional effort that co-optimized these three areas. The result is fantastic, as our overclocking page shows just how far we were able to push the memory frequency.
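The bandwidth and filtering figures in the specification table follow directly from the bus width, data rate, texture unit count, and base clock. This sketch assumes the 6008 MHz figure is the effective data rate (million transfers per second) and that each texture unit filters one bilinear texel per clock at the 1006 MHz base clock.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mtps / 1000  # MB/s -> GB/s


def bilinear_fill_rate_gtexels(texture_units: int, core_clock_mhz: float) -> float:
    """Assumes one bilinear texel per texture unit per clock."""
    return texture_units * core_clock_mhz / 1000


print(f"{memory_bandwidth_gbs(256, 6008):.2f} GB/s")            # → 192.26 GB/s
print(f"{bilinear_fill_rate_gtexels(128, 1006):.1f} GTexels/s")  # → 128.8 GTexels/s
```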

Single GPU 3D Vision Surround

One area where Kepler really improves is multi-monitor support. NVIDIA had been falling behind AMD in this regard for a long time, and it is good to finally see some progress on this front. Kepler now supports up to four displays. While AMD still has the edge with Eyefinity's six-display support, NVIDIA is at least closing the gap.

Kepler comes with two dual-link DVI ports, one HDMI port, and a DisplayPort 1.2 output. The ability to drive four displays simultaneously means that it is now possible to run NVIDIA 3D Vision Surround on a single GPU. It is even possible to play games on three displays in Surround mode while using a fourth display for other applications.

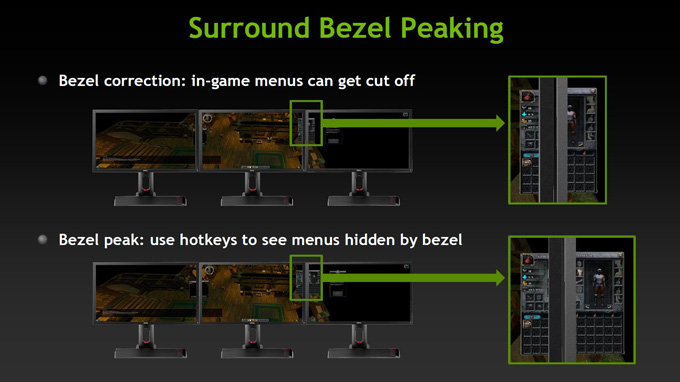

A couple of new features have been added to Nvidia Surround. While Surround is a great option for games, it still has problems caused by bezel-corrected custom resolutions. With such a resolution, invisible pixels are rendered where the monitors' borders sit. This gives users the sense that the game world continues behind the bezels instead of simply cutting from one monitor to the next, even though those pixels cannot be seen. It makes gaming feel much more engaging, but it has a drawback: because the hidden pixels are still rendered, some games may use that area to display important in-game information. If that information appears right where the monitors meet, the gamer could miss something they need.

The Surround Bezel Peeking option lets the user press a keyboard hotkey to reveal these hidden pixels while using custom Surround resolutions such as 6040×1080. We have played games where certain menus opened right at the borders of the monitors, making it impossible to see what was rendered in them. In another case, game subtitles spanned the monitors and some of the text was lost at the bezels. Bezel Peeking fixes this problem with a quick hotkey that can be used whenever the user wants to see the hidden pixels.
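The 6040×1080 resolution mentioned above is three 1920×1080 panels plus extra columns rendered behind the two inner bezels. Assuming those columns are split evenly between the two gaps, this sketch recovers the per-bezel compensation; the function names are illustrative and not part of Nvidia's Surround setup.

```python
def bezel_corrected_width(panel_width_px: int, panels: int, bezel_px: int) -> int:
    """Total render width when each inner bezel gap is filled with hidden pixels."""
    gaps = panels - 1
    return panels * panel_width_px + gaps * bezel_px


def hidden_pixels_per_gap(total_width_px: int, panel_width_px: int, panels: int) -> int:
    """Inverse of the above: pixel columns rendered behind each bezel."""
    gaps = panels - 1
    return (total_width_px - panels * panel_width_px) // gaps


print(hidden_pixels_per_gap(6040, 1920, 3))  # → 140
print(bezel_corrected_width(1920, 3, 140))   # → 6040
```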

To find out more about Surround on the new GeForce GTX 680, check out our companion article, in which we fully tested the GeForce GTX 680 at 5760×1080 and 6040×1080 in eight different games.

PhysX

Nvidia showed off two demos on its Kepler cards that highlight the improvements in its PhysX technology.

The Fracture Demo looks at real-time fracture simulation that does not depend on pre-rigged objects with premade fractures. In current games with PhysX fracture effects, objects are prepared to break apart in predetermined ways depending on where a bullet or another object hits them. Nvidia's Fracture demo shows that the new Kepler cards can simulate, in real time, fractures that were never prepared in advance. This means that even if an object is hit several thousand times, it will fracture differently every time. The demo uses physics simulation to calculate force and impact and work out what a real-life fracture pattern would look like.

The second demo shows off fur simulation. There have been many fur simulations in the past, but with the new Kepler architecture, Nvidia is confident that game developers could build games with real-time fur simulation that adds to the user's gaming experience. This quick demo shows off the possibilities of such fur simulation.

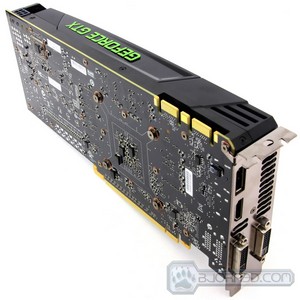

A Closer Look at the GTX 680


Great products usually come in great packaging, and the Nvidia GeForce GTX 680 is no exception. Simple packaging with a reflective surface can go a long way toward making a good first impression, and we think Nvidia nailed it with Kepler. While most cards will come with accessories and other goodies, this reviewer's package contained only the video card. We won't complain, though, since the video card is what we're actually interested in, and accessories are easy to replace.


After we took the card out of its anti-static bag, we found several thin transparent protective strips along the front and sides to keep it from getting scratched during shipment. Seeing the GTX 680 for the first time brings back a few memories of the GF110 Fermi launch in November 2010, but as we explore the new shroud and power design, we'll see a few nice improvements on the GTX 680 Kepler cards.


Starting off with the PCI-E power connectors on the GeForce GTX 680, one of the first questions that arose during our initial inspection was why the connectors are stacked. According to Nvidia, stacking the connectors allows the fan to be repositioned, providing better airflow over the card's components and over the heatsink that cools the GK104 GPU. Nvidia also claims that acoustic levels are lower because there is less obstruction in the fan's airflow. The stacking method also saves PCB space.

The second thing we noticed is that the GTX 680 uses only two 6-pin PCI-E power connectors. Does this mean that overclocking will be a problem, since only 150W of power can be pushed through the connectors? Or could it mean that future cards, such as a GTX 690, will let Nvidia experiment with more powerful dual-GPU solutions than before? To answer the first question, the lower input power should not cause any major overclocking problems. The new Kepler architecture uses far less power than the previous Fermi architecture, which is why Nvidia was able to drop the 8-pin power connector in favor of a 6-pin PCI-E connector. As for the second question, we are not sure what Nvidia's future plans are at this moment.
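The power-connector question comes down to a simple budget. This sketch uses the PCI Express limits of 75 W from the slot, 75 W per 6-pin connector, and 150 W per 8-pin connector, and compares the in-spec total with the GTX 680's 195 W TDP; it says nothing about how far a card can be pushed outside those limits.

```python
# Maximum power per source under the PCI Express specifications.
PCIE_SLOT_W = 75
SIX_PIN_W = 75
EIGHT_PIN_W = 150


def board_power_budget(six_pin: int = 0, eight_pin: int = 0) -> int:
    """In-spec power available to a card: slot plus auxiliary connectors."""
    return PCIE_SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


gtx680 = board_power_budget(six_pin=2)                      # two 6-pin connectors
six_plus_eight = board_power_budget(six_pin=1, eight_pin=1)  # 6-pin + 8-pin layout
print(gtx680, gtx680 - 195)  # → 225 30
print(six_plus_eight)        # → 300
```

Counting the slot, the two 6-pin connectors leave about 30 W of in-spec headroom above the 195 W TDP.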


The GTX 680 might look like a massive card, but it's actually a bit smaller than a GTX 580, measuring about 25.4 cm (about 10 inches) in length. It has a slightly different cover design, and as we will see in the pictures below, the PCB is completely redesigned as well. Overall, though, the only major differences in the shroud are that there is no longer a small opening at the end of the card near the fan, and that the shroud tapers off slightly. Honestly, it's a slightly strange design, but as long as the card runs and performs well, we won't complain.


Looking at the back of the GTX 680, Nvidia has slightly redesigned the display outputs. Instead of the standard two DVI ports and a single HDMI port, there are now two DVI ports, an HDMI port, and a DisplayPort. With this configuration, the user can run 3D Vision Surround on a single Kepler card while using a fourth monitor as a separate display.


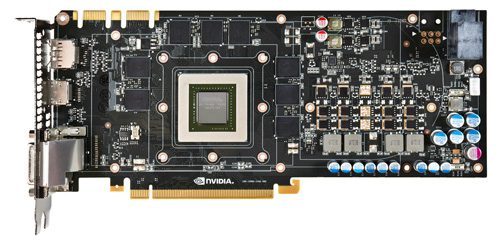

Looking at the SLI bridge connectors on the GTX 680, the card appears to be 3- and 4-way SLI capable when combined with an appropriate motherboard. We'll have SLI performance testing in future reviews, so stay tuned, as we might have an article up in the next few days. On the PCB, we can see a small cluster of surface-mounted components and a small chip. This appears to be the GPU Boost controller, which monitors the card's power target and automatically overclocks the GPU when additional power is available.


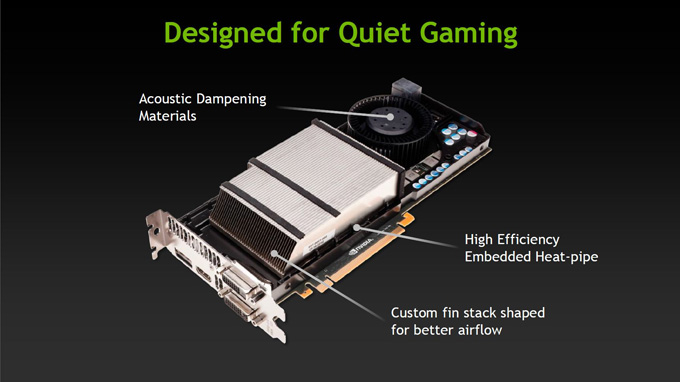

Looking under the hood reveals Nvidia's cooling solution for Kepler. It looks very similar to the vapor chamber design used on the previous second-generation Fermi cards; however, this cooler is heat-pipe based. Three heat pipes are embedded in the base of the GPU heatsink and carry heat away from the GPU. Nvidia has also upped its game by adding special acoustic dampening material to the fan to reduce noise under full load. The new heatsink design is also supposed to improve air circulation, which helps cool the card even better.


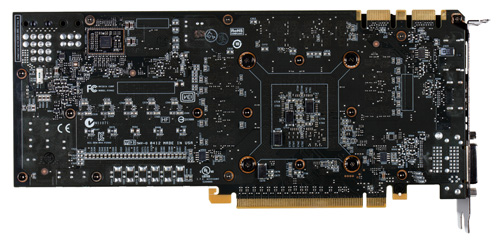

And finally, here is the naked GK104 board. As we can see, the PCB has been completely redesigned. All the capacitors are placed at the edges of the board, and the VRM components sit next to each other without any major surface-mounted components obstructing them. This makes it much simpler to cool the card if the user decides to go with an aftermarket cooler. The GK104 chip itself is exposed, as it has no heat spreader. This allows better contact between the GPU and the cooler, but it can be risky, because mounting a cooler improperly on the bare chip could damage it.

Testing Methodology

The OS we use is Windows 7 Pro 64bit with all patches and updates applied. We also use the latest drivers available for the motherboard and any devices attached to the computer. Apart from the exceptions below, we do not disable background tasks or tweak the OS or system in any way. We turn off drive indexing and daily defragging. We also turn off Prefetch and Superfetch. This is not an attempt to produce bigger benchmark numbers. Drive indexing and defragging can interfere with testing and produce confusing numbers. If a test were run while a drive was being indexed or defragged, and the same test were later run with these processes off, the two results would be contradictory and erroneous. As we cannot control when defragging and indexing occur precisely enough to guarantee that they won't interfere with testing, we disable these features entirely.

Prefetch tries to predict what users will load the next time they boot the machine by caching the relevant files for later use. We want to see how a program runs without any of its files cached, and disabling Prefetch means we do not have to clear the prefetch cache before every test run to get accurate numbers. Lastly, we disable Superfetch, which loads often-used programs into memory. It is one of the reasons Windows Vista occupies so much memory: Vista fills the memory in an attempt to predict what users will load. Having one test run with files cached and another with files uncached would produce inaccurate numbers, and since we can't control Superfetch's timing precisely, we turn it off. Because these four features can interfere with benchmarking and are out of our control, we disable them. We do not disable anything else.

We ran each test three times and report the average of the three scores. Benchmark screenshots show the median result. Anomalous results were discarded and the benchmarks were rerun.
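The run-averaging procedure above can be expressed in a few lines. This is a sketch, not our actual tooling: the 5% deviation threshold used to flag an anomalous run is a hypothetical choice, and the scores are made-up examples.

```python
from statistics import mean, median


def summarize_runs(scores, tolerance=0.05):
    """Return (average, median) of benchmark runs, or None if a run is anomalous.

    A run counts as anomalous here if it deviates from the median by more than
    `tolerance` (a hypothetical 5% threshold); the caller would then rerun.
    """
    mid = median(scores)
    if any(abs(s - mid) / mid > tolerance for s in scores):
        return None
    return mean(scores), mid


print(summarize_runs([63.1, 63.4, 63.9]))
print(summarize_runs([63.1, 63.4, 55.0]))  # → None
```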

Please note that because new driver releases brought performance improvements, we re-benchmarked every card shown in the results section. The results here will therefore differ from those in our previous reviews.

Test Rig

| Test Rig | |
|---|---|
| Case | Cooler Master Storm Trooper |
| CPU | Intel Core i7 3960X (Sandy Bridge-E) @ 4.6GHz |
| Motherboard | GIGABYTE X79-UD5 X79 Chipset Motherboard |
| RAM | Kingston HyperX Genesis 32GB (8x4GB) 1600MHz 9-9-11-27 Quad-Channel Kit |
| CPU Cooler | Noctua NH-D14 Air Cooler |
| Hard Drives | 2x Western Digital RE3 1TB 7200RPM 3Gb/s Hard Drives |
| SSD | 1x Kingston HyperX 240GB SATA III 6Gb/s SSD |
| Optical | ASUS DVD-Burner |
| GPU | Nvidia GeForce GTX 680 2GB Video Card; 3x Nvidia GeForce GTX 580 1.5GB Video Cards; AMD HD7970 3GB Video Card; AMD HD6990 4GB Video Card |
| PSU | Cooler Master Silent Pro Gold 1200W PSU |
| Mouse | Razer Imperator Battlefield 3 Edition |
| Keyboard | Razer Blackwidow Ultimate Battlefield 3 Edition |

Synthetic Benchmarks & Games

We will use the following applications to benchmark the performance of the Nvidia GeForce GTX 680 video card.

| Benchmarks |
|---|
| 3DMark Vantage |
| 3DMark 11 |
| Crysis 2 |
| Just Cause 2 |
| Lost Planet 2 |
| Metro 2033 |
| Battlefield 3 |
| Unigine Heaven 3.0 |
| Batman Arkham City |
| Dirt 3 |
| H.A.W.X. 2 |

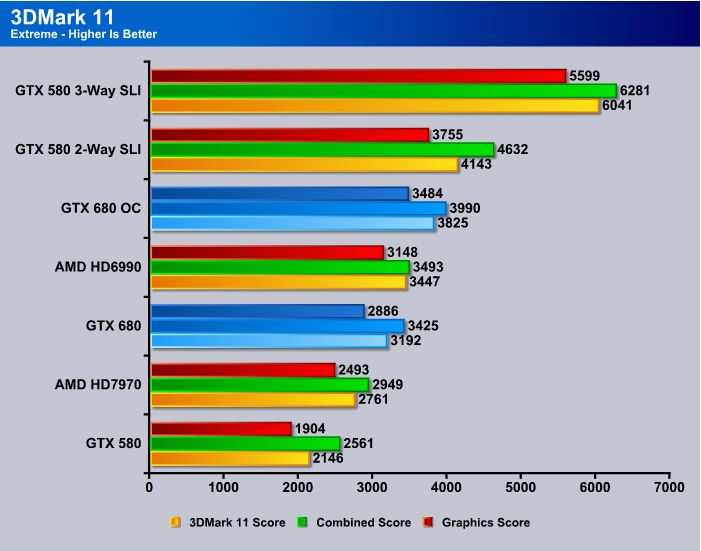

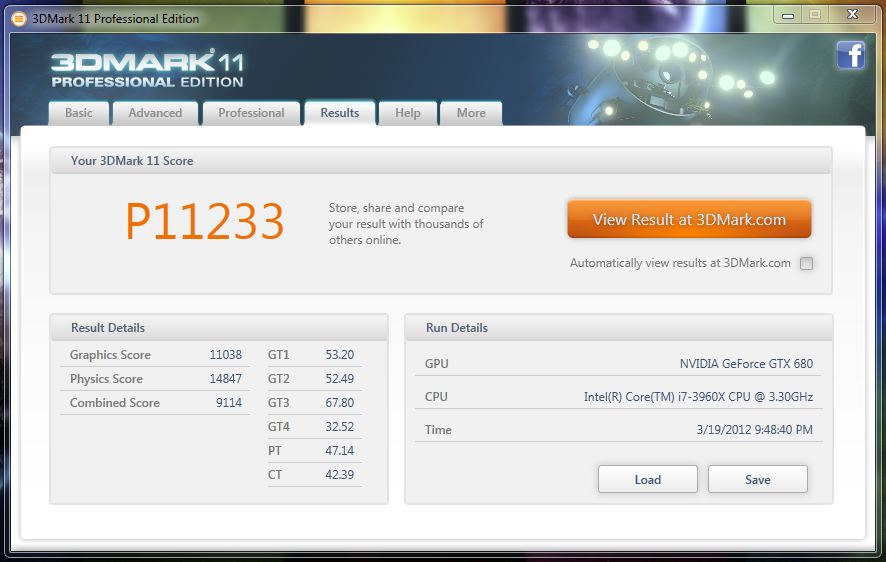

3DMark 11

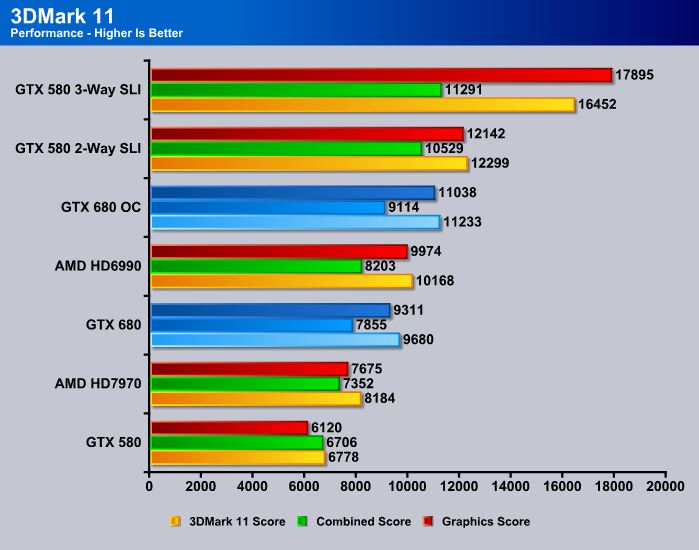

Right off the bat, 3DMark 11 clearly shows that the Nvidia GeForce GTX 680 is the fastest single-GPU solution currently on the market. The GTX 680 is roughly 16% faster than the AMD HD7970 and roughly 33% faster than a GTX 580. And let's not forget the overclocked GTX 680, which outperforms AMD's dual-GPU HD6990 by 10%; that's about a 44% performance increase over the GTX 580. It's mind-blowing that the new Kepler architecture lets Nvidia draw much less power than any other card tested in this review while still outperforming a dual-GPU card when overclocked.
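Percentages measured against the same baseline do not simply subtract, which is easy to trip over when reading these charts. The scores below are hypothetical values normalized to GTX 580 = 100 and chosen to match the 33% and 44% figures above; they are not our measured 3DMark 11 results.

```python
def percent_faster(new_score: float, old_score: float) -> float:
    """How much faster `new_score` is than `old_score`, in percent."""
    return (new_score / old_score - 1) * 100


# Hypothetical normalized scores (GTX 580 = 100), not measured results.
gtx580 = 100.0
gtx680 = 133.0
gtx680_oc = 144.0
print(f"{percent_faster(gtx680_oc, gtx680):.1f}%")  # → 8.3%
```

A 33% lead and a 44% lead over the same GTX 580 mean the overclock itself adds only about 8% over the stock GTX 680, not 11%.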

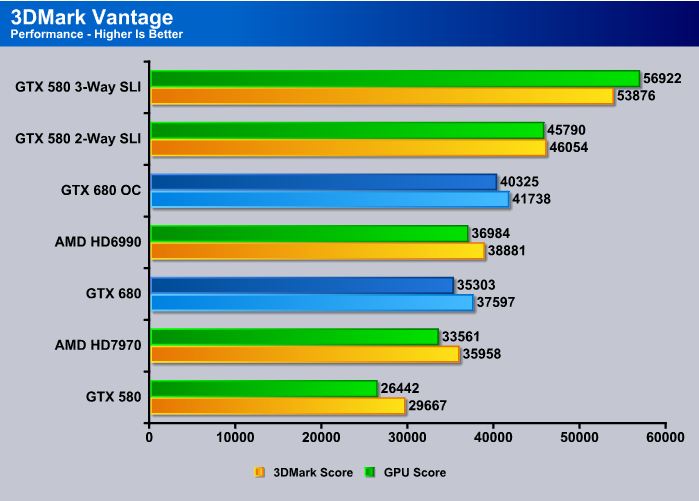

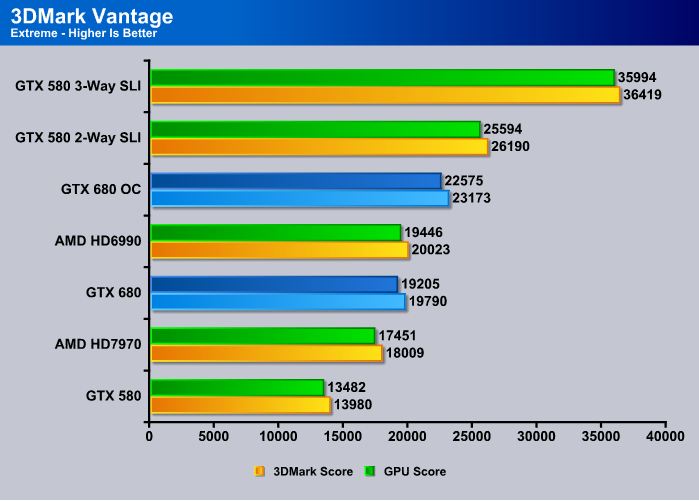

3DMark Vantage

In 3DMark Vantage, we see the same scenario. The GTX 680 outperforms AMD's fastest single-GPU card and comes extremely close to the performance of AMD's dual-GPU HD6990. An overclocked GTX 680 also comes very close to the performance of two GTX 580s in 2-way SLI, which shows how powerful a single GK104 (Kepler) GPU is compared to the older GF110 (Fermi) architecture.

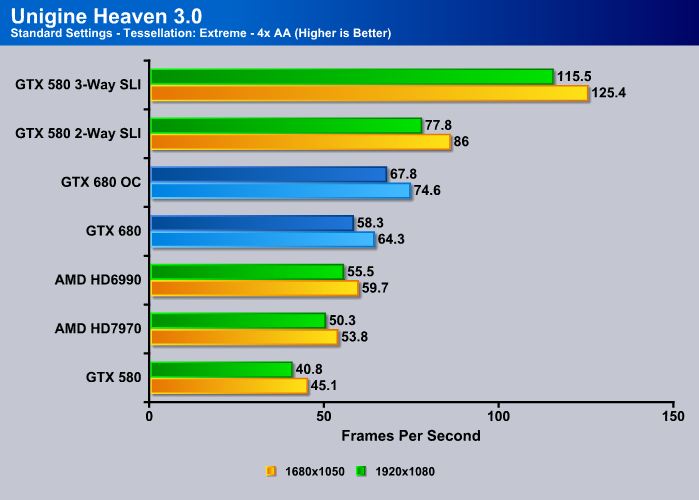

Unigine Heaven 3.0

Unigine Heaven is a benchmark program based on Unigine Corp's latest engine, Unigine. The engine features DirectX 11, hardware tessellation, DirectCompute, and Shader Model 5.0. These technologies, combined with the ability to run every card through exactly the same test, mean this benchmark should remain in our arsenal for a long time.

As we all know, the GTX 580 is no longer the fastest single-GPU video card that Nvidia offers, and since AMD's launch of the HD7970, the Fermi architecture has looked outdated next to Tahiti. The dual-GPU HD6990, however, is still faster in gaming. Interestingly, the GTX 680 outperforms both of AMD's flagship cards, and not just by 1 or 2 frames per second: it beats the HD6990 by up to 4.6 FPS.

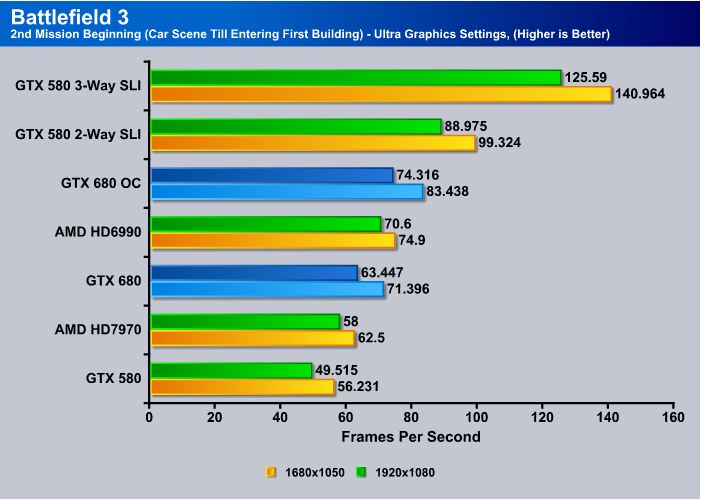

Battlefield 3

Let's take a look at some gaming benchmarks, starting with one of the most anticipated single- and multiplayer first-person shooters: Battlefield 3. Nvidia's GTX 680 achieves a stunning 63.447 FPS at 1920×1080 on Ultra graphics settings. This is the game's highest visual quality, and the GTX 680 manages to render just above 60 FPS, a sweet spot for gamers because of the smooth gameplay it provides. A single GTX 580 is also enough to play the game maxed out, but the extra 20-23 FPS will help in the more intensive parts of the game, where the GTX 580 might be pushed a bit too hard to deliver smooth gameplay.
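Frame rate is easier to reason about as a per-frame time budget. This quick conversion uses the 63.447 FPS result above and the 60 FPS target.

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on each frame at a given frame rate."""
    return 1000.0 / fps


print(f"{frame_time_ms(60):.2f} ms budget at 60 FPS")              # → 16.67 ms budget at 60 FPS
print(f"{frame_time_ms(63.447):.2f} ms per frame on the GTX 680")  # → 15.76 ms per frame on the GTX 680
```

On average, that leaves the GTX 680 roughly 0.9 ms of headroom per frame against a 60 FPS target.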

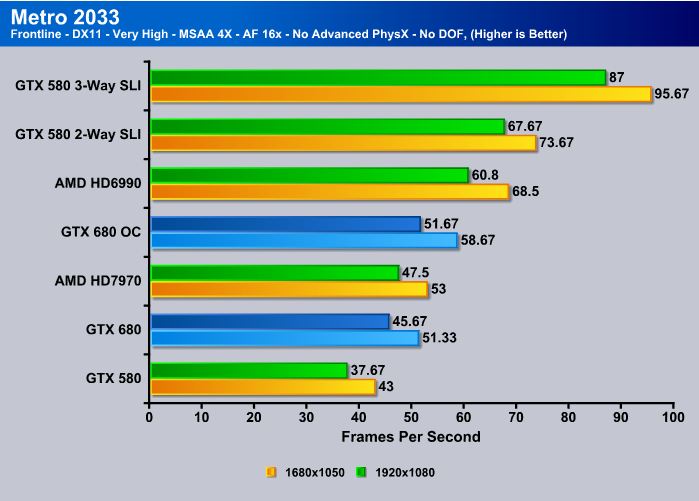

Metro 2033

Even after two years, Metro 2033 is still one of the most demanding DX11 games available for really pushing the limits of your system. We disabled Advanced PhysX and Depth of Field for our benchmark to keep results consistent between Nvidia's and AMD's cards; enabling these features strains the system even more, leading to even lower frame rates than those in the chart above. A powerful video card is key to smooth gameplay with the extra graphics settings that Metro 2033 offers. Here, AMD's HD7970 performed slightly faster than the GTX 680; however, an overclocked GTX 680 is about 9-10% faster than the HD7970. Metro 2033 is very well optimized for dual-GPU video cards, which is why AMD's HD6990 performs so well in this particular benchmark. Then again, two GTX 580s in a 2-way SLI setup perform even better. We can't wait to try two GTX 680s to see how they perform in Metro 2033.

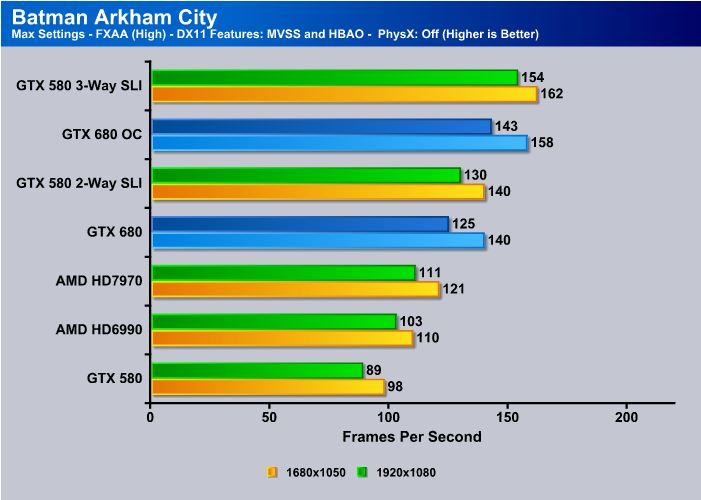

Batman Arkham City

Batman Arkham City is one of the first DX11 games we've played that natively supports FXAA, the anti-aliasing technique Nvidia is currently marketing alongside the Kepler architecture and the new 300 series ForceWare drivers. According to Nvidia, FXAA is 60% faster than MSAA and also provides better image quality than lower MSAA settings. We're planning to compare the different anti-aliasing settings in a future article, so stay tuned. Because Kepler is optimized for FXAA, it clearly outperforms its competition here, leaving behind even AMD's dual-GPU video card.

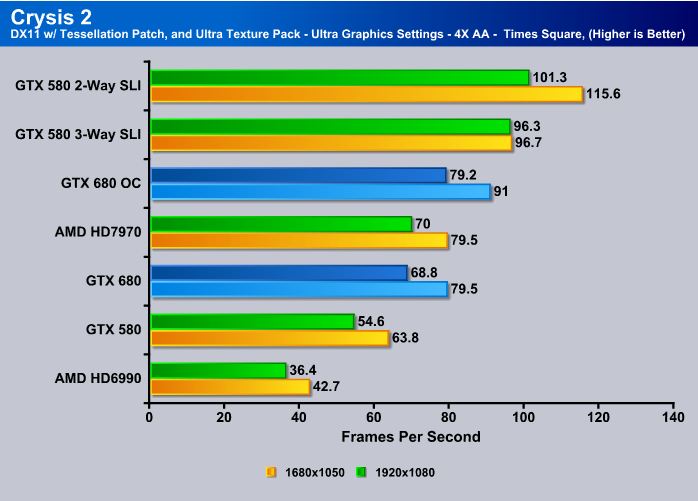

Crysis 2

When Crysis 2 was first released, it was a DX9-based game, but a few months later Crytek released a massive 2GB DX11 patch that enabled tessellation and other DX11 features. This patch also included the Ultra texture pack, which offers even higher quality than the original High-Quality textures that came with the game. We tested Crysis 2 with these patches installed and were quite amazed at how well the GTX 680 performed. While it fell slightly behind the HD7970 at the higher 1920×1080 resolution, it performed just as well at 1680×1050. During testing, we also noticed that Crysis 2 does not seem to scale to 3-way SLI: despite rerunning the benchmark several times, our 3-way GTX 580 setup failed to outperform the 2-way SLI setup. This could be due to the design of the game's engine or to driver optimization problems.

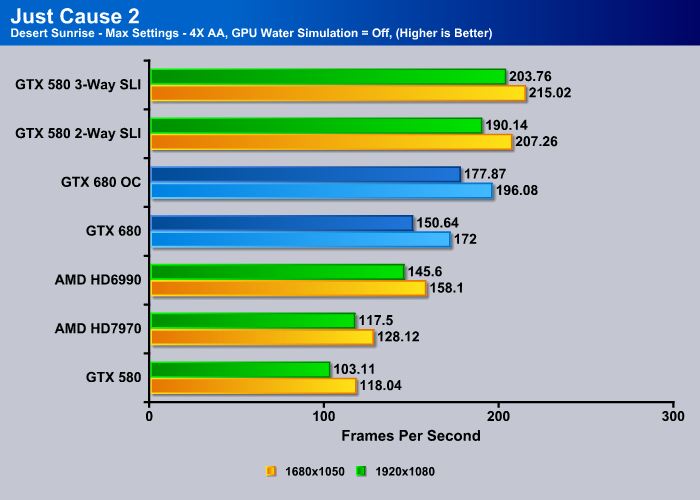

Just Cause 2

We disabled GPU Water Simulation in Just Cause 2 to keep our results consistent with the AMD video cards, since GPU Water Simulation is an Nvidia-only feature. Interestingly, the GTX 680 left the HD7970 far behind in this benchmark, running 34% faster at 1920×1080 and almost 35% faster at 1680×1050. The gain over Nvidia's last-generation single-GPU Fermi flagship, the GTX 580, is even larger: 42%.

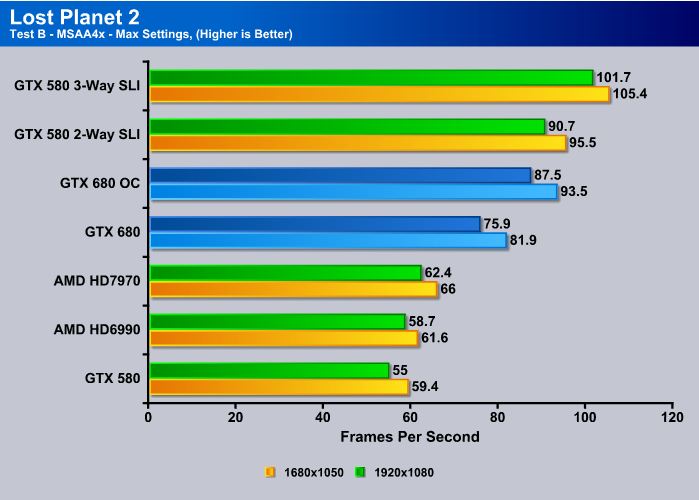

Lost Planet 2

When we overclocked the GTX 680 to a 1305MHz core clock and about 6900MHz on the memory, it almost matched the 2-way GTX 580 SLI scores: our single Kepler card needed only about 2% more performance to beat a 2-way SLI system running two last-generation flagship video cards. Once again, the GTX 680 outperforms the HD7970 by a great deal.

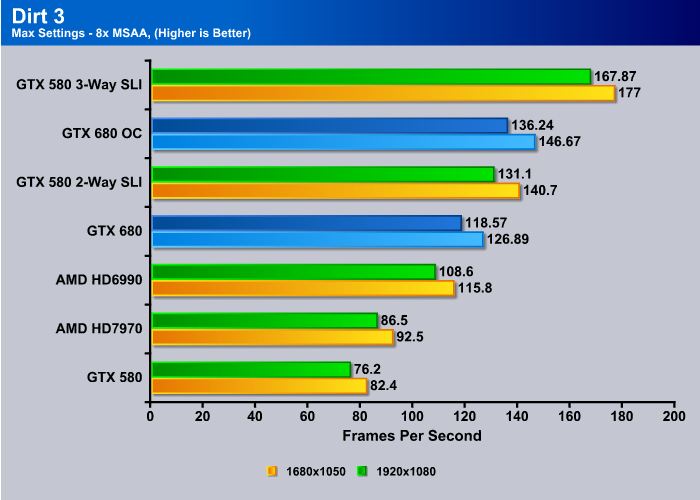

Dirt 3

Finally, the overclocked GTX 680 has really surprised us in Dirt 3. It was able to perform about 6 frames per second faster than a 2-Way SLI setup of two GTX 580s. The HD7970 fell far behind but was still faster than a stock GTX 580. The stock GTX 680 on the other hand showed a great deal of improvement in performance over its older Fermi cousin.

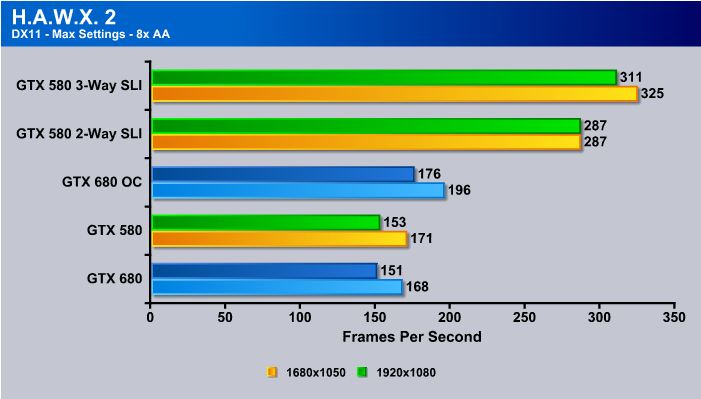

H.A.W.X 2

We expected Kepler to shine here, but it turned out to be slower than Fermi in H.A.W.X 2. Unfortunately, we were not able to get H.A.W.X 2 working with AMD's cards in time, which is why those results are missing; as soon as we have them, we'll publish a follow-up in a future Kepler review or article.

Overclocking

The new Kepler architecture is very overclocking-friendly. As mentioned previously, the card comes with Nvidia's GPU Boost, which works much like Intel's Turbo Boost technology on the latest Sandy Bridge architecture. When the card has not reached its power target under full load at stock clocks, it automatically overclocks itself to 1058MHz or higher; we've seen the core clock hit 1128MHz without any manual overclocking. The card uses GPU Boost only when operating conditions, including temperature and power consumption, allow it.

Moving on to manual overclocking, the Nvidia GeForce GTX 680 has been one of the most stable and easiest cards to overclock, with the help of EVGA's Precision X overclocking utility. Precision X is very much like MSI's Afterburner. It has all the main features, such as GPU voltage adjustment, manual core clock and memory frequency settings, automatic or manual fan control, and real-time monitoring of voltages, clock speeds, fan speeds, temperatures, and much more. To top it off, Precision X also offers a manual fan curve, which lets the user set how fast the fan spins at each temperature.
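A manual fan curve is essentially a list of temperature and fan-speed points, with the speed interpolated between them. The curve points below are hypothetical, and linear interpolation is an assumption about how such tools typically behave, not a description of Precision X's internals; the 85% ceiling reflects the GTX 680 fan limit noted in our temperature testing.

```python
from bisect import bisect_right

# Hypothetical user-defined curve: (temperature in C, fan speed in %).
FAN_CURVE = [(30, 30), (50, 40), (70, 60), (80, 85)]
MAX_FAN_PERCENT = 85  # GTX 680 fan ceiling


def fan_speed(temp_c: float, curve=FAN_CURVE) -> float:
    """Linearly interpolate fan speed between the curve points."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return min(curve[-1][1], MAX_FAN_PERCENT)
    i = bisect_right(temps, temp_c)  # first point hotter than temp_c
    (t0, s0), (t1, s1) = curve[i - 1], curve[i]
    speed = s0 + (s1 - s0) * (temp_c - t0) / (t1 - t0)
    return min(speed, MAX_FAN_PERCENT)


print(fan_speed(60))  # → 50.0
```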

Finally, a very interesting and useful tool in Precision X is the Frame Rate Target, which lets the user set a maximum frame rate for 3D applications. Suppose a game sometimes runs well over 100 FPS but drops under 60 FPS in other areas. With the Frame Rate Target limiter set to 60 FPS, the video card will render no more than 60 FPS even when the game could run faster. This limits erratic changes in frame rate and yields an overall smoother gameplay experience.
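A frame rate cap like this can be sketched as a loop that sleeps whenever the next frame would start too early. This is a generic sleep-based limiter, not Precision X's implementation; `render_frame` is a hypothetical stand-in for the game's rendering work.

```python
import time
from typing import Callable, List


def run_capped(render_frame: Callable[[], None], target_fps: float, frames: int) -> List[float]:
    """Render `frames` frames, never starting one sooner than 1/target_fps after the last.

    Returns the start time of each frame (from time.perf_counter()).
    """
    interval = 1.0 / target_fps
    starts: List[float] = []
    next_start = time.perf_counter()
    for _ in range(frames):
        wait = next_start - time.perf_counter()
        if wait > 0:
            time.sleep(wait)  # idle instead of rendering above the cap
        start = time.perf_counter()
        starts.append(start)
        render_frame()
        # Schedule from this frame's start, so a slow frame is not
        # "made up" afterward by a burst of fast ones.
        next_start = start + interval
    return starts


starts = run_capped(lambda: None, target_fps=60, frames=3)
```

Frames that take longer than the interval are not affected; the cap only removes the peaks above the target.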

After only about 10 minutes of overclocking, we settled at a fairly high overclock with a Core Clock speed of 1305MHz and a Memory Frequency at 6908MHz. That’s roughly a 300MHz overclock on the Core Clock speed from 1006MHz, and about a 900MHz overclock on the GDDR5 memory frequency. We can’t wait to see some LN2 overclocking on the GTX 680 in the near future, but for now all our tests were done just with the stock Nvidia air cooler, and the results are still extremely impressive.
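The overclock percentages and the resulting peak memory bandwidth follow from the stock values in the specification table (1006 MHz core clock, 6008 MHz effective memory data rate on a 256-bit bus), which this sketch assumes.

```python
def overclock_percent(stock: float, overclocked: float) -> float:
    """Increase over stock, in percent."""
    return (overclocked / stock - 1) * 100


core = overclock_percent(1006, 1305)
memory = overclock_percent(6008, 6908)
oc_bandwidth = 256 / 8 * 6908 / 1000  # GB/s on the 256-bit bus

print(f"core +{core:.1f}%, memory +{memory:.1f}%, {oc_bandwidth:.1f} GB/s")
# → core +29.7%, memory +15.0%, 221.1 GB/s
```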

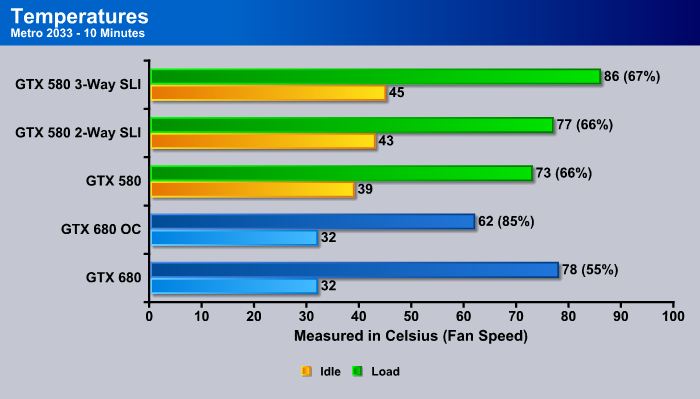

TEMPERATURES

To measure the temperature of the video card, we used EVGA Precision X and ran Metro 2033 Benchmark in a loop to find the Load temperatures for the video cards. The highest temperature was recorded. After looping for 10 minutes, Metro 2033 was turned off and we let the computer sit at the desktop for another 10 minutes before we measured the idle temperatures.

Please note that the GTX 680 OC result shows 85% fan speed. This is the limit on the GTX 680 and we manually set it to 85% to prevent damage to the card. Then we sat back and wore a drool cup as we ran some benchmarks and measured the temperature of the GPU. Interestingly, the GTX 680 only hit 62C at 85% fan speed. We were expecting higher temperatures at such extreme overclocked settings. This just shows how good the stock pre-installed cooler is on the new GTX 680 reference cards. The video card’s fan becomes noticeable once the speed hits about 60%, and the 85% fan speed is quite noisy for gaming unless users wear noise-cancelling headphones. It is also good to know that the 85% fan noise is nowhere close to the AMD HD6990’s fan noise, which means that if you’re thinking of moving from a HD6990 to a GTX 680, you will be quite impressed with the acoustic performance of the GTX 680.

Other than that, when the card is left on Auto settings, the highest temperatures we’ve seen were in the 78C range at a fan speed of 55%. We could not hear the video card’s fan over the already installed chassis fans in our system, which makes the GTX 680 an excellent choice for gamers that get frustrated by overall PC noise. Therefore, the GTX 680 sacrifices a bit on the temperature side, in order to ensure a quiet gaming experience. The GTX 580 on the other hand, ran at a slightly higher fan speed and also ran slightly cooler. But when we look at the idle temperatures, the GTX 680 had the lowest measured temperatures.

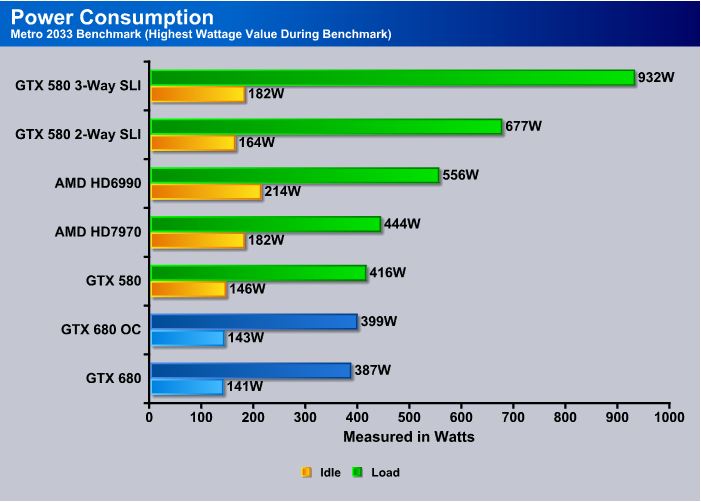

POWER CONSUMPTION

To get our power consumption numbers, we plugged in our Kill A Watt power meter and took the idle reading at the desktop during our temperature measurements, after leaving the system at the desktop for about 15 minutes. Then we ran Metro 2033 for a few minutes and recorded the highest power usage.

Now here comes the most fascinating part of the new Kepler architecture. While the GTX 680 offers a great gaming experience as the fastest single-GPU video card in most games, it also uses less power than the other high-end video cards we compared it to. Not only that, but even overclocked, the GTX 680 consumed less power than every other video card we have tested. Additionally, the overclocked GTX 680 performed close to two GTX 580s in 2-Way SLI, a setup that draws up to 677W. Please note that we used Metro 2033 to find our maximum power consumption, and these numbers could vary depending on the application. We didn't run tools like OCCT or FurMark, because they do not represent everyday, real-life use. We can't wait to put two GTX 680s to the test and see whether they come close to, or even beat, three GTX 580s in performance with much lower power consumption.

Our Final Thoughts

Once again, Nvidia takes the lead with the fastest single-GPU video card currently available on the market. The development of the GK104 Kepler architecture clearly shows that Nvidia understands the needs of gamers around the world. With Kepler, Nvidia also shows off its architectural improvements over AMD's Southern Islands-based HD7970. Not only is the Nvidia GeForce GTX 680 the fastest single-GPU card, but it is also the most power-efficient and quietest high-end flagship card we have ever tested here at Bjorn3D.

The most impressive part of Kepler is its overclocking potential. At first we were skeptical, thinking the card's two 6-pin PCI-E power connectors might not provide enough power for high overclocks. However, once we fired up EVGA's Precision X overclocking utility, within 10 minutes we had overclocked the video card by 300 MHz over its stock core clock speed and by 900 MHz over its stock 6004 MHz memory frequency. This allowed it to outperform two GTX 580s in a 2-Way SLI configuration in our Dirt 3 benchmark. Even at these overclocked frequencies, the GTX 680 still drew less power than the Fermi-based GTX 580.
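To put the 900 MHz memory overclock in perspective, peak memory bandwidth is the bus width in bytes multiplied by the effective data rate. The sketch below assumes the GTX 680's 256-bit GDDR5 bus and treats the quoted 6004 MHz as the effective transfer rate in millions of transfers per second; the function name is illustrative, and the result is a theoretical peak, not a measured figure.

```python
def peak_bandwidth_gbs(bus_width_bits: int, effective_mhz: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8          # 256-bit bus -> 32 bytes
    transfers_per_second = effective_mhz * 1e6       # effective data rate
    return bytes_per_transfer * transfers_per_second / 1e9


stock = peak_bandwidth_gbs(256, 6004)           # stock memory frequency
overclocked = peak_bandwidth_gbs(256, 6004 + 900)  # +900 MHz overclock
gain_pct = (overclocked - stock) / stock * 100

print(f"stock {stock:.1f} GB/s, overclocked {overclocked:.1f} GB/s, +{gain_pct:.1f}%")
```

Under these assumptions the overclock lifts theoretical bandwidth from roughly 192 GB/s to roughly 221 GB/s, about a 15% increase.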

But that's not all: Nvidia also launched several new features alongside the Kepler architecture that make this card really stand out from the crowd. Much like Intel's Turbo Boost on its latest Sandy Bridge processors, Nvidia introduced "GPU Boost", which automatically raises the GTX 680's clock speed above its base clock whenever the card is running below its power target. Further improvements in the new ForceWare 3xx series drivers let users take advantage of faster anti-aliasing options in their games that not only produce better visual quality than traditional MSAA-based anti-aliasing, but also drastically improve gaming performance. FXAA is one of these edge-smoothing anti-aliasing methods, along with TXAA, which will be introduced in newer titles later this year.
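The GPU Boost idea described above (clock up while there is headroom under the power target, back off when the target is reached) can be illustrated with a toy controller. This is a deliberately simplified, hypothetical model, not Nvidia's actual algorithm: the function name, the fixed clock step, and every number in the example are assumptions, and power readings are fed in directly rather than modeled from the clock.

```python
def boost_step(clock_mhz: int, power_w: float, power_target_w: float,
               base_mhz: int, max_mhz: int, step_mhz: int) -> int:
    """One update of a toy power-target boost controller (hypothetical model)."""
    if power_w < power_target_w:
        # Headroom left under the power target: raise the clock one step,
        # but never past the highest allowed boost clock.
        return min(clock_mhz + step_mhz, max_mhz)
    # At or over the power target: back off one step, never below base clock.
    return max(clock_mhz - step_mhz, base_mhz)


# Illustrative run with made-up values: 1000 MHz base, 1100 MHz cap,
# 10 MHz steps, and a 170 W power target.
clock = 1000
for power in [140, 150, 165, 175, 172, 160]:
    clock = boost_step(clock, power, 170, 1000, 1100, 10)
    print(f"power {power} W -> clock {clock} MHz")
```

The key design point the sketch captures is that the base clock is a floor, not a ceiling: the card only runs above it when the measured power leaves room to do so.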

With the new architecture, Nvidia also introduced a new video encoder called NVENC. It is dedicated H.264 encoding circuitry, which lets Kepler encode video at much lower power consumption than Fermi, which handled video encoding on the GPU's array of CUDA cores.

And finally, Nvidia also improved Nvidia Surround with a new feature called Surround Bezel Peeking. It lets gamers see the portions of the image that a bezel-corrected custom resolution hides behind the monitor bezels during Surround gaming. The new Kepler architecture also supports full 3D Vision Surround on a single GPU, along with a fourth monitor that is separate from the Surround setup.

With all that covered, at an MSRP of $499, the Nvidia GeForce GTX 680 should definitely be on your wish-list for your next graphics card if money is not an issue.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
