If you’re looking for a great card that you can use with your older power supply, this is it.

Introduction

With each new generation of graphics cards, it seems like they keep getting bigger and bigger. Each rehash usually brings a die shrink, but the card itself doesn’t necessarily get any smaller. This is unfortunate for people with small or mid tower cases where the hard drive cage gets in the way. As we all know, a standard 9800GTX or 9800GTX+ is 10.5 inches long. That’s pretty long for a video card and requires some serious room in your case. Leadtek, though, has decided to go beyond Nvidia’s reference design and create their own. The result is a card that delivers the performance of the bigger cards but takes up less space, uses less power, and generates less heat. Sounds like a winner.

LEADTEK

Founded in 1986, Leadtek is doing something slightly different from other manufacturers: the company has invested tremendous resources in research and development. In fact, they state that “Research and development has been the heart and soul of Leadtek corporate policy and vision from the start. Credence to this is born out in the fact that annually 30% of the employees are engaged in, and 5% of the revenue is invested in, R&D.”

Furthermore, the company says it focuses on its customers and provides high-quality products with added value:

“Innovation and Quality ” are all and intrinsic part of our corporate policy. We have never failed to stress the importance of strong R&D capabilities if we are to continue to make high quality products with added value.

By doing so, our products will not only go on winning favorable reviews in the professional media and at exhibitions around the world but the respect and loyalty of the market.

For Leadtek, our customers really do come first and their satisfaction is paramount important to us.

CARD AND BUNDLE

Here we have the front and back of the box. On the front you see the usual jazz, along with a small picture of the card and its name. On the back we have more specifications and features. I’m sure you can see the rainbow-like lines on the box. This is something Leadtek has started doing, and I think it adds a little pizzazz to the box and catches the eye.

Leadtek protects the card with a nice Styrofoam cutout that should keep it safe from those nasty delivery guys. We all know which ones I’m talking about. Luckily, mine was untouched and worked perfectly out of the box. Here is the bundle as well, which includes the following:

- Leadtek Manual

- Driver CD

- DVI-VGA Adapter

- PCI-E 6-pin to Molex adapter

- S-Video to Composite cable

- And a copy of Overlord

That’s the typical set of things you would expect to find in a video card box.

The card uses a green PCB, which I think is the only reference-like thing about it. There’s a heatsink that looks like something taken out of Transformers, and the card is clearly shorter than its reference brothers. Its design and heatsink actually mimic the older 8800GTS 512MB cards.

On this card, Leadtek leaves more of the underside of the shroud open. You can see the aluminum base of the heatsink and, most importantly, the single 6-pin PCI-E power connector. That is strikingly odd, since the reference 9800GTX+ uses two 6-pin connectors, but I did say this was a non-reference design. Also shown are the dual SLI connectors for Tri-SLI and an S/PDIF connector for HD audio. Unfortunately, Leadtek did not include an audio cable in the bundle.

The connector end of the card looks pretty normal, with dual DVI ports and a TV out. You can also see the exhaust port for the fan. I also took a picture of the sticker on the back so you can see that this really is a 9800GTX+. And it wasn’t placed over an 8800GTS sticker either.

Here I’m comparing the Leadtek to a reference XFX 9800GTX+ and a smaller Asus 7950GT. As you can see, the Leadtek card is a great deal smaller than the XFX.
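To put the size difference in numbers: the reference 9800GTX+ is 10.5 inches long, and the Leadtek card is listed at 9.45 inches. The short Python sketch below converts both to millimetres and works out how much shorter the Leadtek is. The two lengths come from this review; the helper names are just my own for illustration.

```python
from __future__ import annotations

MM_PER_INCH = 25.4  # exact, by definition of the inch

REFERENCE_LENGTH_IN = 10.5  # standard 9800GTX / 9800GTX+ length
LEADTEK_LENGTH_IN = 9.45    # Leadtek Winfast 9800GTX+ length


def inches_to_mm(inches: float) -> float:
    """Convert a length in inches to millimetres."""
    return inches * MM_PER_INCH


def length_savings(reference_in: float, compact_in: float) -> tuple[float, float]:
    """Return (length saved in mm, length saved as a percent of the reference)."""
    saved_in = reference_in - compact_in
    return inches_to_mm(saved_in), 100.0 * saved_in / reference_in


if __name__ == "__main__":
    print(f"Reference: {inches_to_mm(REFERENCE_LENGTH_IN):.1f} mm")  # 266.7 mm
    print(f"Leadtek:   {inches_to_mm(LEADTEK_LENGTH_IN):.1f} mm")    # 240.0 mm
    saved_mm, saved_pct = length_savings(REFERENCE_LENGTH_IN, LEADTEK_LENGTH_IN)
    print(f"Saved:     {saved_mm:.1f} mm ({saved_pct:.0f}% shorter)")
```

That works out to roughly 27 mm, or about 10% of the card’s length, which can be the difference between clearing a hard drive cage or not.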

FEATURES AND SPECIFICATIONS

| Specification | Leadtek Winfast 9800GTX+ |
|---|---|
| Fabrication Process | 55nm (G92) |
| Core Clock (including dispatch, texture units, and ROP units) | 740 MHz |
| Shader Clock (Processor Cores) | 1836 MHz |
| Processor Cores | 128 |
| Memory Clock / Data Rate | 1100 MHz / 2200 MHz |
| Memory Interface | 256-bit |
| Memory Size | 512 MB |
| ROPs | 16 |
| Texture Filtering Units | 64 |
| HDCP Support | Yes |
| HDMI Support | Yes (using DVI-to-HDMI adapter) |
| Connectors | 2 x Dual-Link DVI-I, 1 x 7-pin HDTV Out |
| RAMDACs | 400 MHz |
| Bus Technology | PCI-Express 2.0 |
| Form Factor | Dual Slot |
| Power Connectors | 1 x 6-pin |
| Dimensions (L x H x D) | 240mm x 100mm x 32mm (9.45in x 3.93in x 1.26in) |

Features

- PCI Express 2.0 GPU Provides Support for Next Generation PC Platforms

- GPU/Memory Clock at 738/2200 MHz

- HDCP capable

- 512MB, 256-bit memory interface for smooth, realistic gaming experiences at ultra-high resolutions with AA/AF

- Supports Dual Dual-Link DVI with awe-inspiring 2560×1600 resolution

- The Ultimate Blu-ray and HD DVD Movie Experience on a Gaming PC

- Smooth playback of H.264, MPEG-2, VC-1 and WMV video, including WMV HD

- Industry leading 3-way NVIDIA SLI technology offers amazing performance

- NVIDIA® unified architecture

- GigaThread™ Technology

- High-Speed GDDR3 Memory on Board

- NVIDIA PhysX™-Ready

- Dual Dual-Link DVI

- Dual 400MHz RAMDACs

- 3-way NVIDIA SLI technology

- HDCP Capable

- NVIDIA® nView® multi-display technology

- NVIDIA® Lumenex™ Engine

- NVIDIA® Quantum Effects™ Technology

- Microsoft® DirectX® 10 Shader Model 4.0 Support

- Dual-stream Hardware Acceleration

- High dynamic-range (HDR) Rendering Support

- NVIDIA® PureVideo™ HD technology

- HybridPower Technology support

- Integrated HDTV encoder

- OpenGL® 2.1 Optimizations and Support

- NVIDIA CUDA™ Technology support

Test Setup

| Test Rig “Univac” | |
|---|---|
| Case Type | Thermaltake V9 |
| CPU | Intel Core 2 Duo E8400 @ 3.8GHz |
| Motherboard | XFX 780i SLI |
| RAM | OCZ Flex XLC DDR2 1150 @ 1200MHz |
| CPU Cooler | Zalman CNPS 9700 |
| Hard Drives | Maxtor 500GB SATA II, 2 x WD 160GB SATA II |
| GPUs | Leadtek Winfast 9800GTX+ Non-Reference |
| Display | Samsung 2433bw 24″ |
| PSU | Thermaltake Toughpower 1000 Watt Modular |
| OS | Windows Vista Ultimate x64 SP1 |

This is my sweet little POS =)

This is the test rig I used for benchmarking. Under the hood, the Leadtek 9800GTX+ appears to be the same as a reference card.

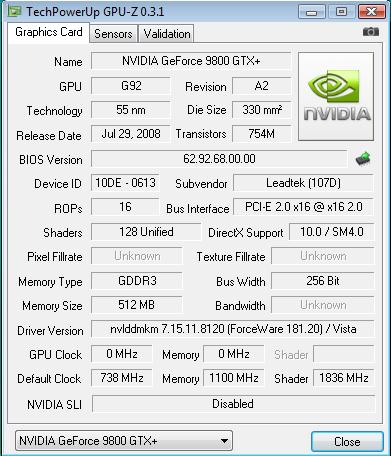

For some odd reason, GPU-Z isn’t detecting this card correctly. It doesn’t show the fillrate or bandwidth, but these should be the same as on its reference brothers.

| Card Specifications | Leadtek 9800 GTX+ |
|---|---|
| Fabrication Process | 55nm |
| Core Clock Rate | 740 MHz |
| SP Clock Rate | 1,836 MHz |
| Streaming Processors | 128 |
| Memory Type | GDDR3 |
| Memory Clock | 1,100 MHz (2,200 MHz effective) |
| Memory Interface | 256-bit |
| Memory Bandwidth | 70.4 GB/s |
| Memory Size | 512 MB |
| ROPs | 16 |
| Texture Filtering Units | 64 |
| Texture Filtering Rate | 47.4 GigaTexels/sec |
| RAMDACs | 400 MHz |
| Bus Type | PCI-E 2.0 |
| Power Connectors | 1 x 6-pin |

I included a table of the specs for people who prefer tables to text. I also included the memory bandwidth and fillrate as they would normally be on a reference, stock-clocked 9800GTX+, for comparison.
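Since GPU-Z couldn’t report these two numbers, here is how they follow from the clocks in the table: memory bandwidth is the bus width in bytes times the effective memory data rate, and the texture filtering rate is the number of texture filtering units times the core clock. A minimal Python sketch of that arithmetic using the table’s values (the function names are illustrative, not from GPU-Z or any other tool):

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_rate_mhz: float) -> float:
    """Peak memory bandwidth in GB/s (decimal: 10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8          # 256-bit bus -> 32 bytes
    transfers_per_second = effective_rate_mhz * 1e6  # 2,200 MHz effective GDDR3
    return bytes_per_transfer * transfers_per_second / 1e9


def texture_rate_gtexels_s(texture_units: int, core_clock_mhz: float) -> float:
    """Peak texture filtering rate in GigaTexels/s: one texel per unit per clock."""
    return texture_units * core_clock_mhz * 1e6 / 1e9


if __name__ == "__main__":
    print(f"{memory_bandwidth_gb_s(256, 2200):.1f} GB/s")          # 70.4 GB/s
    print(f"{texture_rate_gtexels_s(64, 740):.1f} GigaTexels/sec")  # 47.4 GigaTexels/sec
```

Note that the feature list quotes a 738 MHz core clock rather than 740 MHz; plugging 738 into the same formula gives about 47.2 GigaTexels/sec, so the small gap between the two figures comes entirely from that clock difference.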

Let’s get on to testing.

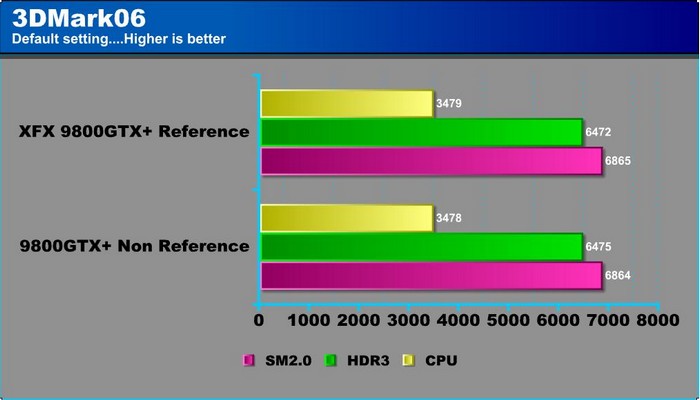

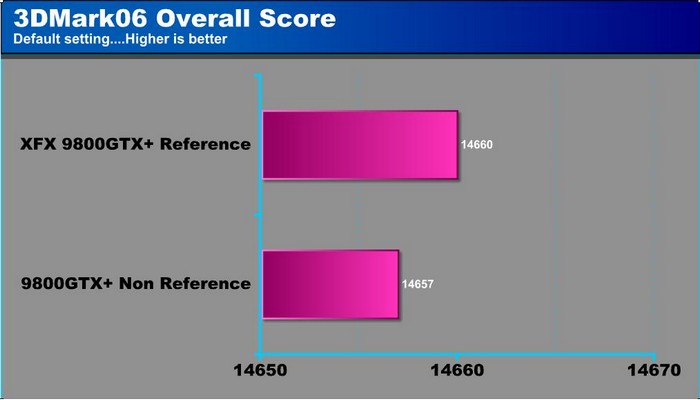

3DMARK06 V. 1.1.0

Here we have 3DMark06; we will look at its newer brother, Vantage, later on. This is the norm for graphics benchmarking. I’d say the card yields pretty normal results, just like any other stock-clocked 9800GTX+. 3DMark06 is getting a bit dated, though, so it doesn’t stress newer cards like it used to. Let’s continue on to Vantage.

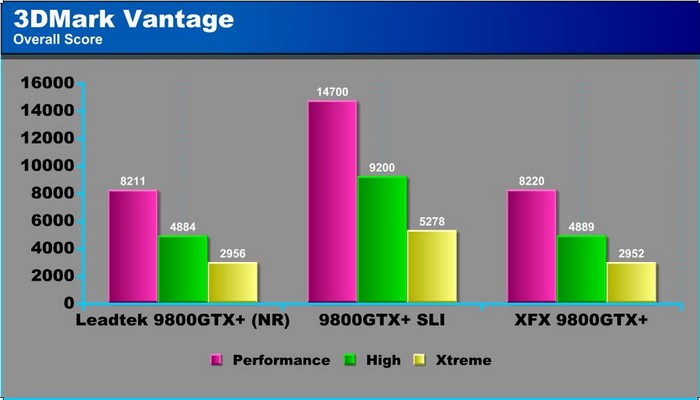

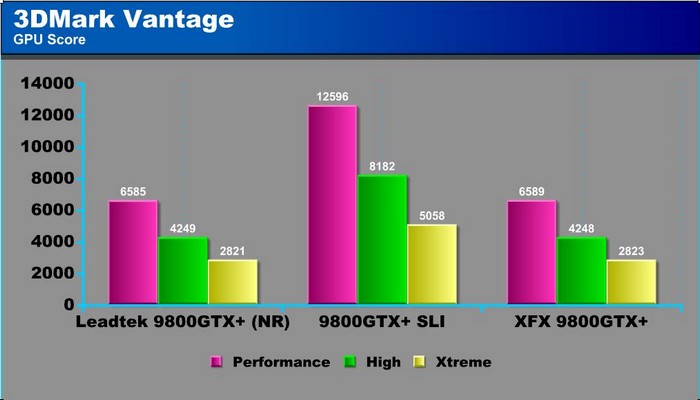

3DMark Vantage

3DMark Vantage is the newest video benchmark from the gang at Futuremark. It is still a synthetic benchmark, but one that more closely reflects real-world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently, there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, which puts ATI cards at a disadvantage. With the PhysX driver installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. The Driver Approval Policy for 3DMark Vantage states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations, or are they perfectly within their rights since they own Ageia and all its IP? I think this point will quickly become moot once Futuremark releases an update to the test.

Vantage tends to stress the 9800 series a bit, and you can definitely see this in the Extreme testing. I recommend SLI or CrossFire for anybody playing at high resolutions. Again, though, these are typical results for a stock-clocked 9800GTX+, and compared to the XFX counterpart they are pretty much the same.

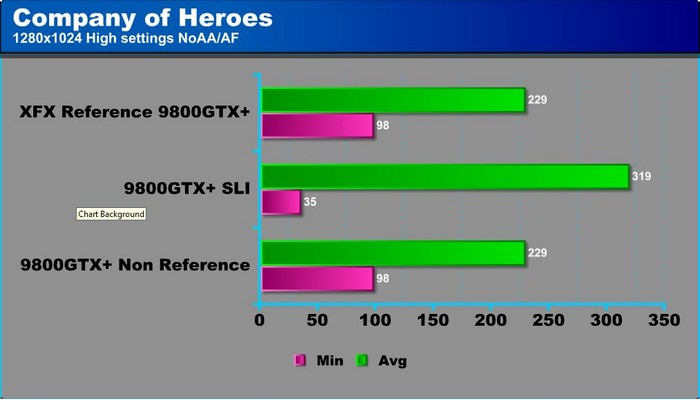

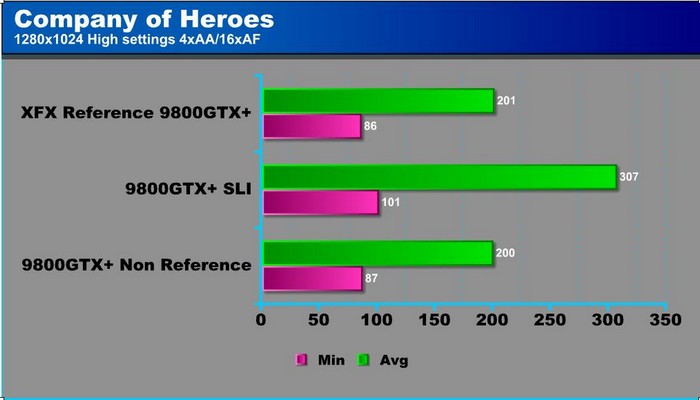

Company of Heroes v. 1.71

Ain’t no big thing here. With a decent processor, this game handles any of the lower resolutions easily. These cards don’t really start to stretch their legs until you give them higher resolutions to play with.

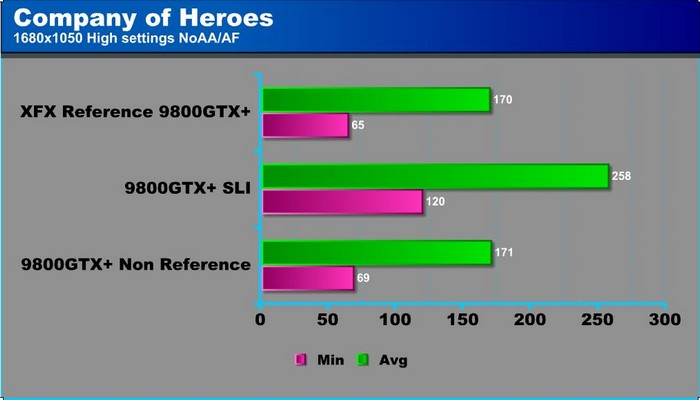

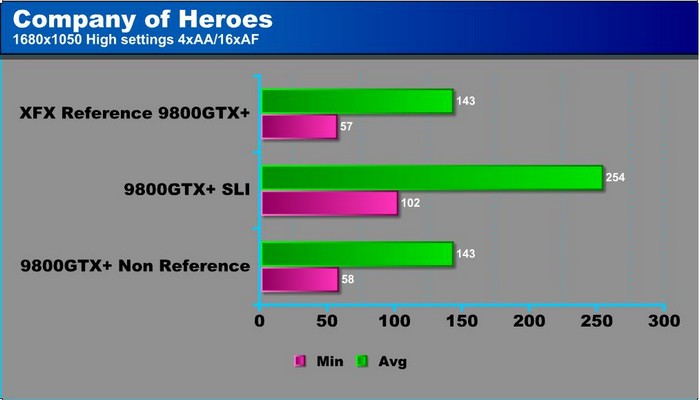

Like I was saying, these cards need higher resolutions to be put to proper use. The Leadtek 9800GTX+ holds its own in this game, and SLI performance is starting to even out a bit.

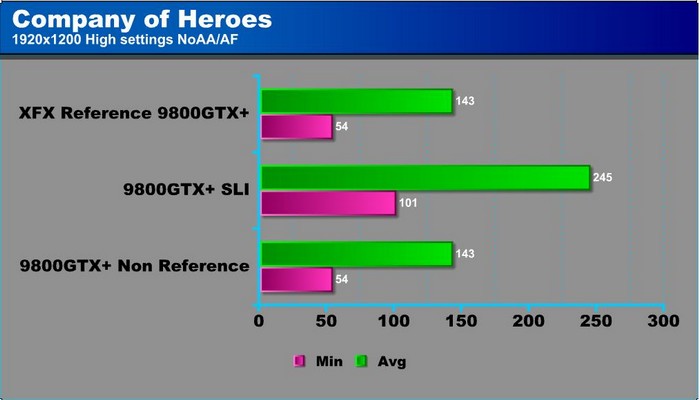

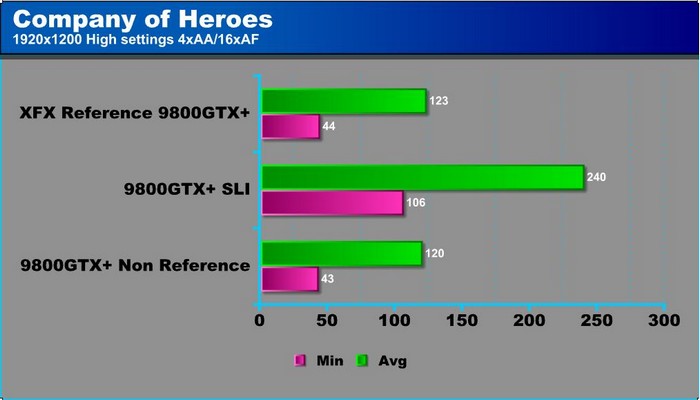

Even at the top resolution, these cards keep chugging along like nothing is going on. For a stock-clocked single card that is getting a bit aged, the Leadtek 9800GTX+ handles itself quite nicely.

Moving on to some anti-aliasing action, these cards still eat this game up at this resolution. Keep in mind, though, that lower resolutions are mainly CPU limited. So if you have a good CPU and game at 1280×1024, you should be fine with almost anything.
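Why do lower resolutions end up CPU limited? A common simplification (my own illustration, not something measured in this review) is that the frame rate is capped by whichever of the CPU or GPU is slower, and that the GPU’s workload grows roughly with the number of pixels rendered. The Python sketch below models exactly that; `cpu_fps_cap` and `gpu_fps_at_base` are hypothetical inputs, and the middle resolution is just an example step, not one of the tested settings.

```python
from __future__ import annotations


def pixels(width: int, height: int) -> int:
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


def estimated_fps(cpu_fps_cap: float, gpu_fps_at_base: float,
                  base_res: tuple[int, int], target_res: tuple[int, int]) -> float:
    """Rough frame-rate estimate under a simple bottleneck model.

    Assumes (simplification) that GPU throughput scales inversely with
    pixel count and that the CPU cap does not depend on resolution.
    """
    scale = pixels(*base_res) / pixels(*target_res)
    gpu_fps = gpu_fps_at_base * scale
    # Whichever component is slower sets the frame rate.
    return min(cpu_fps_cap, gpu_fps)


if __name__ == "__main__":
    # Hypothetical inputs chosen only to illustrate the model.
    cpu_cap = 100.0      # frames/s the CPU can prepare at any resolution
    gpu_at_1280 = 150.0  # frames/s the GPU could render at 1280x1024
    for res in [(1280, 1024), (1680, 1050), (1920, 1200)]:
        fps = estimated_fps(cpu_cap, gpu_at_1280, (1280, 1024), res)
        print(f"{res[0]}x{res[1]}: ~{fps:.0f} FPS")
```

With these made-up inputs, the two lower resolutions both sit at the CPU’s 100 FPS cap, while 1920×1200 drops to about 85 FPS because the GPU becomes the bottleneck. Real games don’t scale this cleanly, but it shows why a faster card changes little at 1280×1024.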

As we move up a bit on the resolution chain, we start to see the single Leadtek 9800GTX+ drop in frames and the SLI setup actually doing its job. High five to Nvidia for starting to get SLI to work properly… in certain games, at least.

At max resolution and max eye candy, the Leadtek 9800GTX+ still delivers frame rates good enough to easily play this game. Paired with a second 9800GTX+, it handles this game with outstanding performance.

Moving on to a new game!

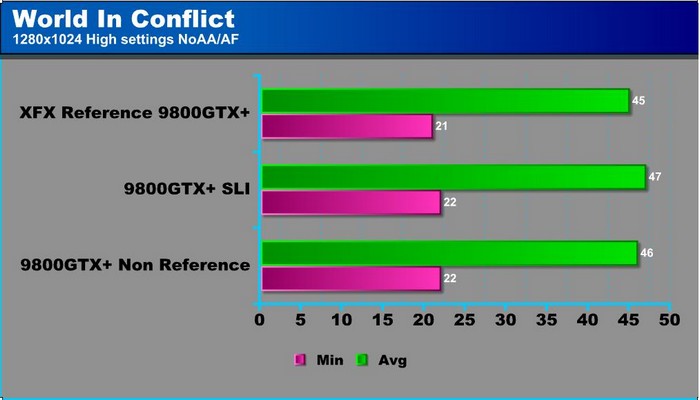

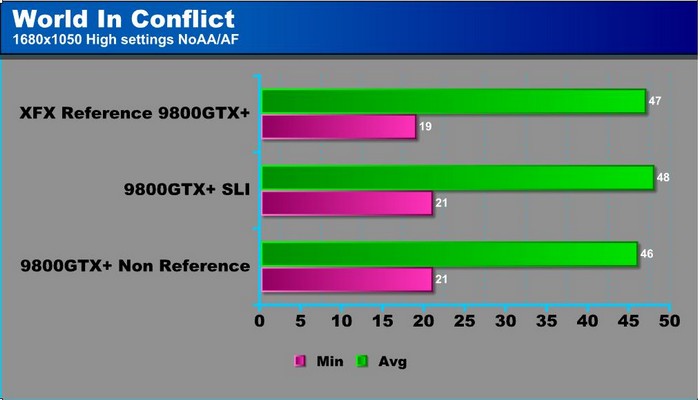

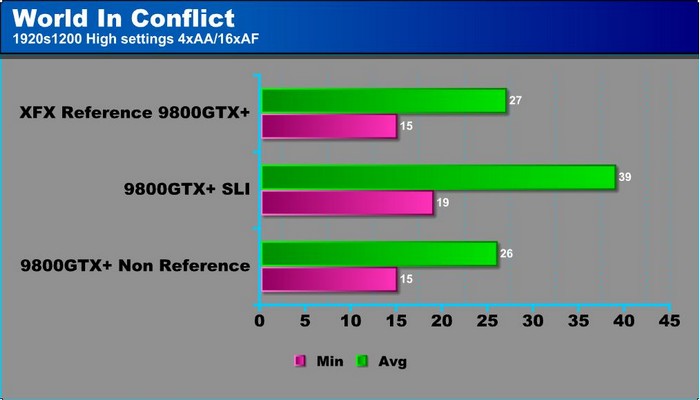

World in Conflict Demo

World in Conflict seems to be one of those games that just doesn’t give up, no matter how good your hardware is. It’s sort of the Crysis of the RTS world. With outstanding graphics, it still taxes even top machines. As my little chart shows, it’s still playable with High settings, and I have the DirectX 10 enhanced graphics turned on as well.

As we crank up the resolution a notch, performance stays roughly the same. That’s not bad at all.

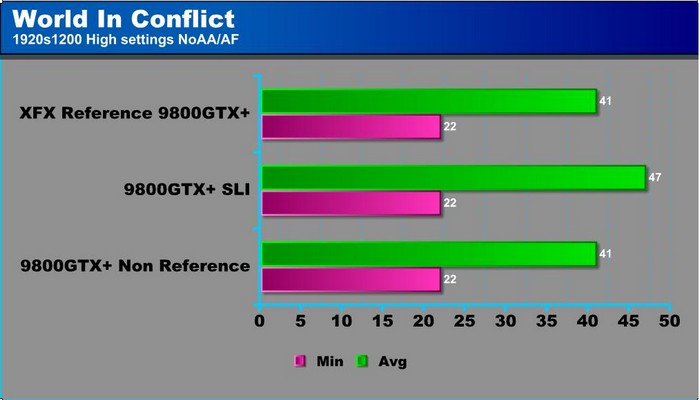

And at max resolution the numbers are still coming out pretty much the same. That is quite phenomenal, to say the least, but let’s see how it fares with extra eye candy.

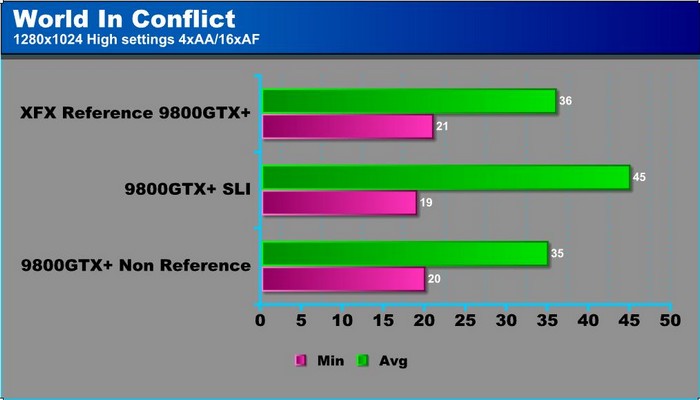

As we add the extra eye candy that we all love to hate, anti-aliasing, the numbers start to drop, which is expected. An average of 35 FPS is quite playable, but kind of low for this resolution. Remember, though, that this is a CPU-bound game.

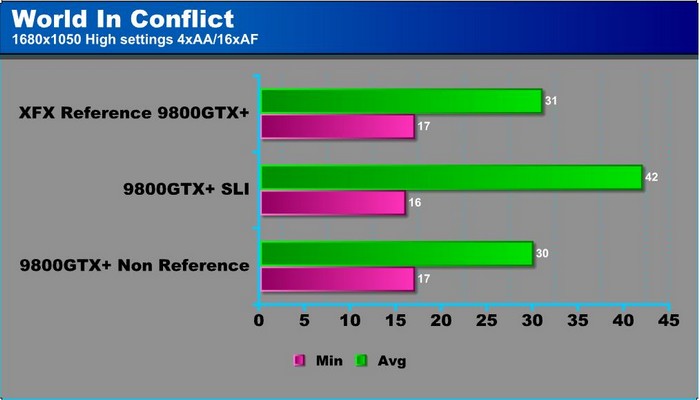

The minimum here on the Leadtek 9800GTX+ dips below what we consider playable, but I noticed this only happened once, when everything was exploding at center screen. If you don’t have the game zoomed all the way in, this shouldn’t be a problem at all.

At this resolution with anti-aliasing, the single Leadtek 9800GTX+ falls below what we consider playable. SLI isn’t doing so well either, but I would be willing to bet that is a scaling issue.

Let’s move on to the anticipated Crysis.

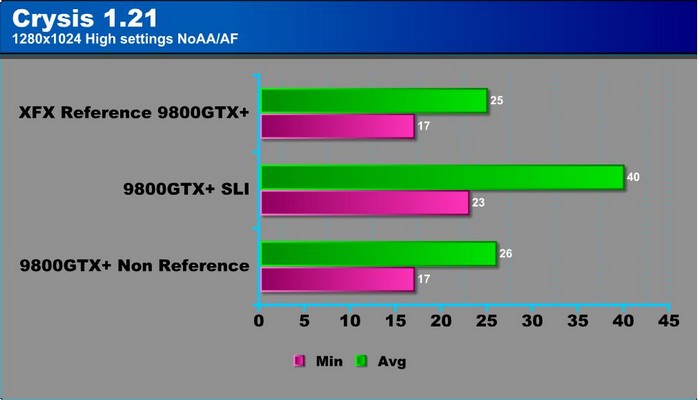

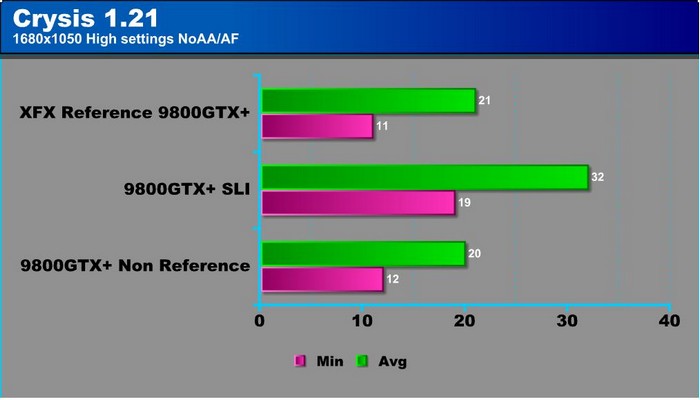

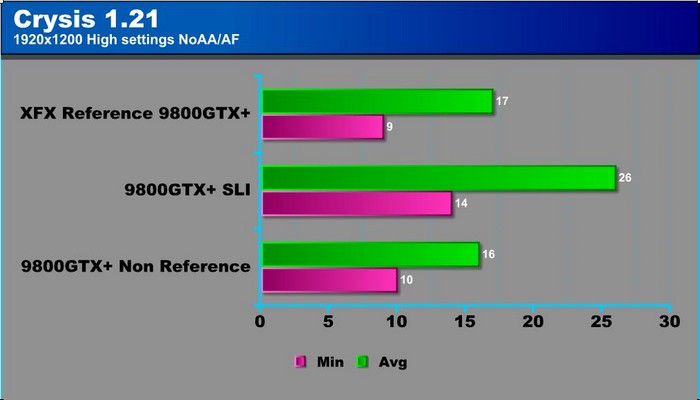

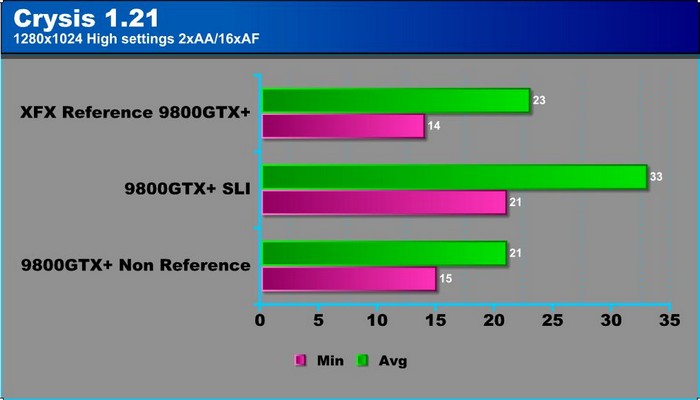

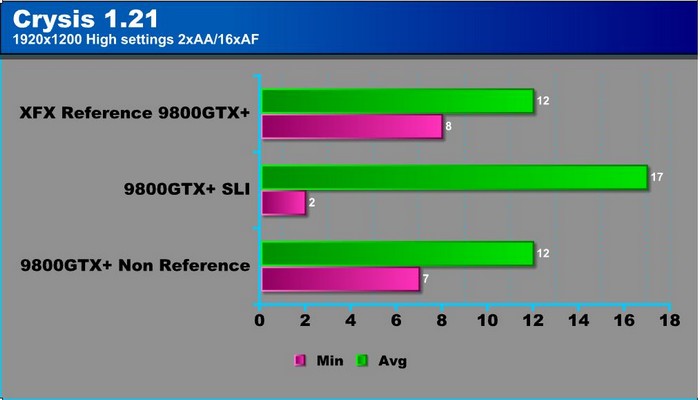

Crysis v. 1.21

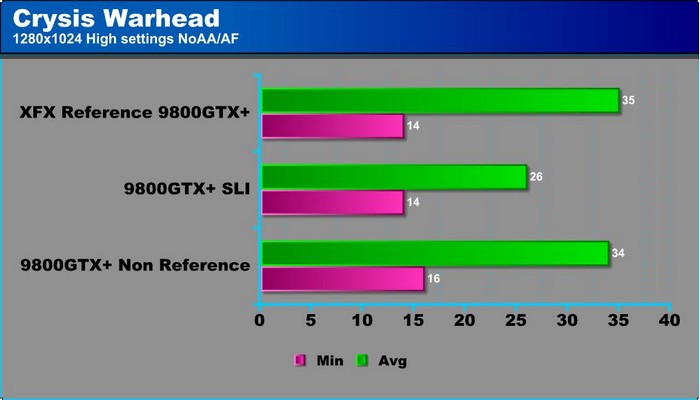

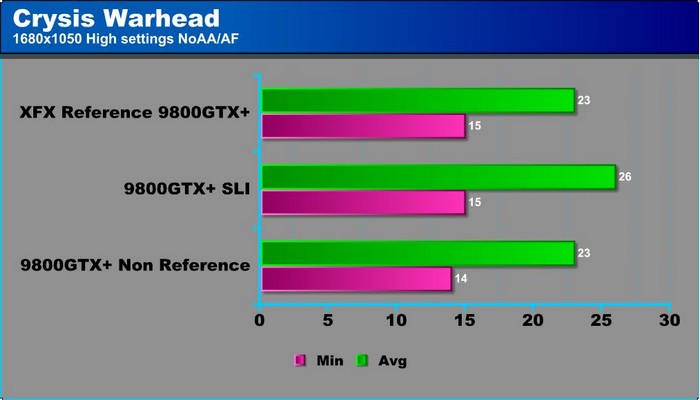

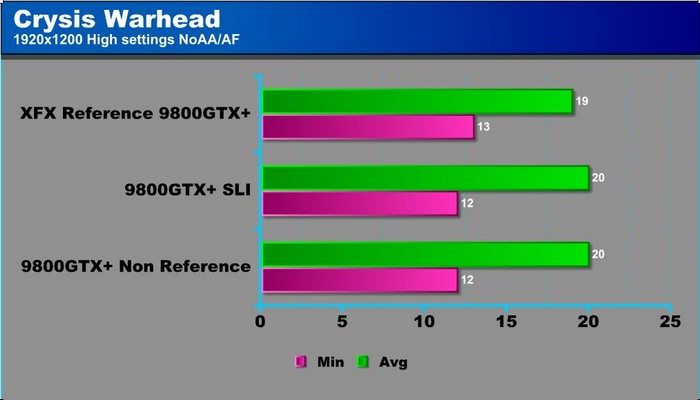

CRYSIS WARHEAD

Crysis Warhead is the follow-up to Crysis, featuring an updated CryENGINE™ 2 with better optimization. It was one of the most anticipated titles of 2008.

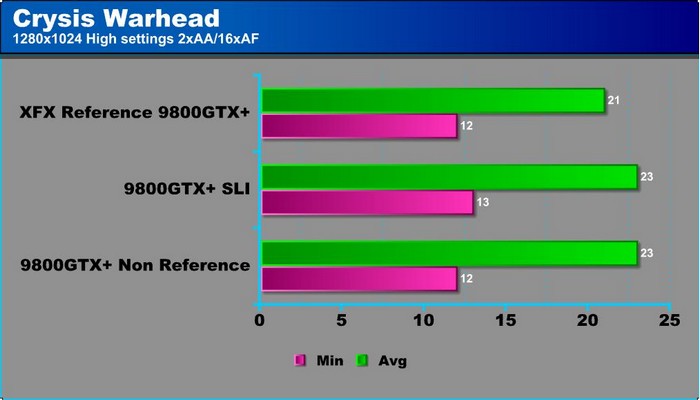

Before we look at the charts, I have to mention that I got some weird numbers while benchmarking this game. Even though this is supposed to be the “optimized” version of our “beloved” Crysis, I firmly believe that performance is worse, and some setups behave strangely. Take a look and see what I mean.

As we can see, performance is noticeably worse than in the original Crysis, and SLI scaling seems a lot worse too. I didn’t trust the benchmark utility at first, so I ran in-game tests myself to check these numbers, and they are, in fact, correct.

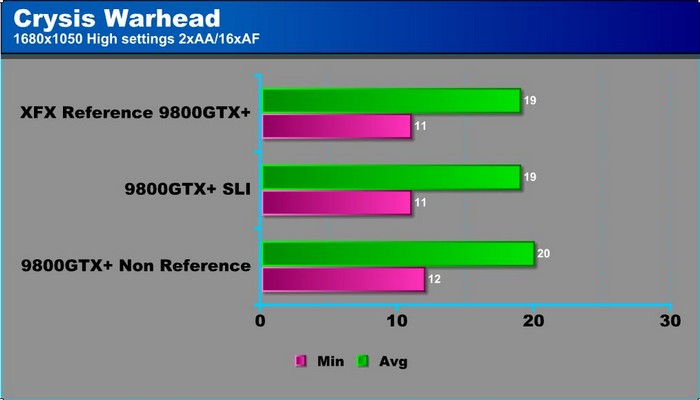

As we can see, once the GPU has to do more of the work, the readings start to look more normal, but still not quite what we would expect. Again, I tested both in game and with the benchmark utility, and both came out the same.

It seems that SLI scaling is just as bad, if not worse, in Warhead than it is in Crysis. Both the single Leadtek and the SLI 9800GTX+ setups are putting out unacceptable frame rates at this resolution.
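“SLI scaling” here means how much of the second card’s potential actually shows up in the frame rate. One simple way to put a number on it is to divide the SLI frame rate by the single-card frame rate: perfect scaling with two cards would be 2.0x, and anything near 1.0x means the second card is barely helping. A small Python helper for that calculation; the frame rates in the example are hypothetical placeholders, not readings from this review’s charts.

```python
from __future__ import annotations


def sli_scaling(single_fps: float, sli_fps: float,
                num_cards: int = 2) -> tuple[float, float]:
    """Return (speedup, efficiency %) of a multi-GPU setup vs. one card.

    speedup    = sli_fps / single_fps (ideal for two cards: 2.0)
    efficiency = share of the ideal extra performance actually realized
    """
    if single_fps <= 0:
        raise ValueError("single_fps must be positive")
    if num_cards < 2:
        raise ValueError("num_cards must be at least 2")
    speedup = sli_fps / single_fps
    efficiency = 100.0 * (speedup - 1.0) / (num_cards - 1)
    return speedup, efficiency


if __name__ == "__main__":
    # Hypothetical frame rates for illustration only.
    speedup, eff = sli_scaling(single_fps=30.0, sli_fps=36.0)
    print(f"SLI speedup: {speedup:.2f}x, scaling efficiency: {eff:.0f}%")
```

With those made-up numbers, a 1.20x speedup means only 20% of the second card’s potential is being used. A negative efficiency would mean SLI is actually slower than a single card.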

When we mix anti-aliasing into the picture, things seem to get drastically worse on the performance side.

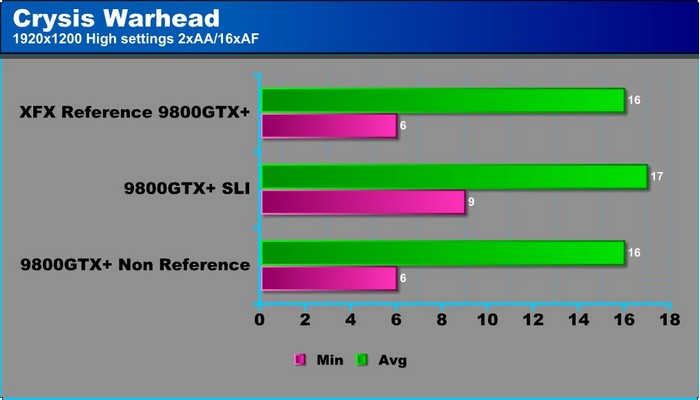

At a higher resolution you would expect SLI scaling to work the way it is supposed to, but the numbers are actually a little worse. Odd, huh?

At max resolution and max bling, yet again, this rehash of Crysis is not playable on either the SLI or the single Leadtek 9800GTX+. I also tested on a similar AMD rig, and the numbers came out about the same. Hopefully, we see better numbers in Far Cry 2.

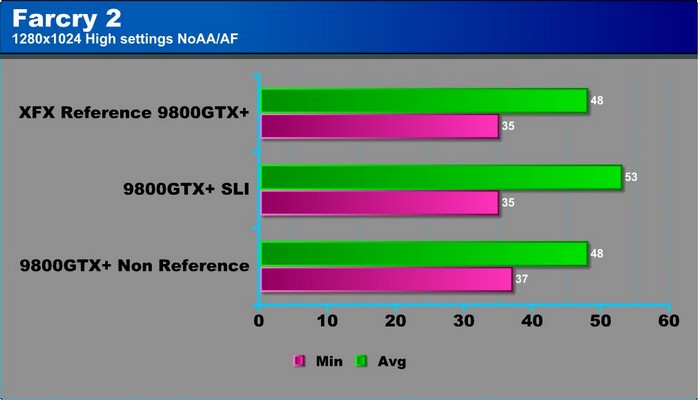

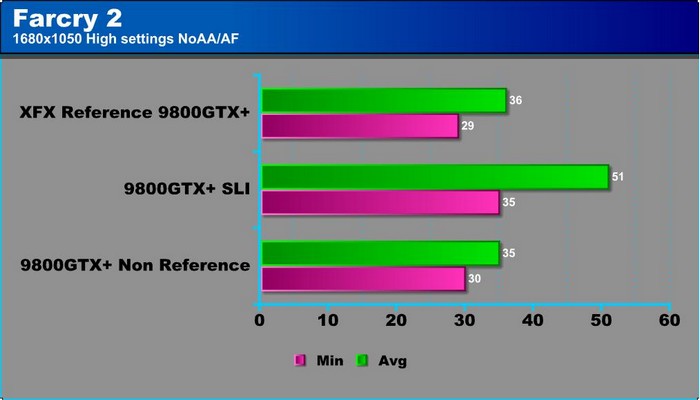

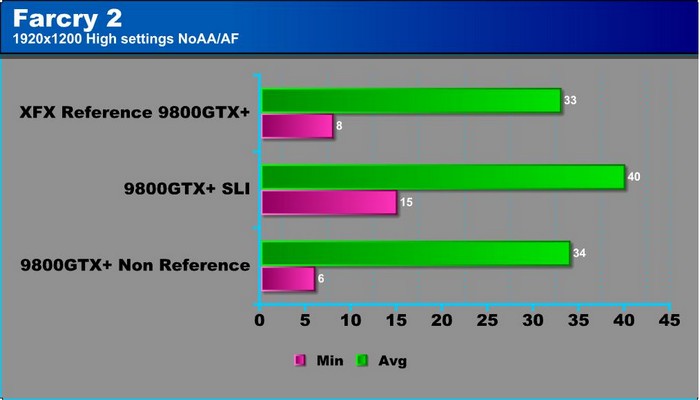

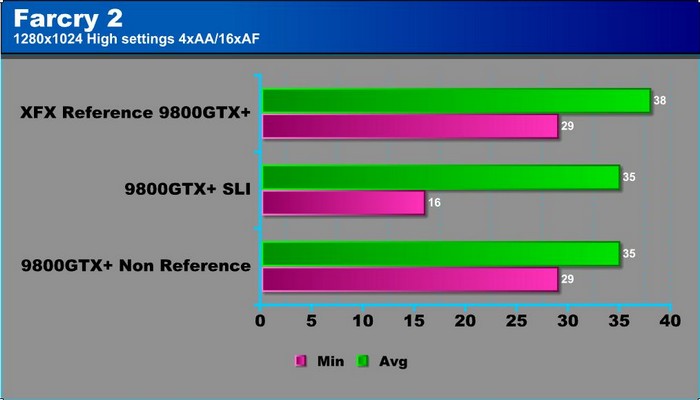

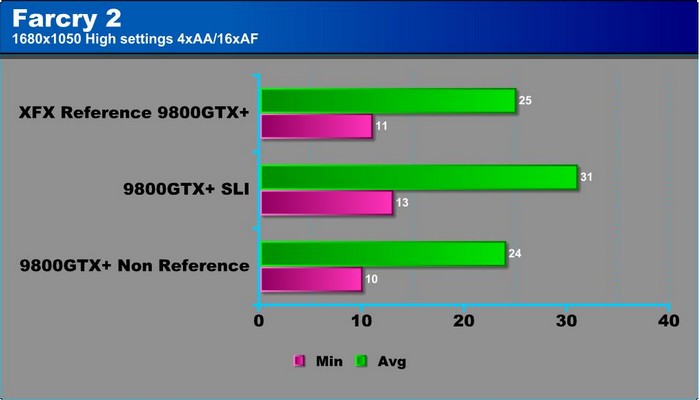

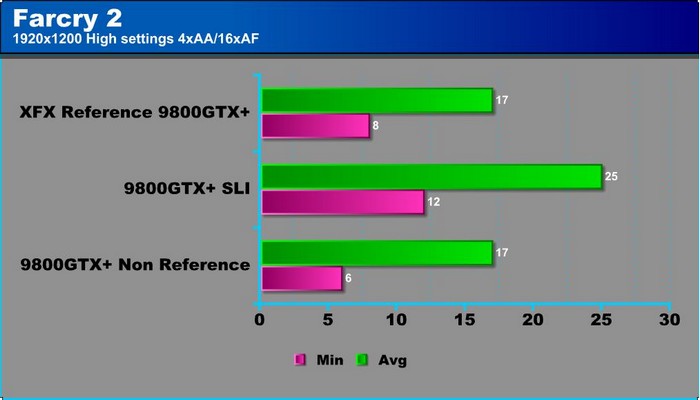

Far Cry 2

TEMPERATURES

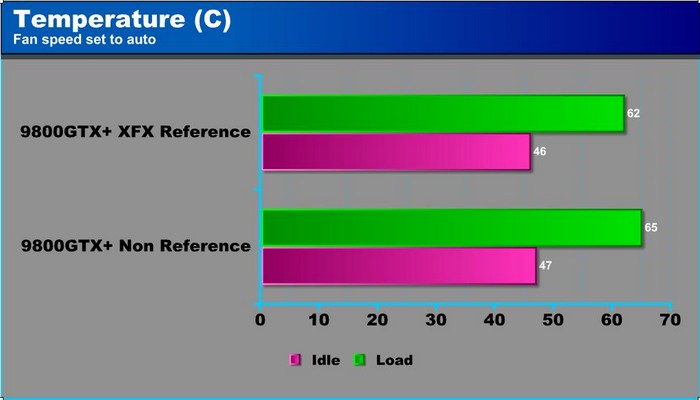

To get the load temperature, we looped 3DMark Vantage for 30 minutes. For the idle temperature, we let the machine sit at the desktop for 30 minutes with no background tasks that would drive the temperature up.

My ambient temperature is pretty much always around 63°F to 65°F (about 17°C to 18°C), so your temps may vary if your house is warmer or your airflow isn’t as good.

As we can see, the extra real estate of the reference cooler gives it an advantage in cooling, but the difference is small, and I never had any problems leaving the fan settings on auto. I used eVGA Precision, Everest, and GPU-Z to check temperature readings, and they all agreed. Overall, even with its smaller cooler, the Leadtek card runs at nearly the same temperatures. On auto fan settings, the Zalman fan on my CPU heatsink is much louder than both GPU fans combined. The only time you can hear the GPU fans is when the cards heat up and the fans ramp up to 100%, and if your room is fairly cool like mine, that almost never happens.

Conclusion

Even though it isn’t a reference design, this card still kicks it with the rest. Leadtek took a good card and made it better by refining it, making it smaller, and having it need only one PCI-E power connector. Performance-wise, it holds its ground in today’s top games, and it can fit in any mid-size case. The cooler, even though it looks like it was lifted off an older 8800GTS 512MB, does its job quite well and is quiet at reasonable fan speeds.

Today, though, the 9800GTX+ is kind of dated and aimed more at the mainstream crowd. Even though needing only one power connector per card makes it a natural fit for SLI, I feel it would be better to go the GTX260 route than to buy a second card for SLI. Normally, this is where we would say “just overclock the thing,” but there’s a catch.

The catch is that, because it takes only one power connector, this card can’t really be overclocked. RivaTuner doesn’t detect the card’s clock speeds, and the eVGA Precision tool doesn’t yield any overclocking results. Being the hardcore, on-the-edge kind of guy that I am, I even tried to flash the BIOS with a minor overclock, and the results were, well… not good. It seems that without the extra power, only the stock clock speeds are sustainable, so the compromise for a smaller, more manageable 9800GTX+ is no overclocking. That makes this card, which still performs well, is fairly cheap today, and is small, a better fit for the casual gamer: the kind of guy who doesn’t have an uber full tower case and GTX280s. On a positive note, it would also make a great dedicated PhysX card!
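Some context for the power argument: under the PCI Express specifications, a graphics card may draw up to 75 W from the x16 slot, 75 W from each 6-pin connector, and 150 W from each 8-pin connector. The reference 9800GTX+ has two 6-pin connectors, while the Leadtek has one. The Python sketch below just does that arithmetic; the helper function is hypothetical, and whether this ceiling is what actually blocks overclocking on this card remains my interpretation rather than something I measured.

```python
# Power limits defined by the PCI Express specifications (watts).
SLOT_W = 75        # PCIe x16 graphics slot
SIX_PIN_W = 75     # each 6-pin PCIe power connector
EIGHT_PIN_W = 150  # each 8-pin PCIe power connector


def board_power_budget(six_pin: int = 0, eight_pin: int = 0) -> int:
    """Maximum in-spec board power, in watts, for a given connector layout."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


if __name__ == "__main__":
    leadtek = board_power_budget(six_pin=1)    # 150 W
    reference = board_power_budget(six_pin=2)  # 225 W
    print(f"Leadtek (1 x 6-pin):   {leadtek} W")
    print(f"Reference (2 x 6-pin): {reference} W")
    print(f"Headroom lost:         {reference - leadtek} W")
```

So the Leadtek card has to stay within 150 W to remain in spec, 75 W less than the reference layout allows, which leaves far less room for the extra power an overclock would pull.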

As a cheap, reliable card, though, I would recommend the non-reference Leadtek 9800GTX+ to any casual gamer with a smaller mid tower case.

Pros:

+ Runs cool

+ Able to run SLI with other 9800GTX+ cards

+ Only 9.45″ long

+ Uses only one PCI-E power connector

+ Cheap and still holds its ground in performance

+ Three-year warranty

Cons:

– No overclocking ability

– The SLI connectors are positioned slightly differently from the standard reference design

With all this in mind, the Non-Reference Leadtek 9800GTX+ will receive a score of:

7.5 (Very Good) out of 10

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
