The EVGA GTX-280 has arrived at Bjorn3D, and not only has it arrived, it arrived big. We’re starting with an EVGA GTX-280 SLI review, and later on you’ll see them in Triple SLI!

INTRODUCTION

We’ve spent several weeks with the EVGA GTX-280s we received some time ago. They’ve been productive weeks, full of testing, playing, and living with the EVGA GTX-280 in single-card and SLI configurations. While we still have a lot of testing to do, we decided to go ahead with a single-card and SLI review. We’re saving the Triple SLI review for a week or two, and then we’ll break it out with some of the hottest titles of the season in what’s sure to be an SLI vs. Crossfire extravaganza of epic proportions.

We’ve got to tell you that there’s an almost indescribable feeling you get when you hold one of these monsters in your hand. You know you are holding the fastest, most powerful single-GPU video card in the world, and it’s straining to get into a machine and drive graphics like you’ve never seen before.

With the introduction of the new Nvidia 180.xx drivers offering better SLI support, we felt it important to review the beast in not only our normal fashion, but in SLI as well. In honor of its debut we spent days testing it and getting a feel for the “King of Single GPU Cards”. We have to tell you, the King still rules.

About EVGA

Warranty & Support:

In the US, EVGA offers a 10-year warranty (effectively a lifetime warranty). You can find full details of the warranty program on the EVGA home page, but remember that the 10-year warranty only applies if you register the card within thirty days of purchase. This is standard practice among the board partners that offer extended warranties. In addition, EVGA has a lively message board where you can ask EVGA representatives anything you’d like to know before or after purchasing an EVGA product.

One thing that sets EVGA above other NVIDIA partners is its support program. When you purchase an EVGA video card, the company gives you the chance to step up to something better (should Nvidia release a new line of graphics cards) in the first 90 days after the initial purchase. In order to qualify for this, you must purchase your EVGA video card from an authorized reseller – purchasing a card from eBay or another auction site does not qualify you for the Step-Up program.

Provided you meet EVGA’s very reasonable terms and conditions, the full amount you paid is knocked off the cost of the card you’re upgrading to. Obviously, you can’t keep stepping up to something faster: EVGA allows one Step-Up per video card purchase, and the Step-Up itself doesn’t count as a new purchase.

FEATURES

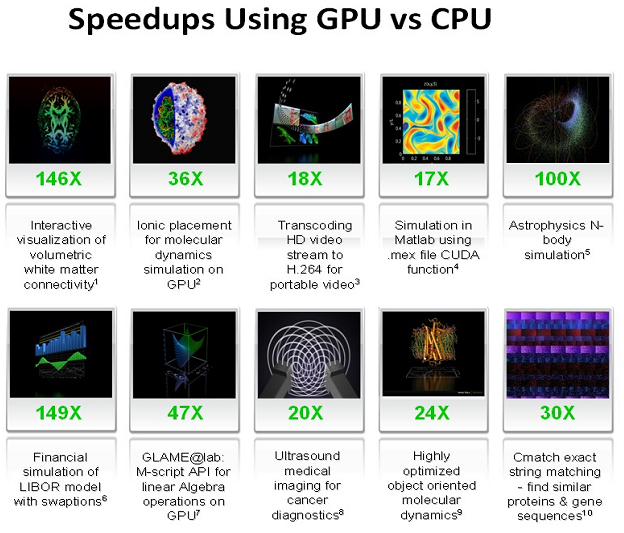

In past generations of GPUs, real-time images that appeared true-to-life could be delivered, but in many cases they would cause frame rates to drop to unplayable levels in complex scenes. Thanks to the sheer increase in shader processing power of the GeForce GTX 200 GPUs and NVIDIA’s acquisition of the PhysX technology, many new features can now be offered without causing horrific slowdowns. Some of these features include:

- Convincing facial character animation

- Multiple ultra-high polygon characters in complex environments

- Advanced volumetric effects (smoke, fog, mist, etc.)

- Fluid and cloth simulation

- Fully simulated physical effects, e.g. live debris, explosions, and fires

- Physical weather effects, e.g. accumulating snow and water, sandstorms, overheating, freezing, and more

- Better lighting for dramatic and spectacular effect, including ambient occlusion, global illumination, soft shadows, indirect lighting, and accurate reflections

Extreme HD

Early adopters of new standards will be pleased to hear that the GTX 200 GPUs include support for the new DisplayPort interface. Allowing up to 2560 x 1600 resolution with 10-bit color depth, the GTX 200 GPUs offer unprecedented quality. While prior-generation GPUs included internal 10-bit processing, they could only output 8-bit component colors (RGB). The GeForce GTX 200 GPUs permit both 10-bit internal processing and 10-bit color output.
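To put the 8-bit versus 10-bit difference in perspective, each extra bit per channel doubles the number of levels that channel can represent. The short Python sketch below simply does that arithmetic for three-channel RGB output; it says nothing about how the GPU or display processes color internally.

```python
def levels_per_channel(bits: int) -> int:
    # Number of distinct intensity levels a single channel can encode.
    return 2 ** bits


def total_rgb_colors(bits: int) -> int:
    # Three independent channels (R, G, B), each with 2**bits levels.
    return levels_per_channel(bits) ** 3


for bits in (8, 10):
    print(f"{bits}-bit: {levels_per_channel(bits):,} levels per channel, "
          f"{total_rgb_colors(bits):,} colors")
```

Going from 8 to 10 bits takes each channel from 256 to 1,024 levels, and the total palette from about 16.7 million to about 1.07 billion colors.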

GeForce GTX 200 GPU Architecture

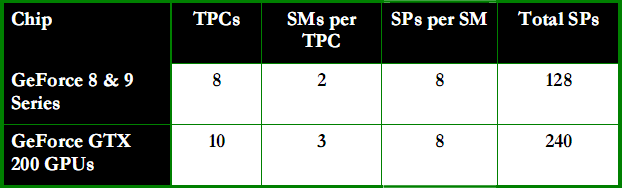

The GeForce 8 and 9 Series GPUs were the first to be based on a Scalable Processor Array (SPA) framework. With the GTX 200 GPU, NVIDIA has upped the ante in a big way. The second-generation architecture is based on a re-engineered, enhanced and extended SPA architecture.

The SPA architecture consists of a number of TPCs (Texture Processing Clusters in graphics processing mode and Thread Processing Clusters in parallel computing mode). Each TPC is made up of a number of streaming multiprocessors (SMs), and each SM contains eight processor cores, also called streaming processors (SPs) or thread processors. Every SM also includes texture filtering processors used in graphics processing, which can also be used for various filtering operations in computing mode, such as filtering images as they are zoomed in and out.

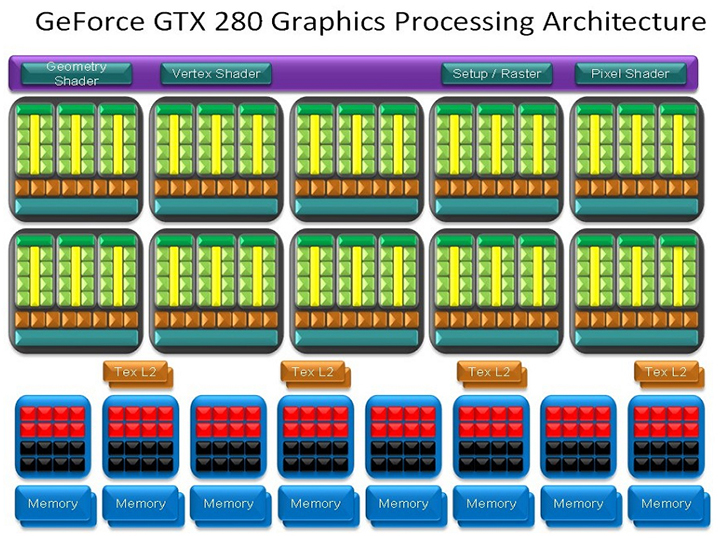

As mentioned earlier, the GeForce GTX 200 GPUs include two different architectural personalities: graphics and computing. The image below represents the GeForce GTX 280 in graphics mode. At the top is the thread dispatch logic, in addition to the setup and raster units. The ten TPCs each include three SMs, and each SM has eight processing cores, for a total of 240 scalar processing cores. ROP (raster operations processor) and memory interface units are located at the bottom.
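The 240-core figure falls straight out of the TPC → SM → SP hierarchy described above. The Python sketch below multiplies it out. The GTX 260 line assumes eight of the ten TPCs are enabled on that part; that assumption is ours, though it is consistent with the 192 streaming processors listed in the specification table.

```python
SMS_PER_TPC = 3   # streaming multiprocessors per Texture/Thread Processing Cluster
SPS_PER_SM = 8    # scalar streaming processors (cores) per SM


def streaming_processors(tpcs: int) -> int:
    """Total scalar cores for a GTX 200 part with `tpcs` clusters enabled."""
    return tpcs * SMS_PER_TPC * SPS_PER_SM


print(streaming_processors(10))  # GTX 280, all ten TPCs
print(streaming_processors(8))   # GTX 260, assuming eight TPCs enabled
```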

GeForce GTX 280 Graphics Processing Architecture

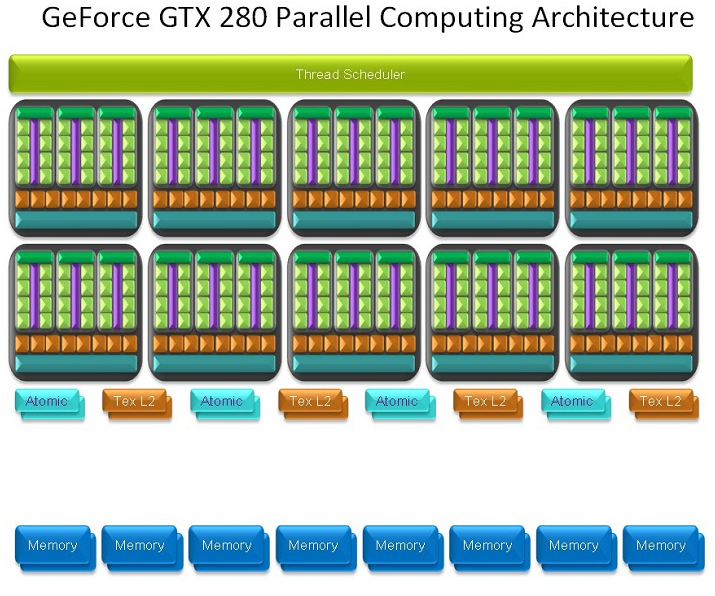

The next image depicts a high-level view of the GeForce GTX 280 GPU in parallel computing mode. A hardware-based thread scheduler at the top manages scheduling threads across the TPCs. In compute mode, the architecture includes texture caches and memory interface units. The texture caches are used to combine memory accesses for more efficient and higher bandwidth memory read-write operations.

GeForce GTX 280 Computing Architecture

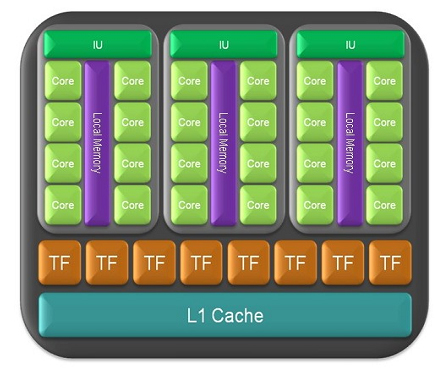

Here is a close-up of a TPC in compute mode. Note the shared local memory in each of the three SMs. This allows the eight processing cores of an SM to share data with the other processing cores in the same SM without having to read from or write to an external memory subsystem, which greatly increases computational speed and efficiency for a variety of algorithms.

TPC in compute mode

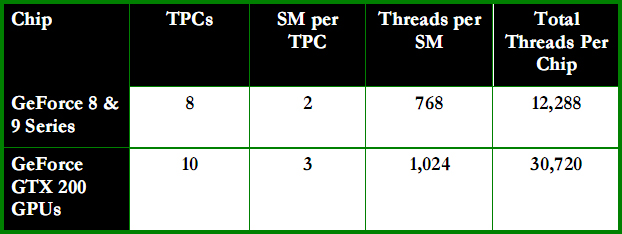

In addition to all of the aforementioned architectural changes and upgrades, the GeForce GTX 200 GPUs can support over thirty thousand threads in flight. Through hardware thread scheduling, all processing cores attain nearly 100% utilization. Additionally, the GPU architecture is latency-tolerant. This means if a particular thread is waiting for memory access, the GPU can perform zero-cost hardware-based context switching and immediately switch to another thread to process.
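The “over thirty thousand threads” figure follows from the SM count. The Python sketch below assumes a limit of 1,024 resident threads per SM (the CUDA compute capability 1.3 limit for this GPU generation) and 32-thread warps; both numbers are our assumptions, not figures stated in this review.

```python
TPCS = 10
SMS_PER_TPC = 3
MAX_THREADS_PER_SM = 1024  # assumption: CUDA compute capability 1.3 residency limit
WARP_SIZE = 32             # assumption: threads scheduled together as one warp

sms = TPCS * SMS_PER_TPC
threads_in_flight = sms * MAX_THREADS_PER_SM
warps_in_flight = threads_in_flight // WARP_SIZE

print(f"{sms} SMs -> {threads_in_flight:,} threads ({warps_in_flight} warps) in flight")
```

Having that many threads resident is what makes the zero-cost context switching useful: when one warp stalls on memory, the scheduler has plenty of others ready to run.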

Thread count

Double Precision Support

A long-requested addition has finally made its way into the new GTX 200 GPUs: double-precision, 64-bit floating point computation support. Gamers won’t find much use for this addition, but high-end scientific, engineering, and financial computing applications that require very high accuracy will put it to good use. Each SM (Streaming Multiprocessor) incorporates a double-precision 64-bit floating-point math unit, for a total of 30 double-precision 64-bit processing cores. The overall double-precision performance of all ten TPCs (Thread Processing Clusters) of a GeForce GTX 200 GPU is roughly equivalent to an eight-core Xeon CPU, yielding up to 90 gigaflops.
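As a rough sanity check on the double-precision figure, peak throughput is commonly estimated as units × flops per clock × clock. The Python sketch below assumes each of the 30 DP units retires one fused multiply-add (2 flops) per shader clock; that assumption is ours, not a method published in this review. At the 1,296 MHz shader clock in the specification table it gives roughly 78 GFLOPS, so under the same assumption the “up to 90 gigaflops” figure would correspond to a shader clock of about 1.5 GHz.

```python
DP_UNITS = 30            # one double-precision unit per SM, 30 SMs
FLOPS_PER_CLOCK = 2      # assumption: one fused multiply-add per unit per clock


def peak_gflops(units: int, shader_mhz: float, flops_per_clock: int = FLOPS_PER_CLOCK) -> float:
    """Theoretical peak in GFLOPS (MHz x flops / 1000)."""
    return units * flops_per_clock * shader_mhz / 1000.0


def clock_for_target_mhz(target_gflops: float, units: int,
                         flops_per_clock: int = FLOPS_PER_CLOCK) -> float:
    """Shader clock (MHz) needed to reach a given peak under the same model."""
    return target_gflops * 1000.0 / (units * flops_per_clock)


print(f"{peak_gflops(DP_UNITS, 1296):.2f} GFLOPS at 1,296 MHz")
print(f"{clock_for_target_mhz(90, DP_UNITS):.0f} MHz needed for 90 GFLOPS")
```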

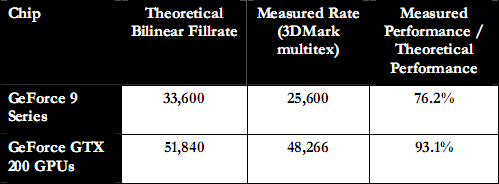

Improved Texturing Performance

The eight TPCs of the GeForce 8800 GTX allowed for 64 pixels per clock of texture filtering, 32 pixels per clock of texture addressing, 32 pixels per clock of 2x anisotropic bilinear filtering, or 32 bilinear-filtered pixels per clock. Subsequent GeForce 8 and 9 Series GPUs balance texture addressing and filtering.

- For example, the GeForce 9800 GTX can address and filter 64 pixels per clock, supporting 64 bilinear-filtered pixels per clock.

GeForce GTX 200 GPUs also provide balanced texture addressing and filtering, and each of the ten TPCs includes a dual-quad texture unit capable of addressing and filtering eight bilinear pixels per clock, four 2:1 anisotropic filtered pixels per clock, or four 16-bit floating point bilinear-filtered pixels per clock. Total bilinear texture addressing and filtering capability for an entire high-end GeForce GTX 200 GPU is 80 pixels per clock.
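The 80-pixels-per-clock total is ten TPCs × eight bilinear pixels each. Multiplying texels per clock by the core clock reproduces the texture filtering rates in the specification table, which suggests those rates assume the texture units run at the core clock; that reading is our inference. A minimal Python sketch:

```python
BILINEAR_PER_TPC = 8  # dual-quad texture unit: 8 bilinear pixels per clock per TPC


def bilinear_texels_per_clock(tpcs: int) -> int:
    return tpcs * BILINEAR_PER_TPC


def fill_rate_gtexels(texels_per_clock: int, core_mhz: float) -> float:
    # GigaTexels/sec = texels per clock x core clock (MHz) / 1000
    return texels_per_clock * core_mhz / 1000.0


# (texture filtering units, core clock in MHz) from the specification table
cards = {"GTX 280": (80, 602), "GTX 260": (64, 576), "9800 GTX": (64, 675)}

print(bilinear_texels_per_clock(10))  # ten TPCs on the GTX 280
for name, (units, mhz) in cards.items():
    print(f"{name}: {fill_rate_gtexels(units, mhz):.1f} GigaTexels/sec")
```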

The GeForce GTX 200 GPU also employs a more efficient scheduler, allowing the chip to attain close to theoretical peak performance in texture filtering. In real-world measurements, it is 22% more efficient than the GeForce 9 Series.

Texture filtering rates

Power Management Enhancements

GeForce GTX 200 GPUs include a more dynamic and flexible power management architecture than past generation NVIDIA GPUs. Four different performance / power modes are employed:

- Idle/2D power mode (approx. 25 watts)

- Blu-ray DVD playback mode (approx. 35 watts)

- Full 3D performance (236 watts worst case)

- HybridPower mode (0 watts effective)

For 3D graphics-intensive applications, the NVIDIA driver can seamlessly switch between the power modes based on the utilization of the GPU. Each of the new GeForce GTX 200 GPUs integrates utilization monitors that constantly check the amount of traffic occurring inside the GPU. Based on the level of utilization reported by these monitors, the GPU driver can dynamically set the appropriate performance mode.
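The driver’s actual switching policy isn’t described here, so the following is a purely hypothetical Python sketch of utilization-based mode selection. The `select_mode` function and its 0.2 utilization threshold are invented for illustration; only the approximate wattage figures come from the list above, and HybridPower is left out of the sketch.

```python
from enum import Enum


class PowerMode(Enum):
    # Values are the approximate board power figures quoted above (watts).
    IDLE_2D = 25
    VIDEO_PLAYBACK = 35
    FULL_3D = 236  # worst case


HYPOTHETICAL_3D_THRESHOLD = 0.2  # invented threshold, for illustration only


def select_mode(utilization: float, video_decode_active: bool = False) -> PowerMode:
    """Pick a mode from a 0.0-1.0 utilization reading (hypothetical policy)."""
    if utilization > HYPOTHETICAL_3D_THRESHOLD:
        return PowerMode.FULL_3D
    if video_decode_active:
        return PowerMode.VIDEO_PLAYBACK
    return PowerMode.IDLE_2D


print(select_mode(0.9).name, select_mode(0.05, video_decode_active=True).name)
```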

The GPU also has clock-gating circuitry, which effectively ‘shuts down’ blocks of the GPU that are not being used at a particular time (where time is measured in milliseconds), further reducing power during periods of inactivity.

This dynamic power range gives you excellent power efficiency across a full range of applications (gaming, video playback, web surfing, etc.).

SPECIFICATIONS

| NVIDIA GTX200 GPU | EVGA GTX280 | GTX 260 | 9800GTX |
|---|---|---|---|
| Fabrication Process | 65nm | 65nm | 65nm |
| Transistor Count | 1.4 Billion | 1.4 Billion | 754 Million |
| Core Clock Rate | 602 MHz | 576 MHz | 675 MHz |
| SP Clock Rate | 1,296 MHz | 1,242 MHz | 1,688 MHz |
| Streaming Processors | 240 | 192 | 128 |
| Memory Clock | 1,107 MHz (2,214 MHz) | 999 MHz (1,998 MHz) | 1,100 MHz (2,200 MHz) |
| Memory Interface | 512-bit | 448-bit | 256-bit |
| Memory Bandwidth | 141.7 GB/s | 111.9 GB/s | 70.4 GB/s |
| Memory Size | 1024 MB | 896 MB | 512 MB |
| ROPs | 32 | 28 | 16 |
| Texture Filtering Units | 80 | 64 | 64 |
| Texture Filtering Rate | 48.2 GigaTexels/sec | 36.9 GigaTexels/sec | 43.2 GigaTexels/sec |
| RAMDACs | 400 MHz | 400 MHz | 400 MHz |
| Bus Type | PCI-E 2.0 | PCI-E 2.0 | PCI-E 2.0 |
| Power Connectors | 1 x 8-pin & 1 x 6-pin | 2 x 6-pin | 2 x 6-pin |
| Max Board Power | 236 watts | 182 watts | 156 watts |
| GPU Thermal Threshold | 105°C | 105°C | 105°C |
| Recommended PSU | 550 watt (40A on 12v) | 500 watt (36A on 12v) | – |
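Two of the table’s derived figures are easy to check. Memory bandwidth is the bus width in bytes times the effective (double data rate) memory clock, and the recommended 12V current translates to watts by multiplying by 12. The Python sketch below reproduces both from the table values; it treats GB as 10^9 bytes, which is our assumption about how the bandwidth figures were computed.

```python
# (memory interface width in bits, effective memory clock in MHz), from the table
CARDS = {
    "GTX 280": (512, 2214),
    "GTX 260": (448, 1998),
    "9800 GTX": (256, 2200),
}


def bandwidth_gb_s(bus_bits: int, effective_mhz: float) -> float:
    # Bytes per transfer (bus_bits / 8) x million transfers/sec, in GB/s (10^9 bytes)
    return bus_bits / 8 * effective_mhz / 1000.0


def rail_watts(amps: float, volts: float = 12.0) -> float:
    # Power available on a PSU rail: current x voltage
    return amps * volts


for name, (bits, mhz) in CARDS.items():
    print(f"{name}: {bandwidth_gb_s(bits, mhz):.1f} GB/s")

print(f"GTX 280 recommended 12V capacity: {rail_watts(40):.0f} W")
print(f"GTX 260 recommended 12V capacity: {rail_watts(36):.0f} W")
```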

PICTURES & IMPRESSIONS

Earlier we mentioned that the EVGA GTX-280 had arrived at Bjorn3D and that it arrived big, so we thought we’d show you just how big. That’s three boxes of total graphics ecstasy right there. When they arrived, opening the boxes involved miles of smiles.

We know that you want to see a couple of these beasts on the X58 test rig in all their glory, so we thought we’d get that out of the way. We know that some people would prefer in-chassis testing, but with nine GPUs going on and off the platform at random intervals and in different combinations, that’s just not feasible, so please keep the “in chassis” carping to a minimum. Those beasts look pretty sweet linked up. Please note, though, that this is an early picture, taken before we received our flexible SLI bridge and moved the cards to the two full PCI-e X16 slots. All the testing was done with the GPUs in the full PCI-e X16 slots, but this picture gives you a better look at them than it would with the cards running closer together.

Now back to the package itself. The cards come individually packaged in an attractive black box with enough information and specifications to help inform the consumer about the purchase of this beast. Rest assured that they are well packed inside the box and we’d rather spend our time on the product itself than the box it comes in.

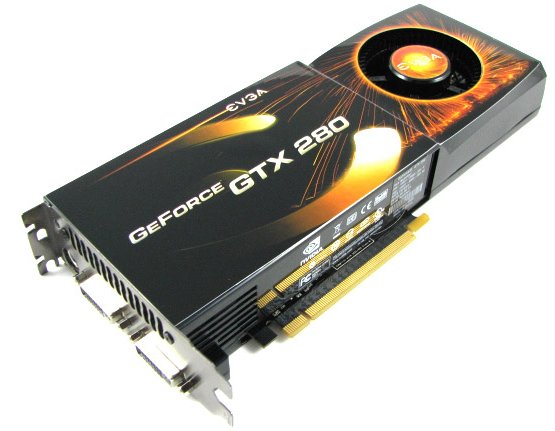

Then we have the card itself. Even before you get this monster into the machine, it exudes power. We’re pretty sure we heard the test rig moaning as we were snapping pics of it.

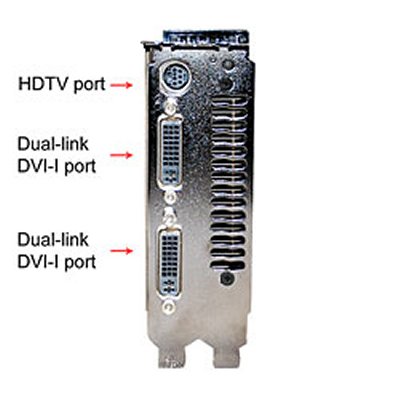

Looking at the back of the GPU, we see the same familiar dual-slot cooler that we’ve gotten used to since the G80-based 8800GTX came out.

The back of the GPU is covered but well ventilated. We suspect that this came about after a few people shorted something out on the back of the card doing something they shouldn’t have been doing (cough, Pencil Mod, cough).

With more powerful GPUs you need a more powerful fan. Enter the familiar squirrel-cage fan, which keeps the GPU cool by drawing in cool air and circulating it across the GPU and PCB.

For a little additional cooling, the end of the GPU is open so air can be drawn in by convection and cool the rear of the GPU.

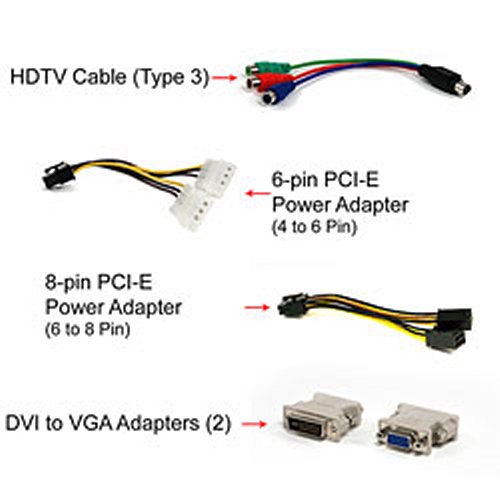

The EVGA GTX-280 requires an 8-pin and a 6-pin PCI-E power connector. Even though it comes with Molex to PCI-E adapters of each type, if we catch you powering one of these beauties without a proper PSU, we’ll have to take your GTX-280 and leave it in the hands of someone more deserving. In other words, it’s not a good idea to pair this beast with an older PSU that lacks PCI-E connectors. If the older PSU tanks and takes the GPU with it, then you’ve lost a $450-$500 GPU because you used some stinky old PSU to save a few bucks.
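Why an 8-pin connector rather than two 6-pins? The Python sketch below adds up the commonly cited PCI Express power limits (75 W from the x16 slot, 75 W per 6-pin, 150 W per 8-pin) and compares the totals with the maximum board power figures from the specification table. The per-connector limits are our addition, not figures from this review.

```python
SLOT_W = 75        # commonly cited PCI Express x16 slot limit
SIX_PIN_W = 75     # commonly cited 6-pin PCI-E connector limit
EIGHT_PIN_W = 150  # commonly cited 8-pin PCI-E connector limit


def board_power_budget(six_pin: int, eight_pin: int) -> int:
    """Maximum power deliverable by the slot plus the auxiliary connectors."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


gtx280 = board_power_budget(six_pin=1, eight_pin=1)
two_six_pin = board_power_budget(six_pin=2, eight_pin=0)

print(f"GTX 280 (8-pin + 6-pin): {gtx280} W budget vs 236 W max board power")
print(f"Two 6-pins would allow only {two_six_pin} W, short of 236 W")
```

The same arithmetic shows why the GTX 260 (182 W) and 9800 GTX (156 W) get by with two 6-pin connectors.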

The EVGA GTX-280 is, of course, Dual and Triple SLI ready. The SLI connectors are located at the top of the card, protected by a rubber cover.

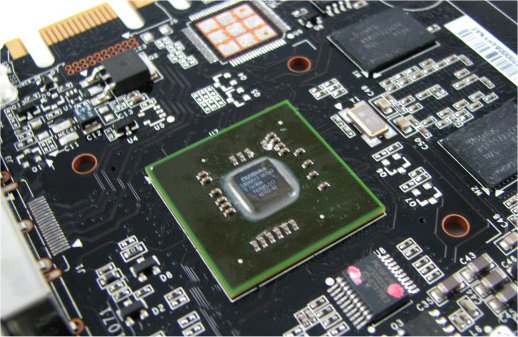

We thought we’d get one of these beauties naked so you can see one without having to rip the cover off yours, potentially damaging it, and to see if there was anything interesting under there.

Of course, there’s always something of interest under the hood, like the return of the NVIO (Nvidia Input Output) chip under the SLI connectors. The NVIO chip originated with the 8800 GTX and services the dual DVI ports you saw earlier. We suspect this has something to do with dual monitor support being enabled in SLI under the new Nvidia 180.xx drivers.

BUNDLE

Inside the box with the EVGA GTX-280 you’ll find a couple of Molex to PCI-E adapters in case you’re stuck with an older PSU, a couple of DVI to VGA adapters, and a type 3 HDTV adapter.

You’ll also find a Quick start guide, a user’s manual, and a driver disk in a nice sleeve. What’s noticeably absent is a recent game title which would have made the bundle a little sweeter.

TESTING & METHODOLOGY

We did a fresh load of Vista 64 on our test rig to make sure that no pesky leftover drivers interfered with testing, and all applicable patches and driver updates were applied prior to testing. We ran each test at least three times and report the average here. All GPUs were kept at stock clock speeds during testing, the test system was kept at 3.74GHz, and the DDR3 triple-channel RAM was left running at 1600MHz for each GPU. The hardware and clocking were kept the same for each GPU, unneeded background tasks were disabled, and a fresh load of Vista (from an Acronis clone of the hard drive) was done each time a GPU was switched for testing. The Acronis clone has no GPU drivers installed, leaving us as clean an install of the needed drivers as possible. With the move to Vista 64, games are tested in DX10 mode unless they don’t support DX10, in which case they are tested in DX9.
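The “at least three runs, report the average” rule is simple to automate. This Python sketch is a hypothetical helper, not the tooling used for this review; the `average_fps` name and the sample FPS values are illustrative only.

```python
from statistics import mean
from typing import Sequence


def average_fps(runs: Sequence[float], min_runs: int = 3) -> float:
    """Average FPS across repeated runs; refuses to report fewer than min_runs."""
    if len(runs) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(runs)}")
    return round(mean(runs), 1)


# Hypothetical FPS results from three runs of the same benchmark
print(average_fps([61.2, 60.8, 61.5]))
```

Refusing to report fewer than three runs keeps a single outlier run from ending up on a chart.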

The Test Rig

| Test Rig “Quadzilla” | |
|---|---|
| Case Type | Top Deck Testing Station |
| CPU | Intel Core i7 965 Extreme (3.74 GHz, 1.2975 Vcore) |
| Motherboard | Asus P6T Deluxe (SLI and CrossFire on Demand) |
| RAM | G.Skill DDR3 1600 (9-9-9-24, 1.5v) 6GB Kit |
| CPU Cooler | Thermalright Ultra 120 RT (Dual 120mm Fans) |
| Hard Drives | Intel 80 GB SSD |
| Optical | Sony DVD R/W |
| GPU | EVGA GTX-280 (2); XFX 9800 GTX+ Black Edition; BFG GTX-260 MaxCore; Leadtek GTX-260; Palit HD Radeon 4870X2; Sapphire HD Radeon 4870; Sapphire HD Radeon 4850 Toxic |
| Drivers | Nvidia GPUs: 180.43; ATI GPUs: 8.11 |
| Case Fans | 120mm fan cooling the MOSFET/CPU area |
| Docking Stations | None |
| Testing PSU | Thermaltake Toughpower 1K |
| Legacy | None |
| Mouse | Razer Lachesis |
| Keyboard | Razer Lycosa |
| Gaming Ear Buds | Razer Moray |
| Speakers | None |
| Any Attempt to Copy This System Configuration May Lead to Bankruptcy | |

Synthetic Benchmarks & Games

| Benchmark |
|---|
| 3DMark06 v. 1.10 |
| 3DMark Vantage |
| Company of Heroes v. 1.71 |
| Crysis v. 1.2 |
| World in Conflict Demo |
| F.E.A.R. v. 1.08 |
| Far Cry 2 |

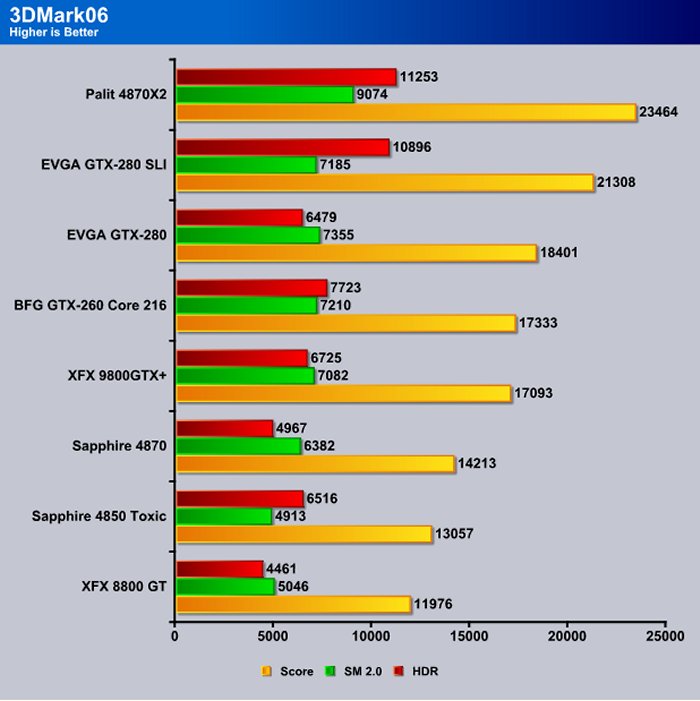

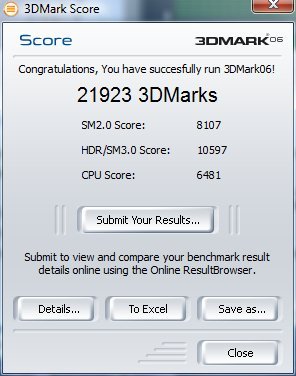

3DMARK06 V. 1.1.0

3DMark06, developed by Futuremark, is a synthetic benchmark used for universal testing of all graphics solutions. 3DMark06 features HDR rendering, complex HDR post processing, dynamic soft shadows for all objects, water shader with HDR refraction, HDR reflection, depth fog and Gerstner wave functions, realistic sky model with cloud blending, and approximately 5.4 million triangles and 8.8 million vertices, to name just a few. The measurement unit “3DMark” is intended to give a normalized means for comparing different GPUs/VPUs. It has been accepted as both a standard and a mandatory benchmark throughout the gaming world for measuring performance.

In 3DMark06, the EVGA GTX-280 is the fastest single-GPU card. It gets beaten by the Palit 4870X2, which is a dual-GPU card and isn’t in quite the same category. The 4870X2 also beats the GTX-280 SLI setup in this test, which is known to scale poorly with SLI and Crossfire. We don’t think those results will hold up for long with the more modern 3DMark Vantage.

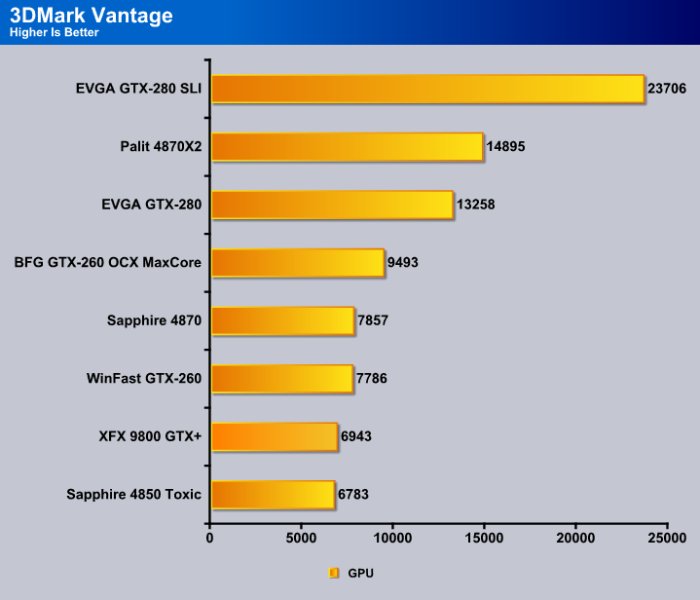

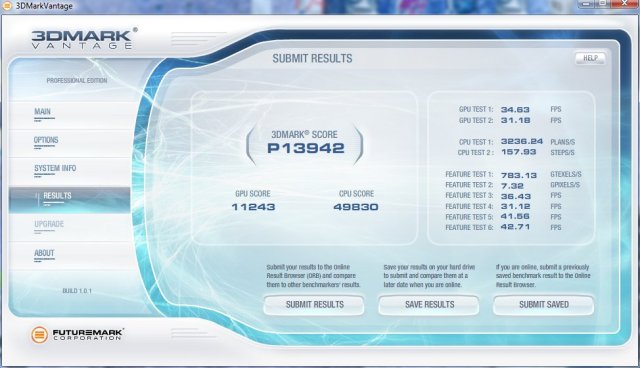

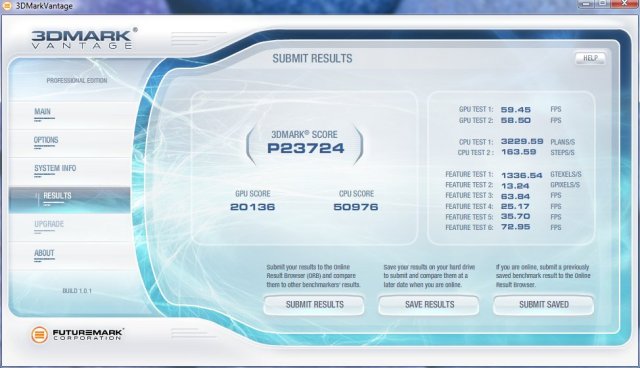

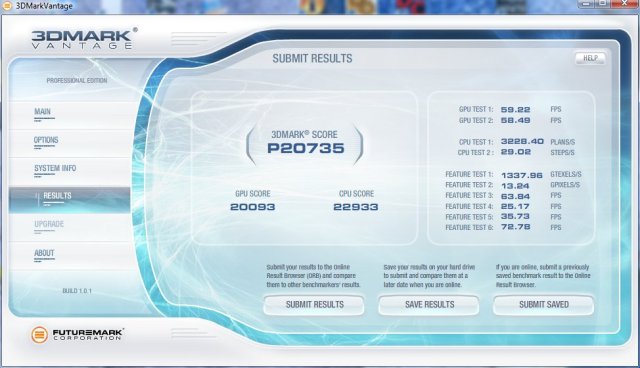

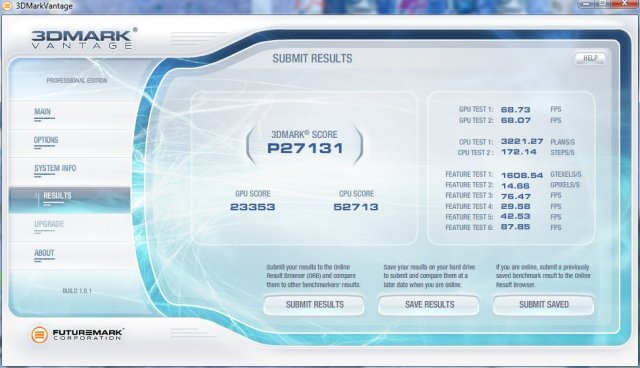

3DMark Vantage

For complete information on 3DMark Vantage, please follow this link: www.futuremark.com/benchmarks/3dmarkvantage/features/

3DMark Vantage is the newest video benchmark from the gang at Futuremark. It is still a synthetic benchmark, but one that more closely reflects real-world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently, there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, which puts the ATI brand at a disadvantage. With the PhysX driver installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. The Driver Approval Policy for 3DMark Vantage states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations, or is it perfectly within its rights, since it owns Ageia and all of Ageia’s IP? I think this point will quickly become moot once Futuremark releases an update to the test.

Moving to 3DMark Vantage, which is a better measure of modern GPUs, you can see that the Palit 4870X2 scores higher than the single GTX-280 but gets slaughtered by the EVGA GTX-280 SLI setup. Notice the improved SLI scaling with the new Nvidia 180.xx drivers: what you’re seeing there is almost 79% scaling. In other words, the second GTX-280 adds 79% of a single card’s performance. Counting the first card as 100%, the total GPU processing power utilized in this test is the equivalent of 179% of a single GTX-280. Scaling with the new 180.xx drivers in properly optimized benchmarks and games has gotten a lot better. Performance, as you’ll see later, is tied to the level of optimization in the game.
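The scaling percentage described above is just the SLI score divided by the single-card score, minus one. A minimal Python sketch; the scores below are hypothetical round numbers chosen to illustrate 79% scaling, not the review’s measured results.

```python
def sli_scaling_pct(single_score: float, sli_score: float) -> float:
    """Extra performance from the second card, as a percent of one card."""
    return (sli_score / single_score - 1.0) * 100.0


# Hypothetical scores for illustration only
single, dual = 10_000, 17_900
pct = sli_scaling_pct(single, dual)
print(f"{pct:.0f}% scaling, i.e. {100 + pct:.0f}% of one card's performance")
```

Perfect two-way scaling would read 100%; anything near zero means the second card is mostly idle, as in some of the older titles below.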

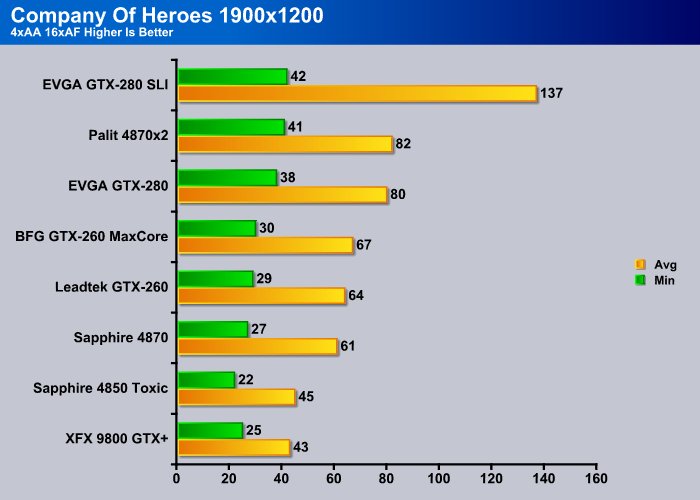

Company of Heroes v. 1.71

Company of Heroes (COH) is a Real Time Strategy (RTS) game for the PC, announced in April 2005. It is developed by the Canadian-based company Relic Entertainment and published by THQ. COH is an excellent game that is incredibly demanding on system resources, making it an excellent benchmark. Like F.E.A.R., the game contains an integrated performance test that can be run to determine your system’s performance based on the graphical options you have chosen. It uses the same multi-staged performance ratings as the F.E.A.R. test. Letting the game’s benchmark handle the chore takes the human factor out of the equation and ensures that each run of the test is exactly the same, producing more reliable results.

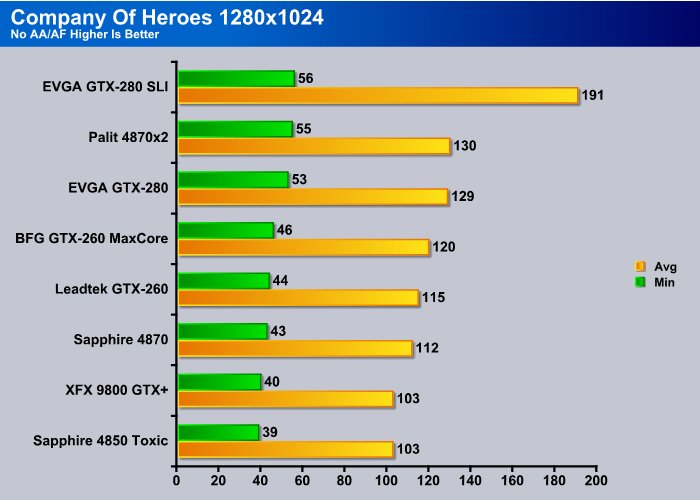

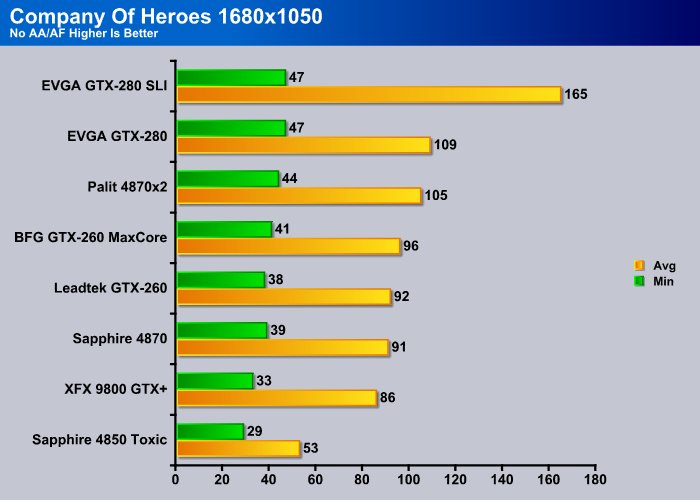

Getting out of the realm of synthetic benchmarks and into the real-life arena of gaming, we see the EVGA GTX-280 SLI setup ahead of the Palit 4870X2 by one frame per second in minimum FPS and by 61 FPS in average FPS. The single EVGA GTX-280 did almost as well as the Palit 4870X2. Company of Heroes is getting a little long in the tooth and doesn’t scale very well in SLI, but it scales well enough for the EVGA GTX-280 SLI setup to put a whipping on the 4870X2.

When we kicked it up a notch to 1680×1050 with no AA/AF, the EVGA GTX-280 SLI setup kept the lead, and the single GTX-280 beat the 4870X2 at this higher resolution. The minimum frame rates show how poorly Company of Heroes scales with SLI. The good news is that more modern games scale better, which you’ll see in the Triple SLI review we’ll be posting as soon as we wade through a humongous stack of GPUs to finish testing.

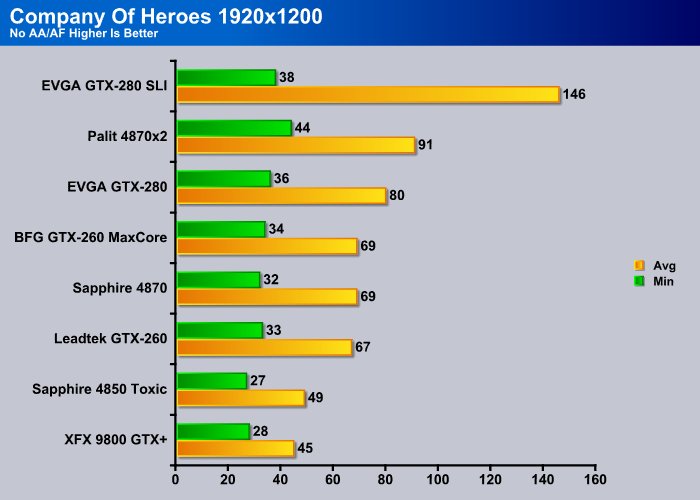

At the highest resolution tested, 1920×1200, the single GTX-280 came out on top of the single-GPU cards and was only beaten by the dual-GPU Palit 4870×2. In minimum frames, the 4870×2 was ahead of the EVGA GTX-280 SLI setup by six frames, but in average frame rates it was crushed by the GTX-280 SLI setup. We tend to place more emphasis on average frame rates because minimum frame rates are often just a momentary drop in FPS that isn’t even noticeable to the human eye. When FPS drops below 30 and the drops are detectable by the human eye, then the GPU has problems. We don’t like GPUs stuttering during game play, and we’ll likely fill an offender’s fan with peanut butter and watch the core roast (yeah, right).
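The 30 FPS cutoff used throughout this review corresponds to a frame time of about 33.3 ms. The Python sketch below converts FPS to frame time and applies a hypothetical three-way verdict that mirrors the reasoning above (average FPS matters most, minimum dips are a warning sign); the `verdict` function and its labels are our illustration, not a formal scoring method from this review.

```python
PLAYABLE_FPS = 30  # the cutoff used throughout this review


def frame_time_ms(fps: float) -> float:
    # Time each frame stays on screen at a steady frame rate
    return 1000.0 / fps


def verdict(min_fps: float, avg_fps: float) -> str:
    """Hypothetical classification based on the 30 FPS cutoff."""
    if avg_fps < PLAYABLE_FPS:
        return "unplayable"
    if min_fps < PLAYABLE_FPS:
        return "playable, with possible dips"
    return "rock solid"


print(f"30 FPS = {frame_time_ms(30):.1f} ms per frame")
print(verdict(min_fps=25, avg_fps=48))
```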

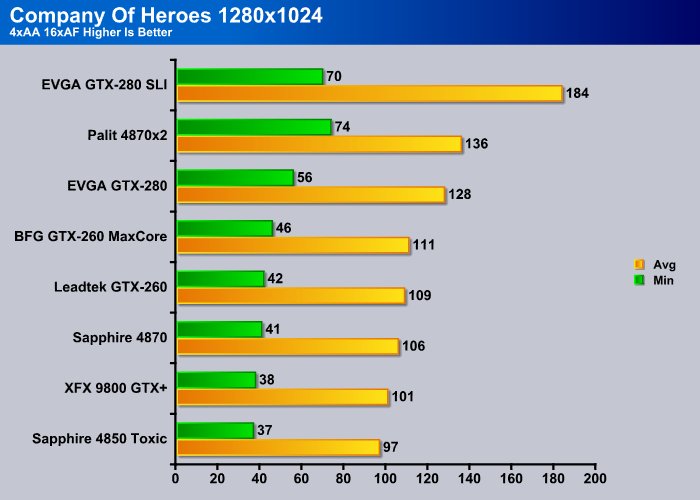

Backing down to 1280×1024 but cranking 4xAA/16xAF, the EVGA GTX-280 SLI setup again falls a few frames below the 4870×2 in minimum frames and smashes the 4870×2 in average frames. The single GTX-280 once again keeps its spot as the fastest single-GPU card in this test.

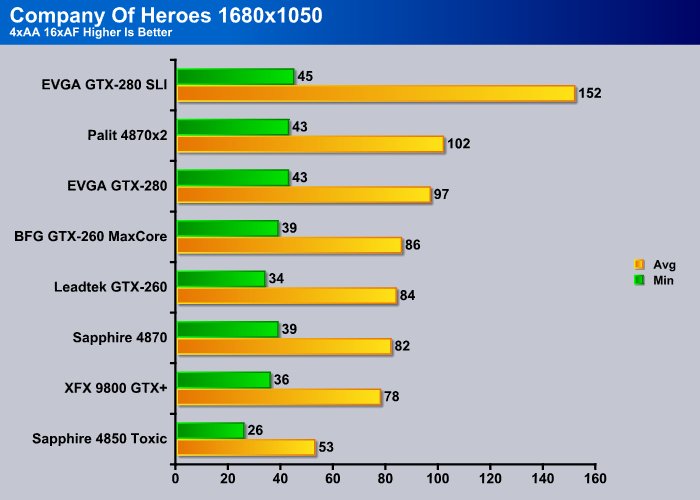

Increasing the resolution to 1680×1050 with AA/AF cranked up, the GTX-280 SLI setup pulled out (slightly) ahead in minimum FPS and again crushed the 4870×2 in average FPS. The single EVGA GTX-280, at stock speed we might add, again reigns supreme among single-GPU cards.

At 1920×1200 with AA/AF, the highest resolution we tested, we turned all settings to max. The EVGA GTX-280 SLI setup kept its lead over the 4870×2, and the single EVGA GTX-280 performed almost as well as the 4870×2.

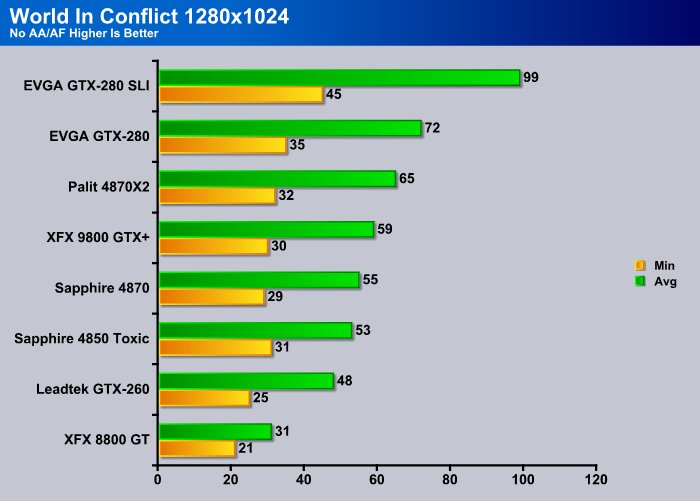

WORLD IN CONFLICT DEMO

World in Conflict is a real-time tactical video game developed by the Swedish video game company Massive Entertainment, and published by Sierra Entertainment for Windows PC. The game was released in September of 2007. The game is set in 1989 during the social, political, and economic collapse of the Soviet Union. However, the title postulates an alternate history scenario where the Soviet Union pursued a course of war to remain in power. World in Conflict has superb graphics, is extremely GPU intensive, and has built-in benchmarks. Sounds like benchmark material to us!

World in Conflict, running DX10 with the eye candy maxed, can be brutal on GPUs. For this test we threw our old faithful XFX 8800 GT into the mix to show the performance increase from the 8800 GT to current GPUs. In just a short period of time, GPU processing power has increased tremendously. In World in Conflict, the EVGA GTX-280 SLI setup dominated the field, and the single GTX-280, being a little jealous, decided to outperform the 4870×2 as well.

We could be seeing a pattern here with the EVGA GTX-280 SLI setup again in the lead. The 4870×2 lost out (by one frame) to the single GTX-280 in the minimum frames test but the 4870×2 came out five frames ahead of the GTX-280 in average frames.

At 1920×1200, the EVGA GTX-280 SLI setup stays in the lead by a respectable amount. The single GTX-280 lost to the 4870×2 in minimum FPS again, but came out ahead in average FPS.

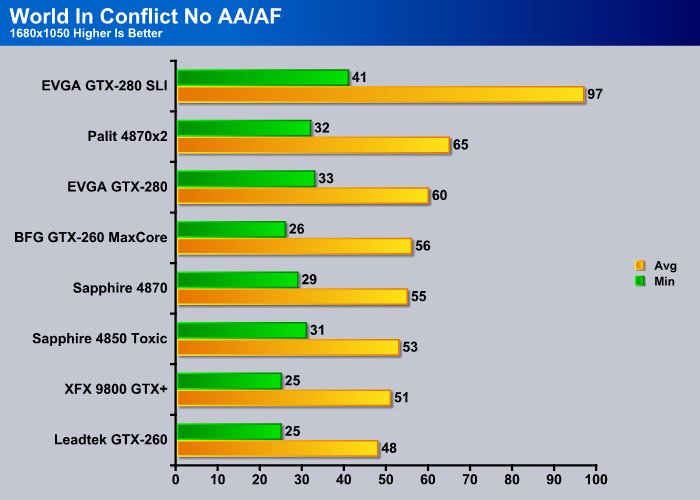

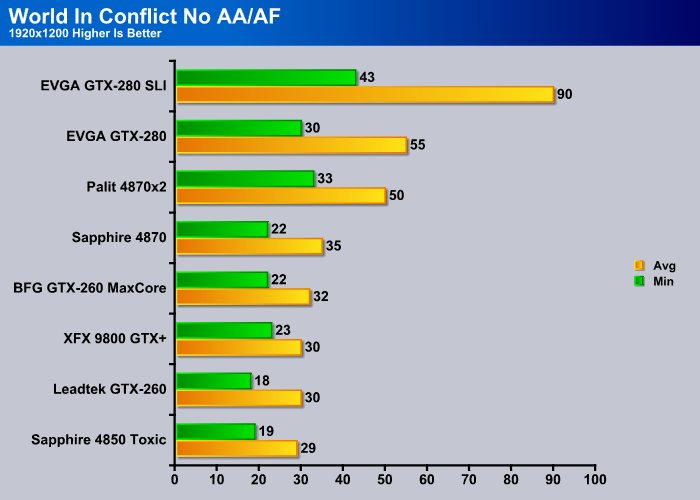

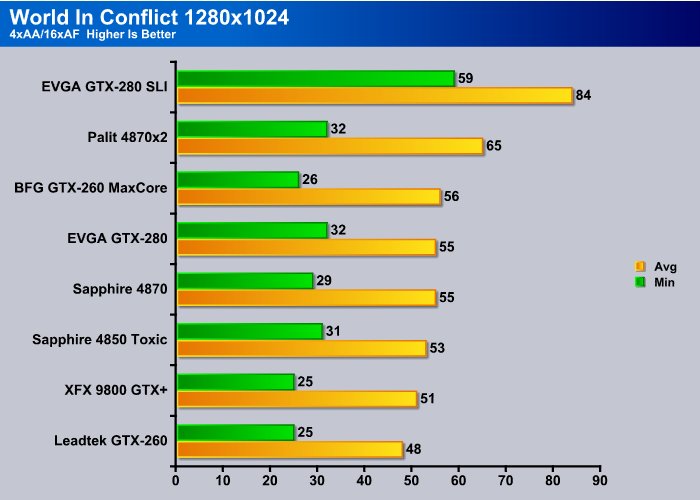

When the rubber meets the road with GPUs, any degradation of performance usually shows up when AA/AF is turned on. Adding the last vestige of eye candy to the mix usually tips the performance scale. Lately, though, we’ve seen a lot of GPUs rebelling against the norm and picking up performance in some areas when AA/AF is cranked. Whether driver improvements or GPU core design are responsible for the change, we can’t say. We prefer to think they just want to make our lives harder.

At this resolution we see several of the GPUs dipping under 30 FPS, which is the cutoff for game play that appears rock solid to the human eye. The GTX-280 SLI setup comes out in the lead again by a decent amount, followed by the 4870×2. The factory overclocked BFG GTX-260 MaxCore decided to get into the action and came out one FPS ahead of the standalone GTX-280 in average FPS, but ran behind the GTX-280 in minimum FPS. That just goes to show you need to watch out for the underdog, as sometimes it can jump up and bite you.

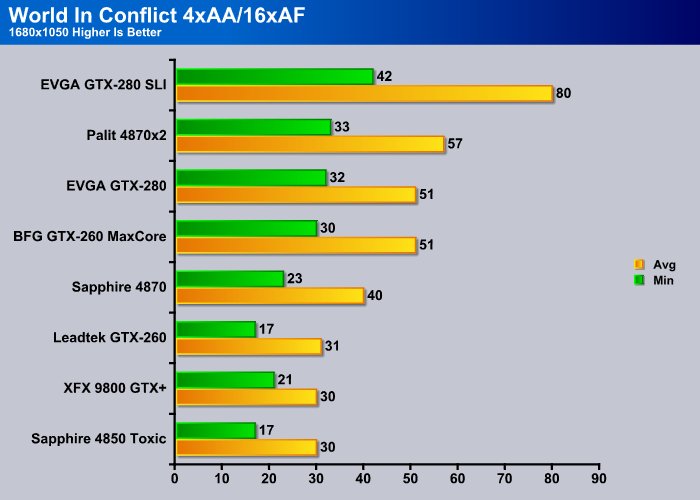

Moving to 1680×1050, the GPUs were starting to groan a little, and their fans were spinning up to keep them cool. That’s a good indication that they were being properly stressed. By this point, we already knew where the EVGA GTX-280 SLI setup was going to be on the chart, which makes charting easier. Once again, it’s in the lead by a decent amount, followed by the 4870×2, and the single GTX-280 performs almost as well as the 4870×2. World in Conflict must like the factory overclocked BFG GPU, because it came close to the GTX-280. Only the top four GPU setups tested stayed at or above 30 FPS, that magical cutoff for rock-solid game play.

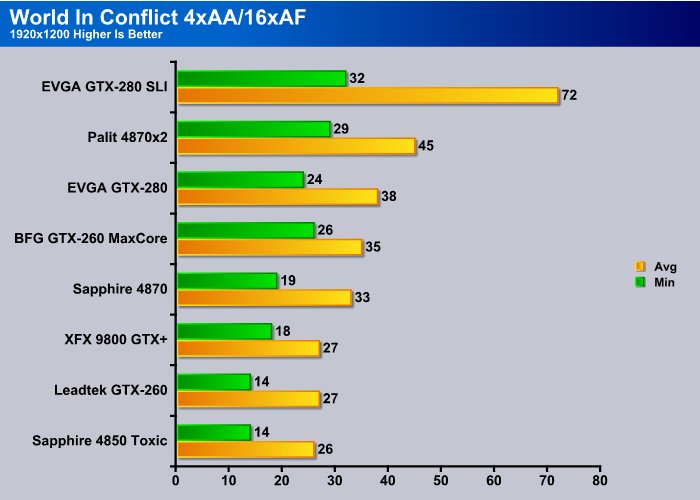

At 1920×1200 you probably need to back off the eye candy a little with most of the GPUs for World in Conflict running in DX10. Even the mighty 4870×2 showed a stutter here and there. The EVGA GTX-280 SLI setup is the only one that stayed above 30 FPS in this test; the others dipped below 30. We watched the test the entire time, and with all the GPUs down to the BFG card, we didn’t notice any stuttering that would hamper game play. With the Sapphire 4870 we did see signs of micro-stutter, and as for the bottom three, well, we have our jar of peanut butter ready to smear on their fans.

Crysis v. 1.2

Crysis is the most highly anticipated game to hit the market in the last several years. Crysis is based on the CryENGINE™ 2 developed by Crytek. The CryENGINE™ 2 offers real time editing, bump mapping, dynamic lights, network system, integrated physics system, shaders, shadows, and a dynamic music system, just to name a few of the state-of-the-art features that are incorporated into Crysis. As one might expect with this number of features, the game is extremely demanding of system resources, especially the GPU. We expect Crysis to be a primary gaming benchmark for many years to come.

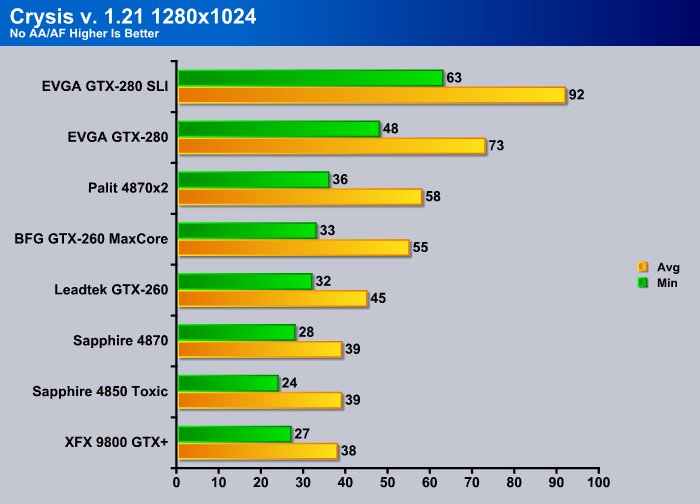

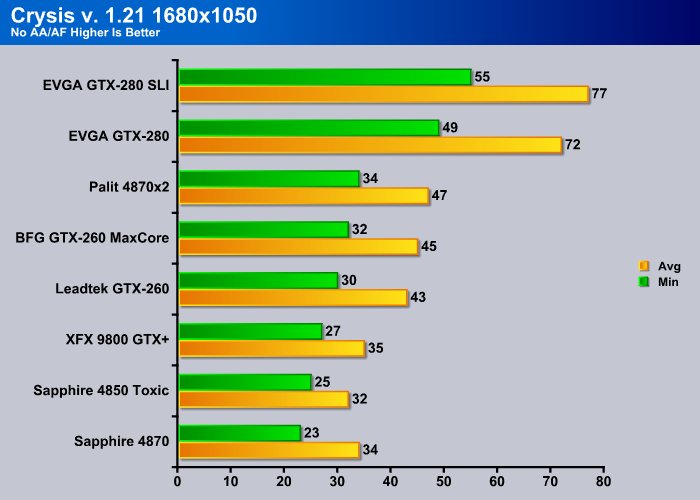

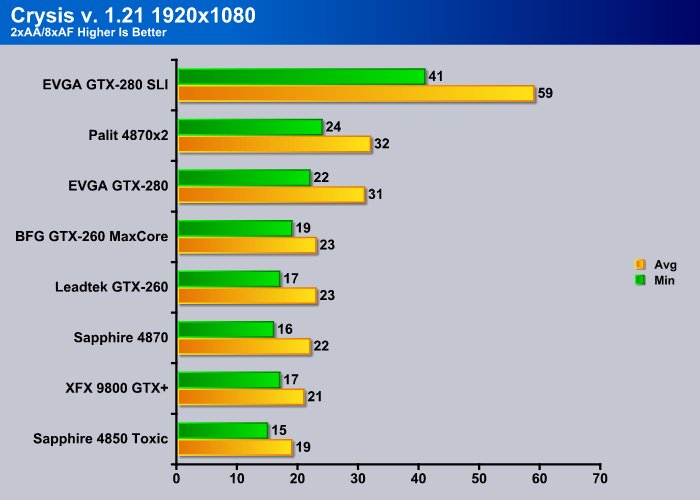

We’re thinking it’s time for things to get ugly when Crysis comes calling. GPUs have been known to scurry away in fear of this GPU-crushing game. Starting at the lowest resolution we tested, we’re already seeing GPUs below the magical 30 FPS, where graphics look rock steady to the eye. At this resolution, Crysis liked the EVGA GTX-280 SLI setup, and it preferred the single GTX-280 over the 4870×2.

Kicking things up to 1680×1050 in this GPU-crushing titan, we see the scaling holding true, with the EVGA GTX-280 SLI setup in the lead and the single GTX-280 right behind it. The programmers of Crysis deserve a sound spanking from the SLI optimization team at Nvidia. They obviously fell asleep in the “make your game run right with SLI” class.

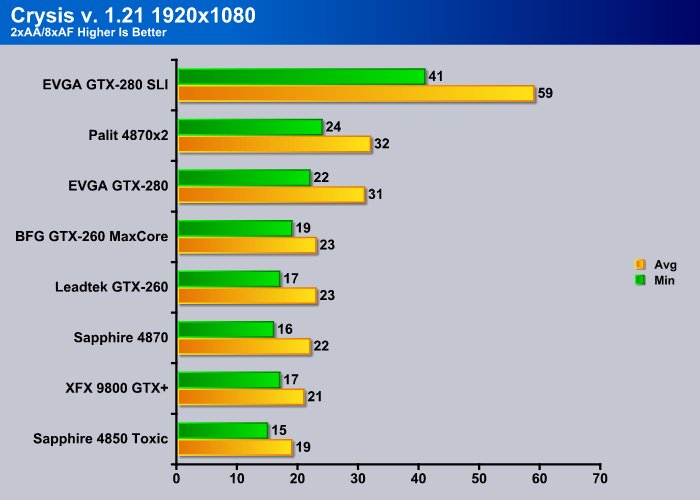

By the time we hit 1920×1200 it was getting pretty ugly in the Crysis testing. The EVGA GTX-280 SLI setup was performing like (excuse the expression) greased snot on glass. This is the first time we’ve seen Crysis looking good at this resolution with all the eye candy cranked up. Good GTX-280, bad Crysis programmers.

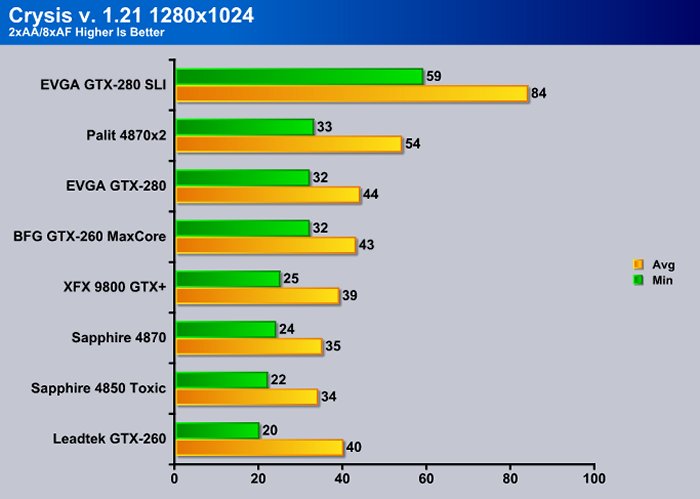

Moving to 1280×1024 with AA/AF turned on, the EVGA GTX-280 SLI setup is still in the lead. The single GTX-280 and the 4870×2 are neck and neck in the minimum FPS, but once again, in average FPS, the 4870×2 had an advantage.

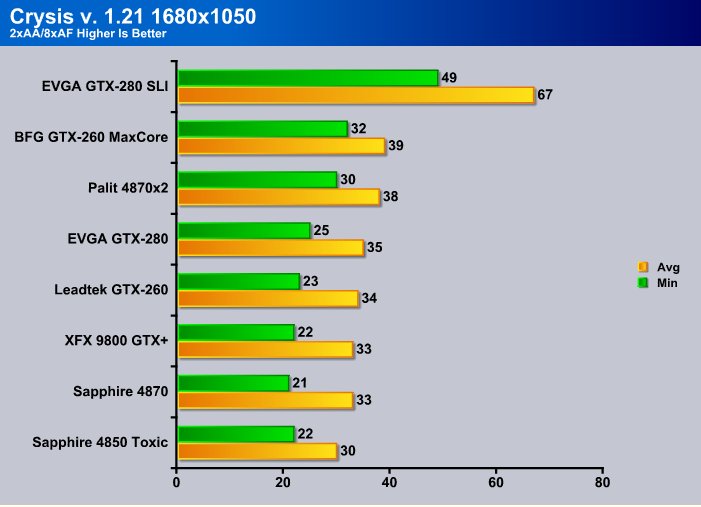

Hitting 1680×1050 with 2xAA/8xAF things got a little weird. The EVGA GTX-280 SLI setup came out on top, but the factory overclocked BFG GTX-260 MaxCore jumped into second place. The Palit 4870×2 came in third and the single GTX-280 came in fourth. It was probably just catching its breath.

By the time you get to 1920×1200 in Crysis, things are getting pretty ugly. Of all the tested setups, the EVGA GTX-280 is the only one that stayed at entirely playable levels, which shocked us. Usually, by this point, most GPUs just buckle and you have to give up some eye candy for playability. One nerd around the lab was actually bowing to the test rig. We gave him some root beer and a candy bar and he’s much better now.

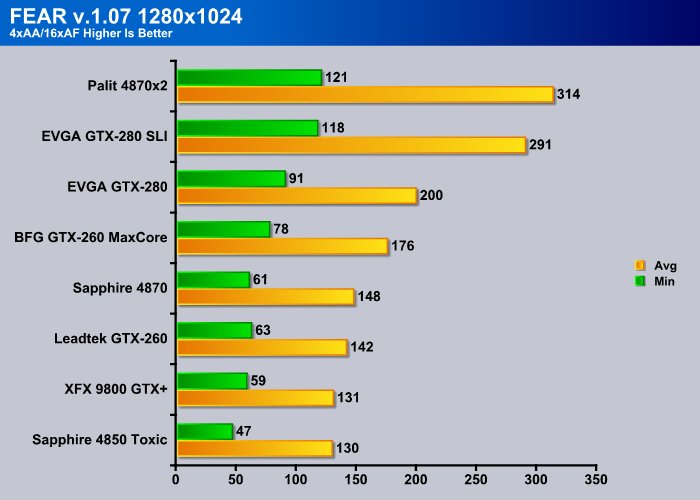

FEAR V. 1.08

F.E.A.R. (First Encounter Assault Recon) is a first-person shooter developed by Monolith Productions and released in October 2005 for Windows. F.E.A.R. is one of the most resource-intensive first-person shooters ever released. The game contains an integrated performance test that can be run to determine your system’s performance based on the graphical options you have chosen. The beauty of the performance test is that it gives maximum, average, and minimum frames per second. F.E.A.R. is a great benchmark, and one heck of an FPS.

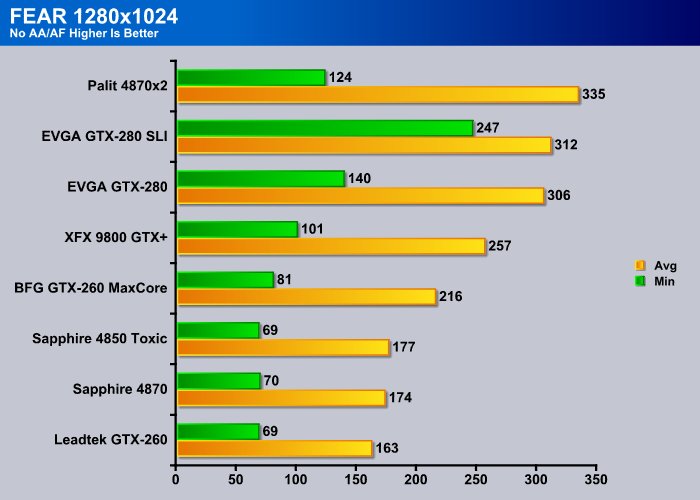

The Palit 4870×2 pulls out a few frames ahead in average FPS in this aging test. If you take into consideration that the GTX-280 SLI setup churned out almost double the minimum FPS, we’d still have to consider it the winner in the test. The EVGA GTX-280 single GPU configuration is still in the lead for single GPU core cards.

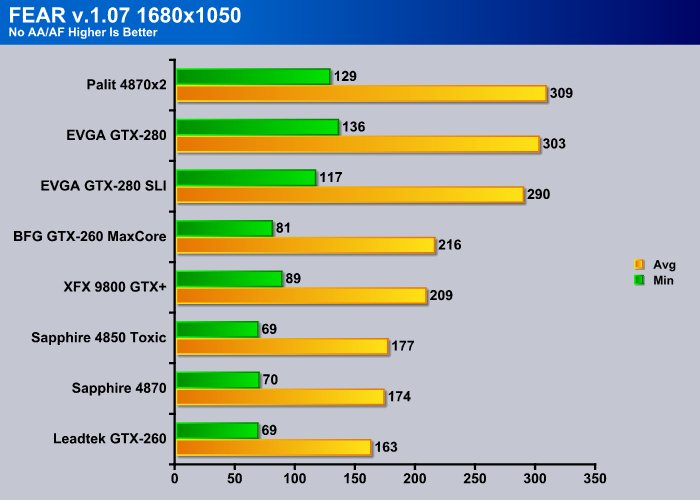

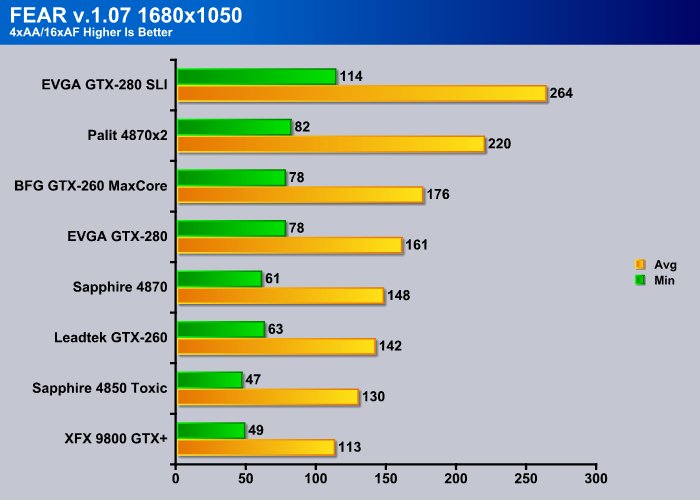

Moving to 1680×1050, we see that F.E.A.R. must like the Palit 4870×2. It comes out in the lead in average FPS again, but the single GTX-280 took second, which indicates to us that this game is old enough that SLI isn’t supported well.

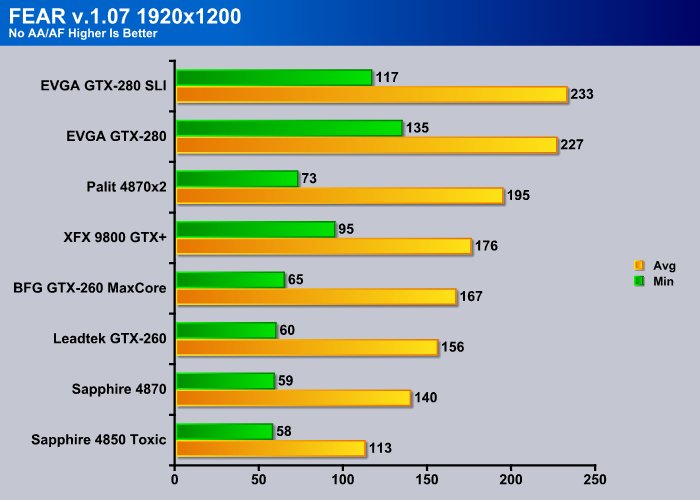

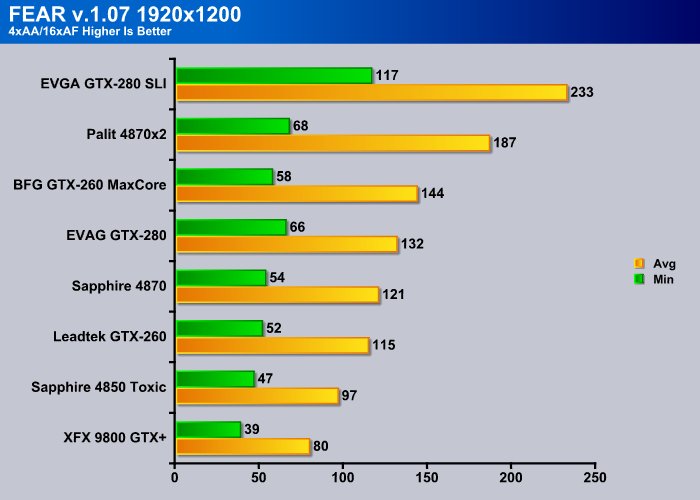

At the maximum resolution we tested, the GTX-280 SLI setup came out ahead in average FPS, but the single GTX-280 beat it in minimum FPS, again indicating that the game is getting ready for retirement from our benchmarking regimen. The Palit 4870×2 wasn’t treated any better and came in a distant third.

Dropping back to 1280×1024 with AA/AF turned on, we see the 4870×2 take the lead again, followed by the EVGA GTX-280 SLI setup and then the single EVGA GTX-280.

Stepping up to 1680×1050 with AA/AF cranked up, the EVGA GTX-280 SLI setup takes the lead again. The Palit 4870×2 comes in second and the BFG GTX-260 MaxCore third. The single GTX-280 comes in fourth, which surprised us. Perhaps F.E.A.R. favors the higher clock speeds of the factory-overclocked BFG card.

Even at 1920×1200, the highest resolution we tested, every GPU ran exceptionally well, which tells us we should probably look for a replacement for F.E.A.R. in our testing regimen. The GTX-280 SLI setup did best, followed by the 4870×2, with the BFG card third again and the single GTX-280 fourth. That brings us to the replacement for F.E.A.R. we’re currently evaluating: Far Cry 2.

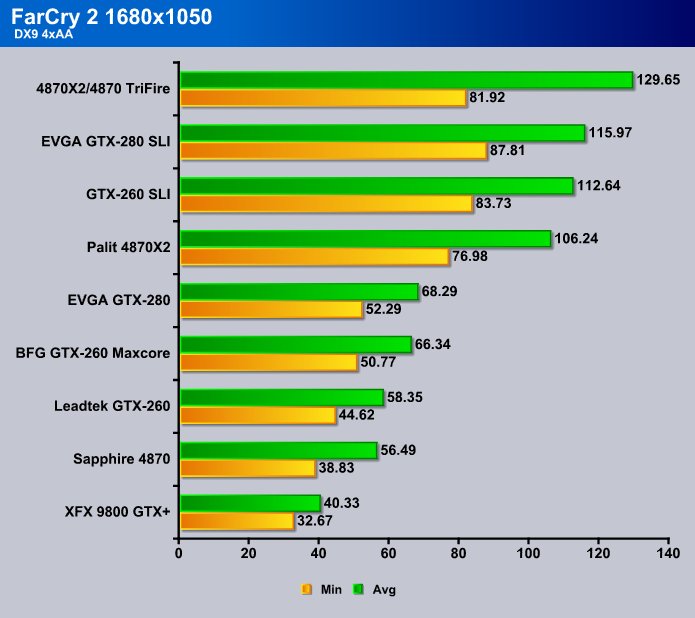

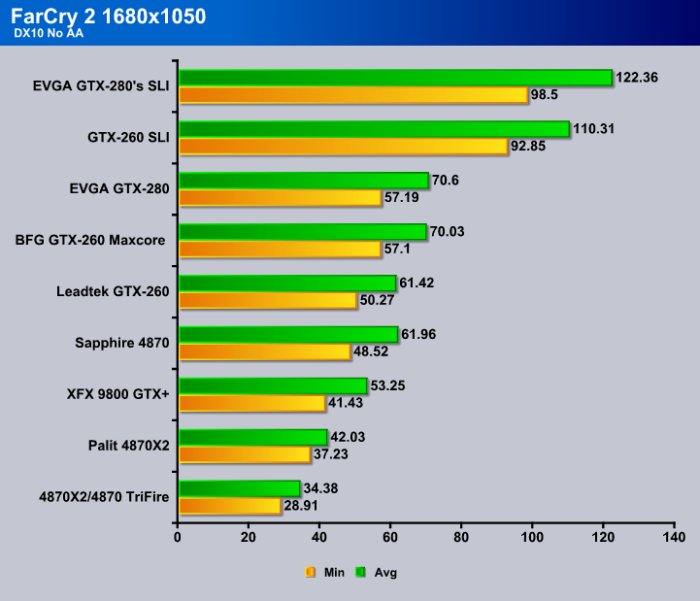

Far Cry 2

Far Cry 2, released by Ubisoft in October 2008, was one of the most anticipated titles of the year. It’s an engaging, state-of-the-art first-person shooter set in an unnamed African country. Caught between two rival factions, you’re sent to take out “The Jackal”. Far Cry 2 ships with a full-featured benchmark utility that is one of the best designed, most thoroughly thought out game benchmarks we’ve ever seen. One big difference between this benchmark and others is that it leaves the game’s AI (artificial intelligence) running while the benchmark is performed.

We had limited time for Far Cry 2, and while we would have liked to test more extensively, we just didn’t have time to wade through a stack of 9 GPUs in 10 different configurations to give complete results. We opted to test at 1680×1050 because it’s the middle resolution we’ve been testing at. We understand that some readers want results at 1920×1200, but we’re including this early result as an extra to our normal testing regimen, so let’s keep the resolution carping to a minimum. We did go one step further and test in both DX9 and DX10, so Vista and XP users get an idea of performance. Enjoy the preview of things to come.

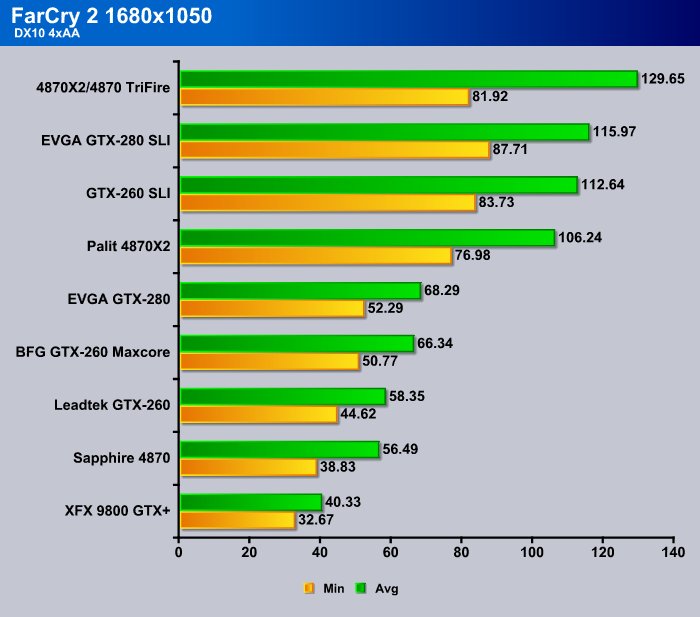

Far Cry 2 is a little different from most games as a benchmark. Its benchmarking tool leaves the AI running while the bench is in progress, and the game is heavily optimized for newer GPUs and for SLI. This game is properly optimized for SLI, and our extended GPU results prove it.

Our extended Far Cry 2 testing in DX9 with no AA/AF shows the two SLI setups coming in ahead of even TriFire. TriFire and the 4870×2 both finish ahead of the single GTX-280.

Leaving things in DX9 but cranking up AA/AF, the Palit 4870 TriFire setup comes out on top in average FPS but loses in minimum FPS. We threw in some TriFire so the guys in the forum would have something to argue about. Setting the three-GPU TriFire setup aside, the GTX-280 SLI setup came out on top, followed by the GTX-260 SLI setup and then the 4870×2. We included TriFire not only to throw the ATI fans a bone, but also to demonstrate that it takes three of the most modern ATI cores to beat two of the current Nvidia cores.

Here’s where an ATI driver anomaly we discovered rears its ugly head. In DX10 with no AA/AF, TriFire falls to dead last, with the 4870×2 next to last. We ran this test so many times it isn’t even funny, and a few other sites we checked have seen the same thing. It’s a driver issue that ATI will hopefully address in the near future. The two SLI setups came in first and second, followed by the single GTX-280, in a test that ATI thoughtfully skewed with its prestigious driver programming abilities.

Then, in DX10 with AA/AF turned on, TriFire rises from the ashes and takes the lead. The two SLI setups still top the dual-GPU class, followed by the 4870×2, and the GTX-280 tops the single-GPU class again. We can already tell that things are going to get interesting with the X58 platform, which supports both SLI and CrossFire.

TEMPERATURES

We measured EVGA GTX-280 idle temperatures at the desktop with no applications running that would use the GPU beyond the normal programs that load at boot. For load temperatures, we looped 3DMark Vantage for 30 minutes, then recorded the readings. Keep in mind that this is an open test bench with no chassis, so if your case has poor airflow, your temperatures will differ. We prefer to test in a chassis, but with 9 GPUs going in and out, plus SLI/CrossFire combinations, it just isn’t feasible.

EVGA GTX-280 Temperatures

| Card | Idle | Load | Idle (Fan 60%) | Load (Fan 60%) |
|---|---|---|---|---|
| GPU 1 | 45°C | 78°C | 43°C | 70°C |
| GPU 2 | 44°C | 79°C | 45°C | 70°C |

The EVGA GTX-280 runs reasonably cool under load. Be aware that in a tower chassis, heat from the second card can rise and affect the temperature of the top GPU, so you need good airflow to run two of these beasts. Run three of them and you’ll need great airflow, plus reasonable ambient temperatures in the room. With the fans kicked up to 60%, which was still pretty quiet even on an open chassis (Top Deck Testing Station), temperatures were even more reasonable. During a few suicide runs, we kicked the fans up to 100%, and even with two cards it wasn’t nearly as annoying as running a single ATI fan (say, on the 4870×2) at 100%. If you’re a fanatic, aftermarket cooling is available for a price, but use good judgment: with a well-ventilated chassis, you shouldn’t need it.

POWER CONSUMPTION

To measure power consumption, we idled the computer at the desktop with no unneeded background tasks running on the CPU or GPU. For load testing, we looped 3DMark Vantage for 30 minutes, then recorded the power draw. We repeated the test three times, with a 30-minute cool-down between runs.

GPU Power Consumption (Total System Power Consumption)

| GPU | Idle | Load |
|---|---|---|
| EVGA GTX-280 | 217 Watts | 345 Watts |
| EVGA GTX-280 SLI | 239 Watts | 515 Watts |
| Sapphire Toxic HD 4850 | 183 Watts | 275 Watts |
| Sapphire HD 4870 | 207 Watts | 298 Watts |
| Palit HD 4870×2 | 267 Watts | 447 Watts |

Power consumption runs a little high on these beasts, but if you’re running the most powerful single GPU on the planet, you’re probably more worried about graphics quality than power consumption. You don’t buy these to lower the electric bill. We didn’t notice much of an increase during normal activities like web surfing and building charts, but during gaming and 3D applications with the SLI setup, power spiked above 500 Watts. It was hard to take our eyes off the silky-smooth games long enough to check the Kill A Watt we used to measure power consumption; graphics nirvana is a mesmerizing thing.
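Since the Kill A Watt measures the whole system at the wall, one simple way to estimate what the second card costs is to subtract the single-card readings from the SLI readings. Here’s a quick Python sketch that does just that with the numbers from our table. It assumes nothing else in the system changed between runs, and the helper names are our own, purely for illustration.

```python
# Total system power at the wall (watts), as (idle, load), from our Kill A Watt readings.
POWER = {
    "EVGA GTX-280": (217, 345),
    "EVGA GTX-280 SLI": (239, 515),
    "Sapphire Toxic HD 4850": (183, 275),
    "Sapphire HD 4870": (207, 298),
    "Palit HD 4870x2": (267, 447),
}


def second_card_cost(single, sli):
    """Extra wall power (idle, load) from adding a second card.

    Assumes the rest of the system is identical between the two runs.
    """
    return sli[0] - single[0], sli[1] - single[1]


def load_swing(readings):
    """Idle-to-load increase in watts for each configuration."""
    return {name: load - idle for name, (idle, load) in readings.items()}


if __name__ == "__main__":
    idle_extra, load_extra = second_card_cost(POWER["EVGA GTX-280"], POWER["EVGA GTX-280 SLI"])
    print(f"Second GTX-280: +{idle_extra} W idle, +{load_extra} W load")
    for name, swing in load_swing(POWER).items():
        print(f"{name}: {swing} W idle-to-load")
```

By that math, the second GTX-280 adds about 22 Watts at idle and 170 Watts under load, and the SLI setup swings 276 Watts between idle and load.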

OVERCLOCKING

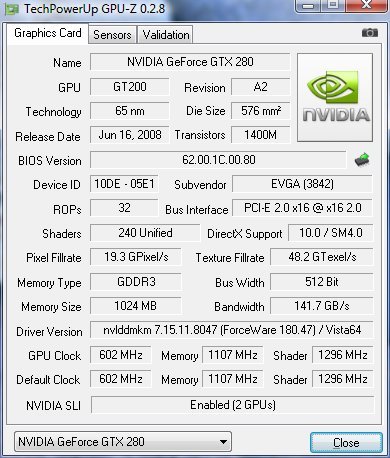

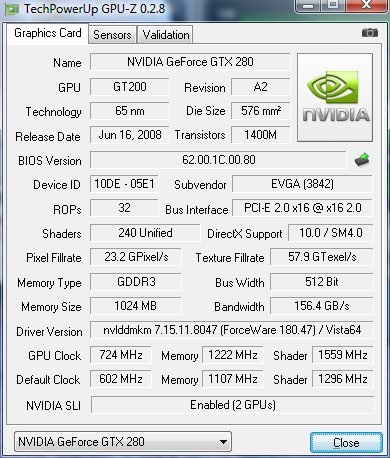

We saved the GPU-Z shot of the EVGA GTX-280 for the overclocking section this time around; otherwise we’d end up showing the same picture early on and again here.

Stock core speed on the EVGA GTX-280 is 602 MHz, with the memory running at 1107 MHz and the shader clock at 1296 MHz. Notice that SLI is enabled in this shot.

With stock fan speeds, we were able to drive the core to 724 MHz, an increase of 122 MHz, which, as you’ll see, gave us a nice little performance boost. With the clocks linked in the EVGA Precision overclocking tool, the shader clock went to 1559 MHz. We got the memory to 1222 MHz, which is sure to help out a little when we run some benchmarks to show the increased performance. Please note that these aren’t maximum overclocks; they were achieved with the fan set to auto. We can reach higher overclocks by kicking the fans up or using additional cooling methods. We don’t want to present a maximum suicide-run overclock and have some unlucky reader think they can run it 24/7, only to destroy a beautiful card like this. Please also note that neither we nor EVGA are responsible for any damage you cause by overclocking, a category we’ve dubbed “Bone Head Stunts”.
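For anyone who wants to check our math on those clocks, here’s a quick Python sketch that turns the stock and overclocked speeds quoted above into percentage gains. It only does arithmetic on the numbers from this review; the `clock_gains` helper is our own illustration and not part of EVGA Precision or any other overclocking tool.

```python
# Stock and overclocked clocks (MHz) for the EVGA GTX-280, as reported in this review.
STOCK = {"core": 602, "shader": 1296, "memory": 1107}
OVERCLOCK = {"core": 724, "shader": 1559, "memory": 1222}


def clock_gains(stock, oc):
    """Return {domain: (delta_mhz, percent_gain)} for each clock domain."""
    gains = {}
    for domain, base in stock.items():
        delta = oc[domain] - base
        gains[domain] = (delta, 100.0 * delta / base)
    return gains


if __name__ == "__main__":
    for domain, (delta, pct) in clock_gains(STOCK, OVERCLOCK).items():
        print(f"{domain:>6}: +{delta} MHz ({pct:.1f}%)")
```

That works out to roughly a 20% bump on the core and shader clocks and about 10% on the memory, all on auto fan.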

On our best run in 3DMark06, we hit 21,923 with a single GTX-280, a pretty hefty score worth some serious bragging rights. Running SLI in 3DMark06 is a little pointless, so we saved that for Vantage, which shows the scaling nicely.

In 3DMark Vantage, the single EVGA GTX-280 hit 13,942 with PhysX enabled. Go ahead: scream, whine, plead, wail, and gnash your teeth about PhysX all you want. This GPU was designed with PhysX in mind, and disabling it would be like running a marathon in penny loafers instead of running shoes. PhysX whining by ATI aficionados will get one simple response: “Buy an Nvidia GPU or live without PhysX. But don’t whine about it. We don’t have any cheese to go with that whine.”

Running in SLI, we jump from 13,942 to 23,724, which is more than respectable by anyone’s standards. Except ours, of course, but we’re driving for records among the staff. That’s another story, though.

To keep the whining to a minimum, we also ran SLI in Vantage without PhysX and got 20,735, which is still a respectable score in 3DMark Vantage’s somewhat stingy scoring system.

Then we cranked the SLI setup to 724/1222 and got a whopping 27,131. You might want to stop for a moment and wipe that drool from your chin. We have scored higher, but we wanted to stick to the clocks we told you about earlier. Now imagine the score we can pull off when we break out our third EVGA GTX-280 once the triple-SLI-capable board we have coming in gets tossed into the mix. Mind-boggling, isn’t it? For the Staff: I believe that puts me in the lead for now, so get crackin’!
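For those keeping score at home, this short Python sketch computes the percentage differences between the Vantage results above. It assumes the 27,131 overclocked SLI run had PhysX enabled, like the 23,724 run it’s compared against, and the `pct_gain` helper is just our illustration. Keep in mind these are overall Vantage scores, so the scaling figure reflects the whole benchmark rather than pure GPU frame rates.

```python
# Overall 3DMark Vantage scores quoted in this review.
VANTAGE = {
    "single_physx": 13_942,   # one GTX-280, PhysX on
    "sli_physx": 23_724,      # two GTX-280s in SLI, PhysX on
    "sli_no_physx": 20_735,   # SLI, PhysX off
    "sli_physx_oc": 27_131,   # SLI at 724/1222 (assumed PhysX on)
}


def pct_gain(new, old):
    """Percentage increase of `new` over `old`."""
    return 100.0 * (new - old) / old


sli_scaling = pct_gain(VANTAGE["sli_physx"], VANTAGE["single_physx"])
physx_bonus = pct_gain(VANTAGE["sli_physx"], VANTAGE["sli_no_physx"])
oc_bonus = pct_gain(VANTAGE["sli_physx_oc"], VANTAGE["sli_physx"])

if __name__ == "__main__":
    print(f"SLI over single card:      +{sli_scaling:.1f}%")
    print(f"PhysX on vs. off (SLI):    +{physx_bonus:.1f}%")
    print(f"Overclock vs. stock (SLI): +{oc_bonus:.1f}%")
```

The SLI jump works out to about 70%, while PhysX and the overclock are each worth roughly 14% on top of the stock SLI score.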

Conclusion

We’ve tested a lot of GPUs on Bjorn3D, and we’ll continue to test plenty more, but right now the EVGA GTX-280 and its GTX-280 brethren are the fastest single-GPU cards on the planet. Driver support for Nvidia GPUs seems to be better than for ATI cards, and the future of SLI is looking really good. With our Far Cry 2 testing done and SLI scaling reaching 60-70 percent on average, and even higher under well-optimized conditions, SLI could be coming into its prime. Nvidia has made a concerted effort to improve SLI, and the effort is paying off. Add Core i7 CPUs pumping out more power than ever before, with clock speeds reaching insane levels, and you have a powerful combination that’s sure to make many people drool uncontrollably.

With blazing performance, quiet operation, and easy setup and use, the EVGA GTX-280 has earned our respect and is sure to be a force in the ever-growing demands of high-end gaming for some time to come. We’d go so far as to say one of these beasts will drive any game for the foreseeable future. Later, when prices drop, throw another one in for SLI, or get crazy and run three in SLI, and you’ll have unparalleled graphics nirvana for a few years to come.

We are trying out a new addition to our scoring system to provide additional feedback beyond a flat score. Please note that the final score isn’t an aggregate average of the new rating system.

- Performance 10

- Value 8

- Quality 10

- Warranty 9

- Features 9.5

- Innovation 10

Pros:

+ Runs Cooler Than ATI GPUs

+ SLI Is Scaling Better Than CrossFire

+ Quiet

+ Blazing FPS That Will Make Your Jaw Drop

+ Single GPU Performance Will Make You Smile

+ Two GTX-280’s In SLI Will Make You So Happy You Might Run Down The Street And Slap Someone

+ The Fastest Single-GPU Card Made

Cons:

– Price Is A Little Hefty

– Box Doesn’t Include Cheese For PhysX Whiners

The EVGA GTX-280 is the most powerful single-GPU card on the planet. It’s the fastest GPU we have ever tested, and at those sweet overclocks it gets even better. The fastest GPU known to man deserves a:

Final Score: 9.5 out of 10 and the Coveted Bjorn3D Golden Bear Award.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
