The Leadtek 8600 GT is an example of a nice mid-range chip paired with old-school DDR2 memory. Now that the AMD HD 3850 / 3870 are out, Leadtek should have priced these models more aggressively in case someone plans on bridging them.

INTRODUCTION

It’s been a while since the mid-range G84 hit the market. To be frank, after testing this card I had my doubts about its standing in the community. Regardless of class, these cards, along with the entry-level ones, are the actual dough makers (not to be confused with kitchen dough). High-end and enthusiast products bring in only loose change by comparison, yet they play a very important role in the market: prestige and respect among (pardon the term) fanciers.

We don’t always review uber-performing graphics cards here at Bjorn3D. Neither do we review many entry-level cards. Mid-range SKUs are fascinating because they shape all kinds of trends in the graphics market, plus they won’t cost you an arm and a leg. Okay, enough of the philosophy.

Leadtek has quite a few PX8600 models for you to choose from. The one I have on my bench today sits at the bottom of the food chain, in an SLI setup to be precise. It’s the entry-level WinFast PX8600 GT TDH board featuring blistering fast GDDR2 memory. A nice way to diversify your SKUs, isn’t it? Now let’s get off Leadtek’s back and check out what these two can do for us when it comes to gaming.

KEY FEATURES

Full Microsoft® DirectX® 10 Support

World’s first DirectX 10 GPU with full Shader Model 4.0 support delivers unparalleled levels of graphics realism and film-quality effects.

NVIDIA® Lumenex™ Engine

Delivers stunning image quality and floating point accuracy at ultra-fast frame rates.

- 16x Anti-aliasing: Lightning fast, high-quality anti-aliasing at up to 16x sample rates obliterates jagged edges.

- 128-bit floating point High Dynamic-Range (HDR): Twice the precision of prior generations for incredibly realistic lighting effects

PCI Express support

Designed to run perfectly with the next-generation PCI Express bus architecture. This new bus doubles the bandwidth of AGP 8X delivering over 4 GB/sec in both upstream and downstream data transfers.
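The "over 4 GB/sec" claim can be sanity-checked with a little arithmetic. The sketch below is not from Leadtek's material; it assumes a first-generation PCI Express x16 link (2.5 GT/s per lane with 8b/10b encoding) and an AGP 8X bus that is 32 bits wide on a 66.67 MHz base clock. The function names are made up for illustration.

```python
def pcie_gen1_bandwidth_gbs(lanes: int) -> float:
    """Usable bandwidth per direction for an assumed PCIe 1.x link, in GB/s."""
    raw_transfers_per_s = 2.5e9      # assumption: 2.5 GT/s per lane
    encoding_efficiency = 8 / 10     # assumption: 8b/10b line encoding
    bits_per_s = raw_transfers_per_s * encoding_efficiency * lanes
    return bits_per_s / 8 / 1e9


def agp_bandwidth_gbs(multiplier: int) -> float:
    """Peak bandwidth of an assumed 32-bit AGP bus, in GB/s."""
    base_clock_hz = 66.67e6          # assumption: AGP base clock
    bus_width_bytes = 4              # assumption: 32-bit data path
    return base_clock_hz * multiplier * bus_width_bytes / 1e9


pcie_x16 = pcie_gen1_bandwidth_gbs(16)
agp_8x = agp_bandwidth_gbs(8)
print(f"PCIe x16: {pcie_x16:.2f} GB/s per direction")
print(f"AGP 8X:   {agp_8x:.2f} GB/s")
print(f"Ratio:    {pcie_x16 / agp_8x:.2f}x")
```

Under these assumptions the x16 link works out to roughly 4 GB/s in each direction, a little under twice the AGP 8X figure, which is in line with the marketing copy.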

NVIDIA® PureVideo™ HD technology

The combination of high-definition video processors and NVIDIA DVD decoder software delivers unprecedented picture clarity, smooth video, accurate color, and precise image scaling for all video content to turn your PC into a high-end home theater. (Feature requires supported video software. The HDCP function is available on PX8600 GT w/ HDCP configurations. Find the information on retail package.)

NVIDIA® ForceWare™ Unified Driver Architecture (UDA)

OpenGL® 2.0 Optimizations and Support

Full OpenGL support, including OpenGL® 2.0

VPU SPECIFICATION

The codename for this lovely entry-level 8600 GT is G84. It is literally the lowest-performing 8600 GT on the planet, hence the entry-level classification. As for main features, it resembles G80 in all its glory. The differences lie in the architecture, which I will go over briefly.

G84 is built on TSMC’s 80nm process with 289 million transistors onboard. The core comes with 32 Stream Processors, a quarter of what G80 offers in terms of shading capabilities (128). The good thing is, G84’s texturing unit can process eight texture addresses and eight filtering operations per clock, whereas G80 can do four texture addresses and eight filtering ops per clock. Going down the pipe we find 8 ROP units (raster operation) as opposed to 24 on the G80. The two ROP partitions can handle up to 64 Z samples; 16 color + 16 Z samples per clock or 32 color + Z samples per clock. Another obvious difference is the memory architecture, which is 128-bit compared to 320-bit / 384-bit on G80.

Video playback on G84 is handled by the video decoding engine called VP2 (short for video processor 2), which can decode MPEG-2, VC-1 and H.264 content with the help of the BSP engine (bitstream processor). The third little core, AES128, helps decrypt HD content and accelerate the whole process.

| Video card | Leadtek PX8600 GT 512MB | PowerColor HD 2600 XT |
|---|---|---|
| GPU (256-bit) | G84 | RV630 |
| Process | 80nm (TSMC fab) | 65nm (TSMC fab) |
| Transistors | ~289 Million | ~390 Million |
| Memory Architecture | 128-bit | 128-bit |
| Frame Buffer Size | 512 MB GDDR-2 | 256 MB GDDR-3 |
| Rasteriser | Stream Processors: 32; Precision: FP32; Texture filtering: 16; ROPs: 8; Z samples: 64 | Fragment Processors: 120 |
| Bus Type | PCI-e 16x | PCI-e 16x |
| Core Clock | 540 MHz | 800 MHz |
| Memory Clock | 800 MHz DDR2 | 1400 MHz DDR3 |
| RAMDACs | 2x 400 MHz DACs | 2x 400 MHz DACs |
| Memory Bandwidth | 12.8 GB / sec | 22.4 GB / sec |
| Pixel Fillrate | 4.3 GPixels / sec | 3.2 GPixels / sec |
| Texture Fillrate | 8.6 GTexels / sec | 6.4 GTexels / sec |
| DirectX Version | 10 | 10 |
| Pixel Shader | 4.0 | 4.0 |
| Vertex Shader | 4.0 | 4.0 |
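The bandwidth and fillrate rows follow directly from the clocks and unit counts, so they are easy to double-check. Here is a minimal sketch of that arithmetic. The `GpuSpec` class is purely illustrative, and the ROP and texture unit counts for RV630 (4 and 8) are assumptions back-solved from the table's fillrate figures, since the table itself only lists its fragment processors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    core_mhz: float           # core clock from the table
    mem_effective_mhz: float  # effective (DDR) memory clock from the table
    bus_width_bits: int       # memory bus width from the table
    rops: int                 # raster operation units
    texture_units: int        # texture filtering units

    def memory_bandwidth_gbs(self) -> float:
        # transfers per second times bytes moved per transfer
        return self.mem_effective_mhz * 1e6 * (self.bus_width_bits / 8) / 1e9

    def pixel_fillrate_gpix(self) -> float:
        # one pixel per ROP per core clock
        return self.core_mhz * self.rops / 1000

    def texture_fillrate_gtex(self) -> float:
        # one texel per texture unit per core clock
        return self.core_mhz * self.texture_units / 1000


g84 = GpuSpec("G84", 540, 800, 128, rops=8, texture_units=16)
# Assumption: RV630 unit counts are inferred, not taken from the table.
rv630 = GpuSpec("RV630", 800, 1400, 128, rops=4, texture_units=8)

for gpu in (g84, rv630):
    print(
        f"{gpu.name}: {gpu.memory_bandwidth_gbs():.1f} GB/s, "
        f"{gpu.pixel_fillrate_gpix():.2f} GPixels/s, "
        f"{gpu.texture_fillrate_gtex():.2f} GTexels/s"
    )
```

The point to take away is that the GDDR2 card's memory bandwidth is a little over half that of the GDDR3 competitor on the same 128-bit bus, even though its fillrates look healthier on paper.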

NVIDIA® unified architecture

Fully unified shader core dynamically allocates processing power to geometry, vertex, physics, or pixel shading operations, delivering up to 2x the gaming performance of prior generation GPUs.

GigaThread™ Technology

Massively multi-threaded architecture supports thousands of independent, simultaneous threads, providing extreme processing efficiency in advanced, next generation shader programs.


NVIDIA® TurboCache™ Technology

Shares the capacity and bandwidth of dedicated video memory and dynamically available system memory for turbocharged performance and larger total graphics memory.

NVIDIA® SLI™ Technology

Delivers up to 2x the performance of a single graphics card configuration for unequaled gaming experiences by allowing two graphics cards to run in parallel. The must-have feature for performance PCI Express® graphics, SLI dramatically scales performance on today’s hottest games. NVIDIA SLI Technology is not available on GeForce 8400 GPUs.

NVIDIA® Lumenex™ Engine

Delivers stunning image quality and floating point accuracy with ultra-fast frame rates:

- 16X Anti-aliasing Technology: Lightning fast, high-quality anti-aliasing at up to 16x sample rates obliterates jagged edges.

- 128-bit Floating Point High Dynamic-Range (HDR) Lighting: Twice the precision of prior generations for incredibly realistic lighting effects, now with support for anti-aliasing.

NVIDIA® Quantum Effects™ Technology

Advanced shader processors architected for physics computation enable a new level of physics effects to be simulated and rendered on the GPU, all the while freeing the CPU to run the game engine and AI.

NVIDIA® ForceWare® Unified Driver Architecture (UDA)

Delivers a proven record of compatibility, reliability, and stability with the widest range of games and applications. ForceWare provides the best out-of-box experience for every user and delivers continuous performance and feature updates over the life of NVIDIA GeForce® GPUs.

OpenGL® 2.0 Optimizations and Support

Ensures top-notch compatibility and performance for OpenGL applications.

NVIDIA® nView® Multi-Display Technology

Advanced technology provides the ultimate in viewing flexibility and control for multiple monitors.

PCI Express Support

Designed to run perfectly with the PCI Express bus architecture, which doubles the bandwidth of AGP 8X to deliver over 4 GB/sec. in both upstream and downstream data transfers.

Dual 400MHz RAMDACs

Blazing-fast RAMDACs support dual QXGA displays with ultra-high, ergonomic refresh rates, up to 2048×1536@85Hz.

Dual Dual-link DVI Support

Able to drive the industry’s largest and highest resolution flat-panel displays up to 2560×1600. Available on select GeForce 8800 and 8600 GPUs.

One Dual-link DVI Support

Able to drive the industry’s largest and highest resolution flat-panel displays up to 2560×1600. Available on select GeForce 8500 GPUs.

One Single-link DVI Support

Able to drive the industry’s largest and highest resolution flat-panel displays up to 1920×1200. Available on select GeForce 8400 GPUs.

Built for Microsoft® Windows Vista™

NVIDIA’s fourth-generation GPU architecture built for Windows Vista gives users the best possible experience with the Windows Aero 3D graphical user interface, included in the new operating system (OS) from Microsoft.

NVIDIA® PureVideo™ HD Technology

The combination of high-definition video decode acceleration and post-processing that delivers unprecedented picture clarity, smooth video, accurate color, and precise image scaling for movies and video. Feature requires supported video software. Features may vary by product.

- Discrete, Programmable Video Processor: NVIDIA PureVideo is a discrete programmable processing core in NVIDIA GPUs that provides superb picture quality and ultra-smooth movies with low CPU utilization and power.

- Hardware Decode Acceleration: Provides ultra-smooth playback of H.264, VC-1, WMV and MPEG-2 HD and SD movies.

- HDCP Capable: Designed to meet the output protection management (HDCP) and security specifications of the Blu-ray Disc and HD DVD formats, allowing the playback of encrypted movie content on PCs when connected to HDCP-compliant displays. Requires other HDCP-compatible components.

- Spatial-Temporal De-Interlacing: Sharpens HD and standard definition interlaced content on progressive displays, delivering a crisp, clear picture that rivals high-end home-theater systems.

- High-Quality Scaling: Enlarges lower resolution movies and videos to HDTV resolutions, up to 1080i, while maintaining a clear, clean image. Also provides downscaling of videos, including high-definition, while preserving image detail.

- Inverse Telecine (3:2 & 2:2 Pulldown Correction): Recovers original film images from films-converted-to-video, providing more accurate movie playback and superior picture quality.

- Bad Edit Correction: When videos are edited after they have been converted from 24 to 25 or 30 frames, the edits can disrupt the normal 3:2 or 2:2 pulldown cadence. PureVideo uses advanced processing techniques to detect poor edits, recover the original content, and display perfect picture detail frame after frame for smooth, natural looking video.

- Noise Reduction: Improves movie image quality by removing unwanted artifacts.

- Edge Enhancement: Sharpens movie images by providing higher contrast around lines and objects.

THE CARD

It comes as no surprise that this PCB is green. Most Leadtek cards are built around the reference design, except for special edition cards such as the Leviathan. The cooling system, however, is clearly Leadtek’s own. In a nutshell, it’s quiet and cools the GPU and RAM chips really well.


There isn’t much to say about the Leadtek WinFast PX8600 GT TDH GDDR2. Its standard design does not generate oohs and aahs. It’s simple, without any bells and whistles. The cooling system, however, is well thought out. Not only does it cool the GPU, it also covers the RAM chips (32Mx16, PG-TFBGA-84 package) so heat dissipates faster from these areas. The board carries standard display support: VGA compatible, VESA compatible BIOS for SVGA and DDC 1/2b/2b+, all available through dual DVI-I outputs and an S-Video port (HDTV ready). Going one row below, I’ve stripped the card naked and exposed the GPU and RAM modules. If you fancy knowing what kind of GDDR memory is used on these cards, it’s all here: Qimonda HYB18T512161BF-25. If you don’t care much about specs, I will spill the beans here. This not-so-fast 8600 GT from Leadtek is equipped with 512 MB of onboard GDDR2 memory clocked at a laughable 400 MHz (800 MHz DDR effective).
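For the curious, the memory layout can be worked out from the chip organisation quoted above. This is a rough sketch, not a datasheet lookup. It assumes every chip sits in parallel on the bus and contributes all 16 of its data lines, and it assumes the "-25" suffix in the part number denotes a 2.5 ns cycle time.

```python
CHIP_DEPTH_WORDS = 32 * 2**20   # "32M" addressable words per chip
CHIP_WIDTH_BITS = 16            # "x16" data width per chip
FRAME_BUFFER_MB = 512           # total onboard memory
ASSUMED_CYCLE_NS = 2.5          # assumption: "-25" means a 2.5 ns clock period

chip_capacity_mbit = CHIP_DEPTH_WORDS * CHIP_WIDTH_BITS // 2**20
chip_capacity_mb = chip_capacity_mbit // 8
chip_count = FRAME_BUFFER_MB // chip_capacity_mb

# Assumption: all chips are wired in parallel, so their widths add up.
bus_width_bits = chip_count * CHIP_WIDTH_BITS

clock_mhz = 1000 / ASSUMED_CYCLE_NS
effective_mhz = clock_mhz * 2   # double data rate: two transfers per clock

print(f"{chip_capacity_mbit} Mbit ({chip_capacity_mb} MB) per chip")
print(f"{chip_count} chips -> {bus_width_bits}-bit bus")
print(f"{clock_mhz:.0f} MHz clock, {effective_mhz:.0f} MHz effective")
```

Under those assumptions the numbers line up with the spec table: eight chips, a 128-bit bus and 800 MHz effective.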

BUNDLE

Leadtek chose daring art for their 8600 series. Regardless of model and performance, the front of the box features a very strange-looking girl with blades in both hands (except for Lost Planet editions). She looks stunned, and that simply doesn’t appeal to me at all. As for packaging, it’s worth noting that these cards were not wrapped in antistatic bags, just regular bubble wrap, which in my opinion is insufficient.


The sides and back are nicely laid out with major features, package contents and system requirements. The bundle is rather poor and includes only the essentials:

- Quick Installation guide

- General guide

- Driver & Utilities CD-ROM

- DVI to VGA converter

- HDTV cable x1

TESTING METHODOLOGY

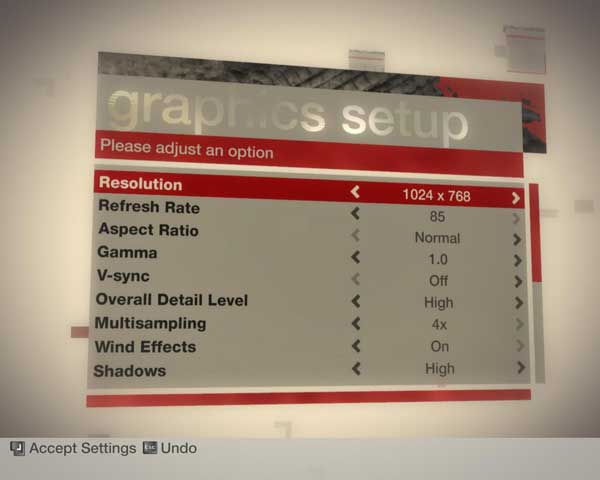

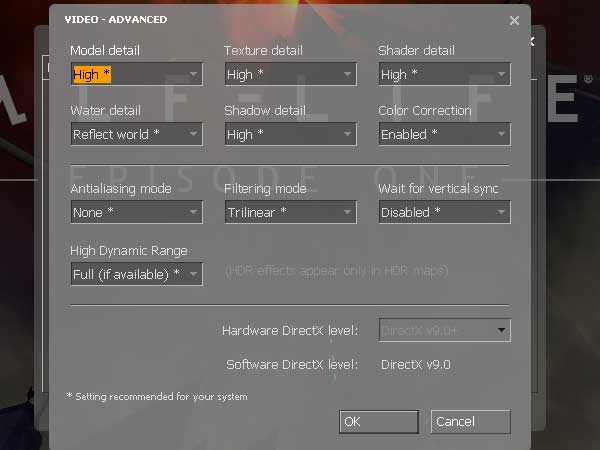

Gaming tests were performed using various image quality settings depending on game defaults: 0aa0af, 0aa4af, 0aa8af and 4aa8af (antialiasing level followed by anisotropic filtering level). Because we are not strictly dealing with an entry-level video card, we will use the following resolutions: 1024×768, 1280×1024 and 1600×1200. As far as driver settings are concerned, the card was clocked at 540 MHz core and 800 MHz DDR2 for memory. High quality AF was used for anisotropic filtering. All other settings were at their default states.
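To make the shorthand concrete, here is a small sketch that decodes tags such as `4aa8af` and enumerates every resolution and preset combination. The helper name `parse_preset` and the dictionary layout are invented for illustration. Since the presets actually used depended on each game's defaults, the full grid is an upper bound on the runs per game rather than a record of what was tested.

```python
import re
from itertools import product

PRESETS = ["0aa0af", "0aa4af", "0aa8af", "4aa8af"]
RESOLUTIONS = [(1024, 768), (1280, 1024), (1600, 1200)]


def parse_preset(tag: str) -> tuple[int, int]:
    """Split a tag such as '4aa8af' into (AA samples, AF level)."""
    match = re.fullmatch(r"(\d+)aa(\d+)af", tag)
    if match is None:
        raise ValueError(f"unrecognised preset tag: {tag!r}")
    return int(match.group(1)), int(match.group(2))


# Every resolution paired with every preset.
runs = []
for (width, height), tag in product(RESOLUTIONS, PRESETS):
    aa, af = parse_preset(tag)
    runs.append({"width": width, "height": height, "aa": aa, "af": af})

for run in runs:
    print(f"{run['width']}x{run['height']}  {run['aa']}x AA  {run['af']}x AF")
```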

PLATFORM

All of our benchmarks were run on an Intel Core 2 Duo platform clocked at 3.0GHz. Performance of the Leadtek WinFast PX8600 GT SLI was measured on an ASUS P5N-E SLI motherboard. The table below shows the test system configuration as well as the benchmarks used throughout this comparison.

Installation was flawless, though I noticed a lot of refresh rate problems, which are likely caused by Vista’s implementation. Both cards booted without any unexpected quirks. As for overclocking, nTune was used to determine the maximum overclock, along with RivaTuner to check temperatures.

| Testing Platform | |
|---|---|
| Processor | Intel Core 2 Duo E6600 @ 3.0 GHz |
| Motherboard | ASUS P5N-E SLI |
| Memory | GeIL PC2-6400 DDR2 Ultra 2GB kit |
| Video card(s) | Leadtek WinFast PX8600 GT TDH 512MB GDDR2 SLI; PowerColor HD 3850 Xtreme |
| Hard drive(s) | Seagate SATA II ST3250620AS; Western Digital WD120JB |
| CPU Cooling | Cooler Master Hyper 212 |
| Power supply | Thermaltake Toughpower 850W |
| Case | Thermaltake SopranoFX |
| Operating System | Windows XP SP2 32-bit |
| API, drivers | DirectX 9.0c; DirectX 10; NVIDIA Forceware 169.04; ATI CATALYST 7.11 BETA |
| Other software | nTune, RivaTuner |

| Benchmarks | |
|---|---|
| Synthetic | 3DMark 2006; D3D Right Mark |
| Games | Bioshock / FRAPS |

3DMark06

The cards’ throughput was measured using the 3DMark06 application. I’ll be comparing it to the HD 3850 Xtreme from PowerColor.

I have to say G84’s pixel shading capabilities are catching up with the HD 3850, as can be seen right below.

Hats off to the 8600 GT in SLI, as it does really well in all game tests. It even outscores the PowerColor HD 3850 in the GT1 test.

RightMark

Geometry processing (vertex processing) is much stronger on HD 3850.

DirectX 9: BIOSHOCK

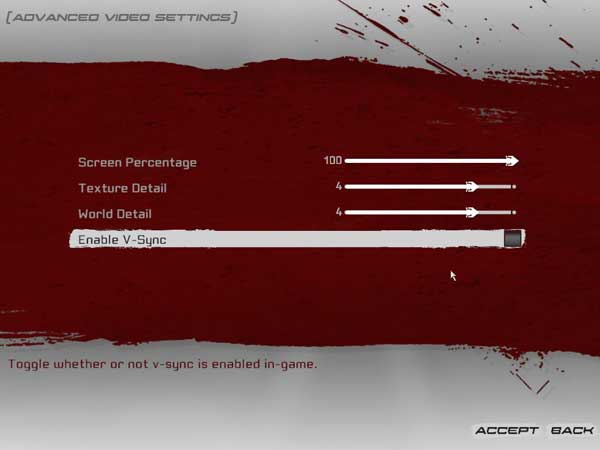

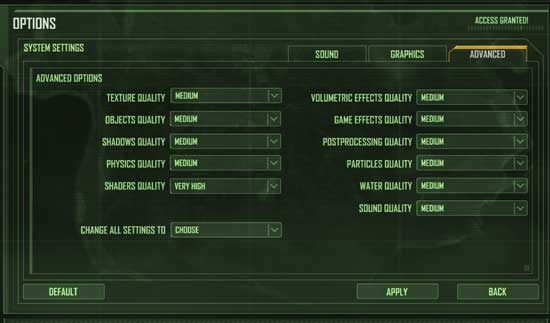

Standard settings were used with texture detail slider notched down to medium, rest at high.

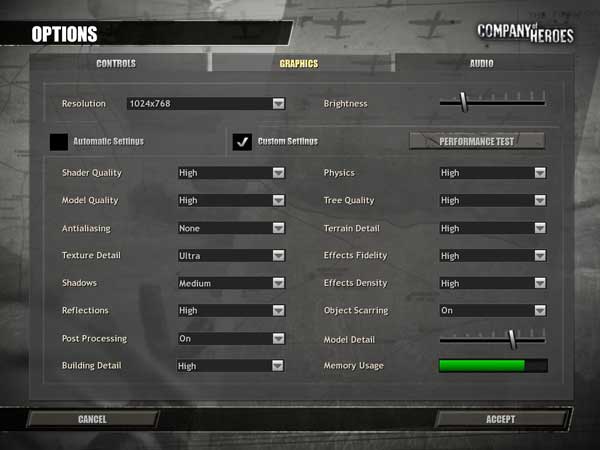

DirectX 9: COMPANY OF HEROES

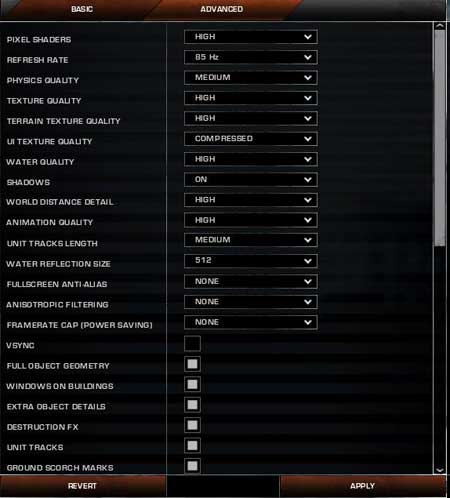

Although this isn’t a very graphics-intensive game, I left most of the settings at high. Note that vSync was turned off with the -novsync flag. Here you can clearly see the 3850’s superiority over the 8600 GT SLI setup.

DirectX 9: CRYSIS

All tests were run with medium settings and high shader quality (DX9 effects). Because the demo does not support SLI, a single 8600 GT was used instead. It seems the HD 3850 lacks serious driver support for this title.

DirectX 9: COLIN MCRAE DIRT

All settings were maxed out, and the 8600 GT SLI simply didn’t have the juice for high-resolution gaming in this title.

DirectX 9: HALF-LIFE 2 EPISODE ONE

Benchmarks were performed under maximum settings with additional 4AA and 8AF. Although this 8600 GT seems old-school, it is stepping on the 3850’s toes in HL2.

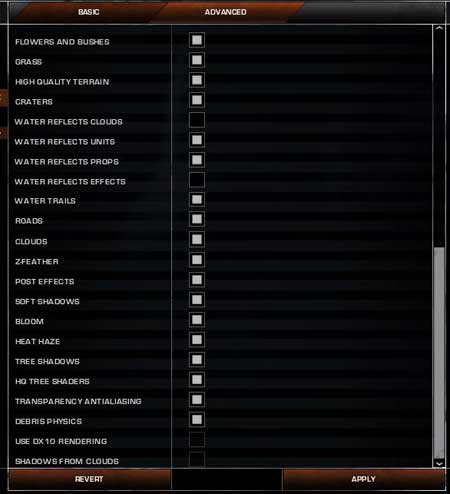

DirectX 9: UNREAL TOURNAMENT 3

The texture and world detail sliders were pushed down to level 4, but that’s still considered high settings. I didn’t bother forcing Antialiasing in this game as it required some .inf hacking. Also note that a frame limiter of 62 FPS was present during the testing.
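The frame limiter matters when reading the scores, because any stretch where a card would run faster than the cap gets flattened to 62 FPS. The toy sketch below shows the effect. The sample frame rates are made up for illustration (they are not measurements from this review), and clamping each sample is a simplification of how a real limiter behaves.

```python
FRAME_CAP_FPS = 62


def min_frame_time_ms(cap_fps: float) -> float:
    """Shortest frame time the limiter allows, in milliseconds."""
    return 1000 / cap_fps


def capped_average(samples_fps: list[float], cap_fps: float) -> float:
    """Average frame rate after clamping every sample to the cap."""
    capped = [min(sample, cap_fps) for sample in samples_fps]
    return sum(capped) / len(capped)


# Hypothetical uncapped frame rates for two cards (NOT review data).
card_a = [55, 70, 90]
card_b = [55, 100, 140]

print(f"Minimum frame time: {min_frame_time_ms(FRAME_CAP_FPS):.2f} ms")
print(f"Card A capped average: {capped_average(card_a, FRAME_CAP_FPS):.2f} FPS")
print(f"Card B capped average: {capped_average(card_b, FRAME_CAP_FPS):.2f} FPS")
```

In this made-up case both cards report the same capped average even though one is clearly faster uncapped, so small gaps near the 62 FPS ceiling should be read with care.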

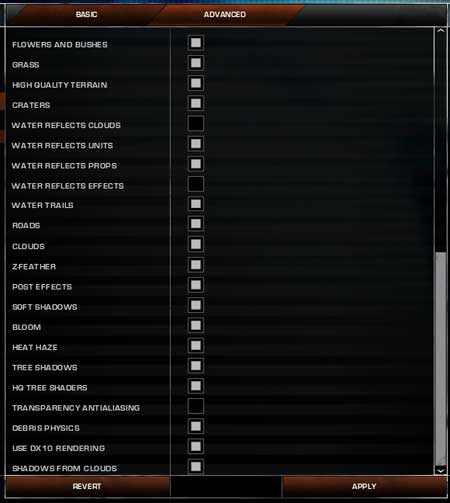

DirectX 9: WORLD IN CONFLICT

As you can see below, there were quite a few settings to play with. In the end, high quality options were chosen. The WinFast 8600 GT SLI did not do very well in World in Conflict with FSAA and AF turned on.

DirectX 10: BIOSHOCK

Standard settings were used with the texture detail slider notched down to medium, the rest at high and DirectX 10 options set to ON. AMD’s HD 3850 is a no-go when it comes to DX10 performance. Hats off to the pair of 8600 GTs.

DirectX 10: COMPANY OF HEROES

Although this isn’t a very graphics-intensive game, I left most of the settings at high. Note that vSync was turned off with the -novsync flag. Even though the 3850 manages to outperform the competition, it certainly isn’t doing its best.

DirectX 10: CRYSIS

All tests were run with medium settings and very high shader quality (DX10 effects). Because the demo does not support SLI, a single 8600 GT was used instead. Again, the HD 3850 doesn’t seem to be doing a lot of damage to a single 8600 GT. No eye candy here either, as it would require my intervention or, worse, simply not work.

DirectX 10: UNREAL TOURNAMENT 3

The texture and world detail sliders were pushed down to level 4, but that’s still considered high settings. I didn’t bother forcing Antialiasing in this game as it required some .inf hacking. Also note that a frame limiter of 62 FPS was present during the testing. DirectX 10 effects were automatically turned on.

DirectX 10: WORLD IN CONFLICT

There were quite a few settings to play with in WiC. In the end, high quality options were chosen. This is probably the only DX10 title where the 8600 GT SLI was no match for the HD 3850 in terms of performance. A much appreciated frame rate and great scalability.

POWER & TEMPERATURE

Whether you’re building a simple desktop PC or a power-hungry gaming system, it’s always nice to know how much juice is really needed to power your graphics card. It’s pretty simple math if you look at it logically: the better the card performs, the more expensive it is and the more watts it burns. Obviously this doesn’t hold when comparing two different generations of chips, as they are built using totally different processes.

The above graph shows temperatures and power consumption for the Leadtek WinFast PX8600 GT SLI as well as other cards for comparison. Peak power for this SLI setup hovers around 200 watts under load and roughly 160W when the system is idling. That’s not bad, though a quality 500-600W PSU would be needed to handle the rest of the system components. The temperature does not exceed 60 Celsius, which indicates good heat dissipation.
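To put those figures in perspective, the quick sketch below works out the gap between idle and load and what share of the suggested PSU ratings the load figure represents. The text does not make clear whether the 200 W covers the cards alone or the whole system, so treat the percentages as a rough guide only. The helper name is made up for illustration.

```python
LOAD_W = 200   # load draw quoted above
IDLE_W = 160   # idle draw quoted above


def share_of_rating(draw_w: float, psu_rating_w: float) -> float:
    """Fraction of a PSU's rated output taken up by a given draw."""
    return draw_w / psu_rating_w


load_delta_w = LOAD_W - IDLE_W
print(f"Extra draw under load: {load_delta_w} W")

for rating in (500, 600):
    share = share_of_rating(LOAD_W, rating)
    print(f"{rating} W PSU: load figure uses {share:.0%} of the rating")
```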

OVERCLOCKING

G84 has already made friends among overclockers. An easy volt mod results in a massive core overclock and a major frame rate improvement. It’s quite possible to reach GTS clocks and even exceed them. However, this WinFast model sports slow DDR2 memory, and bumping up those chips won’t get you anywhere. Without a volt mod, this card reached a miserable overclock: 620 MHz core and 420 MHz memory (840 DDR).
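Here is what that overclock amounts to in numbers, as a quick sketch. The stock clocks (540 MHz core, 400 MHz memory) and the 128-bit bus come from the spec table earlier in the review; the helper names are made up for illustration.

```python
def percent_gain(stock: float, overclocked: float) -> float:
    """Relative increase over the stock value, in percent."""
    return (overclocked - stock) / stock * 100


def bandwidth_gbs(effective_mhz: float, bus_width_bits: int = 128) -> float:
    """Theoretical memory bandwidth in GB/s for a given effective clock."""
    return effective_mhz * 1e6 * bus_width_bits / 8 / 1e9


core_gain = percent_gain(540, 620)
memory_gain = percent_gain(400, 420)
stock_bandwidth = bandwidth_gbs(800)
overclocked_bandwidth = bandwidth_gbs(840)

print(f"Core:   +{core_gain:.1f}%")
print(f"Memory: +{memory_gain:.1f}%")
print(f"Bandwidth: {stock_bandwidth:.2f} -> {overclocked_bandwidth:.2f} GB/s")
```

The core gains roughly 15%, but the memory only moves by 5%, which lifts theoretical bandwidth from 12.8 to about 13.4 GB/s. On a card that is already starved for bandwidth, that is next to nothing.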

I strongly suggest getting a DDR3 model instead if you’re planning on overclocking it; a much better buy.

CONCLUSIONS

I honestly don’t know who to recommend such an SLI setup to. Leadtek has been known for offering a bunch of SKUs to its customers. This 8600 GT is an example of a nice mid-range chip paired with old-school DDR2 memory. Now that the AMD HD 3850 / 3870 are out, Leadtek should have priced these models more aggressively in case someone plans on bridging them. Although I have not shown scores with SLI disabled, they are nothing to be proud of. Lack of bandwidth shows everywhere high resolution is used and antialiasing is enabled.

To make a long story short, I thought it would be interesting to check out how the two of these would manage against the much more powerful and cheaper HD 3850. Let’s not be so pessimistic, as these two little 8600 GTs can do plenty of damage in DirectX 10 games. Priced at $125 each, however, they won’t beat the HD 3850 (as low as $180) on either price or performance.
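The price gap is easy to quantify with the two figures quoted above. This is a minimal sketch using only those prices; it ignores the cost of an SLI-capable motherboard and any street-price fluctuation.

```python
PRICE_8600GT_USD = 125   # per card, as quoted above
PRICE_HD3850_USD = 180   # lowest price quoted above

sli_pair_cost = 2 * PRICE_8600GT_USD
premium_usd = sli_pair_cost - PRICE_HD3850_USD
premium_percent = premium_usd / PRICE_HD3850_USD * 100

print(f"8600 GT SLI pair: ${sli_pair_cost}")
print(f"Single HD 3850:   ${PRICE_HD3850_USD}")
print(f"SLI premium:      ${premium_usd} ({premium_percent:.0f}% more)")
```

At these prices the pair costs $70 more than a single HD 3850, or roughly 39% extra for a setup that loses most of the benchmarks above.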

The SLI is there, but with extra eye candy the scores don’t scale well. It’s a pity those DX10 titles don’t support antialiasing out of the box — it would be interesting to see the performance inside and out. For now, we have to live with what we have.

Pros:

+ DX10 ready

+ Second card gives a decent boost

+ Low power draw

Cons:

– Price / performance ratio not justified

– Slow DDR2 memory

– Poor overclocking

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
