World in Conflict was recently released, and it is yet another RTS that offers excellent visuals with lots of effects that all work together to bring your computer to its knees. We decided that it would be fun to see how the different components in your system affect performance in this game, possibly making it a bit easier to figure out what to upgrade if the game runs like a hog on your current system.

INTRODUCTION

I sometimes wonder what kind of computers we would use if no-one played games. It is often said that it is the games that drive the development of new and faster technology and I definitely think that is correct. Who has heard anyone talking about upgrading their computer with a new Quad-Core CPU or GeForce 8800GTX just to “surf faster” or “write documents faster”?

Most new games work fine with the technology available, but from time to time a game comes out that really stresses the computer. Most of the time the game is an FPS (First Person Shooter) like Unreal, Doom 3 and Half Life 2, but recently we have seen that even RTS games, like Company of Heroes and Supreme Commander, have started to use lots of graphical effects that really need a beast of a computer system.

A week ago the game World in Conflict was released, and this is yet another RTS that offers excellent visuals with lots of effects that all work together to bring your computer to its knees. I decided that it would be fun to see how the different components in your system affect performance in this game, possibly making it a bit easier to figure out what to upgrade if the game runs like a hog on your current system.

WORLD IN CONFLICT

It is with a large portion of national pride that I talk about the game World in Conflict. The game was developed by the Swedish studio Massive, who were also behind the Ground Control games.

World in Conflict is an RTS game that has a lot in common with Massive’s earlier games. There is no base-building involved; instead you request reinforcements that are flown into the map. While the earlier games were set in a Sci-Fi environment, World in Conflict is set in our own world at the height of the Cold War. In the game the Soviet Union has decided not to just give up and has instead launched an attack on Europe (this reminds me of the excellent Tom Clancy book, Red Storm Rising). The US gets involved and it looks like the European countries and the US will be able to defeat the Soviet Union. The Soviets, however, use the fact that the US has most of its troops in Europe and launch an attack on US soil. The game starts right after the attack and takes you through levels both in the US and Europe.

What sets the game apart from other RTS games is its stunning visuals. Below you can find some screenshots from the game.

[Five screenshots from the game, each 1600×1200 and larger than 1 MB]

These visuals do not come for free, and on the following pages we will see which components in your system have the biggest effect on overall game performance.

PERFORMANCE – BENCHMARK AND TEST SYSTEM

The game has a built-in benchmark that runs through a pre-set battle in a coastal town. The battle includes all sorts of effects, including a nuclear blast, carpet bombing, lots of smoke, and lots of units firing on each other. Below is a movie of the benchmark that I recorded using Fraps. The benchmark was running at 1024×768 with the quality set to Very High; the movie was then produced in Windows Movie Maker and compressed so that the final file size landed around 26 MB. You can ignore the framerate, as it is affected by Fraps recording the movie.

As you can see a lot is going on in the benchmark. During a real gaming session you will encounter a lot of these effects.

The Hardware

The game was benchmarked with the following components:

Static components:

- ASUS P5K3 Deluxe P35 motherboard

- Thermaltake 850W PSU

- Dell 24” LCD monitor

- Two 80 GB SATAII hard drives

- OS: Windows Vista (32-bit) with all updates including the specific updates for slow DX9/10 performance and slow multi-GPU performance.

CPU:

- C2D E6850 @ 3 GHz and 3.1 GHz

- Intel Quad-CPU Q6600 @ 2.4 GHz and 2.7 GHz

Memory:

- Corsair DDR3-1800MHz @ 800MHz, 1066MHz, 1080 MHz, 1500MHz and 1800MHz

Video cards:

- Gigabyte 8500GT

- AMD reference HD2600XT

- ASUS 640 MB 8800GTS

- AMD reference HD2900XT

- Sparkle Calibre 8800GTX

The drivers used for each card were the latest available for download (beta or not): 163.71 for NVIDIA and Catalyst 7.9 for AMD.

GAME SETTINGS

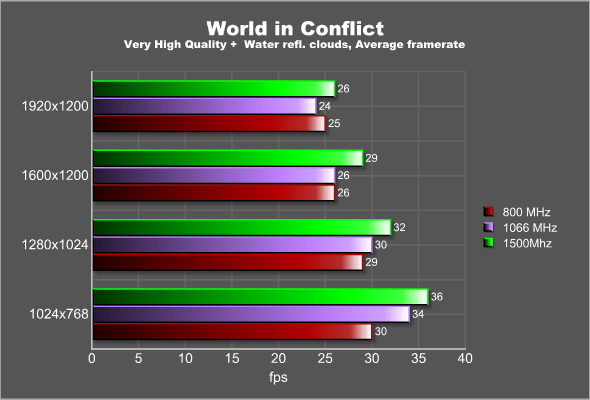

The game has several quality settings: Very Low, Low, Medium, High and Very High. In addition to using any of these presets, you can of course also change each of the many individual quality settings by hand to create your own custom setting. For this article I chose to use Low, Medium, High and Very High. The only change to the presets was at Very High, where I also checked “Water reflects clouds”.

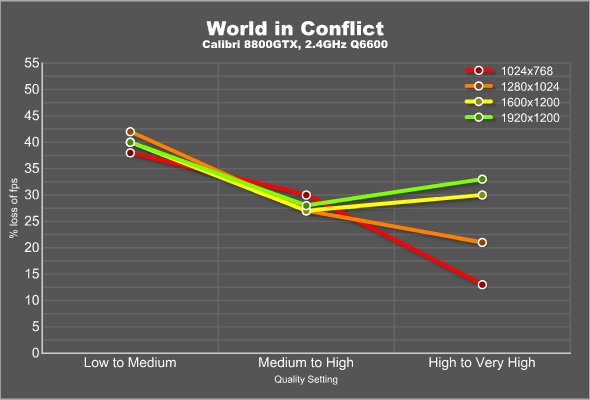

As you can see from the chart, there are a lot of different settings available to turn on and off. To see how much performance we are losing while going from setting to setting, I created a chart showing the loss in % when moving from each quality setting to the next.

The biggest drop in performance comes when we move from the Low quality setting to the Medium quality setting. At all resolutions we see a ~40% drop in performance. The next step, from Medium to High, which is also where we move from DX9 to DX10, sees a smaller drop of around 30%. Lastly, the drop when going from the High setting to the Very High setting depends a lot on the resolution. This chart was done with the fastest card tested, the 8800GTX. With the HD2900XT, as will be seen later in this article, the drop between Medium and High is far larger.
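The percentage loss between two quality settings is simple arithmetic, and it can be sketched in a few lines of Python. The framerates below are made-up placeholders chosen only to illustrate the calculation; they are not measurements from this article, and the function name is my own.

```python
def percent_drop(fps_before, fps_after):
    """Return the performance loss in percent when going from fps_before to fps_after."""
    if fps_before <= 0:
        raise ValueError("fps_before must be positive")
    return (fps_before - fps_after) / fps_before * 100.0


# Hypothetical average framerates, NOT results from this article.
example_fps = {"Low": 100.0, "Medium": 60.0, "High": 42.0}

# Compare each quality setting with the next one up.
settings = list(example_fps)
drops = {
    f"{a} -> {b}": percent_drop(example_fps[a], example_fps[b])
    for a, b in zip(settings, settings[1:])
}

for step, drop in drops.items():
    print(f"{step}: {drop:.0f}% slower")
```

Note that each drop is relative to the setting you are leaving, so two consecutive drops cannot simply be added together to get the total loss.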

I used Fraps to take screenshots during the benchmark so that you can see the difference in quality between the different quality level settings. All screenshots were taken at 1280×720. Beware that each page loads 4 images of around 200-300 KB each, so if you have a slow connection you will have to wait a bit for the images to load.

[Five screenshot comparison pages, Image 1 to Image 5, each comparing the different quality levels]

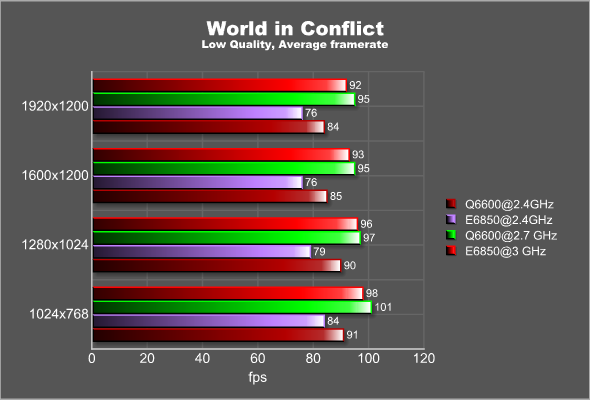

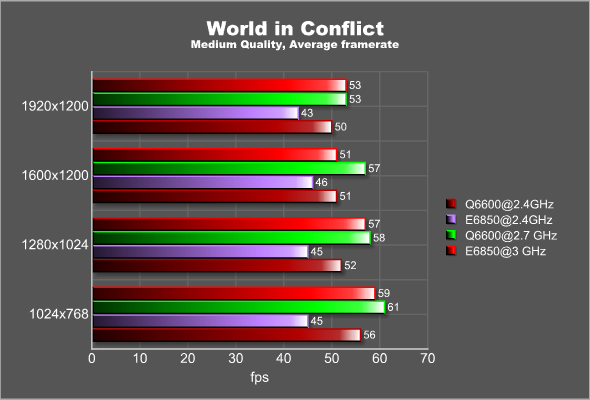

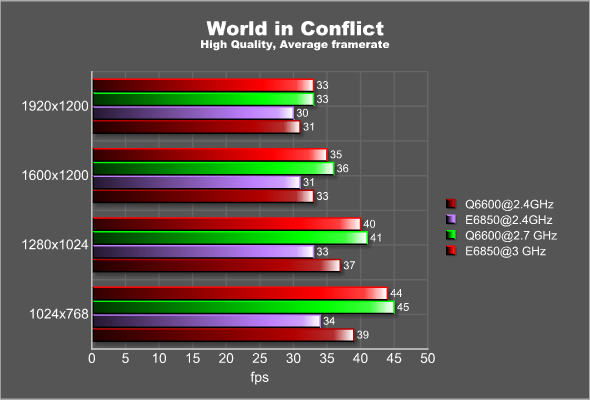

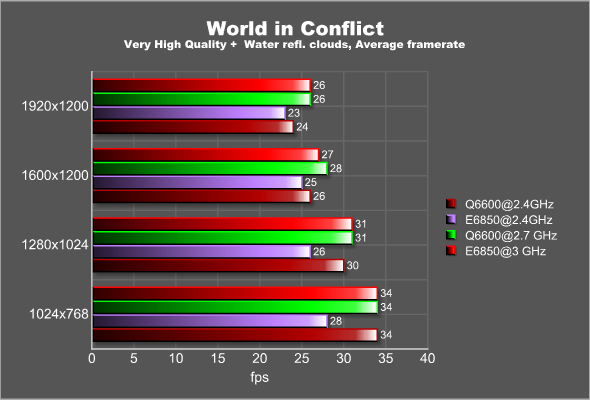

TESTING – CPU

The first component to be tested is the CPU. As this is a new game it is interesting to see how much performance difference we will see between a dual-core CPU (E6850) and a quad-core CPU (the Q6600). The settings used in this test were:

- Q6600 @ 2.4GHz (default) and 2.7GHz (overclocked)

- E6850 @ 2.4 GHz (underclocked) and 3.0 GHz (default)

The memory was kept at around 1066 MHz and the graphics card used was the GeForce 8800GTX.

As we go up in the quality settings, the importance of the CPU lessens. At the lower quality settings, though, it is obvious that not only is it better to have a CPU with a higher clock rate, but a quad-core CPU also performs better than a dual-core CPU at the same clock speed. The Q6600 @ 2.4 GHz ties and even beats the similarly priced E6850 @ 3.0 GHz. I would definitely think about upgrading if I had an old E6400 or slower, as this will undoubtedly help in getting more performance out of the game.

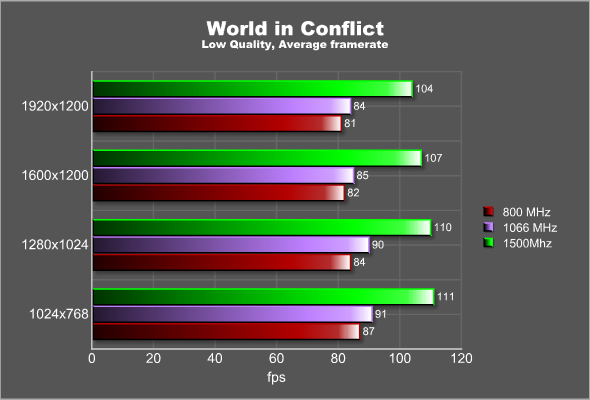

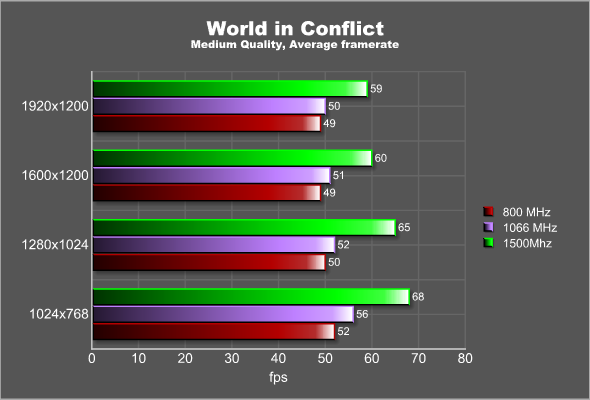

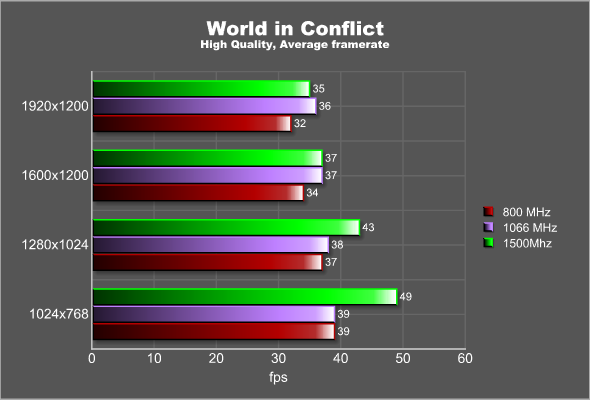

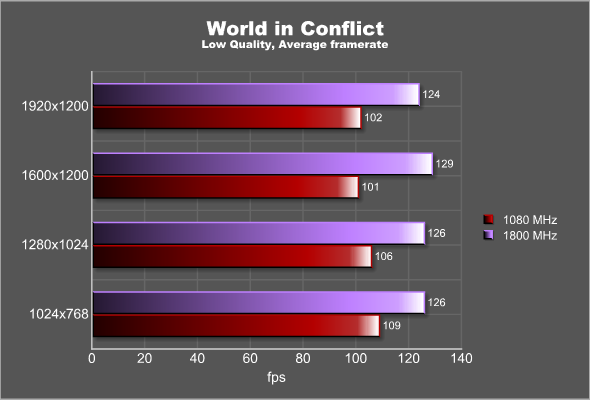

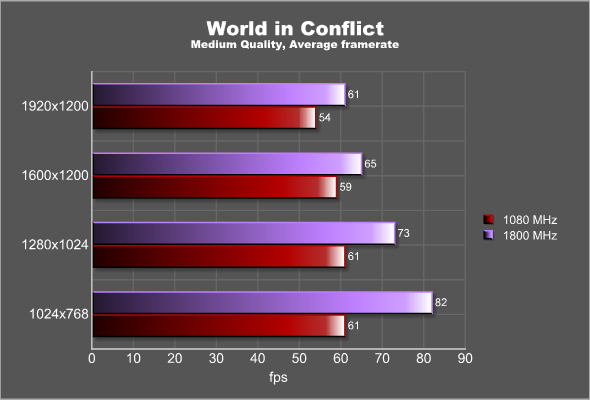

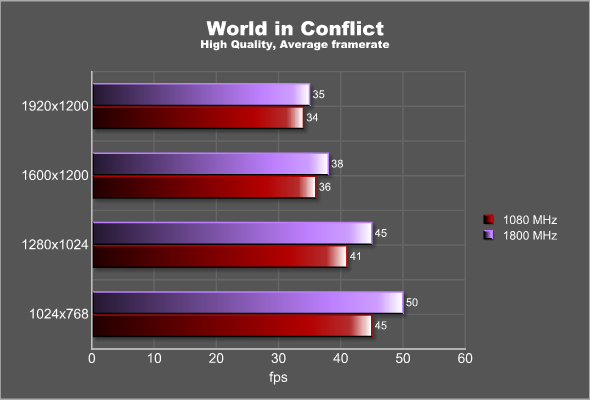

TESTING – MEMORY

The second component to be tested is the speed of the memory. Earlier memory reviews have shown that it really is hit or miss whether faster memory will affect the performance of a game. The memory used in this test was the excellent Corsair DOMINATOR TWIN3X2048-1800C7 DDR3 in a 2×1 GB configuration, giving us a total of 2 GB. This memory easily overclocks up to 1800 MHz. To see how much the memory frequency affected performance, it was set in the BIOS to:

- 800 MHz (with Q6600 @ 2.4 GHz, FSB: 1066 MHz)

- 1066 MHz (with Q6600 @ 2.4 GHz, FSB: 1066 MHz)

- 1500 MHz (with Q6600 @ 2.4 GHz, FSB: 1500 MHz)

- 1080 MHz (with E6850 @ 3.1 GHz, FSB: 1800 MHz)

- 1800 MHz (with E6850 @ 3.1 GHz, FSB: 1800 MHz)

The graphics card used was the GeForce 8800GTX.
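One way to compare the five memory configurations listed above is to look at the memory frequency relative to the FSB. The short Python sketch below just divides the two figures exactly as quoted in the list; this is my own illustrative ratio and is not necessarily the same as the divider shown in the BIOS.

```python
# (memory MHz, FSB MHz, CPU) exactly as listed in the test setup above.
configs = [
    (800, 1066, "Q6600 @ 2.4 GHz"),
    (1066, 1066, "Q6600 @ 2.4 GHz"),
    (1500, 1500, "Q6600 @ 2.4 GHz"),
    (1080, 1800, "E6850 @ 3.1 GHz"),
    (1800, 1800, "E6850 @ 3.1 GHz"),
]


def memory_to_fsb_ratio(memory_mhz, fsb_mhz):
    """Quotient of the quoted memory frequency and the quoted FSB (illustrative only)."""
    return memory_mhz / fsb_mhz


ratios = [round(memory_to_fsb_ratio(mem, fsb), 2) for mem, fsb, _ in configs]

for (mem, fsb, cpu), ratio in zip(configs, ratios):
    print(f"{cpu}: memory {mem} MHz / FSB {fsb} MHz = {ratio}")
```

Looking at the numbers this way makes it easier to tell whether a change in framerate comes from the memory frequency or from the FSB, since several of the configurations share the same ratio but differ in FSB.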

With Q6600

With E6850

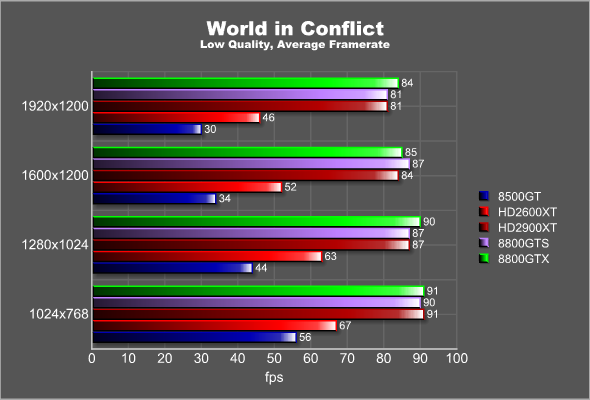

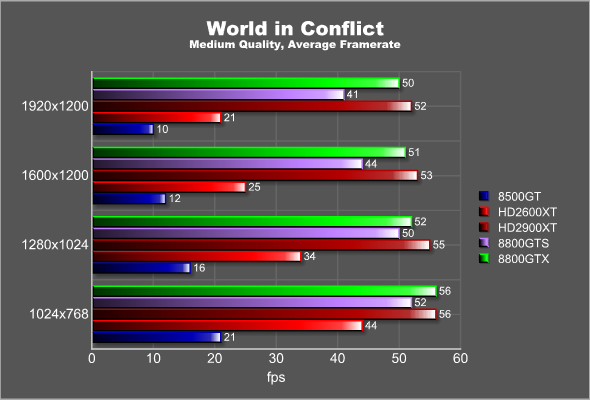

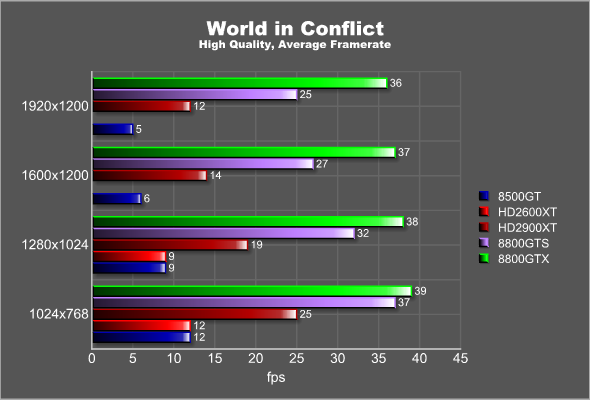

TESTING – VIDEO CARD

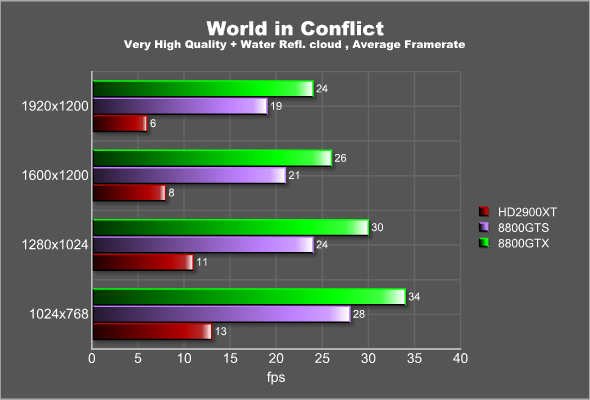

The last component that we test today is the video card. This game supports both DX9 and DX10: the Low and Medium settings use DX9, while the High and Very High settings use DX10. The cards used in this test were:

- Gigabyte 8500GT

- AMD reference HD2600XT

- ASUS 640 MB 8800GTS

- AMD reference HD2900XT

- Sparkle Calibre 8800GTX

The CPU used during the tests was the Q6600 @ 2.4 GHz. The memory was kept at around 1066 MHz.

Even at the Low quality setting, the low-end 8500GT and HD2600XT have problems keeping up at higher resolutions. While an RTS game does not need 60 fps to run well, you would still like a bit more performance, especially since the game features lots of nice explosions, which are exactly the effects that drag the average framerate down. At the Medium quality setting neither the GeForce 8500GT nor the HD2600XT produces playable framerates.

Looking at the high-end cards, the HD2900XT, the 8800GTS and the 8800GTX, it is quite obvious that AMD has some serious problems with this game. With the Low and Medium quality settings the HD2900XT has no problems keeping up, in fact beating the other two cards at 1920×1200 with the Medium quality setting. As we move to the High quality setting, where we also turn on DX10, something happens. The framerate plummets and the game is currently not playable at these quality settings. I actually tried to run the game with two HD2900XT cards in Crossfire mode but could not get any improvement in performance at all.

The conclusion is that with an 8800GTS, an 8800GTX and obviously an 8800 Ultra you can easily go up to the High quality setting, as long as you do not turn the resolution up too high. Even these cards are brought to their knees when we turn on everything possible in the game. If you own an AMD HD2900XT, though, you either have to keep using DX9 (and the game still looks stunning) or bug AMD to release new drivers that fix the performance in the game. AMD released a driver hotfix just before this article was published that, amongst other things, was supposed to help performance in World in Conflict, but I could not see any improvement at the few higher resolutions at the High and Very High detail settings that I quickly tested.

CONCLUSION

World in Conflict is a game that really puts some stress on the various components in your system. Each component has an effect on performance, and it is therefore not easy to pinpoint the optimal upgrade path.

If you want to play at the highest quality setting at high resolutions, your best choice is generally to upgrade the video card. If you look back at the charts, you will notice that regardless of the CPU speed and memory frequency we run at, the average framerate stays the same at 1920×1200. The exception is when we use the Corsair DDR3 memory with an FSB of 1800 MHz and a memory frequency of 1080 MHz or 1800 MHz. Now we suddenly get an impressive boost in performance. This indicates that using DDR3 and a high FSB also has a large effect on performance.
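The reasoning here, that a framerate which barely moves across CPU and memory settings points at the video card as the bottleneck, can be written down as a rough rule of thumb. The sketch below is only an illustration: the function name, the 5% tolerance and the framerates are all my own assumptions, not values from the charts.

```python
def is_gpu_bound(fps_by_config, tolerance_pct=5.0):
    """Return True if the framerates of all CPU/memory configurations
    lie within tolerance_pct percent of the best one."""
    best = max(fps_by_config.values())
    worst = min(fps_by_config.values())
    spread_pct = (best - worst) / best * 100.0
    return spread_pct <= tolerance_pct


# Hypothetical numbers for one resolution and quality setting,
# NOT measurements from this article.
high_resolution = {"slow CPU": 30.0, "fast CPU": 30.5, "fast memory": 30.2}
low_resolution = {"slow CPU": 60.0, "fast CPU": 75.0, "fast memory": 63.0}

print(is_gpu_bound(high_resolution))  # framerates nearly identical
print(is_gpu_bound(low_resolution))   # framerates vary with the CPU
```

If the check comes out true for the settings you want to play at, a faster CPU or faster memory is unlikely to help much, and the video card is the component to look at first.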

Whether it is better to use a dual-core or a quad-core CPU is a tricky question to answer. At the same frequency the quad-core definitely beats the dual-core CPU. However, if we look at a dual-core at the same price as the Q6600 used in the article, we see that its higher frequency helps performance, edging it ahead of the quad-core Q6600. I do not know if the game can use one of the cores for AI etc., something that would not show in the benchmark. The benchmark does, however, support debris physics (debris in the benchmark does not fly the same way every time), and this is turned on at the High and Very High quality settings. The quad-core CPU still does not seem to help at these quality settings, so the game does not seem to take advantage of the extra two cores, at least not enough to offset the lower frequency of the CPU. As we move up in resolution and quality settings, the importance of the CPU diminishes as the GPU becomes the limiting factor.

My hope at the start of this article was to be able to tell you exactly what component to upgrade to get the most out of your system. Unfortunately, the reality is that performance is affected by a lot of factors, and thus it will never be possible to pick out the one component that will change everything. Hopefully, however, I have given you an idea of how performance is affected so that you can make an educated guess about what to upgrade in your system.

Do not forget you can discuss not only this game but other games in our forums.

Bjorn3D.com – Satisfying Your Daily Tech Cravings Since 1996
