INTRODUCTION

Wednesday, November 8, 2006, which coincidentally happens to be today, is a very special moment in time both for the Computer Enthusiast and for Bjorn3D. At 11:00 AM PST we're finally able to put to rest all the myths and rumors about the G80 line of GPUs/VPUs being released by NVIDIA®. The G80-based (GeForce 8800) video card has been a major topic of discussion on all the computer forums for the past several months. Why? With Microsoft® VISTA™ and DirectX 10 right around the corner, this is the first GPU/VPU to address the many changes and major improvements that these two products will bring to the computing world.

In our opening sentence I alluded to today being very special not only for Computer Enthusiasts in general but for Bjorn3D in particular. We are privileged to have been chosen to play a role in reporting, as never before, all the facts about this new and extremely exciting card from NVIDIA®. A bold statement on Bjorn3D's part? Read on, my brothers and sisters! Today we will be releasing not one but four different reviews of products based on the NVIDIA® G80 GPU/VPU. Each review will showcase the efforts of a different major video card manufacturer and will be written by a different Bjorn3D Product Analyst. It is our hope that the diversity of this approach will give you, our readers, the most complete overview humanly possible at one site.

When planning for this mammoth task, we decided the best approach would be to prepare one additional introductory article explaining what NVIDIA® brings to the table, from a technology, physics, and general usage standpoint, for each of the products we'll be presenting. The article is a compilation of facts supplied by NVIDIA® and expresses no opinions; it simply acts as an adjunct to the accompanying reviews.

This strategy, in our opinion, allows the Product Analysts preparing the four separate reviews to concentrate solely on the meat of the product they are presenting without repeating the information this article contains. Each review will therefore be shorter, yet much richer in the information our readers need to properly assess this innovative new product. A good idea? You be the judge, and let us know!

NVIDIA: The Company

This has been one hell of a year for NVIDIA®, as they have brought more new, innovative, and exciting products to the computer enthusiast market in a shorter time span than we can remember in our 20+ years of computing experience. We thought it more than appropriate to give you a short corporate overview, taken directly from NVIDIA's Web site, to better familiarize you with NVIDIA®, the company.

NVIDIA Corporation (Nasdaq: NVDA) is the worldwide leader in programmable graphics processor technologies. The Company creates innovative, industry-changing products for computing, consumer electronics, and mobile devices. The NVIDIA® graphics processing unit (GPU) and media and communications processor (MCP) brands include NVIDIA GeForce®, NVIDIA GoForce®, NVIDIA Quadro®, and NVIDIA nForce®. These product families are transforming visually-rich applications such as video games, film production, broadcasting, industrial design, space exploration, and medical imaging.

Additionally, NVIDIA invents and delivers industry-shaping technologies, including NVIDIA SLI™ technology, a revolutionary approach to scalability and increased performance; and NVIDIA PureVideo™ high-definition video technology. At the foundation of NVIDIA products is NVIDIA® ForceWare®, a comprehensive software suite that delivers industry-leading features for graphics, audio, video, communications, storage, and security. Based on the NVIDIA Unified Driver Architecture, ForceWare increases compatibility, stability, and reliability for NVIDIA-based desktop and mobile PCs.

The world’s leading PC and Handset OEMs incorporate NVIDIA technology into their products, including Apple, Dell, Fujitsu Siemens, Gateway, HP, IBM, Lenovo, LG, Medion, Mitsubishi, Motorola, MPC, NEC, Samsung, Sony Electronics, Sony Ericsson, and Toshiba. In the channel, NVIDIA partners with a broad range of system builders, such as Alienware, Falcon Northwest, HCL, SAHARA and Shuttle, to deliver solutions at every price point.

In addition, NVIDIA products have been adopted by the world’s leading add-in card and motherboard manufacturers, including ASUS, BFG, EVGA, Foxconn, GIGABYTE, MSI, Palit, Point of View, and XFX.

In the professional graphics arena, NVIDIA solutions are transforming industries and applications at organizations such as Mass General Hospital, NASA, and Oak Ridge National Laboratory.

FEATURES & SPECIFICATIONS

Based on the revolutionary new GeForce 8800 architecture, NVIDIA's powerful new GeForce 8800 GTX and 8800 GTS GPUs (Graphics Processing Units) are the industry's first DirectX 10-compatible GPUs built on a fully unified architecture, delivering incredible 3D graphics performance and image quality. Gamers will experience amazing Extreme High Definition (XHD) game performance with eye candy set to maximum, especially in SLI configurations using high-end nForce 500 and nForce 600 series SLI motherboards.

Features

- NVIDIA® unified architecture: Fully unified shader core dynamically allocates processing power to geometry, vertex, physics, or pixel shading operations, delivering up to 2x the gaming performance of prior generation GPUs.

- GigaThread™ Technology: Massively multi-threaded architecture supports thousands of independent, simultaneous threads, providing extreme processing efficiency in advanced, next generation shader programs.

- Full Microsoft® DirectX® 10 Support: World’s first DirectX 10 GPU with full Shader Model 4.0 support delivers unparalleled levels of graphics realism and film-quality effects.

- NVIDIA® SLI™ Technology: Delivers up to 2x the performance of a single graphics card configuration for unequaled gaming experiences by allowing two cards to run in parallel. The must-have feature for performance PCI Express® graphics, SLI dramatically scales performance on today’s hottest games.

- NVIDIA® Lumenex™ Engine: Delivers stunning image quality and floating point accuracy at ultra-fast frame rates.

- 16x Anti-aliasing: Lightning fast, high-quality anti-aliasing at up to 16x sample rates obliterates jagged edges.

- 128-bit floating point High Dynamic-Range (HDR): Twice the precision of prior generations for incredibly realistic lighting effects—now with support for anti-aliasing.

- NVIDIA® Quantum Effects™ Technology: Advanced shader processors architected for physics computation enable a new level of physics effects to be simulated and rendered on the GPU—all while freeing the CPU to run the game engine and AI.

- NVIDIA® ForceWare® Unified Driver Architecture (UDA): Delivers a proven record of compatibility, reliability, and stability with the widest range of games and applications. ForceWare provides the best out-of-box experience and delivers continuous performance and feature updates over the life of NVIDIA GeForce® GPUs.

- OpenGL® 2.0 Optimizations and Support: Ensures top-notch compatibility and performance for OpenGL applications.

- NVIDIA® nView® Multi-Display Technology: Advanced technology provides the ultimate in viewing flexibility and control for multiple monitors.

- PCI Express Support: Designed to run perfectly with the PCI Express bus architecture, which doubles the bandwidth of AGP 8X to deliver over 4GB/sec in both upstream and downstream data transfers.

- Dual 400MHz RAMDACs: Blazing-fast RAMDACs support dual QXGA displays with ultra-high, ergonomic refresh rates, up to 2048 x 1536 @ 85Hz.

- Dual Dual-link DVI Support: Able to drive the industry’s largest and highest resolution flat-panel displays up to 2560×1600.

- Built for Microsoft® Windows Vista™: NVIDIA’s fourth-generation GPU architecture built for Windows Vista gives users the best possible experience with the Windows Aero 3D graphical user interface.

- NVIDIA® PureVideo™ HD Technology: The combination of high-definition video decode acceleration and post-processing that delivers unprecedented picture clarity, smooth video, accurate color, and precise image scaling for movies and video.

- Discrete, Programmable Video Processor: NVIDIA PureVideo HD is a discrete programmable processing core in NVIDIA GPUs that provides superb picture quality and ultra-smooth movies with low CPU utilization and power.

- Hardware Decode Acceleration: Provides ultra-smooth playback of H.264, VC-1, WMV and MPEG-2 HD and SD movies.

- HDCP Capable: Designed to meet the output protection management (HDCP) and security specifications of the Blu-ray Disc and HD DVD formats, allowing the playback of encrypted movie content on PCs when connected to HDCP-compliant displays.

- Spatial-Temporal De-Interlacing: Sharpens HD and standard definition interlaced content on progressive displays, delivering a crisp, clear picture that rivals high-end home-theater systems.

- High-Quality Scaling: Enlarges lower resolution movies and videos to HDTV resolutions, up to 1080i, while maintaining a clear, clean image. Also provides downscaling of videos, including high-definition, while preserving image detail.

- Inverse Telecine (3:2 & 2:2 Pulldown Correction): Recovers the original film images from film that has been converted to video (DVDs, 1080i HD content), providing more accurate movie playback and superior picture quality.

- Bad Edit Correction: When videos are edited after they have been converted from 24 frames per second to 25 or 30 frames per second, the edits can disrupt the normal 3:2 or 2:2 pulldown cadences. PureVideo HD uses advanced processing techniques to detect poor edits, recover the original content, and display perfect picture detail frame after frame for smooth, natural-looking video.

- Video Color Correction: NVIDIA's Color Correction Controls, such as Brightness, Contrast, and Gamma Correction, let you compensate for the different color characteristics of various RGB monitors and TVs, ensuring movies are not too dark, overly bright, or washed out regardless of the video format or display type.

- Integrated SD and HD TV Output: Provides world-class TV-out functionality via Composite, S-Video, Component, or DVI connections. Supports resolutions up to 1080p depending on connection type and TV capability.

- Noise Reduction: Improves movie image quality by removing unwanted artifacts.

- Edge Enhancement: Sharpens movie images by providing higher contrast around lines and objects.
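The Inverse Telecine bullet describes 3:2 pulldown, which spreads 24 frames-per-second film across 60 interlaced fields per second, and its reversal. The Python sketch below models that cadence with labeled frames. It is a toy under stated assumptions: fields carry their source frame as a label, whereas a real inverse-telecine detector (PureVideo HD's included) has to infer the cadence by comparing field contents. The function names are hypothetical.

```python
def telecine_32(frames):
    """Spread 24 fps film frames over 60 interlaced fields/s using a 3:2 cadence.

    Frames alternately contribute three and two fields; field parity
    (top/bottom) simply alternates down the field sequence."""
    fields = []
    for index, frame in enumerate(frames):
        repeats = 3 if index % 2 == 0 else 2
        for _ in range(repeats):
            parity = "top" if len(fields) % 2 == 0 else "bottom"
            fields.append((frame, parity))
    return fields


def inverse_telecine(fields):
    """Recover progressive frames by collapsing each run of fields from one frame.

    Toy version: the frame label is carried with each field. Real hardware must
    detect the cadence from the picture itself, which is where bad edits hurt."""
    frames = []
    for frame, _parity in fields:
        if not frames or frames[-1] != frame:
            frames.append(frame)
    return frames


film = ["A", "B", "C", "D"]
video = telecine_32(film)
print(len(video), "fields:", " ".join(f"{f}{p[0]}" for f, p in video))
print("recovered:", inverse_telecine(video))
```

Four film frames become ten fields (3 + 2 + 3 + 2), so 24 frames become exactly 60 fields. An edit that cuts the sequence mid-cadence breaks the 3-2-3-2 pattern, which is the problem the Bad Edit Correction bullet addresses.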

Specifications

| Feature/Specification | 8800 GTX | 8800 GTS |
|---|---|---|
| Number of Transistors | 681 M | 681 M |
| Fabrication Process | 90nm | 90nm |
| GPU Clock | 575 MHz | 500 MHz |
| Memory Clock | 900 MHz | 800 MHz |
| Memory Data Rate | 1800 MHz | 1600 MHz |
| Memory Size | 768MB | 640MB |
| Memory Data Width | 384 bits | 320 bits |
| Memory Type | GDDR3 | GDDR3 |
| Memory Speed | 1.1ns | 1.2ns |
| Memory Bandwidth | 86.4 GB/sec | 64 GB/sec |
| Shader Core Clock | 1.35 GHz | 1.2 GHz |
| ROPs | 24 | 20 |
| RAMDAC | 400 MHz | 400 MHz |
| Bus Type | PCI Express | PCI Express |
| PCB Layers | 12 | 12 |
| Form Factor | ATX | ATX |
| Dimensions | 4.376 x 10.5 inches | 4.376 x 8.9 inches |
| Texture Fill Rate (texels/sec) | 36.8 billion | 24 billion |
| Stream Processors | 128 | 96 |
| Analog Display Resolution | 2048 x 1536 x 32 bpp at 85Hz | 2048 x 1536 x 32 bpp at 85Hz |
| Digital Display Resolution | Dual-link DVI-I connector | Dual-link DVI-I connector |
| Features Supported | DirectX 10, Shader Model 4.0 | DirectX 10, Shader Model 4.0 |
| RoHS Ready | Yes | Yes |
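The Memory Bandwidth row follows directly from two other rows in the specifications: bytes moved per transfer (bus width divided by 8) times transfers per second (the effective data rate). The Python sketch below reproduces both figures; the function names are our own, and we assume "GB/sec" means 10^9 bytes per second, which is the convention that makes the table's numbers come out. It also converts the Memory Speed rating (access time in ns) into the maximum clock that rating implies.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_mhz: float) -> float:
    """Peak memory bandwidth in GB/s, assuming 1 GB = 10**9 bytes."""
    bytes_per_transfer = bus_width_bits / 8        # e.g. 384 bits -> 48 bytes
    transfers_per_second = data_rate_mhz * 1e6     # effective (DDR) data rate
    return bytes_per_transfer * transfers_per_second / 1e9


def rated_clock_mhz(access_time_ns: float) -> float:
    """Maximum clock implied by a memory chip's ns rating (1 / clock period)."""
    return 1e3 / access_time_ns


cards = {
    # name: (bus width in bits, effective data rate MHz, memory clock MHz, rating ns)
    "8800 GTX": (384, 1800, 900, 1.1),
    "8800 GTS": (320, 1600, 800, 1.2),
}

for name, (width, rate, clock, ns) in cards.items():
    bandwidth = memory_bandwidth_gb_s(width, rate)
    print(f"{name}: {bandwidth:.1f} GB/s, memory clock {clock} MHz "
          f"(chips rated for about {rated_clock_mhz(ns):.0f} MHz)")
```

By this arithmetic both cards run their GDDR3 slightly below the clock the chips are rated for (900 MHz against roughly 909 MHz, and 800 MHz against roughly 833 MHz).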

CLOSER LOOK

The Card

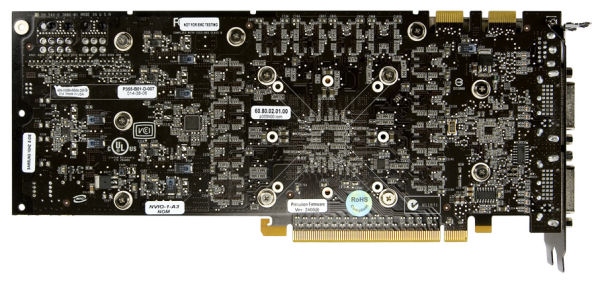

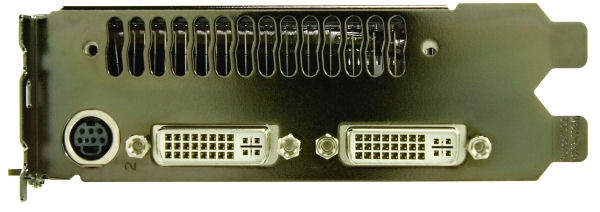

Next we'll look at a few images of the NVIDIA® 8800 GTX card. Keep in mind that this is the reference card built by NVIDIA®; each manufacturer's card may follow this reference design or deviate somewhat as that manufacturer builds its own technological innovations into the product.

NVIDIA® 8800 GTX Reference Card … Front View

NVIDIA® 8800 GTX Reference Card … Angled View

NVIDIA® 8800 GTX Reference Card … Rear View

NVIDIA® 8800 GTX Reference Card … Mounting Bracket

As you can see from the images, the card is longer than its predecessors and occupies two slots in your computer. While the purpose of this introductory article is simply to state facts, not opinions, we'll venture a "SWAG" (Scientific Wild Ass Guess) that the days of the single-slot high-end video card are all but over.

CLOSER LOOK

Architecture, Power & Efficiency

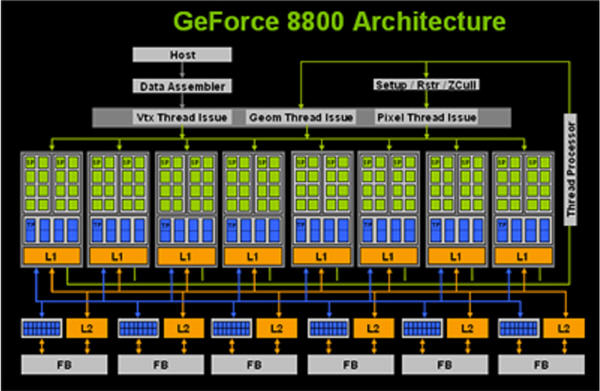

The GeForce 8800 GTX GPU implements a massively parallel, unified shader design consisting of 128 individual stream processors running at 1.35 GHz, while the GeForce 8800 GTS includes 96 stream processors clocked at 1.2 GHz. Each stream processor can be dynamically allocated to vertex, pixel, geometry, or physics operations for the utmost efficiency in GPU resource allocation and maximum flexibility in load balancing shader programs.
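Why does dynamic allocation matter? With dedicated vertex and pixel units, whichever stage has more work per unit sets the pace while the other sits partly idle; a unified pool can spread any mix of work across every processor. The Python sketch below is a deliberately simplified throughput model, not NVIDIA's scheduler: the 32/96 split of 128 processors and the workload sizes are hypothetical, and the model ignores the fact that vertex and pixel work are pipelined stages.

```python
def fixed_pipeline_time(vertex_work, pixel_work, vertex_units, pixel_units):
    """Dedicated units: each stage can only use its own units,
    so the more heavily loaded stage determines the total time."""
    return max(vertex_work / vertex_units, pixel_work / pixel_units)


def unified_time(vertex_work, pixel_work, total_units):
    """Unified units: any processor can take any work, so the load spreads evenly."""
    return (vertex_work + pixel_work) / total_units


# Hypothetical dedicated split of the same 128 processors, for comparison only.
VERTEX_UNITS, PIXEL_UNITS = 32, 96
TOTAL_UNITS = VERTEX_UNITS + PIXEL_UNITS

workloads = {
    # label: (vertex work, pixel work) in arbitrary units
    "pixel-heavy scene": (100, 1200),
    "geometry-heavy scene": (1000, 200),
}

for label, (vertex, pixel) in workloads.items():
    fixed = fixed_pipeline_time(vertex, pixel, VERTEX_UNITS, PIXEL_UNITS)
    unified = unified_time(vertex, pixel, TOTAL_UNITS)
    print(f"{label}: dedicated {fixed:.2f}, unified {unified:.2f} time units")
```

In the geometry-heavy case the dedicated design is bottlenecked by its small vertex pool while most pixel units wait, which is the imbalance a unified design is meant to avoid.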

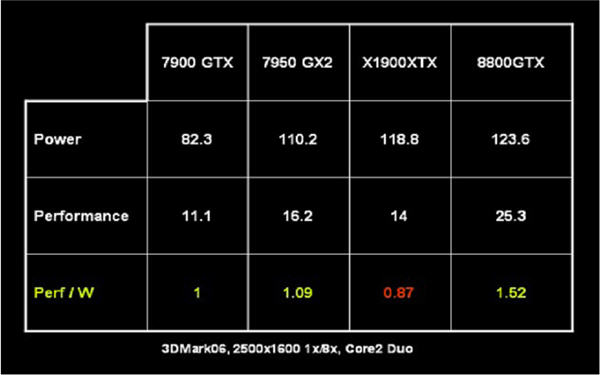

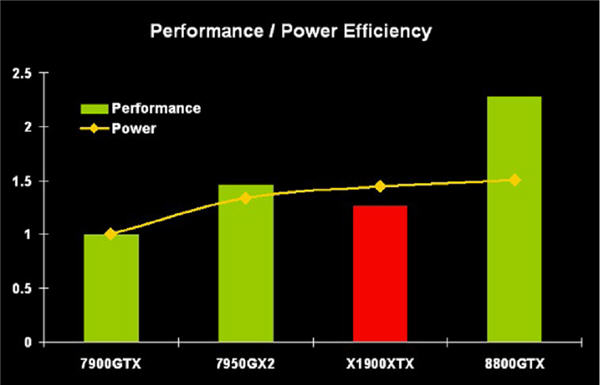

Architectural efficiency was again a leading design criterion for GeForce 8 Series GPUs, as it was for GeForce 7 Series GPUs. Efficient power utilization and management deliver industry-leading performance per watt and performance per square millimeter. See the accompanying table and chart.

Compared to NVIDIA's top-of-the-line GeForce 7 Series GPUs, a single GeForce 8800 GTX GPU delivers 2X the performance on current applications, with up to 11X scaling measured in certain shader operations. As future games become more shader-intensive, we expect the GeForce 8800 GPUs to greatly surpass DirectX 9 architectures in performance. In general, shader- and HDR-intensive applications shine on GeForce 8800 architecture GPUs. Teraflops of raw GeForce 8800 floating point processing power are combined to deliver unmatched gaming performance, graphics realism, and real-time, film-quality effects.

NVIDIA’s ground-breaking GigaThread™ technology implemented in GeForce 8 Series GPUs supports thousands of independent, simultaneously executing threads, maximizing GPU utilization.

The GeForce 8800 GPU’s unified shader architecture is built for extreme 3D graphics performance, industry leading image quality, and 100% compatibility with DirectX 10. Not only do GeForce 8800 GPUs provide amazing DirectX 10 gaming experiences, but they also deliver the absolute fastest and best quality DX9 and OpenGL gaming experience today.

CLOSER LOOK

DirectX 10

DirectX 10 represents the most significant step forward in 3D graphics APIs since the birth of programmable shaders. Completely built from the ground up, DirectX 10 features powerful geometry shaders, a new “Shader Model 4” programming model with substantially increased resources and improved performance, a highly optimized runtime, texture arrays, and numerous other features that unlock a whole new world of graphical effects.

GeForce 8 Series GPUs include all required hardware functionality defined in Microsoft’s Direct3D 10 (DirectX 10 or DX10) specification and full support for the DirectX 10 unified shader instruction set and Shader Model 4 capabilities. The GeForce 8800 GTX is not only the first shipping DirectX 10 GPU, but it was also the reference GPU for DirectX 10 API development and certification.

New features implemented in GeForce 8800 Series GPUs that work in concert with DirectX 10 features include geometry shader processing, stream output, improved instancing, and support for the DirectX 10 unified instruction set. GeForce 8 Series GPUs and DirectX 10 also provide the ability to reduce CPU overhead, shifting more of the graphics rendering load to the GPU.
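Instancing is one of the ways CPU overhead comes down: instead of issuing one draw call per object, the application submits every copy of a mesh in a single call and supplies per-copy data (such as position) from a separate buffer. The Python sketch below only counts draw calls for a hypothetical scene to show the scale of the saving; it is not Direct3D 10 code, and it ignores material and state changes that can force extra calls in a real engine.

```python
def draw_calls(scene, instancing):
    """Count the draw calls needed to submit every object in a scene.

    Without instancing, every object is its own call. With instancing, all
    copies of one mesh are submitted together in a single call."""
    if not instancing:
        return len(scene)
    return len({obj["mesh"] for obj in scene})


# Hypothetical scene: a forest, a rock field and one house.
scene = ([{"mesh": "tree", "pos": (i, 0)} for i in range(500)]
         + [{"mesh": "rock", "pos": (i, 5)} for i in range(300)]
         + [{"mesh": "house", "pos": (0, 9)}])

print("without instancing:", draw_calls(scene, instancing=False))
print("with instancing:   ", draw_calls(scene, instancing=True))
```

Each draw call costs CPU time in the runtime and driver, so collapsing 801 calls into 3 frees the CPU for game logic while the GPU still renders every object.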

DX10 games running on GeForce 8800 GPUs deliver rich, realistic scenes, increased character detail, more objects, vegetation, and shadow effects, in addition to natural silhouettes, and lifelike animations. PC-based 3D graphics is raised to the next level with GeForce 8800 GPUs accelerating DirectX 10 games.

Lumenex™ Engine

Image quality is significantly improved on GeForce 8800 GPUs over the prior generation with NVIDIA’s Lumenex™ Engine. Advanced new antialiasing technology provides up to 16x full-screen multisampled antialiasing quality with the performance impact of 4x multisampled antialiasing using a single GPU.
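To see why more samples per pixel smooth jagged edges, the Python sketch below estimates how much of one pixel lies under a triangle edge by testing sample points. With 4 samples a pixel can only be 0, 1/4, 2/4, 3/4 or fully covered; with 16 samples there are 17 possible shades, so edge pixels blend more finely. This is generic sample coverage under stated assumptions (a regular grid of sample positions and a hypothetical edge y = 0.3x + 0.4); it does not model how NVIDIA's 16x mode keeps the cost near that of 4x multisampling.

```python
def edge_coverage(slope, intercept, grid):
    """Fraction of a unit pixel's sample points lying below y = slope * x + intercept.

    Samples sit at the centers of a regular grid x grid layout
    (an assumption; real hardware uses other sample patterns)."""
    inside = 0
    for i in range(grid):
        for j in range(grid):
            x = (i + 0.5) / grid
            y = (j + 0.5) / grid
            if y < slope * x + intercept:
                inside += 1
    return inside / (grid * grid)


exact = 0.3 / 2 + 0.4  # true area under y = 0.3x + 0.4 across the pixel: 0.55
for grid in (2, 4):
    samples = grid * grid
    coverage = edge_coverage(0.3, 0.4, grid)
    print(f"{samples:2d} samples: coverage {coverage:.4f} (exact {exact:.2f}), "
          f"{samples + 1} possible shades per edge pixel")
```

Here 4 samples estimate the coverage as 0.5 while 16 samples give 0.5625, closer to the true 0.55, and the finer steps are what make edges look smooth rather than stair-stepped.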

High Dynamic Range (HDR) lighting capability in all GeForce 8800 Series GPUs now supports 128-bit precision (32-bit floating point values per component), permitting true-to-life lighting and shadows. Dark objects can appear very dark, and bright objects can be very bright, with visible details present at both extremes, in addition to rendering completely smooth gradients in between.
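The "128-bit" figure is simply four color components (red, green, blue, alpha) at 32 bits each, while FP16 render targets use 16 bits per component for 64-bit color. The Python sketch below uses the standard `struct` module's IEEE-754 half (`'<e'`) and single (`'<f'`) formats to compare how precisely each stores a value, and how much memory one RGBA render target needs at 2560×1600. The resolution is an illustrative choice, and the byte counts ignore antialiasing samples and any driver-side padding.

```python
import struct

CHANNELS = 4                 # R, G, B, A
WIDTH, HEIGHT = 2560, 1600   # illustrative render-target size


def round_trip(value: float, fmt: str) -> float:
    """Store a value in an IEEE-754 format ('<e' = 16-bit, '<f' = 32-bit) and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def target_bytes(bits_per_channel: int) -> int:
    """Bytes for one RGBA render target, with no antialiasing samples."""
    return WIDTH * HEIGHT * CHANNELS * bits_per_channel // 8


for label, fmt, bits in (("FP16", "<e", 16), ("FP32", "<f", 32)):
    error = abs(round_trip(0.1, fmt) - 0.1)
    print(f"{label}: {CHANNELS * bits}-bit color, "
          f"{target_bytes(bits) / 2**20:.2f} MiB at {WIDTH}x{HEIGHT}, "
          f"error storing 0.1 = {error:.1e}")
```

FP32 stores values several orders of magnitude more precisely than FP16 (and FP16 tops out at 65504), at the price of doubling render-target memory.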

HDR lighting effects can be used in concert with multisampled antialiasing on GeForce 8 Series GPUs, and the addition of angle-independent anisotropic filtering combined with considerable HDR shading horsepower provides outstanding image quality. In fact, antialiasing can be used in conjunction with both FP16 (64-bit color) and FP32 (128-bit color) render targets.

An entirely new 10-bit display architecture works in concert with 10-bit DACs to deliver over a billion colors, compared to 16.7 million in the prior generation, permitting incredibly rich and vibrant photos and videos. With the next generation of 10-bit content and displays, the Lumenex Engine will be able to display images of amazing depth and richness.
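The "over a billion colors" figure is straightforward arithmetic: three channels at 10 bits each give 2^30 combinations, versus 2^24 at 8 bits per channel. The Python sketch below computes both and shows one practical consequence: how wide each flat band is when a full black-to-white gradient is stretched across a 2560-pixel-wide display (an illustrative width).

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given per-channel bit depth."""
    return 2 ** (bits_per_channel * channels)


def pixels_per_step(ramp_width: int, bits_per_channel: int) -> float:
    """Width of each flat band when a full gradient spans ramp_width pixels."""
    return ramp_width / 2 ** bits_per_channel


for bits in (8, 10):
    print(f"{bits}-bit: {color_count(bits):,} colors, "
          f"{2 ** bits} levels per channel, "
          f"{pixels_per_step(2560, bits):g}-pixel bands across a 2560-pixel gradient")
```

At 8 bits each shade spans 10 pixels, which can show as visible banding; at 10 bits the bands shrink to 2.5 pixels.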

Next, a few images to give you an idea of the quality you can expect from this technology. Realize that, for the sake of our site's format, it was necessary to shrink these images to fit. We did so with quality in mind and feel you'll get a fair representation of the quality you can expect.

Super Model Adrianne Curry

Animated Character “Froggie”

NVIDIA’s Animated “Waterworld”

CLOSER LOOK

SLI vs Crossfire

NVIDIA’s SLI technology is the industry’s leading multi-GPU technology delivering up to 2x the performance of a single GPU configuration for unequaled gaming experiences by allowing two graphics cards to run in parallel on a single motherboard. Running two GeForce 8800 GPUs in an SLI configuration allows extremely high image quality settings at extreme resolutions.
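The "up to 2x" wording matters: two GPUs only double the frame rate when all of the per-frame work splits cleanly between them. A generic Amdahl's-law estimate, sketched in Python below, shows how quickly the gain falls once part of each frame (CPU-side game logic, synchronization) cannot be shared. This is a simplified model of our own, not NVIDIA's measurement method, and the parallel fractions are hypothetical.

```python
def sli_speedup(parallel_fraction: float, gpus: int = 2) -> float:
    """Amdahl's-law estimate of multi-GPU speedup.

    parallel_fraction is the share of per-frame time that splits evenly
    across GPUs; the remainder does not speed up at all."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / gpus)


for fraction in (1.0, 0.9, 0.6):
    print(f"{fraction:.0%} GPU-bound work -> {sli_speedup(fraction):.2f}x")
```

This is also why SLI pays off most at high resolutions and quality settings, where the GPU share of each frame is largest.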

SLI provides performance scaling across more than 280 applications that have associated NVIDIA-certified SLI profiles, far more titles than have been demonstrated to scale well with Crossfire (refer to "The Definitive Multi GPU Roundup" story series, which was kicked off by Penstarsys in summer 2006 and includes nine different Web sites comparing SLI to Crossfire).

The SLI ecosystem (including certified NVIDIA nForce 4, nForce 500 Series, nForce 600 Series chipset-based motherboards, power supplies, and memory) is far more developed with many more options available to the consumer compared to Crossfire.

NVIDIA® GeForce® 8800 GTX SLI

Extreme High Definition (XHD) Gaming

Installing a single GeForce 8800 GTX GPU supports playable gaming at Extreme High Definition (XHD) resolutions of up to 2560×1600. The widescreen format of XHD allows users to see more of their PC games, enhance their video editing, and even add useful extra screen real estate to Google Earth imagery.

The sweet-spot XHD resolution for a GeForce 8800 GTX configuration is 2560×1600 on 30” LCD panels, such as Dell’s 3007 WFP or the Apple Cinema Display.
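Two pieces of arithmetic explain why XHD needs both a fast GPU and the dual-link DVI outputs listed in the features. The Python sketch below compares 2560×1600 with the common 1920×1200 widescreen mode, and computes the minimum pixel rate for 2560×1600 and for the analog 2048×1536 @ 85Hz mode. Assumptions: a 60 Hz refresh for the 30" panels, the 165 MHz maximum pixel clock of a single DVI link, and counting only visible pixels (real video timings add blanking, so true rates are higher).

```python
SINGLE_LINK_DVI_MHZ = 165.0   # maximum pixel clock of one DVI link
RAMDAC_MHZ = 400.0            # from the specification table


def active_pixel_rate_mhz(width: int, height: int, refresh_hz: float) -> float:
    """Visible pixels per second in MHz; a lower bound on the real pixel clock."""
    return width * height * refresh_hz / 1e6


xhd = active_pixel_rate_mhz(2560, 1600, 60)    # 60 Hz is an assumption
qxga = active_pixel_rate_mhz(2048, 1536, 85)

print(f"2560x1600 has {2560 * 1600 / (1920 * 1200):.2f}x the pixels of 1920x1200")
print(f"2560x1600 @ 60 Hz needs at least {xhd:.1f} MHz "
      f"(single-link DVI tops out at {SINGLE_LINK_DVI_MHZ:.0f} MHz)")
print(f"2048x1536 @ 85 Hz needs at least {qxga:.1f} MHz "
      f"(RAMDAC: {RAMDAC_MHZ:.0f} MHz)")
```

Even before blanking, 2560×1600 at 60 Hz exceeds what a single DVI link can carry, so these panels require a dual-link connection; the 400 MHz RAMDACs leave headroom for the 2048×1536 analog mode.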

Top-selling XHD titles with stunning cinematic graphics and lifelike characters at blazing speeds are available now (for more information, please visit NZone, or check out Wide Screen Gaming for a very large list of games that support widescreen resolutions and instructions on how to enable them in games without explicit support).

XHD Gaming on Widescreen Monitor

CLOSER LOOK

PureVideo and PureVideo HD

NVIDIA’s PureVideo™ HD capability is built into every GeForce 8800 Series GPU and enables the ultimate HD DVD and Blu-ray™ viewing experience with superb picture quality, ultra-smooth movie playback, and low CPU utilization. High-precision subpixel processing enables videos to be scaled with great precision, allowing low resolution videos to be accurately mapped to high resolution displays.
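Subpixel-accurate scaling means each output pixel is mapped back to a fractional position in the source image and built from the neighboring source pixels in proportion to that fraction. NVIDIA does not describe PureVideo HD's actual filter here, so the Python sketch below uses plain linear interpolation on one row of pixels as a stand-in; the function name and the center-aligned mapping are our own choices.

```python
def scale_line(src, dst_len):
    """Resample one row of pixel values to dst_len samples with linear interpolation.

    Each output pixel center is mapped to a fractional (sub-pixel) source
    position, and the two nearest source pixels are blended by that fraction."""
    src_len = len(src)
    out = []
    for i in range(dst_len):
        pos = (i + 0.5) * src_len / dst_len - 0.5   # center-aligned mapping
        pos = min(max(pos, 0.0), src_len - 1.0)     # clamp at the image edges
        left = int(pos)
        right = min(left + 1, src_len - 1)
        frac = pos - left
        out.append(src[left] * (1.0 - frac) + src[right] * frac)
    return out


print(scale_line([0, 100], 4))
```

Upscaling the two-pixel row [0, 100] to four pixels yields [0.0, 25.0, 75.0, 100.0]; the intermediate values come from the fractional source positions 0.25 and 0.75, which is the precision a coarser, whole-pixel mapping would lose.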

PureVideo HD comprises dedicated GPU-based video processing hardware (SIMD vector processor, motion estimation engine, and HD video decoder), software drivers, and software-based players that accelerate decoding and enhance the image quality of high-definition video in H.264, VC-1, WMV/WMV-HD, and MPEG-2 HD formats.

PureVideo HD can deliver 720p, 1080i, and 1080p high definition output and support for both 3:2 and 2:2 pulldown (inverse telecine) of HD interlaced content. PureVideo HD on GeForce 8800 GPUs now provides HD noise reduction and HD edge enhancement.

PureVideo HD adjusts to any display and uses advanced techniques found only on high-end consumer players and TVs to make standard and high-definition video look crisp, smooth, and vibrant, whether you are watching on an LCD, plasma, or other progressive display.

AACS protected Blu-ray or HD DVD movies can be played on systems with GeForce 8800 GPUs using AACS compliant movie players from CyberLink, InterVideo, and Nero that utilize GeForce 8800 GPU PureVideo features.

All GeForce 8800 GPUs are HDCP-capable, meeting the security specifications of the Blu-ray Disc and HD DVD formats and allowing the playback of encrypted movie content on PCs when connected to HDCP-compliant displays.

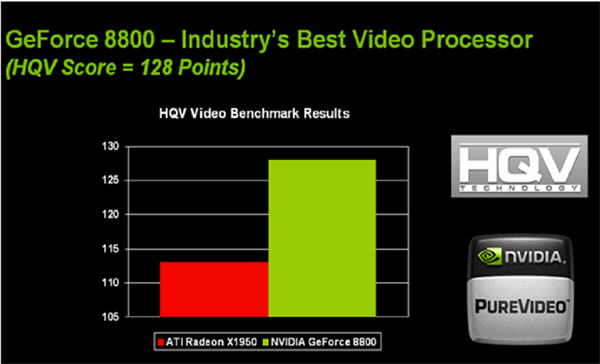

GeForce 8800 Series GPUs also readily handle standard definition PureVideo formats such as WMV and MPEG-2 for high-quality playback of computer-generated video content and standard DVDs. In the popular industry-standard HQV Benchmark, which evaluates standard definition video de-interlacing, motion correction, noise reduction, film cadence detection, and detail enhancement, all GeForce 8800 GPUs achieve an unrivaled 128 points out of 130!

HQV Benchmark Results for GeForce 8800 GPUs

With & Without Pure Video

FINAL WORDS

We've done our best here to present the technology behind what the new NVIDIA® G80 series of video cards has to offer. We've intentionally kept to the Joe Friday, "Just the facts, ma'am" approach so that this article serves as a reference source and not a review. This is just the beginning of our "Launch Day Coverage" of the NVIDIA® GeForce® 8800 series of products. Remember, we at Bjorn3D are doing an unprecedented four reviews of this series of cards from four entirely different manufacturers. We certainly hope this article and its accompanying reviews prove as meaningful for you as their preparation has been for us. Below for your use are links to each of the individual reviews.

Our NVIDIA® 8800 Series Graphics Card Coverage

- This is the best of all worlds when it comes to graphical rendering, both 2D and 3D. This card sets a totally new standard for its competition; it should be quite interesting to see how things shake out.

- The Sparkle GeForce 8800GTX delivers breathtaking performance, DX10 support and impressive image quality at a good price.

- In conclusion, other than the subtle nuances captioned above, this is by far the finest card I've ever had the pleasure to test.

- Honestly, there is just no possible way for me to find disappointment in this product. The FOXCONN GeForce 8800 GTS is by no means just another addition to the market; it is clearly in a class all its own.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996