Check out our coverage of NVIDIA’s first ever Editors’ Day! It was a great event where they let the press get up close and personal with top-level executives, developers and PR people.

Introduction

In an effort to shake things up a bit in their public relations efforts, NVIDIA held their first ever Editors’ Day on Tuesday, October 21, 2003 in San Francisco, California. They invited web affiliates and developers from around the world to be a part of this unique event. I was lucky enough to attend on behalf of Bjorn3D.com. Many of NVIDIA’s representatives stressed that this event was unlike anything else they have ever done. How, you ask? For one, high-level people who have been crucial in getting NVIDIA where it is today were more accessible than usual. These people included: David Kirk, Chief Scientist; Kurt Akeley, Chief Technical Officer; Dwight Diercks, Vice President of Software Engineering; Nick Triantos, Director of OpenGL Software Development; and Ben De Waal, Director of DirectX Software Development. Even NVIDIA CEO and co-founder Jen-Hsun Huang joined the group to talk to us about the company during lunch. He was joined by id’s CEO, Todd Hollenshead.

The first part of the day focused on educating the attendees about some of the technology in the GeForceFX line of graphics cards and debunking some of the negative press NVIDIA has received about performance and optimizations. The second part of the day focused on developers’ relationships with NVIDIA and on showcasing new games that will be coming out sooner (XIII) or later (S.T.A.L.K.E.R.).

I must admit that I have never been to any event like this or anything close to this. Not even a renowned LAN event. Unfortunately, that means I cannot really compare it to anything else NVIDIA or any other company has done. So, I’ll just cover the event as it was and as I experienced it.

Shady Shader Tales and Onerous Optimizations?

The day started off with a series of four presentations given by Kurt Akeley, David Kirk, Dwight Diercks, and Nick Triantos. They presented their topics on 21st Century Graphics Architecture as the rest of the team sat in front of the audience as a panel for answering questions, which the attendees were encouraged to ask at any time and about anything. Ben De Waal was also a member of the panel.

From left to right: David Kirk, Nick Triantos, Ben De Waal, Dwight Diercks, and Kurt Akeley (standing behind Diercks).

Kurt Akeley started off with his presentation about how graphics APIs, namely DirectX, are continuing to become more and more procedural. Earlier graphics APIs were more descriptive in nature. He covered the challenges this poses for programming and optimization. I’m not going to go into any more detail on this topic because I think people really want to read about what NVIDIA said about some of the more controversial topics as of late.

David Kirk dove right into the controversial topic of pixel shaders with his presentation. He also discussed some of the architectural choices NVIDIA made for their latest NV3x chips. Basically, much of the presentation was spent defending and explaining NVIDIA’s choice to go with mixed-mode (16-bit and 32-bit) floating point precision. Of course, he pointed out all the pitfalls of going with 24-bit floating point (as ATI did with their latest generation of graphics chips). I think he would have liked to go with a completely 32-bit architecture, but, as he pointed out, that is not economical right now: the NV35 would have been twice the size it is today, which would make it even more expensive! Kirk also believes that a pure 16-bit or 24-bit architecture is not a good choice because some programs will not work at all with so little precision. Going with either of these options would have made NV35 smaller, faster, and easier to work with, but it also would have been less capable.

Another fine point he made was that there is an IEEE standard for 32-bit floating point, which NVIDIA follows, and although there is no standard for 16-bit, many animation studios, CAD and authoring tools, and Adobe’s Photoshop use it. There is, however, no standard for 24-bit floating point. Now, I don’t mean to make this out to be a huge issue, but I think using standards and accepted practices is a good thing, and I think NVIDIA deserves some credit for it.

The key thing Kirk said that NVIDIA did poorly was unintentionally making their NV3x architecture difficult to write programs for quickly. He also showed a slide that presented some limitations of the architecture that I believe had not previously been made public. I must admit that I do not fully understand all of these, and I did not take great notes on what he said about them. But, for the sake of the reader, I will provide the information as it was on his slide:

Performance Limits:

Well, there you have it, straight from the Chief Scientist. I will let you digest those facts and interpret them as you will. Finally, Kirk pointed out that the GeForceFX architecture is optimized for long shaders (up to 1024 instructions) and interleaved texture/math instructions, but it is sensitive to instruction order. That is why they put so much effort into their shader optimizing compiler. In contrast, the Radeon architecture is optimized for short shaders (only up to 64 instructions) and blocks of texture/math instructions, but it is sensitive to instruction co-issue (vector/scalar paired instructions) and dependent texture fetch limitations. Of course, this is all coming from an NVIDIA employee, so interpret it how you will, but I don’t think he was spinning it too much. He firmly believes that the full power of the NV3x architecture has not been exploited yet or is just now being realized.
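To make Kirk’s precision argument a little more concrete, here is a minimal Python sketch. It is only an illustration, not something shown at the event. It rounds a value to the 16-bit "half" and 32-bit "single" formats that Python’s `struct` module implements; whether that 16-bit format matches the NV3x 16-bit mode bit for bit is an assumption, and the 24-bit format is not modeled at all. The texel index and the helper names are hypothetical.

```python
import struct


def round_to_half(x: float) -> float:
    """Round x to the nearest 16-bit half-precision value (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def round_to_single(x: float) -> float:
    """Round x to the nearest 32-bit single-precision value (struct format 'f')."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Hypothetical case: a shader computes the index of texel 4097 in a large texture.
texel = 4097.0
print("16-bit:", round_to_half(texel))    # no longer the index that was asked for
print("32-bit:", round_to_single(texel))  # index preserved

# Relative error when a small constant such as 0.1 is stored in each format.
for name, fn in (("16-bit", round_to_half), ("32-bit", round_to_single)):
    print(name, "relative error:", abs(fn(0.1) - 0.1) / 0.1)
```

The 16-bit format is fine for many color calculations, but values such as large texture coordinates lose whole units, which is the kind of case where a program "will not work at all" without more bits.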

One thing that I think we must keep in mind when reading/thinking about these issues is that developers had ATI hardware long before NVIDIA hardware; therefore, NVIDIA has had to play catch up for a while. It is the first time in many hardware generations that NVIDIA has been in this position, and it has been a learning experience for them.

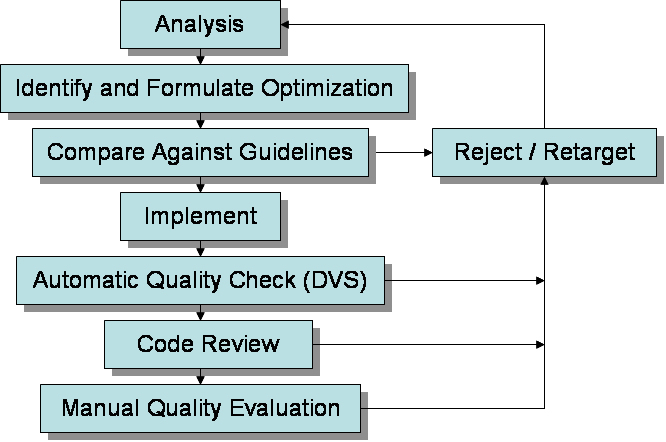

Director of OpenGL Software Development Nick Triantos followed up the shader presentation with one about NVIDIA’s new optimization guidelines and the GeForceFX Unified Compiler’s role in this. In an effort to regain people’s trust in the wake of the much publicized driver “compromises” the company has made over the last year or so, NVIDIA wants the world to know that it is now following a strict process for optimization analysis. Before these guidelines were in place, individual developers usually decided what would work best for an optimization, and NVIDIA now feels that that model just does not fit their goals any longer. They definitely wanted a more controlled process. Here is my reproduction of a diagram that was presented to illustrate their internal development process for optimizations:

Basically, what this boils down to is a rigid set of guidelines that will force NVIDIA to make uniform decisions on driver optimizations. The Automatic Quality Check step involves hundreds of systems running various operating systems and many generations of NVIDIA hardware running an automated suite of tests. The Driver Validation System (DVS) automatically verifies that image quality is maintained. The guidelines that NVIDIA has set for optimizations are: 1) An optimization must produce the correct image, 2) An optimization must accelerate more than just a benchmark, and 3) An optimization must not contain pre-computed states.
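The first guideline, that an optimization must produce the correct image, is the kind of rule that lends itself to automated checking. The sketch below is purely hypothetical and is not NVIDIA’s Driver Validation System; it only shows the general idea of comparing a frame rendered with an optimization against a reference frame, pixel by pixel. The function name, the nested-list image layout, and the `tolerance` parameter are all assumptions made for illustration.

```python
def images_match(reference, candidate, tolerance=0):
    """Return True if every color channel of every pixel differs by at most `tolerance`.

    Images are assumed to be lists of rows, each row a list of (r, g, b) tuples.
    """
    if len(reference) != len(candidate):
        return False
    for ref_row, cand_row in zip(reference, candidate):
        if len(ref_row) != len(cand_row):
            return False
        for ref_px, cand_px in zip(ref_row, cand_row):
            if any(abs(a - b) > tolerance for a, b in zip(ref_px, cand_px)):
                return False
    return True


# Hypothetical 2x2 frames: the "optimized" frame is off by one in a single channel.
reference_frame = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
optimized_frame = [[(255, 0, 0), (0, 254, 0)], [(0, 0, 255), (255, 255, 255)]]

print(images_match(reference_frame, optimized_frame))               # strict comparison
print(images_match(reference_frame, optimized_frame, tolerance=1))  # allow 1 step of error
```

How strict the tolerance should be is exactly the sort of decision a fixed set of guidelines takes out of the hands of individual developers.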

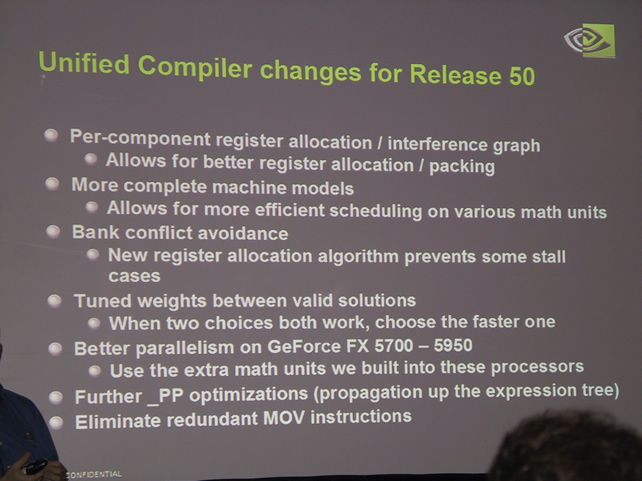

Triantos also discussed NVIDIA’s progress with their optimizing compiler. It was first introduced into the driver with the 44.12 release. One optimizing compiler is used for both Direct3D and OpenGL drivers. The goals of this compiler are to maintain high utilization of math units in shader and combiners, to minimize register usage, and to optimize program scheduling to hide latencies between shader and texture. To close this topic, I leave you with a picture of a slide that discloses the improvements introduced in the 50 series of drivers.

That pretty much sums up the major topics discussed during the first part of the day. Of course, the panel was deluged with questions as they presented their topics. That’s what they asked for, and that’s definitely what they got.
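As an aside on the compiler goal of scheduling instructions to hide texture latency, the toy model below shows why instruction order can matter at all. It is a deliberately simplified sketch, not a model of NV3x or of any real GPU: it assumes one instruction issues per cycle, in order, that a texture fetch takes a made-up `TEX_LATENCY` of 4 cycles, and that math results are ready one cycle after issue. The register names and both instruction sequences are hypothetical.

```python
TEX_LATENCY = 4   # assumed texture fetch latency in cycles (made-up number)
MATH_LATENCY = 1  # assumed math latency in cycles


def cycles_to_finish(program):
    """Return the cycle at which the last result is ready for an in-order program.

    Each instruction is (kind, dest, sources); an instruction stalls until
    all of its source registers are ready.
    """
    ready = {}  # register name -> cycle at which its value becomes available
    clock = 0   # earliest cycle at which the next instruction may issue
    for kind, dest, sources in program:
        start = max([clock] + [ready.get(s, 0) for s in sources])
        latency = TEX_LATENCY if kind == "tex" else MATH_LATENCY
        ready[dest] = start + latency
        clock = start + 1
    return max(ready.values())


# Same work, two orderings. Inputs such as uv0 and c0 are ready at cycle 0.
fetch_then_use = [
    ("tex", "t0", ["uv0"]),
    ("math", "r0", ["t0", "c0"]),   # stalls waiting for t0
    ("tex", "t1", ["uv1"]),
    ("math", "r1", ["t1", "c0"]),   # stalls waiting for t1
    ("math", "r2", ["r0", "r1"]),
]
fetches_first = [
    ("tex", "t0", ["uv0"]),
    ("tex", "t1", ["uv1"]),         # second fetch overlaps with the first
    ("math", "r0", ["t0", "c0"]),
    ("math", "r1", ["t1", "c0"]),
    ("math", "r2", ["r0", "r1"]),
]

print(cycles_to_finish(fetch_then_use))  # 11 cycles in this toy model
print(cycles_to_finish(fetches_first))   # 7 cycles in this toy model
```

Which ordering is best on real hardware depends on the architecture, which is the point of having the driver’s compiler do the reordering rather than every developer.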

“Fireside Chat” with CEOs Huang and Hollenshead

During lunch, NVIDIA CEO Jen-Hsun Huang and id CEO Todd Hollenshead shared some of their thoughts on games and the graphics industry. Huang pointed out that all (and he stressed all, but I’m not sure how definitive that can be) of the market share NVIDIA has lost over the past year has been lost to integrated graphics and not to competitors. That was surprising to me, considering the roll that ATI has been on lately.

As expected, Hollenshead showed off Doom 3 with a trailer. I don’t think it was anything that had not been seen before. He did mention a few interesting things about id, though. The id team consists of only 22 people! I was surprised by that number. Also, in an important note for NVIDIA, all developers either have or will have GeForceFX 5900s or 5950s in their development machines. The company is working very closely with NVIDIA to make Doom 3 as good an experience as possible on NVIDIA hardware. One other thing Hollenshead mentioned about Doom 3 is that the frame rate is hard-coded at a maximum of 60 frames per second. He said that John Carmack made that decision. Now, if the game is released any time soon, few video cards, if any, will be able to come close to that magic number at a decent resolution. Hollenshead also noted that there probably will not be a typical time demo for Doom 3 because of the hard-coded FPS maximum.
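To see why a hard-coded maximum gets in the way of a typical time demo, consider the small sketch below. It is an illustration only and says nothing about how Doom 3 actually implements its limit; the function name and the render times of the two cards are hypothetical.

```python
FPS_CAP = 60.0  # the hard-coded maximum Hollenshead described


def effective_fps(render_seconds_per_frame, cap=FPS_CAP):
    """Frame rate a player would see: the uncapped rate, limited by the cap."""
    uncapped = 1.0 / render_seconds_per_frame
    return min(uncapped, cap)


# Hypothetical cards: one needs 5 ms per frame, one 10 ms, one 50 ms.
for name, seconds in (("fast card", 0.005), ("mid card", 0.010), ("slow card", 0.050)):
    print(name, effective_fps(seconds))
```

The fast and mid cards both report 60 even though one is twice as quick, so a benchmark run under the cap cannot tell them apart.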

Time to Show Off Some Games!

The rest of the day was devoted to letting developers and executives hype their games and discuss their relationships with NVIDIA. Technical questions about most games were fairly limited because most of the companies’ representatives were not developers. I will just share my impressions of the games and what I can remember of each presentation.

XIII

Damien Moret and Olivier Dauba from Ubi Soft were there to present this new spin on the first-person shooter genre.

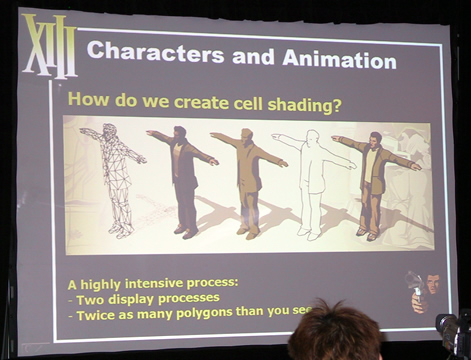

XIII is a game based on a very popular French comic book by the same name. The designers of the game decided to make the game look and feel as much like a comic book as possible, so it is cel-shaded, uses onomatopoeia, and uses in-game sub-windows to show significant events like head-shots. Just take a look at a comic book, and you’ll see what I mean.

I must admit that I was not too excited about this game…until I saw it being played. I did not even know that there was a demo available. Since I was intrigued by what I saw, I decided to download the demo and give it a try. Even though it was in French (I could not find an English demo), it was just as fun as I expected! Moret and Dauba said that developers in France were putting the finishing touches on the game as they spoke, so I guess it is on schedule to be released this Fall. I will definitely be anxiously awaiting its arrival on store shelves.

Deus Ex: Invisible War

Harvey Smith, a Lead Designer for Deus Ex: Invisible War from Ion Storm, was at Editors’ Day to show us a demo of the game. Smith said that the development cycle for Invisible War has been about 2.5 years, and it should be in stores in early December.

The game uses the renowned Havok physics engine, but the team created a new graphics engine called ISIS for the rendering duties. We were lucky enough to see live gameplay, and it looks awesome! Smith continually stressed the idea that made the original Deus Ex such a hit, which is being able to play the game as either a run-and-gun shooter or a stealth game. The character development will get much deeper than that, though, because the bio-mod system is even better this time around. I think fans of the first game in the series will think the long wait for the sequel was worth it.

Halo: Combat Evolved

The President of Gearbox Software, Randy Pitchford, was at Editors’ Day to discuss his company’s relationship with NVIDIA during the development of Halo for the PC.

Pitchford played the game for a few minutes to show it off to us. He mentioned that he always plays it with the frames per second set to 30. He thinks that is fine, and it did look fine when he played it. I am not sure what the settings were, though. Also, he mentioned that 30 FPS was the development goal for the game, and they are happy that they exceeded that goal. Pitchford was asked if the Halo time demo is pre-rendered animation. He replied that it is not; it consists of actual engine-rendered scenes.

As for the company’s relationship with NVIDIA, he said that he had very low expectations for relationships with chip makers, but the support he received from NVIDIA shattered his expectations! With NVIDIA’s current developer relations, it is fairly common for them to write some shader programs for developers to show them how to write shaders that are optimized for NVIDIA hardware. That is exactly what they did for Pitchford’s Halo team. And NVIDIA deserves props for their efforts because they did not write programs that are only compatible with NVIDIA hardware. Pitchford said that some of the shader programs used in the ATI code path in Halo are the actual ones written by NVIDIA developers! That is good work in my book, and it deserves some credit.

Unreal Tournament 2004

Unreal Tournament 2004 was touted by Mark Rein, Vice President of Epic Games. He was not willing to answer any technical questions about the game, though. He really wanted to stress the industry’s and community’s acceptance of the Unreal Engine. According to Rein, around 300 developers are in the industry today because of their work on Unreal Tournament mods. He also mentioned the NVIDIA Make Something Unreal Contest for mod developers. It has gained a lot of attention, and many people/teams have entered. Many more than they expected! And for those who want to learn about using Unreal technology for modding, there are many resources like this website.

But did we get to see the game in action? Yes, but not only that. There were four AMD Athlon 64 FX-51 equipped PCs with a playable demo of the game at the attendees’ disposal. Rein chose to show off the new game type called Onslaught. In this game type, one team has to destroy the other team’s main power station, but in order to do so, the team must have a complete link to the opposing team’s main power station. This involves taking over the necessary link stations and maintaining control over them. It’s a really cool game mode, and I took an opportunity to play it for a little while. Even though the bots weren’t exactly “knowledgeable” of this map yet, it was a blast, and the game looked great!

Rein also mentioned that NVIDIA is Epic’s premier partner. He said the two companies have a great relationship. He accidentally put something about NV50 on one of his slides and joked that he would get in trouble for it. It said that NVIDIA and Epic are collaborating on next-generation hardware so that NV50 will have UnrealEngine3-specific optimizations.

Battlefield Vietnam

Fredrik Liliegren, General Manager of Dice Canada, and Reid Schneider, Producer at Electronic Arts, were in the house to show us their upcoming game Battlefield Vietnam. When it is released some time in the Spring of 2004, this will be the latest and greatest in EA’s Battlefield franchise. They showed a trailer of the game (same trailer as is available here) and live gameplay on a beautiful, spacious map. In the play demo, they showed us how players will be able to use the game’s helicopters to transport vehicles anywhere on a map. In one example, they showed a tank being transported with a player in it, and the player was actually able to fire the tank’s main gun! It was basically a flying cannon. Nice!

Liliegren and Schneider emphasized that the game uses a revamped rendering engine and that the feel of the weapons has been revamped as well. Also, this game has been developed with completely new content. One other cool feature is that players can select the background music that is playing. The selection includes classic rock that was popular during the Vietnam War era.

S.T.A.L.K.E.R.: Oblivion Lost

If I had to guess which game dropped the most jaws, I would guess it was S.T.A.L.K.E.R. Oleg Yavorsky, PR Manager from GSC Games, and Eric Reynolds, Media Relations Manager at THQ, were on hand to demonstrate this great-looking game from these two companies.

The game is set around Chernobyl and events that have taken place there and will take place there in the future. S.T.A.L.K.E.R. was described as a sort of survival/RPG/FPS game. Yavorsky pointed out that during development of this game, the team actually went through the corridors of the facility and took hundreds of photographs to use in creating some of the game’s textures. He said that sixty percent of the textures are from the real Chernobyl. When he was showing off the game and walking through Chernobyl, it looked very real and gave an impression of actually being there. Since they started on this game three years ago, the team has developed its own engine that allows for three million polygons per frame. Yavorsky also showed off the great ragdoll physics that the game utilizes. One other great feature of the game is that it will have dynamic transitions from day to night and back. The sun will set and the moon will rise independently of other events in the game. He showed us this effect at ten times the speed it will run at in the released game, and it was amazing to see this happen as he wandered around the environment.

According to Yavorsky, S.T.A.L.K.E.R. will have eight (that’s right, EIGHT) different endings. Only a few games even have more than one ending, and this one is going to have eight! I hope the development team can really pull it off! The wow factor of this game just does not seem to stop. They are also planning on having around 100 NPCs in the game who are trying to complete the same missions you are assigned, and the NPCs could complete the game before you! I’m not sure what he meant by that, but it sounds interesting. I am very excited about this title. The demos that were shown were only using the DirectX 8 version of the game, and I was told by an NVIDIA rep that the game looks even better with DirectX 9 code, and it does not take a performance hit. Unfortunately, all these features take a lot of time to develop, so we will not be seeing this bad boy on shelves until next summer.

NVIDIA Headquarters

Those who were invited to the Editors’ Day event were given the option to take a tour of the NVIDIA headquarters in Santa Clara. I took them up on this offer. Below are some of the pictures I took on this tour. Unfortunately, they did not allow us to take any pictures in any of the labs or in their server rooms. The first row of pictures gives you a good look at what the campus looks like. It is beautiful and has a great area for employees to eat their lunches outside. In the second row of pictures, you can see the demo room that is located near the main lobby.

From the server rooms to the failure analysis labs, it is a very impressive facility. One notable thing we were able to see on the tour was a prototype NV40 chip that was in the electrical failure analysis lab. In that lab, they basically look at the chips at the silicon level and “dissect” them to find causes of failures and to help improve yield at the fabs that produce their chips. I guess that is what they were doing with NVIDIA’s next generation chip, and we were lucky enough to see one. 🙂

Final Thoughts

Overall, I was impressed with NVIDIA’s Editors’ Day. I think it was a success for all those involved. NVIDIA should be willing to do events like this more often. It allowed the media to ask some pressing questions and get to the bottom of some issues that have been plaguing the company.

Although it may be too little too late for some people, this event renewed some of my faith in NVIDIA. I think they have started down the right path. The company’s current plan with their latest generation of hardware and software basically works like a three-legged stool: one leg is intense developer relationships, another is the optimizing compiler, and the third is raw power. The stool, of course, represents their performance. Unfortunately, they need all three legs for the stool to work well. If one of them is missing or short, the stool just will not function like it should. Much of this is the result of next-gen ATI hardware getting into developers’ hands much sooner than the NVIDIA equivalent, and the two architectures are different enough (think Intel vs. AMD) that code written for optimum performance on ATI’s hardware just doesn’t quite perform the same way on NVIDIA hardware. That is why NVIDIA has been forced to take on this role of “tutor” for many developers. So, in the long run, their decisions are costing them a lot of time and money, but this should benefit the consumer with increased performance and renewed stiff competition.

I would like to offer a special thanks to the people at NVIDIA who invited Bjorn3D.com to this event.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996