Bjorn3d.com has gone all out on this one! I was able to enlist the help of Mr. Parker*, who was able to sneak some photos of a recent closed-door presentation at NVIDIA. Mr. Parker is well known for his uncanny ability to get photos when no one else can. Sometimes, I swear, he must be able to climb walls or turn invisible or something (to get the shots he does)!

I will be adding my own unique perspective to this material he collected (as a professional computer graphics programmer). I will be going through NVIDIA’s slides one-at-a-time, explaining them as best I can.

* Mr. Parker (upper left in photo) is a well-known newspaper photographer, who I met on a recent trip to New York City. He’s young, but has attracted a lot of media attention lately and Bjorn3D.com is honored to have his help on this assignment.

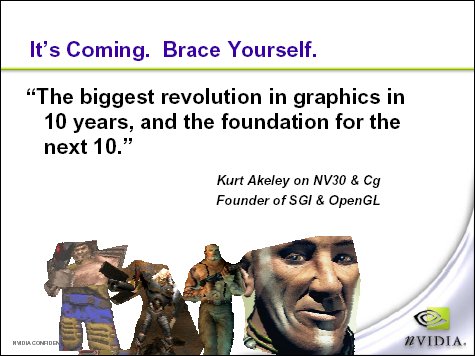

The first slide is a bit of a teaser. It mentions the mysterious Cg, which promises to revolutionize the world of computer graphics.

Cg is a high-level computer graphics language; the “syntax” (language structure) is much like the “C” programming language that most programmers know fluently. Today is the day that NVIDIA is announcing their new Cg Compiler. (A compiler is a program that computer programmers use to create applications, games, and other programs.)

What makes Cg so interesting is that it easily describes graphics rendering properties, as used by pixel shaders. (A “shader” is a term for a program that implements a particular vertex or pixel rendering effect). A vertex is the corner of a polygon, usually a triangle; a pixel is a dot on the screen. Rendering is drawing on-screen. A rendering effect is any special screen drawing technique not provided by the normal graphics pipeline. A graphics pipeline is a sequence of electronic circuits that compute graphical results (pixels).

Whew! Any more questions? No?

Good. Let’s find out more…

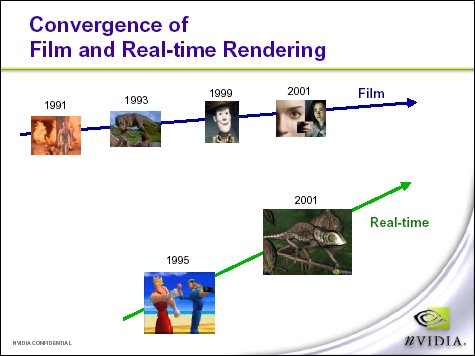

It seems that the off-line rendering techniques of film and the real-time capabilities of NVIDIA’s GPUs are on a collision course. NVIDIA calls this convergence a “discontinuity” (which is a fancy word for a break), a large step towards film type graphics on a desktop PC.

NVIDIA has a stated goal of creating Toy Story type graphics, in real-time, on your PC. I’ve seen some demos, like the Chameleon, that are amazingly close to Hollywood effects. You can download the Chameleon demo here.

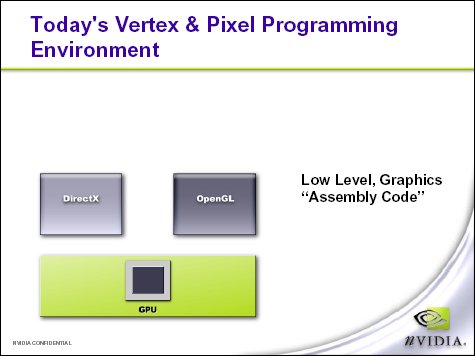

There’s a bit of a problem, though: programming these rendering effects in DirectX or OpenGL is tough, because the GPU commands that are used to create effects require the use of “assembly language”.

Assembly is a very low-level computer language that is used to program computers at the hardware level. It’s a pain-in-the-butt to use (I speak from personal experience.) and requires a lot of code to do small amounts of work. It also requires meticulous detail to direct the underlying hardware and is very hard to debug.

There are other high level rendering languages besides Cg, the most famous of which is RenderMan, which is the language used to create movies like Toy Story. (Many other programming tools are used too.) RenderMan has a bit of a problem though, at least as far as real-time graphics on a PC is concerned. Basically, RenderMan is too open, too versatile, and much too slow for use on today’s gaming computers. Cg is different; NVIDIA’s Cg Compiler uses the high level Cg language to create an assembly version of the shader, which runs directly on the GPU hardware and is fast!

This slide shows the progression of creatures in the Quake series of games by id Software and finishes with a face that looks amazingly real. (That’s war paint on the warrior’s face, and it certainly looks realistic.) The rendering of the face appears to be of cinematic quality, which is where NVIDIA is headed. The revolution implies that real-time graphics of awesome quality are going to be arriving with the NV30. (I truly hope that NVIDIA can pull this off!)

Which brings us back to Cg. Of what use is all this power, if there are no games that support it?

Programmers write the games. (Duh.) Contrary to some opinions I’ve heard on the ‘net, most programmers are not lazy; they are generally very hard workers, especially game developer programmers. The problem is that it takes a lot of effort to create the programs you use every day. Game programmers already have plenty of stuff on their plate: Monster AI, collision detection, physics simulation, user input, sound effects, debugging, etc. Shader programs just add more stuff to an already large pile of tasks.

How much time can all those programming tasks leave for special graphics effects, especially if only a few cards support a particular feature (or worse still, the feature needs to be programmed in custom / proprietary assembly code for each graphics card)? Too little, so as a consequence, a desired feature may be dropped or the game may ship with a few extra bugs. After all, there are only 24 hours in a day and when programmers are in “crunch mode” at the end of a project almost all of them are spent at the office.

Cg promises to help, by making it far easier to support all these custom effects in the game engine. How is that possible? Cg is a powerful high-level language, which means that it takes less actual code to create a desired effect. Basically, games can be developed faster and the code will be easier to maintain for future reuse.

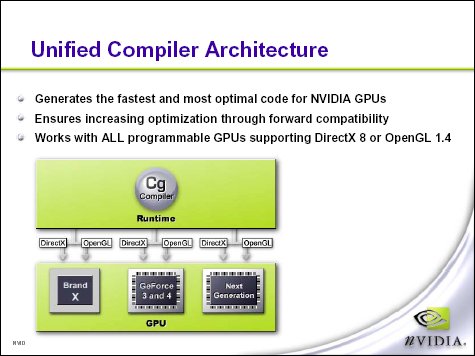

In the diagram above, it is easy to see that the DirectX and OpenGL APIs (Application Programming Interface) sit directly on top of the GPU. What is not shown is the application (game) sitting on top of DirectX or OpenGL. The game does not directly access the GPU on the bottom. Instead, the application interacts with one of the APIs in the middle to create an image on-screen.

Notice that I said one of the APIs. DirectX and OpenGL are quite different, even though they are both used (by different games) to access the same hardware. That means that the code to program an effect will likely be different, depending on the API, which means that programming snippets cannot be shared between the two.

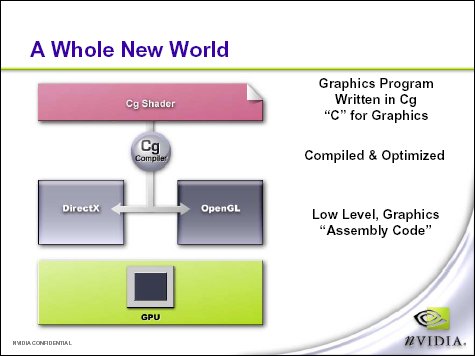

Cg promises to improve the situation:

Notice that the Cg Shading language sits on top of both APIs, but there’s also a Cg Compiler in the middle. What this diagram illustrates is that a common language, Cg, can be used to create shading effects in either DirectX or OpenGL. The Cg Compiler produces the special commands to tell the rendering APIs how to draw an effect on screen. Again, the application is not shown; it still talks directly to either DirectX or OpenGL. When the game is actually running, Cg is no longer needed; the compiled shader code is incorporated into the game program itself.

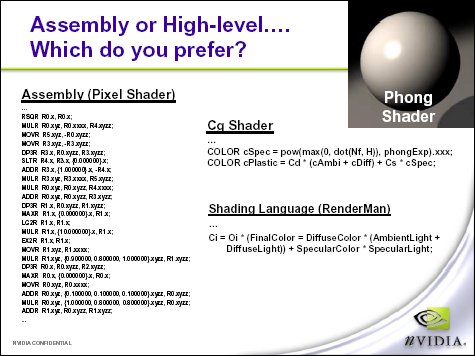

How much code are we talking about anyway? This next slide illustrates how much code is required to implement a simple Phong lighting effect, which might be used to render a billiard ball. This is about as simple as a pixel shader program gets.

In assembly there are 23 lines of very cryptic code:

RSQR R0.x, R0.x;

MULR R0.xyz, R0.xxxx, R4.xyzz;

MOVR R5.xyz, -R0.xyzz;

MOVR R3.xyz, -R3.xyzz;

DP3R R3.x, R0.xyzz, R3.xyzz;

SLTR R4.x, R3.x, {0.000000}.x;

ADDR R3.x, {1.000000}.x, -R4.x;

MULR R3.xyz, R3.xxxx, R5.xyzz;

MULR R0.xyz, R0.xyzz, R4.xxxx;

ADDR R0.xyz, R0.xyzz, R3.xyzz;

DP3R R1.x, R0.xyzz, R1.xyzz;

MAXR R1.x, {0.000000}.x, R1.x;

LG2R R1.x, R1.x;

MULR R1.x, {10.000000}.x, R1.x;

EX2R R1.x, R1.x;

MOVR R1.xyz, R1.xxxx;

MULR R1.xyz, {0.900000, 0.800000, 1.000000}.xyzz, R1.xyzz;

DP3R R0.x, R0.xyzz, R2.xyzz;

MAXR R0.x, {0.000000}.x, R0.x;

MOVR R0.xyz, R0.xxxx;

ADDR R0.xyz, {0.100000, 0.100000, 0.100000}.xyzz, R0.xyzz;

MULR R0.xyz, {1.000000, 0.800000, 0.800000}.xyzz, R0.xyzz;

ADDR R1.xyz, R0.xyzz, R1.xyzz;

“RenderMan” is the shading language used in the creation of movies like Toy Story. It’s only 2 lines of code, but it specifies the exact same effect as the assembly code above!

Ci = Oi * (FinalColor = DiffuseColor * (AmbientLight +

DiffuseLight)) + SpecularColor * SpecularLight;

And how does NVIDIA’s Cg Shader do?

COLOR cSpec = pow(max(0, dot(Nf, H)), phongExp).xxx;

COLOR cPlastic = Cd * (cAmbi + cDiff) + Cs * cSpec;

It’s also only 2 lines! That’s about 1/12th the amount of assembly code! I’m sure that the code reduction varies, depending on the effect, but let’s assume that a reduction to 1/12th of the code size is typical. It certainly seems reasonable to me, as a programmer who has written programs in both C and assembly code. These two lines of text might be hard for a layman to decipher (what’s going on?), but any good programmer can easily work with Cg code like this; it’s much easier than the 23 lines of assembly language. The programmer will probably be able to write Cg code at least 10 times faster than the equivalent assembly version.
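For readers who want to see what those two Cg lines compute, here is a minimal Python sketch of the same arithmetic for a single pixel. It is only an illustration, not NVIDIA’s code: the function names are my own, and the sample colors and exponent are my reading of the constants that appear in the assembly listing above.

```python
def dot(a, b):
    """Dot product of two 3-component vectors."""
    return sum(x * y for x, y in zip(a, b))


def phong_plastic(Nf, H, Cd, Cs, c_ambi, c_diff, phong_exp):
    """Mirror the two Cg lines for one pixel.

    Nf (surface normal) and H (half-angle vector) are assumed to be
    unit-length; all colors are (r, g, b) tuples.
    """
    # COLOR cSpec = pow(max(0, dot(Nf, H)), phongExp).xxx;
    spec = max(0.0, dot(Nf, H)) ** phong_exp
    c_spec = (spec, spec, spec)  # .xxx copies one value into all three channels

    # COLOR cPlastic = Cd * (cAmbi + cDiff) + Cs * cSpec;
    return tuple(
        cd * (amb + dif) + cs * sp
        for cd, amb, dif, cs, sp in zip(Cd, c_ambi, c_diff, Cs, c_spec)
    )


# Hypothetical inputs: a pixel whose normal points straight at the highlight.
color = phong_plastic(
    Nf=(0.0, 0.0, 1.0), H=(0.0, 0.0, 1.0),
    Cd=(1.0, 0.8, 0.8), Cs=(0.9, 0.8, 1.0),
    c_ambi=(0.1, 0.1, 0.1), c_diff=(0.5, 0.5, 0.5),
    phong_exp=10,
)
```

Even in Python, the high-level version reads almost exactly like the formula you would write on a whiteboard, which is the whole point of Cg.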

It takes a highly specialized programmer to write assembly code, but Cg makes it possible for just about any programmer to write these sorts of GPU effects. Better still, NVIDIA’s SDK (Software Development Kit) includes a bunch of example effects, like Phong shading, so that a programmer can get a quick start. It’s entirely possible that most of the rendering effects needed by a game are already coded in the SDK!

Cg is an industry standard high-level graphics language developed by NVIDIA with Microsoft’s participation; it’s 100% compatible with the DirectX 9 High Level Shading Language. That means that Cg code can be compiled into DirectX commands. (Compiling is the process of converting a high-level language like C into raw assembly language that is used to create a computer program executable (exe) file.)

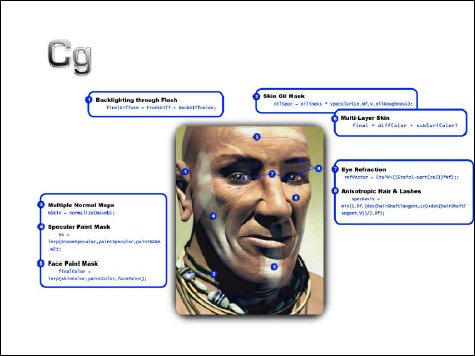

This slide shows eight (8!) of the pixel shading programs required for a realistic warrior face. Let’s examine these code snippets:

- Backlighting through Flesh (used on ears)

finalDiffuse = frontDiff + backDiffusion;

- Skin Oil Mask (used to get a realistic sheen on the entire face)

oilSpec = oiliness * specular(Ln, Nf, v, oilRoughness);

- Multiple Normal Maps

nskin = normalize(Na+Nb);

- Specular Paint Mask

ks = lerp(stoneSpecular, paintSpecular, paintRGBA, w);

- Final Paint Mask

finalColor = lerp(skinColor, paintColor, facePaint);

- Multi-Layer Skin

final = diffColor + subSurfColor;

- Eye Refraction

refVector = Ets*V+((Eta*c1-sqrt(cs2)) * Nf);

- Anisotropic Hair and Lashes

specBasis = min(1.0f, (dot(hairShaftTangent, Ln) + dot(hairShaftTangent, V)) / 2.0f);

That’s a lot of nitty, gritty details just to render a face, but human skin is a difficult surface to recreate via computer graphics.

Since most of you are not graphics programmers, I’ll try to explain the terms above and how they are being used. (You should skip this part if you’re easily bored.)

Diffuse light is light that a surface scatters evenly in all directions, so it looks the same from any viewing angle (think of a matte wall under general room lighting). Ears are thin, so light can shine through from the back.

A “mask” is a 2D set (think grayscale bitmap) of numerical values that indicate how strongly to apply another set of values. Masks are created by artists and commonly used to render lots of effects like smoke, sprites, bullet hole marks in walls, etc. In this case, the oiliness of the skin varies depending on the face location: brow, chin, cheeks, etc. An artist draws the mask and uses it to specify the shininess of a particular section of the surface.
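Here is a rough Python sketch of how a mask works, in the spirit of the oilSpec line from the slide. The tiny 2x2 “bitmaps” and the apply_mask helper are made up for illustration; a real mask would be a full texture sampled by the GPU.

```python
def apply_mask(mask, values):
    """Scale each value by the matching mask entry (0.0 = none, 1.0 = full)."""
    return [
        [m * v for m, v in zip(mask_row, value_row)]
        for mask_row, value_row in zip(mask, values)
    ]


# Hypothetical data: an artist-drawn oil mask and a constant specular term.
oiliness = [[0.0, 0.5],
            [1.0, 0.25]]
specular = [[0.8, 0.8],
            [0.8, 0.8]]

# Same idea as: oilSpec = oiliness * specular(...)
oil_spec = apply_mask(oiliness, specular)
```

Where the mask is 0.0 the highlight disappears completely, and where it is 1.0 the highlight comes through at full strength.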

Normalization is the process of scaling a vector to unit length. A 3D vector is basically a direction and a length. Normalization leaves the direction alone, and scales the length to exactly 1.0 units. This step sounds odd, but is required to simulate certain kinds of lighting. Otherwise, the light intensity will appear to vary when it should not.
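A small Python sketch may make normalization more concrete. It follows the nskin line from the slide (add two normals, then normalize the sum); the helper names and the sample vectors are my own assumptions.

```python
import math


def normalize(v):
    """Return a vector with the same direction as v and a length of 1.0."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def add(a, b):
    """Component-wise sum of two vectors."""
    return tuple(x + y for x, y in zip(a, b))


# Hypothetical normals read from two different normal maps.
Na = (0.0, 0.0, 1.0)
Nb = (0.0, 1.0, 0.0)

# Same idea as: nskin = normalize(Na+Nb);
nskin = normalize(add(Na, Nb))
```

Adding two unit vectors usually gives a vector that is longer than 1.0, which is why the sum has to be normalized again before it is used for lighting.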

Specular lighting is the term for light that is reflected at an angle, causing highlights like the bright spot on the Phong billiards ball we just examined.

Lerp is shorthand for “linear interpolation”, which basically produces a value that is somewhere in between two other values. In this case the paint mask is 0.0 to indicate no paint and 1.0 to indicate that the paint is completely opaque. Lerping …

Final Paint Mask: this is the combined war-paint color (with sheen) applied over the skin’s bitmapped texture.
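Linear interpolation is easy to sketch in Python. The lerp function below uses the usual definition (a value that slides from a to b as t goes from 0.0 to 1.0), and lerp_color applies it to each color channel, much like the finalColor line on the slide. The colors and mask values are invented for the example.

```python
def lerp(a, b, t):
    """Return a when t is 0.0, b when t is 1.0, and a blend in between."""
    return a + (b - a) * t


def lerp_color(c0, c1, t):
    """Blend two (r, g, b) colors channel by channel."""
    return tuple(lerp(x, y, t) for x, y in zip(c0, c1))


# Hypothetical colors and paint-mask values for three pixels.
skin_color = (0.8, 0.6, 0.5)
paint_color = (0.1, 0.1, 0.6)

no_paint = lerp_color(skin_color, paint_color, 0.0)    # bare skin
thin_paint = lerp_color(skin_color, paint_color, 0.5)  # half skin, half paint
full_paint = lerp_color(skin_color, paint_color, 1.0)  # opaque paint
```

Because the mask value can be anything between 0.0 and 1.0, the paint can fade smoothly into the skin instead of ending at a hard edge.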

Multi-layer skin is used to create a more lifelike skin appearance. The top skin image is slightly transparent, just like real human skin. This face apparently has muscle and blood vessel details just under the surface (or at least a rough approximation).

Eye Refraction provides a wet, shiny, eyeball surface. (What a sentence!)

Realistic hair is created via an anisotropic technique, which creates long, skinny highlights, just like natural hair. Anisotropic is a term for a material whose optical properties vary with the direction of propagation; in other words, light reflects differently, depending on the angles of the surface and the light.
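The specBasis line from the slide can be tried out in Python as well. This sketch covers only that one line; how the shader turns specBasis into a final highlight is not shown on the slide, so I have not guessed at it. The vectors below are made-up unit vectors.

```python
def dot(a, b):
    """Dot product of two 3-component vectors."""
    return sum(x * y for x, y in zip(a, b))


def spec_basis(hair_shaft_tangent, Ln, V):
    """Python version of the slide's specBasis line.

    hair_shaft_tangent runs along the hair, Ln points toward the light,
    and V points toward the viewer; all are assumed to be unit-length.
    """
    return min(
        1.0,
        (dot(hair_shaft_tangent, Ln) + dot(hair_shaft_tangent, V)) / 2.0,
    )


tangent = (1.0, 0.0, 0.0)  # hypothetical hair lying along the x axis

# Light and viewer both looking along the hair.
along = spec_basis(tangent, (1.0, 0.0, 0.0), (1.0, 0.0, 0.0))

# Light and viewer both at right angles to the hair.
across = spec_basis(tangent, (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
```

Notice that the result depends on the direction of the hair shaft rather than on a surface normal; that dependence on direction is what makes the effect anisotropic.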

Realistic surfaces, like this warrior’s face, require a great deal of detail work to get right. Think of how much assembly code would be required to create these rendering effects. No wonder graphics programmers are so enthusiastic about NVIDIA’s Cg Compiler!

This slide promises that NVIDIA will optimize the compiler for their own hardware. (Big surprise.) The Cg Compiler will also work with other hardware, like the ATI Radeon 8500, which is good news for graphics programmers. This means a special effect needs to be written only once, which lets programmers spend more time perfecting effects and, better still, spend more time creating new and unusual shading programs.

To the game-buying public, this provides more eye-candy and more variation in game rendering style. The new “cartoon” rendering styles in recent games are but a small taste of things to come. Doom3 super-realism is next, and I’m sure the future holds even more promise. Basically anything an artist can imagine can be created on-screen and with much less effort than before.

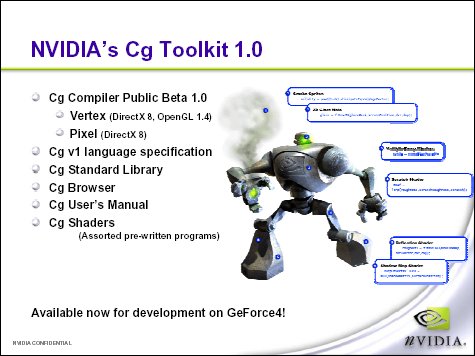

Ah, here are the components in the Cg Compiler SDK. Basically, it looks like everything a programmer needs, as far as shaders are concerned. It also looks as if only the GeForce4 is currently supported, and I’m pretty sure they do not mean a GeForce4 MX.

Improvements are planned; the version 2 toolkit will include more shaders, a browser, plug-ins, and more. The plug-ins are actually to be used by artists, not programmers. The artists use programs like Maya, Max, and XSI to create the content that is then used in games. These plug-ins let the artists design the effects that will later be incorporated into the game engine itself! We should expect to see lots more cool stuff on our monitors in the near future. I can hardly wait…

Rats! It looks like the next slide got stuck in the projector.

It appears that John Carmack said something NVIDIA’s Marketing department liked. Oh well. I’m sure we’ll get to see John’s quote eventually, but unfortunately, not today.

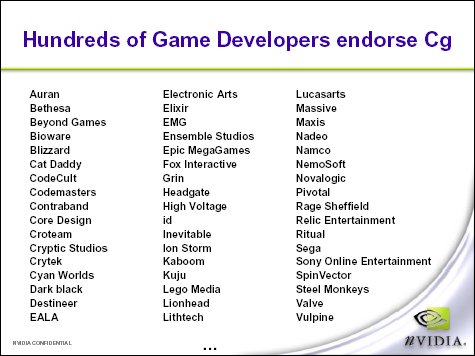

It certainly appears that NVIDIA knows what the game developers, like Mr. Carmack, want. Take a look at the list of companies on this next slide:

That list represents most of the top game programming companies. I think we’re going to see some amazing graphics in the years to come.

And here’s the last slide:

Hmm… The phrase “tidal wave” sets off my hype alert (in a big way), but the rest seems reasonable.

The Cg Compiler is a tool that will help programmers implement cool shader effects with much less effort. As an industry standard, it will probably work on the card you own now (or at least the next one you buy). The breakthrough is the reduction to roughly 1/12th of the code required to render effects. And it certainly seems to be endorsed by a lot of developers; NVIDIA provided a huge list of quotes from game developers, vendors, and others. Here are some select quotes:

“We have been eagerly awaiting this next generation of shader technology. Cg, with its compiler and associated toolkit, will be an indispensable tool for the creation and implementation of pixel shaders and vertex shaders. By abstracting the lower level assembly language, we are now free to spend more time and programming resources on writing more powerful custom shaders for the delivery of much more visually stunning effects on both OpenGL and DirectX.”

Dean Sekulic

Programmer

Croteam

“Cg will allow us to rapidly prototype advanced special effects, and easily incorporate them into our future products. Not only will developers benefit from the speedy prototyping and sharing of Cg shaders between their products, but the consumer (gamer) will appreciate better looking games that come faster to market. Everyone wins!”

Mark Davis

Lead Programmer

“Delta Force – Black Hawk Down”

“Cg is the missing connector between the world of fast, hardware-driven rendering and the detail-oriented world of software rendering. Cg provides the artist the same rich toolbox of image possibilities previously only available through slow software rendering or laborious assembly coding. Cg is the necessary link that brings hardware-accelerated rendering to within a hair’s breadth of the software-driven world – and once that linkage occurs, it will not only revolutionize the experience for gamers and other end users of graphics, but it will revolutionize the practice of animation in every corner of the industry – by simplifying, unifying, and accelerating the image-development process, right there at the artist’s desktop. I expect that the combination of Cg-driven power and quick, interactive rendering will quickly come to surpass almost all other forms of rendering for artistic purposes in every venue – games, TV, and movies.”

Kevin Bjorke

Imaging Supervisor

Final Fantasy: The Spirits Within

“Cg is a bright light for a black art. Finally, cryptic shader tricks can be explored and applied by mere mortals.”

Mike Biddlecombe

Programmer – Dungeon Siege

Gas Powered Games

“NVIDIA’s developer support has been fantastic. We have a great working relationship that provides a lot of value to Monolith. Cg is an enabling technology. We have a few graphics gurus at Monolith that can write assembly code shaders, but Cg allows mere human programmers with graphics interest like myself to write shaders. The inclusion of Cg in our next graphics engine will enable our future games to have an unprecedented amount of detail.”

Kevin Stephens

Director of Engineering

Monolith

“NVIDIA’s understanding of graphics development goes beyond the advancement of 3D hardware. They understand that it takes software support to intelligently drive these systems. Cg is another brilliant offering that empowers us to build the worlds we imagine. The encompassing vision of Cg (art tool integration, debugging, and platform compatibility) is something that developers like us have been yearning for. Finally being able to replace complicated shader systems with a simple unified language will greatly simplify our work. Relic prides itself on its artistic and technical presentation, and anything that can bring us closer to our vision is something we are ready to embrace.”

Ian Thomson

Lead Programmer – Impossible Creatures

Relic Entertainment

“One of the major difficulties in adopting advanced shader technology has been the complexity of writing custom shaders in assembly code and the difficulty trying to share these resources between developers. Now, with Cg technology, powerful and flexible shaders can easily be expressed in a high level language accessible to the entire development community, not just a handful of assembly language gurus.”

John Ratcliff

Senior Technology Architect – Planetside

Sony Online Entertainment

“ILM has utilized high level graphics languages for quite some time. NVIDIA’s high level language for GPU programming is an important step in achieving an NVIDIA hardware-based development environment suitable for ILM’s production pipeline.”

Andy Hendrickson

Senior Technology Officer

Industrial Light + Magic

Wow! I guess I’d better sum this all up.

If you’re a gamer this simply means that a lot of cool games will be coming your way soon; better still, if you’re a games / graphics programmer, life just got a whole lot easier!

Note: portions of this article are FICTIONAL. The actual NVIDIA presentation is real. The two photos are fakes. John Carmack really was quoted, but it was about NVIDIA’s upcoming NV30 and I’m still under NDA and cannot publish his quote at this time.

Thanks to Mike Chambers, of nV News, for use of his original photos. You can read his account of his visit to NVIDIA here.

NVIDIA has provided the following informational links on the Cg Compiler:

For more information about the NVIDIA Cg solutions, please visit the NVIDIA Web site at: www.nvidia.com/view.asp?IO=cg.

Developers interested in learning more about the NVIDIA Cg Compiler, should visit: http://developer.nvidia.com/cg.

For a community perspective on Cg and shaders, please visit the Web site: www.cgshaders.org.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
