INTRODUCTION

It’s once again one of those special times of the year that all computer gaming and technical enthusiasts live for: the arrival of new graphics solutions. Every one of these tumultuous occasions is preceded by a certain amount of truth about the product(s) in question, coupled with vast amounts of rumor, supposition and hype. We at Bjorn3D have been around for over ten years and have seen a number of these events come and go, some obviously more memorable than others. Today marks the launch of what could be one of the most memorable of these occasions: NVIDIA’s radically new 200 series of graphics solutions.

Bjorn3D will kick off our ongoing coverage of this gala event with a detailed preview of the flagship of the line, the GeForce GTX 280, provided to us by XFX. For clarity’s sake, note that we said “ongoing” and “preview”. As with the debut of any new generation of graphics product, some portion of the manufacturer’s final vision for the device is almost always immature or incomplete, and the GTX 280 is certainly no exception. In this preview we intend to go into detail about what’s currently available and what you can expect in the future from the NVIDIA 200 series of graphics products. For the sake of this introduction, suffice it to say that the features available for today’s preview are a drop in the bucket compared to what will be available shortly after launch day. Thankfully, all of the missing features are software controlled and the final versions are nearing release.

SPECIFICATIONS

| NVIDIA GTX200 GPU | GTX 280 | GTX 260 | 9800 GTX |
|---|---|---|---|
| Fabrication Process | 65nm | 65nm | 65nm |
| Transistor Count | 1.4 Billion | 1.4 Billion | 754 Million |
| Core Clock Rate | 602 MHz | 576 MHz | 675 MHz |
| SP Clock Rate | 1,296 MHz | 1,242 MHz | 1,688 MHz |
| Streaming Processors | 240 | 192 | 128 |
| Memory Clock | 1,107 MHz (2,214 MHz) | 999 MHz (1,998 MHz) | 1,100 MHz (2,200 MHz) |
| Memory Interface | 512-bit | 448-bit | 256-bit |
| Memory Bandwidth | 141.7 GB/s | 111.9 GB/s | 70.4 GB/s |
| Memory Size | 1024 MB | 896 MB | 512 MB |
| ROPs | 32 | 28 | 16 |
| Texture Filtering Units | 80 | 64 | 64 |
| Texture Filtering Rate | 48.2 GigaTexels/sec | 36.9 GigaTexels/sec | 43.2 GigaTexels/sec |
| RAMDACs | 400 MHz | 400 MHz | 400 MHz |
| Bus Type | PCI-E 2.0 | PCI-E 2.0 | PCI-E 2.0 |
| Power Connectors | 1 x 8-pin & 1 x 6-pin | 2 x 6-pin | 2 x 6-pin |
| Max Board Power | 236 watts | 182 watts | 156 watts |
| GPU Thermal Threshold | 105°C | 105°C | 105°C |
| Recommended PSU | 550 watt (40A on 12v) | 500 watt (36A on 12v) | – |
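The Memory Bandwidth row follows directly from two other rows: peak bandwidth is the effective (double-data-rate) memory clock shown in parentheses multiplied by the bus width in bytes. The Python sketch below reproduces the table's figures under that reading; the function and dictionary names are our own, and 1 GB/s is taken as 10^9 bytes per second.

```python
# Sketch: recompute the table's Memory Bandwidth row from the
# effective memory clock (the figure in parentheses) and the bus width.
# Assumption: GB/s means 10**9 bytes per second.

def memory_bandwidth_gbs(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s."""
    bytes_per_transfer = bus_width_bits / 8            # 512-bit bus -> 64 bytes
    transfers_per_second = effective_mhz * 1_000_000   # MHz -> transfers per second
    return transfers_per_second * bytes_per_transfer / 1e9

# (effective memory clock in MHz, bus width in bits) taken from the table
CARDS = {
    "GTX 280": (2214, 512),
    "GTX 260": (1998, 448),
    "9800 GTX": (2200, 256),
}

if __name__ == "__main__":
    for name, (clock, width) in CARDS.items():
        print(f"{name}: {memory_bandwidth_gbs(clock, width):.1f} GB/s")
```

Running it prints 141.7, 111.9 and 70.4 GB/s, matching the table, which shows that the GTX 280's lead in bandwidth comes mostly from its doubled bus width rather than from a faster memory clock.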

FEATURES

In past generations of GPUs, true-to-life real-time images could be delivered, but in complex scenes they often caused frame rates to drop to unplayable levels. Thanks to the sheer increase in shader processing power of the GeForce GTX 200 GPUs and NVIDIA’s acquisition of the PhysX technology, many new features can now be offered without causing horrific slowdowns. Some of these features include:

- Convincing facial character animation

- Multiple ultra-high polygon characters in complex environments

- Advanced volumetric effects (smoke, fog, mist, etc.)

- Fluid and cloth simulation

- Fully simulated physical effects, e.g., live debris, explosions and fires

- Physical weather effects, e.g., accumulating snow and water, sandstorms, overheating, freezing and more

- Better lighting for dramatic and spectacular effect, including ambient occlusion, global illumination, soft shadows, indirect lighting and accurate reflections

Realistic warrior from NVIDIA “Medusa” demo

Extreme HD

Early adopters of new standards will be pleased to hear that the GTX 200 GPUs support the new DisplayPort interface. Through DisplayPort, the GTX 200 GPUs allow resolutions up to 2560 x 1600 with 10-bit color depth, for unprecedented output quality. While prior-generation GPUs included internal 10-bit processing, they could only output 8-bit color per component (RGB). The GeForce GTX 200 GPUs permit both internal 10-bit processing and 10-bit color output.
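To put the 8-bit versus 10-bit difference in numbers: each extra bit doubles the number of shades per color channel. The short Python sketch below counts shades and total RGB colors and, as a purely illustrative assumption, how many pixels each shade step would span in a full black-to-white gradient across the 2560-pixel width of a 2560 x 1600 screen (wider steps are what show up as visible banding).

```python
# Sketch: compare 8-bit and 10-bit per-channel color output.
# Assumption (illustrative only): a full-range horizontal gradient
# spread across the 2560-pixel width of a 2560 x 1600 display.

def shades_per_channel(bits: int) -> int:
    return 2 ** bits

def total_rgb_colors(bits_per_channel: int) -> int:
    return shades_per_channel(bits_per_channel) ** 3  # R, G and B channels

def pixels_per_step(width_px: int, bits: int) -> float:
    """Width in pixels of each shade band in a full-range gradient."""
    return width_px / shades_per_channel(bits)

if __name__ == "__main__":
    for bits in (8, 10):
        print(f"{bits}-bit: {shades_per_channel(bits)} shades/channel, "
              f"{total_rgb_colors(bits):,} colors, "
              f"{pixels_per_step(2560, bits)} px per step")
```

Under this assumption, 8-bit output gives 256 shades per channel (about 16.7 million colors) with 10-pixel-wide bands, while 10-bit output gives 1,024 shades (about 1.07 billion colors) with bands only 2.5 pixels wide.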

GeForce GTX 200 GPU Architecture

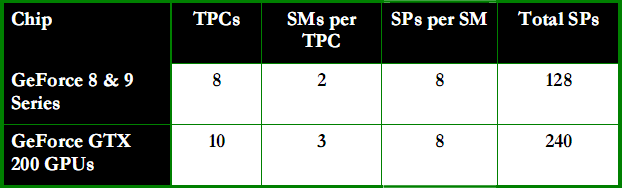

The GeForce 8 and 9 Series GPUs were the first to be based on a Scalable Processor Array (SPA) framework. With the GTX 200 GPUs, NVIDIA has upped the ante in a big way: the second-generation architecture is a re-engineered, enhanced and extended SPA design.

The SPA architecture consists of a number of TPCs (Texture Processing Clusters in graphics processing mode and Thread Processing Clusters in parallel computing mode). Each TPC comprises a number of streaming multiprocessors (SMs), and each SM contains eight processor cores (also called streaming processors (SPs) or thread processors). Every SM also includes texture filtering processors that are used in graphics processing and can also be used for various filtering operations in computing mode, such as filtering images as they are zoomed in and out.

Number of GPU processing cores

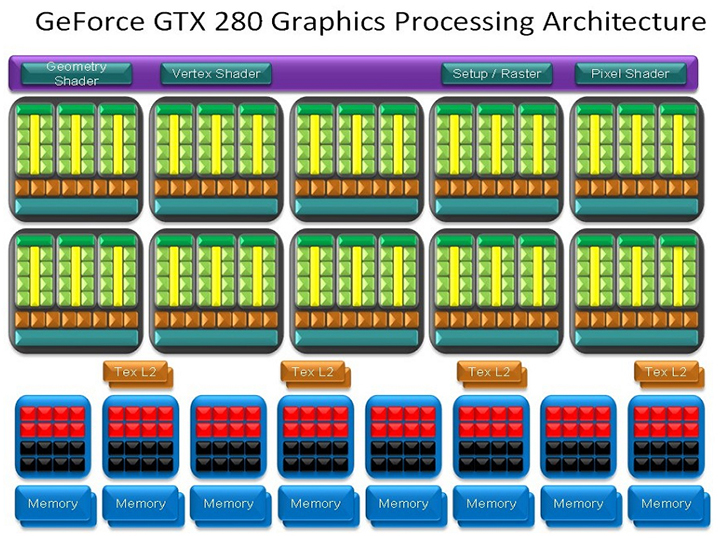

As mentioned earlier, the GeForce GTX 200 GPUs include two different architectural personalities: graphics and computing. The image below represents the GeForce GTX 280 in graphics mode. At the top are the thread dispatch logic and the setup and raster units. The ten TPCs each include three SMs, and each SM has eight processing cores, for a total of 240 scalar processing cores. The ROPs (raster operations processors) and memory interface units are located at the bottom.
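The 240-core total is simple multiplication through the hierarchy described above (TPC, then SM, then core). This small Python model is our own illustration, not NVIDIA code. The GTX 260 check assumes the same three-SM, eight-core building block, in which case the 192 streaming processors listed in the specification table correspond to eight active TPCs.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SpaLayout:
    """Illustrative model of the GTX 200 processor hierarchy."""
    tpcs: int
    sms_per_tpc: int = 3   # three SMs per TPC on GTX 200 GPUs
    cores_per_sm: int = 8  # eight SPs per SM

    @property
    def sms(self) -> int:
        return self.tpcs * self.sms_per_tpc

    @property
    def cores(self) -> int:
        return self.sms * self.cores_per_sm

def tpcs_for(cores: int, sms_per_tpc: int = 3, cores_per_sm: int = 8) -> int:
    """Invert the hierarchy: how many TPCs a given SP count implies."""
    per_tpc = sms_per_tpc * cores_per_sm
    if cores % per_tpc:
        raise ValueError("core count is not a whole number of TPCs")
    return cores // per_tpc

if __name__ == "__main__":
    gtx280 = SpaLayout(tpcs=10)
    print(gtx280.sms, gtx280.cores)  # 30 240
    print(tpcs_for(192))             # 8 (GTX 260, under the stated assumption)
```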

GeForce GTX 280 Graphics Processing Architecture

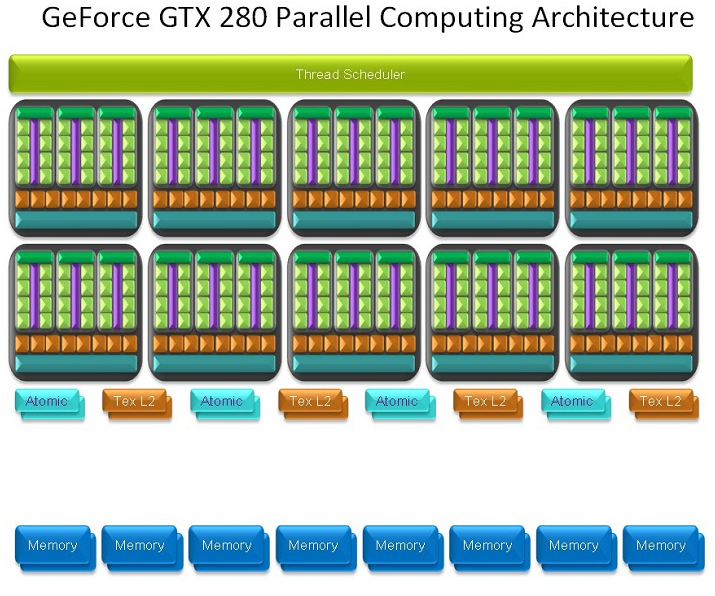

The next image depicts a high-level view of the GeForce GTX 280 GPU in parallel computing mode. A hardware-based thread scheduler at the top manages scheduling threads across the TPCs. In compute mode the architecture includes texture caches and memory interface units. The texture caches are used to combine memory accesses for more efficient and higher bandwidth memory read-write operations.

GeForce GTX 280 Computing Architecture

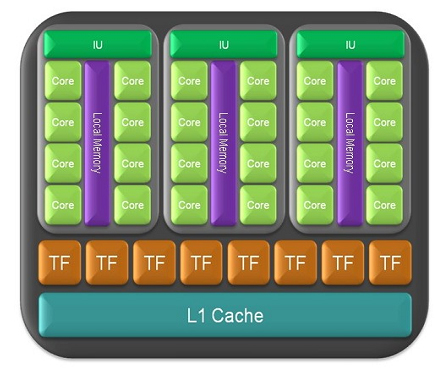

Here is a close-up of a TPC in compute mode. Note the shared local memory in each of the three SMs. This allows the eight processing cores of an SM to share data with the other processing cores in the same SM without having to read from or write to an external memory subsystem, which greatly increases computational speed and efficiency for a variety of algorithms.
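A classic example of an algorithm that benefits from per-SM shared memory is a parallel tree reduction (summing a set of values). The Python sketch below only simulates the idea: a list stands in for the SM's shared memory and eight lanes stand in for its eight cores. On real hardware each pass would run in parallel and be followed by a barrier; the function name is our own.

```python
def sm_tree_reduce(values):
    """Simulate eight SM cores summing values through shared memory.

    Returns (total, passes). Each pass halves the number of active
    lanes, so eight values need log2(8) = 3 passes.
    """
    shared = list(values)  # stands in for the SM's on-chip shared memory
    n = len(shared)
    if n == 0 or n & (n - 1):
        raise ValueError("this sketch assumes a power-of-two lane count")
    stride, passes = n // 2, 0
    while stride > 0:
        # Lanes below `stride` add in a partner's partial sum. Writes only
        # touch indices < stride and partner reads are at indices >= stride,
        # so this sequential loop gives the same result as a parallel pass.
        for lane in range(stride):
            shared[lane] += shared[lane + stride]
        stride //= 2
        passes += 1  # a barrier would separate passes on real hardware
    return shared[0], passes

if __name__ == "__main__":
    print(sm_tree_reduce([1, 2, 3, 4, 5, 6, 7, 8]))  # (36, 3)
```

Every intermediate sum stays in the shared buffer, which is exactly the traffic that would otherwise have to go out to the external memory subsystem.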

TPC in compute mode

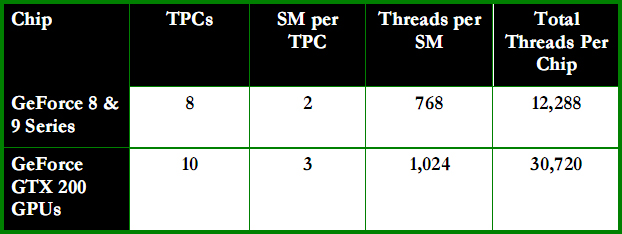

In addition to all of the aforementioned architectural changes and upgrades, the GeForce GTX 200 GPUs can support over thirty thousand threads in flight. Through hardware thread scheduling, all processing cores attain nearly 100% utilization. The GPU architecture is also latency-tolerant: if a particular thread is waiting for memory access, the GPU can perform a zero-cost, hardware-based context switch and immediately move on to another thread.
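Both the thread count and the latency-hiding claim can be sketched with simple arithmetic. Assuming each of the 30 SMs (ten TPCs of three SMs) can hold 1,024 resident threads, which is our assumption based on CUDA's documented limit for this GPU generation, the total comes to 30,720. The utilization function is a deliberately simplified, hypothetical model: each warp of 32 threads computes for a few cycles, then waits on memory, and the scheduler issues from any other ready warp in the meantime. The cycle counts in the example are made up for illustration.

```python
import math

SMS = 30               # 10 TPCs x 3 SMs
THREADS_PER_SM = 1024  # assumption: per-SM residency limit for this GPU generation
WARP_SIZE = 32         # threads scheduled together as one warp

def threads_in_flight(sms: int = SMS, per_sm: int = THREADS_PER_SM) -> int:
    return sms * per_sm

def utilization(resident_warps: int, compute_cycles: int, memory_latency: int) -> float:
    """Fraction of cycles an SM can issue work in a simple latency-hiding model.

    Each warp alternates `compute_cycles` of work with a `memory_latency`
    stall; while one warp stalls, the scheduler switches to another.
    """
    demand = resident_warps * compute_cycles
    period = compute_cycles + memory_latency
    return min(1.0, demand / period)

def warps_to_hide_latency(compute_cycles: int, memory_latency: int) -> int:
    return math.ceil((compute_cycles + memory_latency) / compute_cycles)

if __name__ == "__main__":
    print(threads_in_flight())                  # 30720
    max_warps = THREADS_PER_SM // WARP_SIZE     # 32 warps per SM
    # hypothetical workload: 20 cycles of math per 400-cycle memory wait
    print(warps_to_hide_latency(20, 400))       # 21
    print(utilization(max_warps, 20, 400))      # 1.0
    print(round(utilization(8, 20, 400), 2))    # 0.38
```

In this toy model, a fully loaded SM keeps its cores busy even with long memory stalls, while a lightly loaded one sits idle most of the time, which is why a large pool of threads in flight matters.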

Thread count

Double Precision Support

A long-requested addition has finally made its way into the new GTX 200 GPUs: double-precision, 64-bit floating point computation support. While gamers won’t find much use for this addition, high-end scientific, engineering and financial computing applications that require very high accuracy will put this feature to good use. Each SM (Streaming Multiprocessor) incorporates a double-precision 64-bit floating point math unit, for a total of 30 double-precision 64-bit processing cores. The overall double-precision performance of all ten TPCs (Thread Processing Clusters) of a GeForce GTX 200 GPU is roughly equivalent to that of an eight-core Xeon CPU, yielding up to 90 gigaflops.
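Peak double-precision throughput is usually estimated as units x operations per clock x clock rate. The Python sketch below assumes each of the 30 double-precision units completes one fused multiply-add (counted as two floating point operations) per shader clock; that assumption is ours, not a figure from the text. At the GTX 280's 1,296 MHz shader clock from the specification table it gives roughly 77.8 gigaflops, so the quoted “up to 90 gigaflops” would correspond to a shader clock of about 1.5 GHz under the same assumption.

```python
DP_UNITS = 30  # one double-precision unit per SM, 30 SMs

def peak_dp_gflops(shader_mhz: float, units: int = DP_UNITS,
                   flops_per_clock: int = 2) -> float:
    """Peak DP GFLOPS, assuming one fused multiply-add (2 flops) per unit per clock."""
    return units * flops_per_clock * shader_mhz / 1000

def shader_mhz_for(target_gflops: float, units: int = DP_UNITS,
                   flops_per_clock: int = 2) -> float:
    """Shader clock needed to reach a target under the same assumption."""
    return target_gflops * 1000 / (units * flops_per_clock)

if __name__ == "__main__":
    print(f"{peak_dp_gflops(1296):.1f} GFLOPS at 1,296 MHz")  # 77.8
    print(f"{shader_mhz_for(90):.0f} MHz for 90 GFLOPS")       # 1500
```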

Improved Texturing Performance

The eight TPCs of the GeForce 8800 GTX allowed for 64 pixels per clock of texture filtering, 32 pixels per clock of texture addressing, 32 pixels per clock of 2x anisotropic bilinear filtering, or 32 bilinear-filtered pixels per clock. Subsequent GeForce 8 and 9 Series GPUs balance texture addressing and filtering.

- For example, the GeForce 9800 GTX can address and filter 64 pixels per clock, supporting 64 bilinear-filtered pixels per clock.

GeForce GTX 200 GPUs also provide balanced texture addressing and filtering. Each of the ten TPCs includes a dual-quad texture unit capable of addressing and filtering eight bilinear pixels per clock, four 2:1 anisotropic filtered pixels per clock, or four 16-bit floating point bilinear-filtered pixels per clock. Total bilinear texture addressing and filtering capability for an entire high-end GeForce GTX 200 GPU is 80 pixels per clock.

The GeForce GTX 200 GPU also employs a more efficient scheduler, allowing the chip to attain close to theoretical peak performance in texture filtering. In real-world measurements, it is 22% more efficient than the GeForce 9 Series.
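The 80-pixel figure, and the 48.2 GigaTexels/sec rate in the specification table, follow from the per-TPC numbers above. This Python sketch multiplies ten TPCs by eight bilinear pixels per clock, then by the 602 MHz core clock, assuming the texture units run at the core clock. The same formula with the 9800 GTX's 64 pixels per clock and 675 MHz core clock reproduces its 43.2 GigaTexels/sec entry. The 22% scheduler efficiency gain is a measured figure and is not modeled here.

```python
def bilinear_pixels_per_clock(tpcs: int, pixels_per_tpc: int = 8) -> int:
    """Each GTX 200 TPC's dual-quad texture unit filters 8 bilinear pixels/clock."""
    return tpcs * pixels_per_tpc

def texture_rate_gtexels(pixels_per_clock: int, core_mhz: float) -> float:
    """Theoretical peak bilinear rate in GigaTexels/sec (assumes core-clock texture units)."""
    return pixels_per_clock * core_mhz / 1000

if __name__ == "__main__":
    gtx280 = bilinear_pixels_per_clock(10)                     # 80 pixels per clock
    print(gtx280, f"{texture_rate_gtexels(gtx280, 602):.1f}")  # 80 48.2
    print(f"{texture_rate_gtexels(64, 675):.1f}")              # 9800 GTX: 43.2
```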

Texture filtering rates

Power Management Enhancements

GeForce GTX 200 GPUs include a more dynamic and flexible power management architecture than past generation NVIDIA GPUs. Four different performance / power modes are employed:

- Idle/2D power mode (approx. 25 watts)

- Blu-ray playback mode (approx. 35 watts)

- Full 3D performance mode (236 watts worst case)

- HybridPower mode (0 watts effective)

For 3D graphics-intensive applications, the NVIDIA driver can seamlessly switch between the power modes based on GPU utilization. Each of the new GeForce GTX 200 GPUs integrates utilization monitors that constantly check the amount of traffic occurring inside the GPU. Based on the level of utilization reported by these monitors, the GPU driver can dynamically set the appropriate performance mode.

The GPU also has clock-gating circuitry, which effectively ‘shuts down’ blocks of the GPU that are not being used at a particular time (where time is measured in milliseconds), further reducing power during periods of inactivity.

This dynamic power range gives you incredible power efficiency across a full range of applications (gaming, video playback, web surfing, etc.).
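The text does not say how the driver maps utilization readings to modes, so the Python sketch below is only a hypothetical policy: the 30% threshold, the video-decode flag and the function name are all our own assumptions, and the wattages are the approximate figures listed above. HybridPower is left out of this sketch.

```python
from enum import Enum

class PowerMode(Enum):
    # Values are the approximate board power figures (watts) listed above.
    IDLE_2D = 25
    BLU_RAY_PLAYBACK = 35
    FULL_3D = 236  # worst case

# Hypothetical threshold; the driver's real heuristics are not described in this preview.
FULL_3D_THRESHOLD = 0.30

def select_mode(utilization: float, video_decode_active: bool) -> PowerMode:
    """Pick a power mode from a utilization-monitor reading (0.0 to 1.0)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    if utilization >= FULL_3D_THRESHOLD:
        return PowerMode.FULL_3D
    if video_decode_active:
        return PowerMode.BLU_RAY_PLAYBACK
    return PowerMode.IDLE_2D

if __name__ == "__main__":
    for reading, video in [(0.05, False), (0.10, True), (0.85, False)]:
        mode = select_mode(reading, video)
        print(reading, video, mode.name, f"~{mode.value} W")
```

A real driver would also need some hysteresis so that brief spikes in utilization do not bounce the card between modes; this sketch omits that for clarity.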

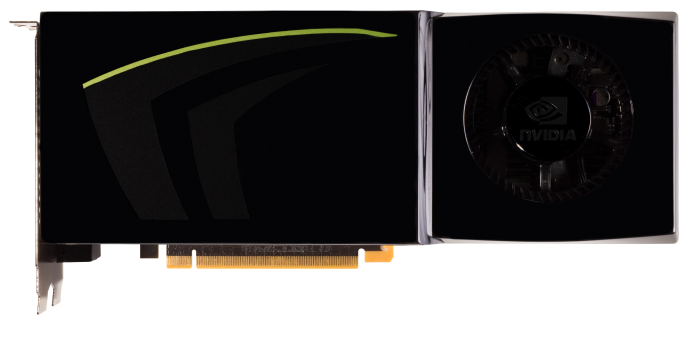

THE CARD & IMPRESSIONS

After removing the XFX GeForce GTX 280 from its protective packaging and taking a few minutes to peruse its aesthetics, our first impression was: Where’s the logo? Instead of some exotic, sexy siren or highly invigorating action scene, there is only the “GTX” emblem in green and black. It’s not quite as bad as the all-black reference card, but it’s still boring. Well, as we’ve repeatedly stated, it doesn’t matter what a card looks like if it performs as well as or better than advertised.

XFX GeForce GTX 280

NVIDIA GeForce GTX 280 Reference Card

One very noteworthy thing, in our humble opinion, is that all of the GTX 280’s components are now completely enclosed. This change should greatly enhance cooling and prevent incidental component damage during installation or removal of the card. We’ll have to give NVIDIA credit as well for opening up the area around the power inputs; we’ve heard reports from GX2 owners who had to use a Dremel tool to make their PCI-e power adapters fit properly. The placement of the power inputs is debatable, though: on top, where they are currently located, they can interfere with large, deep fans mounted on the side of your case, while placing them on the end of the card can interfere with hard drive placement in smaller cases.

Power Pin Placement

The dimensions of the GTX 280 are exactly the same as those of the 8800 GTX, 9800 GTX, and all other cards housed in the longer NVIDIA-designed chassis. We keep hoping for flagship, ultra high-end performance in a budget-sized enclosure. The top, rear and sides of the GTX 280 all have excellent venting for improved air intake, which should help the traditional squirrel-cage fan cool this behemoth.

XFX GTX 280 Rear View

Another smart design change with the GTX 280 is the cover over the SLI connectors on top of the card, which prevents incidental damage during installation and removal. The plastic cover is easily removed and reinstalled. There has been no change to the card’s rear bracket; it is identical to that of all dual-slot cards. Two DVI ports remain standard, and an HDMI cable is also included.

XFX GTX 280 Rear Bracket

Contents & Bundled Accessories

Many graphics card manufacturers have decreased the bundled accessories that they offer with their graphics solutions. Back in the day it was not unusual to get everything supplied today plus as many as two full-version games. The accessories included with the XFX GeForce GTX 280 are not quite as lavish as they once were, but this group is certainly a vast improvement over the minimalist approach used with base model cards and provides everything you need and then some. Probably the most disappointing omission from the bundle is a Molex to PCI-e 12V 8-pin power adapter. A number of consumers, enthusiasts included, still don’t own a power supply equipped with an 8-pin PCI-e connector, and the inclusion of this $5.00 part would have helped many prospective buyers.

- 1 – XFX Model GX-280N-F9 GTX 280 Graphics Card

- 1 – VGA to DVI Adapter

- 1 – HDTV (YPbPr) Dongle

- 1 – Molex to PCI-e 12V 6-pin power adapter

- 1 – HDMI SPDIF cable

- 1 – Quick Start Manual

- 1 – “I’M GAMING DO NOT DISTURB” Door Sign

- 1 – Driver CD

- 1 – Assassin’s Creed (full version) with DX10 patch

WHAT’S HERE NOW

With every new product release comes an evolutionary process. In the case of NVIDIA’s GTX 280, parts of its feature set could not be tested in this preview because the drivers are not quite ready for prime time. In fact, the GTX 280 hardware itself is currently the only item that’s not in BETA. The feature set described above is not limited to the 200 series of cards: many of the features will also be available on CUDA-compatible cards, namely many of the 8 and 9 series cards. Obviously we don’t expect the performance that we see with the new 200 series, but significant improvements should still be seen.

Most of you are aware that NVIDIA has acquired Ageia and now owns the PhysX technology, which is already incorporated into well over 100 games currently on the market. PhysX will be available on all CUDA-capable cards once the drivers are released. The same goes for the hugely improved Folding @ Home client, which we’ll discuss later in this preview.

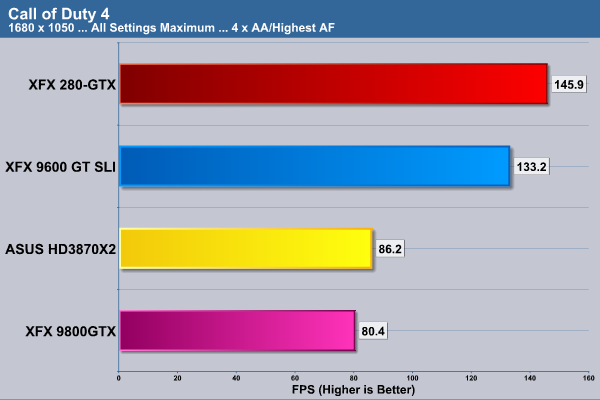

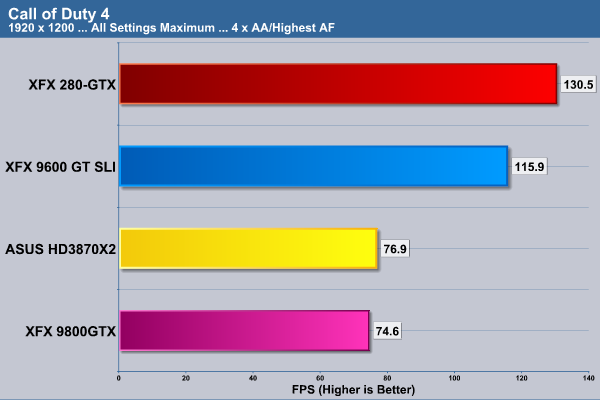

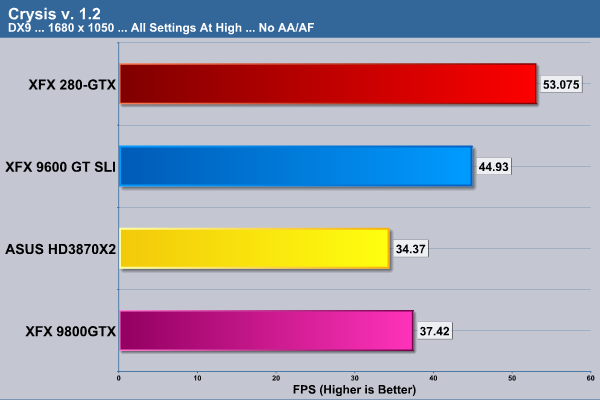

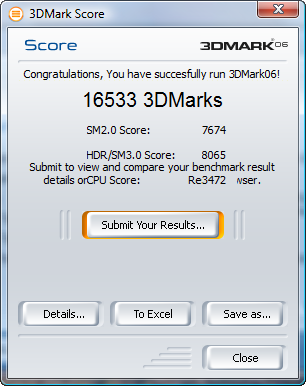

TESTING REGIME

We will run the benchmarks listed below with each graphics card set at default speed. Our E8500 will be overclocked to 3.5 GHz to overcome any bottleneck the CPU might present. 3DMark06 will be run at default settings, as will 3DMark Vantage, the latter in Performance Mode. All of our gaming tests will be run at 1680 x 1050 and 1920 x 1200 with 4x antialiasing and 16x anisotropic filtering. For those games that offer both DX9 and DX10 rendering, we’ll test both. Each test will be run individually three times in succession, and the average of the three results will be calculated and reported.

| Test Platforms | |
|---|---|
| Processor | Intel Core 2 Duo E8500 at 3.5 GHz |
| Motherboard | ASUS P5E3 Premium WIFI-AP @n, BIOS 0503; XFX 790i for SLI |
| Memory | 2GB Patriot Viking DDR3 PC3-1500, running at 7-7-7-24 |
| Drive(s) | 3 x Seagate 1 TB Barracuda ES.2 SATA Drives |
| Graphics | Test Card #1: XFX GeForce® GTX 280 running ForceWare 177.34; Test Card #2: XFX GeForce® 9800 GTX running ForceWare 175.16; Test Card #3: 2 x XFX GeForce® 9600 GT in SLI running ForceWare 175.16; Test Card #4: ASUS HD3870X2 running Catalyst 8.5 drivers |
| Cooling | Noctua NH-U12P |
| Power Supply | Tagan BZ800, 800 Watt |
| Display | Dell 2407 FPW |
| Mouse & Keyboard | Microsoft Wireless Laser 6000 v.2 Mouse & Keyboard |
| Sound Card | Onboard ADI® AD1988B 8-Channel High Definition Audio CODEC |
| KVM Switch | ATEN CS1782 USB 2.0 DVI KVM Switch |
| Case | Lian Li PC-A17 |
| Operating System | Windows Vista Ultimate 64-bit SP1 |

| Synthetic Benchmarks & Games |
|---|
| 3DMark06 v. 1.10 |
| 3DMark Vantage v. 1.01 |
| Call of Duty 4 |
| Company of Heroes v. 1.71 DX 9 & 10 |
| Crysis v. 1.2 DX 9 & 10 |
| World in Conflict DX 9 & 10 |
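For readers who want to reproduce the regime, the Python sketch below enumerates the game runs described in the testing paragraph and averages three passes per configuration. The game list comes from the table above; Call of Duty 4 is treated as DX9 only because the table lists no DX10 path for it. The function names are our own, and the sample frame rates in the usage lines are invented placeholders, not our results.

```python
from itertools import product
from statistics import mean

RESOLUTIONS = ("1680x1050", "1920x1200")  # all game runs at 4x AA / 16x AF
RUNS_PER_CONFIG = 3
GAMES = {
    "Call of Duty 4": ("DX9",),
    "Company of Heroes v. 1.71": ("DX9", "DX10"),
    "Crysis v. 1.2": ("DX9", "DX10"),
    "World in Conflict": ("DX9", "DX10"),
}

def build_test_matrix():
    """Every (game, API, resolution) combination to benchmark on each card."""
    return [
        (game, api, res)
        for game, apis in GAMES.items()
        for api, res in product(apis, RESOLUTIONS)
    ]

def reported_fps(runs):
    """Average of exactly three successive runs, rounded to one decimal."""
    if len(runs) != RUNS_PER_CONFIG:
        raise ValueError(f"expected {RUNS_PER_CONFIG} runs, got {len(runs)}")
    return round(mean(runs), 1)

if __name__ == "__main__":
    print(len(build_test_matrix()), "configurations per card")  # 14
    print(reported_fps([58.0, 61.0, 60.5]))  # 59.8 (placeholder data)
```

Seven game and API combinations at two resolutions give 14 configurations, or 42 timed runs per card once each is repeated three times.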

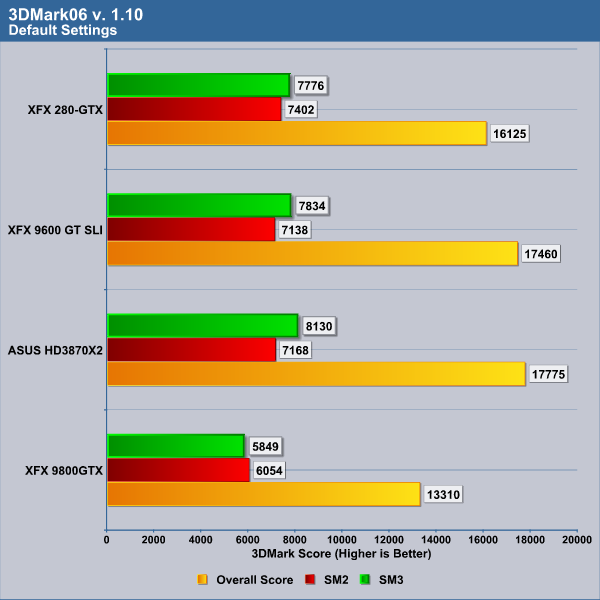

3DMARK06 v. 1.1.0

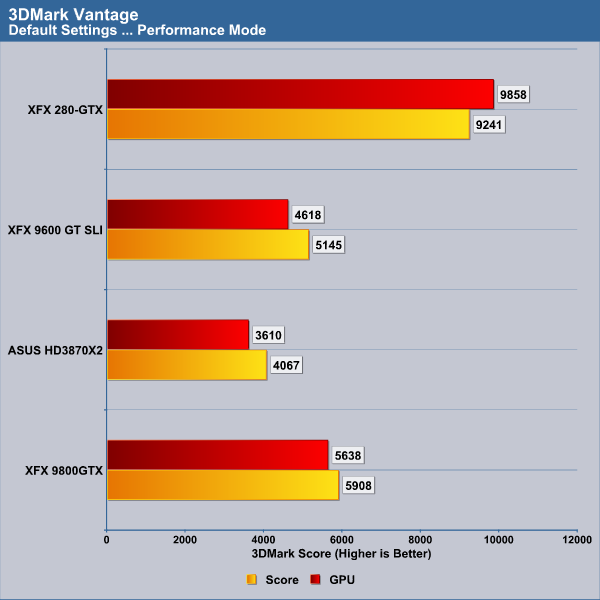

3DMark Vantage

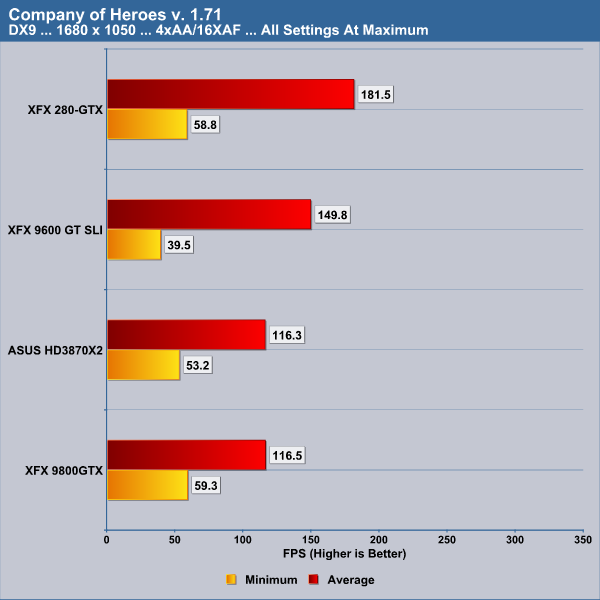

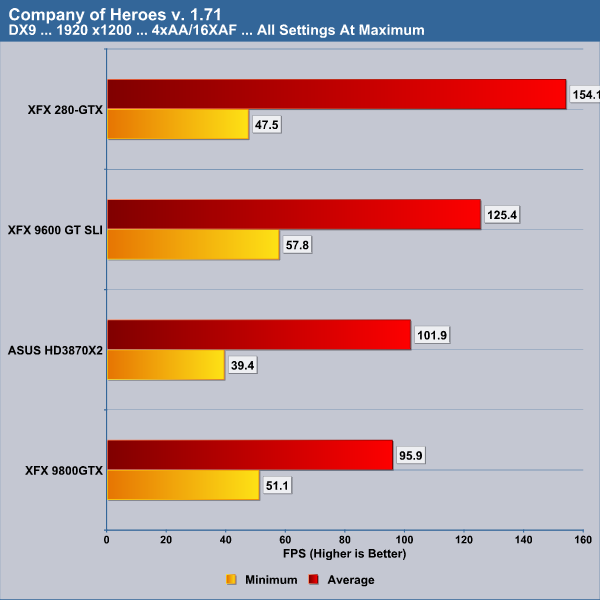

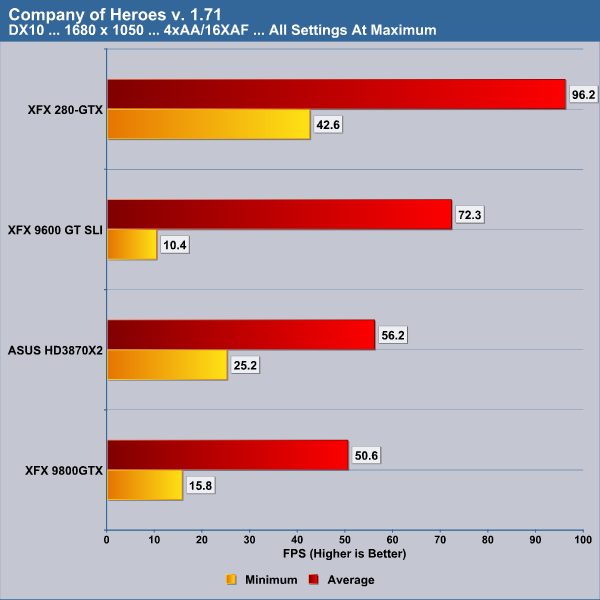

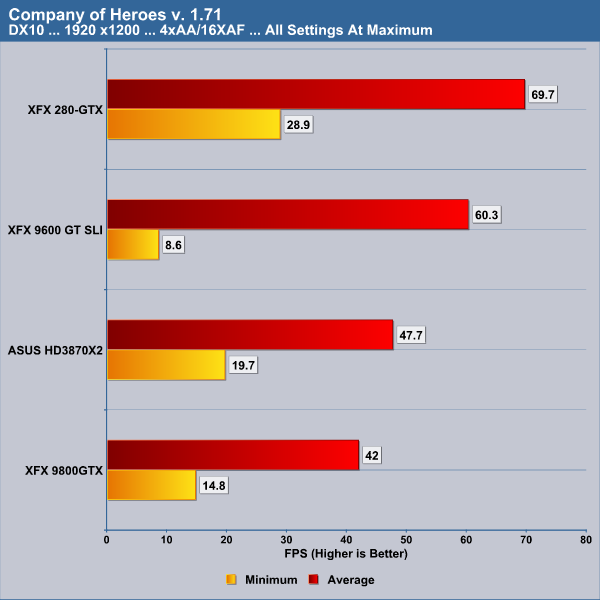

COMPANY OF HEROES v. 1.71

DX9

DX10

CALL OF DUTY 4

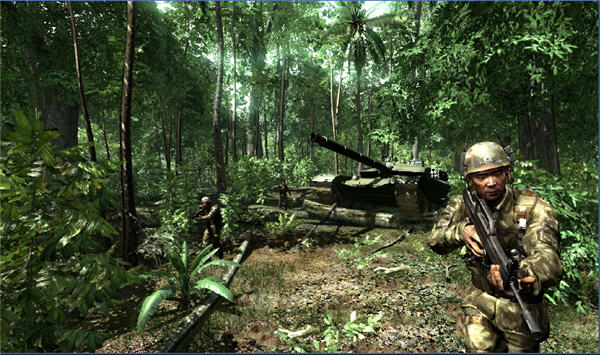

CRYSIS v. 1.1

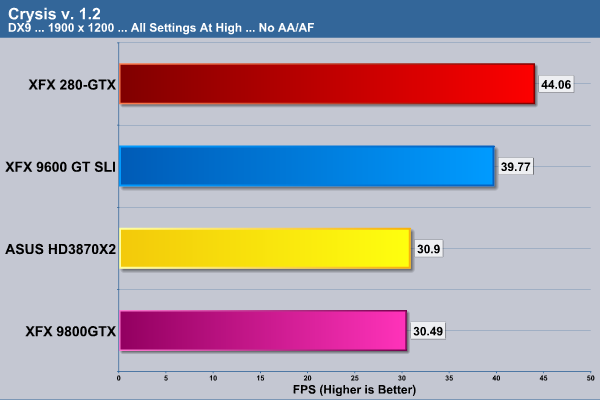

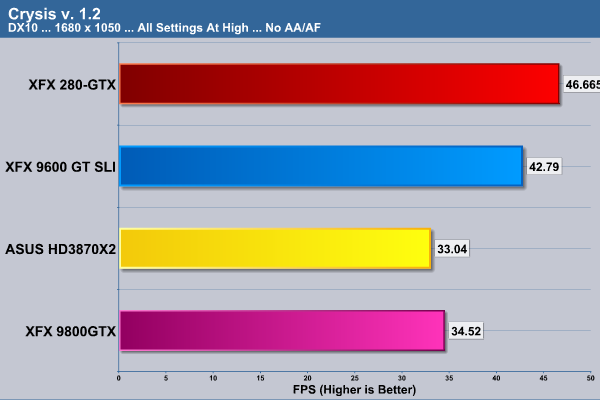

DX9

DX10

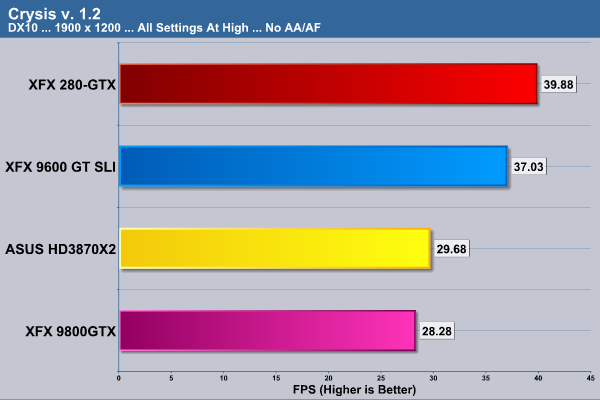

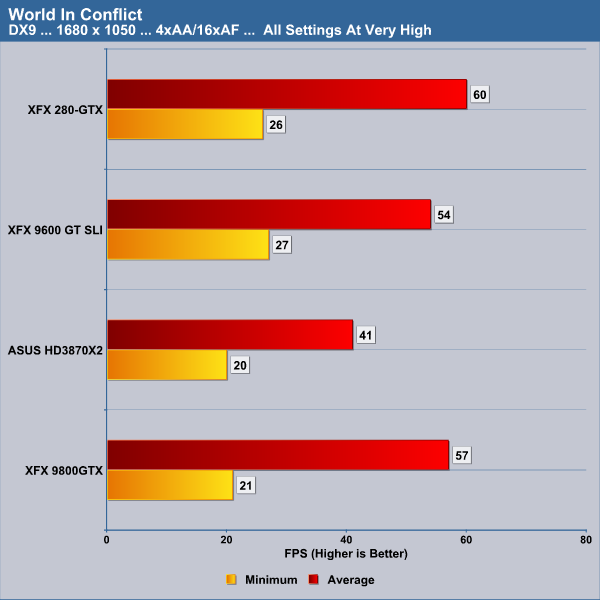

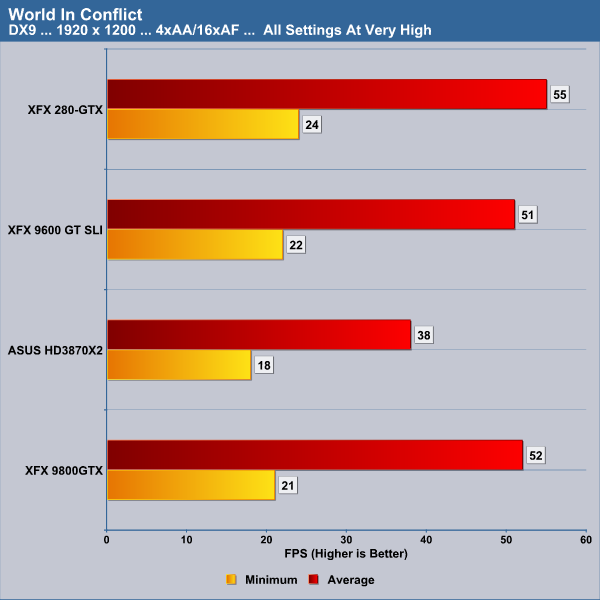

WORLD IN CONFLICT

DX9

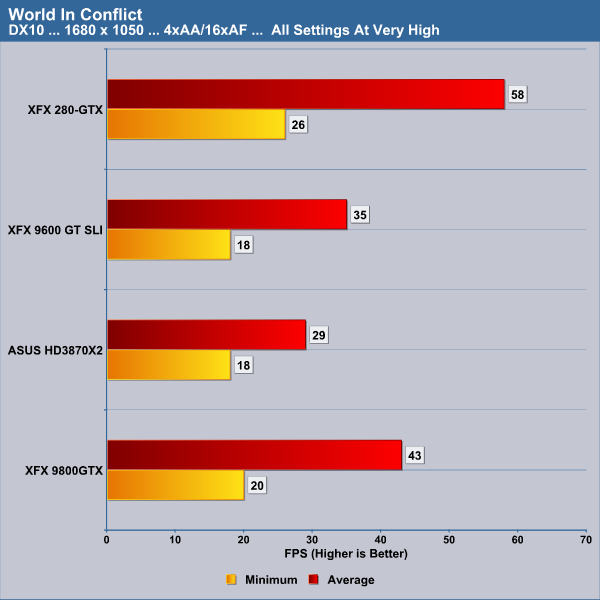

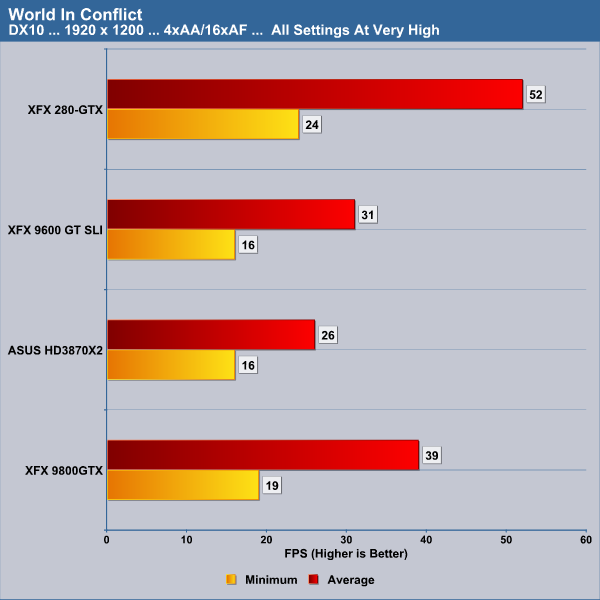

DX10

FOLDING @ HOME

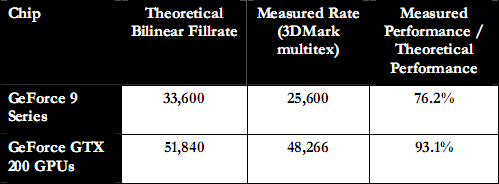

As most of you already know, Folding @ Home is one of the largest and most ambitious distributed computing projects. Folding @ Home uses the end user’s CPU or, most recently, GPU to process protein folding work units, which are sent back to Stanford University once processed. Protein folding on hundreds of thousands of computers makes this effort one of the world’s largest supercomputers, and it significantly aids researchers in pursuing possible cures for cancer, Alzheimer’s disease, Parkinson’s disease and many other serious health-related issues.

For our testing we have been using an NVIDIA reference GeForce GTX 280 for the last five days or so, Folding for the Bjorn3D Folding team #41608. A couple of weeks ago, knowing that the Beta GPU client would be coming, we took a quad-core QX9650 clocked at 3.8 GHz and Folded on all four cores for 24 hours. That score is shown along with the score of an ASUS HD3870 Folding for 48 hours and, finally, the score of our reference GTX 280 card.

To clarify, the work units Folding @ Home assigns are not all the same size and require varying lengths of time to complete. You are awarded points for your effort, with more points given for the lengthier work units. The graph below shows our results from the scenario described above.

We can see that NVIDIA has a Folding powerhouse on its hands. Just think what could be done if 1,000 people had GTX 280s and Folded on them 24×7. Of course, not everyone is going to Fold on their GPUs all the time, but over time many GeForce 200 series cards will be purchased, and shortly we will have Folding drivers for NVIDIA 8 and 9 series cards as well. Just think of how much this will help the scientific community. What if our efforts in this worthwhile project helped develop a cure for one or more of the diseases in which protein folding plays a key role?

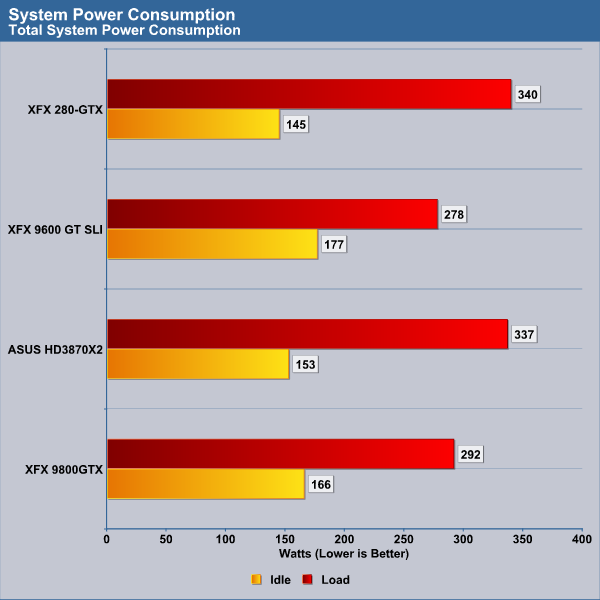

POWER CONSUMPTION

To measure power we used our Seasonic Power Angel, a nifty little tool that measures a variety of electrical values. We used a high-end UPS as our power source to eliminate any power spikes and to condition the current supplied to the test systems. The Seasonic Power Angel was placed in line between the UPS and the test system to measure power consumption in watts. We measured idle power after 15 minutes of complete inactivity on the desktop, with no processes running that demanded additional power. Load power was measured by recording the extended peak power readings across the entire benchmarking process.

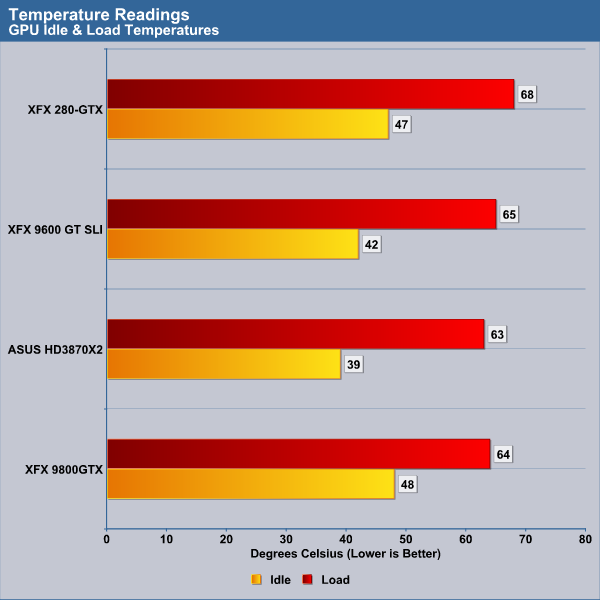

TEMPERATURE

The temperatures of the cards tested were measured using Riva Tuner v. 2.09 to ensure consistency and remove any bias that might be introduced by each card’s own utilities. The temperature measurements used the same process for measuring “idle” and “load” states as the power consumption measurements.

Even though the GTX 280’s cooling fan is set to a maximum of 40%, that appeared to be all that was necessary. Try as we might, we were unable to push the card past 70° Celsius with all the stress we put on it. Note as well that we are running this system in a very small case, the Lian Li PC-A17. The case has good airflow from three 120mm fans and one 140mm fan, but we are not using a dedicated fan directed at the graphics card for additional cooling.

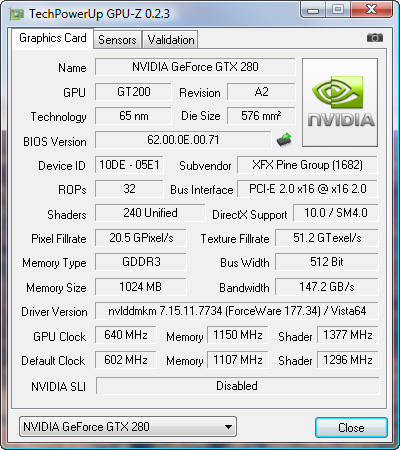

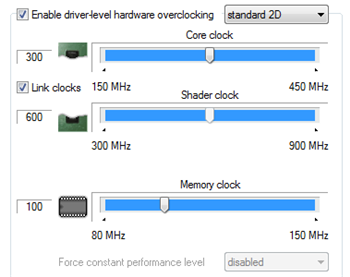

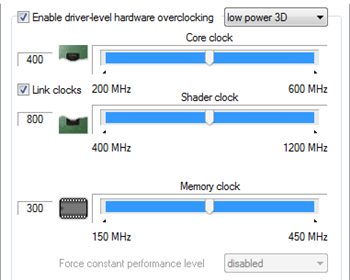

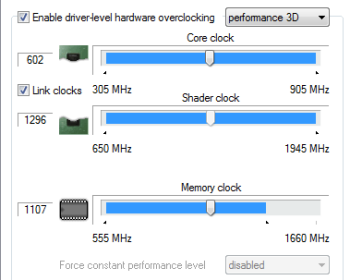

OVERCLOCKING

We at Bjorn3D are always looking for new and different ways to push the products we review to their limits. Before beginning our overclocking session, we thought it prudent to break out the latest version of Riva Tuner to establish a true starting point. Past experience has shown us that we don’t always see the exact factory specifications on every new card that we test.

Riva Tuner clock readings: Standard 2D, Low Power 3D, Performance 3D

We were able to push the GPU clock to 640 MHz, the shader clock to 1,377 MHz, and the memory clock to 1,150 MHz (2,300 MHz effective). These were the absolute highest settings we were able to attain where our sample of the GTX 280 was completely stable.

While these are not the 20+% overclocks we’ve been used to with the 8 and 9 series cards, we must remember that this card represents an entirely new generation of product with an entirely different architecture. For all intents and purposes we’re treading in uncharted territory, and only time will tell whether these overclocking results are good or not.
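For context on the “20+%” comparison, here is the arithmetic for our sample’s gains over stock, with stock clocks from the specification table and overclocked values from the results paragraph above. This is a plain Python calculation; the dictionary and function names are our own.

```python
STOCK_MHZ = {"core": 602, "shader": 1296, "memory": 1107}
OVERCLOCK_MHZ = {"core": 640, "shader": 1377, "memory": 1150}

def gain_percent(stock: float, overclocked: float) -> float:
    """Percentage increase of an overclocked value over stock."""
    return (overclocked - stock) / stock * 100

if __name__ == "__main__":
    for domain, stock in STOCK_MHZ.items():
        oc = OVERCLOCK_MHZ[domain]
        print(f"{domain}: {stock} -> {oc} MHz (+{gain_percent(stock, oc):.1f}%)")
```

The result is roughly a 6% gain on the core and shader clocks and just under 4% on the memory, well short of the 20% mark.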

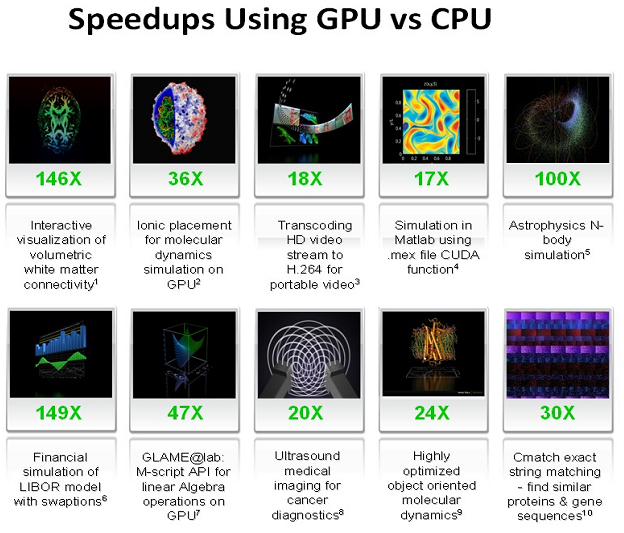

BADABOOM

NVIDIA included a cool little transcoding utility called BadaBoom that greatly speeds up the transcoding process. We quote from the user’s manual:

Today’s consumers are no longer satisfied viewing their media on a single, fixed display. Instead, they want their content available wherever their busy lifestyle takes them. To support different devices, the video and audio files need to be formatted differently in terms of compression used, resolution, and bitrates. Converting media streams between different formats to support multiple devices is a process known as transcoding. Transcoding is one of the most compute-intensive operations running on PCs today; transcoding a 2-hour movie, for example, can take many hours on a standard CPU. Furthermore, not only does the processing take a long time, but it consumes the computer’s processor resources for the entire time, rendering the machine unusable for other computing tasks.

Elemental Technologies’ BadaBOOM™ Media Converter takes a fundamentally different approach. Instead of performing transcoding on the CPU, it harnesses the massively parallel GPUs, which contain as many as 240 processing cores! By utilizing the GPU, BadaBOOM™ speeds up the conversion time by as much as 18 times compared to CPU-only implementations. Not only can BadaBOOM™ run much faster than CPU-based transcoders, but it also leaves the CPU available to process other tasks. Consumers can now painlessly convert video between formats, leaving more time to enjoy the video and eliminating the frustration of transcode delays.

We experimented with BadaBoom on Vista 64, knowing that it currently only supports Vista 32, because our entire review had been performed on Vista 64 and we didn’t want to change horses in the middle of the stream. Using the NVIDIA reference GTX 280 card, we were able to transcode a high-resolution 198MB MPG file to a 32MB lower-resolution MP4 file in 26.2 seconds. One of our staff was then able to copy the file to his iPod and play it. We find this phenomenal, as the same process would have taken several minutes using a CPU-based program. We weren’t as lucky with the XFX GTX 280, as the program refused to run no matter what we tried. Once universal support for all operating systems and all CUDA cards is established, this aspect of the product will revolutionize a process that was once painstaking and arduously slow.
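The reported numbers can be put in perspective with a little arithmetic. The Python sketch below uses the file sizes and time from our BadaBoom run; the “implied CPU time” line simply applies the manual’s “as much as 18 times” claim to our 26.2-second result, giving the longest CPU time consistent with that claim. It is an illustration, not a measurement, and the function name is our own.

```python
def transcode_stats(input_mb: float, output_mb: float, gpu_seconds: float,
                    claimed_speedup: float = 18.0) -> dict:
    """Summarize a transcode run; `claimed_speedup` is the manual's best case."""
    return {
        "input_mb_per_s": input_mb / gpu_seconds,
        "size_reduction": input_mb / output_mb,
        "implied_cpu_seconds": gpu_seconds * claimed_speedup,
    }

if __name__ == "__main__":
    stats = transcode_stats(198, 32, 26.2)
    print(f"{stats['input_mb_per_s']:.1f} MB/s of source video")            # 7.6
    print(f"{stats['size_reduction']:.1f}:1 size reduction")                # 6.2
    print(f"~{stats['implied_cpu_seconds'] / 60:.1f} min on a CPU at 18x")  # 7.9
```

That works out to about 7.6 MB of source video per second and a file roughly one sixth its original size; at the claimed 18x speedup the CPU would need close to eight minutes, in line with our “several minutes” estimate.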

NOISE

As we stated in the Temperature section, the fan on the XFX GeForce GTX 280 is set to operate at 40%, and its noise is very acceptable and unnoticeable even under heavy load. In fact, we never heard the fan ramp up above the audible level we experienced when operating in 2D mode. What we did notice while stressing the card was a low-pitched hum during any 3D performance operation. While not obnoxious or obtrusive, the hum presented itself immediately upon starting any graphics-intensive function and disappeared immediately upon exiting that function.

The noise is not at all reminiscent of a loud fan, a fan with a bad bearing, or a fan with an out-of-round spindle; it sounds more like an electronic component’s hum. To try to isolate it, we used Riva Tuner to down-clock the card to a GPU clock of 450 MHz and a memory clock of 800 MHz, and the noise vanished in performance 3D applications. Our hypothesis, since we are not electronics technicians, is that this card has a noisy voltage regulator or other electronic component that hums with the additional voltage applied in 3D performance mode. We sincerely hope the noise is limited to this sample, as no noise of this type was present with the NVIDIA GTX 280 reference card.

FINAL WORDS

We have decided to refrain from scoring the XFX GTX 280 at this point, as many of the variables that affect the product’s overall feature set are not quite ready for release. Were we to score this card simply on gaming and synthetic benchmark performance, it would approach the highest score we can give, as it’s the fastest single graphics card (single or dual GPU) that we’ve tested to date. However, there’s so much more to experience with this card than sheer speed that scoring it now would be unfair to both XFX and our readers. As soon as all items that are currently unavailable or in early Beta are fully functioning, we intend to revise this preview to reflect a true score of the product’s overall performance.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
