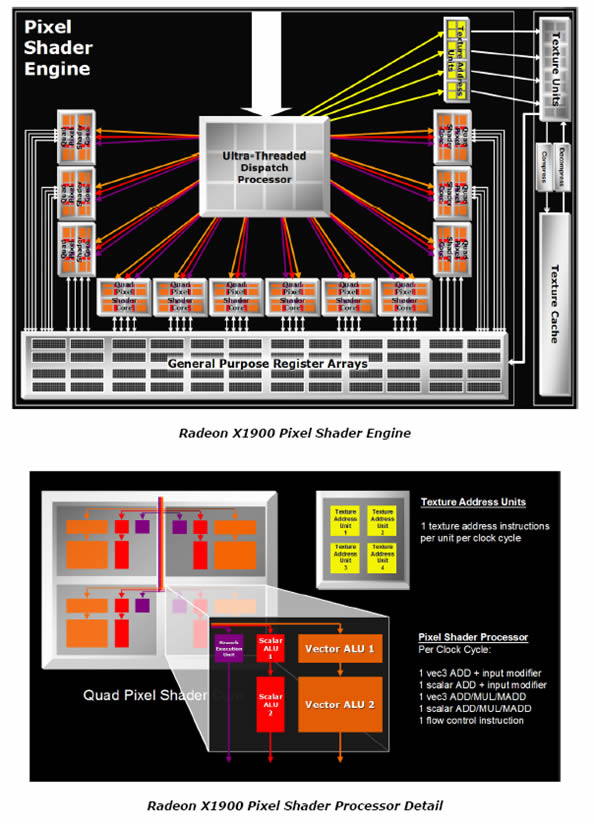

The increase in the ratio of Arithmetic Logic Units to Texture Units (from 1:1 to 3:1) is a step in the right direction, as more recent shaders tend to carry a heavier proportion of arithmetic instructions (F.E.A.R is one example). Next-generation game titles will most likely go even further and use additional math operations on the data. With 48 pixel shader processors, the new Fetch4 texture lookup and parallax occlusion mapping, ATI seems to be set for the near future, unless NVIDIA decides to walk ATI’s path (it very likely will) or even surpass its ALU:Tex ratio. There is another side of the coin, however. Many will consider 16 Texture Units to be a limiting factor for R580. That’s logically correct, but with newer applications it’s the GPU with fewer ALUs that’s going to be the bottleneck.

Introduction

A while ago I attended a conference led by Chris Hook himself, PR Manager of ATI Technologies. While it was explicitly devoted to the R580 architecture, Chris started off with R520 and its lateness. We have been aware of the delay for quite some time, but he went on to confirm that the majority of chips did not pass high-clockrate tests because of random failures in silicon. Once the problem was narrowed down to an incompatible third-party IP design, the GPUs started to yield proper clockrates.

The late introduction of R520 caused ATI to speed up the introduction of its new products. Going by how the company handles kicker products, most of us assumed it would be just that: a refresh of the older SKUs. All in all, it was a hasty and wrong assumption. ATI had actually been cooking something tasty for us: R580, which has been in production since November 30th, 2005.

As you might have noticed, R580 was not a paper launch. ATI has been shipping chips to IHVs for the past 6-7 weeks to be able to start off with ~10,000 cards at launch time. Although ATI launched 4 SKUs (RADEON X1900 XTX, XT, CrossFire & AIW), the company has been able to ship only 3: XTX, XT and CrossFire. I was told the fully-packed multimedia X1900 All-In-Wonder will be available the week after the 24th of January.

The high-end lineup has been covered by Bjorn3D some time ago and is ready for your viewing pleasure here

In a short (not so technical), but comprehensive manner let me introduce RADEON X1900:

- 48 Pixel Shaders: 3 times the shader power of X1800 XT

- Parallax Occlusion Mapping: using pixel shaders to create illusion of 3D with 1 big flat texture

- Fetch4: used to accelerate shadow map rendering

- No partial precision on HDR+AA effects

- 166 billion pixel shader operations / sec

- 553.8 Gigaflops compared to 272.5 for X1800 XT

Most hardware-centric sites, including ours, have already reviewed the X1900 XT/XTX/CrossFire. Today, however, I will be showing off the actual retail product. If you’ve read my articles you probably know the card comes from Tul Corporation’s stable. Without further ado, please welcome PowerColor X1900 XT. As far as looks are concerned it’s really an X1800 XT from the outside; it’s pretty hard to tell the difference without taking off the cooling system.

Should you require more detailed information on X1K GPUs please revisit our article: The X1000 series – ATI goes SM3.0

PowerColor is a consumer brand focused on providing cutting-edge graphics card products to retail customers. Our goal for the Tul brand is to be the industry’s number one provider of technology product solutions. Our goal for the PowerColor brand is to be the world’s number one brand of graphics cards. PowerColor is in effect owned by Tul Corporation, however the brands are operated independently of each other.

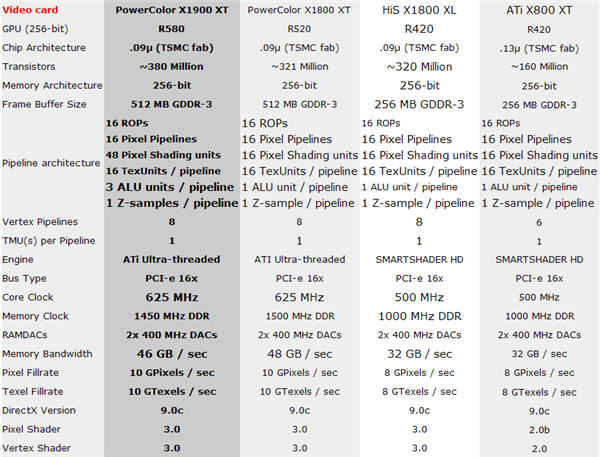

VPU Specifications

Since R580 is based heavily on R520, let’s recap some of its main features. The X1K line now sports Shader Model 3.0, which has been available from NVIDIA for over a year now. X1900 shares the main traits of X1800: Ultra-Threading Dispatch Processors, new cache architecture and Ring Bus. You can find more details about those features in my PowerColor X1800 XT review.

As for R580, the X1900 is fairly similar to the X1600 design in terms of pipeline architecture. The pipeline is no longer in a 1:1 ratio (ALU:Texture) as with R520; it’s 3:1, which means X1900 has 3 times the shading power of the X1800. That’s where the 166 billion pixel shader operations / sec come from. ATI did say it’s the ideal balance: more would be overkill and less could lead to insufficiency. Can this approach be utilized? Most definitely, and most likely with newer games. Hopefully Parallax Occlusion Mapping can benefit from it.

Where 16 implies Texture Units per pipeline, 3 stands for ALUs per pipeline and 1 is the number of Z-samples per pipeline. Confusing? It might be for a lot of you, but I will not go into much detail on the architecture. It’s been covered very well over at Beyond3D. Additionally you can read all about it in our X1900 preview.
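For readers who want to check the ratios themselves, here is a minimal sketch using only the unit counts quoted in this review (48 pixel shader processors and 16 texture units for X1900, 16 and 16 for X1800). The function name `alu_tex_ratio` is made up for illustration and has nothing to do with any ATI tool.

```python
from fractions import Fraction


def alu_tex_ratio(alus: int, texture_units: int) -> str:
    """Reduce an ALU count and a texture unit count to a ratio like '3:1'."""
    ratio = Fraction(alus, texture_units)
    return f"{ratio.numerator}:{ratio.denominator}"


# Unit counts as quoted in this review (not measured values).
r580 = alu_tex_ratio(48, 16)  # X1900
r520 = alu_tex_ratio(16, 16)  # X1800

print("R580 ALU:Tex =", r580)
print("R520 ALU:Tex =", r520)
print("Shader processor count scaling:", 48 / 16)
```

Keep in mind this only compares unit counts; it says nothing about how a real game will scale.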

Radeon® X1900 Graphics Technology – Specifications

- Features

- 384 million transistors on 90nm fabrication process

- 48 pixel shader processors

- 8 vertex shader processors

- 256-bit 8-channel GDDR3 memory interface

- Native PCI Express x16 bus interface

- Ring Bus Memory Controller

- 512-bit internal ring bus for memory reads

- Fully associative texture, color, and Z/stencil cache designs

- Hierarchical Z-buffer with Early Z test

- Lossless Z Compression (up to 48:1)

- Fast Z-Buffer Clear

- Optimized for performance at high display resolutions, including widescreen HDTV resolutions

- Ultra-Threaded Shader Engine

- Support for Microsoft® DirectX® 9.0 Shader Model 3.0 programmable vertex and pixel shaders in hardware

- Full speed 128-bit floating point processing for all shader operations

- Up to 512 simultaneous pixel threads

- Dedicated branch execution units for high performance dynamic branching and flow control

- Dedicated texture address units for improved efficiency

- 3Dc+ texture compression

- High quality 4:1 compression for normal maps and two-channel data formats

- High quality 2:1 compression for luminance maps and single-channel data formats

- Complete feature set also supported in OpenGL® 2.0

- Advanced Image Quality Features

- 64-bit floating point HDR rendering supported throughout the pipeline

- 32-bit integer HDR (10:10:10:2) format supported throughout the pipeline

- 2x/4x/6x Anti-Aliasing modes

- 2x/4x/8x/16x Anisotropic Filtering modes

- High resolution texture support (up to 4k x 4k)

- Avivo™ Video and Display Engine

- High performance programmable video processor

- Accelerated MPEG-2, MPEG-4, DivX, WMV9, VC-1, and H.264 decoding (including DVD/HD-DVD/Blu-ray playback), encoding & transcoding

- DXVA support

- De-blocking and noise reduction filtering

- Motion compensation, IDCT, DCT and color space conversion

- Vector adaptive per-pixel de-interlacing

- 3:2 pulldown (frame rate conversion)

- Seamless integration of pixel shaders with video in real time

- HDR tone mapping acceleration

- Maps any input format to 10 bit per channel output

- Flexible display support

- Dual integrated dual-link DVI transmitters

- DVI 1.0 / HDMI compliant and HDCP ready

- Dual integrated 10 bit per channel 400 MHz DACs

- 16 bit per channel floating point HDR and 10 bit per channel DVI output

- Programmable piecewise linear gamma correction, color correction, and color space conversion (10 bits per color)

- Complete, independent color controls and video overlays for each display

- High quality pre- and post-scaling engines, with underscan support for all outputs

- Content-adaptive de-flicker filtering for interlaced displays

- Xilleon™ TV encoder for high quality analog output

- YPrPb component output for direct drive of HDTV displays

- Spatial/temporal dithering enables 10-bit color quality on 8-bit and 6-bit displays

- Fast, glitch-free mode switching

- VGA mode support on all outputs

- Compatible with ATI TV/Video encoder products, including Theater 550

- CrossFire™

- Multi-GPU technology

- Four modes of operation:

- Alternate Frame Rendering (maximum performance)

- Supertiling (optimal load-balancing)

- Scissor (compatibility)

- Super AA 8x/10x/12x/14x (maximum image quality)
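To give a rough idea of how the first three CrossFire modes in the list above divide work between two cards, here is a simplified sketch. It is an illustration only and not ATI’s driver logic: the function names, the checkerboard tile pattern and the fixed half-screen split are all assumptions made for the example.

```python
def afr_gpu(frame_index: int, gpu_count: int = 2) -> int:
    """Alternate Frame Rendering: whole frames alternate between GPUs."""
    return frame_index % gpu_count


def supertile_gpu(tile_x: int, tile_y: int, gpu_count: int = 2) -> int:
    """Supertiling: screen tiles are dealt out in a checkerboard pattern (assumed)."""
    return (tile_x + tile_y) % gpu_count


def scissor_gpu(row: int, screen_height: int, gpu_count: int = 2) -> int:
    """Scissor: the screen is cut into horizontal bands, one per GPU.

    A fixed, equal split is assumed here for simplicity.
    """
    return min(row * gpu_count // screen_height, gpu_count - 1)


# Which GPU handles the first four frames in AFR?
print([afr_gpu(i) for i in range(4)])
# Which GPU handles each tile of a 4x2 tile grid in Supertiling?
print([[supertile_gpu(x, y) for x in range(4)] for y in range(2)])
# Which GPU handles row 100 and row 1000 of a 1200-row screen in Scissor mode?
print(scissor_gpu(100, 1200), scissor_gpu(1000, 1200))
```

Super AA is left out because both cards render the same frame there and the results are blended, so there is no work split to show.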

The Card

The physical dimensions of PowerColor X1900 XT are exactly the same as X1800. What’s more, the sticker does not tell you the brand; you can see a radial-type fan mounted onto the copper plate without any PowerColor branding, i.e. the default ATi logo + Ruby. An interesting tidbit: all flagship cards are built at Foxconn and Celestica fabs to ensure maximum quality.

The PCBs of X1900 XT, X1800 XT and X1800 XL look alike; the only differences are the actual chips found on the boards: GPU, RAM etc. All high-end boards carry external power supply sockets. In the above gallery you will find a 6-pin PCI-e connector at the back of the card, so make sure you plug in more juice, otherwise the board won’t boot. Notice the voltage regulators are covered with an anodized heatsink.

The back of the PCB looks usual. The brace serves as a support that holds the top copper heatsink in place. Notice that all memory chips are located on the front of the card.

All recent ATi enthusiast video cards sport dual-slot cooling systems. The bracket features dual DVI links and VIVO support. The card carries standard display support: VGA compatible, VESA compatible BIOS for SVGA and DDC 1/2b/2b+. The whole cooling system has been designed to reduce the overall temperature inside your chassis. The fan sucks in the hot air and pushes it out of the case through the top bracket. ATi’s leafblower spins at a maximum of 5080 RPM, which makes it a very loud solution. Fortunately the fan won’t run at those speeds (unless manually specified) as the temperature does not exceed 78°C (even when overclocked). The default idle speed (2D) is 1500 RPM, so you won’t need earplugs. When you run a 3D application and the GPU gets hot, the fan goes to something like 2500 RPM, no more. You can clearly see a copper finish on the board’s radiator. Weight-wise, this seems like the heaviest (1 piece) cooling solution I have ever held in my hand. Inside you’ll find copper fins that help move heat out of the case.

Memory found on our PowerColor X1900 XT is SAMSUNG K4J52324QC-BJ11 (1.1ns), rated at a maximum frequency of 900 MHz / 1800 MHz DDR. For giggles, I put both X1800 and X1900 inside my system to give you an idea of how much space those two cards take up if you were to run a CrossFire setup, for example.
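If you wonder how a 1.1ns rating relates to the 900 MHz figure, the clock is simply the inverse of the cycle time. Below is a quick sketch; the helper name `max_clock_mhz` is made up for this example, and treating 900 MHz as a rounded-down nominal rating is my reading rather than something stated on the chip’s datasheet.

```python
def max_clock_mhz(cycle_time_ns: float) -> float:
    """Convert a memory cycle time in nanoseconds to a clock in MHz (f = 1 / t)."""
    return 1000.0 / cycle_time_ns


clock = max_clock_mhz(1.1)  # roughly 909 MHz for a 1.1ns part
effective = 2 * clock       # DDR transfers data twice per clock

print(f"Theoretical clock: {clock:.1f} MHz, effective DDR rate: {effective:.1f} MHz")
```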

Bundle

In terms of package and bundle you’ll find a standard PowerColor box with accessories and software. If you’re looking for games, you’ll be disappointed. On the other hand you should be happy with included cabling.

Tul decided to change the box scheme a little bit. The front and back of the box highlight the card’s specifications and features. In the bottom right-hand corner you see an image representing part of the card. The back of the box sports specs and some catch phrases. The sides show system requirements. This type of design scheme has been used for the last line of cards from PowerColor. It’s standard, but pretty.

- Accessories & bundle

- HDTV Cable

- Video in/out Cable

- S-Video Cable

- Composite Cable

- 2 DVI-I connectors

- PCI-e power cable

- Driver CD

- CyberLink DVD Solutions

- PowerDVD 5

- PowerProducer 2 Gold DVD

- PowerDirector 3

- Power2Go 3

- Medi@show 2

PowerColor X1900 XT features Avivo technology:

- Avivo™ Video and Display Platform

- High performance programmable video processor

- Accelerated MPEG-2, MPEG-4, DivX, WMV9, VC-1, and H.264 decoding and transcoding

- DXVA support

- De-blocking and noise reduction filtering

- Motion compensation, IDCT, DCT and color space conversion

- Vector adaptive per-pixel de-interlacing

- 3:2 pulldown (frame rate conversion)

- Seamless integration of pixel shaders with video in real time

- HDR tone mapping acceleration

- Maps any input format to 10 bit per channel output

- Flexible display support

- Dual integrated dual-link DVI transmitters

- DVI 1.0 compliant / HDMI interoperable and HDCP ready

- Dual integrated 10 bit per channel 400 MHz DACs

- 16 bit per channel floating point HDR and 10 bit per channel DVI output

- Programmable piecewise linear gamma correction, color correction, and color space conversion (10 bits per color)

- Complete, independent color controls and video overlays for each display

- High quality pre- and post-scaling engines, with underscan support for all outputs

- Content-adaptive de-flicker filtering for interlaced displays

- Xilleon™ TV encoder for high quality analog output

- YPrPb component output for direct drive of HDTV displays

- Spatial/temporal dithering enables 10-bit color quality on 8-bit and 6-bit displays

- Fast, glitch-free mode switching

- VGA mode support on all outputs

- Drive two displays simultaneously with independent resolutions and refresh rates

- Compatible with ATI TV/Video encoder products, including Theater 550

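The 3:2 pulldown entry in the list above is easier to picture with a small example. The sketch below shows the basic cadence used to fit 24 fps film into 60 fields per second: frames are alternately held for three fields and two fields. It is a simplification (real hardware also tracks top/bottom field parity), and the function name `pulldown_32` is made up for illustration.

```python
def pulldown_32(frames):
    """Expand film frames into video fields using a 3, 2, 3, 2... cadence."""
    fields = []
    for index, frame in enumerate(frames):
        repeat = 3 if index % 2 == 0 else 2  # hold every other frame one field longer
        fields.extend([frame] * repeat)
    return fields


# Four film frames become ten video fields.
print(pulldown_32(["A", "B", "C", "D"]))
# One second of film (24 frames) becomes 60 fields.
print(len(pulldown_32(list(range(24)))))
```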

Setup and Installation

All of our benchmarks were run on an Athlon64 3000+ system with two different clocks: default and 2.5GHz. The reason I decided to up the CPU clock for R580/R520 is that at default speed the CPU would limit the cards by a whole lot. It is still limiting at low resolutions in some benchmarks, but I’ve tried to create as unbottlenecked an environment as possible. I will stack PowerColor X1900 XT against the older X1800, HiS X1800 XL and reference design ATi X800 XT. The table below shows the test system configuration as well as the benchmarks used throughout the review.

| Category | Details |
| --- | --- |
| Components | DFI NF4 Ultra-D; Athlon64 3000+ Venice; 2x256MB Corsair PC3200LLP (Dual Channel); Thermaltake 520 Watt PSU; PowerColor X1900 XT 512 MB; PowerColor X1800 XT 512 MB; HiS X1800 XL; ATi X800 XT |
| Software | Windows XP SP2; DirectX 9.0c; nForce4 6.53 drivers; CATALYST 5.13 (non WHQL with added X1900 support) |
| Synthetic Benchmarks | 3DMark 2005 v1.2.0; 3DMark 2006; D3D Right Mark 1.0.5.0 beta 4 |
| Gaming Benchmarks | F.E.A.R / ingame benchmark + Fraps; Half-Life 2 / custom d13c17 timedemo + Fraps; Doom 3 / default timedemo + Fraps; Quake 4 / custom timedemo; NFS: Most Wanted / Fraps; Far Cry 1.32 / custom timedemo + Fraps |
| Notes | For R420, the CPU was clocked at the default 1.8GHz; for R5x0, the CPU was clocked at 2.5GHz to reduce CPU limitation |

I haven’t had any problems fitting PowerColor X1900 XT inside the system. As you can see from the picture below, the board requires 2 free slots. The PCB is quite long, so make sure your hard drive is pushed all the way up to the front, otherwise you might have a problem. For giggles, I also seated an X1800.

PowerColor X1900 XT on top, X1800 XT on bottom w00t!

3DMark05 / 06

I’ve used Futuremark’s 3DMark 2005 to measure the actual throughput of the product I’m reviewing, PowerColor X1900 XT, and compared it against the rest of the bunch. Additionally, I’m including scores from Futuremark’s newest 3DMark06.

X1900 XT, X1800 XT, X850 XT PE (PowerColor), X800 XT (ATI reference)

D3D RightMark is a very useful tool for measuring different theoretical throughputs of a graphics chip. I ran a couple of synthetic tests to stress PowerColor X1900 XT and put it against the rest of the bunch. The main focus of these tests will be to stress Geometric Processing (Vertex Shading) as well as Pixel Shaders.

With D3D RightMark you will be able to get the following information about your video card:

- Features supported by your video card

- Pixel Fillrate and Texel Fillrate

- Pixel shader processing speed (all shader models)

- Vertex shader (geometry) processing speed (all shader models)

- Point sprites drawing speed

- HSR efficiency

Bearing in mind both X1800 and X1900 carry the same number of vertex pipelines, there is no real difference between the two scores. The 1.2% difference you see is most likely within the margin of error.

This is where it gets interesting. With 48 Pixel Shader processors this baby just rocks your socks! Theoretically the X1900 XT score should be around three times the X1800’s (200% higher). Put the other way around, 16 processors are about 67% fewer than 48; calculate the % difference yourself and you will come up with that figure.
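The comparison between 48 and 16 processors depends on which count you treat as the baseline, so here is the arithmetic spelled out. The helper names are made up for this example; the only inputs are the two processor counts quoted in the review.

```python
def percent_higher(new: float, old: float) -> float:
    """How much higher `new` is than `old`, as a percentage of `old`."""
    return (new - old) / old * 100


def percent_lower(new: float, old: float) -> float:
    """How much lower `old` is than `new`, as a percentage of `new`."""
    return (new - old) / new * 100


x1900_processors = 48
x1800_processors = 16

print(f"X1900 has {percent_higher(x1900_processors, x1800_processors):.0f}% more processors")
print(f"X1800 has {percent_lower(x1900_processors, x1800_processors):.0f}% fewer processors")
```

These are theoretical ceilings based on unit counts alone; the measured gap in a benchmark can be smaller.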

F.E.A.R

The game was made by Monolith Productions and has been out for quite some time. Since a lot of you are interested in seeing how it plays, I’ve decided to give it a shot and bench it with our X1900 XT from PowerColor.

Texture caching in retail F.E.A.R has been improved a little. You won’t see a lot of chugging when abruptly turning around. Our benchmarking method was simple. I used the default F.E.A.R benchmarking utility, which nicely shows all effects and technology used throughout the game.

Game Overview

An unidentified paramilitary force infiltrates a multi-billion dollar aerospace compound, taking hostages but issuing no demands. The government responds by sending in special forces, but loses contact as an eerie signal interrupts radio communications. When the interference subsides moments later, the team has been obliterated. As part of a classified strike team created to deal with threats no one else can handle, your mission is simple: Eliminate the intruders at any cost. Determine the origin of the signal. And contain the crisis before it spirals out of control.

As you probably know, F.E.A.R uses a very sophisticated game engine (FEAR).

- Rendering

- FEAR is powered by a new flexible, extensible, and data driven DirectX 9 renderer that uses materials for rendering all visual objects. Each material associates an HLSL shader with artist-editable parameters used for rendering, including texture maps (normal, specular, emissive, etc.), colors, and numeric constants.

- Lighting Model

- FEAR features a unified Blinn-Phong per-pixel lighting model, allowing each light to generate both diffuse and specular lighting consistently across all solid objects in the environment. The lighting pipeline uses the following passes:

- Emissive: The emissive pass allows objects to display a glow effect and establishes the depth buffer to improve performance.

- Lighting: The lighting pass renders each light, first by generating shadows and then by applying the lighting onto any pixels that are visible and not shadowed.

- Translucency: The translucent pass blends all translucent objects into the scene using back to front sorting.

- Visual Effects

- FEAR features a new optimized, data driven effects system that allows for the creation of key-framed effects that can be comprised of dynamic lights, particle systems, models, and sounds. Examples of the effects that can be created using this system include weapon muzzle flashes, explosions, footsteps, fire, snow, steam, smoke, dust, and debris.

- Sample Lights

- FEAR’s lighting model is very flexible and allows developers to easily add new lights. Existing lights include:

- Point Light: The point light is a single point that emits light equally in all directions.

- Spotlight: Similar to a flashlight, the spotlight projects light within a specified field of view. The spotlight can also use a texture to tint the color of the lighting on a per pixel basis.

- Cube Projector: Similar to the point light, the cube projector uses a cubic texture to tint each lit pixel.

- Directional Light: This lighting is emitted from a rectangular plane and is used to simulate directional lights like sunlight.

- Point Fill: Although similar to the point light, the point fill is an efficient option because it does not utilize specular lighting or cast shadows.

A more detailed overview of other F.E.A.R technologies can be found over at Touchdown Entertainment. These include: Havok Physics Engine and Modeling / Animations System.
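For the curious, the Blinn-Phong model mentioned in the lighting description above boils down to two dot products per light. The sketch below is a generic textbook version in plain Python, not code from the F.E.A.R engine; the function names and the `shininess` exponent are assumptions picked for illustration.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length (zero vectors are returned unchanged)."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return tuple(v)
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blinn_phong(normal, to_light, to_viewer, shininess=32.0):
    """Return (diffuse, specular) intensity for one light at one pixel."""
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_viewer)
    # Half vector between the light and view directions.
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    diffuse = max(dot(n, l), 0.0)
    # No highlight on surfaces facing away from the light.
    specular = max(dot(n, h), 0.0) ** shininess if diffuse > 0.0 else 0.0
    return diffuse, specular


# Light and viewer straight above the surface: full diffuse and specular.
print(blinn_phong((0, 0, 1), (0, 0, 1), (0, 0, 1)))
# Light behind the surface: nothing is lit.
print(blinn_phong((0, 0, 1), (0, 0, -1), (0, 0, 1)))
```

In a real renderer this math runs in a pixel shader for every lit pixel, which is exactly the kind of arithmetic-heavy work the extra ALUs in R580 are meant for.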

Half-Life 2

We all love Half-Life 2 and we all want the best performance out of our hardware. This has to be one of the most graphically demanding games currently on the market. Half-Life 2 is built around the Source engine, which utilizes a very wide range of DirectX 8 / 9 special effects. Those include:

- Diffuse / specular bump mapping

- Dynamic soft shadows

- Localized / global volumetric fog

- Dynamic refraction

- High Level-of-Detail (LOD)

Note that users with DirectX 7 and older hardware (NVIDIA MX series for example) will not be able to enjoy the above effects. Let’s see what PowerColor X1900 XT is made of.

Doom 3

Now that we are past Doom 3’s release, some gamers have been left with a bit of disappointment. The main reason is Half-Life 2 and its Source engine, which really showed a vast amount of potential and scalability.

Although this game needs no introduction, I will go over some of the game features and technology behind Doom 3. It took the guys at id Software over four years to complete this project. Lead programmer John Carmack spent an awful lot of time designing the game engine, but his hard work paid off, at least to some extent, since this is the first title which houses the Doom 3 engine.

Let’s look at some of the engine tech features which are present in Doom 3:

- Unified lighting and shadowing engine

- Dynamic per-pixel lighting

- Stencil shadowing

- Specular lighting

- Realistic bumpmapping

- Dynamic and ambient six-channel audio

However you look at it, Carmack’s lighting engine is the essence of Doom 3. With OpenGL being the primary API, shaders have been put to heavy use in order to create a realistic-looking environment. Instead of using lightmaps, the game engine now processes all shadows in real time. This technique is called stencil shadowing, and it can accurately shadow other objects in the scene. There are disadvantages to this method, however:

- Requires a lot of fillrate

- Fast CPU is needed for shadow calculations

- Inability to render soft shadows

Quake 4

This is another good title worth looking at. After the success of Quake 3, id Software decided (after a few years) it would be proper to have a sequel. Developed at Raven Software, Q4 features a rich single-player campaign as well as an intensive and popular multiplayer mode.

It uses the Doom 3 engine, so you should be familiar with the available effects. In any case, I have listed them below:

- Unified lighting and shadowing engine

- Dynamic per-pixel lighting

- Stencil shadowing

- Specular lighting

- Realistic bumpmapping

- Dynamic and ambient six-channel audio

Need For Speed: Most Wanted

We have quite a few sequels in this review and this is another one, this time from Electronic Arts. If you’ve played NFS Hot Pursuit you know what I’m talking about. There are a lot of ideas taken from the older NFS titles. The main difference between Most Wanted and Underground (in terms of graphics) is the addition of HDR-type effects. It’s pseudo-HDR (more like bloom), but looks lovely nonetheless. Additionally, the game sports flashy new reflections, better object geometry, an improved lighting system and, finally, a physics engine.

On the previous page I showed you how the X1K line performs in NFS: Underground 2. Here we have a fresh new title with bells and whistles waiting to get benched.

Far Cry 1.32

The company behind this game is Crytek. It was pretty much the first title which used a heavy load of PS 2.0 shaders. For our benching purposes we are using the full version with the newest 1.32 patch applied (mainly fixes SM 3.0 issues that caused graphic corruption on newer ATI hardware). Anyone who played this title will admire the draw distance, beautiful outside vegetation and incredibly spooky indoor environment. The game also features topnotch self-learning A.I and very realistic physics.

Far Cry’s CryEngine is pretty scalable, however you’d need at least DirectX 8 class hardware to enjoy the refractive water effects, ripples, real time per-pixel lighting, specular bump-mapping or volumetric effects.

The map of choice was Research. It’s a high-polygon map with both outdoor and indoor environment.

Overclocking

If you look at our PowerColor X1800 XT overclocking section you’ll find that particular sample OC’ed pretty nicely up to 700 MHz GPU / 800 MHz memory with stock voltages. With most high-end cards (including X1800 & X1900) we are looking at two levels of clockrates: 2D & 3D. While this can be annoying to overclockers it’s actually healthy for the card.

Let’s look at our setup. We have PowerColor X1900 XT on the bench today and I will try to squeeze the most out of it. Although ATI’s spec sheet says X1900 XT should be clocked at a default 625 MHz / 725 MHz (1450 MHz DDR), our sample came with lower clocks of 620 MHz / 720 MHz (those are clocks read from the BIOS) for some reason. Having said that, let’s find out what the maximum frequencies are for our PowerColor gem. Bearing in mind we are dealing with SAMSUNG K4J52324QC-BJ11 (1.1ns) RAM rated at a maximum frequency of 900 MHz (1800 MHz DDR), I think it’s safe to say I can “almost” reach it, theoretically at least. Okay, I will spill the info already. I’ve managed to get the core up to 683 MHz and memory up to 870 MHz (1740 MHz DDR).

If you are thinking “what the hell?!”, you’re on the same page as I am. How come our X1800 XT reached 700 MHz on the core and this brand new super fast X1900 can’t even climb up to 690 MHz? It seems Tul is not cherry-picking samples, which is a good thing, but the core overclock leaves much to be desired. As for memory, I believe 870 MHz is spot on.
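To put those overclocking numbers into perspective, here is the headroom expressed as a percentage over the clocks read from our sample’s BIOS. The helper name `gain_percent` is made up for this example; all clock values come from the paragraphs above.

```python
def gain_percent(stock_mhz: float, overclocked_mhz: float) -> float:
    """Overclocking gain as a percentage of the stock clock."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100


core_gain = gain_percent(620, 683)    # core: 620 MHz stock, 683 MHz overclocked
memory_gain = gain_percent(720, 870)  # memory: 720 MHz stock, 870 MHz overclocked

print(f"Core overclock:   +{core_gain:.2f}%")
print(f"Memory overclock: +{memory_gain:.2f}%")
print(f"Memory reached {870 / 900 * 100:.1f}% of its 900 MHz rating")
```

The memory gain is roughly twice the core gain, which matches the impression that the RAM overclocks well while the core is more modest.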

Below are 3DMark05 / 06 scores representing the performance of both X1900 XT and X1800 XT.

The increase in the ratio of Arithmetic Logic Units to Texture Units (from 1:1 to 3:1) is a step in the right direction, as more recent shaders tend to carry a heavier proportion of arithmetic instructions (F.E.A.R is one example). Next-generation game titles will most likely go even further and use additional math operations on the data. With 48 pixel shader processors, the new Fetch4 texture lookup and parallax occlusion mapping, ATI seems to be set for the near future, unless NVIDIA decides to walk ATI’s path (it very likely will) or even surpass its ALU:Tex ratio. There is another side of the coin, however. Many will consider 16 Texture Units to be a limiting factor for R580. That’s logically correct, but with newer applications it’s the GPU with fewer ALUs that’s going to be the bottleneck. ATI backs its decision, saying it’s the ideal balance and that more texture units would still not prevent bandwidth limitation in most cases.

As for mainstream SKUs (read: an X1600 respin), those may or may not come at all. The current line of GPUs ranges from the low-end X1300 all the way up to the enthusiast X1900 XTX parts. Instead of going with additional boards, ATI may decide to change pricing on the existing lineup while getting rid of R520s. That seems like a good move, and maybe X1800 XL could become the mid-range GPU. Bear in mind this is not written in stone, just my observations of what may happen.

Concluding this product review, I have to say X1900 XT has potential which is not yet utilized. New games will prove to be a major testing arena for R580 and upcoming ATI products. By bringing XBOX 360 graphics to your desktop PC, ATI has given all of us a taste of what’s coming up in the near future.

Pros:

+ 48 Pixel Shader processors

+ Great performer (especially with AA/AF)

+ Avivo technology

+ 512 MB framebuffer

+ Good memory overclock

+ Has potential

Cons:

– Outdated and weak bundle

– Core OC could be higher

– Power hungry

– Dual bracket design

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
