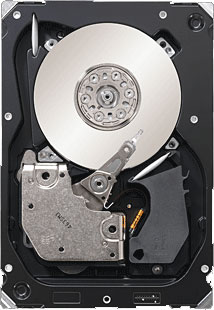

Seagate’s new 6Gb/s Cheetah NS.2 SAS 600GB 10K hard drives and LSI’s new 3ware 6.0Gb/s SAS 9750-4i RAID card bring a level of performance that the hard drive industry has not seen before.

Seagate Cheetah NS.2 – Introduction

Seagate is well known for its popular Barracuda line-up of SATA hard drives, which provide solid performance for almost any task. However, if you do disk-intensive work like high-definition video editing and compositing, standard SATA hard drives can be a big bottleneck in your system. I personally work with a lot of uncompressed high-definition 1080p footage, and my two Western Digital RAID Edition 1TB hard drives in a RAID 0 configuration could not handle it without lag. You might wonder what kind of performance uncompressed HD footage requires from a hard drive. It depends on the file format and the complexity of the scene, but it is usually between 100 and 300MB/s of sustained read/transfer speed. Since two standard 7200RPM 3.0Gb/s SATA II hard drives can only handle around 115-200MB/s on average, performance can easily drop during production, especially when combining more than one uncompressed clip in a composition.
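To see where a figure in the 100-300MB/s range comes from, here is a rough sketch of the arithmetic. The pixel formats and frame rates below are assumed examples, not the formats used in this review, and audio and container overhead are ignored.

```python
def uncompressed_rate_mb_s(width, height, bytes_per_pixel, fps):
    """Sustained data rate in MB/s (1 MB = 1,000,000 bytes) for uncompressed video."""
    return width * height * bytes_per_pixel * fps / 1_000_000


# Assumed example formats (hypothetical, chosen only for illustration).
EXAMPLES = [
    ("8-bit 4:2:2 at 24 fps", 2, 24),
    ("8-bit RGB at 30 fps", 3, 30),
    ("8-bit RGBA at 30 fps", 4, 30),
]

for label, bytes_per_pixel, fps in EXAMPLES:
    rate = uncompressed_rate_mb_s(1920, 1080, bytes_per_pixel, fps)
    print(f"1080p {label}: about {rate:.0f} MB/s")
```

Every layer of uncompressed footage in a composition adds its own stream at this rate, which is why stacking clips pushes the requirement up so quickly.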

This is why Seagate also offers high-performance, server-oriented SAS (Serial Attached SCSI) hard drives like the Seagate Cheetah NS.2. We are reviewing four Seagate Cheetah NS.2 600GB 10,000RPM 6.0Gb/s SAS hard drives to see how well they perform in tasks like video editing and compositing. In the results section we will explain how many hard drives and which configurations work well with uncompressed high-definition video, but before we do that, let’s go into a bit more detail about the Cheetah NS.2 so we can understand what makes these hard drives so special.

The first thing you will find interesting is that this hard drive uses the new 6.0Gb/s interface, which allows for double the throughput that was possible on the older 3.0Gb/s interface. Many new motherboards already ship with 6.0Gb/s SATA ports, but these hard drives are Serial Attached SCSI drives, not SATA, so you cannot attach them directly to a motherboard unless it includes a SAS controller. Another interesting fact is that most motherboards that already offer the 6.0Gb/s interface can only reach that speed in non-SLI and non-CrossFire configurations, meaning you cannot use more than one video card. This not only limits the user’s video card options, it also lowers the PCI-E slot speed to 8x, which means less performance from the video card. As mentioned before, the Seagate Cheetah NS.2 hard drives are SAS drives, so to get these bad boys running we need a RAID controller card that supports both Serial Attached SCSI and the new 6.0Gb/s interface. 3.0Gb/s RAID cards will work, but performance will be lower. In this case, we will be using LSI’s 3ware SAS 9750-4i RAID controller card to get the most out of our video editing. Since these RAID cards work independently of the motherboard, apart from using a PCI-E slot, they do not have the limitations that current motherboards do. As a matter of fact, they play a very important role with SAS drives, which we will go into in more detail on the next page.
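As a rough illustration of what the interface speeds mean in MB/s, the sketch below converts a link's line rate into payload bandwidth. It assumes the 8b/10b line coding used by 3Gb/s and 6Gb/s SAS and SATA links, where every 8 data bits travel as 10 bits on the wire. Protocol overhead is ignored, so real transfers land somewhat lower.

```python
def usable_mb_s(line_rate_gbit_s, encoding_efficiency=8 / 10):
    """Payload bandwidth of one link in MB/s after 8b/10b line coding."""
    payload_bits_per_second = line_rate_gbit_s * 1e9 * encoding_efficiency
    return payload_bits_per_second / 8 / 1e6


for rate in (3.0, 6.0):
    print(f"{rate} Gb/s link: about {usable_mb_s(rate):.0f} MB/s per port")
```

A single 10,000RPM drive does not come close to saturating a 6Gb/s link with sustained transfers, so the faster interface mostly helps burst transfers from cache and gives the controller headroom.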

The second interesting part of this hard drive is that it runs at 10,000RPM. This not only allows for lower access times, but also for faster transfer and read speeds. The combination of the new 6.0Gb/s interface and the 10,000RPM spindle speed allows for better performance than what we have seen before.

And finally, the main difference between SAS drives and SATA drives is the way they access information. SATA drives are geared mainly toward sequential access patterns, while SAS drives are geared toward random access patterns. When writes are sequential, seek times are minimized and data can be written much faster, while random access results in many seeks across the hard drive, which can slow the process down dramatically. This raises another question: why would SAS drives be built around random access patterns if sequential access gives more performance in tasks like video editing? The answer lies in the reason SAS drives are built in the first place. SAS drives were mainly built for “transactional applications that require constant and immediate access to data. These applications, ranging from highly critical databases to email servers, rely on exceptional performance, reliability, and availability. Online storage is powered on 24x 7, handles mostly random requests, and has high IOPS and high duty cycles” (LSI). So while their main purpose is not video editing and compositing, their performance, with the help of a hardware-based RAID card, can still drastically improve results even though the drives are tuned for random access patterns.

Why Not SSDs?

There are not many reasons why one could not use SSDs instead of hard drives. As a matter of fact, SSDs would probably be a better option when combined with a powerful RAID card, but the main reasons they are inconvenient are that they are extremely expensive and do not provide much capacity. We get a total of 2400GB of space if we use a RAID0 configuration with our four SAS drives, and the overall cost with the RAID card comes out to around 3000 dollars. To get as much capacity from SSDs, the overall price would be around 8000-10000 dollars, which is about 3x what the SAS setup costs. This is why a SAS setup is a better choice for filmmakers and people on a budget.

| Product Name | Price |
|---|---|
| Seagate 600GB Cheetah NS.2 SAS 6Gb/s Internal Hard Drive | $533 |
| LSI 3ware SAS 9750-4I KIT 4PORT 6GB SATA+SAS PCIE 2.0 512MB | $386 |

LSI 3Ware SAS 9750-4i 6Gb/s RAID Card – Introduction

As mentioned on the previous page, we will be using LSI’s 3Ware SAS+SATA 9750-4i 4-port 6Gb/s RAID controller card to connect our four Seagate Cheetah NS.2 600GB SAS drives to the system. LSI offers numerous RAID controller cards depending on the needs of the customer, but the two main series they sell are the 3Ware and MegaRAID controller cards. According to their site, the new 6.0Gb/s MegaRAID series cards provide excellent performance with SAS and SATA drives, but they are positioned as the entry-level 6.0Gb/s RAID cards. The new 3Ware RAID controller cards are designed to meet the needs of a wide range of applications. The 3Ware 9750-4i performs exceptionally well in video work, from surveillance to video editing and compositing. LSI did not stop there: the card is also aimed at high-performance computing, including medical imaging and digital content archiving, and at file, web, database and email servers.

LSI divides its RAID controller cards into three categories:

- 3ware® RAID controller cards are a good fit for multi-stream and video streaming applications.

- MegaRAID® entry-level RAID controllers are a good choice for general purpose servers (OLTP, WEB, File-servers and High-performance Desktops).

- High-port count HBAs are good for supporting a large number of drives within a storage system.

If you are thinking about upgrading your home desktop, workstation or server, or if you want to future-proof your business for the next-generation 6.0Gb/s interface, then you are in luck, because LSI made it possible to use your existing 3.0Gb/s SATA or SAS hard drives with these cards. This not only saves money, since you do not need to replace your existing hard drives, but the new 6.0Gb/s interface also helps get a bit more performance out of older 3.0Gb/s hard drives than was possible with onboard software-based RAID or 3.0Gb/s hardware-based RAID cards.

Here is a video about LSI’s new 6.0Gb/s product lineup released this year. The video was made by LSI.

Besides video editing and compositing, the 3ware and MegaRAID cards have other great uses, and according to LSI they provide a huge performance increase in science labs. This video provided by LSI explains how the new 6.0Gb/s throughput can help speed up DNA research at Pittsburgh Center for Genomic Sciences.

So according to Seagate and LSI, this setup with the 4x Seagate Cheetah NS.2 600GB 10,000RPM 6.0Gb/s SAS hard drives, and with LSI’s 3Ware SAS+SATA 9750-4i 6Gb/s RAID Controller Card will be a great combination for high-performance video editing. Let’s see if this is true.

Features – Seagate Cheetah NS.2 10K

Key Features and Benefits

Specifications – Seagate Cheetah NS.2 10K

Here is the specifications table for the Seagate Cheetah NS.2 600GB SAS hard drive. The Cheetah NS.2 comes in 300GB, 450GB, and 600GB capacities, so users can pick the capacity they really need. A user on a budget might want high performance without needing an enormous amount of space. Combining 4x 300GB hard drives in RAID0 would provide 1.2TB of space and would save quite a bit of money over the 600GB model.

| Specifications | |
|---|---|
| Model Number | ST3600002SS |
| Interface | 6-Gb/s SAS |
| Cache | 16MB |
| Capacity | 600 GB |
| Areal density (avg) | 225 Gbits/inch² |
| Guaranteed Sectors | 1,172,123,568 |
| PHYSICAL | |
| Height (max) | 25.73 mm (1.013 inches) |
| Width (max) | 101.57 mm (3.999 inches) |
| Length (max) | 146.53 mm (5.769 inches) |
| Weight (typical) | 672 grams (1.482 pounds) |
| PERFORMANCE | |
| Spindle Speed | 10,000 rpm |
| Average latency | 2.98 msec |
| Random read seek time | 3.8 msec |
| Random write seek time | 4.4 msec |
| RELIABILITY | |
| MTBF | 1,600,000 hours |
| Annual Failure Rate | 0.55% |
| POWER | |
| 12V start max current | 1.96 amps |
| 5V start max current | 0.60 amps |

It is important to note that the 450GB and 600GB models of the Seagate Cheetah NS.2 10K come with either a 6Gb/s SAS interface or a 4Gb/s Fibre Channel interface. However, the 300GB model only comes with the 4Gb/s Fibre Channel interface. According to the Seagate specification table, the average latency and seek times are in the 2.98-4.4msec range. Standard SATA hard drives are usually in the 7-9msec range, which is double or triple the time you get with a SAS hard drive. These SAS hard drives are also built to have a higher rotational vibration tolerance. Reliability is very high as well: standard desktop SATA hard drives are rated for about 750,000 hours, enterprise SATA drives for about 1,200,000 hours, and these SAS hard drives for 1,600,000 hours. That is a bit more than double the rating of desktop SATA hard drives. These are some of the reasons why SAS hard drives are generally more expensive than desktop or enterprise SATA hard drives, but you can feel confident that they will last a long time before you need to think about replacing them.
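Two of the figures in the specification table can be sanity-checked with simple arithmetic. The sketch below assumes that average rotational latency is half a revolution, and that failures follow a constant-rate (exponential) model when converting the MTBF rating into an annual failure rate for a drive that is powered on all year. Both are common simplifications, not Seagate's published method.

```python
import math

HOURS_PER_YEAR = 8760  # assumes 24x7 operation


def avg_rotational_latency_ms(rpm):
    """Average rotational latency: the time for half a revolution, in ms."""
    return 0.5 * 60_000 / rpm


def annual_failure_rate(mtbf_hours):
    """Probability of failure within one year under a constant failure rate."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


print(f"10,000 rpm: {avg_rotational_latency_ms(10_000):.2f} ms")
print(f"7,200 rpm:  {avg_rotational_latency_ms(7_200):.2f} ms")

for label, mtbf in [("desktop SATA", 750_000),
                    ("enterprise SATA", 1_200_000),
                    ("Cheetah NS.2", 1_600_000)]:
    print(f"{label}: AFR about {annual_failure_rate(mtbf):.2%}")
```

Under these assumptions a 10,000RPM spindle gives 3.0 ms, close to the 2.98 msec in the table, and a 1,600,000 hour MTBF works out to roughly the 0.55% annual failure rate Seagate lists.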

Features – LSI 3Ware SAS+SATA 9750-4i

Features

- Online Capacity Expansion (OCE)

- Online RAID Level Migration (RLM)

- Global hot spare with revertible hot spare support

  - Automatic rebuild

- Single controller multipathing (failover)

- I/O load balancing

- Comprehensive RAID management suite

Benefits

- Superior performance in multi-stream environments

- PCI Express 2.0 provides faster signaling for high-bandwidth applications

- Support for 3Gb/s and 6Gb/s SATA and SAS hard drives for maximum flexibility

- 3ware Management 3DM2 Controller Settings

  - Simplifies creation of RAID volumes

  - Easy and extensive control over maintenance features

- Low-profile MD2 form factor for space-limited 1U and 2U environments

Closer Look: Seagate Cheetah NS.2 10K 600GB

Closer Look: LSI 3Ware SAS 9750-4i

And finally, here are the accessories that came with the LSI 3Ware SAS 9750-4i RAID Controller Card. You get one SAS cable which you would connect to the card and to an enclosure with the hard drives. You also get a Driver CD, and a low-profile expansion slot bracket. Since I was testing the drives without an enclosure, I was using a different cable that comes with an SAS connector on one end and on the other end with 4 separate SAS connectors and power connectors that you connect to each SAS hard drive separately.

LSI 3Ware BIOS

The LSI 3Ware BIOS is very simple to use, and the learning curve is short. Let’s take a look at the following pictures to see how easy it is to set up your drives in a RAID5 array.

Having too many images of something can get a bit confusing, so I divided up the images to make my explanation easier to follow. Once you get past the first screen of the BIOS, you will be led to the following page, where you can expand or collapse the Available Drives section. To select drives, you can either select the whole group by pressing Space, or expand the group and select only the drives you want to put in a specific array. Since we are going to put all the drives in a RAID5 array, we select them all. Once all the drives are selected, we can press Tab to get to the menu and choose the Create Unit button. This brings up the Create Unit section, where you can set up the way your drives will work. The list shows all the drives you have selected to include in the array. In the Array Name field, enter the name you want to use for the array. If you have multiple arrays, giving them understandable names means you will know exactly which array is affected if a hard drive ever goes bad. In our case, since we will be using these hard drives for video editing, we called it “VideoProduction”.

Installation of Windows 7

The installation of Windows 7 on the SAS hard drives was a bit tricky the first time I did it. I thought I was doing something wrong when I reached a screen saying that Windows 7 could not continue because it could not install on the SAS drives, so I will walk you through the whole process of getting this to work.

To install Windows, first insert your Windows 7 DVD. Once you get to the window shown in the first image, it is time to insert your LSI 3Ware CD. You will need to load the drivers for your RAID card by clicking Load Driver. This brings up a screen asking you to locate the driver yourself. Click Browse and go to the drive that holds the CD. Once there, navigate to Packages -> Drivers -> Windows -> 64Bit (or 32Bit if you are installing 32-bit Windows) and click OK. You will see the list of available drivers. In our case there was one driver we needed to install. Select it and install it; this takes a minute or two. Once done, your SAS drives will appear in the list of recognized hard drives. When you select the array, however, you will receive a message saying that Windows cannot be installed on this drive. This happens because the LSI 3Ware CD is still in your optical drive. Take that CD out, put the Windows 7 DVD back in, and click Refresh. Now Windows can finally be installed.

3DM2 Setup

Finally, after installing Windows 7 and the drivers for the LSI card, there will be a shortcut to the 3DM2 page, which gives access to the RAID card’s settings. This tool allows users to easily set up more arrays and maintain the drives straight from Windows. It comes in very handy when there are several servers and a large number of hard drives to manage. It also allows the administrator or users to change settings for how error checking and other features should work.

RAID Levels Tested

RAID 1

Diagram of a RAID 1 setup.

A RAID 1 creates an exact copy (or mirror) of a set of data on two or more disks. This is useful when read performance or reliability are more important than data storage capacity. Such an array can only be as big as the smallest member disk. A classic RAID 1 mirrored pair contains two disks (see diagram), which increases reliability geometrically over a single disk. Since each member contains a complete copy of the data, and can be addressed independently, ordinary wear-and-tear reliability is raised by the power of the number of self-contained copies.

RAID 1 performance

Since all the data exists in two or more copies, each with its own hardware, the read performance can go up roughly as a linear multiple of the number of copies. That is, a RAID 1 array of two drives can be reading in two different places at the same time.

RAID 1 has many administrative advantages. For instance, in some environments, it is possible to “split the mirror”: declare one disk as inactive, do a backup of that disk, and then “rebuild” the mirror. This is useful in situations where the file system must be constantly available. This requires that the application supports recovery from the image of data on the disk at the point of the mirror split. This procedure is less critical in the presence of the “snapshot” feature of some file systems, in which some space is reserved for changes, presenting a static point-in-time view of the file system. Alternatively, a new disk can be substituted so that the inactive disk can be kept in much the same way as traditional backup. To keep redundancy during the backup process, some controllers support adding a third disk to an active pair. After a rebuild to the third disk completes, it is made inactive and backed up as described above.

RAID 0

Diagram of a RAID 0 setup.

A RAID 0 (also known as a stripe set or striped volume) splits data evenly across two or more disks (striped) with no parity information for redundancy. It is important to note that RAID 0 was not one of the original RAID levels and provides no data redundancy. RAID 0 is normally used to increase performance.

A RAID 0 can be created with disks of differing sizes, but the storage space added to the array by each disk is limited to the size of the smallest disk. For example, if a 120 GB disk is striped together with a 100 GB disk, the size of the array will be 200 GB.

RAID 0 failure rate

Although RAID 0 was not specified in the original RAID paper, an idealized implementation of RAID 0 would split I/O operations into equal-sized blocks and spread them evenly across two disks. RAID 0 implementations with more than two disks are also possible, though the group reliability decreases as the number of member disks grows.

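Reliability of a given RAID 0 set is approximately equal to the average reliability of each disk divided by the number of disks in the set:

$$\mathrm{MTTF}_{\text{group}} \approx \frac{\mathrm{MTTF}_{\text{disk}}}{n}$$

where $n$ is the number of disks in the set.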

The reason for this is that the file system is distributed across all disks. When a drive fails the file system cannot cope with such a large loss of data and coherency since the data is “striped” across all drives (the data cannot be recovered without the missing disk). Data can be recovered using special tools (see data recovery), however, this data will be incomplete and most likely corrupt, and recovery of drive data is very costly and not guaranteed.
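As a worked example of the rule that a stripe set's reliability is the single-disk figure divided by the number of disks, the sketch below applies it to the four-drive array used in this review. It takes the 1,600,000 hour MTBF from the specification table as a stand-in for each disk's mean time to failure and assumes the drives fail independently. Both are simplifying assumptions.

```python
def raid0_mttf_hours(disk_mttf_hours, disk_count):
    """Approximate mean time to failure of a RAID 0 set.

    Any single disk failure takes the whole array down, so the failure
    rates add up and the mean time to failure divides by the disk count.
    """
    return disk_mttf_hours / disk_count


for disks in (1, 2, 4):
    hours = raid0_mttf_hours(1_600_000, disks)
    print(f"{disks} disk(s): about {hours:,.0f} hours")
```

With four drives the estimate drops to a quarter of the single-drive figure, which is the price paid for the extra speed and capacity.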

RAID 0 performance

While the block size can technically be as small as a byte, it is almost always a multiple of the hard disk sector size of 512 bytes. This lets each drive seek independently when randomly reading or writing data on the disk. How much the drives act independently depends on the access pattern from the file system level. For reads and writes that are larger than the stripe size, such as copying files or video playback, the disks will be seeking to the same position on each disk, so the seek time of the array will be the same as that of a single drive. For reads and writes that are smaller than the stripe size, such as database access, the drives will be able to seek independently. If the sectors accessed are spread evenly between the two drives, the apparent seek time of the array will be half that of a single drive (assuming the disks in the array have identical access time characteristics). The transfer speed of the array will be the transfer speed of all the disks added together, limited only by the speed of the RAID controller. Note that these performance scenarios are in the best case with optimal access patterns.
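The stripe behavior described above can be sketched in a few lines. The 64 KiB stripe size and the function names are assumptions made for illustration, not the 9750-4i's actual settings or firmware logic. "Stripe size" here means the chunk written to one disk before moving on to the next, as in the paragraph above.

```python
STRIPE_SIZE = 64 * 1024  # assumed per-disk chunk size in bytes


def locate(logical_offset, stripe_size, disk_count):
    """Map a byte offset in the array to (disk index, byte offset on that disk)."""
    stripe_index, within = divmod(logical_offset, stripe_size)
    row, disk = divmod(stripe_index, disk_count)
    return disk, row * stripe_size + within


def disks_touched(offset, length, stripe_size, disk_count):
    """Set of disks involved in one request of `length` bytes at `offset`."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return {stripe % disk_count for stripe in range(first, last + 1)}


# A small database-style read stays on one disk, leaving the others free.
print(disks_touched(0, 4096, STRIPE_SIZE, 4))
# A large video-style read spans every disk, so all four transfer at once.
print(disks_touched(0, 1024 * 1024, STRIPE_SIZE, 4))
```

This is why large sequential transfers such as video playback scale in throughput with the number of drives, while small random requests benefit mainly from the drives seeking independently.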

RAID 0 is useful for setups such as large read-only NFS servers where mounting many disks is time-consuming or impossible and redundancy is irrelevant.

RAID 0 is also used in some gaming systems where performance is desired and data integrity is not very important. However, real-world tests with games have shown that RAID 0 performance gains are minimal, although some desktop applications do benefit, and in disk-bound workloads it can yield a significant improvement in performance.

RAID 5

Diagram of a RAID 5 setup with distributed parity with each color representing the group of blocks in the respective parity block (a stripe). This diagram shows left asymmetric algorithm.

A RAID 5 uses block-level striping with parity data distributed across all member disks. RAID 5 has achieved popularity due to its low cost of redundancy. This can be seen by comparing the number of drives needed to achieve a given capacity. RAID 1 or RAID 0+1, which yield redundancy, give only s / 2 storage capacity, where s is the sum of the capacities of the n drives used. In RAID 5, the usable capacity is that of n minus 1 drives. As an example, four 1TB drives can be made into a 2 TB redundant array under RAID 1 or RAID 1+0, but the same four drives can be used to build a 3 TB array under RAID 5. Although RAID 5 is commonly implemented in a disk controller, some with hardware support for parity calculations (hardware RAID cards) and some using the main system processor (motherboard based RAID controllers), it can also be done at the operating system level, e.g., using Windows Dynamic Disks or with mdadm in Linux. A minimum of three disks is required for a complete RAID 5 configuration. In some implementations a degraded RAID 5 disk set can be made (a three-disk set of which only two are online), while mdadm supports a fully-functional (non-degraded) RAID 5 setup with two disks, which functions as a slow RAID 1 but can be expanded with further volumes.

In the example above, a read request for block A1 would be serviced by disk 0. A simultaneous read request for block B1 would have to wait, but a read request for B2 could be serviced concurrently by disk 1.

RAID 5 performance

RAID 5 implementations suffer from poor performance when faced with a workload which includes many writes which are smaller than the capacity of a single stripe; this is because parity must be updated on each write, requiring read-modify-write sequences for both the data block and the parity block. More complex implementations may include a non-volatile write back cache to reduce the performance impact of incremental parity updates.
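The read-modify-write sequence mentioned above follows from how parity works: the parity block is the bytewise XOR of the data blocks in a stripe. The sketch below is a toy illustration with three tiny data blocks, not the controller's actual implementation, and the function name is made up for this example.

```python
def xor_blocks(*blocks):
    """Bytewise XOR of equally sized blocks."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, value in enumerate(block):
            out[i] ^= value
    return bytes(out)


# One stripe on a four-disk RAID 5: three data blocks plus one parity block.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(*data)

# Small write to block 1: read the old data and old parity, then write the
# new data and new parity. That is two reads and two writes for one update.
new_block = b"bbbb"
new_parity = xor_blocks(parity, data[1], new_block)
data[1] = new_block
assert new_parity == xor_blocks(*data)

# If the disk holding block 1 fails, XOR of the survivors and parity rebuilds it.
rebuilt = xor_blocks(data[0], data[2], new_parity)
assert rebuilt == data[1]
```

The extra reads and writes per small update are where the RAID 5 write penalty comes from, and a write-back cache on the controller exists largely to hide them.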

Random write performance is poor, especially at high concurrency levels common in large multi-user databases. The read-modify-write cycle requirement of RAID 5’s parity implementation penalizes random writes by as much as an order of magnitude compared to RAID 0.

Performance problems can be so severe that some database experts have formed a group called BAARF — the Battle Against Any Raid Five.

The read performance of RAID 5 is almost as good as RAID 0 for the same number of disks. Except for the parity blocks, the distribution of data over the drives follows the same pattern as RAID 0. The reason RAID 5 is slightly slower is that the disks must skip over the parity blocks.

In the event of a system failure while there are active writes, the parity of a stripe may become inconsistent with the data. If this is not detected and repaired before a disk or block fails, data loss may ensue as incorrect parity will be used to reconstruct the missing block in that stripe. This potential vulnerability is sometimes known as the write hole. Battery-backed cache and similar techniques are commonly used to reduce the window of opportunity for this to occur.

RAID 6

Diagram of a RAID 6 setup, which is identical to RAID 5 other than the addition of a second parity block

Redundancy and Data Loss Recovery Capability

RAID 6 extends RAID 5 by adding an additional parity block; thus it uses block-level striping with two parity blocks distributed across all member disks. It was not one of the original RAID levels.

Performance

RAID 6 does not have a performance penalty for read operations, but it does have a performance penalty on write operations due to the overhead associated with parity calculations. Performance varies greatly depending on how RAID 6 is implemented in the manufacturer’s storage architecture: in software, in firmware, or by using firmware and specialized ASICs for intensive parity calculations. It can be as fast as a RAID 5 array with one fewer drive (the same number of data drives).

Efficiency (Potential Waste of Storage)

RAID 6 is no more space inefficient than RAID 5 with a hot spare drive when used with a small number of drives, but as arrays become bigger and have more drives the loss in storage capacity becomes less important and the probability of data loss is greater. RAID 6 provides protection against data loss during an array rebuild; when a second drive is lost, a bad block read is encountered, or when a human operator accidentally removes and replaces the wrong disk drive when attempting to replace a failed drive.

The usable capacity of a RAID 6 array is the combined capacity of all drives minus the capacity of two drives.
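The capacity rules quoted in the RAID sections above can be collected into one small sketch. It assumes every member contributes only as much space as the smallest disk and that RAID 1+0 uses two-way mirrors. The function name is hypothetical.

```python
def usable_capacity(disk_sizes, level):
    """Usable capacity of an array, in the same unit as disk_sizes."""
    n = len(disk_sizes)
    smallest = min(disk_sizes)  # larger disks are truncated to the smallest
    data_disks = {
        "RAID 0": n,         # striping only, no redundancy
        "RAID 1": 1,         # every disk holds a full copy
        "RAID 1+0": n // 2,  # striped two-way mirrors (assumed)
        "RAID 5": n - 1,     # one disk's worth of parity
        "RAID 6": n - 2,     # two disks' worth of parity
    }[level]
    return smallest * data_disks


cheetahs = [600, 600, 600, 600]  # the four 600GB drives in this review
for level in ("RAID 0", "RAID 1+0", "RAID 5", "RAID 6"):
    print(f"{level}: {usable_capacity(cheetahs, level)} GB")

# The mixed-size RAID 0 example from the text: 120 GB + 100 GB gives 200 GB.
print(usable_capacity([120, 100], "RAID 0"))
```

For the four Cheetah drives this gives 2400GB in RAID 0 and the capacity of three drives in RAID 5, matching the configurations tested later.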

Implementation

According to SNIA (Storage Networking Industry Association), the definition of RAID 6 is: “Any form of RAID that can continue to execute read and write requests to all of a RAID array’s virtual disks in the presence of any two concurrent disk failures. Several methods, including dual check data computations (parity and Reed Solomon), orthogonal dual parity check data and diagonal parity have been used to implement RAID Level 6.”

TESTING & METHODOLOGY

There are some things to keep in mind about testing the Seagate Cheetah Drives. The first thing is that they’re not really designed for this purpose, which is what makes it fun. Second, they’re designed for a server environment with features that we can’t really test. For instance, they’re specifically designed to be low noise and anti vibration and we can tell you that they accomplish those quite nicely. They are entirely quiet in operation, and seem to be vibration free.

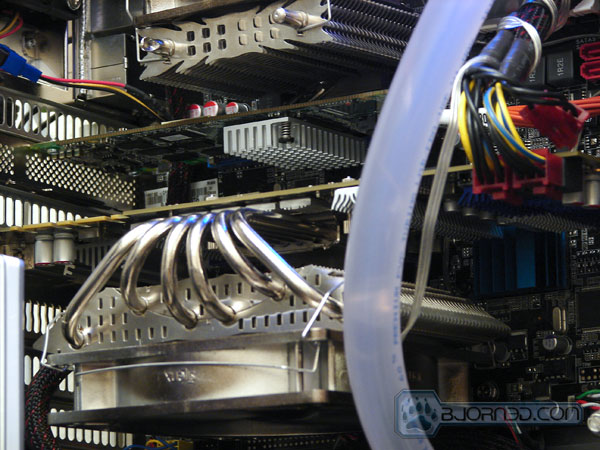

Another important thing to remember is that we are using a LSI 3ware SAS 9750-4i hardware RAID controller with a built in 800MHz processor and 512MB dedicated DDR2 800MHz RAM. It’s a very capable hardware RAID controller and it provides a bunch of different possible RAID configurations.

(Picture showing Seagate Cheetah Drives and WD RE3 Drives. This is not the actual testing system)

We chose to do testing on RAID 0 with 4 drives on a Xeon based system and a Core i7 based system. We tested every level of RAID0 that we possibly could given our setup. We also tested a single SAS hard drive on the RAID card to see how one drive performs and what the advantages of 4 drives are compared to just 1. And finally, we took a look at RAID 5, where we get the capacity of 3 hard drives and the array still works in a very similar way to RAID0, except that each disk holds enough information about the other drives that if one drive fails, the system is still capable of functioning.

(Picture showing the LSI 3ware SAS 9750-4i RAID card in a PCI-E 16x video card slot at 8x. This is not the actual testing system)

We did a fresh load of Windows 7 Pro on a boot drive. Because much of the testing on hard drives and RAID arrays is destructive, we left each array blank and tested it in that state. If you do any write testing on an array, a lot of utilities will destroy the data on it, and you would end up reloading the operating system three times for each test. That’s just not feasible. After we tested all the arrangements we wanted to test, we set up what we suspected to be the most popular enthusiast RAID array (RAID0), loaded Windows 7 Pro on it, and did some real-life testing with a 4x RAID0 array.

Test Rig

| Test Rig | |
|---|---|
| Case Type | Zalman MS-1000 H1 |
| CPU | Intel Xeon X3470 2.66GHz @ 3.9GHz |
| Motherboard | Asus P7P55 WS SuperComputer Motherboard LGA1156 |
| Ram | OCZ Gold-Series 4x2GB (8GB) DDR3-12800 1600MHz Memories |
| CPU Cooler | Thermalright True Black 120 CPU Cooler with two SilenX 120mm fans |
| Hard Drives | 4x Seagate Cheetah NS.2 10K 600GB |
| Optical | LG Blu-Ray Disk Drive |
| GPU | BFG GeForce GTX 275 OC, Thermalright HR-03 GTX VGA Cooling with 120mm SilenX fan |
| Case Fans | 4x Noctua NF-P12 120mm Fans on Bottom, Back, and Top; 1x 92mm Zalman Fan on Hard Drive compartment, Front |
| Docking Stations | None |
| Testing PSU | Seasonic X-Series 750W 80Plus Gold Modular Power Supply |
| Legacy | None |
| Mouse | Logitech G5 Gaming Mouse |
| Keyboard | Logitech G15 Gaming Keyboard |
| Gaming Ear Buds | None |
| Speakers | Creative 2.1 Speaker Setup |

Test Suite

| Benchmarks |
|---|
| ATTO |
| HDTach |
| Crystal DiskMark |
| Adobe After Effects CS4 |
| Uncompressed HD Footage Video Playback |
| HD Tune Pro |

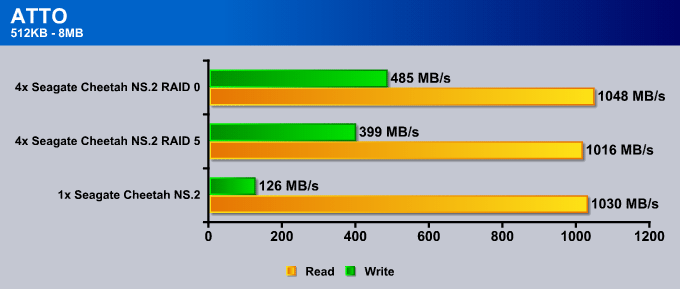

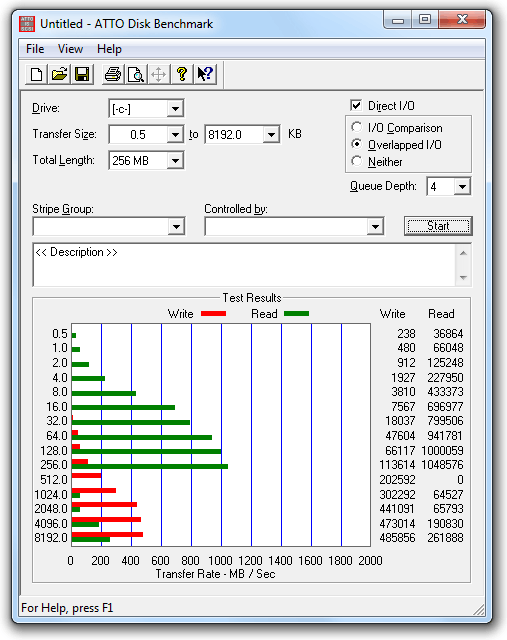

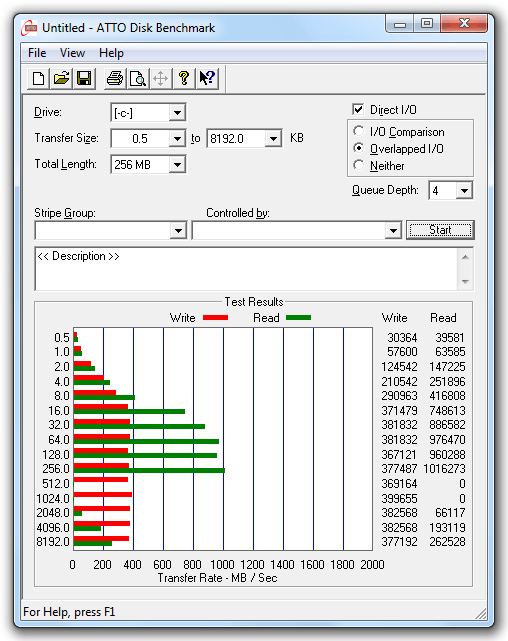

ATTO

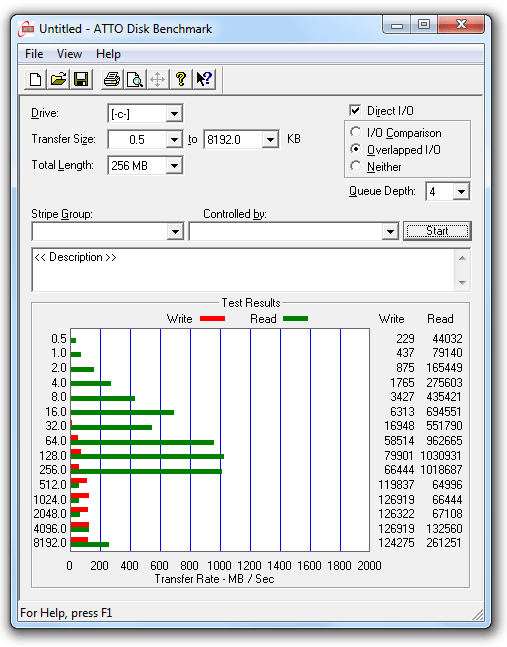

ATTO was the first benchmark we ran on our new SAS setup. Interestingly, no matter how the hard drives were set up, ATTO always reported read speeds of around 1000-1050MB/s. The write speeds were more understandable, since they reflected exactly how the hard drives were configured. Of course, a RAID 0 configuration with four hard drives is going to be faster than a RAID 5 array. It is also very interesting to see about a 3.8x performance increase between 1 hard drive and 4 hard drives. I believe the fast read speeds and the good scaling from 1 drive to 4 drives have to do with LSI’s hardware-based RAID implementation. The read speeds seem to reflect the read cache setting on LSI’s cards.

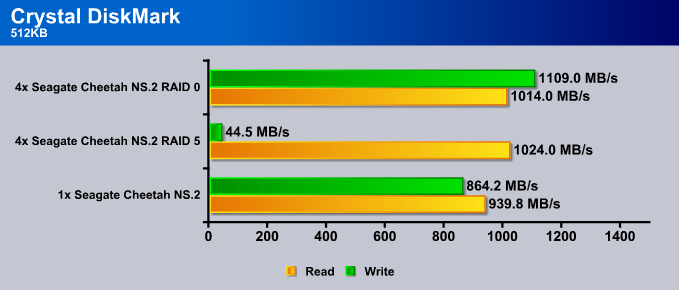

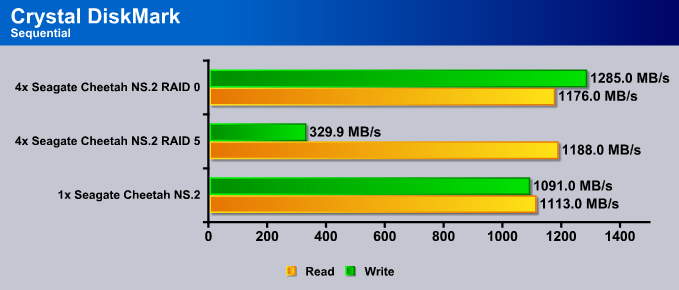

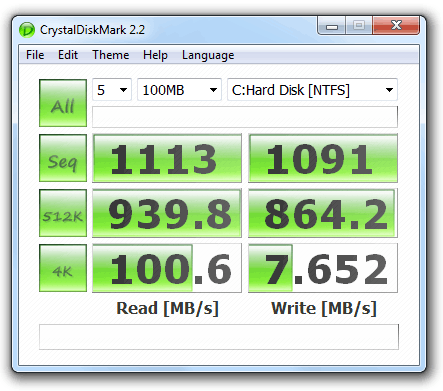

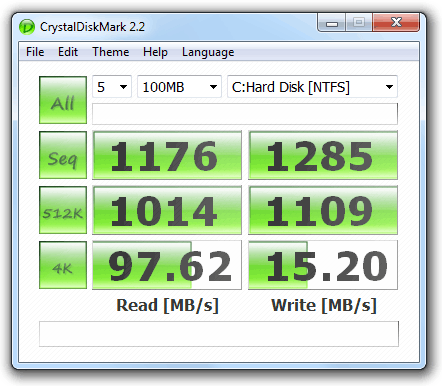

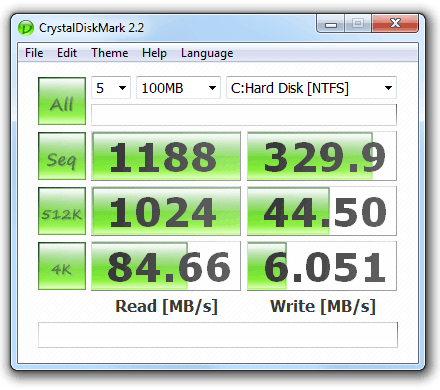

Crystal DiskMark

This test was a bit odd. For some reason the Cheetah NS.2 drives in a RAID 5 configuration performed poorly on the write benchmark. The difference between a single drive and 4 drives was not very big if we consider it percentage-wise.

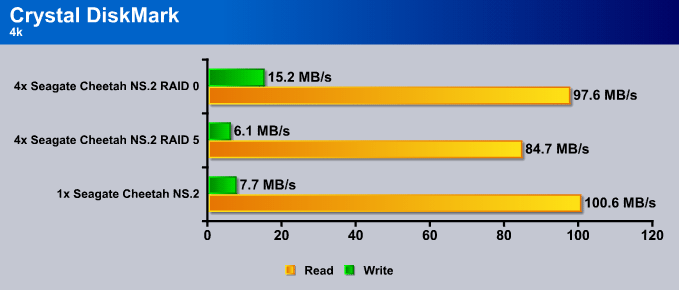

This test is interesting because it shows how poorly drives geared toward random access perform across reads of different file sizes. As mentioned on the first page, a drive geared toward sequential access would perform better in this type of benchmark.

Finally, this last Crystal DiskMark benchmark looked at sequential transfers. Combined with the powerful LSI card, the drives delivered tremendous read and write speeds above 1000MB/s, except in RAID5, as also seen in the first benchmark.

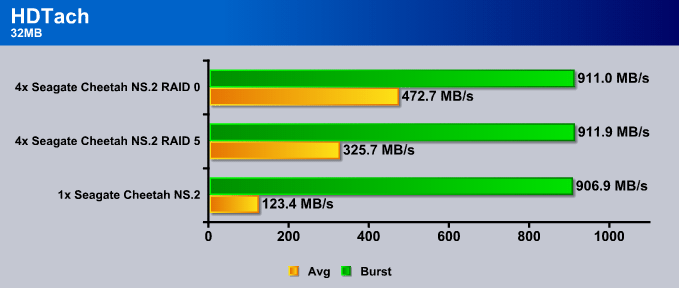

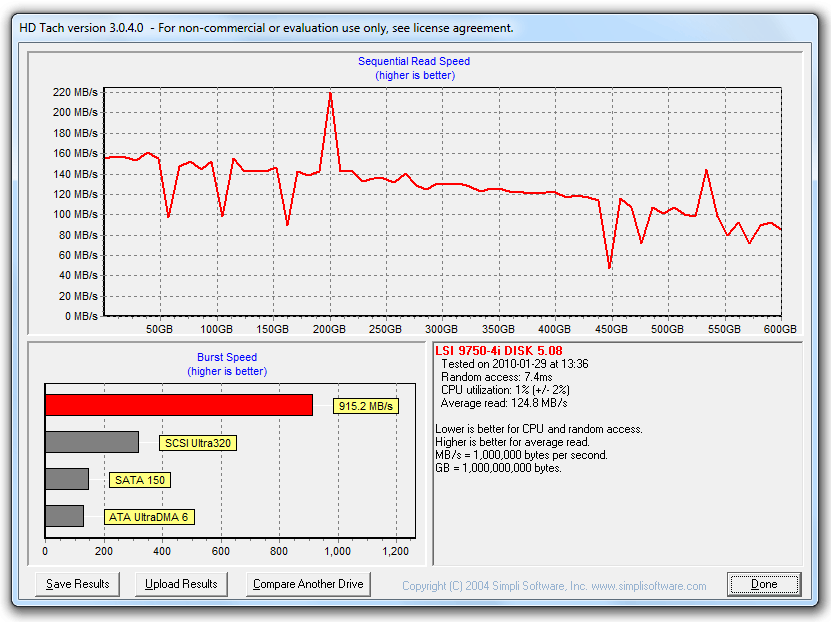

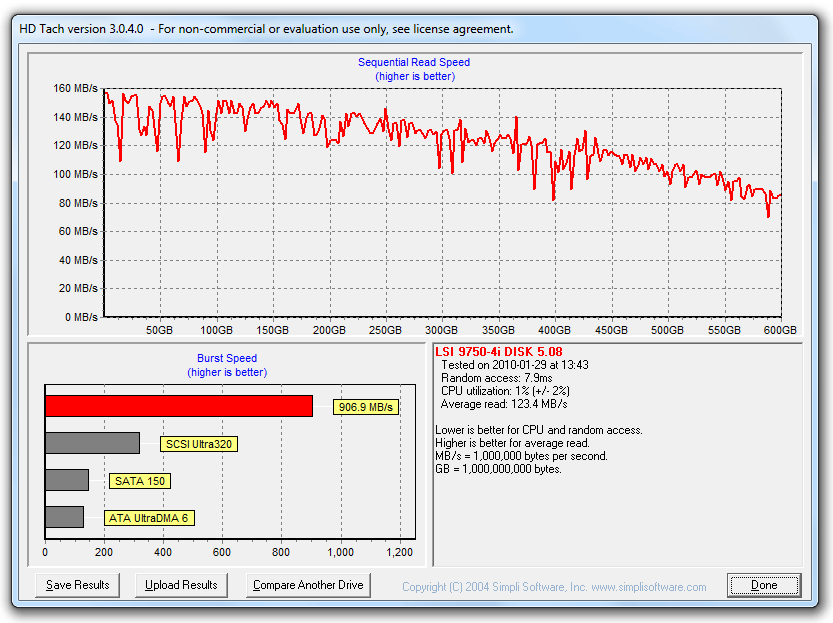

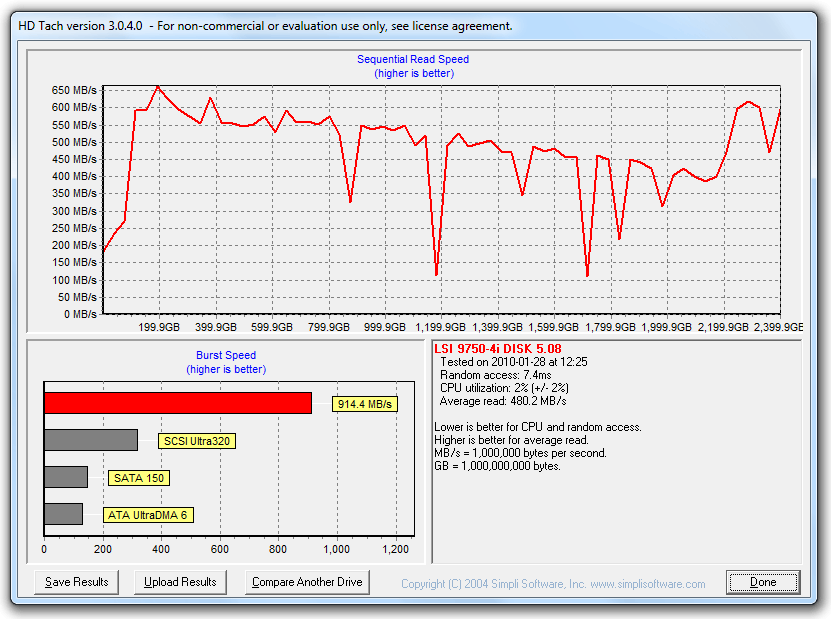

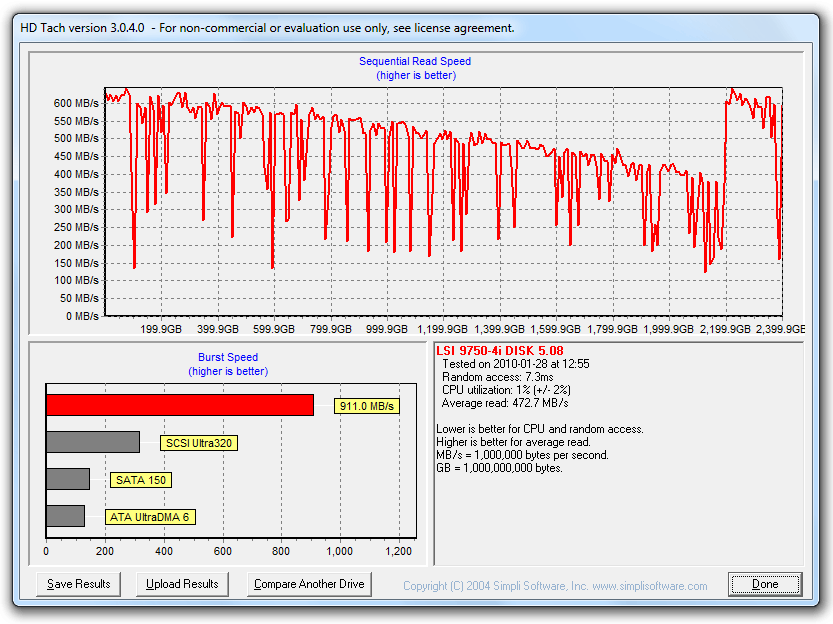

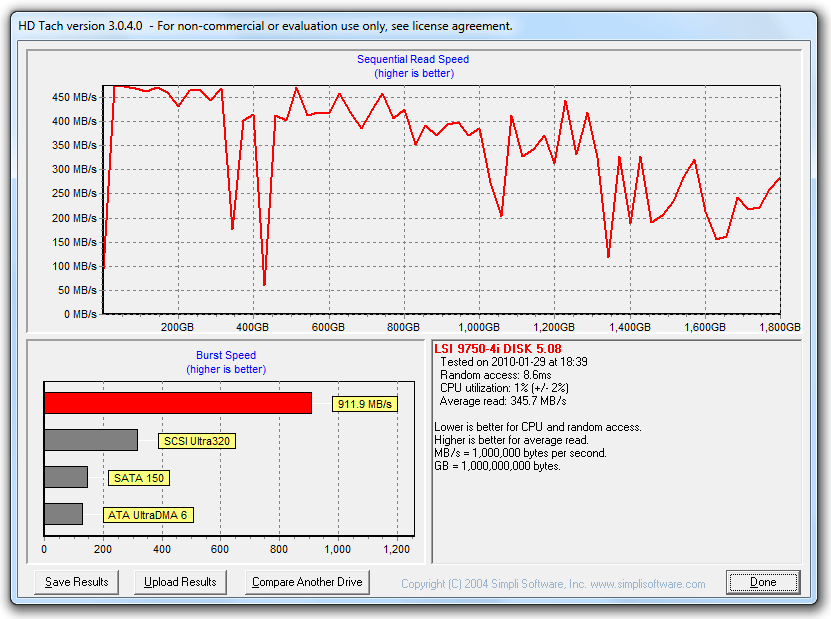

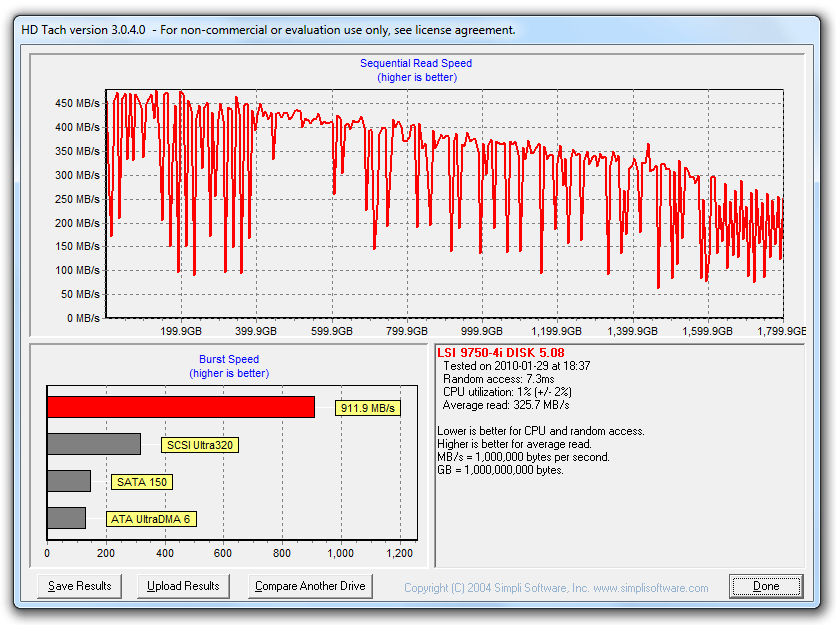

HDTach

HDTach is one of my favorite benchmarks, since most of the time it gives very accurate results that reflect real-life application performance. We can clearly see a performance increase from a single drive to 4 drives. RAID 5 is a bit slower than RAID 0, but that is expected.

There is not too much to explain here. The results are very similar to the previous test, with only about a 10MB/s difference.

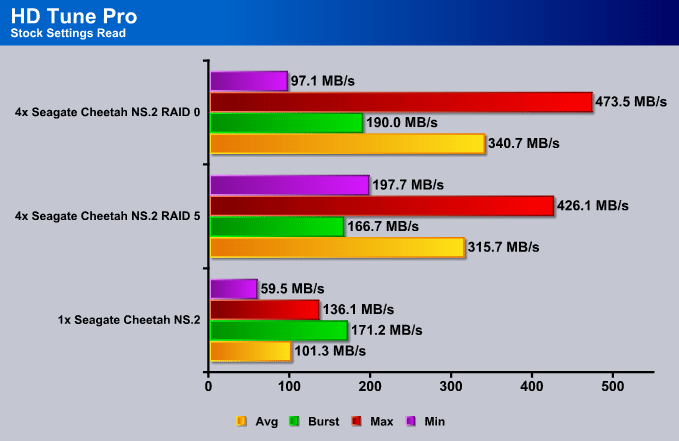

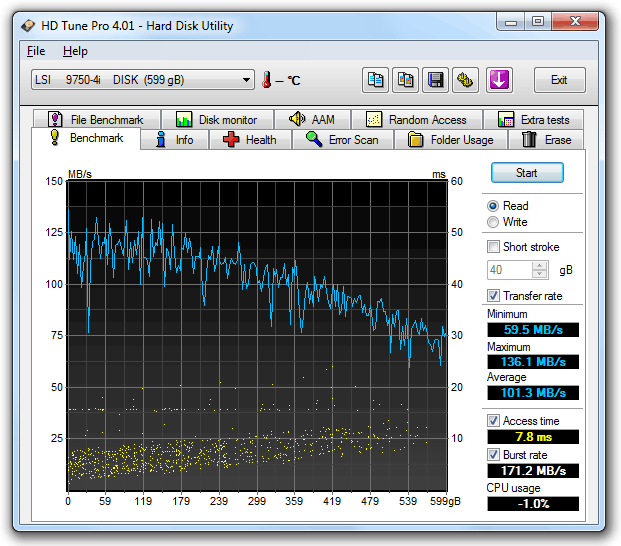

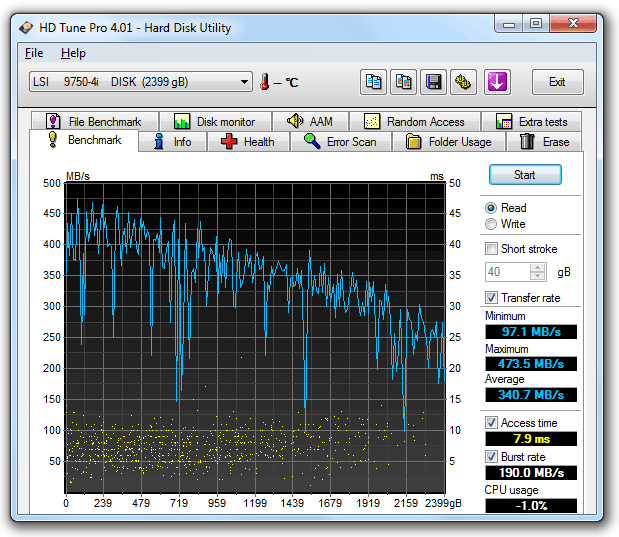

HD Tune Pro

HD Tune Pro is another benchmark application I like, since it also closely reflects how real-life applications would perform. A single Cheetah NS.2 averages around 101.3MB/s. This is good to see, because people might wonder how well a 6Gb/s 10k hard drive performs compared to older 3Gb/s hard drives. From past experience, 3Gb/s hard drives average around 60-80MB/s. The minimum, on the other hand, is around 60MB/s, which is quite good for a single SAS hard drive.

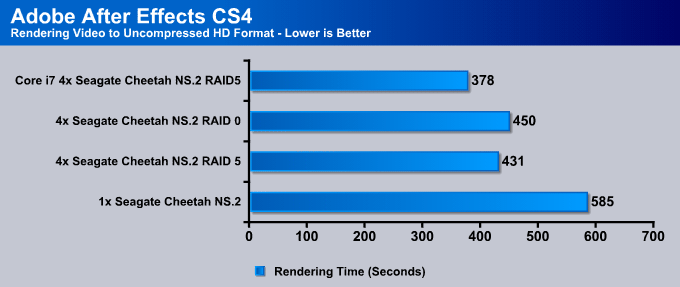

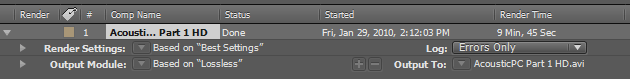

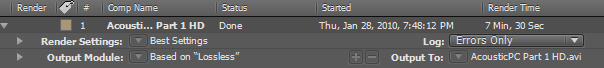

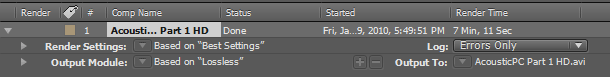

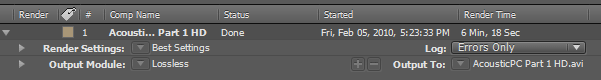

Adobe After Effects CS4

The best way to test a hard drive is to run a real-life application and see what kind of performance difference can be expected from more complex, higher-end products like the Seagate Cheetah NS.2 hard drives and the LSI 3Ware SAS 9750-4i RAID Controller Card. For those still working with old 7200RPM 3Gb/s SATA hard drives, a render takes somewhere around 600-700 seconds. Of course, rendering speed depends on what is being rendered and how much data is being written to the drive. A single Seagate Cheetah NS.2 hard drive took 585 seconds to convert a 5 minute and 11 second video to uncompressed HD format.

As we added more hard drives, performance increased dramatically, but the actual rendering performance still depended on other hardware such as the motherboard, memory and CPU. To minimize those factors and make the hard drives work as hard as they could, we forced the software to use up all of the available memory (8GB) so that all the other necessary files had to go onto the scratch disk (the SAS drives). This way, while the uncompressed HD footage was being rendered and written to the drives, After Effects also had to write additional data to the drives that would otherwise have gone to memory.

By using 4x Seagate Cheetah NS.2 hard drives we were able to knock 207 seconds off the rendering time, which is 3 minutes and 27 seconds. That is a lot of time if you are on a deadline and need to render many files. We can clearly see how the LSI 3Ware 9750-4i also plays a big role in making sure that video editing and writing files to the drives is performed with the maximum efficiency and performance available from the drives.
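The render-time arithmetic above can be checked with a few lines of Python. This is only a sketch: the function and key names are hypothetical, and it assumes the 207 seconds saved are measured against the 585-second single-drive render, which the text implies but does not state outright.

```python
def render_summary(single_drive_s: int, seconds_saved: int) -> dict:
    """Summarize a render-time improvement relative to a single-drive baseline."""
    if not 0 <= seconds_saved < single_drive_s:
        raise ValueError("seconds_saved must be between 0 and the baseline time")
    raid_s = single_drive_s - seconds_saved      # assumed 4-drive render time
    minutes, seconds = divmod(seconds_saved, 60)
    return {
        "raid_seconds": raid_s,
        "saved": f"{minutes} min {seconds} s",
        "speedup": single_drive_s / raid_s,
        "percent_time_saved": 100 * seconds_saved / single_drive_s,
    }


# 585 s on one drive and 207 s saved with four drives, as reported above.
summary = render_summary(585, 207)
for key, value in summary.items():
    print(key, value)
```

Under that assumption the 4-drive render takes 378 seconds, a speedup of roughly 1.55x. That is well short of 4x, which fits the observation that the CPU, memory and motherboard still limit the render.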

Benchmark Screenshots

These are screenshots of the benchmarks we performed. Since graphs alone cannot really show the actual performance of these hard drives with the LSI 3Ware SAS 9750-4i RAID Controller Card, we have also included this section with screenshots of the benchmark results.

Single SAS Benchmarks

ATTO

Crystal DiskMark

HDTach


HD Tune Pro

After Effects CS4 – Uncompressed Video Rendering

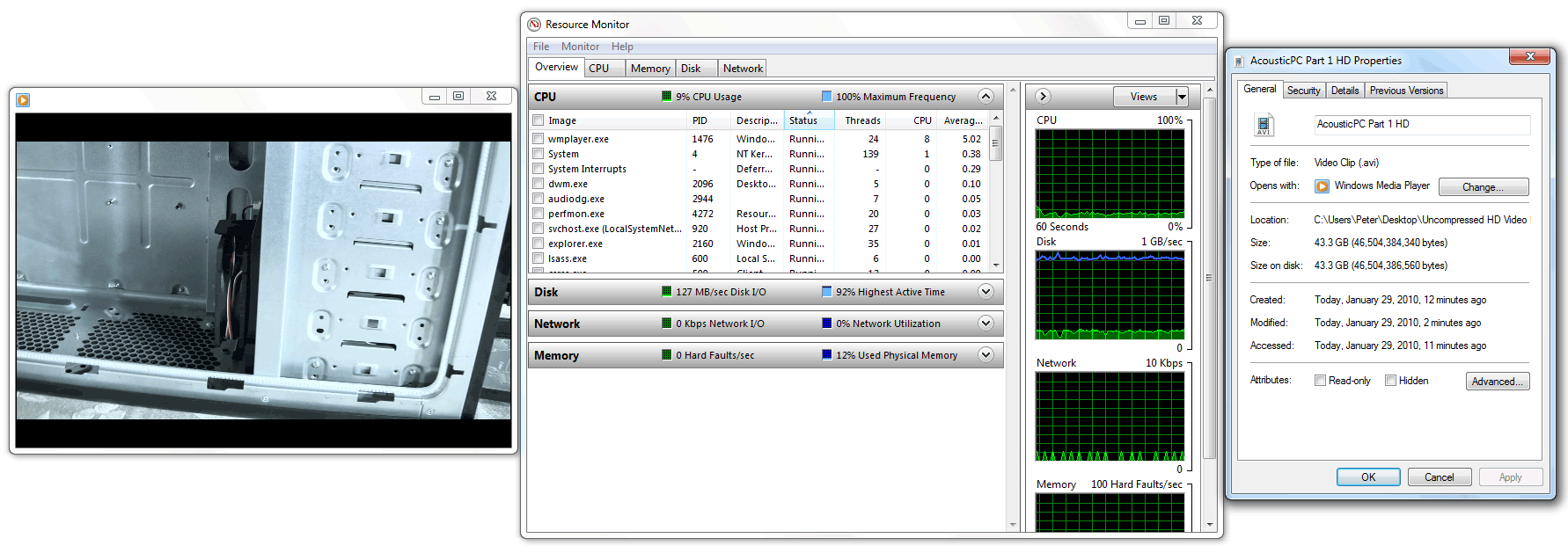

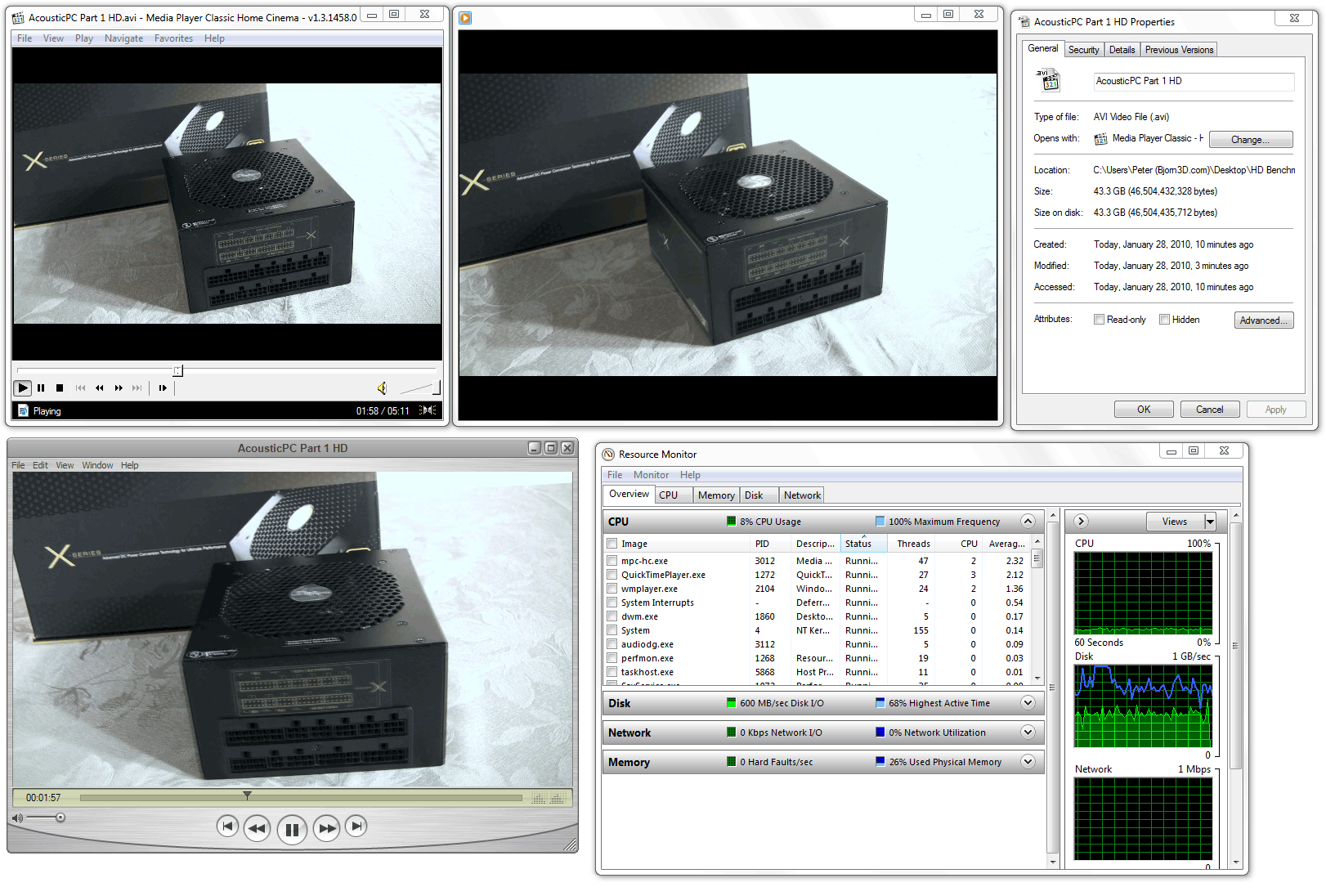

Uncompressed HD Video Playback


4x RAID 0 Benchmarks

ATTO

Crystal DiskMark

HDTach


HD Tune Pro

After Effects CS4 – Uncompressed Video Rendering

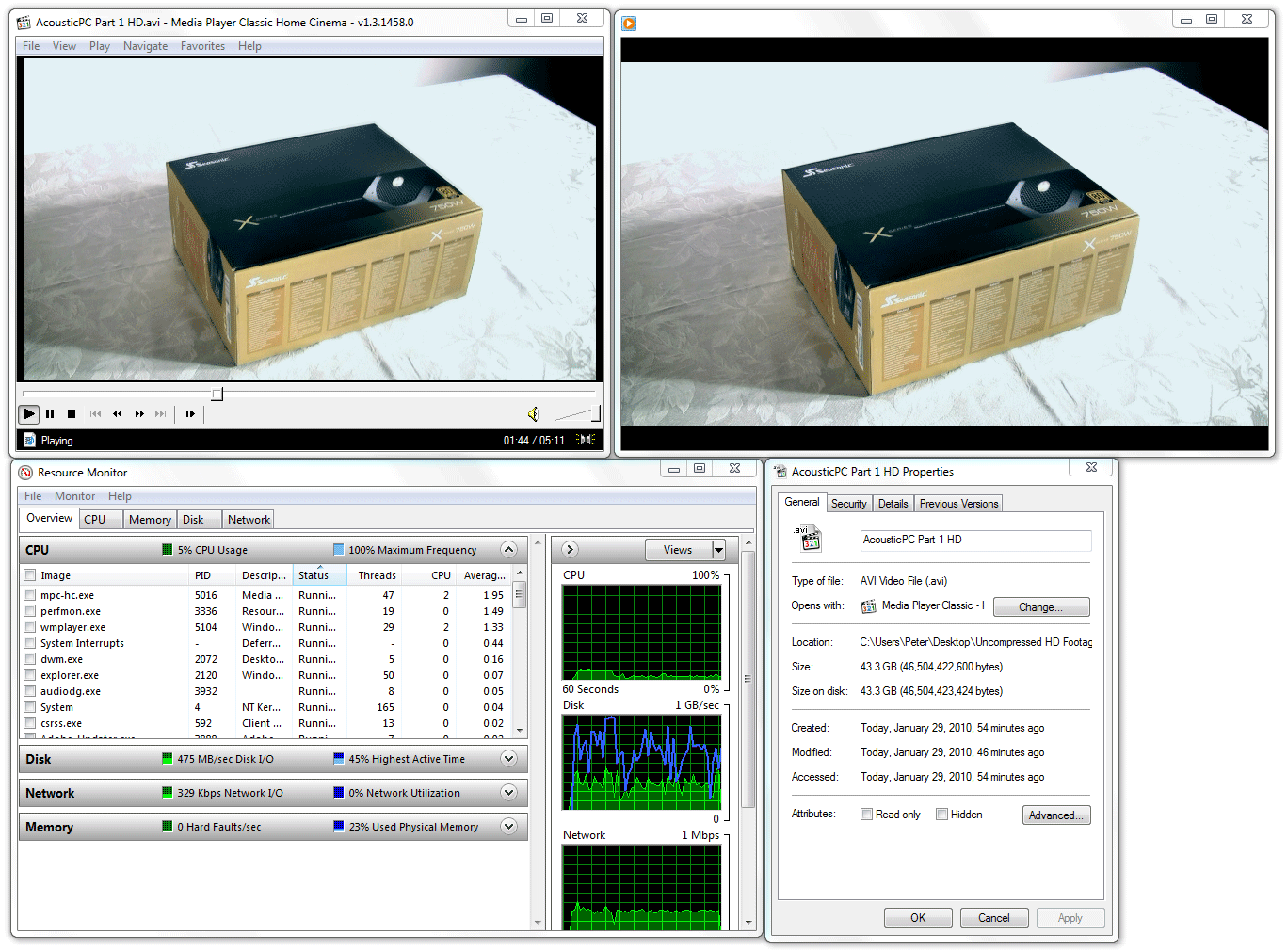

Uncompressed HD Video Playback


I have 2x WD RAID Edition 3 1TB hard drives in my main Core i7 system. When I play a single uncompressed video like the one in the picture (43.3GB for a 5 minute and 11 second video), the hard drives are barely able to keep up, causing many lags and glitches. Those two 3.0Gb/s SATA hard drives in RAID 0 reach around 180MB/s at most; their average is somewhere around 150-160MB/s.
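The file size and running time quoted above are enough to estimate the average data rate the video demands. Here is a rough Python sketch; the function name is hypothetical, and it assumes decimal units (1GB = 1000MB), so the result is an approximation rather than a measured figure.

```python
def average_data_rate_mb_s(size_gb: float, minutes: int, seconds: int) -> float:
    """Average data rate in MB/s for a file of size_gb played in real time."""
    duration_s = minutes * 60 + seconds
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return size_gb * 1000 / duration_s  # assumes 1 GB = 1000 MB


# 43.3GB video, 5 minutes 11 seconds long, as described above.
rate = average_data_rate_mb_s(43.3, 5, 11)

# Low end of the 150-160MB/s average quoted for the 2x WD RE3 RAID 0 array.
headroom = 150 / rate

print(f"required average rate: {rate:.1f} MB/s")
print(f"headroom at 150 MB/s: {headroom:.2f}x")
```

The video needs about 139MB/s on average, which leaves the old RAID 0 array with less than 10% headroom at its average speed. That is consistent with playback that barely keeps up.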

Next I played the same video on the current 4-drive RAID 0 SAS setup, and a single video played completely fine. I decided to add another player playing the same video. Still no problems. I was already hitting around 400MB/s, measured through the disk section of the Windows Resource Monitor. Then I started a 3rd instance of the video, and the load went up to 600MB/s. The video was still playing just fine, with maybe one or two lags every minute. So we can see that we are starting to hit the limit of the hard drives’ average performance, not burst.

I consider this very impressive. From a film maker’s point of view, this makes it possible to capture video to the hard drives in real time in uncompressed HD format. A film maker working with a cheaper prosumer camcorder that supports direct capture to a computer can therefore get the highest quality video possible, making green screening and other jobs a piece of cake. It also means that with such a setup you can get video quality that is otherwise only possible in professional studios working with cameras that cost more than an average user could afford. Those cameras work by using large external storage capable of 350MB/s transfer rates. As you can imagine, that does not come cheap for a film maker.

Editing uncompressed HD videos with this performance is also very good: there are no lags during playback, even when the hard drives need to access multiple uncompressed videos at once. Scrubbing through the video is also much smoother and easier, since the system needs less time to skip from one part of the video to the next.

4x RAID 5 Benchmarks

ATTO

Crystal DiskMark

HDTach


After Effects CS4 – Uncompressed Video Rendering

Uncompressed HD Video Playback


With the RAID 5 setup, the hard drives were able to handle up to 2 videos at the same time. When we started playing a third instance of the video, the videos began to lag. With RAID 5 we saw the average performance drop a bit, but the main point of this setup is to have a backup plan in case one of the drives fails. The way RAID 5 works is that if one drive fails, the remaining hard drives still hold enough information to rebuild the lost data, so the user can keep using their PC like before.
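The rebuild idea behind RAID 5 can be illustrated with XOR parity. The sketch below is a simplified, single-stripe illustration in Python and not how the LSI card is implemented: the function name and the sample block contents are made up for the example, and a real RAID 5 array also rotates the parity block across all drives.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR a list of equal-length byte blocks together, byte by byte."""
    if len({len(block) for block in blocks}) != 1:
        raise ValueError("need at least one block, all of equal length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a 4-drive array: three data blocks plus one parity block.
data = [b"HD01", b"HD02", b"HD03"]   # made-up sample contents
parity = xor_blocks(data)

# Simulate losing the second drive, then rebuild its block from the survivors.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data[1])
```

Because XOR is its own inverse, combining the surviving data blocks with the parity block yields the missing block. The cost is that every write also has to update parity, which is one reason RAID 5 writes trail RAID 0 in the benchmarks.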

Core i7 System 4x RAID 5 Benchmarks

After Effects CS4 – Uncompressed Video Rendering

While this test was just for the heck of it, I found it very interesting that an actual LGA 1366 Core i7 system with triple-channel DDR3 memory performed even faster than before with the help of these SAS hard drives.

Conclusion – Seagate Cheetah NS.2

The Seagate Cheetah NS.2 600GB 10k 6Gb/s SAS hard drives got me very excited when I first put them in my system, and they kept me excited throughout the testing period. For serious film makers or businesses, I consider the Seagate Cheetah NS.2 SAS drives a must. I measured the temperatures on these bad boys and they only went up to around 38C. My older WD RE3 hard drives, which spin at 7200RPM, reach as high as 45C at a 20C ambient room temperature, while the 10,000RPM Cheetah NS.2 hard drives stayed at a cool 38C. Very impressive. Another great addition to their already amazing quality was the quiet operation. Unless something was being searched or the hard drives were spinning up, I couldn’t even tell that they were on. No vibrations, nothing! Their performance was exceptional, reaching an average read speed of 600MB/s when playing 3 instances of a 43.3GB uncompressed HD video file simultaneously. Overall, these hard drives deserve to be noticed!

| OUR VERDICT: Seagate Cheetah NS.2 600GB 10,000RPM 6Gb/s Hard Drives |
| --- |
| Summary: The Seagate Cheetah NS.2 10k 600GB 6Gb/s hard drives cannot go unnoticed. With their great features, performance, and reliability, they are perfect for businesses with lots of transactional applications that require constant and immediate access to data. The Seagate Cheetah NS.2 10k drives deserve 9.5 out of 10 points and Bjorn3D’s Golden Bear Award! |

Conclusion – LSI 3Ware SATA+SAS 9750-4i RAID Controller Card

It was difficult to really test the overall performance of the LSI 3Ware SATA+SAS 9750-4i RAID Controller Card, because there was no way for us to test the SAS drives straight through the motherboard, so it is a bit hard to tell how much the card helped. From past experience, however, I know that hard drives in a RAID array do not normally scale as well, or in other words gain as much performance, as we have seen with the LSI 3Ware 9750-4i RAID Card. The card was also designed with tons of features, including error checking, RAID recovery, and a read/write cache that helps performance tremendously thanks to the included 512MB of DDR2 800MHz cache memory and the 800MHz processor. One of the things that really impressed me was the sequential read and write speeds we were able to achieve even though the hard drives were designed for random access patterns. Overall, it made editing large uncompressed HD files enjoyable.

| OUR VERDICT: LSI 3Ware SATA+SAS 9750-4i RAID Controller Card |
| --- |
| Summary: The LSI 3Ware SATA+SAS 9750-4i 6Gb/s RAID Controller Card provided excellent scalability in performance for the Seagate Cheetah 6Gb/s SAS hard drives and has lots of security and error checking features. Its superb design also deserves 9.5 out of 10 points and Bjorn3D’s Golden Bear Award! |

| Product Name | Price |
| --- | --- |
| Seagate 600GB Cheetah NS.2 SAS 6Gb/s Internal Hard Drive | $533 |
| LSI 3ware SAS 9750-4I KIT 4PORT 6GB SATA+SAS PCIE 2.0 512MB | $386 |

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
